---
license: mit
language:
- en
---

Master's Thesis: High-Fidelity Video Background Music Generation using Transformers

This repository contains the pretrained models for the adaptation of [MusicGen](https://arxiv.org/abs/2306.05284) to video-based music generation, which were created during my master's thesis at RWTH Aachen University.

Abstract

Current AI music generation models are mainly controlled with a single input modality: text. Adapting these models to accept alternative input modalities extends their field of use. Video is one such modality, and generating background music to accompany it imposes remarkably different requirements. Even though alternative methods for generating video background music exist, none achieve the music quality and diversity of text-based models. Hence, this thesis aims to efficiently reuse the high-fidelity music generation capabilities of text-based models by adapting them to video background music generation. This is accomplished by training a model to represent video information in a format that the text-based model can naturally process. To test the capabilities of our approach, we train on two datasets with different levels of variation in their visual and audio content. We evaluate our approach by analyzing the audio quality and diversity of the results. A case study additionally examines how well the video encoder captures the video-audio relationship.
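The core idea of representing video in a format the text-based model already consumes can be illustrated with a toy example. MusicGen conditions generation on a sequence of embeddings from a T5 text encoder; the sketch below pools per-frame visual features over time and projects them linearly into an embedding space, producing a "pseudo-text" sequence of that shape. The function name `video_to_pseudo_text`, the temporal mean pooling, the random projection and all dimensions are illustrative assumptions, not the video encoder trained in this thesis.

```python
import numpy as np


def video_to_pseudo_text(frame_features: np.ndarray,
                         projection: np.ndarray,
                         num_tokens: int) -> np.ndarray:
    """Toy (hypothetical) mapping from per-frame features to a token sequence.

    frame_features: (num_frames, visual_dim), e.g. from a pretrained image encoder.
    projection:     (visual_dim, text_dim); learned in a real model, random here.
    num_tokens:     length of the conditioning sequence to produce.
    Returns an array of shape (num_tokens, text_dim).
    """
    num_frames = frame_features.shape[0]
    if num_tokens < 1 or num_frames < num_tokens:
        raise ValueError("need 1 <= num_tokens <= num_frames")
    # Split the frames into num_tokens contiguous temporal segments
    # and average the features inside each segment.
    segments = np.array_split(frame_features, num_tokens, axis=0)
    pooled = np.stack([segment.mean(axis=0) for segment in segments])
    # Project each pooled vector into the (assumed) text-embedding space.
    return pooled @ projection


rng = np.random.default_rng(0)
frames = rng.normal(size=(30, 512))                 # 30 frames, 512-d features (assumed)
proj = rng.normal(size=(512, 768)) / np.sqrt(512)   # 768-d target space (assumed)
tokens = video_to_pseudo_text(frames, proj, num_tokens=8)
print(tokens.shape)  # (8, 768)
```

In a trained system the mapping would be learned so that the generated music fits the video; here it only demonstrates the shapes involved in turning a frame sequence into a text-like conditioning sequence.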

Installation

- install PyTorch `2.1.0` with CUDA enabled by following the instructions at https://pytorch.org/get-started/previous-versions/
- install the fork of audiocraft (original repository: https://github.com/facebookresearch/audiocraft) that allows MusicGen to accept video input with `pip install git+https://github.com/IntelliNik/audiocraft.git@main`
- install the remaining dependencies with `pip install peft moviepy omegaconf`
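After installation, a quick sanity check can confirm that the environment matches these requirements. The following is a minimal sketch using only the Python standard library; it is not part of this repository, and it assumes the pip distribution names `torch`, `audiocraft`, `peft`, `moviepy` and `omegaconf` (in particular, that the fork installs under the name `audiocraft`). The helper names `check_environment` and `cuda_available` are hypothetical.

```python
import importlib.metadata
import importlib.util

# Assumed distribution names for the packages installed above.
REQUIRED = ("torch", "audiocraft", "peft", "moviepy", "omegaconf")


def check_environment(packages=REQUIRED):
    """Return a mapping from package name to installed version, or None if missing."""
    report = {}
    for name in packages:
        try:
            report[name] = importlib.metadata.version(name)
        except importlib.metadata.PackageNotFoundError:
            report[name] = None
    return report


def cuda_available():
    """Return True/False if PyTorch is installed, or None if it is not."""
    if importlib.util.find_spec("torch") is None:
        return None
    import torch
    return torch.cuda.is_available()


if __name__ == "__main__":
    report = check_environment()
    for name, version in report.items():
        print(f"{name:10s} {version or 'NOT INSTALLED'}")
    torch_version = report["torch"]
    # Version strings may carry a suffix such as "+cu118".
    if torch_version and not torch_version.startswith("2.1.0"):
        print(f"warning: expected PyTorch 2.1.0, found {torch_version}")
    print("CUDA available:", cuda_available())
```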

Model Usage

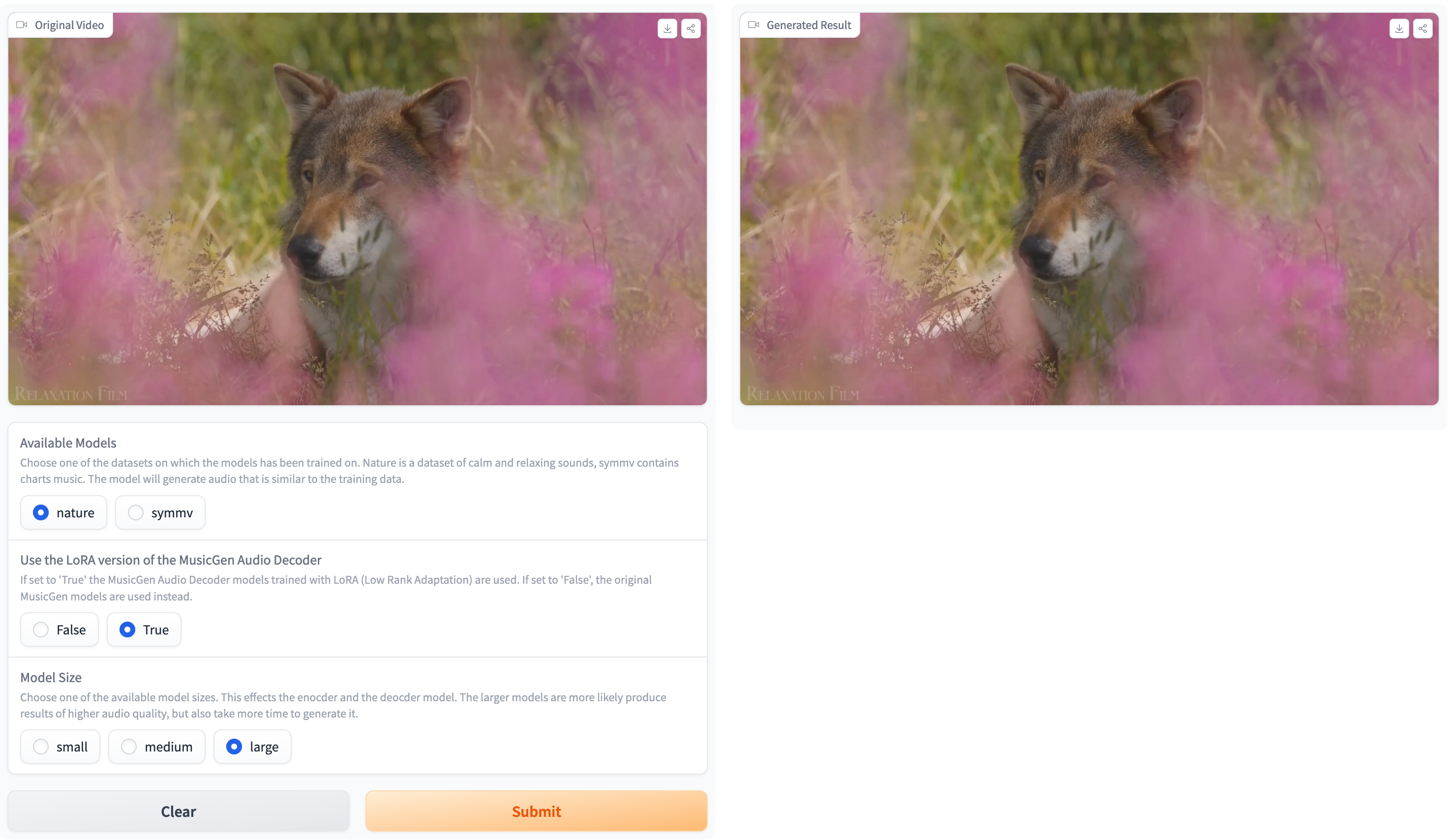

- to start the Gradio interface run

python app.py - select an example input video and start the generation by clicking "Submit"

Screenshot of the Gradio Interface

Limitations and Usage Advice

- not all models generate audible results, especially the smaller ones
- the best results in terms of audio quality are generated with the parameters `nature, peft=true, large`

Contact

For any questions, feel free to contact me at niklas.schulte@rwth-aachen.de