Commit 55ec5be

Parent(s): d863a32

Upload 14 files

- .chainlit/config.toml +72 -0

- .gitattributes +5 -0

- __pycache__/model.cpython-311.pyc +0 -0

- chainlit.md +8 -0

- conversession e.g/ChatBot Conversession img-1.png +0 -0

- conversession e.g/ChatBot Conversession img-2.png +0 -0

- conversession e.g/ChatBot Conversession img-3.pdf +3 -0

- conversession e.g/ChatBot Conversession img-3.png +3 -0

- conversession e.g/ChatBot Conversession vid.mp4 +3 -0

- data/71763-gale-encyclopedia-of-medicine.-vol.-1.-2nd-ed.pdf +3 -0

- ingest.py +23 -0

- model.py +98 -0

- requirements.txt +11 -0

- vectorstores/db_faiss/index.faiss +3 -0

- vectorstores/db_faiss/index.pkl +3 -0

.chainlit/config.toml

ADDED

@@ -0,0 +1,72 @@

+[project]
+# If true (default), the app will be available to anonymous users.
+# If false, users will need to authenticate and be part of the project to use the app.
+public = true
+
+# The project ID (found on https://cloud.chainlit.io).
+# The project ID is required when public is set to false or when using the cloud database.
+#id = ""
+
+# Uncomment if you want to persist the chats.
+# local will create a database in your .chainlit directory (requires node.js installed).
+# cloud will use the Chainlit cloud database.
+# custom will use your custom client.
+# database = "local"
+
+# Whether to enable telemetry (default: true). No personal data is collected.
+enable_telemetry = true
+
+# List of environment variables to be provided by each user to use the app.
+user_env = []
+
+# Duration (in seconds) during which the session is saved when the connection is lost
+session_timeout = 3600
+
+# Enable third parties caching (e.g. LangChain cache)
+cache = false
+
+# Follow symlink for asset mount (see https://github.com/Chainlit/chainlit/issues/317)
+# follow_symlink = false
+
+# Chainlit server address
+# chainlit_server = ""
+
+[UI]
+# Name of the app and chatbot.
+name = "Chatbot"
+
+# Description of the app and chatbot. This is used for HTML tags.
+# description = ""
+
+# The default value for the expand messages settings.
+default_expand_messages = false
+
+# Hide the chain of thought details from the user in the UI.
+hide_cot = false
+
+# Link to your github repo. This will add a github button in the UI's header.
+github = "https://github.com/ThisIs-Developer"
+
+# Override default MUI light theme. (Check theme.ts)
+[UI.theme.light]
+#background = "#FAFAFA"
+#paper = "#FFFFFF"
+
+[UI.theme.light.primary]
+#main = "#F80061"
+#dark = "#980039"
+#light = "#FFE7EB"
+
+# Override default MUI dark theme. (Check theme.ts)
+[UI.theme.dark]
+#background = "#FAFAFA"
+#paper = "#FFFFFF"
+
+[UI.theme.dark.primary]
+#main = "#F80061"
+#dark = "#980039"
+#light = "#FFE7EB"
+
+
+[meta]
+generated_by = "0.6.402"

.gitattributes

CHANGED

@@ -33,3 +33,8 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+conversession[[:space:]]e.g/ChatBot[[:space:]]Conversession[[:space:]]img-3.pdf filter=lfs diff=lfs merge=lfs -text
+conversession[[:space:]]e.g/ChatBot[[:space:]]Conversession[[:space:]]img-3.png filter=lfs diff=lfs merge=lfs -text
+conversession[[:space:]]e.g/ChatBot[[:space:]]Conversession[[:space:]]vid.mp4 filter=lfs diff=lfs merge=lfs -text
+data/71763-gale-encyclopedia-of-medicine.-vol.-1.-2nd-ed.pdf filter=lfs diff=lfs merge=lfs -text
+vectorstores/db_faiss/index.faiss filter=lfs diff=lfs merge=lfs -text
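
The `[[:space:]]` sequences in the added lines stand in for literal spaces in the file paths, because whitespace separates a `.gitattributes` pattern from its attributes. A minimal sketch of that escaping follows; the `lfs_attribute_line` helper is hypothetical and handles spaces only, so it is not a full reimplementation of what `git lfs track` does.

```python
# Attributes that route a path through Git LFS, as seen in the diff.
LFS_ATTRS = "filter=lfs diff=lfs merge=lfs -text"


def lfs_attribute_line(path: str) -> str:
    """Hypothetical helper: build one .gitattributes line for a path."""
    # A literal space would end the pattern early, so each space is
    # written as the character class [[:space:]] instead.
    pattern = path.replace(" ", "[[:space:]]")
    return f"{pattern} {LFS_ATTRS}"


line = lfs_attribute_line("conversession e.g/ChatBot Conversession vid.mp4")
print(line)
```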

__pycache__/model.cpython-311.pyc

ADDED

Binary file (4.52 kB).

chainlit.md

ADDED

@@ -0,0 +1,8 @@

+# Llama-2-GGML Medical Chatbot! 🚀🤖
+
+Llama-2-GGML Medical Chatbot is a medical chatbot that uses the **Llama-2-7B-Chat-GGML** model, a *large language model (LLM)*, together with text retrieved from the PDF **"The GALE ENCYCLOPEDIA of MEDICINE"**, a comprehensive medical reference that provides information on a wide range of medical topics.
+### The chatbot is still under development
+## Useful Links 🔗
+
+- **Model:** Learn more about the model: [Llama-2-7B-Chat-GGML](https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML) 📚
+- **GitHub:** Check out the repository [ThisIs-Developer/Llama-2-GGML-Medical-Chatbot](https://github.com/ThisIs-Developer/Llama-2-GGML-Medical-Chatbot). Feel free to contribute to the GitHub repo! 💬

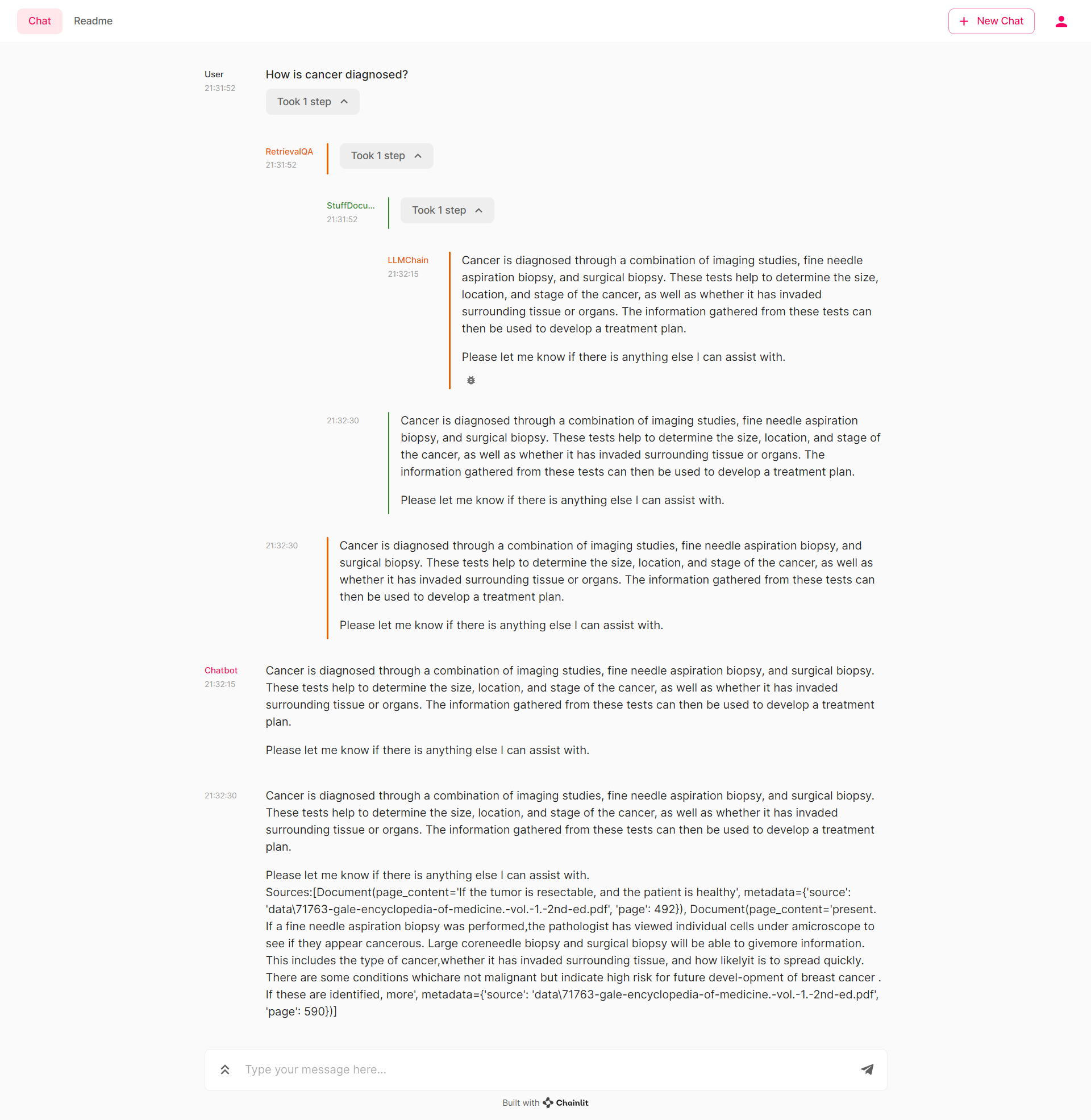

conversession e.g/ChatBot Conversession img-1.png

ADDED

|

conversession e.g/ChatBot Conversession img-2.png

ADDED

|

conversession e.g/ChatBot Conversession img-3.pdf

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:a1c678b11a684144d33f839c9a108455f47c1c9d0ab96f7bb86a454bbc11df9b
+size 10246322
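
The three added lines are a Git LFS pointer file: the repository stores this small text stub, while the binary itself lives in LFS storage. A minimal sketch of reading such a pointer follows; the `parse_lfs_pointer` helper and its returned field names are illustrative assumptions.

```python
# Pointer text copied from the diff for "ChatBot Conversession img-3.pdf".
POINTER_TEXT = """version https://git-lfs.github.com/spec/v1
oid sha256:a1c678b11a684144d33f839c9a108455f47c1c9d0ab96f7bb86a454bbc11df9b
size 10246322
"""


def parse_lfs_pointer(text: str) -> dict:
    """Hypothetical helper: split a pointer file into its key/value fields."""
    fields = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        # Each line is "<key> <value>", separated by the first space.
        key, _, value = line.partition(" ")
        fields[key] = value
    algorithm, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algorithm": algorithm,
        "digest": digest,
        "size_bytes": int(fields["size"]),
    }


pointer = parse_lfs_pointer(POINTER_TEXT)
print(pointer["hash_algorithm"], pointer["size_bytes"])
```

The `size` field is the byte count of the real file, which is why a roughly 10 MB PDF shows up in the diff as only `+3 -0`.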

conversession e.g/ChatBot Conversession img-3.png

ADDED

|

Git LFS Details

|

conversession e.g/ChatBot Conversession vid.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:23cd2ec7b5e1665a21a1020c2a9ef4f380266407845e39909665725fd4ab0536
+size 4579961

data/71763-gale-encyclopedia-of-medicine.-vol.-1.-2nd-ed.pdf

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:753cd53b7a3020bbd91f05629b0e3ddcfb6a114d7bbedb22c2298b66f5dd00cc
+size 16127037

ingest.py

ADDED

@@ -0,0 +1,23 @@

+from langchain.text_splitter import RecursiveCharacterTextSplitter
+from langchain.document_loaders import PyPDFLoader, DirectoryLoader
+from langchain.embeddings import HuggingFaceEmbeddings
+from langchain.vectorstores import FAISS
+
+
+DATA_PATH = "data/"
+DB_FAISS_PATH = "vectorstores/db_faiss"
+
+def create_vector_db():
+    loader = DirectoryLoader(DATA_PATH, glob='*.pdf', loader_cls=PyPDFLoader)
+    documents = loader.load()
+    text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=50)
+    texts = text_splitter.split_documents(documents)
+
+    embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2",
+                                       model_kwargs={'device': 'cpu'})
+
+    db = FAISS.from_documents(texts, embeddings)
+    db.save_local(DB_FAISS_PATH)
+
+if __name__ == "__main__":
+    create_vector_db()
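
To illustrate what `chunk_size=500` and `chunk_overlap=50` mean in `ingest.py`, here is a simplified sliding-window sketch in plain Python. It is an assumption-laden stand-in: `RecursiveCharacterTextSplitter` prefers to split on separators such as paragraph and line breaks, whereas the hypothetical `sliding_chunks` function below cuts at fixed character offsets.

```python
def sliding_chunks(text: str, chunk_size: int = 500, chunk_overlap: int = 50) -> list[str]:
    """Hypothetical helper: fixed-width chunks that share `chunk_overlap` characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    # Each new chunk starts this many characters after the previous one.
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a chunk has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


# 1200 characters of sample text (digits 0-9 repeated).
sample = "".join(str(i % 10) for i in range(1200))
chunks = sliding_chunks(sample)
print([len(chunk) for chunk in chunks])
```

The overlap means the last 50 characters of one chunk reappear at the start of the next, which reduces the chance that a sentence relevant to a query is cut in half at a chunk boundary.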

model.py

ADDED

@@ -0,0 +1,98 @@

+import asyncio
+from langchain.document_loaders import PyPDFLoader, DirectoryLoader
+from langchain import PromptTemplate
+from langchain.embeddings import HuggingFaceEmbeddings
+from langchain.vectorstores import FAISS
+from langchain.llms import CTransformers
+from langchain.chains import RetrievalQA
+import chainlit as cl
+
+DB_FAISS_PATH = 'vectorstores/db_faiss'
+
+custom_prompt_template = """Use the following pieces of information to answer the user's question.
+If you don't know the answer, just say that you don't know, don't try to make up an answer.
+
+Context: {context}
+Question: {question}
+
+Only return the helpful answer below and nothing else.
+Helpful answer:
+"""
+
+def set_custom_prompt():
+    """
+    Prompt template for QA retrieval for each vectorstore
+    """
+    prompt = PromptTemplate(template=custom_prompt_template,
+                            input_variables=['context', 'question'])
+    return prompt
+
+# Retrieval QA Chain
+def retrieval_qa_chain(llm, prompt, db):
+    qa_chain = RetrievalQA.from_chain_type(llm=llm,
+                                           chain_type='stuff',
+                                           retriever=db.as_retriever(search_kwargs={'k': 2}),
+                                           return_source_documents=True,
+                                           chain_type_kwargs={'prompt': prompt}
+                                           )
+    return qa_chain
+
+# Loading the model
+def load_llm():
+    # Load the locally downloaded model here
+    llm = CTransformers(
+        model="TheBloke/Llama-2-7B-Chat-GGML",
+        model_type="llama",
+        max_new_tokens=512,
+        temperature=0.5
+    )
+    return llm
+
+# QA Model Function
+async def qa_bot():
+    embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2",
+                                       model_kwargs={'device': 'cpu'})
+    db = FAISS.load_local(DB_FAISS_PATH, embeddings)
+    llm = load_llm()
+    qa_prompt = set_custom_prompt()
+    qa = retrieval_qa_chain(llm, qa_prompt, db)
+
+    return qa
+
+# Output function
+async def final_result(query):
+    qa_result = await qa_bot()
+    response = await qa_result.acall({'query': query})
+    return response
+
+# chainlit code
+@cl.on_chat_start
+async def start():
+    chain = await qa_bot()
+    # msg = cl.Message(content="Starting the bot...")
+    # await msg.send()
+    # msg.content = "Hi, Welcome to Medical Bot. What is your query?"
+    # await msg.update()
+
+    cl.user_session.set("chain", chain)
+
+@cl.on_message
+async def main(message):
+    chain = cl.user_session.get("chain")
+    cb = cl.AsyncLangchainCallbackHandler(
+        stream_final_answer=True, answer_prefix_tokens=["FINAL", "ANSWER"]
+    )
+    cb.answer_reached = True
+    res = await chain.acall(message, callbacks=[cb])
+    answer = res["result"]
+    sources = res["source_documents"]
+
+    if sources:
+        answer += "\nSources:" + str(sources)
+    else:
+        answer += "\nNo sources found"
+
+    await cl.Message(content=answer).send()
+
+if __name__ == "__main__":
+    asyncio.run(cl.main())
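
The `chain_type='stuff'` setting in `model.py` means the retrieved chunks are placed directly into the `{context}` slot of the prompt. The sketch below shows that assembly in plain Python, without LangChain. The `build_prompt` helper, the sample chunks, and the blank-line separator between chunks are assumptions made for illustration.

```python
# Same template text as custom_prompt_template in model.py.
PROMPT_TEMPLATE = """Use the following pieces of information to answer the user's question.
If you don't know the answer, just say that you don't know, don't try to make up an answer.

Context: {context}
Question: {question}

Only return the helpful answer below and nothing else.
Helpful answer:
"""


def build_prompt(question: str, retrieved_chunks: list[str], k: int = 2) -> str:
    """Hypothetical helper: fill the template with the top-k retrieved chunks."""
    # Keep only the k best-ranked chunks, mirroring search_kwargs={'k': 2}.
    context = "\n\n".join(retrieved_chunks[:k])
    return PROMPT_TEMPLATE.format(context=context, question=question)


# Made-up chunks, assumed to be already ranked by similarity to the question.
ranked_chunks = [
    "Chunk A about fever.",
    "Chunk B about aspirin.",
    "Chunk C (dropped because k=2).",
]
prompt = build_prompt("What is a fever?", ranked_chunks)
print(prompt)
```

With 500-character chunks and `k=2`, the context stays small, which suits a 7B model with a limited context window, at the cost of sometimes missing relevant passages.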

requirements.txt

ADDED

@@ -0,0 +1,11 @@

+pypdf==3.15.5
+accelerate==0.22.0
+bitsandbytes==0.41.1
+chainlit==0.6.402
+ctransformers==0.2.26
+faiss-cpu==1.7.4
+huggingface-hub==0.16.4
+langchain==0.0.281
+sentence-transformers==2.2.2
+torch==2.0.1
+transformers==4.33.0
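
Every dependency is pinned to an exact version, and the `chainlit` pin matches the `generated_by = "0.6.402"` value recorded in `.chainlit/config.toml`. A small sketch of checking that relationship follows; the `parse_pins` helper is hypothetical and only understands simple `name==version` lines.

```python
# Contents of requirements.txt as added in this commit.
REQUIREMENTS_TEXT = """pypdf==3.15.5
accelerate==0.22.0
bitsandbytes==0.41.1
chainlit==0.6.402
ctransformers==0.2.26
faiss-cpu==1.7.4
huggingface-hub==0.16.4
langchain==0.0.281
sentence-transformers==2.2.2
torch==2.0.1
transformers==4.33.0
"""

# Value of [meta].generated_by in .chainlit/config.toml.
GENERATED_BY = "0.6.402"


def parse_pins(text: str) -> dict[str, str]:
    """Hypothetical helper: map each package name to its pinned version."""
    pins = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, separator, version = line.partition("==")
        if separator:  # skip lines that are not exact pins
            pins[name] = version
    return pins


pins = parse_pins(REQUIREMENTS_TEXT)
config_matches_pin = pins["chainlit"] == GENERATED_BY
print(len(pins), config_matches_pin)
```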

vectorstores/db_faiss/index.faiss

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:41b1dd53e3fc2abc2535c8c24111b40ede2386c32a1604eaec17f3232646e7ee
+size 10983981

vectorstores/db_faiss/index.pkl

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4007c732db0ecbd2a226c55a6f83f1bb9bf8d899079a2e52b971f8da3d78cea5
+size 3567746