Add checkpoint

Browse files- README.md +4 -4

- all_results.json +9 -9

- eval_results.json +4 -4

- model-00001-of-00004.safetensors +1 -1

- model-00002-of-00004.safetensors +1 -1

- model-00003-of-00004.safetensors +1 -1

- model-00004-of-00004.safetensors +1 -1

- runs/Nov02_11-36-19_n124-167-248/events.out.tfevents.1730547445.n124-167-248.2750283.0 +3 -0

- runs/Nov02_11-36-19_n124-167-248/events.out.tfevents.1730588909.n124-167-248.2750283.1 +3 -0

- train_results.json +5 -5

- trainer_log.jsonl +0 -0

- trainer_state.json +0 -0

- training_args.bin +1 -1

- training_eval_loss.png +0 -0

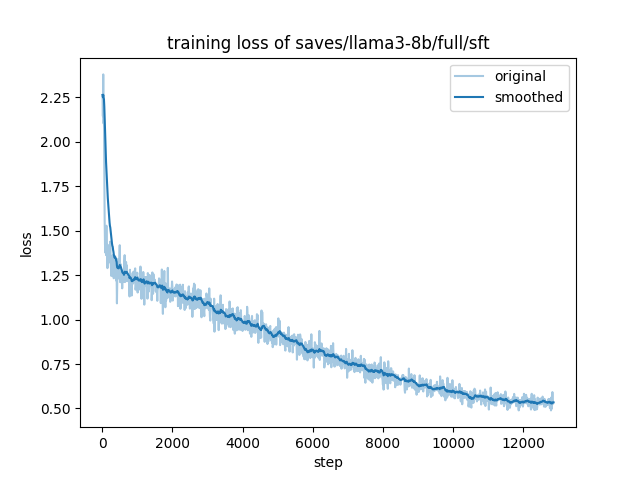

- training_loss.png +0 -0

README.md

CHANGED

|

@@ -13,12 +13,12 @@ model-index:

|

|

| 13 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

|

| 14 |

should probably proofread and complete it, then remove this comment. -->

|

| 15 |

|

| 16 |

-

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="200" height="32"/>](https://wandb.ai/sierkinhane/huggingface/runs/

|

| 17 |

# sft

|

| 18 |

|

| 19 |

This model is a fine-tuned version of [meta-llama/Meta-Llama-3-8B-Instruct](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct) on the storyboard20k dataset.

|

| 20 |

It achieves the following results on the evaluation set:

|

| 21 |

-

- Loss: 0.

|

| 22 |

|

| 23 |

## Model description

|

| 24 |

|

|

@@ -55,12 +55,12 @@ The following hyperparameters were used during training:

|

|

| 55 |

|

| 56 |

| Training Loss | Epoch | Step | Validation Loss |

|

| 57 |

|:-------------:|:------:|:-----:|:---------------:|

|

| 58 |

-

| 0.

|

| 59 |

|

| 60 |

|

| 61 |

### Framework versions

|

| 62 |

|

| 63 |

- Transformers 4.43.2

|

| 64 |

-

- Pytorch 2.3.

|

| 65 |

- Datasets 2.16.0

|

| 66 |

- Tokenizers 0.19.1

|

|

|

|

| 13 |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

|

| 14 |

should probably proofread and complete it, then remove this comment. -->

|

| 15 |

|

| 16 |

+

[<img src="https://raw.githubusercontent.com/wandb/assets/main/wandb-github-badge-28.svg" alt="Visualize in Weights & Biases" width="200" height="32"/>](https://wandb.ai/sierkinhane/huggingface/runs/06jvgsys)

|

| 17 |

# sft

|

| 18 |

|

| 19 |

This model is a fine-tuned version of [meta-llama/Meta-Llama-3-8B-Instruct](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct) on the storyboard20k dataset.

|

| 20 |

It achieves the following results on the evaluation set:

|

| 21 |

+

- Loss: 0.4572

|

| 22 |

|

| 23 |

## Model description

|

| 24 |

|

|

|

|

| 55 |

|

| 56 |

| Training Loss | Epoch | Step | Validation Loss |

|

| 57 |

|:-------------:|:------:|:-----:|:---------------:|

|

| 58 |

+

| 0.4899 | 0.7771 | 10000 | 0.5172 |

|

| 59 |

|

| 60 |

|

| 61 |

### Framework versions

|

| 62 |

|

| 63 |

- Transformers 4.43.2

|

| 64 |

+

- Pytorch 2.3.1+cu121

|

| 65 |

- Datasets 2.16.0

|

| 66 |

- Tokenizers 0.19.1

|

all_results.json

CHANGED

|

@@ -1,12 +1,12 @@

|

|

| 1 |

{

|

| 2 |

"epoch": 1.0,

|

| 3 |

-

"eval_loss": 0.

|

| 4 |

-

"eval_runtime":

|

| 5 |

-

"eval_samples_per_second":

|

| 6 |

-

"eval_steps_per_second":

|

| 7 |

-

"total_flos":

|

| 8 |

-

"train_loss": 0.

|

| 9 |

-

"train_runtime":

|

| 10 |

-

"train_samples_per_second": 5.

|

| 11 |

-

"train_steps_per_second": 0.

|

| 12 |

}

|

|

|

|

| 1 |

{

|

| 2 |

"epoch": 1.0,

|

| 3 |

+

"eval_loss": 0.4571681022644043,

|

| 4 |

+

"eval_runtime": 470.7912,

|

| 5 |

+

"eval_samples_per_second": 23.019,

|

| 6 |

+

"eval_steps_per_second": 2.878,

|

| 7 |

+

"total_flos": 626183508787200.0,

|

| 8 |

+

"train_loss": 0.7687553066651168,

|

| 9 |

+

"train_runtime": 40960.0959,

|

| 10 |

+

"train_samples_per_second": 5.027,

|

| 11 |

+

"train_steps_per_second": 0.314

|

| 12 |

}

|

eval_results.json

CHANGED

|

@@ -1,7 +1,7 @@

|

|

| 1 |

{

|

| 2 |

"epoch": 1.0,

|

| 3 |

-

"eval_loss": 0.

|

| 4 |

-

"eval_runtime":

|

| 5 |

-

"eval_samples_per_second":

|

| 6 |

-

"eval_steps_per_second":

|

| 7 |

}

|

|

|

|

| 1 |

{

|

| 2 |

"epoch": 1.0,

|

| 3 |

+

"eval_loss": 0.4571681022644043,

|

| 4 |

+

"eval_runtime": 470.7912,

|

| 5 |

+

"eval_samples_per_second": 23.019,

|

| 6 |

+

"eval_steps_per_second": 2.878

|

| 7 |

}

|

model-00001-of-00004.safetensors

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

size 4976698672

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:f538567582c2690a4c8cc515e8bb3a5314a37694b85b5cdce909434f6b399c6d

|

| 3 |

size 4976698672

|

model-00002-of-00004.safetensors

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

size 4999802720

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:004d9467dc9a25dc2a3bf0462f6721a302a0de9b1d6eab3c7695e7ea6fdd1ae9

|

| 3 |

size 4999802720

|

model-00003-of-00004.safetensors

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

size 4915916176

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:2c618bd643771be4b19406ea5d63c729d9a84213bfedcae5f494a92182e6c4a1

|

| 3 |

size 4915916176

|

model-00004-of-00004.safetensors

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

size 1168138808

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:ad1e546d9d59f8d602e393f3915006419d4d61b5cc366c8368a0422ea8421fd8

|

| 3 |

size 1168138808

|

runs/Nov02_11-36-19_n124-167-248/events.out.tfevents.1730547445.n124-167-248.2750283.0

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:0910539d6fe4ad9b7351bdfb000b3ae136e02fe858d157cd1499ceef8bfa19d6

|

| 3 |

+

size 277095

|

runs/Nov02_11-36-19_n124-167-248/events.out.tfevents.1730588909.n124-167-248.2750283.1

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:c1f04e47d9cb2071533f28bdcf8fb25caa78a34fb3a732a68dea6485f194f2c9

|

| 3 |

+

size 311

|

train_results.json

CHANGED

|

@@ -1,8 +1,8 @@

|

|

| 1 |

{

|

| 2 |

"epoch": 1.0,

|

| 3 |

-

"total_flos":

|

| 4 |

-

"train_loss": 0.

|

| 5 |

-

"train_runtime":

|

| 6 |

-

"train_samples_per_second": 5.

|

| 7 |

-

"train_steps_per_second": 0.

|

| 8 |

}

|

|

|

|

| 1 |

{

|

| 2 |

"epoch": 1.0,

|

| 3 |

+

"total_flos": 626183508787200.0,

|

| 4 |

+

"train_loss": 0.7687553066651168,

|

| 5 |

+

"train_runtime": 40960.0959,

|

| 6 |

+

"train_samples_per_second": 5.027,

|

| 7 |

+

"train_steps_per_second": 0.314

|

| 8 |

}

|

trainer_log.jsonl

CHANGED

|

The diff for this file is too large to render.

See raw diff

|

|

|

trainer_state.json

CHANGED

|

The diff for this file is too large to render.

See raw diff

|

|

|

training_args.bin

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

size 7032

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:0a34e349a8d6fadfd31677dbafeefa4b8df29285519f825d66b67b02acbfca55

|

| 3 |

size 7032

|

training_eval_loss.png

CHANGED

|

|

training_loss.png

CHANGED

|

|