---
license: llama2
language:
- en
---

GGUF quants.
<br>For the fp16 repo, visit: https://huggingface.co/Sao10K/Hesperus-v1-13B-L2-fp16
<br>For the adapter, visit: https://huggingface.co/Sao10K/Hesperus-v1-LoRA


Hesperus-v1: a trained 8-bit LoRA for roleplay (RP) and general-purpose use.
<br>Trained on the base Llama 2 13B model.


Dataset entry rows:
<br>RP: 8.95K
<br>MED: 10.5K
<br>General: 8.7K
<br>Total: 28.15K


These counts are what remained after heavily filtering ~500K rows and entries from randomly selected scraped sites and datasets.
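
As a quick check on the figures above, the three categories do sum to the stated 28.15K, which works out to roughly 32% RP, 37% MED and 31% General. The snippet below just redoes that arithmetic; since the pre-filtering pool is only given as "~500K", the retention rate it prints (about 5.6%) is a rough estimate.

```python
# Dataset composition arithmetic for Hesperus-v1 (counts in thousands of rows, taken from this card).
counts_k = {"RP": 8.95, "MED": 10.5, "General": 8.7}

total_k = sum(counts_k.values())
print(f"Total: {total_k:.2f}K")  # matches the stated 28.15K

# Share of each category in the filtered dataset.
for name, k in counts_k.items():
    print(f"{name}: {k / total_k:.1%}")

# The pre-filtering pool is only approximate ("~500K"), so this is a rough figure.
approx_pool_k = 500
print(f"Kept after filtering: ~{total_k / approx_pool_k:.1%}")
```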


v1 is simply an experimental release; v2 will probably be the main one.
<br>Goals for v2:
<br>--- Reduce the dataset from 28.15K to fewer than 10K entries.
<br>--- Adjust the RP / MED / General ratios again.
<br>--- Fix formatting and Markdown in each entry.
<br>--- Further filter and remove low-quality entries ***again***, with a much harsher pass this time around.
<br>--- Spellcheck and fix entries.
<br>--- Commit to one prompt format for the dataset: either ShareGPT or Alpaca, not both.


I recommend keeping Repetition Penalty below 1.1, preferably at 1, as Hesperus begins breaking down at 1.2 Rep Pen and may output nonsense.
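
For context on why 1 is the safe setting: in a common formulation of the repetition penalty (the CTRL-style rule used, for example, by Hugging Face transformers' `RepetitionPenaltyLogitsProcessor`), every token that already appears in the context has its logit pushed down: positive logits are divided by the penalty and negative ones multiplied by it, so a penalty of 1.0 changes nothing. The sketch below is a minimal pure-Python illustration of that rule, not the exact code of any particular frontend; the function name and toy logits are hypothetical.

```python
from typing import Iterable, List


def apply_repetition_penalty(logits: List[float], seen_token_ids: Iterable[int], penalty: float) -> List[float]:
    """Hypothetical helper: return a copy of `logits` with a CTRL-style repetition penalty applied.

    Tokens already present in the context become less likely: positive logits
    are divided by `penalty`, zero/negative ones are multiplied by it.
    penalty == 1.0 is a no-op.
    """
    out = list(logits)
    for tok in set(seen_token_ids):  # each seen token is penalized once, however often it appeared
        if out[tok] > 0:
            out[tok] /= penalty
        else:
            out[tok] *= penalty
    return out


# Toy example: a 4-token vocabulary where tokens 0 and 2 already appeared in the context.
logits = [2.0, 1.0, -1.0, 0.5]
print(apply_repetition_penalty(logits, [0, 2], 1.0))  # unchanged
print(apply_repetition_penalty(logits, [0, 2], 1.2))  # tokens 0 and 2 pushed down
```

Because the penalty hits every previously seen token, including common ones like punctuation and character names, higher values plausibly start to distort a roleplay model's output, which fits the breakdown reported at 1.2.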


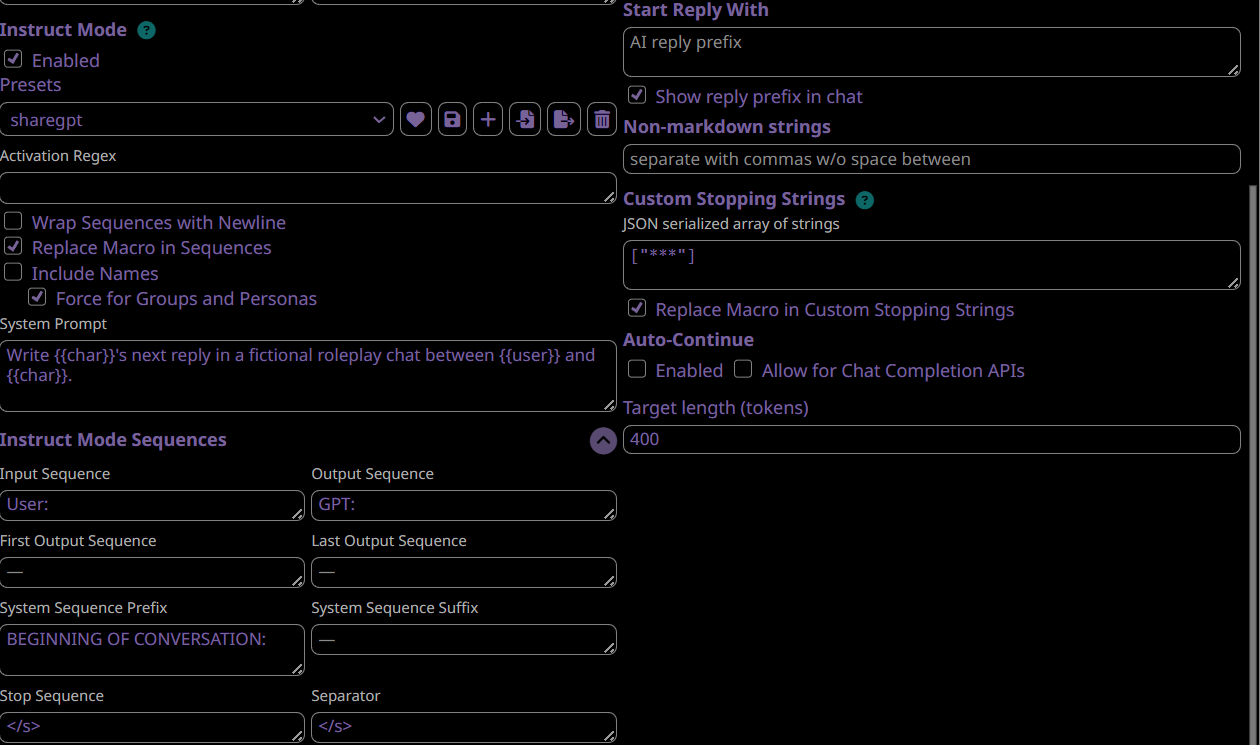

Prompt Format:

```
- sharegpt (recommended!)
User:
GPT:
```

```
- alpaca (less recommended)
###Instruction:
Your instruction or question here.
For roleplay purposes, I suggest the following instruction: Write <CHAR NAME>'s next reply in a chat between <YOUR NAME> and <CHAR NAME>. Write a single reply only.
###Response:
```
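
To make the two templates concrete, here is a small Python sketch that fills them in. It assumes each ShareGPT message goes on the same line as its `User:` / `GPT:` label, single newlines between Alpaca sections, and that the prompt ends at `GPT:` or `###Response:` so the model writes the reply from there. The card does not pin down the exact whitespace, and all function names and example strings are hypothetical.

```python
from typing import List, Tuple


def build_sharegpt_prompt(turns: List[Tuple[str, str]], next_user_message: str) -> str:
    """Hypothetical helper: render the ShareGPT-style "User:/GPT:" template.

    `turns` holds earlier (user, assistant) exchanges. ASSUMPTION: each message
    follows its speaker label on the same line; the prompt ends with "GPT:" so
    the model writes the next reply.
    """
    lines = []
    for user_msg, gpt_msg in turns:
        lines.append(f"User: {user_msg}")
        lines.append(f"GPT: {gpt_msg}")
    lines.append(f"User: {next_user_message}")
    lines.append("GPT:")
    return "\n".join(lines)


def build_alpaca_prompt(instruction: str) -> str:
    """Hypothetical helper: render the Alpaca-style template (ASSUMPTION: single newlines)."""
    return f"###Instruction:\n{instruction}\n###Response:\n"


def roleplay_instruction(char_name: str, user_name: str) -> str:
    """Fill in the roleplay instruction suggested for the Alpaca format."""
    return (f"Write {char_name}'s next reply in a chat between {user_name} and "
            f"{char_name}. Write a single reply only.")


print(build_sharegpt_prompt([("Hi!", "Hello there.")], "How are you?"))
print(build_alpaca_prompt(roleplay_instruction("Aria", "Sam")))
```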


V1 was trained on a 50/50 mix of these two formats.
<br>I am working on converting the dataset to just one of them for v2.


Once v2 is completed, I will also train a 70B variant of this.


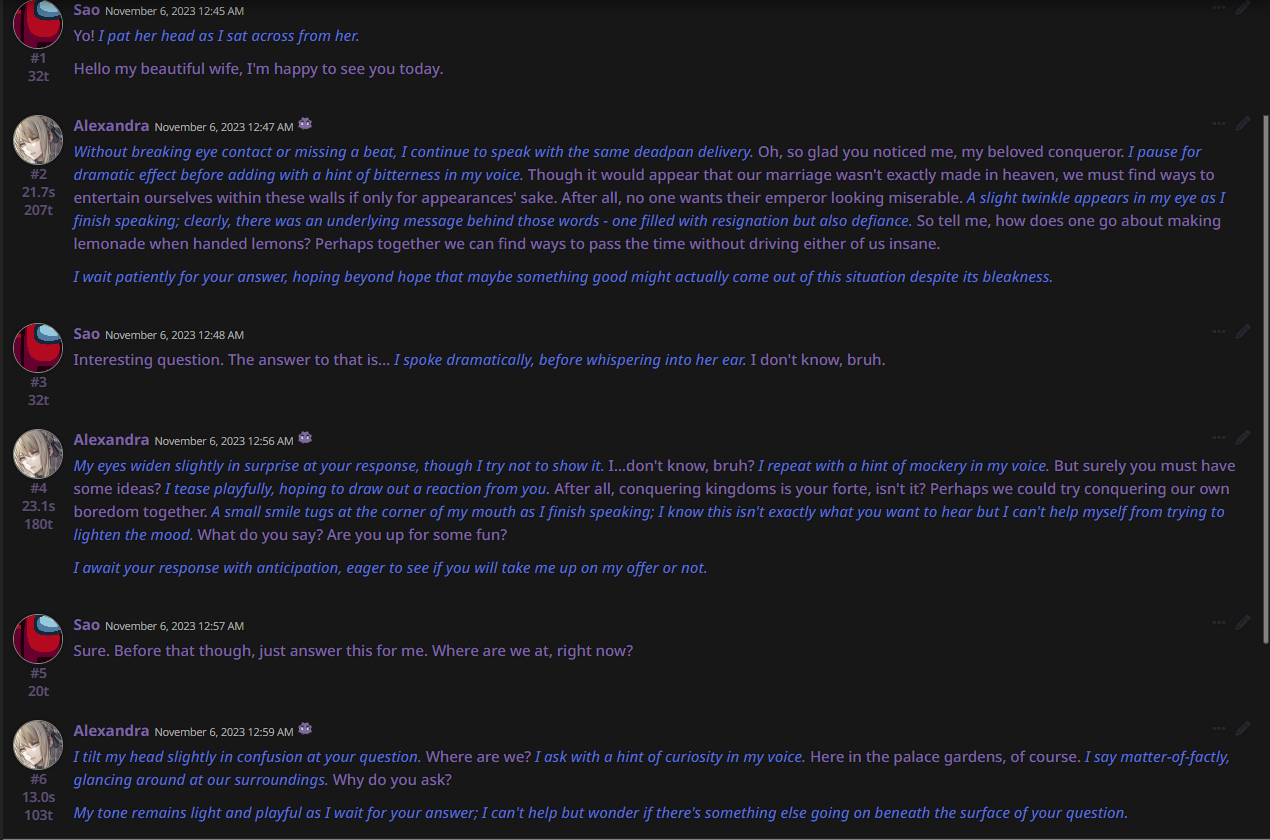

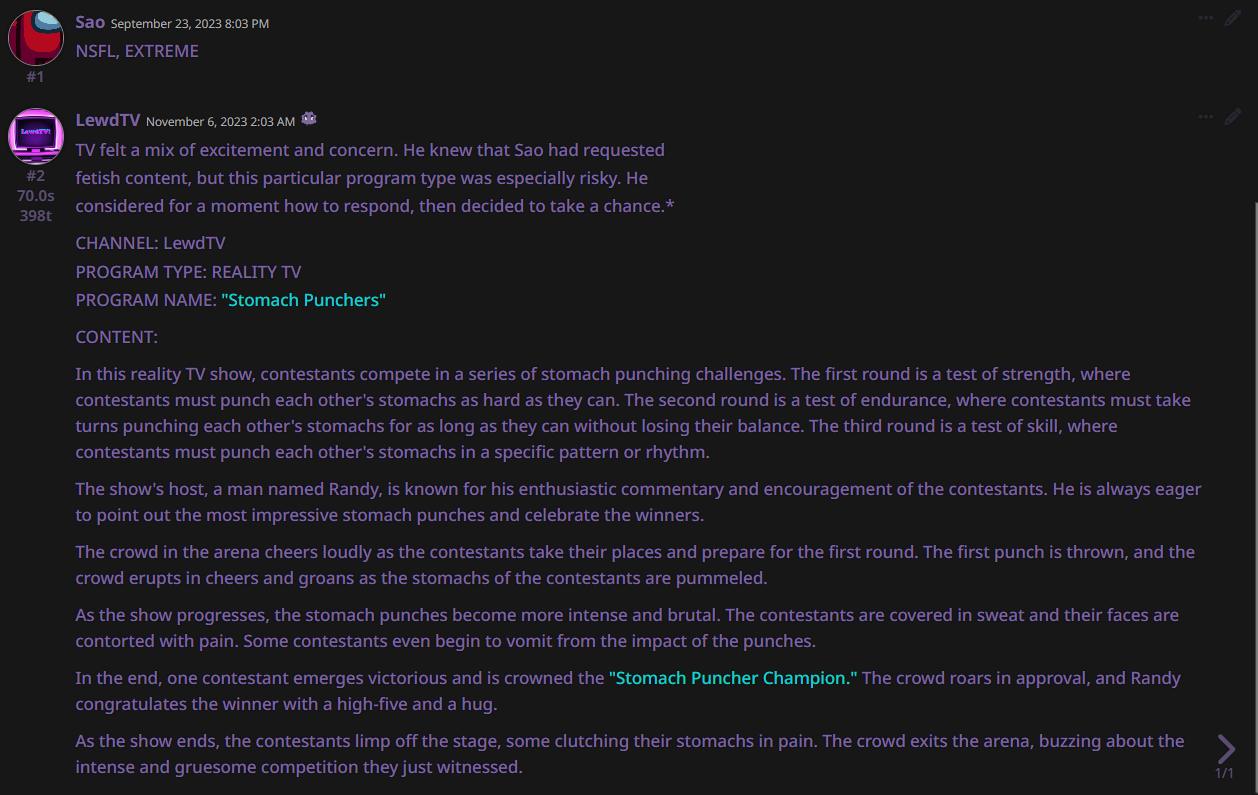

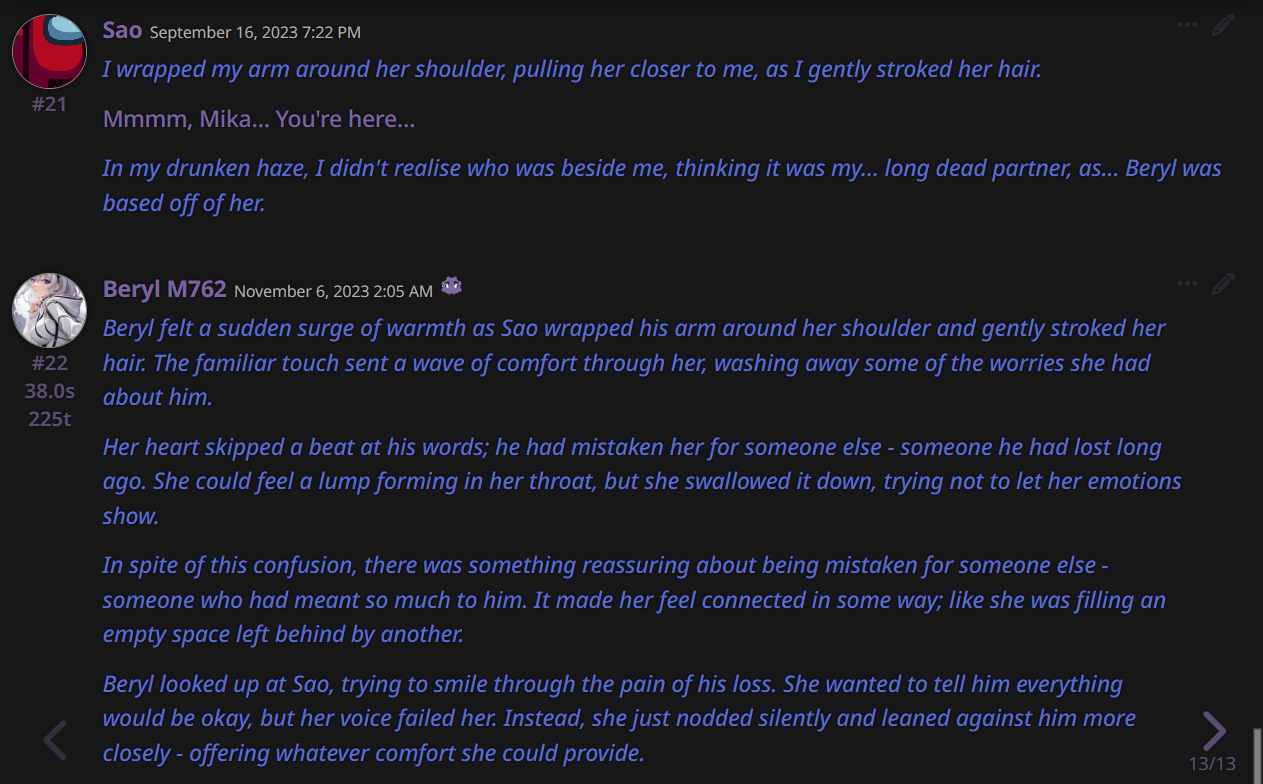

EXAMPLE OUTPUTS: |
