SamMorgan committed commit 20e841b (1 parent: 7f7b618)

Adding more yolov4-tflite files

- CODE_OF_CONDUCT.md +76 -0

- LICENSE +21 -0

- README.md +181 -0

- benchmarks.py +134 -0

- convert_tflite.py +80 -0

- convert_trt.py +104 -0

- core/__pycache__/backbone.cpython-37.pyc +0 -0

- core/__pycache__/common.cpython-37.pyc +0 -0

- core/__pycache__/config.cpython-37.pyc +0 -0

- core/__pycache__/utils.cpython-37.pyc +0 -0

- core/__pycache__/yolov4.cpython-37.pyc +0 -0

- core/backbone.py +167 -0

- core/common.py +67 -0

- core/config.py +53 -0

- core/dataset.py +382 -0

- core/utils.py +375 -0

- core/yolov4.py +367 -0

- data/anchors/basline_anchors.txt +1 -0

- data/anchors/basline_tiny_anchors.txt +1 -0

- data/anchors/yolov3_anchors.txt +1 -0

- data/anchors/yolov4_anchors.txt +1 -0

- data/classes/coco.names +80 -0

- data/classes/voc.names +20 -0

- data/classes/yymnist.names +10 -0

- data/dataset/val2014.txt +0 -0

- data/dataset/val2017.txt +0 -0

- data/girl.png +0 -0

- data/kite.jpg +0 -0

- data/performance.png +0 -0

- data/road.mp4 +0 -0

- detect.py +92 -0

- detectvideo.py +127 -0

- evaluate.py +143 -0

- mAP/extra/intersect-gt-and-pred.py +60 -0

- mAP/extra/remove_space.py +96 -0

- mAP/main.py +775 -0

- requirements-gpu.txt +8 -0

- requirements.txt +8 -0

- result.png +0 -0

- save_model.py +60 -0

- train.py +162 -0

CODE_OF_CONDUCT.md

ADDED

@@ -0,0 +1,76 @@

+# Contributor Covenant Code of Conduct
+
+## Our Pledge
+
+In the interest of fostering an open and welcoming environment, we as
+contributors and maintainers pledge to making participation in our project and
+our community a harassment-free experience for everyone, regardless of age, body
+size, disability, ethnicity, sex characteristics, gender identity and expression,
+level of experience, education, socio-economic status, nationality, personal
+appearance, race, religion, or sexual identity and orientation.
+
+## Our Standards
+
+Examples of behavior that contributes to creating a positive environment
+include:
+
+* Using welcoming and inclusive language
+* Being respectful of differing viewpoints and experiences
+* Gracefully accepting constructive criticism
+* Focusing on what is best for the community
+* Showing empathy towards other community members
+
+Examples of unacceptable behavior by participants include:
+
+* The use of sexualized language or imagery and unwelcome sexual attention or
+ advances
+* Trolling, insulting/derogatory comments, and personal or political attacks
+* Public or private harassment
+* Publishing others' private information, such as a physical or electronic
+ address, without explicit permission
+* Other conduct which could reasonably be considered inappropriate in a
+ professional setting
+
+## Our Responsibilities
+
+Project maintainers are responsible for clarifying the standards of acceptable
+behavior and are expected to take appropriate and fair corrective action in
+response to any instances of unacceptable behavior.
+
+Project maintainers have the right and responsibility to remove, edit, or
+reject comments, commits, code, wiki edits, issues, and other contributions
+that are not aligned to this Code of Conduct, or to ban temporarily or
+permanently any contributor for other behaviors that they deem inappropriate,
+threatening, offensive, or harmful.
+
+## Scope
+
+This Code of Conduct applies both within project spaces and in public spaces
+when an individual is representing the project or its community. Examples of
+representing a project or community include using an official project e-mail
+address, posting via an official social media account, or acting as an appointed
+representative at an online or offline event. Representation of a project may be
+further defined and clarified by project maintainers.
+
+## Enforcement
+
+Instances of abusive, harassing, or otherwise unacceptable behavior may be
+reported by contacting the project team at hunglc007@gmail.com. All
+complaints will be reviewed and investigated and will result in a response that
+is deemed necessary and appropriate to the circumstances. The project team is
+obligated to maintain confidentiality with regard to the reporter of an incident.
+Further details of specific enforcement policies may be posted separately.
+
+Project maintainers who do not follow or enforce the Code of Conduct in good
+faith may face temporary or permanent repercussions as determined by other
+members of the project's leadership.
+
+## Attribution
+
+This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4,
+available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html
+
+[homepage]: https://www.contributor-covenant.org
+
+For answers to common questions about this code of conduct, see
+https://www.contributor-covenant.org/faq

LICENSE

ADDED

@@ -0,0 +1,21 @@

+MIT License
+
+Copyright (c) 2020 Việt Hùng
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.

README.md

ADDED

@@ -0,0 +1,181 @@

| 1 |

+

# tensorflow-yolov4-tflite

|

| 2 |

+

[](LICENSE)

|

| 3 |

+

|

| 4 |

+

YOLOv4, YOLOv4-tiny Implemented in Tensorflow 2.0.

|

| 5 |

+

Convert YOLO v4, YOLOv3, YOLO tiny .weights to .pb, .tflite and trt format for tensorflow, tensorflow lite, tensorRT.

|

| 6 |

+

|

| 7 |

+

Download yolov4.weights file: https://drive.google.com/open?id=1cewMfusmPjYWbrnuJRuKhPMwRe_b9PaT

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

### Prerequisites

|

| 11 |

+

* Tensorflow 2.3.0rc0

|

| 12 |

+

|

| 13 |

+

### Performance

|

| 14 |

+

<p align="center"><img src="data/performance.png" width="640"\></p>

|

| 15 |

+

|

| 16 |

+

### Demo

|

| 17 |

+

|

| 18 |

+

```bash

|

| 19 |

+

# Convert darknet weights to tensorflow

|

| 20 |

+

## yolov4

|

| 21 |

+

python save_model.py --weights ./data/yolov4.weights --output ./checkpoints/yolov4-416 --input_size 416 --model yolov4

|

| 22 |

+

|

| 23 |

+

## yolov4-tiny

|

| 24 |

+

python save_model.py --weights ./data/yolov4-tiny.weights --output ./checkpoints/yolov4-tiny-416 --input_size 416 --model yolov4 --tiny

|

| 25 |

+

|

| 26 |

+

# Run demo tensorflow

|

| 27 |

+

python detect.py --weights ./checkpoints/yolov4-416 --size 416 --model yolov4 --image ./data/kite.jpg

|

| 28 |

+

|

| 29 |

+

python detect.py --weights ./checkpoints/yolov4-tiny-416 --size 416 --model yolov4 --image ./data/kite.jpg --tiny

|

| 30 |

+

|

| 31 |

+

```

|

| 32 |

+

If you want to run yolov3 or yolov3-tiny change ``--model yolov3`` in command

|

| 33 |

+

|

| 34 |

+

#### Output

|

| 35 |

+

|

| 36 |

+

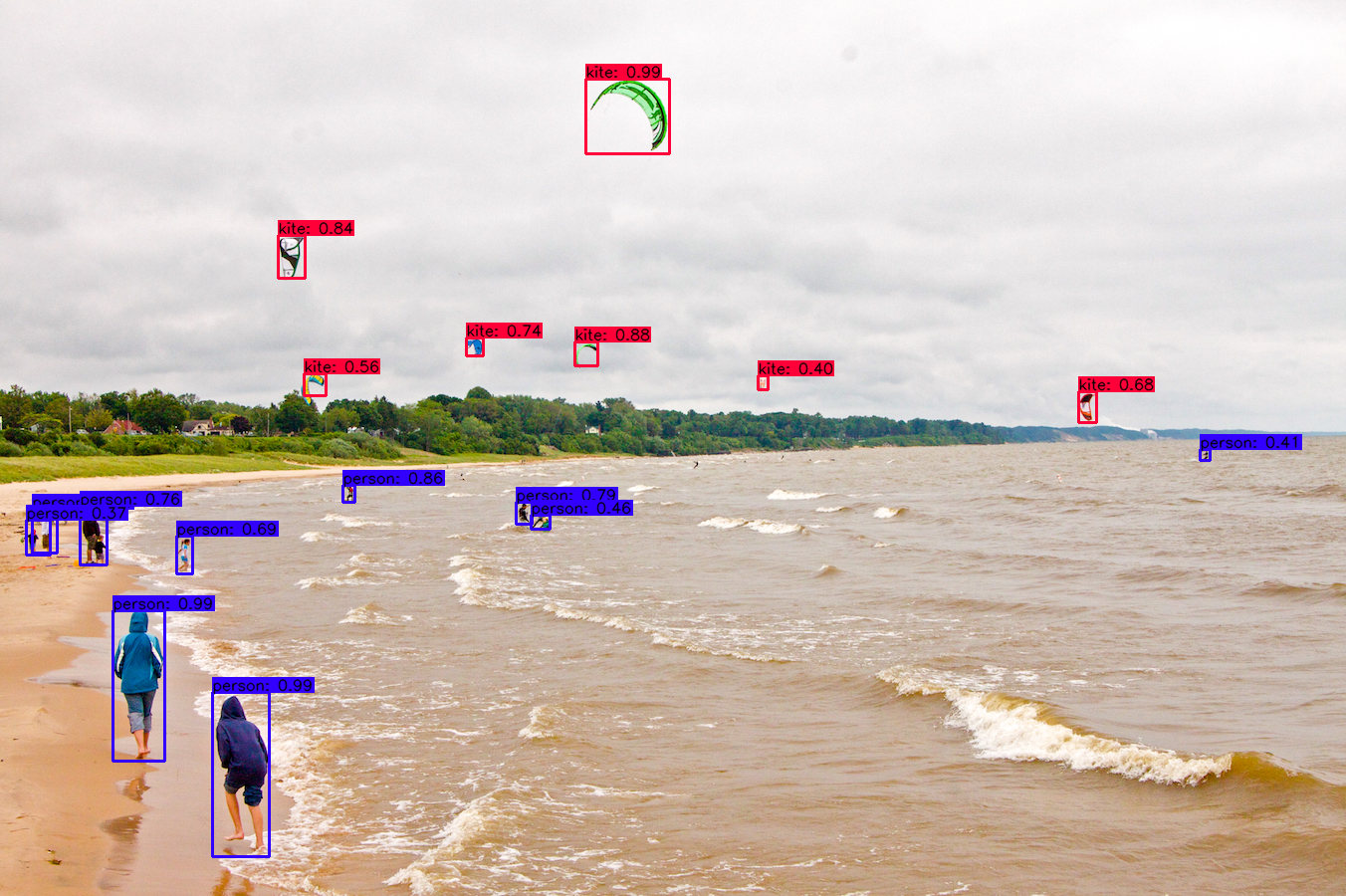

##### Yolov4 original weight

|

| 37 |

+

<p align="center"><img src="result.png" width="640"\></p>

|

| 38 |

+

|

| 39 |

+

##### Yolov4 tflite int8

|

| 40 |

+

<p align="center"><img src="result-int8.png" width="640"\></p>

|

| 41 |

+

|

| 42 |

+

### Convert to tflite

|

| 43 |

+

|

| 44 |

+

```bash

|

| 45 |

+

# Save tf model for tflite converting

|

| 46 |

+

python save_model.py --weights ./data/yolov4.weights --output ./checkpoints/yolov4-416 --input_size 416 --model yolov4 --framework tflite

|

| 47 |

+

|

| 48 |

+

# yolov4

|

| 49 |

+

python convert_tflite.py --weights ./checkpoints/yolov4-416 --output ./checkpoints/yolov4-416.tflite

|

| 50 |

+

|

| 51 |

+

# yolov4 quantize float16

|

| 52 |

+

python convert_tflite.py --weights ./checkpoints/yolov4-416 --output ./checkpoints/yolov4-416-fp16.tflite --quantize_mode float16

|

| 53 |

+

|

| 54 |

+

# yolov4 quantize int8

|

| 55 |

+

python convert_tflite.py --weights ./checkpoints/yolov4-416 --output ./checkpoints/yolov4-416-int8.tflite --quantize_mode int8 --dataset ./coco_dataset/coco/val207.txt

|

| 56 |

+

|

| 57 |

+

# Run demo tflite model

|

| 58 |

+

python detect.py --weights ./checkpoints/yolov4-416.tflite --size 416 --model yolov4 --image ./data/kite.jpg --framework tflite

|

| 59 |

+

```

|

| 60 |

+

Yolov4 and Yolov4-tiny int8 quantization have some issues. I will try to fix that. You can try Yolov3 and Yolov3-tiny int8 quantization

|

| 61 |

+

### Convert to TensorRT

|

| 62 |

+

```bash# yolov3

|

| 63 |

+

python save_model.py --weights ./data/yolov3.weights --output ./checkpoints/yolov3.tf --input_size 416 --model yolov3

|

| 64 |

+

python convert_trt.py --weights ./checkpoints/yolov3.tf --quantize_mode float16 --output ./checkpoints/yolov3-trt-fp16-416

|

| 65 |

+

|

| 66 |

+

# yolov3-tiny

|

| 67 |

+

python save_model.py --weights ./data/yolov3-tiny.weights --output ./checkpoints/yolov3-tiny.tf --input_size 416 --tiny

|

| 68 |

+

python convert_trt.py --weights ./checkpoints/yolov3-tiny.tf --quantize_mode float16 --output ./checkpoints/yolov3-tiny-trt-fp16-416

|

| 69 |

+

|

| 70 |

+

# yolov4

|

| 71 |

+

python save_model.py --weights ./data/yolov4.weights --output ./checkpoints/yolov4.tf --input_size 416 --model yolov4

|

| 72 |

+

python convert_trt.py --weights ./checkpoints/yolov4.tf --quantize_mode float16 --output ./checkpoints/yolov4-trt-fp16-416

|

| 73 |

+

```

|

+
+### Evaluate on COCO 2017 Dataset
+```bash
+# run scripts/get_coco_dataset_2017.sh to download the COCO 2017 dataset
+# preprocess coco dataset
+cd data
+mkdir dataset
+cd ..
+cd scripts
+python coco_convert.py --input ./coco/annotations/instances_val2017.json --output val2017.pkl
+python coco_annotation.py --coco_path ./coco
+cd ..
+
+# evaluate yolov4 model
+python evaluate.py --weights ./data/yolov4.weights
+cd mAP/extra
+python remove_space.py
+cd ..
+python main.py --output results_yolov4_tf
+```
+#### mAP50 on COCO 2017 Dataset
+
+| Detection   | 512x512 | 416x416 | 320x320 |
+|-------------|---------|---------|---------|
+| YoloV3      | 55.43   | 52.32   |         |
+| YoloV4      | 61.96   | 57.33   |         |
+
+### Benchmark
+```bash
+python benchmarks.py --size 416 --model yolov4 --weights ./data/yolov4.weights
+```
+#### TensorRT performance
+
+| YoloV4 416 images/s | FP32     | FP16     | INT8     |
+|---------------------|----------|----------|----------|
+| Batch size 1        | 55       | 116      |          |
+| Batch size 8        | 70       | 152      |          |
+
+#### Tesla P100
+
+| Detection   | 512x512 | 416x416 | 320x320 |
+|-------------|---------|---------|---------|
+| YoloV3 FPS  | 40.6    | 49.4    | 61.3    |
+| YoloV4 FPS  | 33.4    | 41.7    | 50.0    |
+
+#### Tesla K80
+
+| Detection   | 512x512 | 416x416 | 320x320 |
+|-------------|---------|---------|---------|
+| YoloV3 FPS  | 10.8    | 12.9    | 17.6    |
+| YoloV4 FPS  | 9.6     | 11.7    | 16.0    |
+
+#### Tesla T4
+
+| Detection   | 512x512 | 416x416 | 320x320 |
+|-------------|---------|---------|---------|
+| YoloV3 FPS  | 27.6    | 32.3    | 45.1    |
+| YoloV4 FPS  | 24.0    | 30.3    | 40.1    |
+
+#### Tesla P4
+
+| Detection   | 512x512 | 416x416 | 320x320 |
+|-------------|---------|---------|---------|
+| YoloV3 FPS  | 20.2    | 24.2    | 31.2    |
+| YoloV4 FPS  | 16.2    | 20.2    | 26.5    |
+
+#### Macbook Pro 15 (2.3GHz i7)
+
+| Detection   | 512x512 | 416x416 | 320x320 |
+|-------------|---------|---------|---------|
+| YoloV3 FPS  |         |         |         |
+| YoloV4 FPS  |         |         |         |
+

+### Training your own model
+```bash
+# Prepare your dataset
+# If you want to train from scratch:
+# In config.py set FISRT_STAGE_EPOCHS=0
+# Run script:
+python train.py
+
+# Transfer learning:
+python train.py --weights ./data/yolov4.weights
+```
+The training performance is not fully reproduced yet, so I recommend using Alex's [Darknet](https://github.com/AlexeyAB/darknet) to train on your own data, then converting the .weights to TensorFlow or TFLite.
+
+
+
+### TODO
+* [x] Convert YOLOv4 to TensorRT
+* [x] YOLOv4 tflite on android
+* [ ] YOLOv4 tflite on ios
+* [x] Training code
+* [x] Update scale xy
+* [ ] ciou
+* [ ] Mosaic data augmentation
+* [x] Mish activation
+* [x] yolov4 tflite version
+* [x] yolov4 int8 tflite version for mobile
+
+### References
+
+* YOLOv4: Optimal Speed and Accuracy of Object Detection [YOLOv4](https://arxiv.org/abs/2004.10934).
+* [darknet](https://github.com/AlexeyAB/darknet)
+
+My project is inspired by these previous fantastic YOLOv3 implementations:
+* [Yolov3 tensorflow](https://github.com/YunYang1994/tensorflow-yolov3)
+* [Yolov3 tf2](https://github.com/zzh8829/yolov3-tf2)

|
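The "TensorRT performance" table in the README reports YOLOv4 throughput at 416x416 in images per second. As a small worked example, the FP16-over-FP32 speedup can be computed directly from those four numbers. The dictionary layout and the function name `speedup` are illustrative choices and are not part of the repository.

```python
# Throughput (images/s) copied from the "TensorRT performance" table in the README.
# The INT8 column is empty in the source, so it is omitted here.
TRT_THROUGHPUT = {
    1: {"FP32": 55, "FP16": 116},
    8: {"FP32": 70, "FP16": 152},
}


def speedup(batch_size: int) -> float:
    """Return FP16 throughput divided by FP32 throughput for one batch size."""
    row = TRT_THROUGHPUT[batch_size]
    return row["FP16"] / row["FP32"]


if __name__ == "__main__":
    for batch_size in sorted(TRT_THROUGHPUT):
        print(f"batch {batch_size}: FP16 is {speedup(batch_size):.2f}x FP32")
```

On these numbers, FP16 roughly doubles throughput at both batch sizes.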

benchmarks.py

ADDED

@@ -0,0 +1,134 @@

+import numpy as np
+import tensorflow as tf
+import time
+import cv2
+from core.yolov4 import YOLOv4, YOLOv3_tiny, YOLOv3, decode
+from absl import app, flags, logging
+from absl.flags import FLAGS
+from tensorflow.python.saved_model import tag_constants
+from core import utils
+from core.config import cfg
+from tensorflow.compat.v1 import ConfigProto
+from tensorflow.compat.v1 import InteractiveSession
+
+flags.DEFINE_boolean('tiny', False, 'yolo or yolo-tiny')
+flags.DEFINE_string('framework', 'tf', '(tf, tflite, trt)')
+flags.DEFINE_string('model', 'yolov4', 'yolov3 or yolov4')
+flags.DEFINE_string('weights', './data/yolov4.weights', 'path to weights file')
+flags.DEFINE_string('image', './data/kite.jpg', 'path to input image')
+flags.DEFINE_integer('size', 416, 'resize images to')
+
+
+def main(_argv):
+    if FLAGS.tiny:
+        STRIDES = np.array(cfg.YOLO.STRIDES_TINY)
+        ANCHORS = utils.get_anchors(cfg.YOLO.ANCHORS_TINY, FLAGS.tiny)
+    else:
+        STRIDES = np.array(cfg.YOLO.STRIDES)
+        if FLAGS.model == 'yolov4':
+            ANCHORS = utils.get_anchors(cfg.YOLO.ANCHORS, FLAGS.tiny)
+        else:
+            ANCHORS = utils.get_anchors(cfg.YOLO.ANCHORS_V3, FLAGS.tiny)
+    NUM_CLASS = len(utils.read_class_names(cfg.YOLO.CLASSES))
+    XYSCALE = cfg.YOLO.XYSCALE
+
+    config = ConfigProto()
+    config.gpu_options.allow_growth = True
+    session = InteractiveSession(config=config)
+    input_size = FLAGS.size
+    physical_devices = tf.config.experimental.list_physical_devices('GPU')
+    if len(physical_devices) > 0:
+        tf.config.experimental.set_memory_growth(physical_devices[0], True)
+    if FLAGS.framework == 'tf':
+        input_layer = tf.keras.layers.Input([input_size, input_size, 3])
+        if FLAGS.tiny:
+            feature_maps = YOLOv3_tiny(input_layer, NUM_CLASS)
+            bbox_tensors = []
+            for i, fm in enumerate(feature_maps):
+                bbox_tensor = decode(fm, NUM_CLASS, i)
+                bbox_tensors.append(bbox_tensor)
+            model = tf.keras.Model(input_layer, bbox_tensors)
+            utils.load_weights_tiny(model, FLAGS.weights)
+        else:
+            if FLAGS.model == 'yolov3':
+                feature_maps = YOLOv3(input_layer, NUM_CLASS)
+                bbox_tensors = []
+                for i, fm in enumerate(feature_maps):
+                    bbox_tensor = decode(fm, NUM_CLASS, i)
+                    bbox_tensors.append(bbox_tensor)
+                model = tf.keras.Model(input_layer, bbox_tensors)
+                utils.load_weights_v3(model, FLAGS.weights)
+            elif FLAGS.model == 'yolov4':
+                feature_maps = YOLOv4(input_layer, NUM_CLASS)
+                bbox_tensors = []
+                for i, fm in enumerate(feature_maps):
+                    bbox_tensor = decode(fm, NUM_CLASS, i)
+                    bbox_tensors.append(bbox_tensor)
+                model = tf.keras.Model(input_layer, bbox_tensors)
+                utils.load_weights(model, FLAGS.weights)
+    elif FLAGS.framework == 'trt':
+        saved_model_loaded = tf.saved_model.load(FLAGS.weights, tags=[tag_constants.SERVING])
+        signature_keys = list(saved_model_loaded.signatures.keys())
+        print(signature_keys)
+        infer = saved_model_loaded.signatures['serving_default']
+
+    logging.info('weights loaded')
+
+    @tf.function
+    def run_model(x):
+        return model(x)
+
+    # Benchmark inference on the preprocessed input image.
+    sum = 0
+    original_image = cv2.imread(FLAGS.image)
+    original_image = cv2.cvtColor(original_image, cv2.COLOR_BGR2RGB)
+    original_image_size = original_image.shape[:2]
+    image_data = utils.image_preprocess(np.copy(original_image), [FLAGS.size, FLAGS.size])
+    image_data = image_data[np.newaxis, ...].astype(np.float32)
+    img_raw = tf.image.decode_image(
+        open(FLAGS.image, 'rb').read(), channels=3)
+    img_raw = tf.expand_dims(img_raw, 0)
+    img_raw = tf.image.resize(img_raw, (FLAGS.size, FLAGS.size))
+    batched_input = tf.constant(image_data)
+    for i in range(1000):
+        prev_time = time.time()
+        # pred_bbox = model.predict(image_data)
+        if FLAGS.framework == 'tf':
+            pred_bbox = []
+            result = run_model(image_data)
+            for value in result:
+                value = value.numpy()
+                pred_bbox.append(value)
+            if FLAGS.model == 'yolov4':
+                pred_bbox = utils.postprocess_bbbox(pred_bbox, ANCHORS, STRIDES, XYSCALE)
+            else:
+                pred_bbox = utils.postprocess_bbbox(pred_bbox, ANCHORS, STRIDES)
+            bboxes = utils.postprocess_boxes(pred_bbox, original_image_size, input_size, 0.25)
+            bboxes = utils.nms(bboxes, 0.213, method='nms')
+        elif FLAGS.framework == 'trt':
+            pred_bbox = []
+            result = infer(batched_input)
+            for key, value in result.items():
+                value = value.numpy()
+                pred_bbox.append(value)
+            if FLAGS.model == 'yolov4':
+                pred_bbox = utils.postprocess_bbbox(pred_bbox, ANCHORS, STRIDES, XYSCALE)
+            else:
+                pred_bbox = utils.postprocess_bbbox(pred_bbox, ANCHORS, STRIDES)
+            bboxes = utils.postprocess_boxes(pred_bbox, original_image_size, input_size, 0.25)
+            bboxes = utils.nms(bboxes, 0.213, method='nms')
+        # pred_bbox = pred_bbox.numpy()
+        curr_time = time.time()
+        exec_time = curr_time - prev_time
+        if i == 0: continue
+        sum += (1 / exec_time)
+        info = str(i) + " time:" + str(round(exec_time, 3)) + " average FPS:" + str(round(sum / i, 2)) + ", FPS: " + str(
+            round((1 / exec_time), 1))
+        print(info)
+
+
+if __name__ == '__main__':
+    try:
+        app.run(main)
+    except SystemExit:
+        pass

|
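The timing loop in `benchmarks.py` skips iteration 0 (which plausibly absorbs one-time setup such as `tf.function` tracing, although the script does not say so) and then prints the running mean of per-iteration FPS. A minimal, framework-free sketch of that bookkeeping follows. `average_fps` and the `step` callable are hypothetical names, and `time.perf_counter` is swapped in for `time.time` as an assumption about what suits short-interval timing better.

```python
import time
from typing import Callable


def average_fps(step: Callable[[], None], iterations: int = 100, warmup: int = 1) -> float:
    """Run `step` repeatedly and return the mean of per-iteration FPS.

    The first `warmup` iterations are executed but excluded from the average,
    mirroring the `if i == 0: continue` line in benchmarks.py.
    """
    if iterations <= warmup:
        raise ValueError("iterations must exceed warmup")
    total_fps = 0.0
    for i in range(iterations):
        start = time.perf_counter()
        step()
        elapsed = time.perf_counter() - start
        if i < warmup:
            continue
        # Guard against a zero reading on very coarse clocks.
        total_fps += 1.0 / max(elapsed, 1e-9)
    return total_fps / (iterations - warmup)


if __name__ == "__main__":
    # Stand-in workload: sleep for roughly 1 ms per "inference".
    print(round(average_fps(lambda: time.sleep(0.001), iterations=50), 1))
```

Averaging per-iteration FPS, as the script does, weights fast iterations more heavily than dividing the total frame count by the total elapsed time would. The sketch keeps the script's definition so the numbers stay comparable.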

convert_tflite.py

ADDED

@@ -0,0 +1,80 @@

+import tensorflow as tf
+from absl import app, flags, logging
+from absl.flags import FLAGS
+import numpy as np
+import cv2
+from core.yolov4 import YOLOv4, YOLOv3, YOLOv3_tiny, decode
+import core.utils as utils
+import os
+from core.config import cfg
+
+flags.DEFINE_string('weights', './checkpoints/yolov4-416', 'path to weights file')
+flags.DEFINE_string('output', './checkpoints/yolov4-416-fp32.tflite', 'path to output')
+flags.DEFINE_integer('input_size', 416, 'input image size')
+flags.DEFINE_string('quantize_mode', 'float32', 'quantize mode (int8, float16, float32)')
+flags.DEFINE_string('dataset', "/Volumes/Elements/data/coco_dataset/coco/5k.txt", 'path to dataset')
+
+def representative_data_gen():
+    fimage = open(FLAGS.dataset).read().split()
+    for input_value in range(10):
+        if os.path.exists(fimage[input_value]):
+            original_image=cv2.imread(fimage[input_value])
+            original_image = cv2.cvtColor(original_image, cv2.COLOR_BGR2RGB)
+            image_data = utils.image_preprocess(np.copy(original_image), [FLAGS.input_size, FLAGS.input_size])
+            img_in = image_data[np.newaxis, ...].astype(np.float32)
+            print("calibration image {}".format(fimage[input_value]))
+            yield [img_in]
+        else:
+            continue
+
+def save_tflite():
+    converter = tf.lite.TFLiteConverter.from_saved_model(FLAGS.weights)
+
+    if FLAGS.quantize_mode == 'float16':
+        converter.optimizations = [tf.lite.Optimize.DEFAULT]
+        converter.target_spec.supported_types = [tf.compat.v1.lite.constants.FLOAT16]
+        converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS, tf.lite.OpsSet.SELECT_TF_OPS]
+        converter.allow_custom_ops = True
+    elif FLAGS.quantize_mode == 'int8':
+        converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
+        converter.optimizations = [tf.lite.Optimize.DEFAULT]
+        converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS, tf.lite.OpsSet.SELECT_TF_OPS]
+        converter.allow_custom_ops = True
+        converter.representative_dataset = representative_data_gen
+
+    tflite_model = converter.convert()
+    open(FLAGS.output, 'wb').write(tflite_model)
+
+    logging.info("model saved to: {}".format(FLAGS.output))
+
+def demo():
+    interpreter = tf.lite.Interpreter(model_path=FLAGS.output)
+    interpreter.allocate_tensors()
+    logging.info('tflite model loaded')
+
+    input_details = interpreter.get_input_details()
+    print(input_details)
+    output_details = interpreter.get_output_details()
+    print(output_details)
+
+    input_shape = input_details[0]['shape']
+
+    input_data = np.array(np.random.random_sample(input_shape), dtype=np.float32)
+
+    interpreter.set_tensor(input_details[0]['index'], input_data)
+    interpreter.invoke()
+    output_data = [interpreter.get_tensor(output_details[i]['index']) for i in range(len(output_details))]
+
+    print(output_data)
+
+def main(_argv):
+    save_tflite()
+    demo()
+
+if __name__ == '__main__':
+    try:
+        app.run(main)
+    except SystemExit:
+        pass
+
+

|
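`representative_data_gen` in `convert_tflite.py` indexes the first ten entries of the dataset list directly, so a list with fewer than ten entries would raise `IndexError`. One possible way to make the selection more forgiving is to filter for files that exist and then take at most N of them. The sketch below covers only the path selection, with no image decoding. `calibration_paths` is a hypothetical helper, not a function from the repository, and it differs from the original in that it returns the first N existing files rather than inspecting only the first ten entries.

```python
import os
from itertools import islice
from typing import Iterator


def calibration_paths(list_file: str, limit: int = 10) -> Iterator[str]:
    """Yield up to `limit` paths from `list_file` that exist on disk.

    Like the original, the file is split on whitespace, so paths that contain
    spaces are not supported.
    """
    with open(list_file) as handle:
        candidates = handle.read().split()
    # Lazily keep only the entries that point at real files.
    existing = (path for path in candidates if os.path.exists(path))
    yield from islice(existing, limit)
```

A generator like this could feed the image loading and preprocessing steps that the original function performs for each calibration image.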

convert_trt.py

ADDED

@@ -0,0 +1,104 @@

+from absl import app, flags, logging
+from absl.flags import FLAGS
+import tensorflow as tf
+physical_devices = tf.config.experimental.list_physical_devices('GPU')
+if len(physical_devices) > 0:
+    tf.config.experimental.set_memory_growth(physical_devices[0], True)
+import numpy as np
+import cv2
+from tensorflow.python.compiler.tensorrt import trt_convert as trt
+import core.utils as utils
+from tensorflow.python.saved_model import signature_constants
+import os
+from tensorflow.compat.v1 import ConfigProto
+from tensorflow.compat.v1 import InteractiveSession
+
+flags.DEFINE_string('weights', './checkpoints/yolov4-416', 'path to weights file')
+flags.DEFINE_string('output', './checkpoints/yolov4-trt-fp16-416', 'path to output')
+flags.DEFINE_integer('input_size', 416, 'input size')
+flags.DEFINE_string('quantize_mode', 'float16', 'quantize mode (int8, float16)')
+flags.DEFINE_string('dataset', "/media/user/Source/Data/coco_dataset/coco/5k.txt", 'path to dataset')
+flags.DEFINE_integer('loop', 8, 'number of calibration images to load')
+
+def representative_data_gen():
+    fimage = open(FLAGS.dataset).read().split()
+    batched_input = np.zeros((FLAGS.loop, FLAGS.input_size, FLAGS.input_size, 3), dtype=np.float32)
+    for input_value in range(FLAGS.loop):
+        if os.path.exists(fimage[input_value]):
+            original_image = cv2.imread(fimage[input_value])
+            original_image = cv2.cvtColor(original_image, cv2.COLOR_BGR2RGB)
+            image_data = utils.image_preporcess(np.copy(original_image), [FLAGS.input_size, FLAGS.input_size])
+            img_in = image_data[np.newaxis, ...].astype(np.float32)
+            batched_input[input_value, :] = img_in
+            # batched_input = tf.constant(img_in)
+            print(input_value)
+            # yield (batched_input, )
+            # yield tf.random.normal((1, 416, 416, 3)),
+        else:
+            continue
+    batched_input = tf.constant(batched_input)
+    yield (batched_input,)
+
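Note on `representative_data_gen`: as written, a path that does not exist is skipped with `continue`, so its slot in `batched_input` stays all zeros, and a dataset list shorter than `FLAGS.loop` raises an `IndexError`. The following is a minimal sketch of one way to select the calibration paths up front, assuming it is acceptable to fail loudly when too few images are available. The helper name `pick_existing_paths` is hypothetical and is not part of this commit.

```python
import os


def pick_existing_paths(candidates, count):
    """Return the first `count` entries of `candidates` that exist on disk.

    Hypothetical helper (not in the repository): it replaces the
    "index the first N lines and skip missing ones" pattern so that every
    slot of the calibration batch is backed by a real file.
    """
    picked = []
    for path in candidates:
        if os.path.exists(path):
            picked.append(path)
            if len(picked) == count:
                break
    if len(picked) < count:
        # Fail early instead of calibrating on zero-filled images.
        raise ValueError(
            "only {} of {} calibration images found".format(len(picked), count)
        )
    return picked
```

Under these assumptions, the generator would iterate over `pick_existing_paths(fimage, FLAGS.loop)` and fill `batched_input` by position in that list rather than by position in the dataset file.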

+def save_trt():
+
+    if FLAGS.quantize_mode == 'int8':
+        conversion_params = trt.DEFAULT_TRT_CONVERSION_PARAMS._replace(
+            precision_mode=trt.TrtPrecisionMode.INT8,
+            max_workspace_size_bytes=4000000000,
+            use_calibration=True,
+            max_batch_size=8)
+        converter = trt.TrtGraphConverterV2(
+            input_saved_model_dir=FLAGS.weights,
+            conversion_params=conversion_params)
+        converter.convert(calibration_input_fn=representative_data_gen)
+    elif FLAGS.quantize_mode == 'float16':
+        conversion_params = trt.DEFAULT_TRT_CONVERSION_PARAMS._replace(
+            precision_mode=trt.TrtPrecisionMode.FP16,
+            max_workspace_size_bytes=4000000000,
+            max_batch_size=8)
+        converter = trt.TrtGraphConverterV2(
+            input_saved_model_dir=FLAGS.weights, conversion_params=conversion_params)
+        converter.convert()
+    else:
+        conversion_params = trt.DEFAULT_TRT_CONVERSION_PARAMS._replace(
+            precision_mode=trt.TrtPrecisionMode.FP32,
+            max_workspace_size_bytes=4000000000,
+            max_batch_size=8)
+        converter = trt.TrtGraphConverterV2(
+            input_saved_model_dir=FLAGS.weights, conversion_params=conversion_params)
+        converter.convert()
+
+    # converter.build(input_fn=representative_data_gen)
+    converter.save(output_saved_model_dir=FLAGS.output)
+    print('Done Converting to TF-TRT')
+
+    saved_model_loaded = tf.saved_model.load(FLAGS.output)
+    graph_func = saved_model_loaded.signatures[
+        signature_constants.DEFAULT_SERVING_SIGNATURE_DEF_KEY]
+    trt_graph = graph_func.graph.as_graph_def()
+    for n in trt_graph.node:
+        print(n.op)
+        if n.op == "TRTEngineOp":
+            print("Node: %s, %s" % (n.op, n.name.replace("/", "_")))
+        else:
+            print("Exclude Node: %s, %s" % (n.op, n.name.replace("/", "_")))
+    logging.info("model saved to: {}".format(FLAGS.output))
+
+    trt_engine_nodes = len([1 for n in trt_graph.node if str(n.op) == 'TRTEngineOp'])
+    print("numb. of trt_engine_nodes in TensorRT graph:", trt_engine_nodes)
+    all_nodes = len([1 for n in trt_graph.node])
+    print("numb. of all_nodes in TensorRT graph:", all_nodes)
+
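Note on `save_trt`: the three branches differ only in the precision mode and in whether calibration is enabled. The sketch below shows how that choice could be expressed as a single lookup. It is an illustration under stated assumptions, not the commit's code: `conversion_overrides` is a hypothetical name, and the precision is returned as a plain string label standing in for the corresponding `trt.TrtPrecisionMode` member so the snippet runs without TensorFlow.

```python
def conversion_overrides(quantize_mode, workspace_bytes=4000000000, max_batch_size=8):
    """Build the keyword overrides that vary with --quantize_mode.

    Hypothetical helper. The returned "precision_mode" is a label
    ("INT8", "FP16" or "FP32"); real code would map it to the matching
    trt.TrtPrecisionMode member before calling `_replace(**overrides)`.
    """
    # Any unrecognised mode falls back to FP32, mirroring the final `else`.
    precision = {"int8": "INT8", "float16": "FP16"}.get(quantize_mode, "FP32")
    overrides = {
        "precision_mode": precision,
        "max_workspace_size_bytes": workspace_bytes,
        "max_batch_size": max_batch_size,
    }
    if precision == "INT8":
        # Only the INT8 branch enables calibration in the code above.
        overrides["use_calibration"] = True
    return overrides
```

With a helper like this, only the `converter.convert(...)` call would still need to branch, because the INT8 path passes `calibration_input_fn` and the other two do not.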

+def main(_argv):
+    config = ConfigProto()
+    config.gpu_options.allow_growth = True
+    session = InteractiveSession(config=config)
+    save_trt()
+
+if __name__ == '__main__':
+    try:
+        app.run(main)
+    except SystemExit:
+        pass
+
+

core/__pycache__/backbone.cpython-37.pyc

ADDED

Binary file (4.06 kB).

core/__pycache__/common.cpython-37.pyc

ADDED

Binary file (2.47 kB).

core/__pycache__/config.cpython-37.pyc

ADDED

Binary file (1.31 kB).

core/__pycache__/utils.cpython-37.pyc

ADDED

Binary file (9.6 kB).

core/__pycache__/yolov4.cpython-37.pyc

ADDED

Binary file (9.28 kB).

core/backbone.py

ADDED

|

@@ -0,0 +1,167 @@


+#! /usr/bin/env python
+# coding=utf-8
+
+import tensorflow as tf
+import core.common as common
+
+def darknet53(input_data):
+
+    input_data = common.convolutional(input_data, (3, 3, 3, 32))
+    input_data = common.convolutional(input_data, (3, 3, 32, 64), downsample=True)
+
+    for i in range(1):
+        input_data = common.residual_block(input_data, 64, 32, 64)
+
+    input_data = common.convolutional(input_data, (3, 3, 64, 128), downsample=True)
+
+    for i in range(2):
+        input_data = common.residual_block(input_data, 128, 64, 128)
+
+    input_data = common.convolutional(input_data, (3, 3, 128, 256), downsample=True)
+
+    for i in range(8):
+        input_data = common.residual_block(input_data, 256, 128, 256)
+
+    route_1 = input_data
+    input_data = common.convolutional(input_data, (3, 3, 256, 512), downsample=True)
+
+    for i in range(8):
+        input_data = common.residual_block(input_data, 512, 256, 512)
+
+    route_2 = input_data
+    input_data = common.convolutional(input_data, (3, 3, 512, 1024), downsample=True)
+
+    for i in range(4):
+        input_data = common.residual_block(input_data, 1024, 512, 1024)
+
+    return route_1, route_2, input_data
+

+def cspdarknet53(input_data):
+
+    input_data = common.convolutional(input_data, (3, 3, 3, 32), activate_type="mish")
+    input_data = common.convolutional(input_data, (3, 3, 32, 64), downsample=True, activate_type="mish")
+
+    route = input_data
+    route = common.convolutional(route, (1, 1, 64, 64), activate_type="mish")
+    input_data = common.convolutional(input_data, (1, 1, 64, 64), activate_type="mish")
+    for i in range(1):
+        input_data = common.residual_block(input_data, 64, 32, 64, activate_type="mish")
+    input_data = common.convolutional(input_data, (1, 1, 64, 64), activate_type="mish")
+
+    input_data = tf.concat([input_data, route], axis=-1)
+    input_data = common.convolutional(input_data, (1, 1, 128, 64), activate_type="mish")
+    input_data = common.convolutional(input_data, (3, 3, 64, 128), downsample=True, activate_type="mish")
+    route = input_data
+    route = common.convolutional(route, (1, 1, 128, 64), activate_type="mish")
+    input_data = common.convolutional(input_data, (1, 1, 128, 64), activate_type="mish")
+    for i in range(2):
+        input_data = common.residual_block(input_data, 64, 64, 64, activate_type="mish")
+    input_data = common.convolutional(input_data, (1, 1, 64, 64), activate_type="mish")
+    input_data = tf.concat([input_data, route], axis=-1)
+
+    input_data = common.convolutional(input_data, (1, 1, 128, 128), activate_type="mish")
+    input_data = common.convolutional(input_data, (3, 3, 128, 256), downsample=True, activate_type="mish")
+    route = input_data
+    route = common.convolutional(route, (1, 1, 256, 128), activate_type="mish")
+    input_data = common.convolutional(input_data, (1, 1, 256, 128), activate_type="mish")
+    for i in range(8):
+        input_data = common.residual_block(input_data, 128, 128, 128, activate_type="mish")
+    input_data = common.convolutional(input_data, (1, 1, 128, 128), activate_type="mish")
+    input_data = tf.concat([input_data, route], axis=-1)
+
+    input_data = common.convolutional(input_data, (1, 1, 256, 256), activate_type="mish")
+    route_1 = input_data
+    input_data = common.convolutional(input_data, (3, 3, 256, 512), downsample=True, activate_type="mish")
+    route = input_data
+    route = common.convolutional(route, (1, 1, 512, 256), activate_type="mish")
+    input_data = common.convolutional(input_data, (1, 1, 512, 256), activate_type="mish")
+    for i in range(8):
+        input_data = common.residual_block(input_data, 256, 256, 256, activate_type="mish")
+    input_data = common.convolutional(input_data, (1, 1, 256, 256), activate_type="mish")
+    input_data = tf.concat([input_data, route], axis=-1)
+
+    input_data = common.convolutional(input_data, (1, 1, 512, 512), activate_type="mish")
+    route_2 = input_data
+    input_data = common.convolutional(input_data, (3, 3, 512, 1024), downsample=True, activate_type="mish")
+    route = input_data
+    route = common.convolutional(route, (1, 1, 1024, 512), activate_type="mish")
+    input_data = common.convolutional(input_data, (1, 1, 1024, 512), activate_type="mish")
+    for i in range(4):
+        input_data = common.residual_block(input_data, 512, 512, 512, activate_type="mish")
+    input_data = common.convolutional(input_data, (1, 1, 512, 512), activate_type="mish")
+    input_data = tf.concat([input_data, route], axis=-1)
+
+    input_data = common.convolutional(input_data, (1, 1, 1024, 1024), activate_type="mish")
+    input_data = common.convolutional(input_data, (1, 1, 1024, 512))
+    input_data = common.convolutional(input_data, (3, 3, 512, 1024))
+    input_data = common.convolutional(input_data, (1, 1, 1024, 512))
+
+    input_data = tf.concat([tf.nn.max_pool(input_data, ksize=13, padding='SAME', strides=1), tf.nn.max_pool(input_data, ksize=9, padding='SAME', strides=1),
+                            tf.nn.max_pool(input_data, ksize=5, padding='SAME', strides=1), input_data], axis=-1)
+    input_data = common.convolutional(input_data, (1, 1, 2048, 512))
+    input_data = common.convolutional(input_data, (3, 3, 512, 1024))
+    input_data = common.convolutional(input_data, (1, 1, 1024, 512))
+
+    return route_1, route_2, input_data
+
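Note on `cspdarknet53`: the channel counts in the filter shapes can be checked with a little arithmetic. The sketch below traces the feature-map size through the five `downsample=True` convolutions and the channel count after the pooling `tf.concat`. It assumes each downsampling convolution halves height and width; `common.convolutional` is not shown in this part of the diff, so that is an assumption. Both helper names are hypothetical.

```python
def backbone_shapes(input_size=416):
    """Trace (spatial size, channels) after each downsampling stage.

    Assumption: every `downsample=True` convolution halves H and W.
    The channel counts are the output channels of those convolutions
    in the function above.
    """
    size = input_size
    shapes = []
    for channels in (64, 128, 256, 512, 1024):
        size //= 2
        shapes.append((size, channels))
    return shapes


def spp_channels(in_channels=512, pool_sizes=(13, 9, 5)):
    """Channels after concatenating the pooled tensors with the input.

    Stride-1 pooling with SAME padding keeps the spatial size, so the
    concatenation along the last axis only multiplies the channel count.
    """
    return in_channels * (len(pool_sizes) + 1)
```

For a 416-pixel input under these assumptions, `route_1` is taken at the 52-pixel stage (256 channels) and `route_2` at the 26-pixel stage (512 channels), and `spp_channels()` gives 2048, which matches the `(1, 1, 2048, 512)` convolution that follows the concatenation.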

+def cspdarknet53_tiny(input_data):
+    input_data = common.convolutional(input_data, (3, 3, 3, 32), downsample=True)
+    input_data = common.convolutional(input_data, (3, 3, 32, 64), downsample=True)
+    input_data = common.convolutional(input_data, (3, 3, 64, 64))
+
+    route = input_data
+    input_data = common.route_group(input_data, 2, 1)
+    input_data = common.convolutional(input_data, (3, 3, 32, 32))
+    route_1 = input_data
+    input_data = common.convolutional(input_data, (3, 3, 32, 32))
+    input_data = tf.concat([input_data, route_1], axis=-1)
+    input_data = common.convolutional(input_data, (1, 1, 32, 64))
+    input_data = tf.concat([route, input_data], axis=-1)
+    input_data = tf.keras.layers.MaxPool2D(2, 2, 'same')(input_data)
+
+    input_data = common.convolutional(input_data, (3, 3, 64, 128))
+    route = input_data
+    input_data = common.route_group(input_data, 2, 1)
+    input_data = common.convolutional(input_data, (3, 3, 64, 64))
+    route_1 = input_data
+    input_data = common.convolutional(input_data, (3, 3, 64, 64))
+    input_data = tf.concat([input_data, route_1], axis=-1)
+    input_data = common.convolutional(input_data, (1, 1, 64, 128))
+    input_data = tf.concat([route, input_data], axis=-1)
+    input_data = tf.keras.layers.MaxPool2D(2, 2, 'same')(input_data)
+
+    input_data = common.convolutional(input_data, (3, 3, 128, 256))
+    route = input_data
+    input_data = common.route_group(input_data, 2, 1)
+    input_data = common.convolutional(input_data, (3, 3, 128, 128))
+    route_1 = input_data
+    input_data = common.convolutional(input_data, (3, 3, 128, 128))
+    input_data = tf.concat([input_data, route_1], axis=-1)
+    input_data = common.convolutional(input_data, (1, 1, 128, 256))
+    route_1 = input_data
+    input_data = tf.concat([route, input_data], axis=-1)
+    input_data = tf.keras.layers.MaxPool2D(2, 2, 'same')(input_data)
+
+    input_data = common.convolutional(input_data, (3, 3, 512, 512))
+
+    return route_1, input_data
+
+def darknet53_tiny(input_data):
+    input_data = common.convolutional(input_data, (3, 3, 3, 16))
+    input_data = tf.keras.layers.MaxPool2D(2, 2, 'same')(input_data)
+    input_data = common.convolutional(input_data, (3, 3, 16, 32))
+    input_data = tf.keras.layers.MaxPool2D(2, 2, 'same')(input_data)
+    input_data = common.convolutional(input_data, (3, 3, 32, 64))
+    input_data = tf.keras.layers.MaxPool2D(2, 2, 'same')(input_data)

| 156 |

+