metadata

license: apache-2.0

library_name: pruna-engine

thumbnail: >-

https://assets-global.website-files.com/646b351987a8d8ce158d1940/64ec9e96b4334c0e1ac41504_Logo%20with%20white%20text.svg

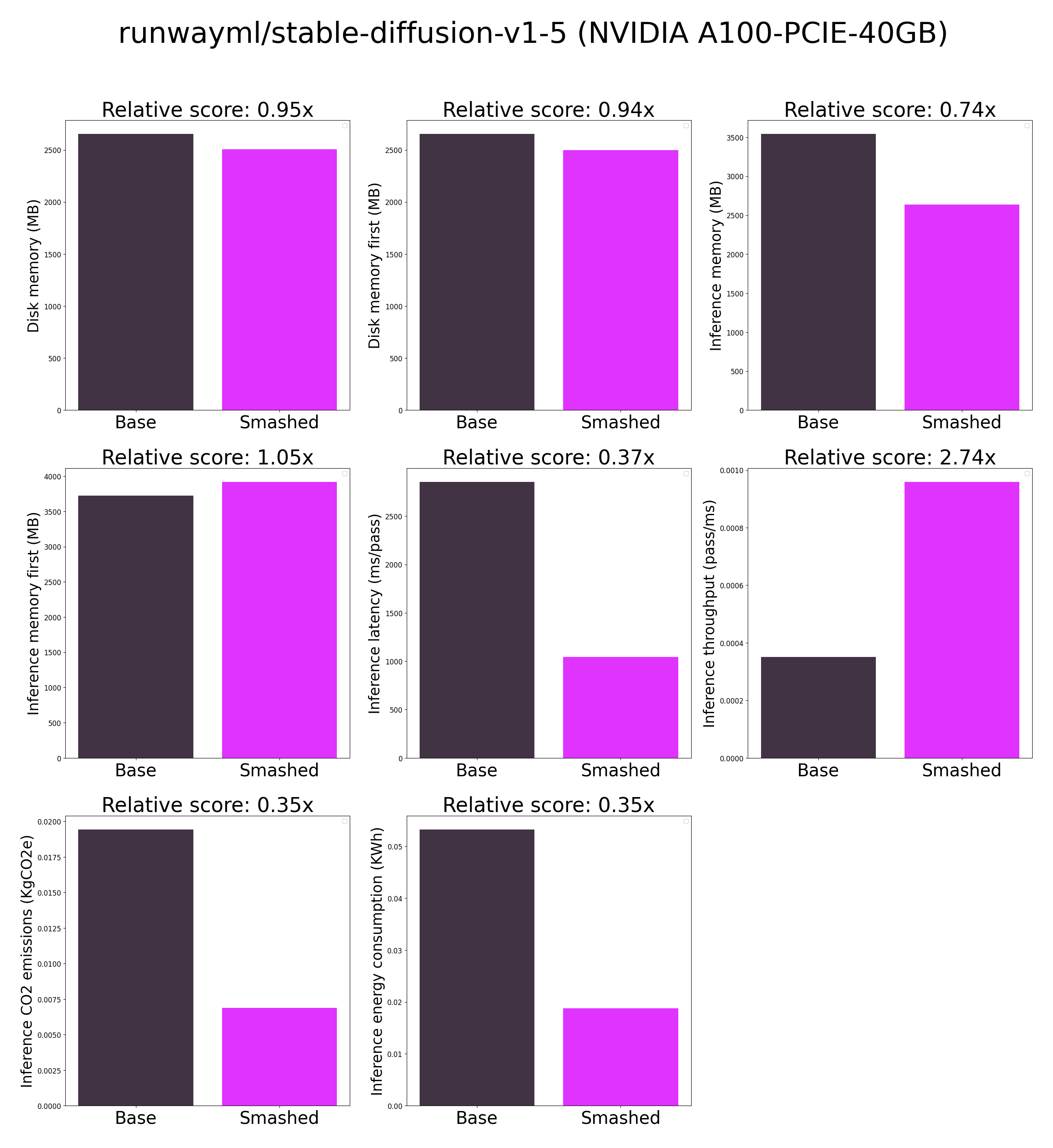

metrics:

- memory_disk

- memory_inference

- inference_latency

- inference_throughput

- inference_CO2_emissions

- inference_energy_consumption

Simply make AI models cheaper, smaller, faster, and greener!

Results

Setup

You can run the smashed model in four steps: (1) install and import the pruna-engine package (version 0.2.5) following the PyPI instructions; (2) download the model files to model_path, either through Hugging Face using this repository name or manually; (3) load the model; and (4) run the model. The following code does this:

from transformers.utils.hub import cached_file

from pruna_engine.PrunaModel import PrunaModel # Step (1): install and import `pruna-engine` package.

...

model_path = cached_file("PrunaAI/REPO", "model") # Step (2): download the model files at `model_path`.

smashed_model = PrunaModel.load_model(model_path) # Step (3): load the model.

y = smashed_model(x) # Step (4): run the model.
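Because step (1) names a specific package version, it can help to confirm what is installed before loading the model. The following is a minimal sketch using only the Python standard library; the helper names `installed_version` and `matches_required` are hypothetical and not part of pruna-engine.

```python
from importlib.metadata import PackageNotFoundError, version

REQUIRED_VERSION = "0.2.5"  # version named in the setup instructions


def installed_version(package):
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def matches_required(package="pruna-engine", required=REQUIRED_VERSION):
    """Return True only when `package` is installed at exactly `required`."""
    return installed_version(package) == required


if __name__ == "__main__":
    found = installed_version("pruna-engine")
    print(f"pruna-engine installed: {found!r}, required: {REQUIRED_VERSION!r}")
```

If the check reports a different version or `None`, reinstall the package at the required version before running the steps above.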

Configurations

The configuration information is in config.json.
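To inspect the configuration, you can read the file with the standard library. This is a minimal sketch that assumes config.json sits in the directory holding the downloaded model files; `load_config` and `print_config` are hypothetical helpers, and no particular keys are assumed.

```python
import json
from pathlib import Path


def load_config(model_dir):
    """Read config.json from `model_dir` and return its contents as a dict."""
    config_file = Path(model_dir) / "config.json"
    with config_file.open(encoding="utf-8") as f:
        return json.load(f)


def print_config(config):
    """Print each top-level configuration entry on its own line, sorted by key."""
    for key in sorted(config):
        print(f"{key}: {config[key]}")
```

Call `print_config(load_config(model_dir))`, where `model_dir` is the folder containing the downloaded files (an assumption about your local layout).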

License

We follow the same license as the original model. Please check the license of the original model before using this model.