Upload folder using huggingface_hub (#2)

- 720fbd8f03299c943466aa69ada29217613a2aae8fd0468b95f35cb781797798 (e814d3c7d2e673e333f00baa0e6140dd76f89ead)

- config.json +1 -1

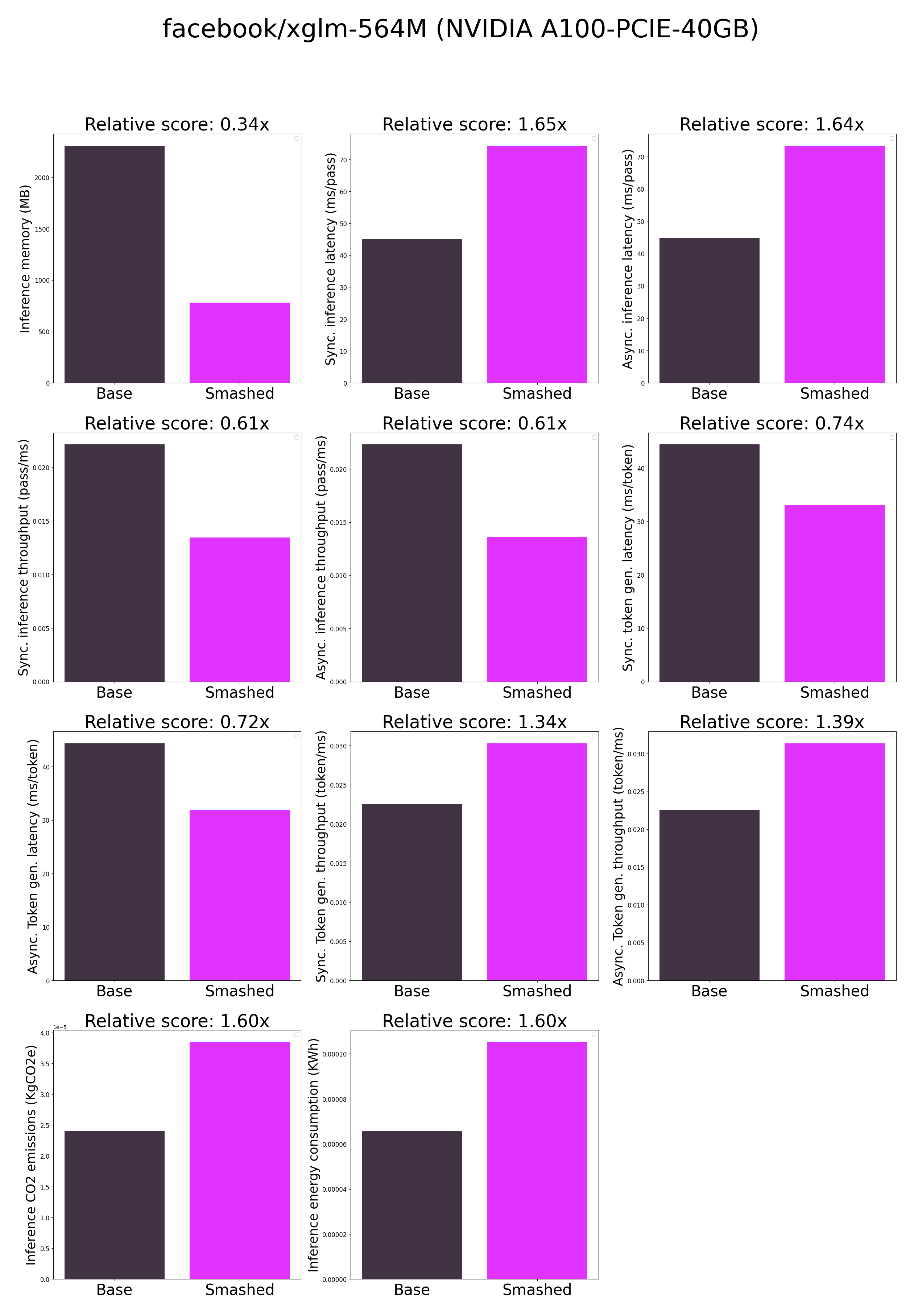

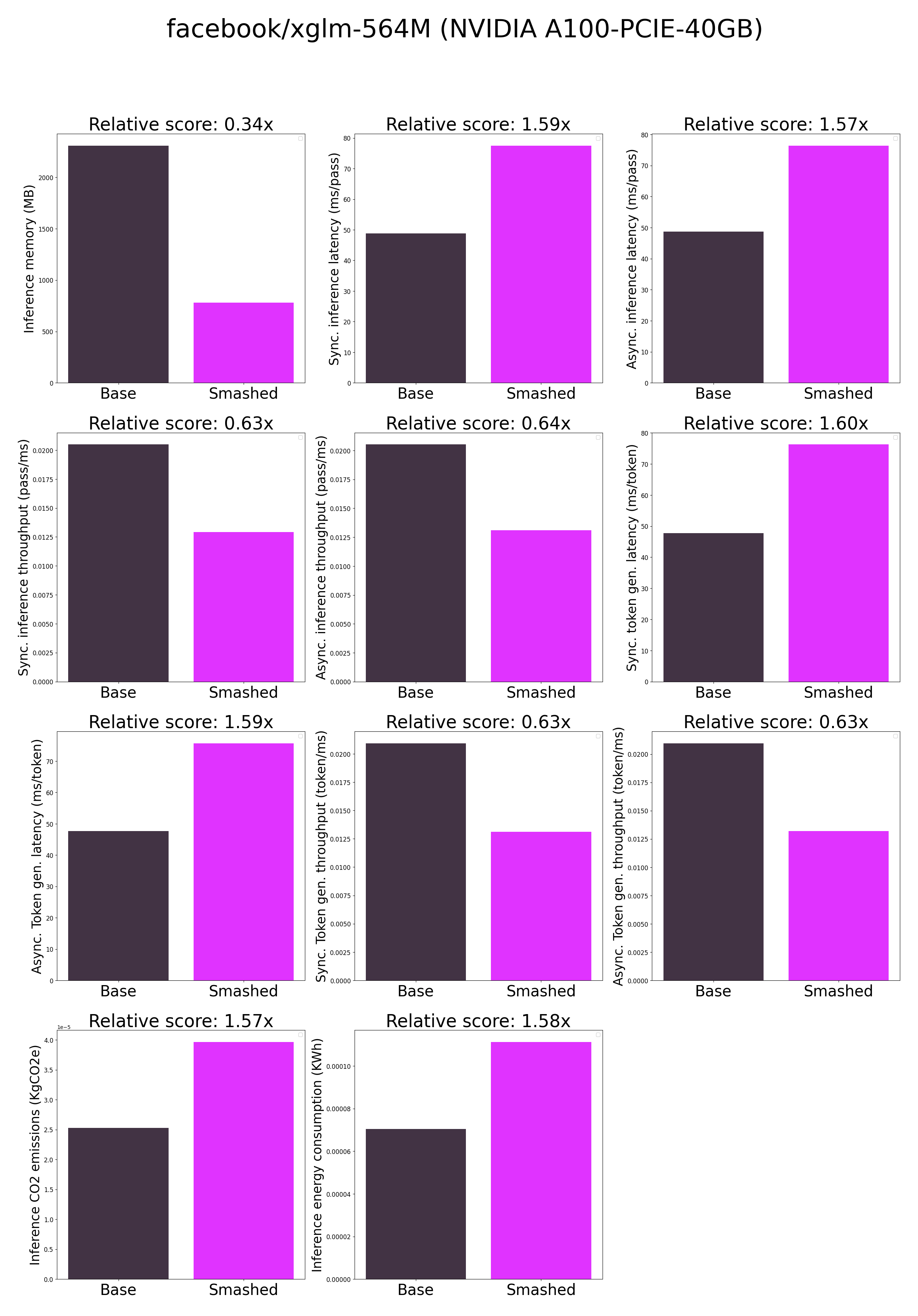

- plots.png +0 -0

- smash_config.json +2 -2

config.json CHANGED

```diff
@@ -1,5 +1,5 @@
 {
-  "_name_or_path": "/tmp/
+  "_name_or_path": "/tmp/tmps_3vt0jk",
   "activation_dropout": 0,
   "activation_function": "gelu",
   "architectures": [
```

plots.png CHANGED (binary file; no text diff shown)

smash_config.json CHANGED

```diff
@@ -6,7 +6,7 @@
   "pruning_ratio": 0.0,
   "factorizers": "None",
   "quantizers": "['gptq']",
-  "
+  "weight_quantization_bits": 8,
   "output_deviation": 0.005,
   "compilers": "None",
   "static_batch": true,
@@ -14,7 +14,7 @@
   "controlnet": "None",
   "unet_dim": 4,
   "device": "cuda",
-  "cache_dir": "/ceph/hdd/staff/charpent/.cache/
+  "cache_dir": "/ceph/hdd/staff/charpent/.cache/modelshhx18fkc",
   "batch_size": 1,
   "model_name": "facebook/xglm-564M",
   "task": "text_text_generation",
```
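Several values in smash_config.json are Python literals stored as JSON strings (`"None"`, `"['gptq']"`) rather than native JSON `null` or arrays, while the newly added `weight_quantization_bits` is a plain integer. The following is a minimal sketch, assuming a consumer wants native Python objects, that decodes such strings with `ast.literal_eval`. The helper names `decode_value` and `load_smash_config` are hypothetical and not part of any library, and the fragment holds only the keys visible in the diff above (with the machine-specific `cache_dir` omitted).

```python
import ast
import json

# Subset of the new smash_config.json, limited to keys visible in the diff
# (cache_dir omitted because it is a machine-specific path).
SMASH_CONFIG_FRAGMENT = """
{
  "pruning_ratio": 0.0,
  "factorizers": "None",
  "quantizers": "['gptq']",
  "weight_quantization_bits": 8,
  "output_deviation": 0.005,
  "compilers": "None",
  "static_batch": true,
  "controlnet": "None",
  "unet_dim": 4,
  "device": "cuda",
  "batch_size": 1,
  "model_name": "facebook/xglm-564M",
  "task": "text_text_generation"
}
"""


def decode_value(value):
    """Hypothetical helper: turn string-encoded literals like "None" or "['gptq']" into objects.

    Assumption: any string that parses as a Python literal was written with str();
    other strings (e.g. "cuda", "facebook/xglm-564M") are returned unchanged.
    """
    if not isinstance(value, str):
        return value  # already a native JSON type (number, bool, ...)
    try:
        return ast.literal_eval(value)
    except (ValueError, SyntaxError, TypeError):
        return value  # not a Python literal, keep the raw string


def load_smash_config(text):
    """Hypothetical helper: parse the JSON, then decode each top-level value."""
    raw = json.loads(text)
    return {key: decode_value(val) for key, val in raw.items()}


config = load_smash_config(SMASH_CONFIG_FRAGMENT)
print(config["quantizers"], config["weight_quantization_bits"], config["compilers"])
# prints: ['gptq'] 8 None
```

A side effect of this approach is that any string that happens to look like a number would also be converted, so a real consumer may prefer to decode only known keys.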