Upload folder using huggingface_hub (#2)

2a13503a7e2cec7614f025bcaada50840bb21b3e41dc7ec291187e6e879d3a5c (5c744185674991e592c46e47c06129666617160c)

- config.json +1 -1

- model.safetensors +1 -1

- plots.png +0 -0

- smash_config.json +9 -5

config.json

CHANGED

```diff
@@ -1,5 +1,5 @@
 {
-  "_name_or_path": "/tmp/
+  "_name_or_path": "/tmp/tmp61xxbtu2",
   "architectures": [
     "LlamaForCausalLM"
   ],
```
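The file summary reports `config.json +1 -1`, so the `_name_or_path` line is the only change; its new value looks like a temporary directory (`/tmp/tmp61xxbtu2`). As a minimal sketch, assuming both revisions are loaded as Python dicts, a top-level key comparison that skips `_name_or_path` can confirm that nothing else differs. `changed_keys` is a hypothetical helper, and the "before" path in the demo is a placeholder because the old value is truncated in this diff.

```python
import json
from typing import Any, Dict, FrozenSet, Tuple


def changed_keys(
    old: Dict[str, Any],
    new: Dict[str, Any],
    ignore: FrozenSet[str] = frozenset(),
) -> Dict[str, Tuple[Any, Any]]:
    """Return {key: (old_value, new_value)} for top-level keys that differ.

    A key missing from one side is reported with None on that side.
    Keys listed in `ignore` are skipped entirely.
    """
    diffs: Dict[str, Tuple[Any, Any]] = {}
    for key in sorted(old.keys() | new.keys()):
        if key in ignore:
            continue
        before, after = old.get(key), new.get(key)
        if before != after:
            diffs[key] = (before, after)
    return diffs


if __name__ == "__main__":
    # Placeholder "before" value: the real old path is truncated in the diff.
    old_cfg = {"_name_or_path": "/tmp/old_dir", "architectures": ["LlamaForCausalLM"]}
    new_cfg = json.loads(
        '{"_name_or_path": "/tmp/tmp61xxbtu2", "architectures": ["LlamaForCausalLM"]}'
    )
    print(changed_keys(old_cfg, new_cfg))
    print(changed_keys(old_cfg, new_cfg, ignore=frozenset({"_name_or_path"})))
```

Ignoring `_name_or_path` is a design choice: it records where the model was saved from rather than anything about the architecture, so it can change between uploads without affecting the model.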

model.safetensors

CHANGED

```diff
@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
+oid sha256:7ade3a95b6c19f19dc77b1987e8af899a62f9baa2f166e258586468aa23cf31f
 size 1534631736
```
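The weights file is stored through Git LFS, so the diff only touches the pointer file: the `oid sha256:` line changed while `size 1534631736` stayed the same. The new weights therefore have a different SHA-256 digest but an identical byte count. The sketch below is a hedged illustration, not an official tool: it parses a pointer in this three-line format and checks a locally downloaded file against it. The local path `model.safetensors` is an assumption.

```python
import hashlib
from pathlib import Path
from typing import Dict


def parse_lfs_pointer(text: str) -> Dict[str, str]:
    """Split each 'key value' line of a Git LFS pointer into a dict entry."""
    fields: Dict[str, str] = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


def matches_pointer(path: Path, pointer_text: str, chunk_size: int = 1 << 20) -> bool:
    """Return True if the file's size and SHA-256 digest match the pointer."""
    fields = parse_lfs_pointer(pointer_text)
    algo, _, expected_hex = fields["oid"].partition(":")
    if algo != "sha256":
        raise ValueError(f"unsupported oid algorithm: {algo}")
    # Cheap check first: a size mismatch means the content cannot match.
    if path.stat().st_size != int(fields["size"]):
        return False
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        # Hash in chunks so a ~1.5 GB file is never fully loaded into memory.
        while chunk := fh.read(chunk_size):
            digest.update(chunk)
    return digest.hexdigest() == expected_hex


POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:7ade3a95b6c19f19dc77b1987e8af899a62f9baa2f166e258586468aa23cf31f
size 1534631736
"""

if __name__ == "__main__":
    print(parse_lfs_pointer(POINTER)["size"])
    local = Path("model.safetensors")  # assumed download location
    if local.exists():
        print(matches_pointer(local, POINTER))
```

Because the size is unchanged between the two revisions, only the digest comparison can tell the old and new weights apart.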

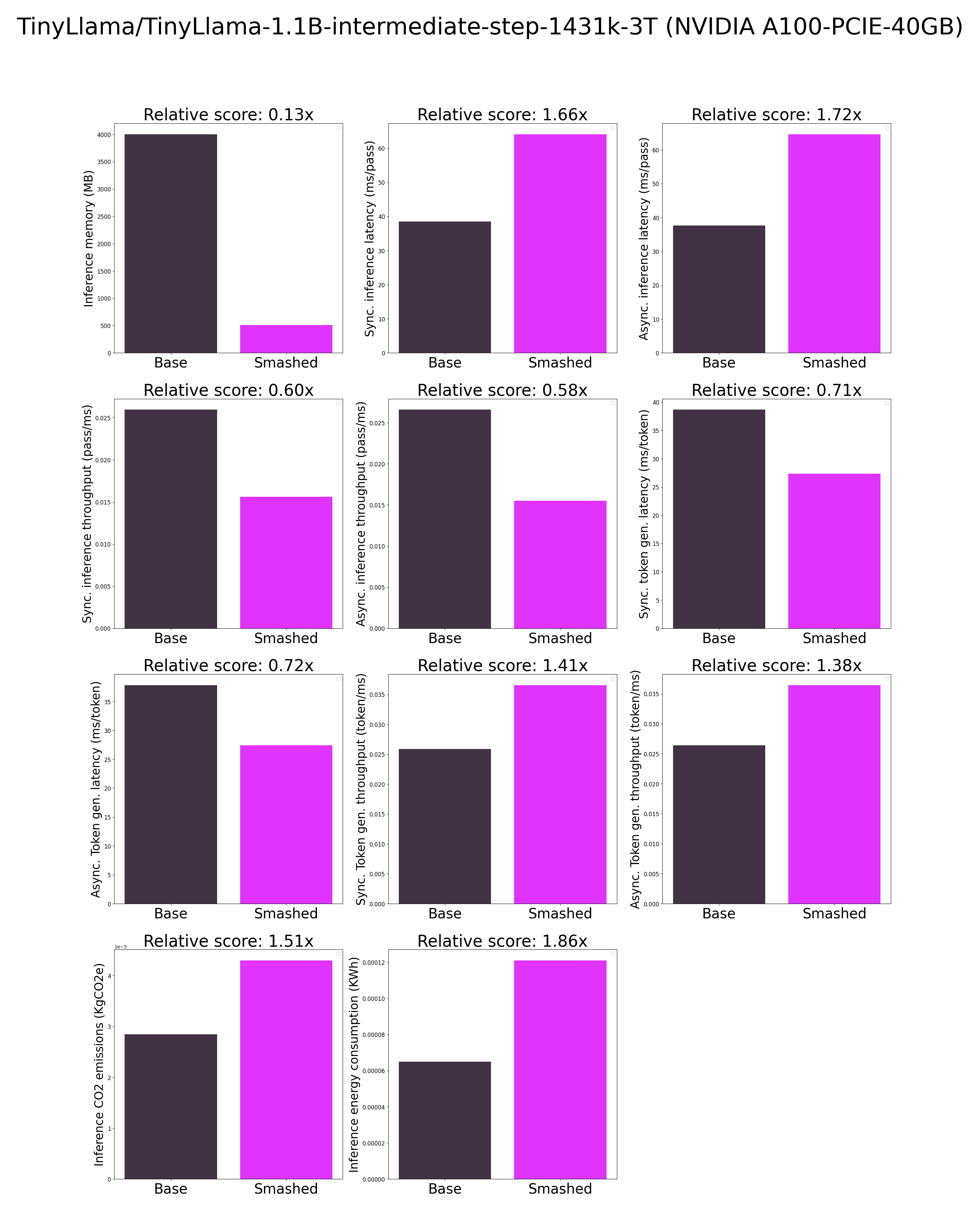

plots.png

CHANGED

|

|

smash_config.json

CHANGED

```diff
@@ -3,17 +3,21 @@
   "verify_url": "http://johnrachwan.pythonanywhere.com",
   "smash_config": {
     "pruners": "None",
+    "pruning_ratio": 0.0,
     "factorizers": "None",
     "quantizers": "['gptq']",
+    "weight_quantization_bits": 8,
+    "output_deviation": 0.005,
     "compilers": "None",
-    "
+    "static_batch": true,
+    "static_shape": true,
+    "controlnet": "None",
+    "unet_dim": 4,
     "device": "cuda",
-    "cache_dir": "/ceph/hdd/staff/charpent/.cache/
+    "cache_dir": "/ceph/hdd/staff/charpent/.cache/modelsc0ox81qm",
     "batch_size": 1,
     "model_name": "TinyLlama/TinyLlama-1.1B-intermediate-step-1431k-3T",
-    "
-    "n_quantization_bits": 8,
-    "output_deviation": 0.005,
+    "task": "text_text_generation",
     "max_batch_size": 1,
     "qtype_weight": "torch.qint8",
     "qtype_activation": "torch.quint8",
```
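Two details of the updated `smash_config` matter to anything that reads it. First, several values are stringified Python literals (`"None"`, `"['gptq']"`) rather than JSON `null` or arrays. Second, `n_quantization_bits` was removed and `weight_quantization_bits` added with the same value, which looks like a rename. As a minimal sketch, assuming a reader must handle both revisions, the hypothetical helpers `normalize` and `weight_bits` below (not part of any library, including whatever tool wrote this file) convert literal strings back into Python objects and accept either key name.

```python
import ast
import json
from typing import Any, Dict, Optional


def normalize(value: Any) -> Any:
    """Turn stringified Python literals such as "None" or "['gptq']" into objects.

    Non-strings, and strings that are not valid Python literals (e.g. "cuda",
    "torch.qint8", file paths), are returned unchanged. Caveat: a string such
    as "1" would also be converted, to the int 1.
    """
    if not isinstance(value, str):
        return value
    try:
        return ast.literal_eval(value)
    except (ValueError, SyntaxError):
        return value


def weight_bits(smash: Dict[str, Any]) -> Optional[int]:
    """Return the weight bit width under the new key name or the old one."""
    for key in ("weight_quantization_bits", "n_quantization_bits"):
        if key in smash:
            return int(smash[key])
    return None


# A subset of the new smash_config entries shown in the diff above.
NEW_SMASH = json.loads("""
{
  "pruners": "None",
  "pruning_ratio": 0.0,
  "factorizers": "None",
  "quantizers": "['gptq']",
  "weight_quantization_bits": 8,
  "output_deviation": 0.005,
  "compilers": "None",
  "device": "cuda",
  "qtype_weight": "torch.qint8",
  "task": "text_text_generation"
}
""")

if __name__ == "__main__":
    cfg = {k: normalize(v) for k, v in NEW_SMASH.items()}
    print(cfg["quantizers"], cfg["pruners"], weight_bits(cfg))  # ['gptq'] None 8
```

`ast.literal_eval` is used instead of `eval` because it only accepts literal syntax and cannot execute arbitrary code from the config file.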