Commit b94fca7

Parent(s): 5ebc2d0

initial commit

- LICENSE +201 -0
- README.MD +1 -0
- README.md +728 -0
- README_en.md +1 -0
- README_zh.MD +1 -0
- assets/imgs/llama_cpp_1.png +0 -0
- assets/imgs/model_cap_en.png +0 -0
- assets/imgs/model_cap_zh.png +0 -0
- assets/imgs/opencompass_en.png +0 -0
- assets/imgs/opencompass_zh.png +0 -0
- assets/imgs/orion_start.PNG +0 -0
- assets/imgs/vllm_1.png +0 -0
- assets/imgs/wechat_group.jpg +0 -0
- config.json +31 -0
- configuration.json +1 -0
- configuration_orion.py +82 -0
- modeling_orion.py +1097 -0
- pytorch_model-00001-of-00002.bin +3 -0
- pytorch_model-00002-of-00002.bin +3 -0
- pytorch_model.bin.index.json +491 -0
- special_tokens_map.json +24 -0
- tokenization_orion.py +269 -0
- tokenizer.model +3 -0
- tokenizer_config.json +46 -0
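The file list shows the weights split into two `pytorch_model-0000N-of-00002.bin` shards, plus a `pytorch_model.bin.index.json`. Assuming this index follows the usual Hugging Face sharded-checkpoint layout (a `metadata` object and a `weight_map` from parameter name to shard file), the sketch below shows how a loader can work out which shard holds a given tensor. Only the two shard file names come from this commit. The tensor names and the `total_size` value are hypothetical placeholders.

```python
import json
from collections import defaultdict

# Hypothetical excerpt of pytorch_model.bin.index.json. The shard file names
# match this commit; tensor names and total_size are illustrative placeholders.
INDEX_JSON = """
{
  "metadata": {"total_size": 0},
  "weight_map": {
    "model.embed_tokens.weight": "pytorch_model-00001-of-00002.bin",
    "model.layers.0.self_attn.q_proj.weight": "pytorch_model-00001-of-00002.bin",
    "model.layers.39.mlp.down_proj.weight": "pytorch_model-00002-of-00002.bin",
    "lm_head.weight": "pytorch_model-00002-of-00002.bin"
  }
}
"""


def tensors_by_shard(index: dict) -> dict:
    """Group parameter names by the shard file that stores them (both sorted)."""
    groups = defaultdict(list)
    for name, shard_file in index["weight_map"].items():
        groups[shard_file].append(name)
    return {shard: sorted(names) for shard, names in sorted(groups.items())}


def shard_for(index: dict, tensor_name: str) -> str:
    """Return the shard file holding tensor_name; raises KeyError if unknown."""
    return index["weight_map"][tensor_name]


if __name__ == "__main__":
    index = json.loads(INDEX_JSON)
    for shard, names in tensors_by_shard(index).items():
        print(f"{shard}: {len(names)} tensors")
    print(shard_for(index, "lm_head.weight"))
```

With real files, a loader only needs to open the shards that `weight_map` points to. `transformers` performs this lookup itself when it loads a sharded repository.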

LICENSE

ADDED


@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

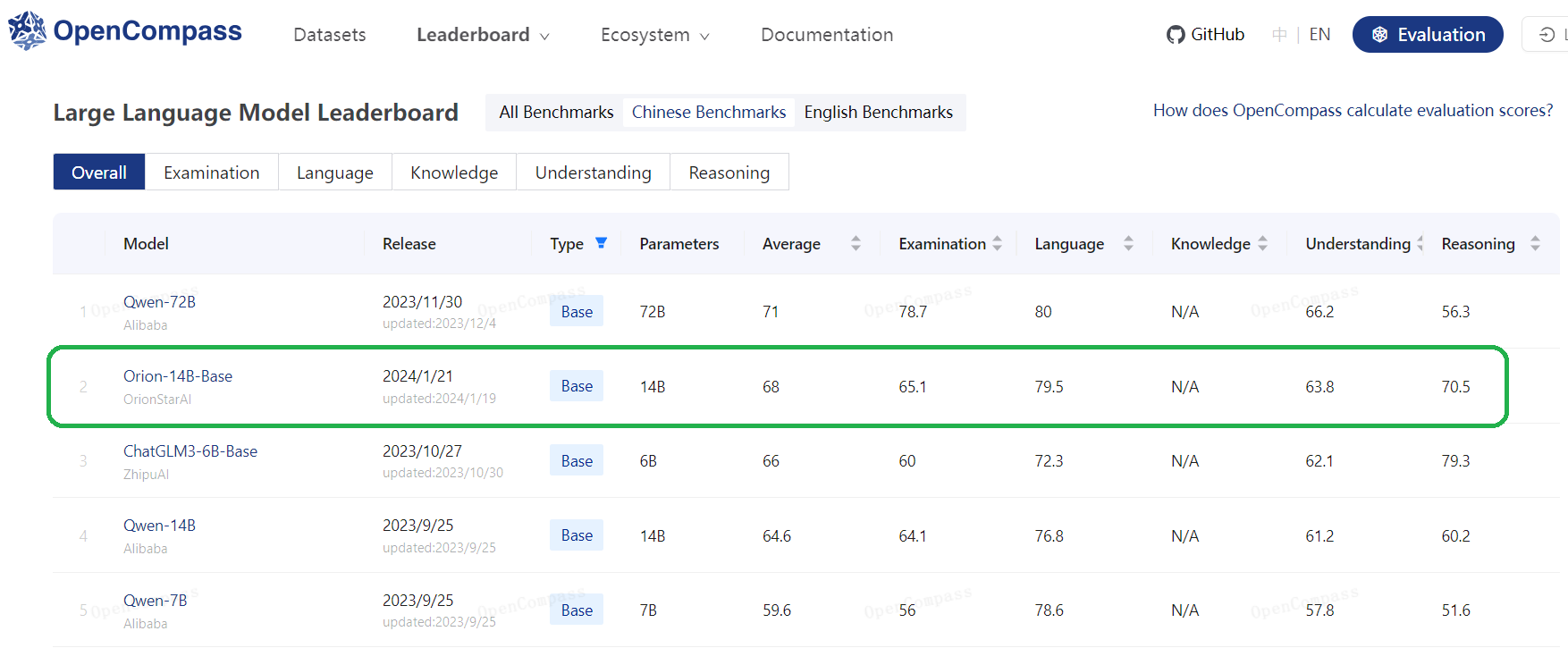

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction,
and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by
the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all
other entities that control, are controlled by, or are under common
control with that entity. For the purposes of this definition,
"control" means (i) the power, direct or indirect, to cause the
direction or management of such entity, whether by contract or
otherwise, or (ii) ownership of fifty percent (50%) or more of the
outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity
exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications,
including but not limited to software source code, documentation
source, and configuration files.

"Object" form shall mean any form resulting from mechanical
transformation or translation of a Source form, including but
not limited to compiled object code, generated documentation,
and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or
Object form, made available under the License, as indicated by a
copyright notice that is included in or attached to the work
(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object
form, that is based on (or derived from) the Work and for which the
editorial revisions, annotations, elaborations, or other modifications
represent, as a whole, an original work of authorship. For the purposes
of this License, Derivative Works shall not include works that remain
separable from, or merely link (or bind by name) to the interfaces of,
the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including
the original version of the Work and any modifications or additions
to that Work or Derivative Works thereof, that is intentionally
submitted to Licensor for inclusion in the Work by the copyright owner
or by an individual or Legal Entity authorized to submit on behalf of
the copyright owner. For the purposes of this definition, "submitted"
means any form of electronic, verbal, or written communication sent
to the Licensor or its representatives, including but not limited to
communication on electronic mailing lists, source code control systems,
and issue tracking systems that are managed by, or on behalf of, the
Licensor for the purpose of discussing and improving the Work, but
excluding communication that is conspicuously marked or otherwise
designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity
on behalf of whom a Contribution has been received by Licensor and
subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
copyright license to reproduce, prepare Derivative Works of,
publicly display, publicly perform, sublicense, and distribute the
Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
(except as stated in this section) patent license to make, have made,
use, offer to sell, sell, import, and otherwise transfer the Work,
where such license applies only to those patent claims licensable
by such Contributor that are necessarily infringed by their
Contribution(s) alone or by combination of their Contribution(s)
with the Work to which such Contribution(s) was submitted. If You
institute patent litigation against any entity (including a
cross-claim or counterclaim in a lawsuit) alleging that the Work
or a Contribution incorporated within the Work constitutes direct
or contributory patent infringement, then any patent licenses
granted to You under this License for that Work shall terminate
as of the date such litigation is filed.

4. Redistribution. You may reproduce and distribute copies of the
Work or Derivative Works thereof in any medium, with or without
modifications, and in Source or Object form, provided that You
meet the following conditions:

(a) You must give any other recipients of the Work or
Derivative Works a copy of this License; and

(b) You must cause any modified files to carry prominent notices
stating that You changed the files; and

(c) You must retain, in the Source form of any Derivative Works
that You distribute, all copyright, patent, trademark, and
attribution notices from the Source form of the Work,
excluding those notices that do not pertain to any part of
the Derivative Works; and

(d) If the Work includes a "NOTICE" text file as part of its
distribution, then any Derivative Works that You distribute must
include a readable copy of the attribution notices contained
within such NOTICE file, excluding those notices that do not
pertain to any part of the Derivative Works, in at least one
of the following places: within a NOTICE text file distributed
as part of the Derivative Works; within the Source form or
documentation, if provided along with the Derivative Works; or,
within a display generated by the Derivative Works, if and
wherever such third-party notices normally appear. The contents
of the NOTICE file are for informational purposes only and
do not modify the License. You may add Your own attribution
notices within Derivative Works that You distribute, alongside
or as an addendum to the NOTICE text from the Work, provided
that such additional attribution notices cannot be construed
as modifying the License.

You may add Your own copyright statement to Your modifications and
may provide additional or different license terms and conditions
for use, reproduction, or distribution of Your modifications, or
for any such Derivative Works as a whole, provided Your use,
reproduction, and distribution of the Work otherwise complies with
the conditions stated in this License.

5. Submission of Contributions. Unless You explicitly state otherwise,
any Contribution intentionally submitted for inclusion in the Work
by You to the Licensor shall be under the terms and conditions of
this License, without any additional terms or conditions.
Notwithstanding the above, nothing herein shall supersede or modify
the terms of any separate license agreement you may have executed
with Licensor regarding such Contributions.

6. Trademarks. This License does not grant permission to use the trade
names, trademarks, service marks, or product names of the Licensor,
except as required for reasonable and customary use in describing the
origin of the Work and reproducing the content of the NOTICE file.

7. Disclaimer of Warranty. Unless required by applicable law or
agreed to in writing, Licensor provides the Work (and each
Contributor provides its Contributions) on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
implied, including, without limitation, any warranties or conditions
of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
PARTICULAR PURPOSE. You are solely responsible for determining the
appropriateness of using or redistributing the Work and assume any
risks associated with Your exercise of permissions under this License.

8. Limitation of Liability. In no event and under no legal theory,
whether in tort (including negligence), contract, or otherwise,
unless required by applicable law (such as deliberate and grossly
negligent acts) or agreed to in writing, shall any Contributor be
liable to You for damages, including any direct, indirect, special,
incidental, or consequential damages of any character arising as a
result of this License or out of the use or inability to use the
Work (including but not limited to damages for loss of goodwill,
work stoppage, computer failure or malfunction, or any and all
other commercial damages or losses), even if such Contributor
has been advised of the possibility of such damages.

9. Accepting Warranty or Additional Liability. While redistributing
the Work or Derivative Works thereof, You may choose to offer,
and charge a fee for, acceptance of support, warranty, indemnity,
or other liability obligations and/or rights consistent with this
License. However, in accepting such obligations, You may act only
on Your own behalf and on Your sole responsibility, not on behalf
of any other Contributor, and only if You agree to indemnify,
defend, and hold each Contributor harmless for any liability
incurred by, or claims asserted against, such Contributor by reason
of your accepting any such warranty or additional liability.

END OF TERMS AND CONDITIONS

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following
boilerplate notice, with the fields enclosed by brackets "[]"
replaced with your own identifying information. (Don't include
the brackets!) The text should be enclosed in the appropriate
comment syntax for the file format. We also recommend that a
file or class name and description of purpose be included on the
same "printed page" as the copyright notice for easier
identification within third-party archives.

Copyright (C) 2023 ORION STAR Robotics

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.

README.MD

ADDED

@@ -0,0 +1 @@

obsolete

README.md

ADDED


@@ -0,0 +1,728 @@


<!-- markdownlint-disable first-line-h1 -->
<!-- markdownlint-disable html -->
<div align="center">
<img src="./assets/imgs/orion_start.PNG" alt="logo" width="30%" />
</div>

<div align="center">
<h1>
Orion-14B
</h1>
</div>

<div align="center">

<div align="center">
<b>🇨🇳中文</b> | <a href="#table-of-contents">🌐English</a>
</div>

<h4 align="center">
<p>
🤗 <a href="https://huggingface.co/OrionStarAI" target="_blank">HuggingFace Homepage</a> | 🤖 <a href="https://modelscope.cn/organization/OrionStarAI" target="_blank">ModelScope Homepage</a><br>🎬 <a href="https://huggingface.co/spaces/OrionStarAI/Orion-14B-App-Demo" target="_blank">HuggingFace Online Demo</a> | 🎫 <a href="https://modelscope.cn/studios/OrionStarAI/Orion-14B-App-Demo/summary" target="_blank">ModelScope Online Demo</a><br>😺 <a href="https://github.com/OrionStarAI/Orion" target="_blank">GitHub</a><br>📖 <a href="https://github.com/OrionStarAI/Orion/blob/master/doc/Orion14B_v3.pdf" target="_blank">Technical Report</a>
</p>
</h4>

</div>

# Contents

- [📖 Model Introduction](#zh_model-introduction)
- [🔗 Model Download](#zh_model-download)
- [🔖 Model Benchmarks](#zh_model-benchmark)
- [📊 Model Inference](#zh_model-inference) [<img src="./assets/imgs/vllm_1.png" alt="vllm" style="margin: 0;display: initial;" height="20" />](#vllm) [<img src="./assets/imgs/llama_cpp_1.png" alt="llamacpp" style="margin: 0;display: initial;" height="20" />](#llama-cpp)
- [📜 Declarations & License](#zh_declarations-license)
- [🥇 Company Introduction](#zh_company-introduction)

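<a name="zh_model-introduction"></a><br>
# 1. Model Introduction

- Orion-14B-Base is a multilingual large language model with 14 billion parameters, trained on a diverse dataset of 2.5 trillion tokens covering Chinese, English, Japanese, Korean, and other languages. It performs strongly across a range of multilingual tasks. On mainstream public benchmarks, the Orion-14B series significantly outperforms other models of a similar parameter scale on many metrics. For technical details, see the [technical report](https://github.com/OrionStarAI/Orion/blob/master/doc/Orion14B_v3.pdf).

- The Orion-14B series has the following features:
  - Among base models at the 20B-parameter scale, it achieves excellent results in comprehensive evaluations
  - Strong multilingual capability, with a significant lead on Japanese and Korean test sets
  - The fine-tuned models adapt well and stand out in human-annotated blind tests
  - The long-context version handles very long texts, performing well at 200k tokens and supporting up to 320k
  - The quantized version reduces model size by 70% and increases inference speed by 30%, with a performance loss of less than 1%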

<table style="border-collapse: collapse; width: 100%;">
  <tr>
    <td style="border: none; padding: 10px; box-sizing: border-box;">
      <img src="./assets/imgs/opencompass_zh.png" alt="opencompass" style="width: 100%; height: auto;">
    </td>
    <td style="border: none; padding: 10px; box-sizing: border-box;">
      <img src="./assets/imgs/model_cap_zh.png" alt="modelcap" style="width: 100%; height: auto;">
    </td>
  </tr>
</table>

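- Specifically, the Orion-14B series of large language models includes:
  - **Orion-14B-Base:** A multilingual base model with 14 billion parameters, trained on a diverse dataset of 2.5 trillion tokens.
  - **Orion-14B-Chat:** A chat model fine-tuned on a high-quality corpus, intended to give the LLM community a better user-interaction experience.
  - **Orion-14B-LongChat:** Performs well at a context length of 200k tokens and supports up to 320k. On long-text evaluation sets it performs on par with proprietary models.
  - **Orion-14B-Chat-RAG:** A chat model fine-tuned on a custom retrieval-augmented generation (RAG) dataset, with strong performance on RAG tasks.
  - **Orion-14B-Chat-Plugin:** A chat model tailored to plugin and function-calling tasks. It is well suited to agent scenarios in which the LLM acts as a plugin and function-calling system.
  - **Orion-14B-Base-Int4:** A base model quantized to int4. It reduces model size by 70% and increases inference speed by 30%, with a performance loss of only about 1%.
  - **Orion-14B-Chat-Int4:** A chat model quantized to int4.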
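The 70% size reduction quoted for the Int4 models is a measured figure from this README. The back-of-envelope sketch below estimates weight storage only, under assumptions that the README does not state: exactly 14 billion parameters, a 16-bit baseline, and group-wise int4 with one 16-bit scale per 128 weights. Under these assumptions the ideal reduction comes out near 74%. A somewhat smaller real-world figure would be plausible if, for example, some tensors stay in 16-bit. The README does not say which quantization scheme Orion uses.

```python
def weight_bytes(num_params: float, bits_per_weight: float) -> float:
    """Bytes needed to store num_params weights at bits_per_weight bits each."""
    return num_params * bits_per_weight / 8


def int4_bits_per_weight(group_size: int = 128, scale_bits: int = 16) -> float:
    """Effective bits per weight for group-wise int4 quantization:
    4 bits per weight plus one scale_bits scale shared by group_size weights.
    Zero-points and any unquantized tensors are ignored (an assumption)."""
    return 4 + scale_bits / group_size


NUM_PARAMS = 14e9  # assumed: the nominal "14B" from the model name

fp16_bytes = weight_bytes(NUM_PARAMS, 16)
int4_bytes = weight_bytes(NUM_PARAMS, int4_bits_per_weight())
reduction = 1 - int4_bytes / fp16_bytes

# prints: 16-bit: 28.0 GB, int4: 7.22 GB, ideal reduction: 74.2%
print(f"16-bit: {fp16_bytes / 1e9:.1f} GB, int4: {int4_bytes / 1e9:.2f} GB, "
      f"ideal reduction: {reduction:.1%}")
```

Smaller groups improve accuracy but add scale overhead. For example, `int4_bits_per_weight(64)` gives 4.25 bits per weight.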

<a name="zh_model-download"></a><br>
# 2. Model Download

The released models and their download links are listed in the table below:

| Model | HuggingFace Download Link | ModelScope Download Link |
|---------------------|-----------------------------------------------------------------------------------|------------------------------------------------------------------------------------------------|
| ⚾ Base Model | [Orion-14B-Base](https://huggingface.co/OrionStarAI/Orion-14B-Base) | [Orion-14B-Base](https://modelscope.cn/models/OrionStarAI/Orion-14B-Base/summary) |
| 😛 Chat Model | [Orion-14B-Chat](https://huggingface.co/OrionStarAI/Orion-14B-Chat) | [Orion-14B-Chat](https://modelscope.cn/models/OrionStarAI/Orion-14B-Chat/summary) |
| 📃 Long-Context Model | [Orion-14B-LongChat](https://huggingface.co/OrionStarAI/Orion-14B-LongChat) | [Orion-14B-LongChat](https://modelscope.cn/models/OrionStarAI/Orion-14B-LongChat/summary) |
| 🔎 RAG Model | [Orion-14B-Chat-RAG](https://huggingface.co/OrionStarAI/Orion-14B-Chat-RAG) | [Orion-14B-Chat-RAG](https://modelscope.cn/models/OrionStarAI/Orion-14B-Chat-RAG/summary) |
| 🔌 Plugin Model | [Orion-14B-Chat-Plugin](https://huggingface.co/OrionStarAI/Orion-14B-Chat-Plugin) | [Orion-14B-Chat-Plugin](https://modelscope.cn/models/OrionStarAI/Orion-14B-Chat-Plugin/summary)|
| 💼 Base Int4 Quantized Model | [Orion-14B-Base-Int4](https://huggingface.co/OrionStarAI/Orion-14B-Base-Int4) | [Orion-14B-Base-Int4](https://modelscope.cn/models/OrionStarAI/Orion-14B-Base-Int4/summary) |
| 📦 Chat Int4 Quantized Model | [Orion-14B-Chat-Int4](https://huggingface.co/OrionStarAI/Orion-14B-Chat-Int4) | [Orion-14B-Chat-Int4](https://modelscope.cn/models/OrionStarAI/Orion-14B-Chat-Int4/summary) |
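Every link in the download table follows the same two URL patterns under the `OrionStarAI` organization. The small helper below (hypothetical, not part of the Orion repository) rebuilds the repository ID and both URLs from a model name, and rejects names that are not in the table.

```python
ORG = "OrionStarAI"

# Model names exactly as listed in the download table.
MODELS = (
    "Orion-14B-Base",
    "Orion-14B-Chat",
    "Orion-14B-LongChat",
    "Orion-14B-Chat-RAG",
    "Orion-14B-Chat-Plugin",
    "Orion-14B-Base-Int4",
    "Orion-14B-Chat-Int4",
)


def _check(model: str) -> None:
    """Raise ValueError for names that are not in the download table."""
    if model not in MODELS:
        raise ValueError(f"unknown model {model!r}; expected one of {MODELS}")


def hf_repo_id(model: str) -> str:
    """Hugging Face repository ID, e.g. 'OrionStarAI/Orion-14B-Chat'."""
    _check(model)
    return f"{ORG}/{model}"


def hf_url(model: str) -> str:
    """Hugging Face model page URL."""
    return f"https://huggingface.co/{hf_repo_id(model)}"


def modelscope_url(model: str) -> str:
    """ModelScope model summary page URL."""
    _check(model)
    return f"https://modelscope.cn/models/{ORG}/{model}/summary"


if __name__ == "__main__":
    for m in MODELS:
        print(f"{m}: {hf_url(m)} | {modelscope_url(m)}")
```

The repository ID is the argument that `transformers.AutoModelForCausalLM.from_pretrained` and `AutoTokenizer.from_pretrained` expect. The repositories ship their own `modeling_orion.py` and `tokenization_orion.py`, so loading them most likely requires `trust_remote_code=True`. Check the model card before relying on that.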

<a name="zh_model-benchmark"></a><br>
# 3. Model Benchmarks

## 3.1. Base Model Orion-14B-Base Benchmarks

### 3.1.1. Evaluation Results on Professional Knowledge and Exam Questions
| Model | C-Eval | CMMLU | MMLU | AGIEval | Gaokao | BBH |
|--------------------|----------|----------|----------|----------|----------|----------|
| LLaMA2-13B | 41.4 | 38.4 | 55.0 | 30.9 | 18.2 | 45.6 |
| Skywork-13B | 59.1 | 61.4 | 62.7 | 43.6 | 56.1 | 48.3 |
| Baichuan2-13B | 59.0 | 61.3 | 59.5 | 37.4 | 45.6 | 49.0 |
| QWEN-14B | 71.7 | 70.2 | 67.9 | 51.9 | **62.5** | 53.7 |
| InternLM-20B | 58.8 | 59.0 | 62.1 | 44.6 | 45.5 | 52.5 |
| **Orion-14B-Base** | **72.9** | **70.6** | **69.9** | **54.7** | 62.1 | **56.5** |

### 3.1.2. Evaluation Results on Language Understanding and Common Knowledge
| Model |RACE-middle|RACE-high| HellaSwag| PIQA | Lambada | WSC |
|--------------------|----------|----------|----------|----------|----------|----------|
| LLaMA 2-13B | 63.0 | 58.9 | 77.5 | 79.8 | 76.5 | 66.3 |
| Skywork-13B | 87.6 | 84.1 | 73.7 | 78.3 | 71.8 | 66.3 |
| Baichuan 2-13B | 68.9 | 67.2 | 70.8 | 78.1 | 74.1 | 66.3 |
| QWEN-14B | 93.0 | 90.3 | **80.2** | 79.8 | 71.4 | 66.3 |
| InternLM-20B | 86.4 | 83.3 | 78.1 | **80.3** | 71.8 | 68.3 |
| **Orion-14B-Base** | **93.2** | **91.3** | 78.5 | 79.5 | **78.8** | **70.2** |

### 3.1.3. Evaluation Results on OpenCompass
| Model | Average | Examination | Language | Knowledge | Understanding | Reasoning |
|------------------|----------|----------|----------|----------|----------|----------|
| LLaMA 2-13B | 47.3 | 45.2 | 47.0 | 58.3 | 50.9 | 43.6 |
| Skywork-13B | 53.6 | 61.1 | 51.3 | 52.7 | 64.5 | 45.2 |
| Baichuan 2-13B | 49.4 | 51.8 | 47.5 | 48.9 | 58.1 | 44.2 |
| QWEN-14B | 62.4 | 71.3 | 52.67 | 56.1 | 68.8 | 60.1 |
| InternLM-20B | 59.4 | 62.5 | 55.0 | **60.1** | 67.3 | 54.9 |
|**Orion-14B-Base**| **64.3** | **71.4** | **55.0** | 60.0 | **71.9** | **61.6** |

### 3.1.4. Evaluation Results on Japanese Test Sets
| Model |**Average**| JCQA | JNLI | MARC | JSQD | JQK | XLS | XWN | MGSM |
|--------------------|----------|----------|----------|----------|----------|----------|----------|----------|----------|
| PLaMo-13B | 52.3 | 56.7 | 42.8 | 95.8 | 70.6 | 71.0 | 8.70 | 70.5 | 2.40 |
| WebLab-10B | 50.7 | 66.6 | 53.7 | 82.1 | 62.9 | 56.2 | 10.0 | 72.0 | 2.40 |
| ELYZA-jp-7B | 48.8 | 71.7 | 25.3 | 86.6 | 70.8 | 64.1 | 2.50 | 62.1 | 7.20 |
| StableLM-jp-7B | 51.1 | 33.4 | 43.3 | 96.7 | 70.6 | 78.1 | 10.7 | 72.8 | 2.80 |
| LLaMA 2-13B | 46.3 | 75.0 | 47.6 | 38.8 | 76.1 | 67.7 | 18.1 | 63.2 | 10.4 |
| Baichuan 2-13B | 57.1 | 73.7 | 31.3 | 91.6 | 80.5 | 63.3 | 18.6 | 72.2 | 25.2 |
| QWEN-14B | 65.8 | 85.9 | 60.7 | **97.0** | 83.3 | 71.8 | 18.8 | 70.6 | 38.0 |
| Yi-34B | 67.1 | 83.8 | 61.2 | 95.2 | **86.1** | 78.5 | **27.2** | 69.2 | 35.2 |
| **Orion-14B-Base** | **69.1** | **88.2** | **75.8** | 94.1 | 75.7 | **85.1** | 17.3 | **78.8** | **38.0** |
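The table does not say how **Average** is computed. Assuming it is the unweighted mean of the eight task scores, each row can be checked by recomputing it. Under that assumption every row agrees to within one-decimal rounding except LLaMA 2-13B: its eight scores average about 49.6, against a reported 46.3. The sketch below makes the check explicit. It does not decide which figure is correct.

```python
from statistics import mean

# Per-task scores (JCQA, JNLI, MARC, JSQD, JQK, XLS, XWN, MGSM) and the
# reported Average, copied from the Japanese table above.
ROWS = {
    "PLaMo-13B": ([56.7, 42.8, 95.8, 70.6, 71.0, 8.70, 70.5, 2.40], 52.3),
    "WebLab-10B": ([66.6, 53.7, 82.1, 62.9, 56.2, 10.0, 72.0, 2.40], 50.7),
    "ELYZA-jp-7B": ([71.7, 25.3, 86.6, 70.8, 64.1, 2.50, 62.1, 7.20], 48.8),
    "StableLM-jp-7B": ([33.4, 43.3, 96.7, 70.6, 78.1, 10.7, 72.8, 2.80], 51.1),
    "LLaMA 2-13B": ([75.0, 47.6, 38.8, 76.1, 67.7, 18.1, 63.2, 10.4], 46.3),
    "Baichuan 2-13B": ([73.7, 31.3, 91.6, 80.5, 63.3, 18.6, 72.2, 25.2], 57.1),
    "QWEN-14B": ([85.9, 60.7, 97.0, 83.3, 71.8, 18.8, 70.6, 38.0], 65.8),
    "Yi-34B": ([83.8, 61.2, 95.2, 86.1, 78.5, 27.2, 69.2, 35.2], 67.1),
    "Orion-14B-Base": ([88.2, 75.8, 94.1, 75.7, 85.1, 17.3, 78.8, 38.0], 69.1),
}

# A value rounded to one decimal differs from the exact mean by at most 0.05;
# the small epsilon absorbs floating-point error.
TOLERANCE = 0.05 + 1e-6


def mismatches(rows: dict) -> list:
    """Models whose reported Average is not the (rounded) unweighted mean."""
    return [
        model
        for model, (scores, reported) in rows.items()
        if abs(mean(scores) - reported) > TOLERANCE
    ]


if __name__ == "__main__":
    for model, (scores, reported) in ROWS.items():
        print(f"{model:16} mean={mean(scores):6.2f} reported={reported}")
    print("mismatches:", mismatches(ROWS))
```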

### 3.1.5. n-shot Evaluation Results on Korean Test Sets
| Model | **Average**<br>n=0 n=5 | HellaSwag<br>n=0 n=5 | COPA<br>n=0 n=5 | BoolQ<br>n=0 n=5 | SentiNeg<br>n=0 n=5 |
|------------------|------------------------------|------------------------------|------------------------------|------------------------------|------------------------------|
| KoGPT | 53.0 70.1 | 55.9 58.3 | 73.5 72.9 | 45.1 59.8 | 37.5 89.4 |
| Polyglot-ko-13B | 69.6 73.7 |**59.5** **63.1**|**79.4** **81.1**| 48.2 60.4 | 91.2 90.2 |
| LLaMA 2-13B | 46.7 63.7 | 41.3 44.0 | 59.3 63.8 | 34.9 73.8 | 51.5 73.4 |
| Baichuan 2-13B | 52.1 58.7 | 39.2 39.6 | 60.6 60.6 | 58.4 61.5 | 50.3 72.9 |
| QWEN-14B | 53.8 73.7 | 45.3 46.8 | 64.9 68.9 | 33.4 83.5 | 71.5 95.7 |
| Yi-34B | 54.2 72.1 | 44.6 44.7 | 58.0 60.6 | 65.9 90.2 | 48.3 92.9 |
|**Orion-14B-Base**|**74.5** **79.6**| 47.0 49.6 | 77.7 79.4 |**81.6** **90.7**|**92.4** **98.7**|

|

| 146 |

+

|

| 147 |

+

### 3.1.6. Multilingual Evaluation Results
| Model | Train Lang | Japanese | Korean | Chinese | English |
|--------------------|------------|----------|----------|----------|----------|
| PLaMo-13B | En,Jp | 52.3 | * | * | * |
| Weblab-10B | En,Jp | 50.7 | * | * | * |
| ELYZA-jp-7B | En,Jp | 48.8 | * | * | * |
| StableLM-jp-7B | En,Jp | 51.1 | * | * | * |
| KoGPT-6B | En,Ko | * | 70.1 | * | * |
| Polyglot-ko-13B | En,Ko | * | 70.7 | * | * |
| Baichuan2-13B | Multi | 57.1 | 58.7 | 50.8 | 57.1 |
| Qwen-14B | Multi | 65.8 | 73.7 | 64.5 | 65.4 |
| Llama2-13B | Multi | 46.3 | 63.7 | 41.4 | 55.3 |
| Yi-34B | Multi | 67.1 | 72.2 | 58.7 | **68.8** |
| **Orion-14B-Base** | Multi | **69.1** | **79.5** | **67.9** | 67.3 |

## 3.2. Chat Model Orion-14B-Chat Evaluation
### 3.2.1. Chat Model Subjective Evaluation on MTBench
| Model | First Turn | Second Turn | **Average** |
|----------------------|----------|----------|----------|
| Baichuan2-13B-Chat | 7.05 | 6.47 | 6.76 |
| Qwen-14B-Chat | 7.30 | 6.62 | 6.96 |
| Llama2-13B-Chat | 7.10 | 6.20 | 6.65 |
| InternLM-20B-Chat | 7.03 | 5.93 | 6.48 |
| **Orion-14B-Chat** | **7.68** | **7.07** | **7.37** |

\* Inference for this evaluation was performed with vLLM.

### 3.2.2. Chat Model Subjective Evaluation on AlignBench
| Model | Math | Logical Reasoning | Basic Abilities | Chinese Understanding | Comprehensive Q&A | Writing | Role-Playing | Professional Knowledge | **Average** |
|--------------------|----------|----------|----------|----------|----------|----------|----------|----------|----------|
| Baichuan2-13B-Chat | 3.76 | 4.07 | 6.22 | 6.05 | 7.11 | 6.97 | 6.75 | 6.43 | 5.25 |
| Qwen-14B-Chat | **4.91** | **4.71** | **6.90** | 6.36 | 6.74 | 6.64 | 6.59 | 6.56 | **5.72** |
| Llama2-13B-Chat | 3.05 | 3.79 | 5.43 | 4.40 | 6.76 | 6.63 | 6.99 | 5.65 | 4.70 |
| InternLM-20B-Chat | 3.39 | 3.92 | 5.96 | 5.50 | **7.18** | 6.19 | 6.49 | 6.22 | 4.96 |
| **Orion-14B-Chat** | 4.00 | 4.24 | 6.18 | **6.57** | 7.16 | **7.36** | **7.16** | **6.99** | 5.51 |

\* Inference for this evaluation was performed with vLLM.

## 3.3. Long-Context Model Orion-14B-LongChat Evaluation
### 3.3.1. Long-Context Model LongBench Evaluation
| Model | NarrativeQA| MultiFieldQA-en| MultiFieldQA-zh | DuReader | QMSum | VCSUM | TREC | TriviaQA | LSHT | RepoBench-P |
|--------------------------|-----------|-----------|-----------|-----------|-----------|-----------|-----------|-----------|-----------|-----------|
| GPT-3.5-Turbo-16k | **23.60** | **52.30** | **61.20** | 28.70 | 23.40 | **16.00** | 68.00 | **91.40** | 29.20 | 53.60 |
| LongChat-v1.5-7B-32k | 16.90 | 41.40 | 29.10 | 19.50 | 22.70 | 9.90 | 63.50 | 82.30 | 23.20 | 55.30 |
| Vicuna-v1.5-7B-16k | 19.40 | 38.50 | 43.00 | 19.30 | 22.80 | 15.10 | 71.50 | 86.20 | 28.80 | 43.50 |
| Yi-6B-200K | 14.11 | 36.74 | 22.68 | 14.01 | 20.44 | 8.08 | 72.00 | 86.61 | 38.00 | **63.29** |
| Orion-14B-LongChat | 19.47 | 48.11 | 55.84 | **37.02** | **24.87** | 15.44 | **77.00** | 89.12 | **45.50** | 54.31 |

## 3.4. Retrieval-Augmented Model Orion-14B-Chat-RAG Evaluation
### 3.4.1. Results on a Self-Built Retrieval-Augmented Test Set
|Model|Response quality (keyword)|\*Response quality (subjective score)|Citation ability|Fallback ability|\*AutoQA|\*Data extraction|
|---------------------|------|------|------|------|------|------|
| Baichuan2-13B-Chat | 85 | 76 | 1 | 0 | 69 | 51 |
| Qwen-14B-Chat | 79 | 77 | 75 | 47 | 68 | 72 |
| Qwen-72B-Chat(Int4) | 87 | 89 | 90 | 32 | 67 | 76 |
| GPT-4 | 91 | 94 | 96 | 95 | 75 | 86 |
| Orion-14B-Chat-RAG | 86 | 87 | 91 | 97 | 73 | 71 |

\* Denotes results from human evaluation.

## 3.5. Plugin Model Orion-14B-Chat-Plugin Evaluation
### 3.5.1. Results on a Self-Built Plugin Test Set
| Model | Intent recognition (all parameters given) | Intent recognition (missing parameters) | Non-plugin-call recognition |
|-----------------------|--------|-----------|--------|
| Baichuan2-13B-Chat | 25 | 0 | 0 |
| Qwen-14B-Chat | 55 | 0 | 50 |
| GPT-4 | **95** | 52.38 | 70 |
| Orion-14B-Chat-Plugin | 92.5 | **60.32** | **90** |

## 3.6. Quantized Model Orion-14B-Base-Int4 Evaluation
### 3.6.1. Overall Comparison Before and After Quantization
|Model|Model size (GB)|Inference speed (tokens/s)|C-Eval|CMMLU|MMLU|RACE|HellaSwag|
|-------------------------|------|-----|------|------|------|------|------|
| OrionStar-14B-Base | 28.0 | 135 | 72.8 | 70.6 | 70.0 | 93.3 | 78.5 |
| OrionStar-14B-Base-Int4 | 8.3 | 178 | 71.8 | 69.8 | 69.2 | 93.1 | 78.0 |
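
The headline claims for the Int4 model (roughly 70% smaller and 30% faster) can be checked directly against the table above. The short script below only does arithmetic on the table's own numbers and measures nothing itself. The dictionary names are illustrative.

```python
# Numbers copied from the table above: full-precision base model vs. its Int4 version.
size_gb = {"base": 28.0, "int4": 8.3}
tokens_per_s = {"base": 135, "int4": 178}
scores = {  # benchmark: (base score, Int4 score)
    "C-Eval": (72.8, 71.8),
    "CMMLU": (70.6, 69.8),
    "MMLU": (70.0, 69.2),
    "RACE": (93.3, 93.1),
    "HellaSwag": (78.5, 78.0),
}

size_reduction = 1 - size_gb["int4"] / size_gb["base"]     # fraction of model size saved
speedup = tokens_per_s["int4"] / tokens_per_s["base"] - 1  # fractional throughput gain
# Absolute score drop per benchmark in points, rounded to hide floating-point noise.
drops = {name: round(base - q, 1) for name, (base, q) in scores.items()}

print(f"size reduction: {size_reduction:.1%}")  # size reduction: 70.4%
print(f"speed-up: {speedup:.1%}")               # speed-up: 31.9%
print("score drop (points):", drops)
```

By the same table, the largest drop is 1.0 point (C-Eval), and the smallest is 0.2 points (RACE).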

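<a name="zh_model-inference"></a><br>
# 4. Model Inference

The model weights, source code, and configuration files required for inference are published on Hugging Face; download links are in the table at the beginning of this document. Several inference methods are demonstrated below. The required resources are downloaded from Hugging Face automatically.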

## 4.1. Python Code

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer
from transformers.generation.utils import GenerationConfig

# model.chat() below is a chat interface, so load the chat checkpoint.
model_id = "OrionStarAI/Orion-14B-Chat"

# trust_remote_code=True is required because the tokenizer and model classes ship with the repository.
tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=False, trust_remote_code=True)
# device_map="auto" spreads the weights across the available devices; bfloat16 halves memory vs. float32.
model = AutoModelForCausalLM.from_pretrained(model_id, device_map="auto",
                                             torch_dtype=torch.bfloat16, trust_remote_code=True)

# Use the generation settings published with the model.
model.generation_config = GenerationConfig.from_pretrained(model_id)
messages = [{"role": "user", "content": "你好! 你叫什么名字!"}]  # "Hello! What is your name?"
response = model.chat(tokenizer, messages, streaming=False)
print(response)
```

In the code above, the model is loaded with `device_map='auto'`, so all available GPUs are used. To choose which devices are used, set an environment variable such as `export CUDA_VISIBLE_DEVICES=0,1` (which exposes only GPUs 0 and 1).
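
The `CUDA_VISIBLE_DEVICES` restriction described above can also be applied from inside a Python script. The sketch below assumes the variable is set before CUDA is initialized in the process; importing `torch` only afterwards is the simplest way to guarantee this. `restrict_visible_gpus` is a hypothetical helper and is not part of this repository.

```python
import os

def restrict_visible_gpus(device_ids):
    """Expose only the listed CUDA devices to this process (hypothetical helper).

    CUDA reads CUDA_VISIBLE_DEVICES once, when it is initialized, so call this
    before importing torch or loading the model.
    """
    value = ",".join(str(i) for i in device_ids)
    os.environ["CUDA_VISIBLE_DEVICES"] = value
    return value

restrict_visible_gpus([0, 1])  # same effect as `export CUDA_VISIBLE_DEVICES=0,1`
# Import torch and load the model only after this point; device_map="auto"
# then sees just these two GPUs, renumbered as cuda:0 and cuda:1.
```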

## 4.2. Command-Line Tool

```shell
CUDA_VISIBLE_DEVICES=0 python cli_demo.py
```

This command-line tool is designed for chat scenarios, so using it with the Base model is not supported.

## 4.3. Direct Script Inference

```shell
# base model (the prompt means "Hello, what is your name?")
CUDA_VISIBLE_DEVICES=0 python demo/text_generation_base.py --model OrionStarAI/Orion-14B-Base --tokenizer OrionStarAI/Orion-14B-Base --prompt "你好,你叫什么名字"

# chat model
CUDA_VISIBLE_DEVICES=0 python demo/text_generation.py --model OrionStarAI/Orion-14B-Chat --tokenizer OrionStarAI/Orion-14B-Chat --prompt "你好,你叫什么名字"
```

<a name="vllm"></a><br>
## 4.4. Inference with vLLM
- Project URL<br>
https://github.com/vllm-project/vllm

- Pull request<br>
https://github.com/vllm-project/vllm/pull/2539
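
This example assumes a vLLM version that includes the change from the pull request above. One common way to serve the model is vLLM's OpenAI-compatible server, for example `python -m vllm.entrypoints.openai.api_server --model OrionStarAI/Orion-14B-Chat --trust-remote-code`. The sketch below assumes such a server is listening on vLLM's default port 8000. It uses only the standard library to build a `/v1/completions` request without sending it. The helper name `build_completion_request` and the sampling values are illustrative and are not part of vLLM or this repository.

```python
import json
import urllib.request

def build_completion_request(prompt, model="OrionStarAI/Orion-14B-Chat",
                             base_url="http://localhost:8000",
                             max_tokens=256, temperature=0.7):
    """Build (but do not send) a POST request for an OpenAI-compatible /v1/completions endpoint."""
    payload = {
        "model": model,          # must match the --model the server was started with
        "prompt": prompt,        # sent as raw text; no chat template is applied on this endpoint
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

req = build_completion_request("Hello, what is your name?")
# With a server running, send the request and print the generated text:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["text"])
```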

<a name="llama-cpp"></a><br>
## 4.5. Inference with llama.cpp

- Project URL<br>
https://github.com/ggerganov/llama.cpp

- Pull request<br>
https://github.com/ggerganov/llama.cpp/pull/5118

- How to convert a model to GGUF format

```shell
# Run from the root of a llama.cpp checkout; path/to/Orion-14B-Chat is the downloaded Hugging Face model directory.
python convert-hf-to-gguf.py path/to/Orion-14B-Chat --outfile chat.gguf
```
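
A quick way to confirm that the conversion produced a well-formed file is to read its header. In the current GGUF specification, a file starts with the 4-byte magic `GGUF`, followed by a little-endian uint32 format version, a uint64 tensor count and a uint64 metadata key/value count. The sketch below reads only those fields using the standard library. `parse_gguf_header` and `read_gguf_header` are hypothetical helpers, and `chat.gguf` is the output file from the command above.

```python
import struct

GGUF_MAGIC = b"GGUF"
# magic (4 bytes), version (uint32), tensor count (uint64), metadata KV count (uint64)
_HEADER = struct.Struct("<4sIQQ")

def parse_gguf_header(data):
    """Parse the fixed-size GGUF header from the first bytes of a file."""
    if len(data) < _HEADER.size:
        raise ValueError("data too short to hold a GGUF header")
    magic, version, n_tensors, n_kv = _HEADER.unpack_from(data)
    if magic != GGUF_MAGIC:
        raise ValueError(f"bad magic {magic!r}; not a GGUF file")
    return {"version": version, "tensor_count": n_tensors, "metadata_kv_count": n_kv}

def read_gguf_header(path):
    """Read and parse the header of the GGUF file at `path`."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(_HEADER.size))

# After running the conversion command:
# print(read_gguf_header("chat.gguf"))
```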

- How to run inference with a GGUF model

```shell
# -n 400 generates up to 400 tokens; -e makes llama.cpp interpret escapes such as \n in the prompt.
./main --frequency-penalty 0.5 --top-k 5 --top-p 0.9 -m chat.gguf -p "Building a website can be done in 10 simple steps:\nStep 1:" -n 400 -e
```
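
The run command above combines three sampling controls:

- `--frequency-penalty` lowers a token's score in proportion to how often it has already appeared in the recent output.
- `--top-k` keeps only the k highest-scoring candidates.
- `--top-p` keeps the smallest set of those candidates whose cumulative probability reaches p.

The pure-Python sketch below illustrates these ideas on a toy list of logits. It is a simplified illustration, not llama.cpp's actual sampler, whose ordering and details may differ. `sample_next_token` is a hypothetical name.

```python
import math
import random
from collections import Counter

def sample_next_token(logits, history, top_k=5, top_p=0.9, frequency_penalty=0.5, rng=random):
    """Pick the next token id from raw logits (toy illustration of the CLI flags)."""
    counts = Counter(history)
    # Frequency penalty: subtract penalty * (number of earlier occurrences of the token).
    scores = [logit - frequency_penalty * counts[tok] for tok, logit in enumerate(logits)]
    # Top-k: keep the k best-scoring token ids, highest first.
    candidates = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)[:top_k]
    # Softmax over the survivors (subtract the max score for numerical stability).
    best = scores[candidates[0]]
    weights = [math.exp(scores[tok] - best) for tok in candidates]
    total = sum(weights)
    probs = [w / total for w in weights]
    # Top-p: keep the shortest prefix whose cumulative probability reaches top_p.
    kept_ids, kept_probs, cumulative = [], [], 0.0
    for tok, p in zip(candidates, probs):
        kept_ids.append(tok)
        kept_probs.append(p)
        cumulative += p
        if cumulative >= top_p:
            break
    # Sample from the remaining candidates in proportion to their probabilities.
    return rng.choices(kept_ids, weights=kept_probs, k=1)[0]
```

For example, with logits `[2.0, 1.9]` and token 0 already generated twice, the 0.5 penalty lowers token 0's score to 1.0, so `top_k=1` selects token 1.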

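## 4.6. Example Output

### 4.6.1. Casual Chat

`````
User: Hello, what's your name?
Orion-14B: Hello! I'm an AI assistant and don't have a fixed name. Feel free to call me whatever you like. Is there anything I can help you with?
User: Tell me a story.
Orion-14B: Once upon a time, there was a little boy named Jack who lived in a small village. Jack loved adventure and exploration, and he always dreamed of discovering something magical.

One day, Jack heard that deep in the nearby forest stood a legendary magic castle, home to an evil giant. The giant's name was Grim, and he was trapped in the castle, unable to leave.

Jack decided to rescue the giant, because he believed Grim must have had his own reasons for being trapped there. So, armed with courage and determination, he set off on a journey to the magic castle.

After a long trek, Jack finally reached the castle gate. He carefully pushed it open and stepped into a room full of mystery.

The room was filled with an air of darkness, but Jack was not frightened. He bravely made his way deeper into the castle and eventually found Grim.

Grim told Jack that he had been cursed by an evil witch and could be freed only by breaking the spell. Jack decided to help Grim and began searching for clues and a way to break the spell.

After much effort, Jack finally found a path leading to the witch's secret laboratory. There he discovered a book of magic the witch had left behind, which described how to break the spell.

Jack followed the steps written in the book and successfully lifted the curse from Grim. The giant was deeply grateful and promised to help Jack make his wishes come true.

From then on, Jack and Grim became good friends. Together they went on many thrilling adventures and overcame all kinds of difficulties with their courage and wisdom.

This story tells us that with courage and determination, we can overcome any difficulty and achieve our dreams.
`````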

### 4.6.2. Japanese and Korean

`````
User: 自己を紹介してください
Orion-14B: こんにちは、私の名前はChatMaxで、OrionStarによって開発されたAIアシスタントです。私はユーザーに正確で、客観的で、有用な回答を提供し、彼らが問題を解決し、情報を取得するのを助けることができます。
User: 자기소개를 해주세요.
Orion-14B: 안녕하세요, 제 이름은 ChatMax입니다. OrionStar에서 개발한 AI 어시스턴트입니다. 저는 사용자들에게 정확하고 객관적이며 유용한 답변을 제공하여 문제를 해결하고 정보를 얻는 데 도움을 줄 수 있습니다.
`````

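<a name="zh_declarations-license"></a><br>
# 5. Declarations and License

## 5.1. Declarations

We strongly urge all users not to use the Orion-14B models for any activity that endangers national or social security or that is otherwise illegal. We also ask users not to use the Orion-14B models in internet services that have not undergone appropriate security review and filing.

We hope that all users will abide by this principle so that technological development proceeds in a regulated and lawful environment.

We have done our best to ensure the compliance of the data used during model training. However, despite these considerable efforts, unforeseeable issues may still exist because of the complexity of the models and data. Therefore, we accept no liability for any problems arising from the use of the open-source Orion-14B models. This includes, but is not limited to, data security issues, public opinion risks, and any risks or problems caused by the models being misled, misused, disseminated, or improperly exploited.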

## 5.2. License

Community use of the Orion-14B series models:
- Code: licensed under the [Apache License Version 2.0](./LICENSE)<br>
- Models: governed by the [Orion-14B Series Models Community License Agreement](./ModelsCommunityLicenseAgreement)

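<a name="zh_company-introduction"></a><br>
# 6. Company Introduction

OrionStar is a leading global provider of service robot solutions, founded in September 2016. OrionStar is dedicated to using artificial intelligence technology to build the next generation of revolutionary robots. Its goals are to free people from repetitive physical labor, to make human work and life smarter and more enjoyable, and to use technology to make society and the world a better place.

OrionStar has fully self-developed, full-chain AI technologies such as voice interaction and visual navigation, and it integrates product development with technology application capabilities. Based on the Orion robotic arm platform, it has launched products including ORION STAR AI Robot Greeting, AI Robot Greeting Mini, Lucki, and Coffee Master. It has also established OrionOS, an open platform for Orion robots. Following its philosophy of being **Born for Truly Useful Robots**, OrionStar uses AI technology to empower more people.

Drawing on 7 years of accumulated AI experience, OrionStar has launched 聚言 (Juyan), an in-depth application of large models. It is progressively offering customized AI large-model consulting and service solutions to industry customers, helping them achieve operational efficiency ahead of their peers.

**OrionStar's core strength lies in its full-chain large-model application capabilities.** It has full-chain capability and experience spanning massive data processing, large-model pretraining, continued pretraining, fine-tuning, prompt engineering, and agent development. It also has complete end-to-end model training capability, including a systematic data-processing pipeline and parallel model training on hundreds of GPUs. These capabilities have been deployed in industry scenarios including government services, cloud services, cross-border e-commerce, and fast-moving consumer goods.

***Companies that need to deploy large-model applications are welcome to contact us for business cooperation.***<br>
**Phone:** 400-898-7779<br>
**Email:** ai@orionstar.com<br>
**Discord community:** https://discord.gg/zumjDWgdAs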

<div align="center">
<img src="./assets/imgs/wechat_group.jpg" alt="wechat" width="40%" />
</div>

# Table of Contents

- [📖 Model Introduction](#model-introduction)
- [🔗 Model Download](#model-download)
- [🔖 Model Benchmark](#model-benchmark)
- [📊 Model Inference](#model-inference)
- [📜 Declarations & License](#declarations-license)
- [🥇 Company Introduction](#company-introduction)

<a name="model-introduction"></a><br>
# 1. Model Introduction

- The Orion-14B series are open-source multilingual large language models trained from scratch by OrionStarAI. The base model is trained on a 2.5T-token multilingual corpus covering Chinese, English, Japanese, Korean, and other languages, and it performs strongly in these languages. For details, please refer to the [tech report](https://github.com/OrionStarAI/Orion/blob/master/doc/Orion14B_v3.pdf).

- The Orion-14B series models have the following features:
  - Among models at the 20B-parameter scale, Orion-14B-Base shows outstanding performance in comprehensive evaluations.
  - Strong multilingual capabilities, significantly outperforming comparable models on Japanese and Korean test sets.
  - The fine-tuned models adapt well, excelling in human-annotated blind tests.
  - The long-chat version supports extremely long texts, performing exceptionally well at 200k tokens and supporting a maximum of 320k tokens.
  - The quantized versions reduce model size by 70% and improve inference speed by 30%, with a performance loss of less than 1%.

<table style="border-collapse: collapse; width: 100%;">
<tr>
<td style="border: none; padding: 10px; box-sizing: border-box;">
<img src="./assets/imgs/opencompass_en.png" alt="opencompass" style="width: 100%; height: auto;">
</td>
<td style="border: none; padding: 10px; box-sizing: border-box;">
<img src="./assets/imgs/model_cap_en.png" alt="modelcap" style="width: 100%; height: auto;">
</td>
</tr>
</table>

- The Orion-14B series includes:
  - **Orion-14B-Base:** A multilingual foundation large language model with 14 billion parameters, pretrained on a diverse dataset of 2.5 trillion tokens.
  - **Orion-14B-Chat:** A chat model fine-tuned on a high-quality corpus, aiming to provide an excellent interactive experience for users in the large-model community.
  - **Orion-14B-LongChat:** The long-context version, which excels at handling extremely long texts, performing exceptionally well at 200k tokens and supporting a maximum of 320k tokens.
  - **Orion-14B-Chat-RAG:** A chat model fine-tuned on a custom retrieval-augmented generation (RAG) dataset, achieving superior performance on RAG tasks.
  - **Orion-14B-Chat-Plugin:** A chat model tailored for plugin and function-calling tasks, well suited to agent scenarios in which the LLM acts as a plugin and function-call system.
  - **Orion-14B-Base-Int4:** A quantized base model with 4-bit integer weights. It reduces model size by 70% and increases inference speed by 30%, with a minimal performance loss of only 1%.
  - **Orion-14B-Chat-Int4:** A quantized chat model with 4-bit integer weights.

<a name="model-download"></a><br>
# 2. Model Download

Model release and download links are provided in the table below:

| Model Name | HuggingFace Download Links | ModelScope Download Links |
|-------------------------|-----------------------------------------------------------------------------------|-------------------------------------------------------------------------------------------------|
| ⚾Orion-14B-Base | [Orion-14B-Base](https://huggingface.co/OrionStarAI/Orion-14B-Base) | [Orion-14B-Base](https://modelscope.cn/models/OrionStarAI/Orion-14B-Base/summary) |
| 😛Orion-14B-Chat | [Orion-14B-Chat](https://huggingface.co/OrionStarAI/Orion-14B-Chat) | [Orion-14B-Chat](https://modelscope.cn/models/OrionStarAI/Orion-14B-Chat/summary) |
| 📃Orion-14B-LongChat | [Orion-14B-LongChat](https://huggingface.co/OrionStarAI/Orion-14B-LongChat) | [Orion-14B-LongChat](https://modelscope.cn/models/OrionStarAI/Orion-14B-LongChat/summary) |