metadata

language: en

tags:

- summarization

- bart

- medical question answering

- medical question understanding

- consumer health question

- prompt engineering

- LLM

license: apache-2.0

datasets:

- bigbio/meqsum

widget:

- text: ' SUBJECT: high inner eye pressure above 21 possible glaucoma MESSAGE: have seen inner eye pressure increase as I have begin taking Rizatriptan. I understand the med narrows blood vessels. Can this med. cause or effect the closed or wide angle issues with the eyelense/glacoma.'

model-index:

- name: medqsum-bart-large-xsum-meqsum
  results:
  - task:
      type: summarization
      name: Summarization
    dataset:
      name: Dataset for medical question summarization
      type: bigbio/meqsum
      split: valid
    metrics:
    - type: rouge-1
      value: 54.32
      name: Validation ROUGE-1
    - type: rouge-2
      value: 38.08
      name: Validation ROUGE-2
    - type: rouge-l
      value: 51.98
      name: Validation ROUGE-L
    - type: rouge-l-sum
      value: 51.99
      name: Validation ROUGE-L-SUM

library_name: transformers

MedQSum

TL;DR

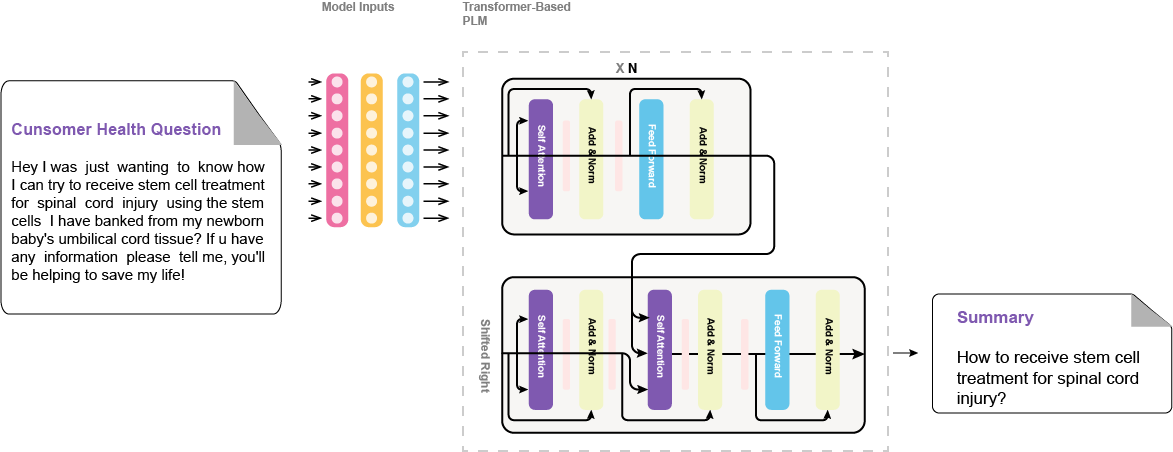

medqsum-bart-large-xsum-meqsum is the best-performing fine-tuned model from the paper "Enhancing Large Language Models' Utility for Medical Question-Answering: A Patient Health Question Summarization Approach", which proposes a way to get more out of LLMs when answering health-related questions. We address the challenge of crafting accurate prompts by summarizing consumer health questions (CHQs) into clear and concise medical questions. Our approach fine-tunes Transformer-based models, including Flan-T5 in resource-constrained environments, on three medical question summarization datasets.
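The idea described here, condensing a verbose CHQ before it reaches an LLM, can be sketched as a small prompt-building step. This is a minimal illustration and not the paper's pipeline: `build_prompt` and the prompt wording are hypothetical, and `summarize` stands for any callable that maps a CHQ to a short question.

```python
from typing import Callable


def build_prompt(chq: str, summarize: Callable[[str], str]) -> str:
    """Condense a consumer health question, then wrap it in an LLM prompt.

    Hypothetical helper: the prompt template below is an assumption,
    not the wording used in the paper.
    """
    question = summarize(chq).strip()
    return (
        "Answer the following medical question clearly and concisely.\n"
        f"Question: {question}\n"
        "Answer:"
    )


def placeholder_summarize(text: str) -> str:
    # Stand-in so the sketch runs without downloading the model.
    # The returned text is a placeholder, not real model output.
    return "What is the condensed medical question?"


prompt = build_prompt("SUBJECT: ... MESSAGE: ...", placeholder_summarize)
print(prompt)
```

With the real model, `summarize` could be `lambda text: summarizer(text)[0]["summary_text"]`, where `summarizer` is the pipeline created in the Usage section.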

Hyperparameters

{

"dataset_name": "MeQSum",

"learning_rate": 3e-05,

"model_name_or_path": "facebook/bart-large-xsum",

"num_train_epochs": 4,

"per_device_eval_batch_size": 4,

"per_device_train_batch_size": 4,

"predict_with_generate": true

}
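The keys above resemble arguments of the Hugging Face summarization training setup, although the card does not say which script was used. As a hedged sketch, the training-related values could be separated from the model and dataset names and then passed to `Seq2SeqTrainingArguments`. The `output_dir` value in the final comment is a placeholder.

```python
import json

# Hyperparameters from this card, written as valid JSON (no trailing comma).
HYPERPARAMETERS = """
{
  "dataset_name": "MeQSum",
  "learning_rate": 3e-05,
  "model_name_or_path": "facebook/bart-large-xsum",
  "num_train_epochs": 4,
  "per_device_eval_batch_size": 4,
  "per_device_train_batch_size": 4,
  "predict_with_generate": true
}
"""

config = json.loads(HYPERPARAMETERS)

# These two keys name the data and the base checkpoint; the remaining keys
# are fields of transformers.Seq2SeqTrainingArguments.
NON_TRAINING_KEYS = {"dataset_name", "model_name_or_path"}
training_kwargs = {k: v for k, v in config.items() if k not in NON_TRAINING_KEYS}

print(training_kwargs)

# Assumed usage (requires transformers and a deep learning backend):
# from transformers import Seq2SeqTrainingArguments
# args = Seq2SeqTrainingArguments(output_dir="out", **training_kwargs)
```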

Usage

from transformers import pipeline

# Load the fine-tuned checkpoint from the Hugging Face Hub.
summarizer = pipeline("summarization", model="NouRed/medqsum-bart-large-xsum-meqsum")

chq = '''SUBJECT: high inner eye pressure above 21 possible glaucoma

MESSAGE: have seen inner eye pressure increase as I have begin taking

Rizatriptan. I understand the med narrows blood vessels. Can this med.

cause or effect the closed or wide angle issues with the eyelense/glacoma.

'''

# Returns a list of dicts; the summary is under the "summary_text" key.
summarizer(chq)
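The example input follows a `SUBJECT: ... MESSAGE: ...` layout. A small helper can build that layout from separate fields and pull the text out of the pipeline's return value. `format_chq` and `first_summary` are hypothetical names, and whether the model requires this exact layout is an assumption based only on the example input.

```python
def format_chq(subject: str, message: str) -> str:
    """Join subject and message in the layout used by the example input."""
    # Collapse runs of whitespace (including newlines) into single spaces.
    subject = " ".join(subject.split())
    message = " ".join(message.split())
    return f"SUBJECT: {subject} MESSAGE: {message}"


def first_summary(outputs: list) -> str:
    """Return the text of the first result from a summarization pipeline."""
    # The summarization pipeline returns a list of dicts keyed by "summary_text".
    return outputs[0]["summary_text"]


chq = format_chq(
    "high inner eye pressure above 21 possible glaucoma",
    "have seen inner eye pressure increase\nas I have begin taking Rizatriptan.",
)
print(chq)

# With the pipeline from the Usage section:
# print(first_summary(summarizer(chq)))
```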

Results

| Metric (validation split) | Score |

|---|---|

| eval_rouge1 | 54.32 |

| eval_rouge2 | 38.08 |

| eval_rougeL | 51.98 |

| eval_rougeLsum | 51.99 |
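For readers unfamiliar with the metric, ROUGE-1 measures unigram overlap between a generated summary and a reference. The sketch below is a simplified F1 computation with lowercasing and whitespace tokenization. It is not the scorer used to produce the numbers in the table; standard implementations apply their own tokenization rules and optional stemming, so values will differ.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: unigram overlap with whitespace tokenization."""
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())
    # Clipped overlap: a token counts only as often as it appears in both texts.
    overlap = sum((cand_counts & ref_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


# Toy strings for illustration only, not taken from MeQSum.
score = rouge1_f1(
    "can rizatriptan cause glaucoma",
    "does rizatriptan cause glaucoma",
)
# Scale to 0-100 to match the convention used in the table.
print(round(100 * score, 2))
```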

Cite This

@INPROCEEDINGS{10373720,

author={Zekaoui, Nour Eddine and Yousfi, Siham and Mikram, Mounia and Rhanoui, Maryem},

booktitle={2023 14th International Conference on Intelligent Systems: Theories and Applications (SITA)},

title={Enhancing Large Language Models’ Utility for Medical Question-Answering: A Patient Health Question Summarization Approach},

year={2023},

volume={},

number={},

pages={1-8},

doi={10.1109/SITA60746.2023.10373720}}