Initial commit.

- .gitattributes +3 -0

- README.md +20 -0

- binary_mask.jpg +0 -0

- config.json +7 -0

- hircin_the_cat.png +0 -0

- image/.DS_Store +0 -0

- image/binary_mask.jpg +0 -0

- image/mask.jpg +0 -0

- image/raw_output.jpg +0 -0

- keras_metadata.pb +3 -0

- mask.jpg +0 -0

- pipeline.py +79 -0

- raw_output.jpg +0 -0

- tf_model.h5 +3 -0

- variables/variables.data-00000-of-00001 +3 -0

- variables/variables.index +0 -0

.gitattributes

CHANGED

|

@@ -25,3 +25,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

  *.zip filter=lfs diff=lfs merge=lfs -text
  *.zstandard filter=lfs diff=lfs merge=lfs -text
  *tfevents* filter=lfs diff=lfs merge=lfs -text
+ keras_metadata.pb filter=lfs diff=lfs merge=lfs -text
+ variables/ filter=lfs diff=lfs merge=lfs -text
+ variables/variables.data-00000-of-00001 filter=lfs diff=lfs merge=lfs -text

|

README.md

ADDED

|

@@ -0,0 +1,20 @@

|


---
tags:
- image-segmentation
- generic
library_name: generic
dataset:
- oxford-iiit pets
license: apache-2.0
---
## Keras semantic segmentation models on the 🤗Hub! 🐶 🐕 🐩

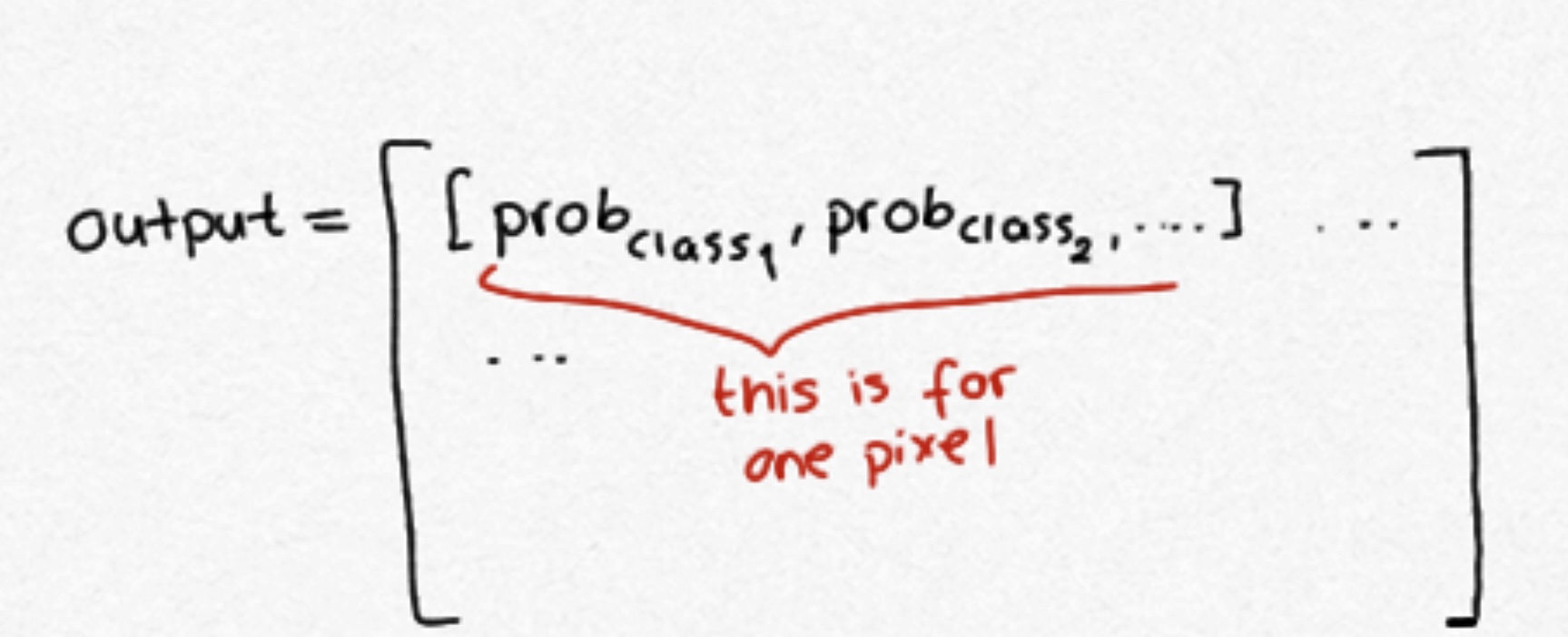

Image classification task tells us about a class assigned to an image, and object detection task creates a boundary box on an object in an image. But what if we want to know about the shape of the image? Segmentation models helps us segment images and reveal their shapes. It has many variants. You can host your Keras segmentation models on the Hub.

|

| 13 |

+

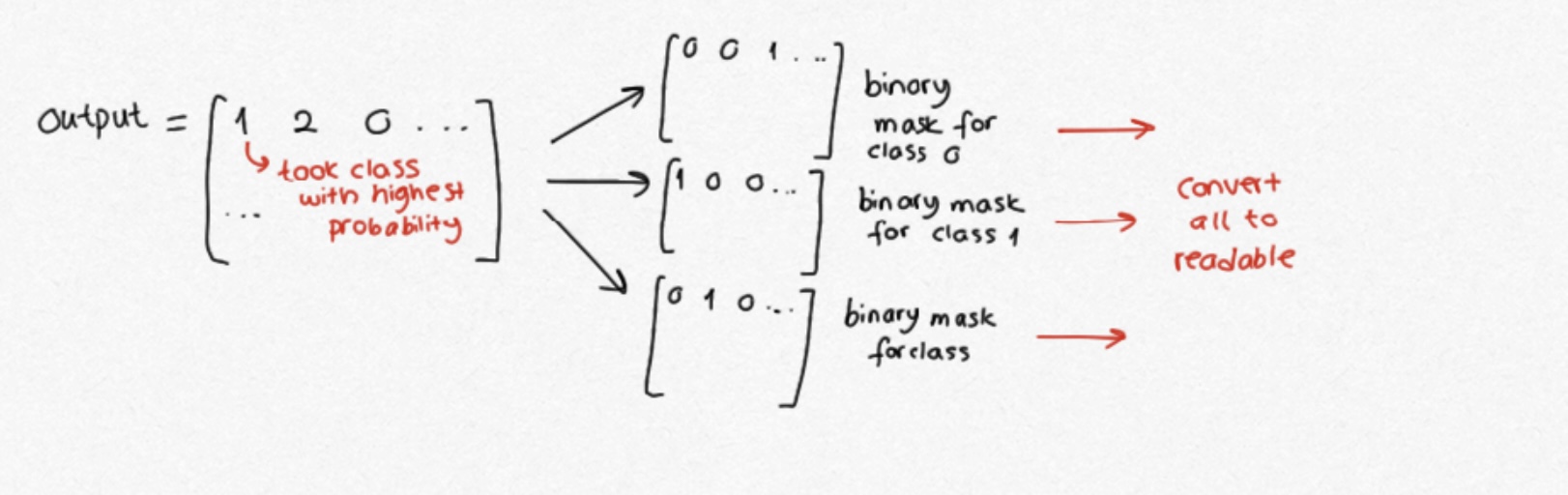

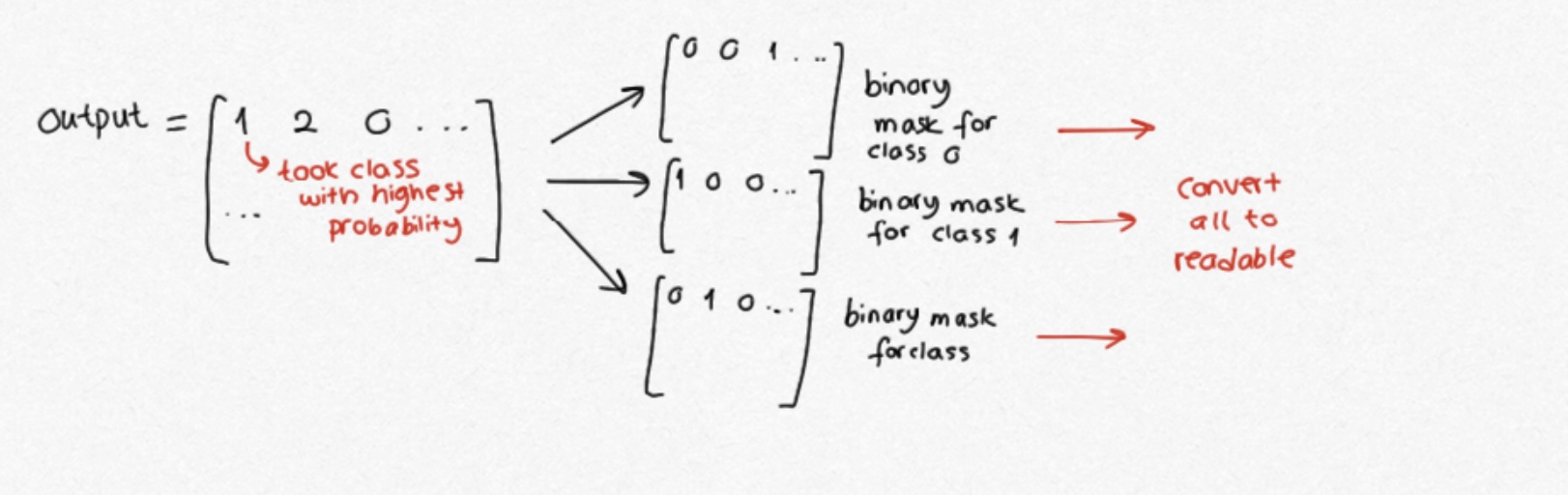

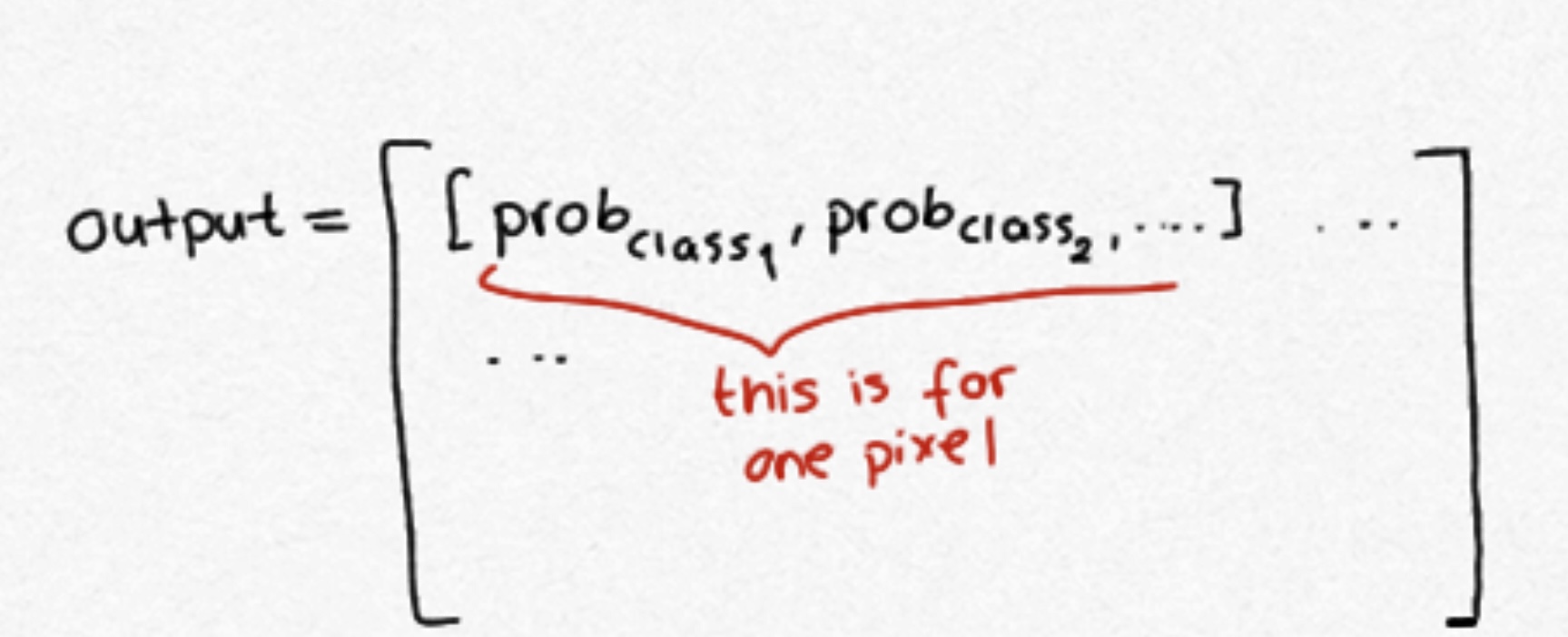

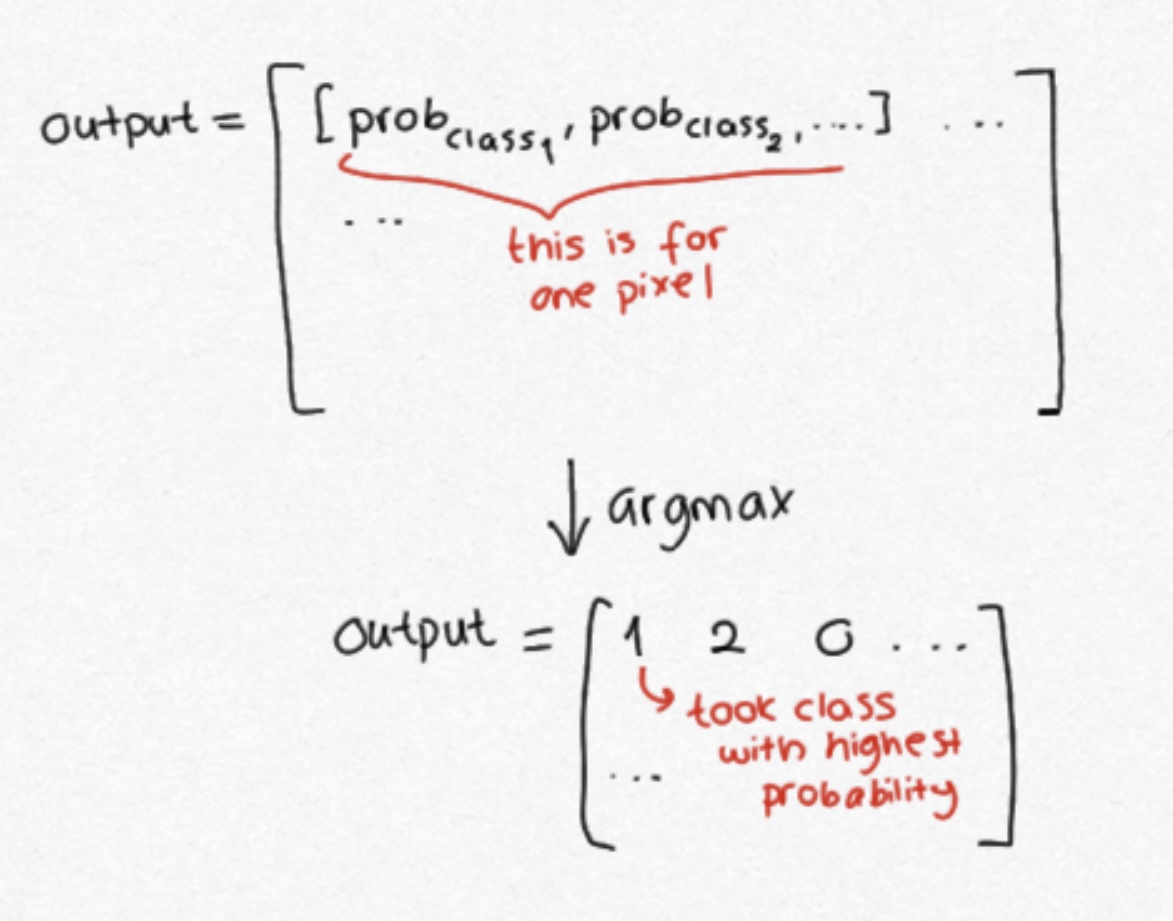

Semantic segmentation models classify pixels, meaning, they assign a class (can be cat or dog) to each pixel. The output of a model looks like following.

|

| 14 |

+

|

| 15 |

+

We need to get the best prediction for every pixel.

|

| 16 |

+

|

| 17 |

+

This is still not readable. We have to convert this into different binary masks for each class and convert to a readable format by converting each mask into base64. We will return a list of dicts, and for each dictionary, we have the label itself, the base64 code and a score (semantic segmentation models don't return a score, so we have to return 1.0 for this case). You can find the full implementation in ```pipeline.py```.

|

| 18 |

+

|

| 19 |

+

Now that you know the expected output by the model, you can host your Keras segmentation models (and other semantic segmentation models) in the similar fashion. Try it yourself and host your segmentation models!

|

| 20 |

+

|
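The two post-processing steps the README describes (pick the best class for every pixel, then split the result into one binary mask per class) can be sketched with NumPy alone. This is a minimal illustration under stated assumptions, not the code in `pipeline.py`: the helper name `split_into_binary_masks` and the toy 2x2 scores are made up for the example.

```python
import numpy as np


def split_into_binary_masks(pred_mask: np.ndarray) -> dict:
    """Turn per-pixel class scores of shape (H, W, C) into C binary masks.

    Hypothetical helper for illustration; it is not part of pipeline.py.
    """
    # Best class index for every pixel, shape (H, W).
    class_map = np.argmax(pred_mask, axis=-1)
    num_classes = pred_mask.shape[-1]
    # One uint8 mask per class: 255 where the pixel belongs to it, else 0.
    return {
        cls: np.where(class_map == cls, 255, 0).astype(np.uint8)
        for cls in range(num_classes)
    }


# Toy scores for a 2x2 image with 3 classes (assumed values).
scores = np.array(
    [
        [[0.1, 0.7, 0.2], [0.8, 0.1, 0.1]],
        [[0.2, 0.2, 0.6], [0.3, 0.4, 0.3]],
    ]
)
masks = split_into_binary_masks(scores)
print(masks[1])
```

Comparing the whole class map against a class index at once avoids a Python loop over every pixel, which matters once the image is larger than a toy example.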

binary_mask.jpg

ADDED

|

config.json

ADDED

|

@@ -0,0 +1,7 @@

|


{
  "id2label": {
    "0": 0,
    "1": 1,
    "2": 2
  }
}

|
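The `pipeline.py` in this commit returns labels of the form `LABEL_<index>` and does not read `config.json`. As a hedged sketch, a mapping like the one above could be loaded and used to name classes. The helper `load_id2label` and the idea of wiring it into the pipeline are assumptions for illustration, not something this commit does.

```python
import json
import os
import tempfile
from typing import Dict


def load_id2label(path: str) -> Dict[int, str]:
    """Read config.json from `path` and return {class index: label name}.

    Hypothetical helper; not part of the committed pipeline.
    """
    with open(os.path.join(path, "config.json"), encoding="utf-8") as f:
        config = json.load(f)
    # JSON object keys are always strings, so convert them back to ints.
    return {int(idx): str(label) for idx, label in config["id2label"].items()}


# Self-contained demo: write the same config as above to a temp directory.
with tempfile.TemporaryDirectory() as tmp:
    with open(os.path.join(tmp, "config.json"), "w", encoding="utf-8") as f:
        json.dump({"id2label": {"0": 0, "1": 1, "2": 2}}, f)
    id2label = load_id2label(tmp)

print(id2label)
```

With descriptive names in `id2label` (for instance the dataset's pet, background, and outline classes, if that is what the indices mean), the same lookup would yield readable labels instead of bare numbers.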

hircin_the_cat.png

ADDED

|

image/.DS_Store

ADDED

|

Binary file (6.15 kB).

|

image/binary_mask.jpg

ADDED

|

image/mask.jpg

ADDED

|

image/raw_output.jpg

ADDED

|

keras_metadata.pb

ADDED

|

@@ -0,0 +1,3 @@

|


version https://git-lfs.github.com/spec/v1
oid sha256:e7844f7cdd94bacbd2dceb2000b172c07e3a3db345cd29ea0d51d66107ff28e9
size 563033

|
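The three lines above are a Git LFS pointer: the repository stores this small text stub, while the real file content lives in LFS storage and is identified by the `oid` hash and `size`. A minimal sketch of reading such a pointer follows; the helper name `parse_lfs_pointer` is an assumption for illustration.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer file into a dict (hypothetical helper)."""
    # Each line is "<key> <value>"; split only on the first space.
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    # The oid is written as "<hash algorithm>:<hex digest>".
    algo, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "oid_algo": algo,
        "oid": digest,
        "size": int(fields["size"]),  # size of the real file, in bytes
    }


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:e7844f7cdd94bacbd2dceb2000b172c07e3a3db345cd29ea0d51d66107ff28e9
size 563033
"""
info = parse_lfs_pointer(pointer)
print(info["oid_algo"], info["size"])
```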

mask.jpg

ADDED

|

pipeline.py

ADDED

|

@@ -0,0 +1,79 @@

|


import json
from typing import Any, Dict, List

import tensorflow as tf
from tensorflow import keras
import base64
import io
import os
import numpy as np
from PIL import Image



class PreTrainedPipeline():
    def __init__(self, path: str):
        # load the model
        self.model = keras.models.load_model(os.path.join(path, "tf_model.h5"))

    def __call__(self, inputs: "Image.Image") -> List[Dict[str, Any]]:

        # convert img to numpy array, resize and normalize to make the prediction
        img = np.array(inputs)

        im = tf.image.resize(img, (128, 128))
        im = tf.cast(im, tf.float32) / 255.0
        # the model is stored on the instance, so it is reached through self
        pred_mask = self.model.predict(im[tf.newaxis, ...])

        # take the best performing class for each pixel
        # the output of argmax looks like this [[1, 2, 0], ...]
        pred_mask_arg = tf.argmax(pred_mask, axis=-1)

        labels = []

        # convert the prediction mask into binary masks for each class
        binary_masks = {}
        mask_codes = {}

        # when we take tf.argmax() over pred_mask, it becomes a tensor object
        # with shape (1, height, width); read height and width from that shape
        _, rows, cols = pred_mask_arg.get_shape().as_list()

        for cls in range(pred_mask.shape[-1]):

            # create masks for each class
            binary_masks[f"mask_{cls}"] = np.zeros(shape=(pred_mask.shape[1], pred_mask.shape[2]))

            for row in range(rows):

                for col in range(cols):

                    if pred_mask_arg[0][row][col] == cls:

                        binary_masks[f"mask_{cls}"][row][col] = 1
                    else:
                        binary_masks[f"mask_{cls}"][row][col] = 0

            mask = binary_masks[f"mask_{cls}"]
            mask *= 255
            # uint8 holds 0..255; a 2-D uint8 array becomes a grayscale ("L") image
            img = Image.fromarray(mask.astype(np.uint8))

            # we need to make it readable for the widget
            with io.BytesIO() as out:
                img.save(out, format="PNG")
                png_string = out.getvalue()
                mask = base64.b64encode(png_string).decode("utf-8")

            mask_codes[f"mask_{cls}"] = mask


            # widget needs the below format, for each class we return label and mask string
            labels.append({
                "label": f"LABEL_{cls}",
                "mask": mask_codes[f"mask_{cls}"],
                "score": 1.0,
            })
        return labels

|
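Each `mask` value the pipeline returns is a base64-encoded PNG, so a client has to decode it before using it as an array. The sketch below shows that round trip. The helper names `encode_mask` and `decode_mask` are assumptions for this example, and the encode step mirrors what the pipeline does rather than calling it, so the snippet runs without TensorFlow or the model weights.

```python
import base64
import io

import numpy as np
from PIL import Image


def encode_mask(mask: np.ndarray) -> str:
    """Encode a 2-D uint8 mask as a base64 PNG string (hypothetical helper)."""
    with io.BytesIO() as out:
        Image.fromarray(mask).save(out, format="PNG")
        return base64.b64encode(out.getvalue()).decode("utf-8")


def decode_mask(mask_b64: str) -> np.ndarray:
    """Decode a base64 PNG string back into a 2-D uint8 array (hypothetical helper)."""
    with Image.open(io.BytesIO(base64.b64decode(mask_b64))) as img:
        return np.array(img)


# A toy mask standing in for one entry of the pipeline's output list.
mask = np.array([[255, 0], [0, 255]], dtype=np.uint8)
segment = {"label": "LABEL_1", "mask": encode_mask(mask), "score": 1.0}

restored = decode_mask(segment["mask"])
print(restored.shape, restored.dtype)
```

PNG is lossless, so the decoded array matches the mask that was encoded; a JPEG round trip would not give that guarantee.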

raw_output.jpg

ADDED

|

tf_model.h5

ADDED

|

@@ -0,0 +1,3 @@

|


version https://git-lfs.github.com/spec/v1
oid sha256:0258ea75c11d977fae78f747902e48541c5e6996d3d5c700175454ffeb42aa0f
size 63661584

|

variables/variables.data-00000-of-00001

ADDED

|

@@ -0,0 +1,3 @@

|


version https://git-lfs.github.com/spec/v1
oid sha256:e3c27336aaecafd070749d833780e6603b9c25caf01a037c0ef9a93ff3b0c36c
size 63405929

|

variables/variables.index

ADDED

|

Binary file (17.9 kB).

|