license: creativeml-openrail-m

datasets:

- laion/laion400m

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

language:

- en

pipeline_tag: text-to-3d

LDM3D-VR model

The LDM3D-VR model was proposed in "LDM3D-VR: Latent Diffusion Model for 3D VR" by Gabriela Ben Melech Stan, Diana Wofk, Estelle Aflalo, Shao-Yen Tseng, Zhipeng Cai, Michael Paulitsch, and Vasudev Lal.

LDM3D-VR was accepted to the [NeurIPS Workshop'23 on Diffusion Models](https://neurips.cc/virtual/2023/workshop/66539).

This checkpoint is the panoramic model of the suite, LDM3D-pano.

Model description

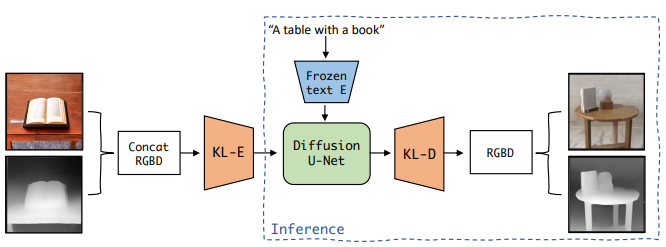

The abstract from the paper is the following: Latent diffusion models have proven to be state-of-the-art in the creation and manipulation of visual outputs. However, as far as we know, the generation of depth maps jointly with RGB is still limited. We introduce LDM3D-VR, a suite of diffusion models targeting virtual reality development that includes LDM3D-pano and LDM3D-SR. These models enable the generation of panoramic RGBD based on textual prompts and the upscaling of low-resolution inputs to high-resolution RGBD, respectively. Our models are fine-tuned from existing pretrained models on datasets containing panoramic/high-resolution RGB images, depth maps and captions. Both models are evaluated in comparison to existing related methods.

LDM3D overview taken from the original paper


How to use

Here is how to use this model in PyTorch to generate a panoramic RGB image and its depth map from a text prompt:

from diffusers import StableDiffusionLDM3DPipeline

pipe = StableDiffusionLDM3DPipeline.from_pretrained("Intel/ldm3d-pano")

pipe.to("cuda")

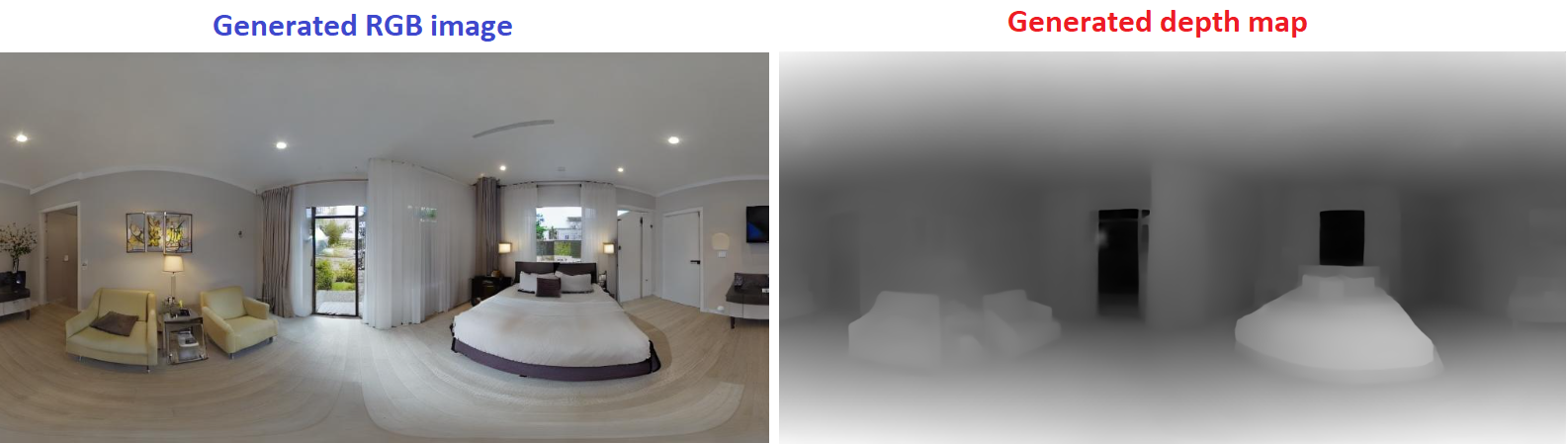

prompt ="360 view of a large bedroom"

name = "bedroom_pano"

output = pipe(

prompt,

width=1024,

height=512,

guidance_scale=7.0,

num_inference_steps=50,

)

rgb_image, depth_image = output.rgb, output.depth

rgb_image[0].save(name+"_ldm3d_rgb.jpg")

depth_image[0].save(name+"_ldm3d_depth.png")

This is the result:
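For VR use, each pixel of a generated panorama usually has to be lifted to a 3D point. The following is a minimal sketch of that step, not something this card or `diffusers` provides. It assumes the panorama is an equirectangular projection (suggested by the 2:1 `width`/`height` in the example, but not stated here) and that a per-pixel distance has already been derived from the depth map; how saved depth values map to distance is not specified on this card. The helper names `pixel_to_direction` and `unproject`, and the axis convention, are hypothetical.

```python
import math


def pixel_to_direction(u, v, width, height):
    """Map equirectangular pixel coordinates to a unit viewing direction.

    Assumed convention (not from the model card): columns span longitude
    [-pi, pi), rows span latitude from +pi/2 (top) to -pi/2 (bottom),
    +y points up and +z points toward the centre of the image.
    """
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return (x, y, z)


def unproject(u, v, distance, width=1024, height=512):
    """Scale the viewing direction by a per-pixel distance to get a 3D point."""
    dx, dy, dz = pixel_to_direction(u, v, width, height)
    return (distance * dx, distance * dy, distance * dz)


# The image centre looks straight along +z under the assumed convention.
point = unproject(511.5, 255.5, distance=2.0)
```

Applying `unproject` to every pixel, with colours taken from the RGB image, would give a coloured point cloud of the scene under these assumptions.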

Finetuning

This checkpoint fine-tunes the earlier ldm3d-4c checkpoint on two panoramic image datasets:

- polyhaven: 585 images for the training set, 66 images for the validation set

- ihdri: 57 outdoor images for the training set, 7 outdoor images for the validation set.

These datasets were augmented using Text2Light to create a dataset containing 13852 training samples and 1606 validation samples.
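As a quick sanity check on these figures, the short sketch below totals the source images per split and compares them with the augmented sample counts. The ratios are plain arithmetic on the numbers quoted here; they are not a statement about how Text2Light was applied to each image.

```python
# Source panoramas per split, as listed above.
train_sources = {"polyhaven": 585, "ihdri": 57}
val_sources = {"polyhaven": 66, "ihdri": 7}

# Sample counts after Text2Light augmentation, as stated above.
augmented_train, augmented_val = 13852, 1606

train_total = sum(train_sources.values())  # 642
val_total = sum(val_sources.values())      # 73

# Average number of augmented samples per source image in each split.
train_factor = augmented_train / train_total
val_factor = augmented_val / val_total

# Fraction of all augmented samples held out for validation.
val_share = augmented_val / (augmented_train + augmented_val)

print(f"train: {train_total} -> {augmented_train} (x{train_factor:.1f})")
print(f"val:   {val_total} -> {augmented_val} (x{val_factor:.1f})")
print(f"validation share: {val_share:.1%}")
```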

To generate the depth maps for these samples we used DPT-large, and to generate the captions we used BLIP-2.
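The annotation step can be sketched roughly as follows. This is an illustration under stated assumptions, not the authors' actual pipeline: the checkpoint names `Intel/dpt-large` and `Salesforce/blip2-opt-2.7b` are guesses (the card only says "DPT-large" and "BLIP-2"), the `depth-estimation` and `image-to-text` pipeline tasks are those of `transformers` 4.x, and the helper names `load_annotators` and `annotate` are hypothetical.

```python
def load_annotators():
    """Build the depth and caption models (downloads weights on first use).

    Checkpoint names are assumptions, not taken from the model card.
    """
    from transformers import pipeline  # imported lazily: heavy dependency

    depth_estimator = pipeline("depth-estimation", model="Intel/dpt-large")
    captioner = pipeline("image-to-text", model="Salesforce/blip2-opt-2.7b")
    return depth_estimator, captioner


def annotate(image, depth_estimator, captioner):
    """Return one training sample: the image, its depth map and its caption."""
    # The depth-estimation pipeline returns a dict whose "depth" entry is an image.
    depth = depth_estimator(image)["depth"]
    # The image-to-text pipeline returns a list of {"generated_text": ...} dicts.
    caption = captioner(image)[0]["generated_text"].strip()
    return {"rgb": image, "depth": depth, "caption": caption}
```

In use, one would call `load_annotators()` once and then pass each panorama, opened as a PIL image, to `annotate`. Keeping the models as arguments makes it easy to swap in other depth or caption models.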

BibTeX entry and citation info

    @misc{stan2023ldm3dvr,
        title={LDM3D-VR: Latent Diffusion Model for 3D VR},
        author={Gabriela Ben Melech Stan and Diana Wofk and Estelle Aflalo and Shao-Yen Tseng and Zhipeng Cai and Michael Paulitsch and Vasudev Lal},
        year={2023},
        eprint={2311.03226},
        archivePrefix={arXiv},
        primaryClass={cs.CV}
    }