Commit

•

779abe8

1

Parent(s):

b706adf

Upload folder using huggingface_hub

Browse files- LICENSE +352 -0

- README.md +260 -0

- added_tokens.json +4 -0

- assets/infimm-logo.webp +0 -0

- assets/infimm-zephyr-mmmu-test.jpeg +0 -0

- assets/infimm-zephyr-mmmu-val.jpeg +0 -0

- config.json +66 -0

- configuration_infimm_zephyr.py +42 -0

- convert_infi_zephyr_tokenizer_to_hf.py +29 -0

- convert_infi_zephyr_weights_to_hf.py +6 -0

- eva_vit.py +948 -0

- flamingo.py +261 -0

- flamingo_lm.py +256 -0

- generation_config.json +7 -0

- helpers.py +410 -0

- modeling_infimm_zephyr.py +138 -0

- preprocessor_config.json +7 -0

- processing_infimm_zephyr.py +345 -0

- pytorch_model.bin +3 -0

- special_tokens_map.json +46 -0

- tokenizer.json +0 -0

- tokenizer.model +3 -0

- tokenizer_config.json +62 -0

- utils.py +48 -0

LICENSE

ADDED

|

@@ -0,0 +1,352 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Creative Commons Attribution-NonCommercial 4.0 International

|

| 2 |

+

|

| 3 |

+

Creative Commons Corporation ("Creative Commons") is not a law firm and

|

| 4 |

+

does not provide legal services or legal advice. Distribution of

|

| 5 |

+

Creative Commons public licenses does not create a lawyer-client or

|

| 6 |

+

other relationship. Creative Commons makes its licenses and related

|

| 7 |

+

information available on an "as-is" basis. Creative Commons gives no

|

| 8 |

+

warranties regarding its licenses, any material licensed under their

|

| 9 |

+

terms and conditions, or any related information. Creative Commons

|

| 10 |

+

disclaims all liability for damages resulting from their use to the

|

| 11 |

+

fullest extent possible.

|

| 12 |

+

|

| 13 |

+

Using Creative Commons Public Licenses

|

| 14 |

+

|

| 15 |

+

Creative Commons public licenses provide a standard set of terms and

|

| 16 |

+

conditions that creators and other rights holders may use to share

|

| 17 |

+

original works of authorship and other material subject to copyright and

|

| 18 |

+

certain other rights specified in the public license below. The

|

| 19 |

+

following considerations are for informational purposes only, are not

|

| 20 |

+

exhaustive, and do not form part of our licenses.

|

| 21 |

+

|

| 22 |

+

- Considerations for licensors: Our public licenses are intended for

|

| 23 |

+

use by those authorized to give the public permission to use

|

| 24 |

+

material in ways otherwise restricted by copyright and certain other

|

| 25 |

+

rights. Our licenses are irrevocable. Licensors should read and

|

| 26 |

+

understand the terms and conditions of the license they choose

|

| 27 |

+

before applying it. Licensors should also secure all rights

|

| 28 |

+

necessary before applying our licenses so that the public can reuse

|

| 29 |

+

the material as expected. Licensors should clearly mark any material

|

| 30 |

+

not subject to the license. This includes other CC-licensed

|

| 31 |

+

material, or material used under an exception or limitation to

|

| 32 |

+

copyright. More considerations for licensors :

|

| 33 |

+

wiki.creativecommons.org/Considerations\_for\_licensors

|

| 34 |

+

|

| 35 |

+

- Considerations for the public: By using one of our public licenses,

|

| 36 |

+

a licensor grants the public permission to use the licensed material

|

| 37 |

+

under specified terms and conditions. If the licensor's permission

|

| 38 |

+

is not necessary for any reason–for example, because of any

|

| 39 |

+

applicable exception or limitation to copyright–then that use is not

|

| 40 |

+

regulated by the license. Our licenses grant only permissions under

|

| 41 |

+

copyright and certain other rights that a licensor has authority to

|

| 42 |

+

grant. Use of the licensed material may still be restricted for

|

| 43 |

+

other reasons, including because others have copyright or other

|

| 44 |

+

rights in the material. A licensor may make special requests, such

|

| 45 |

+

as asking that all changes be marked or described. Although not

|

| 46 |

+

required by our licenses, you are encouraged to respect those

|

| 47 |

+

requests where reasonable. More considerations for the public :

|

| 48 |

+

wiki.creativecommons.org/Considerations\_for\_licensees

|

| 49 |

+

|

| 50 |

+

Creative Commons Attribution-NonCommercial 4.0 International Public

|

| 51 |

+

License

|

| 52 |

+

|

| 53 |

+

By exercising the Licensed Rights (defined below), You accept and agree

|

| 54 |

+

to be bound by the terms and conditions of this Creative Commons

|

| 55 |

+

Attribution-NonCommercial 4.0 International Public License ("Public

|

| 56 |

+

License"). To the extent this Public License may be interpreted as a

|

| 57 |

+

contract, You are granted the Licensed Rights in consideration of Your

|

| 58 |

+

acceptance of these terms and conditions, and the Licensor grants You

|

| 59 |

+

such rights in consideration of benefits the Licensor receives from

|

| 60 |

+

making the Licensed Material available under these terms and conditions.

|

| 61 |

+

|

| 62 |

+

- Section 1 – Definitions.

|

| 63 |

+

|

| 64 |

+

- a. Adapted Material means material subject to Copyright and

|

| 65 |

+

Similar Rights that is derived from or based upon the Licensed

|

| 66 |

+

Material and in which the Licensed Material is translated,

|

| 67 |

+

altered, arranged, transformed, or otherwise modified in a

|

| 68 |

+

manner requiring permission under the Copyright and Similar

|

| 69 |

+

Rights held by the Licensor. For purposes of this Public

|

| 70 |

+

License, where the Licensed Material is a musical work,

|

| 71 |

+

performance, or sound recording, Adapted Material is always

|

| 72 |

+

produced where the Licensed Material is synched in timed

|

| 73 |

+

relation with a moving image.

|

| 74 |

+

- b. Adapter's License means the license You apply to Your

|

| 75 |

+

Copyright and Similar Rights in Your contributions to Adapted

|

| 76 |

+

Material in accordance with the terms and conditions of this

|

| 77 |

+

Public License.

|

| 78 |

+

- c. Copyright and Similar Rights means copyright and/or similar

|

| 79 |

+

rights closely related to copyright including, without

|

| 80 |

+

limitation, performance, broadcast, sound recording, and Sui

|

| 81 |

+

Generis Database Rights, without regard to how the rights are

|

| 82 |

+

labeled or categorized. For purposes of this Public License, the

|

| 83 |

+

rights specified in Section 2(b)(1)-(2) are not Copyright and

|

| 84 |

+

Similar Rights.

|

| 85 |

+

- d. Effective Technological Measures means those measures that,

|

| 86 |

+

in the absence of proper authority, may not be circumvented

|

| 87 |

+

under laws fulfilling obligations under Article 11 of the WIPO

|

| 88 |

+

Copyright Treaty adopted on December 20, 1996, and/or similar

|

| 89 |

+

international agreements.

|

| 90 |

+

- e. Exceptions and Limitations means fair use, fair dealing,

|

| 91 |

+

and/or any other exception or limitation to Copyright and

|

| 92 |

+

Similar Rights that applies to Your use of the Licensed

|

| 93 |

+

Material.

|

| 94 |

+

- f. Licensed Material means the artistic or literary work,

|

| 95 |

+

database, or other material to which the Licensor applied this

|

| 96 |

+

Public License.

|

| 97 |

+

- g. Licensed Rights means the rights granted to You subject to

|

| 98 |

+

the terms and conditions of this Public License, which are

|

| 99 |

+

limited to all Copyright and Similar Rights that apply to Your

|

| 100 |

+

use of the Licensed Material and that the Licensor has authority

|

| 101 |

+

to license.

|

| 102 |

+

- h. Licensor means the individual(s) or entity(ies) granting

|

| 103 |

+

rights under this Public License.

|

| 104 |

+

- i. NonCommercial means not primarily intended for or directed

|

| 105 |

+

towards commercial advantage or monetary compensation. For

|

| 106 |

+

purposes of this Public License, the exchange of the Licensed

|

| 107 |

+

Material for other material subject to Copyright and Similar

|

| 108 |

+

Rights by digital file-sharing or similar means is NonCommercial

|

| 109 |

+

provided there is no payment of monetary compensation in

|

| 110 |

+

connection with the exchange.

|

| 111 |

+

- j. Share means to provide material to the public by any means or

|

| 112 |

+

process that requires permission under the Licensed Rights, such

|

| 113 |

+

as reproduction, public display, public performance,

|

| 114 |

+

distribution, dissemination, communication, or importation, and

|

| 115 |

+

to make material available to the public including in ways that

|

| 116 |

+

members of the public may access the material from a place and

|

| 117 |

+

at a time individually chosen by them.

|

| 118 |

+

- k. Sui Generis Database Rights means rights other than copyright

|

| 119 |

+

resulting from Directive 96/9/EC of the European Parliament and

|

| 120 |

+

of the Council of 11 March 1996 on the legal protection of

|

| 121 |

+

databases, as amended and/or succeeded, as well as other

|

| 122 |

+

essentially equivalent rights anywhere in the world.

|

| 123 |

+

- l. You means the individual or entity exercising the Licensed

|

| 124 |

+

Rights under this Public License. Your has a corresponding

|

| 125 |

+

meaning.

|

| 126 |

+

|

| 127 |

+

- Section 2 – Scope.

|

| 128 |

+

|

| 129 |

+

- a. License grant.

|

| 130 |

+

- 1. Subject to the terms and conditions of this Public

|

| 131 |

+

License, the Licensor hereby grants You a worldwide,

|

| 132 |

+

royalty-free, non-sublicensable, non-exclusive, irrevocable

|

| 133 |

+

license to exercise the Licensed Rights in the Licensed

|

| 134 |

+

Material to:

|

| 135 |

+

- A. reproduce and Share the Licensed Material, in whole

|

| 136 |

+

or in part, for NonCommercial purposes only; and

|

| 137 |

+

- B. produce, reproduce, and Share Adapted Material for

|

| 138 |

+

NonCommercial purposes only.

|

| 139 |

+

- 2. Exceptions and Limitations. For the avoidance of doubt,

|

| 140 |

+

where Exceptions and Limitations apply to Your use, this

|

| 141 |

+

Public License does not apply, and You do not need to comply

|

| 142 |

+

with its terms and conditions.

|

| 143 |

+

- 3. Term. The term of this Public License is specified in

|

| 144 |

+

Section 6(a).

|

| 145 |

+

- 4. Media and formats; technical modifications allowed. The

|

| 146 |

+

Licensor authorizes You to exercise the Licensed Rights in

|

| 147 |

+

all media and formats whether now known or hereafter

|

| 148 |

+

created, and to make technical modifications necessary to do

|

| 149 |

+

so. The Licensor waives and/or agrees not to assert any

|

| 150 |

+

right or authority to forbid You from making technical

|

| 151 |

+

modifications necessary to exercise the Licensed Rights,

|

| 152 |

+

including technical modifications necessary to circumvent

|

| 153 |

+

Effective Technological Measures. For purposes of this

|

| 154 |

+

Public License, simply making modifications authorized by

|

| 155 |

+

this Section 2(a)(4) never produces Adapted Material.

|

| 156 |

+

- 5. Downstream recipients.

|

| 157 |

+

- A. Offer from the Licensor – Licensed Material. Every

|

| 158 |

+

recipient of the Licensed Material automatically

|

| 159 |

+

receives an offer from the Licensor to exercise the

|

| 160 |

+

Licensed Rights under the terms and conditions of this

|

| 161 |

+

Public License.

|

| 162 |

+

- B. No downstream restrictions. You may not offer or

|

| 163 |

+

impose any additional or different terms or conditions

|

| 164 |

+

on, or apply any Effective Technological Measures to,

|

| 165 |

+

the Licensed Material if doing so restricts exercise of

|

| 166 |

+

the Licensed Rights by any recipient of the Licensed

|

| 167 |

+

Material.

|

| 168 |

+

- 6. No endorsement. Nothing in this Public License

|

| 169 |

+

constitutes or may be construed as permission to assert or

|

| 170 |

+

imply that You are, or that Your use of the Licensed

|

| 171 |

+

Material is, connected with, or sponsored, endorsed, or

|

| 172 |

+

granted official status by, the Licensor or others

|

| 173 |

+

designated to receive attribution as provided in Section

|

| 174 |

+

3(a)(1)(A)(i).

|

| 175 |

+

- b. Other rights.

|

| 176 |

+

- 1. Moral rights, such as the right of integrity, are not

|

| 177 |

+

licensed under this Public License, nor are publicity,

|

| 178 |

+

privacy, and/or other similar personality rights; however,

|

| 179 |

+

to the extent possible, the Licensor waives and/or agrees

|

| 180 |

+

not to assert any such rights held by the Licensor to the

|

| 181 |

+

limited extent necessary to allow You to exercise the

|

| 182 |

+

Licensed Rights, but not otherwise.

|

| 183 |

+

- 2. Patent and trademark rights are not licensed under this

|

| 184 |

+

Public License.

|

| 185 |

+

- 3. To the extent possible, the Licensor waives any right to

|

| 186 |

+

collect royalties from You for the exercise of the Licensed

|

| 187 |

+

Rights, whether directly or through a collecting society

|

| 188 |

+

under any voluntary or waivable statutory or compulsory

|

| 189 |

+

licensing scheme. In all other cases the Licensor expressly

|

| 190 |

+

reserves any right to collect such royalties, including when

|

| 191 |

+

the Licensed Material is used other than for NonCommercial

|

| 192 |

+

purposes.

|

| 193 |

+

|

| 194 |

+

- Section 3 – License Conditions.

|

| 195 |

+

|

| 196 |

+

Your exercise of the Licensed Rights is expressly made subject to

|

| 197 |

+

the following conditions.

|

| 198 |

+

|

| 199 |

+

- a. Attribution.

|

| 200 |

+

- 1. If You Share the Licensed Material (including in modified

|

| 201 |

+

form), You must:

|

| 202 |

+

- A. retain the following if it is supplied by the

|

| 203 |

+

Licensor with the Licensed Material:

|

| 204 |

+

- i. identification of the creator(s) of the Licensed

|

| 205 |

+

Material and any others designated to receive

|

| 206 |

+

attribution, in any reasonable manner requested by

|

| 207 |

+

the Licensor (including by pseudonym if designated);

|

| 208 |

+

- ii. a copyright notice;

|

| 209 |

+

- iii. a notice that refers to this Public License;

|

| 210 |

+

- iv. a notice that refers to the disclaimer of

|

| 211 |

+

warranties;

|

| 212 |

+

- v. a URI or hyperlink to the Licensed Material to

|

| 213 |

+

the extent reasonably practicable;

|

| 214 |

+

- B. indicate if You modified the Licensed Material and

|

| 215 |

+

retain an indication of any previous modifications; and

|

| 216 |

+

- C. indicate the Licensed Material is licensed under this

|

| 217 |

+

Public License, and include the text of, or the URI or

|

| 218 |

+

hyperlink to, this Public License.

|

| 219 |

+

- 2. You may satisfy the conditions in Section 3(a)(1) in any

|

| 220 |

+

reasonable manner based on the medium, means, and context in

|

| 221 |

+

which You Share the Licensed Material. For example, it may

|

| 222 |

+

be reasonable to satisfy the conditions by providing a URI

|

| 223 |

+

or hyperlink to a resource that includes the required

|

| 224 |

+

information.

|

| 225 |

+

- 3. If requested by the Licensor, You must remove any of the

|

| 226 |

+

information required by Section 3(a)(1)(A) to the extent

|

| 227 |

+

reasonably practicable.

|

| 228 |

+

- 4. If You Share Adapted Material You produce, the Adapter's

|

| 229 |

+

License You apply must not prevent recipients of the Adapted

|

| 230 |

+

Material from complying with this Public License.

|

| 231 |

+

|

| 232 |

+

- Section 4 – Sui Generis Database Rights.

|

| 233 |

+

|

| 234 |

+

Where the Licensed Rights include Sui Generis Database Rights that

|

| 235 |

+

apply to Your use of the Licensed Material:

|

| 236 |

+

|

| 237 |

+

- a. for the avoidance of doubt, Section 2(a)(1) grants You the

|

| 238 |

+

right to extract, reuse, reproduce, and Share all or a

|

| 239 |

+

substantial portion of the contents of the database for

|

| 240 |

+

NonCommercial purposes only;

|

| 241 |

+

- b. if You include all or a substantial portion of the database

|

| 242 |

+

contents in a database in which You have Sui Generis Database

|

| 243 |

+

Rights, then the database in which You have Sui Generis Database

|

| 244 |

+

Rights (but not its individual contents) is Adapted Material;

|

| 245 |

+

and

|

| 246 |

+

- c. You must comply with the conditions in Section 3(a) if You

|

| 247 |

+

Share all or a substantial portion of the contents of the

|

| 248 |

+

database.

|

| 249 |

+

|

| 250 |

+

For the avoidance of doubt, this Section 4 supplements and does not

|

| 251 |

+

replace Your obligations under this Public License where the

|

| 252 |

+

Licensed Rights include other Copyright and Similar Rights.

|

| 253 |

+

|

| 254 |

+

- Section 5 – Disclaimer of Warranties and Limitation of Liability.

|

| 255 |

+

|

| 256 |

+

- a. Unless otherwise separately undertaken by the Licensor, to

|

| 257 |

+

the extent possible, the Licensor offers the Licensed Material

|

| 258 |

+

as-is and as-available, and makes no representations or

|

| 259 |

+

warranties of any kind concerning the Licensed Material, whether

|

| 260 |

+

express, implied, statutory, or other. This includes, without

|

| 261 |

+

limitation, warranties of title, merchantability, fitness for a

|

| 262 |

+

particular purpose, non-infringement, absence of latent or other

|

| 263 |

+

defects, accuracy, or the presence or absence of errors, whether

|

| 264 |

+

or not known or discoverable. Where disclaimers of warranties

|

| 265 |

+

are not allowed in full or in part, this disclaimer may not

|

| 266 |

+

apply to You.

|

| 267 |

+

- b. To the extent possible, in no event will the Licensor be

|

| 268 |

+

liable to You on any legal theory (including, without

|

| 269 |

+

limitation, negligence) or otherwise for any direct, special,

|

| 270 |

+

indirect, incidental, consequential, punitive, exemplary, or

|

| 271 |

+

other losses, costs, expenses, or damages arising out of this

|

| 272 |

+

Public License or use of the Licensed Material, even if the

|

| 273 |

+

Licensor has been advised of the possibility of such losses,

|

| 274 |

+

costs, expenses, or damages. Where a limitation of liability is

|

| 275 |

+

not allowed in full or in part, this limitation may not apply to

|

| 276 |

+

You.

|

| 277 |

+

- c. The disclaimer of warranties and limitation of liability

|

| 278 |

+

provided above shall be interpreted in a manner that, to the

|

| 279 |

+

extent possible, most closely approximates an absolute

|

| 280 |

+

disclaimer and waiver of all liability.

|

| 281 |

+

|

| 282 |

+

- Section 6 – Term and Termination.

|

| 283 |

+

|

| 284 |

+

- a. This Public License applies for the term of the Copyright and

|

| 285 |

+

Similar Rights licensed here. However, if You fail to comply

|

| 286 |

+

with this Public License, then Your rights under this Public

|

| 287 |

+

License terminate automatically.

|

| 288 |

+

- b. Where Your right to use the Licensed Material has terminated

|

| 289 |

+

under Section 6(a), it reinstates:

|

| 290 |

+

|

| 291 |

+

- 1. automatically as of the date the violation is cured,

|

| 292 |

+

provided it is cured within 30 days of Your discovery of the

|

| 293 |

+

violation; or

|

| 294 |

+

- 2. upon express reinstatement by the Licensor.

|

| 295 |

+

|

| 296 |

+

For the avoidance of doubt, this Section 6(b) does not affect

|

| 297 |

+

any right the Licensor may have to seek remedies for Your

|

| 298 |

+

violations of this Public License.

|

| 299 |

+

|

| 300 |

+

- c. For the avoidance of doubt, the Licensor may also offer the

|

| 301 |

+

Licensed Material under separate terms or conditions or stop

|

| 302 |

+

distributing the Licensed Material at any time; however, doing

|

| 303 |

+

so will not terminate this Public License.

|

| 304 |

+

- d. Sections 1, 5, 6, 7, and 8 survive termination of this Public

|

| 305 |

+

License.

|

| 306 |

+

|

| 307 |

+

- Section 7 – Other Terms and Conditions.

|

| 308 |

+

|

| 309 |

+

- a. The Licensor shall not be bound by any additional or

|

| 310 |

+

different terms or conditions communicated by You unless

|

| 311 |

+

expressly agreed.

|

| 312 |

+

- b. Any arrangements, understandings, or agreements regarding the

|

| 313 |

+

Licensed Material not stated herein are separate from and

|

| 314 |

+

independent of the terms and conditions of this Public License.

|

| 315 |

+

|

| 316 |

+

- Section 8 – Interpretation.

|

| 317 |

+

|

| 318 |

+

- a. For the avoidance of doubt, this Public License does not, and

|

| 319 |

+

shall not be interpreted to, reduce, limit, restrict, or impose

|

| 320 |

+

conditions on any use of the Licensed Material that could

|

| 321 |

+

lawfully be made without permission under this Public License.

|

| 322 |

+

- b. To the extent possible, if any provision of this Public

|

| 323 |

+

License is deemed unenforceable, it shall be automatically

|

| 324 |

+

reformed to the minimum extent necessary to make it enforceable.

|

| 325 |

+

If the provision cannot be reformed, it shall be severed from

|

| 326 |

+

this Public License without affecting the enforceability of the

|

| 327 |

+

remaining terms and conditions.

|

| 328 |

+

- c. No term or condition of this Public License will be waived

|

| 329 |

+

and no failure to comply consented to unless expressly agreed to

|

| 330 |

+

by the Licensor.

|

| 331 |

+

- d. Nothing in this Public License constitutes or may be

|

| 332 |

+

interpreted as a limitation upon, or waiver of, any privileges

|

| 333 |

+

and immunities that apply to the Licensor or You, including from

|

| 334 |

+

the legal processes of any jurisdiction or authority.

|

| 335 |

+

|

| 336 |

+

Creative Commons is not a party to its public licenses. Notwithstanding,

|

| 337 |

+

Creative Commons may elect to apply one of its public licenses to

|

| 338 |

+

material it publishes and in those instances will be considered the

|

| 339 |

+

"Licensor." The text of the Creative Commons public licenses is

|

| 340 |

+

dedicated to the public domain under the CC0 Public Domain Dedication.

|

| 341 |

+

Except for the limited purpose of indicating that material is shared

|

| 342 |

+

under a Creative Commons public license or as otherwise permitted by the

|

| 343 |

+

Creative Commons policies published at creativecommons.org/policies,

|

| 344 |

+

Creative Commons does not authorize the use of the trademark "Creative

|

| 345 |

+

Commons" or any other trademark or logo of Creative Commons without its

|

| 346 |

+

prior written consent including, without limitation, in connection with

|

| 347 |

+

any unauthorized modifications to any of its public licenses or any

|

| 348 |

+

other arrangements, understandings, or agreements concerning use of

|

| 349 |

+

licensed material. For the avoidance of doubt, this paragraph does not

|

| 350 |

+

form part of the public licenses.

|

| 351 |

+

|

| 352 |

+

Creative Commons may be contacted at creativecommons.org.

|

README.md

ADDED

|

@@ -0,0 +1,260 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

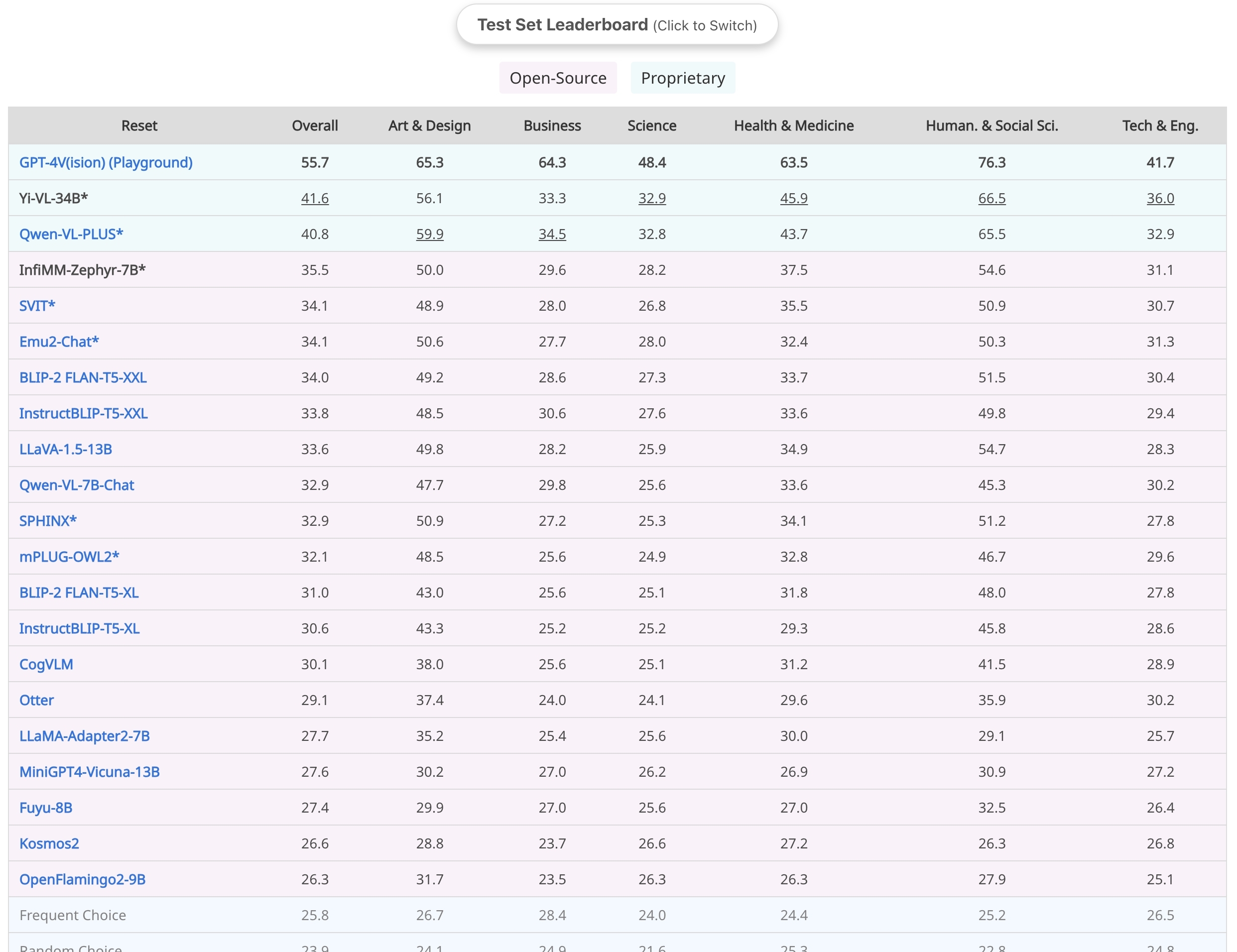

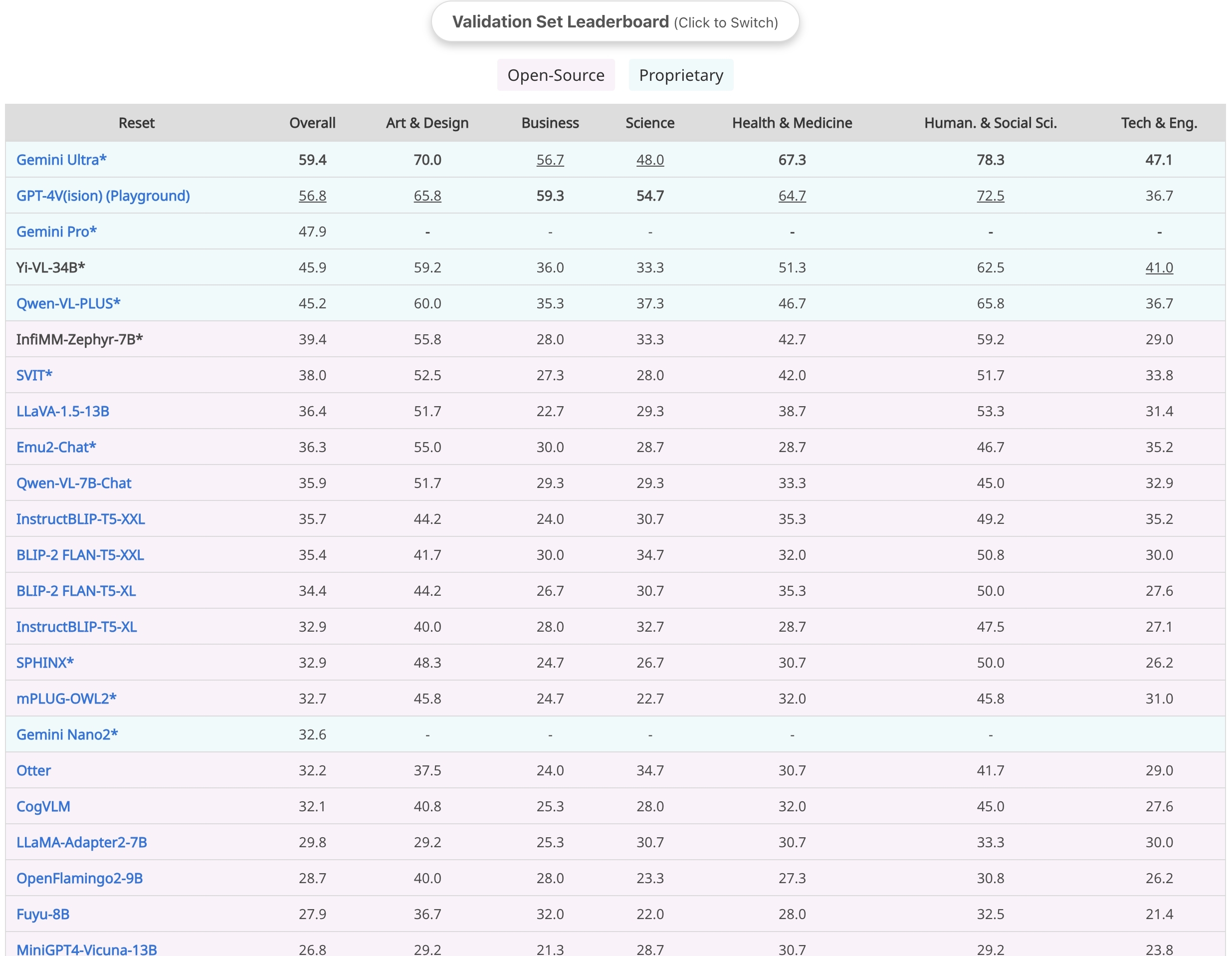

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

language: en

|

| 3 |

+

tags:

|

| 4 |

+

- multimodal

|

| 5 |

+

- text

|

| 6 |

+

- image

|

| 7 |

+

- image-to-text

|

| 8 |

+

license: mit

|

| 9 |

+

datasets:

|

| 10 |

+

- HuggingFaceM4/OBELICS

|

| 11 |

+

- laion/laion2B-en

|

| 12 |

+

- coyo-700m

|

| 13 |

+

- mmc4

|

| 14 |

+

pipeline_tag: text-generation

|

| 15 |

+

inference: true

|

| 16 |

+

---

|

| 17 |

+

|

| 18 |

+

<h1 align="center">

|

| 19 |

+

<br>

|

| 20 |

+

<img src="assets/infimm-logo.webp" alt="Markdownify" width="200"></a>

|

| 21 |

+

</h1>

|

| 22 |

+

|

| 23 |

+

# InfiMM

|

| 24 |

+

|

| 25 |

+

InfiMM, inspired by the Flamingo architecture, sets itself apart with unique training data and diverse large language models (LLMs). This approach allows InfiMM to maintain the core strengths of Flamingo while offering enhanced capabilities. As the premier open-sourced variant in this domain, InfiMM excels in accessibility and adaptability, driven by community collaboration. It's more than an emulation of Flamingo; it's an innovation in visual language processing.

|

| 26 |

+

|

| 27 |

+

Our model is another attempt to produce the result reported in the paper "Flamingo: A Large-scale Visual Language Model for Multimodal Understanding" by DeepMind.

|

| 28 |

+

Compared with previous open-sourced attempts ([OpenFlamingo](https://github.com/mlfoundations/open_flamingo) and [IDEFIC](https://huggingface.co/blog/idefics)), InfiMM offers a more flexible models, allowing for a wide range of applications.

|

| 29 |

+

In particular, InfiMM integrates the latest LLM models into VLM domain the reveals the impact of LLMs with different scales and architectures.

|

| 30 |

+

|

| 31 |

+

Please note that InfiMM is currently in beta stage and we are continuously working on improving it.

|

| 32 |

+

|

| 33 |

+

## Model Details

|

| 34 |

+

|

| 35 |

+

- **Developed by**: Institute of Automation, Chinese Academy of Sciences and ByteDance

|

| 36 |

+

- **Model Type**: Visual Language Model (VLM)

|

| 37 |

+

- **Language**: English

|

| 38 |

+

- **LLMs**: [Zephyr](https://huggingface.co/HuggingFaceH4/zephyr-7b-beta), [LLaMA2-13B](https://ai.meta.com/llama/), [Vicuna-13B](https://huggingface.co/lmsys/vicuna-13b-v1.5)

|

| 39 |

+

- **Vision Model**: [EVA CLIP](https://huggingface.co/QuanSun/EVA-CLIP)

|

| 40 |

+

- **Language(s) (NLP):** en

|

| 41 |

+

- **License:** see [License section](#license)

|

| 42 |

+

<!---

|

| 43 |

+

- **Parent Models:** [QuanSun/EVA-CLIP](https://huggingface.co/QuanSun/EVA-CLIP/blob/main/EVA02_CLIP_L_336_psz14_s6B.pt) and [HuggingFaceH4/zephyr-7b--beta ta](https://huggingface.co/HuggingFaceH4/zephyr-7b-beta)

|

| 44 |

+

-->

|

| 45 |

+

|

| 46 |

+

## Model Family

|

| 47 |

+

|

| 48 |

+

Our model consists of several different model. Please see the details below.

|

| 49 |

+

| Model | LLM | Vision Encoder | IFT |

|

| 50 |

+

| ---------------------- | -------------- | -------------- | --- |

|

| 51 |

+

| InfiMM-Zephyr | Zehpyr-7B-beta | ViT-L-336 | No |

|

| 52 |

+

| InfiMM-Llama-13B | Llama2-13B | ViT-G-224 | No |

|

| 53 |

+

| InfiMM-Vicuna-13B | Vicuna-13B | ViT-E-224 | No |

|

| 54 |

+

| InfiMM-Zephyr-Chat | Zehpyr-7B-beta | ViT-L-336 | Yes |

|

| 55 |

+

| InfiMM-Llama-13B-Chat | Llama2-13B | ViT-G-224 | Yes |

|

| 56 |

+

| InfiMM-Vicuna-13B-Chat | Vicuna-13B | ViT-E-224 | Yes |

|

| 57 |

+

|

| 58 |

+

<!-- InfiMM-Zephyr-Chat is an light-weighted, open-source re-production of Flamingo-style Multimodal large language models with chat capability that takes sequences of interleaved images and texts as inputs and generates text outputs, with only 9B parameters.

|

| 59 |

+

-->

|

| 60 |

+

|

| 61 |

+

## Demo

|

| 62 |

+

|

| 63 |

+

Will be released soon.

|

| 64 |

+

|

| 65 |

+

Our model adopts the Flamingo architecture, leveraging EVA CLIP as the visual encoder and employing LLaMA2, Vicuna, and Zephyr as language models. The visual and language modalities are connected through a Cross Attention module.

|

| 66 |

+

|

| 67 |

+

## Quickstart

|

| 68 |

+

|

| 69 |

+

Use the code below to get started with the base model:

|

| 70 |

+

```python

|

| 71 |

+

import torch

|

| 72 |

+

from transformers import AutoModelForCausalLM, AutoProcessor

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

processor = AutoProcessor.from_pretrained("InfiMM/infimm-zephyr", trust_remote_code=True)

|

| 76 |

+

|

| 77 |

+

prompts = [

|

| 78 |

+

{

|

| 79 |

+

"role": "user",

|

| 80 |

+

"content": [

|

| 81 |

+

{"image": "assets/infimm-logo.webp"},

|

| 82 |

+

"Please explain this image to me.",

|

| 83 |

+

],

|

| 84 |

+

}

|

| 85 |

+

]

|

| 86 |

+

inputs = processor(prompts)

|

| 87 |

+

|

| 88 |

+

# use bf16

|

| 89 |

+

model = AutoModelForCausalLM.from_pretrained(

|

| 90 |

+

"InfiMM/infimm-zephyr",

|

| 91 |

+

local_files_only=True,

|

| 92 |

+

torch_dtype=torch.bfloat16,

|

| 93 |

+

trust_remote_code=True,

|

| 94 |

+

).eval()

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

inputs = inputs.to(model.device)

|

| 98 |

+

inputs["batch_images"] = inputs["batch_images"].to(torch.bfloat16)

|

| 99 |

+

generated_ids = model.generate(

|

| 100 |

+

**inputs,

|

| 101 |

+

min_generation_length=0,

|

| 102 |

+

max_generation_length=256,

|

| 103 |

+

)

|

| 104 |

+

generated_text = processor.batch_decode(generated_ids, skip_special_tokens=True)

|

| 105 |

+

print(generated_text)

|

| 106 |

+

```

|

| 107 |

+

|

| 108 |

+

## Training Details

|

| 109 |

+

|

| 110 |

+

We employed three stages to train our model: pretraining (PT), multi-task training (MTT), and instruction finetuning (IFT). Refer to the table below for detailed configurations in each stage. Due to significant noise in the pretraining data, we aimed to enhance the model's accuracy by incorporating higher-quality data. In the multi-task training (MTT) phase, we utilized substantial training data from diverse datasets. However, as the answer in these data mainly consisted of single words or phrases, the model's conversational ability was limited. Therefore, in the third stage, we introduced a considerable amount of image-text dialogue data (llava665k) for fine-tuning the model's instructions.

|

| 111 |

+

|

| 112 |

+

### Pretraining (PT)

|

| 113 |

+

|

| 114 |

+

We follow similar training procedures used in [IDEFICS](https://huggingface.co/HuggingFaceM4/idefics-9b-instruct/blob/main/README.md).

|

| 115 |

+

|

| 116 |

+

The model is trained on a mixture of image-text pairs and unstructured multimodal web documents. All data are from public sources. Many image URL links are expired, we are capable of only downloading partial samples. We filter low quality data, here are resulting data we used:

|

| 117 |

+

|

| 118 |

+

| Data Source | Type of Data | Number of Tokens in Source | Number of Images in Source | Number of Samples | Epochs |

|

| 119 |

+

| ---------------------------------------------------------------- | ------------------------------------- | -------------------------- | -------------------------- | ----------------- | ------ |

|

| 120 |

+

| [OBELICS](https://huggingface.co/datasets/HuggingFaceM4/OBELICS) | Unstructured Multimodal Web Documents | - | - | 101M | 1 |

|

| 121 |

+

| [MMC4](https://github.com/allenai/mmc4) | Unstructured Multimodal Web Documents | - | - | 53M | 1 |

|

| 122 |

+

| [LAION](https://huggingface.co/datasets/laion/laion2B-en) | Image-Text Pairs | - | 115M | 115M | 1 |

|

| 123 |

+

| [COYO](https://github.com/kakaobrain/coyo-dataset) | Image-Text Pairs | - | 238M | 238M | 1 |

|

| 124 |

+

| [LAION-COCO](https://laion.ai/blog/laion-coco/) | Image-Text Pairs | - | 140M | 140M | 1 |

|

| 125 |

+

| [PMD\*](https://huggingface.co/datasets/facebook/pmd) | Image-Text Pairs | - | 20M | 1 |

|

| 126 |

+

|

| 127 |

+

\*PMD is only used in models with 13B LLMs, not the 7B Zephyr model.

|

| 128 |

+

|

| 129 |

+

During pretraining of interleaved image text sample, we apply masked cross-attention, however, we didn't strictly follow Flamingo, which alternate attention of image to its previous text or later text by change of 0.5.

|

| 130 |

+

|

| 131 |

+

We use the following hyper parameters:

|

| 132 |

+

| Categories | Parameters | Value |

|

| 133 |

+

| ------------------------ | -------------------------- | -------------------- |

|

| 134 |

+

| Perceiver Resampler | Number of Layers | 6 |

|

| 135 |

+

| | Number of Latents | 64 |

|

| 136 |

+

| | Number of Heads | 16 |

|

| 137 |

+

| | Resampler Head Dimension | 96 |

|

| 138 |

+

| Training | Sequence Length | 384 (13B) / 792 (7B) |

|

| 139 |

+

| | Effective Batch Size | 40\*128 |

|

| 140 |

+

| | Max Images per Sample | 6 |

|

| 141 |

+

| | Weight Decay | 0.1 |

|

| 142 |

+

| | Optimizer | Adam(0.9, 0.999) |

|

| 143 |

+

| | Gradient Accumulation Step | 2 |

|

| 144 |

+

| Learning Rate | Initial Max | 1e-4 |

|

| 145 |

+

| | Decay Schedule | Constant |

|

| 146 |

+

| | Warmup Step rate | 0.005 |

|

| 147 |

+

| Large-scale Optimization | Gradient Checkpointing | False |

|

| 148 |

+

| | Precision | bf16 |

|

| 149 |

+

| | ZeRO Optimization | Stage 2 |

|

| 150 |

+

|

| 151 |

+

### Multi-Task Training (MTT)

|

| 152 |

+

|

| 153 |

+

Here we use mix_cap_vqa to represent the mixed training set from COCO caption, TextCap, VizWiz Caption, VQAv2, OKVQA, VizWiz VQA, TextVQA, OCRVQA, STVQA, DocVQA, GQA and ScienceQA-image. For caption, we add prefix such as "Please describe the image." before the question. And for QA, we add "Answer the question using a single word or phrase.". Specifically, for VizWiz VQA, we use "When the provided information is insufficient, respond with 'Unanswerable'. Answer the question using a single word or phrase.". While for ScienceQA-image, we use "Answer with the option's letter from the given choices directly."

|

| 154 |

+

|

| 155 |

+

### Instruction Fine-Tuning (IFT)

|

| 156 |

+

|

| 157 |

+

For instruction fine-tuning stage, we use the recently released [LLaVA-MIX-665k](https://huggingface.co/datasets/liuhaotian/LLaVA-Instruct-150K/tree/main).

|

| 158 |

+

|

| 159 |

+

We use the following hyper parameters:

|

| 160 |

+

| Categories | Parameters | Value |

|

| 161 |

+

| ------------------------ | -------------------------- | -------------------- |

|

| 162 |

+

| Perceiver Resampler | Number of Layers | 6 |

|

| 163 |

+

| | Number of Latents | 64 |

|

| 164 |

+

| | Number of Heads | 16 |

|

| 165 |

+

| | Resampler Head Dimension | 96 |

|

| 166 |

+

| Training | Sequence Length | 384 (13B) / 792 (7B) |

|

| 167 |

+

| | Effective Batch Size | 64 |

|

| 168 |

+

| | Max Images per Sample | 6 |

|

| 169 |

+

| | Weight Decay | 0.1 |

|

| 170 |

+

| | Optimizer | Adam(0.9, 0.999) |

|

| 171 |

+

| | Gradient Accumulation Step | 2 |

|

| 172 |

+

| Learning Rate | Initial Max | 1e-5 |

|

| 173 |

+

| | Decay Schedule | Constant |

|

| 174 |

+

| | Warmup Step rate | 0.005 |

|

| 175 |

+

| Large-scale Optimization | Gradient Checkpointing | False |

|

| 176 |

+

| | Precision | bf16 |

|

| 177 |

+

| | ZeRO Optimization | Stage 2 |

|

| 178 |

+

|

| 179 |

+

During IFT, similar to pretrain, we keep ViT and LLM frozen for both chat-based LLM (Vicuna and Zephyr). For Llama model, we keep LLM trainable during the IFT stage. We also apply chat-template to process the training samples.

|

| 180 |

+

|

| 181 |

+

## Evaluation

|

| 182 |

+

|

| 183 |

+

### PreTraining Evaluation

|

| 184 |

+

|

| 185 |

+

We evaluate the pretrained models on the following downstream tasks: Image Captioning and VQA. We also compare with our results with [IDEFICS](https://huggingface.co/blog/idefics).

|

| 186 |

+

|

| 187 |

+

| Model | Shots | COCO CIDEr | Flickr30K CIDEr | VQA v2 Acc | TextVQA Acc | OK-VQA Acc |

|

| 188 |

+

| ----------------- | ----- | ---------- | --------------- | ---------- | ----------- | ---------- |

|

| 189 |

+

| IDEFICS-9B | 0 | 46 | 27.3 | 50.9 | 25.9 | 38.4 |

|

| 190 |

+

| | 4 | 93 | 59.7 | 55.4 | 27.6 | 45.5 |

|

| 191 |

+

| IDEFICS-80B | 0 | 91.8 | 53.7 | 60 | 30.9 | 45.2 |

|

| 192 |

+

| | 4 | 110.3 | 73.7 | 64.6 | 34.4 | 52.4 |

|

| 193 |

+

| InfiMM-Zephyr-7B | 0 | 78.8 | 60.7 | 33.7 | 15.2 | 17.1 |

|

| 194 |

+

| | 4 | 108.6 | 71.9 | 59.1 | 34.3 | 50.5 |

|

| 195 |

+

| InfiMM-Llama2-13B | 0 | 85.4 | 54.6 | 51.6 | 24.2 | 26.4 |

|

| 196 |

+

| | 4 | 125.2 | 87.1 | 66.1 | 38.2 | 55.5 |

|

| 197 |

+

| InfiMM-Vicuna13B | 0 | 69.6 | 49.6 | 60.4 | 32.8 | 49.2 |

|

| 198 |

+

| | 4 | 118.1 | 81.4 | 64.2 | 38.4 | 53.7 |

|

| 199 |

+

|

| 200 |

+

### IFT Evaluation

|

| 201 |

+

|

| 202 |

+

In our analysis, we concentrate on two primary benchmarks for evaluating MLLMs: 1) Multi-choice Question Answering (QA) and 2) Open-ended Evaluation. We've observed that the evaluation metrics for tasks like Visual Question Answering (VQA) and Text-VQA are overly sensitive to exact answer matches. This approach can be misleading, particularly when models provide synonymous but technically accurate responses. Therefore, these metrics have been omitted from our comparison for a more precise assessment. The evaluation results are shown in the table below.

|

| 203 |

+

|

| 204 |

+

| Model | ScienceQA-Img | MME | MM-VET | InfiMM-Eval | MMbench | MMMU-Val | MMMU-Test |

|

| 205 |

+

| ------------------- | ------------- | --------------------- | ------ | ------------ | ------- | -------- | --------- |

|

| 206 |

+

| Otter-9B | - | 1292/306 | 24.6 | 32.2 | - | 22.69 | - |

|

| 207 |

+

| IDEFICS-9B-Instruct | 60.6 | -/- | - | - | - | 24.53 | - |

|

| 208 |

+

| InfiMM-Zephyr-7B | 71.1 | P: 1406<br>C:327 | 32.8 | 36.0 | 59.7 | 39.4 | 35.5 |

|

| 209 |

+

| InfiMM-Llama-13b | 73.0 | P: 1444.5<br>C: 337.6 | 39.2 | 0.4559/0.414 | 66.4 | 39.1 | 35.2 |

|

| 210 |

+

| InfiMM-Vicuna-13B | 74.0 | P: 1461.2<br>C: 323.5 | 36.0 | 40.0 | 66.7 | 37.6 | 34.6 |

|

| 211 |

+

|

| 212 |

+

<!--

|

| 213 |

+

| Model | TextVQA (no ocr) | OK-VQA | VQAv2 | ScienceQA-Img | GQA | MME | MM-VET | MMMU | InfiMM-Eval | MMbench |

|

| 214 |

+

| ----------------- | ---------------- | ------ | ----- | ------------- | ---- | --------------------- | ------ | ---- | ------------ | ------- |

|

| 215 |

+

| InfiMM-Zephyr-7B | 36.7 | 55.4 | / | 71.1 | | P: 1406<br>C:327 | 32.8 | 39.4 | 36.0 | 59.7 |

|

| 216 |

+

| InfiMM-Llama-13b | 44.6 | 62.3 | 78.5 | 73.0 | 61.2 | P: 1444.5<br>C: 337.6 | 39.2 | 39.1 | 0.4559/0.414 | 66.4 |

|

| 217 |

+

| InfiMM-Vicuna-13B | 41.7 | 58.5 | 73.0 | 74.0 | 58.5 | P: 1461.2<br>C: 323.5 | 36.0 | 37.6 | 40.0 | 66.7 |

|

| 218 |

+

|

| 219 |

+

We select checkpoint after 1 epoch instruction fine-tuning.

|

| 220 |

+

|

| 221 |

+

| Model | <nobr>ScienceQA <br>acc.</nobr> | <nobr>MME <br>P/C</nobr> | <nobr>MM-Vet</nobr> | <nobr>InfiMM-Eval</nobr> | <nobr>MMMU (val)</nobr> |

|

| 222 |

+

| :------------------ | ------------------------------: | -----------------------: | ------------------: | -----------------------: | ----------------------: |

|

| 223 |

+

| Otter-9B | - | 1292/306 | 24.6 | 22.69 | 32.2 |

|

| 224 |

+

| IDEFICS-9B-Instruct | 60.6 | -/- | - | 24.53 | - |

|

| 225 |

+

| InfiMM-Zephyr-Chat | 71.14 | 1406/327 | 33.3 | 35.97 | 39.4 |

|

| 226 |

+

-->

|

| 227 |

+

|

| 228 |

+

<details>

|

| 229 |

+

<summary>Leaderboard Details</summary>

|

| 230 |

+

|

| 231 |

+

<img src="assets/infimm-zephyr-mmmu-val.jpeg" style="zoom:40%;" />

|

| 232 |

+

<br>MMMU-Val split results<br>

|

| 233 |

+

<img src="assets/infimm-zephyr-mmmu-test.jpeg" style="zoom:40%;" />

|

| 234 |

+

<br>MMMU-Test split results<br>

|

| 235 |

+

|

| 236 |

+

</details>

|

| 237 |

+

|

| 238 |

+

## Citation

|

| 239 |

+

|

| 240 |

+

@misc{infimm-v1,

|

| 241 |

+

title={InfiMM: },

|

| 242 |

+

author={InfiMM Team},

|

| 243 |

+

year={2024}

|

| 244 |

+

}

|

| 245 |

+

|

| 246 |

+

## License

|

| 247 |

+

|

| 248 |

+

<a href="https://creativecommons.org/licenses/by-nc/4.0/deed.en">

|

| 249 |

+

<img src="https://upload.wikimedia.org/wikipedia/commons/thumb/d/d3/Cc_by-nc_icon.svg/600px-Cc_by-nc_icon.svg.png" width="160">

|

| 250 |

+

</a>

|

| 251 |

+

|

| 252 |

+

This project is licensed under the **CC BY-NC 4.0**.

|

| 253 |

+

|

| 254 |

+

The copyright of the images belongs to the original authors.

|

| 255 |

+

|

| 256 |

+

See [LICENSE](LICENSE) for more information.

|

| 257 |

+

|

| 258 |

+

## Contact Us

|

| 259 |

+

|

| 260 |

+

Please feel free to contact us via email [infimmbytedance@gmail.com](infimmbytedance@gmail.com) if you have any questions.

|

added_tokens.json

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"<image>": 32001,

|

| 3 |

+

"<|endofchunk|>": 32000

|

| 4 |

+

}

|

assets/infimm-logo.webp

ADDED

|

assets/infimm-zephyr-mmmu-test.jpeg

ADDED

|

assets/infimm-zephyr-mmmu-val.jpeg

ADDED

|

config.json

ADDED

|

@@ -0,0 +1,66 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"_name_or_path": "./",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"InfiMMZephyrModel"

|

| 5 |

+

],

|

| 6 |

+

"auto_map": {

|

| 7 |

+

"AutoConfig": "configuration_infimm_zephyr.InfiMMConfig",

|

| 8 |

+

"AutoModelForCausalLM": "modeling_infimm_zephyr.InfiMMZephyrModel"

|

| 9 |

+

},

|

| 10 |

+

"model_type": "infimm-zephyr",

|

| 11 |

+

"seq_length": 1024,

|

| 12 |

+

"tokenizer_type": "LlamaTokenizer",

|

| 13 |

+

"torch_dtype": "bfloat16",

|

| 14 |

+

"transformers_version": "4.35.2",

|

| 15 |

+

"use_cache": true,

|

| 16 |

+

"use_flash_attn": false,

|

| 17 |

+

"cross_attn_every_n_layers": 2,

|

| 18 |

+

"use_grad_checkpoint": false,

|

| 19 |

+

"freeze_llm": true,

|

| 20 |

+

"image_token_id": 32001,

|

| 21 |

+

"eoc_token_id": 32000,

|

| 22 |

+

"visual": {

|

| 23 |

+

"image_size": 336,

|

| 24 |

+

"layers": 24,

|

| 25 |

+

"width": 1024,

|

| 26 |

+

"head_width": 64,

|

| 27 |

+

"patch_size": 14,

|

| 28 |

+

"mlp_ratio": 2.6667,

|

| 29 |

+

"eva_model_name": "eva-clip-l-14-336",

|

| 30 |

+

"drop_path_rate": 0.0,

|

| 31 |

+

"xattn": false,

|

| 32 |

+

"fusedLN": true,

|

| 33 |

+

"rope": true,

|

| 34 |

+

"pt_hw_seq_len": 16,

|

| 35 |

+

"intp_freq": true,

|

| 36 |

+

"naiveswiglu": true,

|

| 37 |

+

"subln": true,

|

| 38 |

+

"embed_dim": 768

|

| 39 |

+

},

|

| 40 |

+

"language": {

|

| 41 |

+

"_name_or_path": "HuggingFaceH4/zephyr-7b-beta",

|

| 42 |

+

"architectures": [

|

| 43 |

+

"MistralForCausalLM"

|

| 44 |

+

],

|

| 45 |

+

"bos_token_id": 1,

|

| 46 |

+

"eos_token_id": 2,

|

| 47 |

+

"hidden_act": "silu",

|

| 48 |

+

"hidden_size": 4096,

|

| 49 |

+

"initializer_range": 0.02,

|

| 50 |

+

"intermediate_size": 14336,

|

| 51 |

+

"max_position_embeddings": 32768,

|

| 52 |

+

"model_type": "mistral",

|

| 53 |

+

"num_attention_heads": 32,

|

| 54 |

+

"num_hidden_layers": 32,

|

| 55 |

+

"num_key_value_heads": 8,

|

| 56 |

+

"pad_token_id": 2,

|

| 57 |

+

"rms_norm_eps": 1e-05,

|

| 58 |

+

"rope_theta": 10000.0,

|

| 59 |

+

"sliding_window": 4096,

|

| 60 |

+

"tie_word_embeddings": false,

|

| 61 |

+

"torch_dtype": "bfloat16",

|

| 62 |

+

"transformers_version": "4.35.0",

|

| 63 |

+

"use_cache": true,

|

| 64 |

+

"vocab_size": 32002

|

| 65 |

+

}

|

| 66 |

+

}

|

configuration_infimm_zephyr.py

ADDED

|

@@ -0,0 +1,42 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# This source code is licensed under the license found in the

|

| 2 |

+

# LICENSE file in the root directory of this source tree.

|

| 3 |

+

|

| 4 |

+

from transformers import PretrainedConfig

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

class InfiMMConfig(PretrainedConfig):

|

| 8 |

+

model_type = "infimm"

|

| 9 |

+

|

| 10 |

+

def __init__(

|

| 11 |

+

self,

|

| 12 |

+

model_type="infimm-zephyr",

|

| 13 |

+

seq_length=1024,

|

| 14 |

+

tokenizer_type="ZephyrTokenizer",

|

| 15 |

+

torch_dtype="bfloat16",

|

| 16 |

+

transformers_version="4.35.2",

|

| 17 |

+

use_cache=True,

|

| 18 |

+

use_flash_attn=False,

|

| 19 |

+

cross_attn_every_n_layers=2,

|

| 20 |

+

use_grad_checkpoint=False,

|

| 21 |

+

freeze_llm=True,

|

| 22 |

+

visual=None,

|

| 23 |

+

language=None,

|

| 24 |

+

image_token_id=None,

|

| 25 |

+

eoc_token_id=None,

|

| 26 |

+

**kwargs,

|

| 27 |

+