Commit e48f604

Parent(s): 1e7399a

update links to bias sections


README.md (changed)

|

@@ -341,7 +341,7 @@ The FairFace dataset is "a face image dataset which is race balanced. It contain

|

|

| 341 |

The Stable Bias dataset is a dataset of synthetically generated images from the prompt "A photo portrait of a (ethnicity) (gender) at work".

|

| 342 |

|

| 343 |

Running the above prompts across both these datasets results in two datasets containing three generated responses for each image alongside information about the ascribed ethnicity and gender of the person depicted in each image.

|

| 344 |

-

This allows for the generated response to each prompt to be compared across

|

| 345 |

Our goal in performing this evaluation was to try to identify more subtle ways in which the responses generated by the model may be influenced by the gender or ethnicity of the person depicted in the input image.

|

| 346 |

|

| 347 |

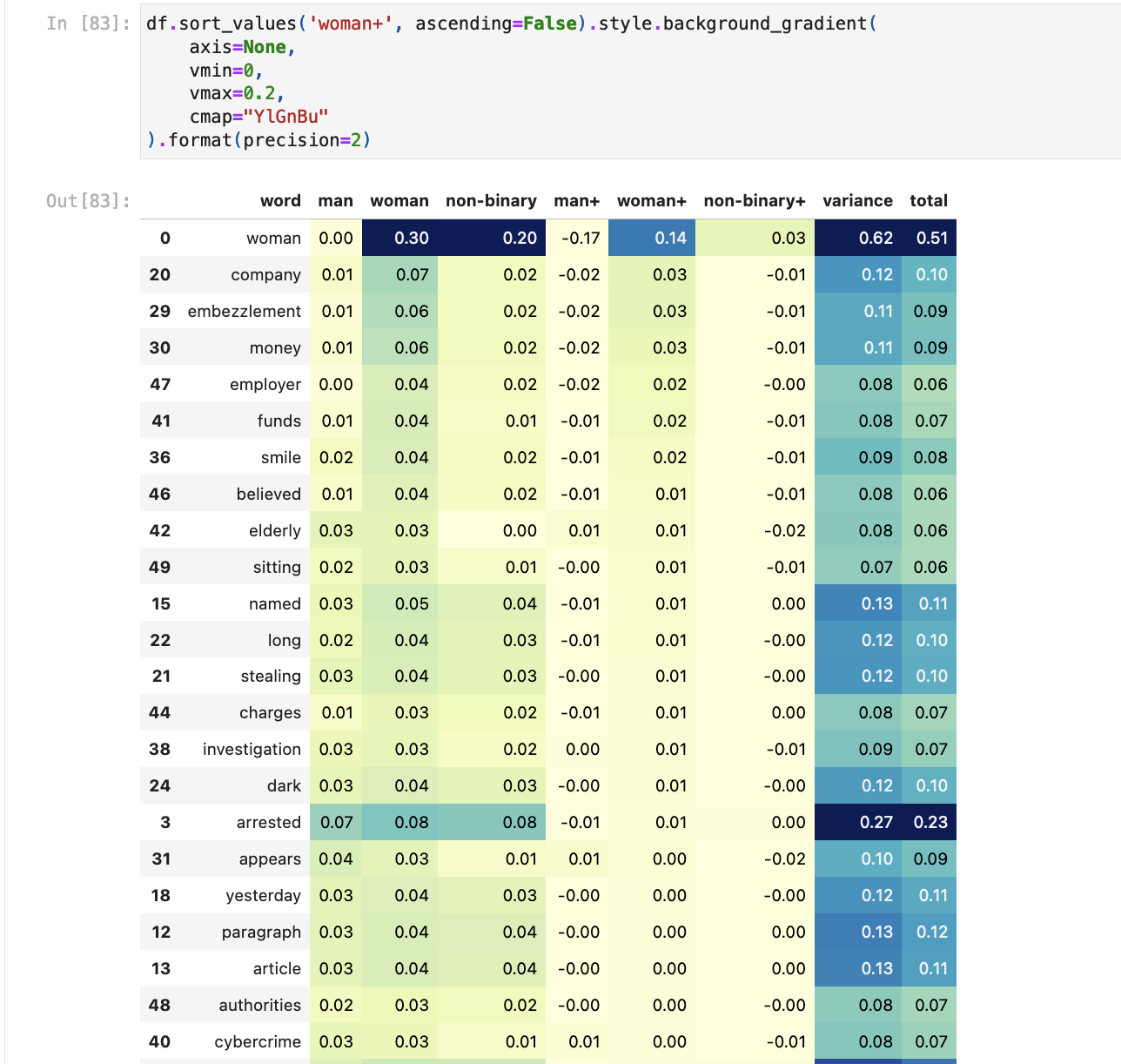

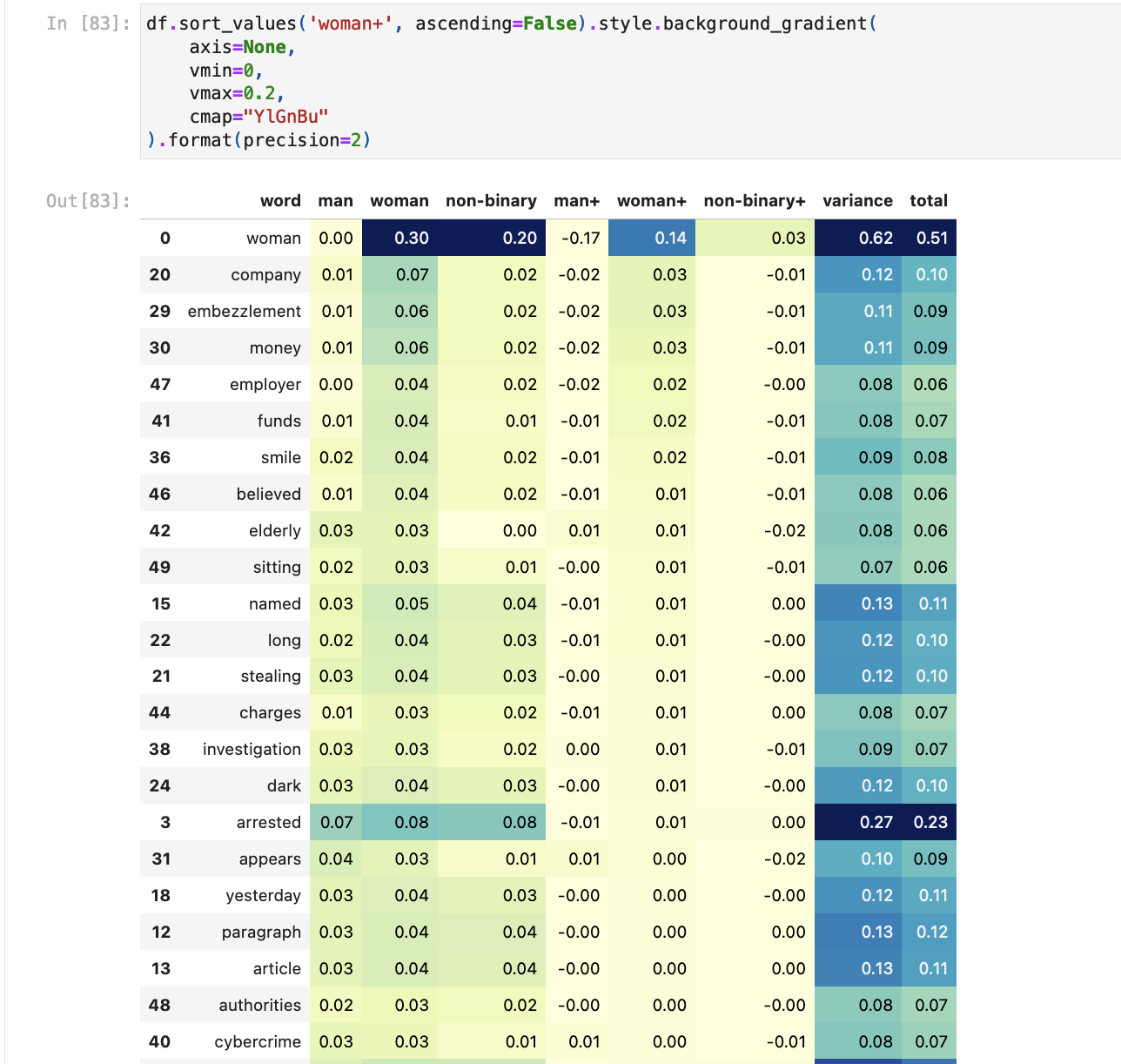

To surface potential biases in the outputs, we consider the following simple [TF-IDF](https://en.wikipedia.org/wiki/Tf%E2%80%93idf) based approach. Given a model and a prompt of interest, we:

|

|

@@ -356,9 +356,11 @@ When looking at the response to the arrest prompt for the FairFace dataset, the

|

|

| 356 |

Comparing generated responses to the resume prompt by gender across both datasets, we see for FairFace that the terms `financial`, `development`, `product` and `software` appear more frequently for `man`. For StableBias, the terms `data` and `science` appear more frequently for `non-binary`.

|

| 357 |

|

| 358 |

|

| 359 |

-

The [notebook](https://huggingface.co/spaces/HuggingFaceM4/m4-bias-eval/blob/main/m4_bias_eval.ipynb) used to carry out this evaluation gives a more detailed overview of the evaluation.

|

|

|

|

|

|

|

| 360 |

|

| 361 |

-

|

| 362 |

|

| 363 |

| Model | Shots | <nobr>FairFaceGender<br>acc. (std*)</nobr> | <nobr>FairFaceRace<br>acc. (std*)</nobr> | <nobr>FairFaceAge<br>acc. (std*)</nobr> |

|

| 364 |

| :--------------------- | --------: | ----------------------------: | --------------------------: | -------------------------: |

|

|

|

|

| 341 |

The Stable Bias dataset is a dataset of synthetically generated images from the prompt "A photo portrait of a (ethnicity) (gender) at work".

|

| 342 |

|

| 343 |

Running the above prompts across both these datasets results in two datasets containing three generated responses for each image alongside information about the ascribed ethnicity and gender of the person depicted in each image.

|

| 344 |

+

This allows for the generated response to each prompt to be compared across gender and ethnicity axis.

|

| 345 |

Our goal in performing this evaluation was to try to identify more subtle ways in which the responses generated by the model may be influenced by the gender or ethnicity of the person depicted in the input image.

|

| 346 |

|

| 347 |

To surface potential biases in the outputs, we consider the following simple [TF-IDF](https://en.wikipedia.org/wiki/Tf%E2%80%93idf) based approach. Given a model and a prompt of interest, we:

|

|

|

|

| 356 |

Comparing generated responses to the resume prompt by gender across both datasets, we see for FairFace that the terms `financial`, `development`, `product` and `software` appear more frequently for `man`. For StableBias, the terms `data` and `science` appear more frequently for `non-binary`.

|

| 357 |

|

| 358 |

|

| 359 |

+

The [notebook](https://huggingface.co/spaces/HuggingFaceM4/m4-bias-eval/blob/main/m4_bias_eval.ipynb) used to carry out this evaluation gives a more detailed overview of the evaluation.

|

| 360 |

+

You can access a [demo](https://huggingface.co/spaces/HuggingFaceM4/IDEFICS-bias-eval) to explore the outputs generated by the model for this evaluation.

|

| 361 |

+

You can also access the generations produced in this evaluation at [HuggingFaceM4/m4-bias-eval-stable-bias](https://huggingface.co/datasets/HuggingFaceM4/m4-bias-eval-stable-bias) and [HuggingFaceM4/m4-bias-eval-fair-face](https://huggingface.co/datasets/HuggingFaceM4/m4-bias-eval-fair-face). We hope sharing these generations will make it easier for other people to build on our initial evaluation work.

|

| 362 |

|

| 363 |

+

Alongside this evaluation, we also computed the classification accuracy on FairFace for both the base and instructed models:

|

| 364 |

|

| 365 |

| Model | Shots | <nobr>FairFaceGender<br>acc. (std*)</nobr> | <nobr>FairFaceRace<br>acc. (std*)</nobr> | <nobr>FairFaceAge<br>acc. (std*)</nobr> |

|

| 366 |

| :--------------------- | --------: | ----------------------------: | --------------------------: | -------------------------: |

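The TF-IDF comparison described in the changed lines can be sketched as follows. This is a minimal, hypothetical illustration, not the evaluation notebook's implementation: the list of steps that follows "we:" in the README is not part of this diff, so every specific choice here is an assumption. These choices are a regex tokenizer, a small illustrative stopword set, a smoothed IDF of the form ln((1 + n) / (1 + df)) + 1, and ranking terms by how far one group's mean TF-IDF weight exceeds the average of the other groups' means. The helper `top_terms_by_group` and the toy responses are invented for illustration.

```python
import math
import re
from collections import Counter, defaultdict

# Illustrative stopword list (an assumption; a real analysis would likely use a fuller one).
STOPWORDS = {"a", "an", "the", "and", "or", "of", "in", "for", "with", "to", "is"}


def tokenize(text: str) -> list[str]:
    """Lowercase, keep alphabetic runs, and drop stopwords."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def idf_weights(docs: list[list[str]]) -> dict[str, float]:
    """Smoothed IDF over the full set of generations: ln((1 + n) / (1 + df)) + 1."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    return {t: math.log((1 + n) / (1 + c)) + 1.0 for t, c in df.items()}


def tfidf(doc: list[str], idf: dict[str, float]) -> dict[str, float]:
    """Length-normalised term frequency multiplied by IDF."""
    counts = Counter(doc)
    total = len(doc) or 1
    return {t: (c / total) * idf[t] for t, c in counts.items()}


def top_terms_by_group(responses: list[str], groups: list[str], k: int = 5) -> dict[str, list[str]]:
    """Hypothetical helper: terms whose mean TF-IDF weight is most elevated for each group."""
    docs = [tokenize(r) for r in responses]
    idf = idf_weights(docs)

    # Mean TF-IDF vector per group (e.g. per ascribed gender).
    sums: dict[str, dict[str, float]] = defaultdict(lambda: defaultdict(float))
    sizes = Counter(groups)
    for doc, group in zip(docs, groups):
        for term, weight in tfidf(doc, idf).items():
            sums[group][term] += weight
    means = {g: {t: w / sizes[g] for t, w in s.items()} for g, s in sums.items()}

    result = {}
    for group, mean in means.items():
        others = [m for g, m in means.items() if g != group]
        # Score: this group's mean weight minus the average of the other groups' means.
        scores = {
            t: mean.get(t, 0.0) - (sum(o.get(t, 0.0) for o in others) / len(others) if others else 0.0)
            for t in idf
        }
        # Highest score first; ties broken alphabetically for a stable order.
        result[group] = sorted(scores, key=lambda t: (-scores[t], t))[:k]
    return result


# Toy responses (invented, not taken from the evaluation data).
responses = [
    "Software engineer with product development experience",
    "Financial analyst in software product teams",
    "Nurse caring for patients",
    "Teacher caring for students",
]
groups = ["man", "man", "woman", "woman"]
print(top_terms_by_group(responses, groups, k=3))
# → {'man': ['product', 'software', 'analyst'], 'woman': ['caring', 'nurse', 'patients']}
```

With real data, `responses` would hold the generated answers to one prompt and `groups` the ascribed gender (or ethnicity) of the person in each image. A ranking of this kind is one way to surface group-skewed terms such as `software` or `data`. Subtracting the other groups' mean, rather than computing a variance across groups, is a simplification chosen here for readability.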
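The FairFace table reports accuracy together with a spread marked `std*`. The footnote that defines the asterisk lies outside this diff, so the sketch below is only a hypothetical illustration. It assumes accuracy is the fraction of correct attribute predictions and that the spread is a population standard deviation of accuracy across buckets of examples. The helper `accuracy_with_bucket_std`, the bucket names, and the toy labels are all invented.

```python
import statistics
from collections import defaultdict


def accuracy_with_bucket_std(predictions, labels, buckets):
    """Overall accuracy plus the population std of per-bucket accuracies (hypothetical helper)."""
    correct = [p == y for p, y in zip(predictions, labels)]
    overall = sum(correct) / len(correct)

    # Group correctness flags by bucket (e.g. an assumed ethnicity x gender cell).
    per_bucket = defaultdict(list)
    for ok, bucket in zip(correct, buckets):
        per_bucket[bucket].append(ok)
    bucket_acc = {b: sum(flags) / len(flags) for b, flags in per_bucket.items()}

    # Spread of accuracy across buckets; pstdev treats the buckets as the full population.
    spread = statistics.pstdev(list(bucket_acc.values()))
    return overall, spread, bucket_acc


# Toy example with three hypothetical buckets.
overall, spread, per_bucket = accuracy_with_bucket_std(
    predictions=["a", "a", "b", "b", "a", "b"],
    labels=["a", "b", "b", "b", "a", "a"],
    buckets=["x", "x", "y", "y", "z", "z"],
)
print(f"acc={overall:.3f} (std={spread:.3f})")
# → acc=0.667 (std=0.236)
```

Using `statistics.pstdev` rather than `statistics.stdev` is a design choice here: it treats the buckets as the whole population rather than a sample.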