Commit 1138740

1 Parent(s): a7e32ef

Update readme and doc from the 80b repo

- .gitattributes +2 -0

- README.md +8 -4

- assets/Figure_Evals_IDEFICS.png +3 -0

- assets/IDEFICS.png +3 -0

- assets/Idefics_colab.png +3 -0

- assets/guarding_baguettes.png +3 -0

.gitattributes

CHANGED

    @@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
     *.zip filter=lfs diff=lfs merge=lfs -text
     *.zst filter=lfs diff=lfs merge=lfs -text
     *tfevents* filter=lfs diff=lfs merge=lfs -text
    +assets/Figure_Evals_IDEFICS.png filter=lfs diff=lfs merge=lfs -text
    +*.png filter=lfs diff=lfs merge=lfs -text
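Review note: the two rules added to .gitattributes appear to overlap, since `*.png` should already cover `assets/Figure_Evals_IDEFICS.png`. The sketch below checks this for the four PNG files listed in this commit. It is only an approximation: it assumes that a slash-free pattern is tested against the file's basename and that Python's `fnmatch` wildcards are close enough to Git's own matcher for these simple patterns. The helper names `matches` and `covering_patterns` are hypothetical and not part of Git or of this repository.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Patterns added to .gitattributes by this commit.
ADDED_PATTERNS = ["assets/Figure_Evals_IDEFICS.png", "*.png"]

# Files added by this commit (taken from the file list).
ADDED_FILES = [
    "assets/Figure_Evals_IDEFICS.png",
    "assets/IDEFICS.png",
    "assets/Idefics_colab.png",
    "assets/guarding_baguettes.png",
]


def matches(pattern: str, path: str) -> bool:
    """Simplified gitattributes-style match (an assumption, not Git's matcher).

    Patterns containing a slash are compared against the full path;
    slash-free patterns are compared against the basename only.
    """
    if "/" in pattern:
        return fnmatchcase(path, pattern)
    return fnmatchcase(PurePosixPath(path).name, pattern)


def covering_patterns(path: str) -> list[str]:
    """Return every added pattern that matches the given path."""
    return [p for p in ADDED_PATTERNS if matches(p, path)]


if __name__ == "__main__":
    for f in ADDED_FILES:
        print(f, "->", covering_patterns(f))
```

If the assumption holds, every added PNG is matched by `*.png`, so the explicit `assets/Figure_Evals_IDEFICS.png` rule could likely be dropped without changing which files go through LFS.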

README.md

CHANGED

|

@@ -19,6 +19,8 @@ pipeline_tag: text-generation

|

|

| 19 |

|

| 20 |

# IDEFICS

|

| 21 |

|

|

|

|

|

|

|

| 22 |

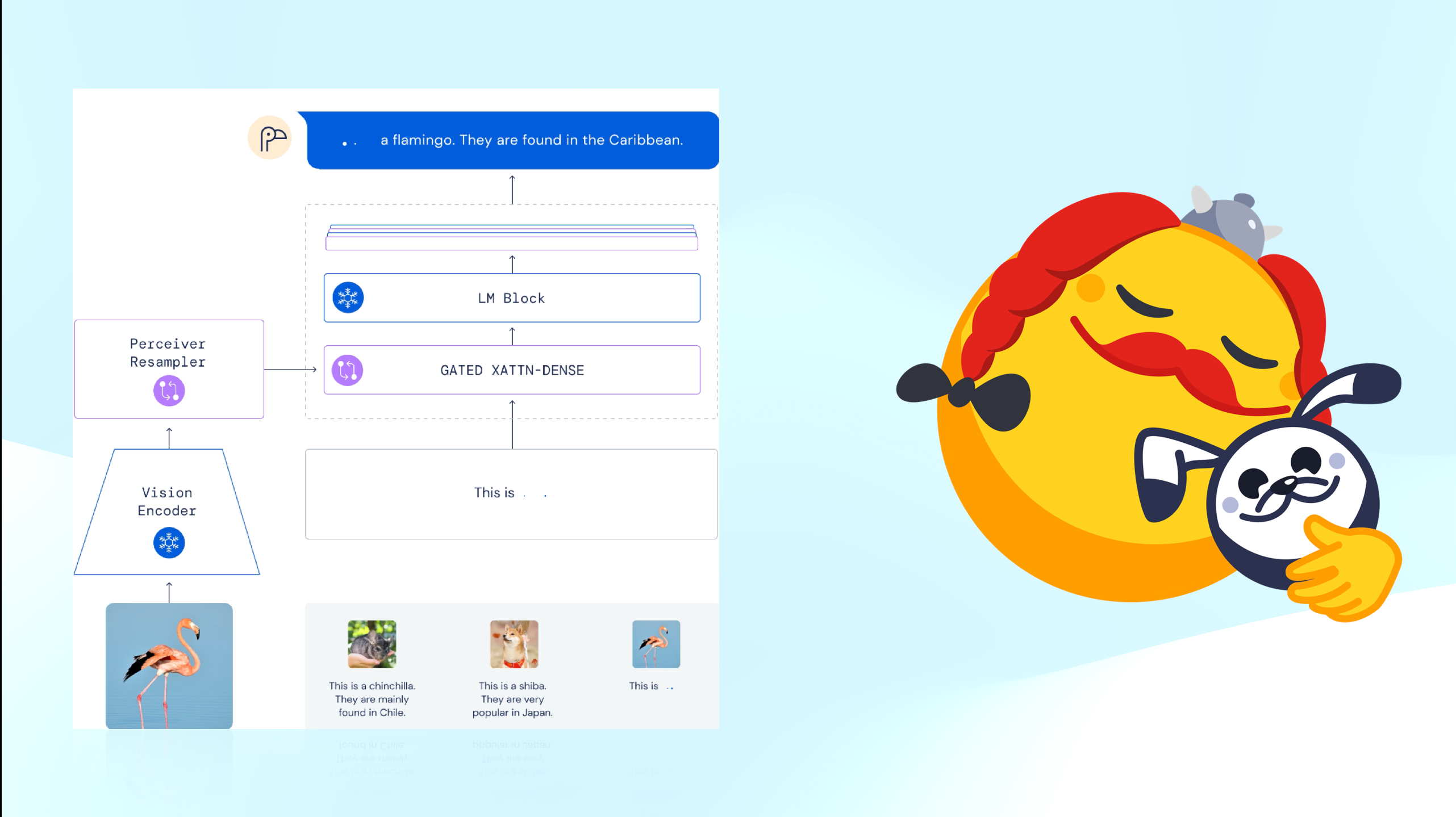

IDEFICS (**I**mage-aware **D**ecoder **E**nhanced à la **F**lamingo with **I**nterleaved **C**ross-attention**S**) is an open-access reproduction of [Flamingo](https://huggingface.co/papers/2204.14198), a closed-source visual language model developed by Deepmind. Like GPT-4, the multimodal model accepts arbitrary sequences of image and text inputs and produces text outputs. IDEFICS is built solely on public available data and models.

|

| 23 |

|

| 24 |

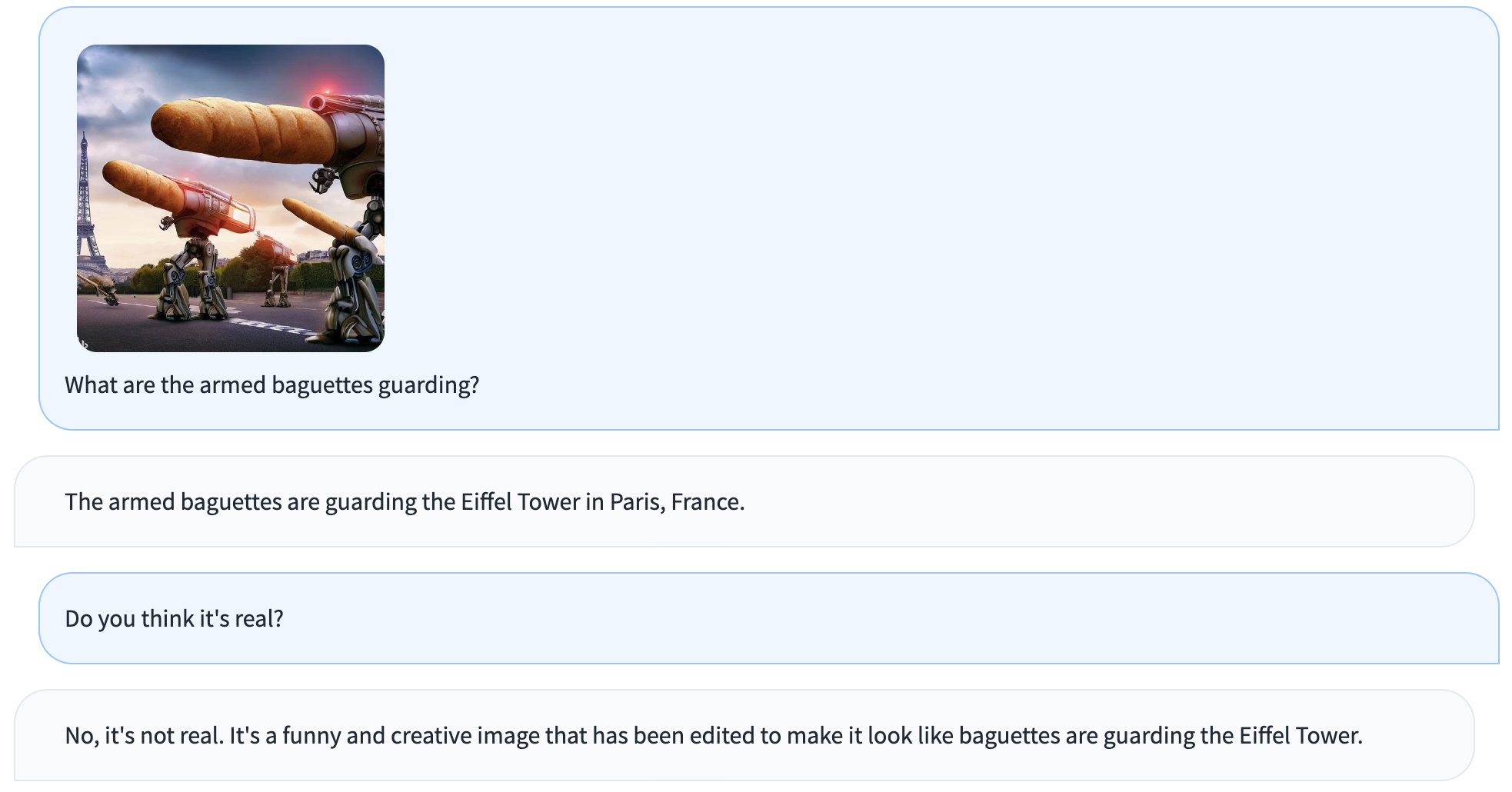

The model can answer questions about images, describe visual contents, create stories grounded on multiple images, or simply behave as a pure language model without visual inputs.

|

|

@@ -29,7 +31,7 @@ We also fine-tune these base models on a mixture of supervised and instruction f

|

|

| 29 |

|

| 30 |

Read more about some of the technical challenges we encountered during training IDEFICS [here](https://github.com/huggingface/m4-logs/blob/master/memos/README.md).

|

| 31 |

|

| 32 |

-

|

| 33 |

|

| 34 |

# Model Details

|

| 35 |

|

|

@@ -39,7 +41,7 @@ Read more about some of the technical challenges we encountered during training

|

|

| 39 |

- **License:** see [License section](#license)

|

| 40 |

- **Parent Model:** [laion/CLIP-ViT-H-14-laion2B-s32B-b79K](https://huggingface.co/laion/CLIP-ViT-H-14-laion2B-s32B-b79K) and [huggyllama/llama-65b](https://huggingface.co/huggyllama/llama-65b)

|

| 41 |

- **Resources for more information:**

|

| 42 |

-

- [GitHub Repo](https://github.com/huggingface/m4/)

|

| 43 |

- Description of [OBELICS](https://huggingface.co/datasets/HuggingFaceM4/OBELICS): [OBELICS: An Open Web-Scale Filtered Dataset of Interleaved Image-Text Documents

|

| 44 |

](https://huggingface.co/papers/2306.16527)

|

| 45 |

- Original Paper: [Flamingo: a Visual Language Model for Few-Shot Learning](https://huggingface.co/papers/2204.14198)

|

|

@@ -420,9 +422,11 @@ We release the additional weights we trained under an MIT license.

|

|

| 420 |

}

|

| 421 |

```

|

| 422 |

|

| 423 |

-

# Model Card Authors

|

|

|

|

|

|

|

| 424 |

|

| 425 |

-

Stas Bekman

|

| 426 |

|

| 427 |

# Model Card Contact

|

| 428 |

|

|

|

|

| 19 |

|

| 20 |

# IDEFICS

|

| 21 |

|

| 22 |

+

*How do I pronounce the name? [Youtube tutorial](https://www.youtube.com/watch?v=YKO0rWnPN2I&ab_channel=FrenchPronunciationGuide)*

|

| 23 |

+

|

| 24 |

IDEFICS (**I**mage-aware **D**ecoder **E**nhanced à la **F**lamingo with **I**nterleaved **C**ross-attention**S**) is an open-access reproduction of [Flamingo](https://huggingface.co/papers/2204.14198), a closed-source visual language model developed by Deepmind. Like GPT-4, the multimodal model accepts arbitrary sequences of image and text inputs and produces text outputs. IDEFICS is built solely on public available data and models.

|

| 25 |

|

| 26 |

The model can answer questions about images, describe visual contents, create stories grounded on multiple images, or simply behave as a pure language model without visual inputs.

|

|

|

|

| 31 |

|

| 32 |

Read more about some of the technical challenges we encountered during training IDEFICS [here](https://github.com/huggingface/m4-logs/blob/master/memos/README.md).

|

| 33 |

|

| 34 |

+

**Try out the [demo](https://huggingface.co/spaces/HuggingFaceM4/idefics_playground)!**

|

| 35 |

|

| 36 |

# Model Details

|

| 37 |

|

|

|

|

| 41 |

- **License:** see [License section](#license)

|

| 42 |

- **Parent Model:** [laion/CLIP-ViT-H-14-laion2B-s32B-b79K](https://huggingface.co/laion/CLIP-ViT-H-14-laion2B-s32B-b79K) and [huggyllama/llama-65b](https://huggingface.co/huggyllama/llama-65b)

|

| 43 |

- **Resources for more information:**

|

| 44 |

+

<!-- - [GitHub Repo](https://github.com/huggingface/m4/) -->

|

| 45 |

- Description of [OBELICS](https://huggingface.co/datasets/HuggingFaceM4/OBELICS): [OBELICS: An Open Web-Scale Filtered Dataset of Interleaved Image-Text Documents

|

| 46 |

](https://huggingface.co/papers/2306.16527)

|

| 47 |

- Original Paper: [Flamingo: a Visual Language Model for Few-Shot Learning](https://huggingface.co/papers/2204.14198)

|

|

|

|

| 422 |

}

|

| 423 |

```

|

| 424 |

|

| 425 |

+

# Model Builders, Card Authors, and contributors

|

| 426 |

+

|

| 427 |

+

The core team (*) was supported in many different ways by these contributors at Hugging Face:

|

| 428 |

|

| 429 |

+

Stas Bekman*, Léo Tronchon*, Hugo Laurençon*, Lucile Saulnier*, Amanpreet Singh*, Anton Lozhkov, Thomas Wang, Siddharth Karamcheti, Daniel Van Strien, Giada Pistilli, Yacine Jernite, Sasha Luccioni, Ezi Ozoani, Younes Belkada, Sylvain Gugger, Amy E. Roberts, Lysandre Debut, Arthur Zucker, Nicolas Patry, Lewis Tunstall, Zach Mueller, Sourab Mangrulkar, Chunte Lee, Yuvraj Sharma, Dawood Khan, Abubakar Abid, Ali Abid, Freddy Boulton, Omar Sanseviero, Carlos Muñoz Ferrandis, Guillaume Salou, Guillaume Legendre, Quentin Lhoest, Douwe Kiela, Alexander M. Rush, Matthieu Cord, Julien Chaumond, Thomas Wolf, Victor Sanh*

|

| 430 |

|

| 431 |

# Model Card Contact

|

| 432 |

|

assets/Figure_Evals_IDEFICS.png

ADDED (Git LFS)

assets/IDEFICS.png

ADDED (Git LFS)

assets/Idefics_colab.png

ADDED (Git LFS)

assets/guarding_baguettes.png

ADDED (Git LFS)