license: mit

SegGPT: Segmenting Everything In Context

Xinlong Wang1*, Xiaosong Zhang1*, Yue Cao1*, Wen Wang2, Chunhua Shen2, Tiejun Huang1,3

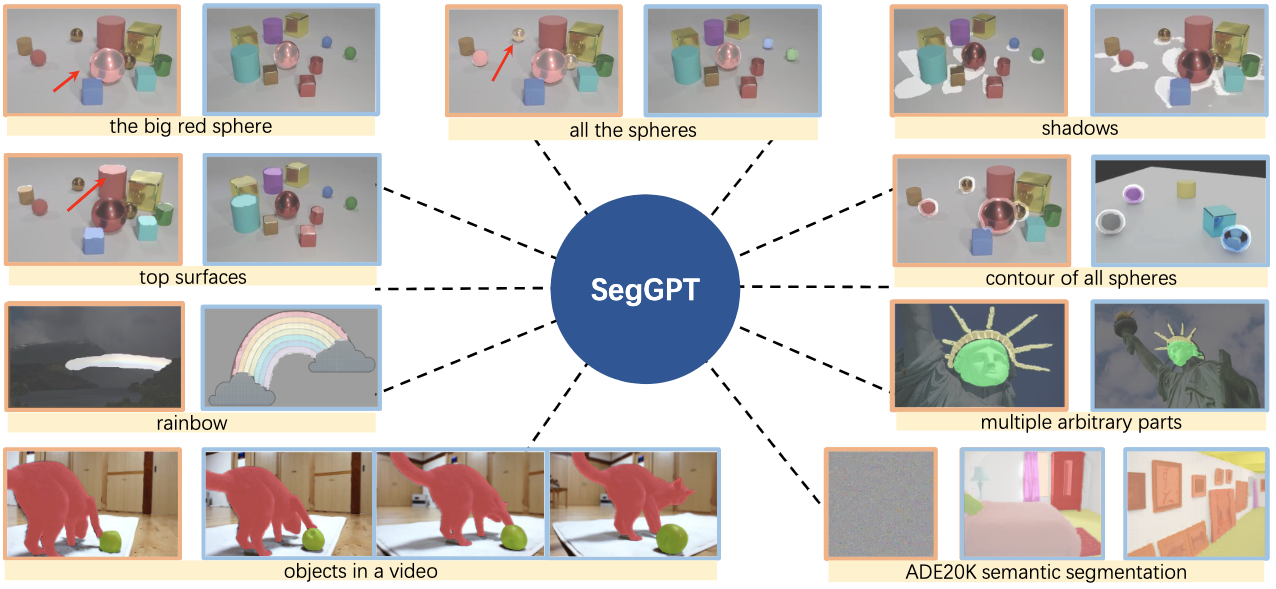

We present SegGPT, a generalist model for segmenting everything in context. With a single model, SegGPT can perform arbitrary segmentation tasks in images or videos via in-context inference, covering targets such as object instances, stuff, parts, contours, and text. SegGPT is evaluated on a broad range of tasks, including few-shot semantic segmentation, video object segmentation, semantic segmentation, and panoptic segmentation. Our results show strong capabilities in segmenting in-domain and out-of-domain targets, both qualitatively and quantitatively.

Model

A pre-trained SegGPT model is available at 🤗 HF link.

Citation

@article{SegGPT,
  title={SegGPT: Segmenting Everything In Context},
  author={Wang, Xinlong and Zhang, Xiaosong and Cao, Yue and Wang, Wen and Shen, Chunhua and Huang, Tiejun},
  journal={arXiv preprint arXiv:2304.03284},
  year={2023}
}

Contact

The BAAI Vision Team is hiring at all levels, including full-time researchers, engineers, and interns.

If you are interested in working with us on foundation models, visual perception, and multimodal learning, please contact Xinlong Wang (wangxinlong@baai.ac.cn) and Yue Cao (caoyue@baai.ac.cn).