Add information about SOP vs. NSP to README

README.md

CHANGED

@@ -16,11 +16,11 @@ This repository contains our pre-trained BERT-based model. It was initialised fr

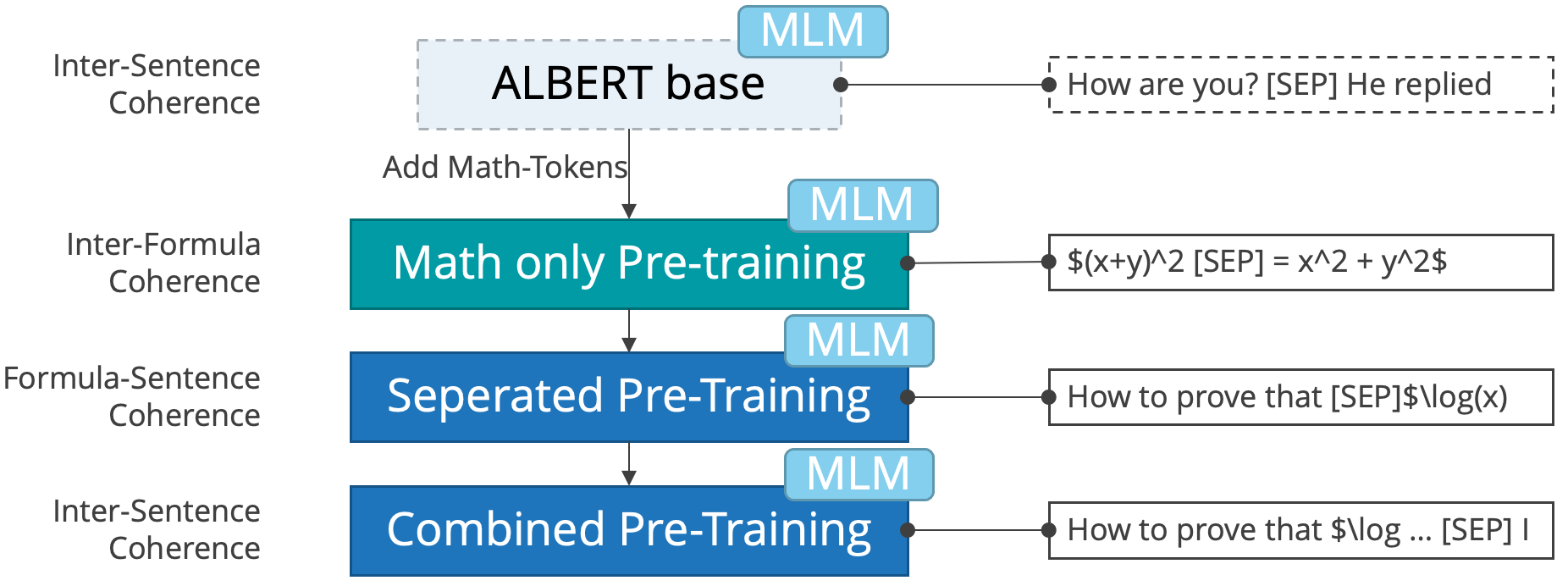

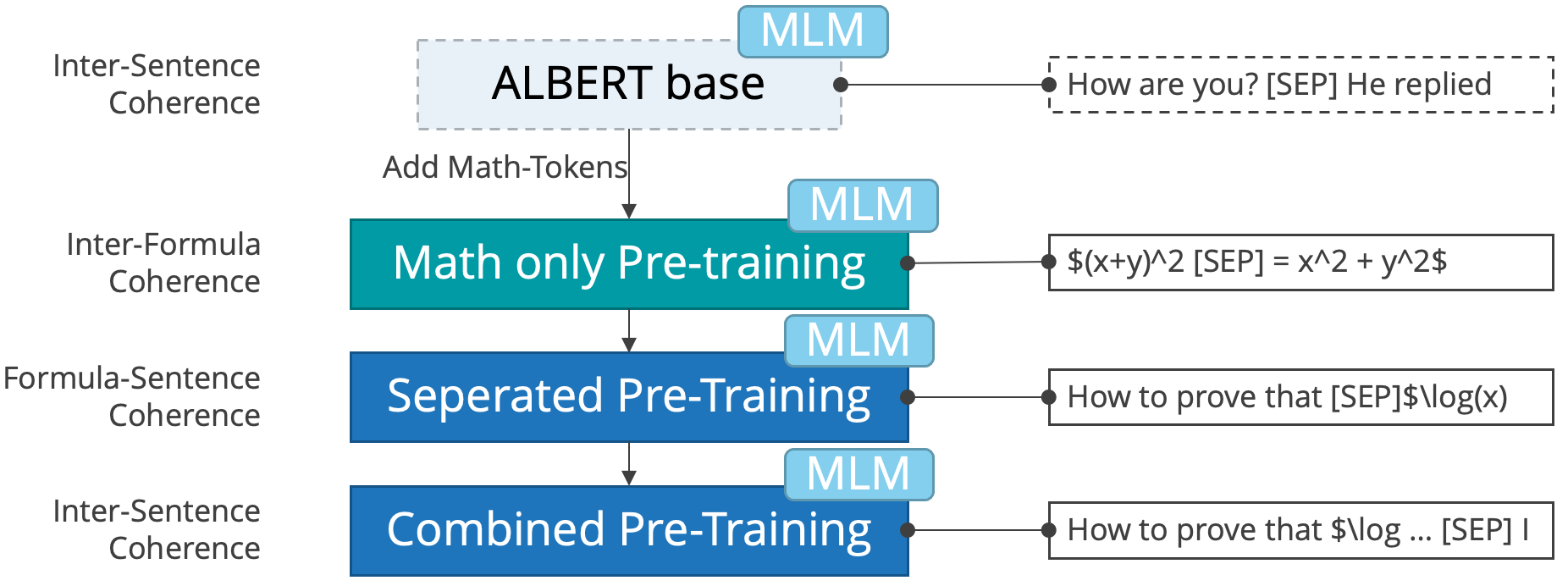

The model was initialised from BERT-base-cased weights and further pre-trained in three stages, each using different data for the sentence order prediction (SOP) task. During all three stages, the masked language modelling (MLM) task was trained simultaneously. In addition, we added around 500 LaTeX tokens to the tokenizer to better handle mathematical formulas.

-

The image illustrates the three pre-training stages: First, we train on mathematical formulas only. The

-

It is trained in exactly the same way as our ALBERT model which was our best-performing model in ARQMath 3 (2022). Details about our ALBERT Model can be found [here](https://huggingface.co/AnReu/

# Usage

The model was initialised from BERT-base-cased weights and further pre-trained in three stages, each using different data for the sentence order prediction (SOP) task. During all three stages, the masked language modelling (MLM) task was trained simultaneously. In addition, we added around 500 LaTeX tokens to the tokenizer to better handle mathematical formulas.
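
As a rough illustration of training MLM and SOP simultaneously, the sketch below builds a single input from an SOP segment pair and then masks it, so that both objectives are computed on the same example. The `[CLS]`/`[SEP]` layout and the 80/10/10 masking rule are standard BERT conventions assumed here; the README does not state the masking details, and `build_example` and its parameters are hypothetical names.

```python
import random
from typing import Dict, List, Optional

SPECIAL = {"[CLS]", "[SEP]"}
MASK = "[MASK]"


def build_example(
    seg_a: List[str],
    seg_b: List[str],
    sop_label: int,
    vocab: List[str],
    rng: random.Random,
    mask_prob: float = 0.15,
) -> Dict[str, object]:
    """Hypothetical helper: one input that carries both an SOP label and MLM targets.

    Assumes BERT-style masking: each non-special token is selected with
    probability `mask_prob`; a selected token becomes [MASK] 80% of the time,
    a random vocabulary token 10% of the time, and stays unchanged 10% of the time.
    """
    tokens = ["[CLS]", *seg_a, "[SEP]", *seg_b, "[SEP]"]
    masked = list(tokens)
    # None means "no MLM loss at this position".
    mlm_labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Special tokens are never masked; other tokens are skipped unless selected.
        if tok in SPECIAL or rng.random() >= mask_prob:
            continue
        mlm_labels[i] = tok  # the model has to recover the original token here
        r = rng.random()
        if r < 0.8:
            masked[i] = MASK
        elif r < 0.9:
            masked[i] = rng.choice(vocab)
        # else: keep the original token unchanged
    return {"tokens": masked, "mlm_labels": mlm_labels, "sop_label": sop_label}
```

In BERT-style pre-training, the total loss is the sum of the MLM loss over the labelled positions and the loss of the sentence-pair classifier; this sketch assumes the same combination, with `sop_label` as the classifier target.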

+

The image illustrates the three pre-training stages. First, we train on mathematical formulas only: the NSP classifier predicts which segment contains the left-hand side of the formula and which contains the right-hand side. This way, we model inter-formula coherence. The second stage models formula-sentence coherence, i.e., whether the formula or the natural-language part comes first in the original document. Finally, we add the inter-sentence coherence stage, which is the default for ALBERT/BERT. In this stage, sentences are split by a sentence separator. Note that in all three stages we do not pair sentences from different documents, as BERT's NSP task usually would; instead, we only swap two consecutive sequences (formulas, sentences, ...). This is the default behaviour of ALBERT's SOP task.
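
The pair construction described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' preprocessing code: splitting a formula at its first `=`, using `.` as the sentence separator, and the label convention (0 = original order, 1 = swapped) are all assumptions, and every function name is hypothetical. What it does show is the shared SOP mechanism: in every stage, both segments come from the same document and only their order may be swapped.

```python
import random
from typing import List, Optional, Tuple

# (segment_a, segment_b, label); label 0 = original order, 1 = swapped.
# This label convention is an assumption, not taken from the README.
SopPair = Tuple[str, str, int]


def sop_pair(first: str, second: str, rng: random.Random) -> SopPair:
    """Keep two consecutive segments in order, or swap them with probability 0.5.

    Unlike BERT's NSP, the second segment never comes from another document.
    """
    if rng.random() < 0.5:
        return second, first, 1
    return first, second, 0


def stage1_formula(formula: str, rng: random.Random) -> Optional[SopPair]:
    """Stage 1 (inter-formula coherence): left-hand side vs. right-hand side.

    Splitting at the first '=' is a simplifying assumption; formulas without
    '=' yield no pair.
    """
    if "=" not in formula:
        return None
    lhs, rhs = formula.split("=", 1)
    return sop_pair(lhs.strip(), rhs.strip(), rng)


def stage2_formula_text(first: str, second: str, rng: random.Random) -> SopPair:
    """Stage 2 (formula-sentence coherence): a formula and its adjacent text,
    passed in the order in which they appear in the document."""
    return sop_pair(first, second, rng)


def stage3_sentences(text: str, rng: random.Random, sep: str = ".") -> List[SopPair]:
    """Stage 3 (inter-sentence coherence): split at a sentence separator
    (assumed to be '.') and pair each sentence with the next one."""
    sentences = [s.strip() for s in text.split(sep) if s.strip()]
    return [sop_pair(a, b, rng) for a, b in zip(sentences, sentences[1:])]
```

For example, `stage1_formula("a^2 + b^2 = c^2", random.Random(0))` yields either `("a^2 + b^2", "c^2", 0)` or the swapped `("c^2", "a^2 + b^2", 1)`, depending on the random draw.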

+

It is trained in exactly the same way as our ALBERT model, which was our best-performing model in ARQMath 3 (2022). Details about our ALBERT model can be found [here](https://huggingface.co/AnReu/math_albert).

# Usage
