Upload 187 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +8 -0

- Framework.png +0 -0

- README.md +86 -3

- main/.gitignore +129 -0

- main/LICENSE +21 -0

- main/assets/example_action_names_humanact12.txt +2 -0

- main/assets/example_action_names_uestc.txt +7 -0

- main/assets/example_stick_fig.gif +0 -0

- main/assets/example_text_prompts.txt +8 -0

- main/assets/in_between_edit.gif +3 -0

- main/assets/upper_body_edit.gif +0 -0

- main/body_models/README.md +3 -0

- main/data_loaders/a2m/dataset.py +255 -0

- main/data_loaders/a2m/humanact12poses.py +57 -0

- main/data_loaders/a2m/uestc.py +226 -0

- main/data_loaders/get_data.py +52 -0

- main/data_loaders/humanml/README.md +1 -0

- main/data_loaders/humanml/common/quaternion.py +423 -0

- main/data_loaders/humanml/common/skeleton.py +199 -0

- main/data_loaders/humanml/data/__init__.py +0 -0

- main/data_loaders/humanml/data/dataset.py +783 -0

- main/data_loaders/humanml/motion_loaders/__init__.py +0 -0

- main/data_loaders/humanml/motion_loaders/comp_v6_model_dataset.py +262 -0

- main/data_loaders/humanml/motion_loaders/dataset_motion_loader.py +27 -0

- main/data_loaders/humanml/motion_loaders/model_motion_loaders.py +91 -0

- main/data_loaders/humanml/networks/__init__.py +0 -0

- main/data_loaders/humanml/networks/evaluator_wrapper.py +187 -0

- main/data_loaders/humanml/networks/modules.py +438 -0

- main/data_loaders/humanml/networks/trainers.py +1089 -0

- main/data_loaders/humanml/scripts/motion_process.py +529 -0

- main/data_loaders/humanml/utils/get_opt.py +81 -0

- main/data_loaders/humanml/utils/metrics.py +146 -0

- main/data_loaders/humanml/utils/paramUtil.py +63 -0

- main/data_loaders/humanml/utils/plot_script.py +132 -0

- main/data_loaders/humanml/utils/utils.py +168 -0

- main/data_loaders/humanml/utils/word_vectorizer.py +80 -0

- main/data_loaders/humanml_utils.py +54 -0

- main/data_loaders/tensors.py +70 -0

- main/dataset/README.md +6 -0

- main/dataset/humanml_opt.txt +54 -0

- main/dataset/kit_mean.npy +3 -0

- main/dataset/kit_opt.txt +54 -0

- main/dataset/kit_std.npy +3 -0

- main/dataset/t2m_mean.npy +3 -0

- main/dataset/t2m_std.npy +3 -0

- main/diffusion/fp16_util.py +236 -0

- main/diffusion/gaussian_diffusion.py +1613 -0

- main/diffusion/logger.py +495 -0

- main/diffusion/losses.py +77 -0

- main/diffusion/nn.py +197 -0

.gitattributes

CHANGED

|

@@ -32,3 +32,11 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

main/assets/in_between_edit.gif filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

main/mydiffusion_zeggs/0001-0933.mkv filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

main/mydiffusion_zeggs/0001-0933.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

main/mydiffusion_zeggs/015_Happy_4_x_1_0.wav filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

ubisoft-laforge-ZeroEGGS-main/ZEGGS/bvh2fbx/LaForgeFemale.fbx filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

ubisoft-laforge-ZeroEGGS-main/ZEGGS/bvh2fbx/Rendered/001_Neutral_0_x_0_9.bvh filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

ubisoft-laforge-ZeroEGGS-main/ZEGGS/bvh2fbx/Rendered/001_Neutral_0_x_0_9.fbx filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

ubisoft-laforge-ZeroEGGS-main/ZEGGS/bvh2fbx/Rendered/001_Neutral_0_x_0_9.wav filter=lfs diff=lfs merge=lfs -text

|

Framework.png

ADDED

|

README.md

CHANGED

|

@@ -1,3 +1,86 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

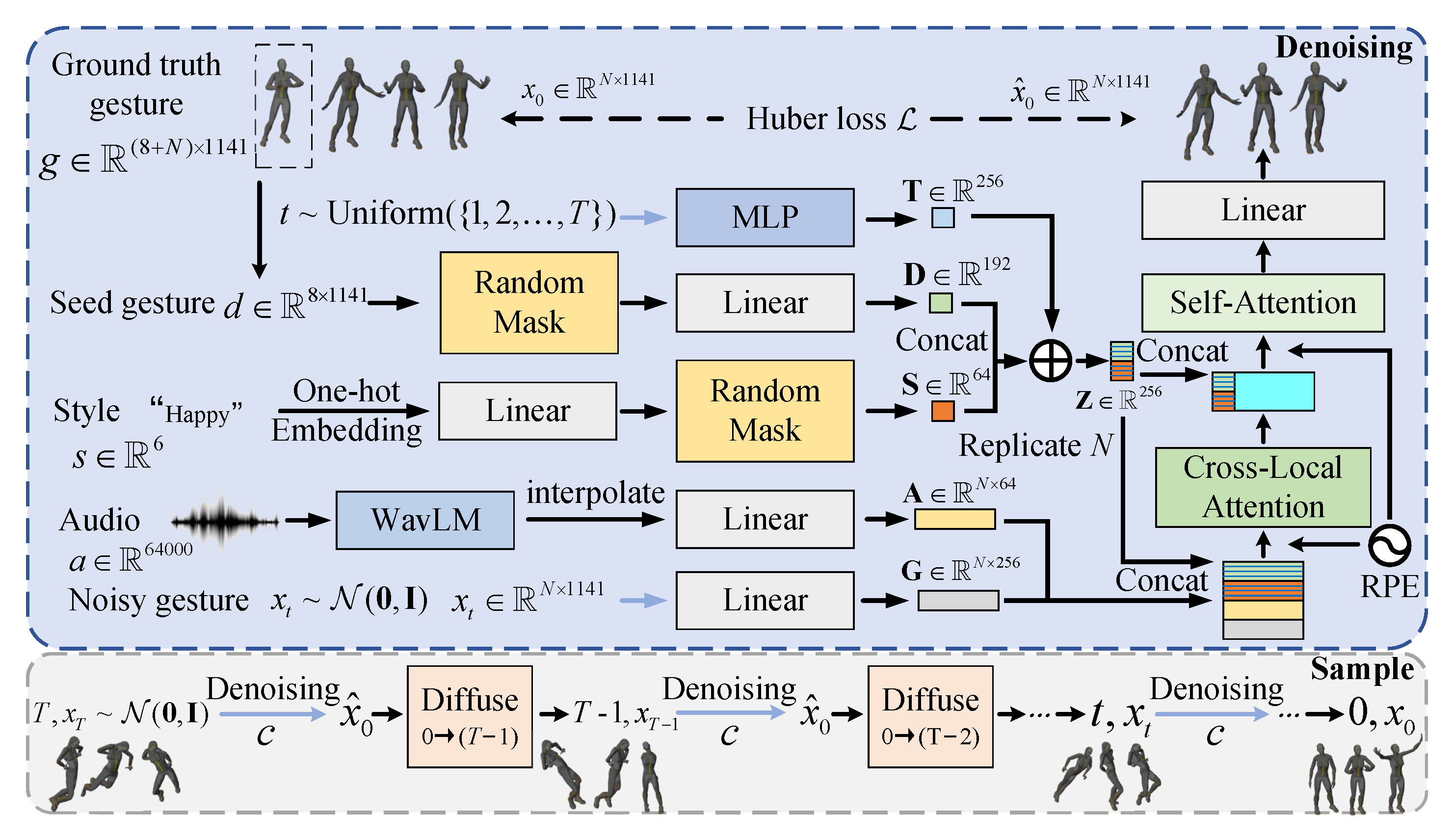

+

# DiffuseStyleGesture: Stylized Audio-Driven Co-Speech Gesture Generation with Diffusion Models

|

| 2 |

+

|

| 3 |

+

[](https://arxiv.org/abs/2305.04919)

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

<div align=center>

|

| 8 |

+

<img src="Framework.png" width="500px">

|

| 9 |

+

</div>

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

## News

|

| 13 |

+

|

| 14 |

+

📢 **9/May/23** - First release - arxiv, code and pre-trained models.

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

## 1. Getting started

|

| 18 |

+

|

| 19 |

+

This code was tested on `NVIDIA GeForce RTX 2080 Ti` and requires:

|

| 20 |

+

|

| 21 |

+

* conda3 or miniconda3

|

| 22 |

+

|

| 23 |

+

```

|

| 24 |

+

conda create -n DiffuseStyleGesture python=3.7

|

| 25 |

+

pip install -r requirements.txt

|

| 26 |

+

```

|

| 27 |

+

|

| 28 |

+

[//]: # (-i https://pypi.tuna.tsinghua.edu.cn/simple)

|

| 29 |

+

|

| 30 |

+

## 2. Quick Start

|

| 31 |

+

|

| 32 |

+

1. Download pre-trained model from [Tsinghua Cloud](https://cloud.tsinghua.edu.cn/f/8ade7c73e05c4549ac6b/) or [Google Cloud](https://drive.google.com/file/d/1RlusxWJFJMyauXdbfbI_XreJwVRnrBv_/view?usp=share_link)

|

| 33 |

+

and put it into `./main/mydiffusion_zeggs/`.

|

| 34 |

+

2. Download the [WavLM Large](https://github.com/microsoft/unilm/tree/master/wavlm) and put it into `./main/mydiffusion_zeggs/WavLM/`.

|

| 35 |

+

3. cd `./main/mydiffusion_zeggs/` and run

|

| 36 |

+

```python

|

| 37 |

+

python sample.py --config=./configs/DiffuseStyleGesture.yml --no_cuda 0 --gpu 0 --model_path './model000450000.pt' --audiowavlm_path "./015_Happy_4_x_1_0.wav" --max_len 320

|

| 38 |

+

```

|

| 39 |

+

You will get the `.bvh` file named `yyyymmdd_hhmmss_smoothing_SG_minibatch_320_[1, 0, 0, 0, 0, 0]_123456.bvh` in the `sample_dir` folder, which can then be visualized using [Blender](https://www.blender.org/).

|

| 40 |

+

|

| 41 |

+

## 3. Train your own model

|

| 42 |

+

|

| 43 |

+

### (1) Get ZEGGS dataset

|

| 44 |

+

|

| 45 |

+

Same as [ZEGGS](https://github.com/ubisoft/ubisoft-laforge-ZeroEGGS).

|

| 46 |

+

|

| 47 |

+

An example is as follows.

|

| 48 |

+

Download original ZEGGS datasets from [here](https://github.com/ubisoft/ubisoft-laforge-ZeroEGGS) and put it in `./ubisoft-laforge-ZeroEGGS-main/data/` folder.

|

| 49 |

+

Then `cd ./ubisoft-laforge-ZeroEGGS-main/ZEGGS` and run `python data_pipeline.py` to process the dataset.

|

| 50 |

+

You will get `./ubisoft-laforge-ZeroEGGS-main/data/processed_v1/trimmed/train/` and `./ubisoft-laforge-ZeroEGGS-main/data/processed_v1/trimmed/test/` folders.

|

| 51 |

+

|

| 52 |

+

If you find it difficult to obtain and process the data, you can download the data after it has been processed by ZEGGS from [Tsinghua Cloud](https://cloud.tsinghua.edu.cn/f/ba5f3b33d94b4cba875b/) or [Baidu Cloud](https://pan.baidu.com/s/1KakkGpRZWfaJzfN5gQvPAw?pwd=vfuc).

|

| 53 |

+

And put it in `./ubisoft-laforge-ZeroEGGS-main/data/processed_v1/trimmed/` folder.

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

### (2) Process ZEGGS dataset

|

| 57 |

+

|

| 58 |

+

```

|

| 59 |

+

cd ./main/mydiffusion_zeggs/

|

| 60 |

+

python zeggs_data_to_lmdb.py

|

| 61 |

+

```

|

| 62 |

+

|

| 63 |

+

### (3) Train

|

| 64 |

+

|

| 65 |

+

```

|

| 66 |

+

python end2end.py --config=./configs/DiffuseStyleGesture.yml --no_cuda 0 --gpu 0

|

| 67 |

+

```

|

| 68 |

+

The model will save in `./main/mydiffusion_zeggs/zeggs_mymodel3_wavlm/` folder.

|

| 69 |

+

|

| 70 |

+

## Reference

|

| 71 |

+

Our work mainly inspired by: [MDM](https://github.com/GuyTevet/motion-diffusion-model), [Text2Gesture](https://github.com/youngwoo-yoon/Co-Speech_Gesture_Generation), [Listen, denoise, action!](https://arxiv.org/abs/2211.09707)

|

| 72 |

+

|

| 73 |

+

## Citation

|

| 74 |

+

If you find this code useful in your research, please cite:

|

| 75 |

+

|

| 76 |

+

```

|

| 77 |

+

@inproceedings{yang2023DiffuseStyleGesture,

|

| 78 |

+

author = {Sicheng Yang and Zhiyong Wu and Minglei Li and Zhensong Zhang and Lei Hao and Weihong Bao and Ming Cheng and Long Xiao},

|

| 79 |

+

title = {DiffuseStyleGesture: Stylized Audio-Driven Co-Speech Gesture Generation with Diffusion Models},

|

| 80 |

+

booktitle = {Proceedings of the 32nd International Joint Conference on Artificial Intelligence, {IJCAI} 2023},

|

| 81 |

+

publisher = {ijcai.org},

|

| 82 |

+

year = {2023},

|

| 83 |

+

}

|

| 84 |

+

```

|

| 85 |

+

|

| 86 |

+

Please feel free to contact us ([yangsc21@mails.tsinghua.edu.cn](yangsc21@mails.tsinghua.edu.cn)) with any question or concerns.

|

main/.gitignore

ADDED

|

@@ -0,0 +1,129 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Byte-compiled / optimized / DLL files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[cod]

|

| 4 |

+

*$py.class

|

| 5 |

+

|

| 6 |

+

# C extensions

|

| 7 |

+

*.so

|

| 8 |

+

|

| 9 |

+

# Distribution / packaging

|

| 10 |

+

.Python

|

| 11 |

+

build/

|

| 12 |

+

develop-eggs/

|

| 13 |

+

dist/

|

| 14 |

+

downloads/

|

| 15 |

+

eggs/

|

| 16 |

+

.eggs/

|

| 17 |

+

lib/

|

| 18 |

+

lib64/

|

| 19 |

+

parts/

|

| 20 |

+

sdist/

|

| 21 |

+

var/

|

| 22 |

+

wheels/

|

| 23 |

+

pip-wheel-metadata/

|

| 24 |

+

share/python-wheels/

|

| 25 |

+

*.egg-info/

|

| 26 |

+

.installed.cfg

|

| 27 |

+

*.egg

|

| 28 |

+

MANIFEST

|

| 29 |

+

|

| 30 |

+

# PyInstaller

|

| 31 |

+

# Usually these files are written by a python script from a template

|

| 32 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 33 |

+

*.manifest

|

| 34 |

+

*.spec

|

| 35 |

+

|

| 36 |

+

# Installer logs

|

| 37 |

+

pip-log.txt

|

| 38 |

+

pip-delete-this-directory.txt

|

| 39 |

+

|

| 40 |

+

# Unit test / coverage reports

|

| 41 |

+

htmlcov/

|

| 42 |

+

.tox/

|

| 43 |

+

.nox/

|

| 44 |

+

.coverage

|

| 45 |

+

.coverage.*

|

| 46 |

+

.cache

|

| 47 |

+

nosetests.xml

|

| 48 |

+

coverage.xml

|

| 49 |

+

*.cover

|

| 50 |

+

*.py,cover

|

| 51 |

+

.hypothesis/

|

| 52 |

+

.pytest_cache/

|

| 53 |

+

|

| 54 |

+

# Translations

|

| 55 |

+

*.mo

|

| 56 |

+

*.pot

|

| 57 |

+

|

| 58 |

+

# Django stuff:

|

| 59 |

+

*.log

|

| 60 |

+

local_settings.py

|

| 61 |

+

db.sqlite3

|

| 62 |

+

db.sqlite3-journal

|

| 63 |

+

|

| 64 |

+

# Flask stuff:

|

| 65 |

+

instance/

|

| 66 |

+

.webassets-cache

|

| 67 |

+

|

| 68 |

+

# Scrapy stuff:

|

| 69 |

+

.scrapy

|

| 70 |

+

|

| 71 |

+

# Sphinx documentation

|

| 72 |

+

docs/_build/

|

| 73 |

+

|

| 74 |

+

# PyBuilder

|

| 75 |

+

target/

|

| 76 |

+

|

| 77 |

+

# Jupyter Notebook

|

| 78 |

+

.ipynb_checkpoints

|

| 79 |

+

|

| 80 |

+

# IPython

|

| 81 |

+

profile_default/

|

| 82 |

+

ipython_config.py

|

| 83 |

+

|

| 84 |

+

# pyenv

|

| 85 |

+

.python-version

|

| 86 |

+

|

| 87 |

+

# pipenv

|

| 88 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 89 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 90 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 91 |

+

# install all needed dependencies.

|

| 92 |

+

#Pipfile.lock

|

| 93 |

+

|

| 94 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow

|

| 95 |

+

__pypackages__/

|

| 96 |

+

|

| 97 |

+

# Celery stuff

|

| 98 |

+

celerybeat-schedule

|

| 99 |

+

celerybeat.pid

|

| 100 |

+

|

| 101 |

+

# SageMath parsed files

|

| 102 |

+

*.sage.py

|

| 103 |

+

|

| 104 |

+

# Environments

|

| 105 |

+

.env

|

| 106 |

+

.venv

|

| 107 |

+

env/

|

| 108 |

+

venv/

|

| 109 |

+

ENV/

|

| 110 |

+

env.bak/

|

| 111 |

+

venv.bak/

|

| 112 |

+

|

| 113 |

+

# Spyder project settings

|

| 114 |

+

.spyderproject

|

| 115 |

+

.spyproject

|

| 116 |

+

|

| 117 |

+

# Rope project settings

|

| 118 |

+

.ropeproject

|

| 119 |

+

|

| 120 |

+

# mkdocs documentation

|

| 121 |

+

/site

|

| 122 |

+

|

| 123 |

+

# mypy

|

| 124 |

+

.mypy_cache/

|

| 125 |

+

.dmypy.json

|

| 126 |

+

dmypy.json

|

| 127 |

+

|

| 128 |

+

# Pyre type checker

|

| 129 |

+

.pyre/

|

main/LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2022 Guy Tevet

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

main/assets/example_action_names_humanact12.txt

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

drink

|

| 2 |

+

lift_dumbbell

|

main/assets/example_action_names_uestc.txt

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

jumping-jack

|

| 2 |

+

left-lunging

|

| 3 |

+

left-stretching

|

| 4 |

+

raising-hand-and-jumping

|

| 5 |

+

rotation-clapping

|

| 6 |

+

front-raising

|

| 7 |

+

pulling-chest-expanders

|

main/assets/example_stick_fig.gif

ADDED

|

main/assets/example_text_prompts.txt

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

person got down and is crawling across the floor.

|

| 2 |

+

a person walks forward with wide steps.

|

| 3 |

+

a person drops their hands then brings them together in front of their face clasped.

|

| 4 |

+

a person lifts their right arm and slaps something, then repeats the motion again.

|

| 5 |

+

a person walks forward and stops.

|

| 6 |

+

a person marches forward, turns around, and then marches back.

|

| 7 |

+

a person is stretching their arms.

|

| 8 |

+

person is making attention gesture

|

main/assets/in_between_edit.gif

ADDED

|

Git LFS Details

|

main/assets/upper_body_edit.gif

ADDED

|

main/body_models/README.md

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## Body models

|

| 2 |

+

|

| 3 |

+

Put SMPL models here (full instractions in the main README)

|

main/data_loaders/a2m/dataset.py

ADDED

|

@@ -0,0 +1,255 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import random

|

| 2 |

+

|

| 3 |

+

import numpy as np

|

| 4 |

+

import torch

|

| 5 |

+

# from utils.action_label_to_idx import action_label_to_idx

|

| 6 |

+

from data_loaders.tensors import collate

|

| 7 |

+

from utils.misc import to_torch

|

| 8 |

+

import utils.rotation_conversions as geometry

|

| 9 |

+

|

| 10 |

+

class Dataset(torch.utils.data.Dataset):

|

| 11 |

+

def __init__(self, num_frames=1, sampling="conseq", sampling_step=1, split="train",

|

| 12 |

+

pose_rep="rot6d", translation=True, glob=True, max_len=-1, min_len=-1, num_seq_max=-1, **kwargs):

|

| 13 |

+

self.num_frames = num_frames

|

| 14 |

+

self.sampling = sampling

|

| 15 |

+

self.sampling_step = sampling_step

|

| 16 |

+

self.split = split

|

| 17 |

+

self.pose_rep = pose_rep

|

| 18 |

+

self.translation = translation

|

| 19 |

+

self.glob = glob

|

| 20 |

+

self.max_len = max_len

|

| 21 |

+

self.min_len = min_len

|

| 22 |

+

self.num_seq_max = num_seq_max

|

| 23 |

+

|

| 24 |

+

self.align_pose_frontview = kwargs.get('align_pose_frontview', False)

|

| 25 |

+

self.use_action_cat_as_text_labels = kwargs.get('use_action_cat_as_text_labels', False)

|

| 26 |

+

self.only_60_classes = kwargs.get('only_60_classes', False)

|

| 27 |

+

self.leave_out_15_classes = kwargs.get('leave_out_15_classes', False)

|

| 28 |

+

self.use_only_15_classes = kwargs.get('use_only_15_classes', False)

|

| 29 |

+

|

| 30 |

+

if self.split not in ["train", "val", "test"]:

|

| 31 |

+

raise ValueError(f"{self.split} is not a valid split")

|

| 32 |

+

|

| 33 |

+

super().__init__()

|

| 34 |

+

|

| 35 |

+

# to remove shuffling

|

| 36 |

+

self._original_train = None

|

| 37 |

+

self._original_test = None

|

| 38 |

+

|

| 39 |

+

def action_to_label(self, action):

|

| 40 |

+

return self._action_to_label[action]

|

| 41 |

+

|

| 42 |

+

def label_to_action(self, label):

|

| 43 |

+

import numbers

|

| 44 |

+

if isinstance(label, numbers.Integral):

|

| 45 |

+

return self._label_to_action[label]

|

| 46 |

+

else: # if it is one hot vector

|

| 47 |

+

label = np.argmax(label)

|

| 48 |

+

return self._label_to_action[label]

|

| 49 |

+

|

| 50 |

+

def get_pose_data(self, data_index, frame_ix):

|

| 51 |

+

pose = self._load(data_index, frame_ix)

|

| 52 |

+

label = self.get_label(data_index)

|

| 53 |

+

return pose, label

|

| 54 |

+

|

| 55 |

+

def get_label(self, ind):

|

| 56 |

+

action = self.get_action(ind)

|

| 57 |

+

return self.action_to_label(action)

|

| 58 |

+

|

| 59 |

+

def get_action(self, ind):

|

| 60 |

+

return self._actions[ind]

|

| 61 |

+

|

| 62 |

+

def action_to_action_name(self, action):

|

| 63 |

+

return self._action_classes[action]

|

| 64 |

+

|

| 65 |

+

def action_name_to_action(self, action_name):

|

| 66 |

+

# self._action_classes is either a list or a dictionary. If it's a dictionary, we 1st convert it to a list

|

| 67 |

+

all_action_names = self._action_classes

|

| 68 |

+

if isinstance(all_action_names, dict):

|

| 69 |

+

all_action_names = list(all_action_names.values())

|

| 70 |

+

assert list(self._action_classes.keys()) == list(range(len(all_action_names))) # the keys should be ordered from 0 to num_actions

|

| 71 |

+

|

| 72 |

+

sorter = np.argsort(all_action_names)

|

| 73 |

+

actions = sorter[np.searchsorted(all_action_names, action_name, sorter=sorter)]

|

| 74 |

+

return actions

|

| 75 |

+

|

| 76 |

+

def __getitem__(self, index):

|

| 77 |

+

if self.split == 'train':

|

| 78 |

+

data_index = self._train[index]

|

| 79 |

+

else:

|

| 80 |

+

data_index = self._test[index]

|

| 81 |

+

|

| 82 |

+

# inp, target = self._get_item_data_index(data_index)

|

| 83 |

+

# return inp, target

|

| 84 |

+

return self._get_item_data_index(data_index)

|

| 85 |

+

|

| 86 |

+

def _load(self, ind, frame_ix):

|

| 87 |

+

pose_rep = self.pose_rep

|

| 88 |

+

if pose_rep == "xyz" or self.translation:

|

| 89 |

+

if getattr(self, "_load_joints3D", None) is not None:

|

| 90 |

+

# Locate the root joint of initial pose at origin

|

| 91 |

+

joints3D = self._load_joints3D(ind, frame_ix)

|

| 92 |

+

joints3D = joints3D - joints3D[0, 0, :]

|

| 93 |

+

ret = to_torch(joints3D)

|

| 94 |

+

if self.translation:

|

| 95 |

+

ret_tr = ret[:, 0, :]

|

| 96 |

+

else:

|

| 97 |

+

if pose_rep == "xyz":

|

| 98 |

+

raise ValueError("This representation is not possible.")

|

| 99 |

+

if getattr(self, "_load_translation") is None:

|

| 100 |

+

raise ValueError("Can't extract translations.")

|

| 101 |

+

ret_tr = self._load_translation(ind, frame_ix)

|

| 102 |

+

ret_tr = to_torch(ret_tr - ret_tr[0])

|

| 103 |

+

|

| 104 |

+

if pose_rep != "xyz":

|

| 105 |

+

if getattr(self, "_load_rotvec", None) is None:

|

| 106 |

+

raise ValueError("This representation is not possible.")

|

| 107 |

+

else:

|

| 108 |

+

pose = self._load_rotvec(ind, frame_ix)

|

| 109 |

+

if not self.glob:

|

| 110 |

+

pose = pose[:, 1:, :]

|

| 111 |

+

pose = to_torch(pose)

|

| 112 |

+

if self.align_pose_frontview:

|

| 113 |

+

first_frame_root_pose_matrix = geometry.axis_angle_to_matrix(pose[0][0])

|

| 114 |

+

all_root_poses_matrix = geometry.axis_angle_to_matrix(pose[:, 0, :])

|

| 115 |

+

aligned_root_poses_matrix = torch.matmul(torch.transpose(first_frame_root_pose_matrix, 0, 1),

|

| 116 |

+

all_root_poses_matrix)

|

| 117 |

+

pose[:, 0, :] = geometry.matrix_to_axis_angle(aligned_root_poses_matrix)

|

| 118 |

+

|

| 119 |

+

if self.translation:

|

| 120 |

+

ret_tr = torch.matmul(torch.transpose(first_frame_root_pose_matrix, 0, 1).float(),

|

| 121 |

+

torch.transpose(ret_tr, 0, 1))

|

| 122 |

+

ret_tr = torch.transpose(ret_tr, 0, 1)

|

| 123 |

+

|

| 124 |

+

if pose_rep == "rotvec":

|

| 125 |

+

ret = pose

|

| 126 |

+

elif pose_rep == "rotmat":

|

| 127 |

+

ret = geometry.axis_angle_to_matrix(pose).view(*pose.shape[:2], 9)

|

| 128 |

+

elif pose_rep == "rotquat":

|

| 129 |

+

ret = geometry.axis_angle_to_quaternion(pose)

|

| 130 |

+

elif pose_rep == "rot6d":

|

| 131 |

+

ret = geometry.matrix_to_rotation_6d(geometry.axis_angle_to_matrix(pose))

|

| 132 |

+

if pose_rep != "xyz" and self.translation:

|

| 133 |

+

padded_tr = torch.zeros((ret.shape[0], ret.shape[2]), dtype=ret.dtype)

|

| 134 |

+

padded_tr[:, :3] = ret_tr

|

| 135 |

+

ret = torch.cat((ret, padded_tr[:, None]), 1)

|

| 136 |

+

ret = ret.permute(1, 2, 0).contiguous()

|

| 137 |

+

return ret.float()

|

| 138 |

+

|

| 139 |

+

def _get_item_data_index(self, data_index):

|

| 140 |

+

nframes = self._num_frames_in_video[data_index]

|

| 141 |

+

|

| 142 |

+

if self.num_frames == -1 and (self.max_len == -1 or nframes <= self.max_len):

|

| 143 |

+

frame_ix = np.arange(nframes)

|

| 144 |

+

else:

|

| 145 |

+

if self.num_frames == -2:

|

| 146 |

+

if self.min_len <= 0:

|

| 147 |

+

raise ValueError("You should put a min_len > 0 for num_frames == -2 mode")

|

| 148 |

+

if self.max_len != -1:

|

| 149 |

+

max_frame = min(nframes, self.max_len)

|

| 150 |

+

else:

|

| 151 |

+

max_frame = nframes

|

| 152 |

+

|

| 153 |

+

num_frames = random.randint(self.min_len, max(max_frame, self.min_len))

|

| 154 |

+

else:

|

| 155 |

+

num_frames = self.num_frames if self.num_frames != -1 else self.max_len

|

| 156 |

+

|

| 157 |

+

if num_frames > nframes:

|

| 158 |

+

fair = False # True

|

| 159 |

+

if fair:

|

| 160 |

+

# distills redundancy everywhere

|

| 161 |

+

choices = np.random.choice(range(nframes),

|

| 162 |

+

num_frames,

|

| 163 |

+

replace=True)

|

| 164 |

+

frame_ix = sorted(choices)

|

| 165 |

+

else:

|

| 166 |

+

# adding the last frame until done

|

| 167 |

+

ntoadd = max(0, num_frames - nframes)

|

| 168 |

+

lastframe = nframes - 1

|

| 169 |

+

padding = lastframe * np.ones(ntoadd, dtype=int)

|

| 170 |

+

frame_ix = np.concatenate((np.arange(0, nframes),

|

| 171 |

+

padding))

|

| 172 |

+

|

| 173 |

+

elif self.sampling in ["conseq", "random_conseq"]:

|

| 174 |

+

step_max = (nframes - 1) // (num_frames - 1)

|

| 175 |

+

if self.sampling == "conseq":

|

| 176 |

+

if self.sampling_step == -1 or self.sampling_step * (num_frames - 1) >= nframes:

|

| 177 |

+

step = step_max

|

| 178 |

+

else:

|

| 179 |

+

step = self.sampling_step

|

| 180 |

+

elif self.sampling == "random_conseq":

|

| 181 |

+

step = random.randint(1, step_max)

|

| 182 |

+

|

| 183 |

+

lastone = step * (num_frames - 1)

|

| 184 |

+

shift_max = nframes - lastone - 1

|

| 185 |

+

shift = random.randint(0, max(0, shift_max - 1))

|

| 186 |

+

frame_ix = shift + np.arange(0, lastone + 1, step)

|

| 187 |

+

|

| 188 |

+

elif self.sampling == "random":

|

| 189 |

+

choices = np.random.choice(range(nframes),

|

| 190 |

+

num_frames,

|

| 191 |

+

replace=False)

|

| 192 |

+

frame_ix = sorted(choices)

|

| 193 |

+

|

| 194 |

+

else:

|

| 195 |

+

raise ValueError("Sampling not recognized.")

|

| 196 |

+

|

| 197 |

+

inp, action = self.get_pose_data(data_index, frame_ix)

|

| 198 |

+

|

| 199 |

+

|

| 200 |

+

output = {'inp': inp, 'action': action}

|

| 201 |

+

|

| 202 |

+

if hasattr(self, '_actions') and hasattr(self, '_action_classes'):

|

| 203 |

+

output['action_text'] = self.action_to_action_name(self.get_action(data_index))

|

| 204 |

+

|

| 205 |

+

return output

|

| 206 |

+

|

| 207 |

+

|

| 208 |

+

def get_mean_length_label(self, label):

|

| 209 |

+

if self.num_frames != -1:

|

| 210 |

+

return self.num_frames

|

| 211 |

+

|

| 212 |

+

if self.split == 'train':

|

| 213 |

+

index = self._train

|

| 214 |

+

else:

|

| 215 |

+

index = self._test

|

| 216 |

+

|

| 217 |

+

action = self.label_to_action(label)

|

| 218 |

+

choices = np.argwhere(self._actions[index] == action).squeeze(1)

|

| 219 |

+

lengths = self._num_frames_in_video[np.array(index)[choices]]

|

| 220 |

+

|

| 221 |

+

if self.max_len == -1:

|

| 222 |

+

return np.mean(lengths)

|

| 223 |

+

else:

|

| 224 |

+

# make the lengths less than max_len

|

| 225 |

+

lengths[lengths > self.max_len] = self.max_len

|

| 226 |

+

return np.mean(lengths)

|

| 227 |

+

|

| 228 |

+

def __len__(self):

|

| 229 |

+

num_seq_max = getattr(self, "num_seq_max", -1)

|

| 230 |

+

if num_seq_max == -1:

|

| 231 |

+

from math import inf

|

| 232 |

+

num_seq_max = inf

|

| 233 |

+

|

| 234 |

+

if self.split == 'train':

|

| 235 |

+

return min(len(self._train), num_seq_max)

|

| 236 |

+

else:

|

| 237 |

+

return min(len(self._test), num_seq_max)

|

| 238 |

+

|

| 239 |

+

def shuffle(self):

|

| 240 |

+

if self.split == 'train':

|

| 241 |

+

random.shuffle(self._train)

|

| 242 |

+

else:

|

| 243 |

+

random.shuffle(self._test)

|

| 244 |

+

|

| 245 |

+

def reset_shuffle(self):

|

| 246 |

+

if self.split == 'train':

|

| 247 |

+

if self._original_train is None:

|

| 248 |

+

self._original_train = self._train

|

| 249 |

+

else:

|

| 250 |

+

self._train = self._original_train

|

| 251 |

+

else:

|

| 252 |

+

if self._original_test is None:

|

| 253 |

+

self._original_test = self._test

|

| 254 |

+

else:

|

| 255 |

+

self._test = self._original_test

|

main/data_loaders/a2m/humanact12poses.py

ADDED

|

@@ -0,0 +1,57 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import pickle as pkl

|

| 2 |

+

import numpy as np

|

| 3 |

+

import os

|

| 4 |

+

from .dataset import Dataset

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

class HumanAct12Poses(Dataset):

|

| 8 |

+

dataname = "humanact12"

|

| 9 |

+

|

| 10 |

+

def __init__(self, datapath="dataset/HumanAct12Poses", split="train", **kargs):

|

| 11 |

+

self.datapath = datapath

|

| 12 |

+

|

| 13 |

+

super().__init__(**kargs)

|

| 14 |

+

|

| 15 |

+

pkldatafilepath = os.path.join(datapath, "humanact12poses.pkl")

|

| 16 |

+

data = pkl.load(open(pkldatafilepath, "rb"))

|

| 17 |

+

|

| 18 |

+

self._pose = [x for x in data["poses"]]

|

| 19 |

+

self._num_frames_in_video = [p.shape[0] for p in self._pose]

|

| 20 |

+

self._joints = [x for x in data["joints3D"]]

|

| 21 |

+

|

| 22 |

+

self._actions = [x for x in data["y"]]

|

| 23 |

+

|

| 24 |

+

total_num_actions = 12

|

| 25 |

+

self.num_actions = total_num_actions

|

| 26 |

+

|

| 27 |

+

self._train = list(range(len(self._pose)))

|

| 28 |

+

|

| 29 |

+

keep_actions = np.arange(0, total_num_actions)

|

| 30 |

+

|

| 31 |

+

self._action_to_label = {x: i for i, x in enumerate(keep_actions)}

|

| 32 |

+

self._label_to_action = {i: x for i, x in enumerate(keep_actions)}

|

| 33 |

+

|

| 34 |

+

self._action_classes = humanact12_coarse_action_enumerator

|

| 35 |

+

|

| 36 |

+

def _load_joints3D(self, ind, frame_ix):

|

| 37 |

+

return self._joints[ind][frame_ix]

|

| 38 |

+

|

| 39 |

+

def _load_rotvec(self, ind, frame_ix):

|

| 40 |

+

pose = self._pose[ind][frame_ix].reshape(-1, 24, 3)

|

| 41 |

+

return pose

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

humanact12_coarse_action_enumerator = {

|

| 45 |

+

0: "warm_up",

|

| 46 |

+

1: "walk",

|

| 47 |

+

2: "run",

|

| 48 |

+

3: "jump",

|

| 49 |

+

4: "drink",

|

| 50 |

+

5: "lift_dumbbell",

|

| 51 |

+

6: "sit",

|

| 52 |

+

7: "eat",

|

| 53 |

+

8: "turn steering wheel",

|

| 54 |

+

9: "phone",

|

| 55 |

+

10: "boxing",

|

| 56 |

+

11: "throw",

|

| 57 |

+

}

|

main/data_loaders/a2m/uestc.py

ADDED

|

@@ -0,0 +1,226 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

from tqdm import tqdm

|

| 3 |

+

import numpy as np

|

| 4 |

+

import pickle as pkl

|

| 5 |

+

import utils.rotation_conversions as geometry

|

| 6 |

+

import torch

|

| 7 |

+

|

| 8 |

+

from .dataset import Dataset

|

| 9 |

+

# from torch.utils.data import Dataset

|

| 10 |

+

|

| 11 |

+

action2motion_joints = [8, 1, 2, 3, 4, 5, 6, 7, 0, 9, 10, 11, 12, 13, 14, 21, 24, 38]

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

def get_z(cam_s, cam_pos, joints, img_size, flength):

|

| 15 |

+

"""

|

| 16 |

+

Solves for the depth offset of the model to approx. orth with persp camera.

|

| 17 |

+

"""

|

| 18 |

+

# Translate the model itself: Solve the best z that maps to orth_proj points

|

| 19 |

+

joints_orth_target = (cam_s * (joints[:, :2] + cam_pos) + 1) * 0.5 * img_size

|

| 20 |

+

height3d = np.linalg.norm(np.max(joints[:, :2], axis=0) - np.min(joints[:, :2], axis=0))

|

| 21 |

+

height2d = np.linalg.norm(np.max(joints_orth_target, axis=0) - np.min(joints_orth_target, axis=0))

|

| 22 |

+

tz = np.array(flength * (height3d / height2d))

|

| 23 |

+

return float(tz)

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def get_trans_from_vibe(vibe, index, use_z=True):

|

| 27 |

+

alltrans = []

|

| 28 |

+

for t in range(vibe["joints3d"][index].shape[0]):

|

| 29 |

+

# Convert crop cam to orig cam

|

| 30 |

+

# No need! Because `convert_crop_cam_to_orig_img` from demoutils of vibe

|

| 31 |

+

# does this already for us :)

|

| 32 |

+

# Its format is: [sx, sy, tx, ty]

|

| 33 |

+

cam_orig = vibe["orig_cam"][index][t]

|

| 34 |

+

x = cam_orig[2]

|

| 35 |

+

y = cam_orig[3]

|

| 36 |

+

if use_z:

|

| 37 |

+

z = get_z(cam_s=cam_orig[0], # TODO: There are two scales instead of 1.

|

| 38 |

+

cam_pos=cam_orig[2:4],

|

| 39 |

+

joints=vibe['joints3d'][index][t],

|

| 40 |

+

img_size=540,

|

| 41 |

+

flength=500)

|

| 42 |

+

# z = 500 / (0.5 * 480 * cam_orig[0])

|

| 43 |

+

else:

|

| 44 |

+

z = 0

|

| 45 |

+

trans = [x, y, z]

|

| 46 |

+

alltrans.append(trans)

|

| 47 |

+

alltrans = np.array(alltrans)

|

| 48 |

+

return alltrans - alltrans[0]

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

class UESTC(Dataset):

|

| 52 |

+

dataname = "uestc"

|

| 53 |

+

|

| 54 |

+

def __init__(self, datapath="dataset/uestc", method_name="vibe", view="all", **kargs):

|

| 55 |

+

|

| 56 |

+

self.datapath = datapath

|

| 57 |

+

self.method_name = method_name

|

| 58 |

+

self.view = view

|

| 59 |

+

super().__init__(**kargs)

|

| 60 |

+

|

| 61 |

+

# Load pre-computed #frames data

|

| 62 |

+

with open(os.path.join(datapath, 'info', 'num_frames_min.txt'), 'r') as f:

|

| 63 |

+

num_frames_video = np.asarray([int(s) for s in f.read().splitlines()])

|

| 64 |

+

|

| 65 |

+

# Out of 118 subjects -> 51 training, 67 in test

|

| 66 |

+

all_subjects = np.arange(1, 119)

|

| 67 |

+

self._tr_subjects = [

|

| 68 |

+

1, 2, 6, 12, 13, 16, 21, 24, 28, 29, 30, 31, 33, 35, 39, 41, 42, 45, 47, 50,

|

| 69 |

+

52, 54, 55, 57, 59, 61, 63, 64, 67, 69, 70, 71, 73, 77, 81, 84, 86, 87, 88,

|

| 70 |

+

90, 91, 93, 96, 99, 102, 103, 104, 107, 108, 112, 113]

|

| 71 |

+

self._test_subjects = [s for s in all_subjects if s not in self._tr_subjects]

|

| 72 |

+

|

| 73 |

+

# Load names of 25600 videos

|

| 74 |

+

with open(os.path.join(datapath, 'info', 'names.txt'), 'r') as f:

|

| 75 |

+

videos = f.read().splitlines()

|

| 76 |

+

|

| 77 |

+

self._videos = videos

|

| 78 |

+

|

| 79 |

+

if self.method_name == "vibe":

|

| 80 |

+

vibe_data_path = os.path.join(datapath, "vibe_cache_refined.pkl")

|

| 81 |

+

vibe_data = pkl.load(open(vibe_data_path, "rb"))

|

| 82 |

+

|

| 83 |

+

self._pose = vibe_data["pose"]

|

| 84 |

+

num_frames_method = [p.shape[0] for p in self._pose]

|

| 85 |

+

globpath = os.path.join(datapath, "globtrans_usez.pkl")

|

| 86 |

+

|

| 87 |

+

if os.path.exists(globpath):

|

| 88 |

+

self._globtrans = pkl.load(open(globpath, "rb"))

|

| 89 |

+

else:

|

| 90 |

+

self._globtrans = []

|

| 91 |

+

for index in tqdm(range(len(self._pose))):

|

| 92 |

+

self._globtrans.append(get_trans_from_vibe(vibe_data, index, use_z=True))

|

| 93 |

+

pkl.dump(self._globtrans, open("globtrans_usez.pkl", "wb"))

|

| 94 |

+

self._joints = vibe_data["joints3d"]

|

| 95 |

+

self._jointsIx = action2motion_joints

|

| 96 |

+

else:

|

| 97 |

+

raise ValueError("This method name is not recognized.")

|

| 98 |

+

|

| 99 |

+

num_frames_video = np.minimum(num_frames_video, num_frames_method)

|

| 100 |

+

num_frames_video = num_frames_video.astype(int)

|

| 101 |

+

self._num_frames_in_video = [x for x in num_frames_video]

|

| 102 |

+

|

| 103 |

+

N = len(videos)

|

| 104 |

+

self._actions = np.zeros(N, dtype=int)

|

| 105 |

+

for ind in range(N):

|

| 106 |

+

self._actions[ind] = self.parse_action(videos[ind])

|

| 107 |

+

|

| 108 |

+

self._actions = [x for x in self._actions]

|

| 109 |

+

|

| 110 |

+

total_num_actions = 40

|

| 111 |

+

self.num_actions = total_num_actions

|

| 112 |

+

keep_actions = np.arange(0, total_num_actions)

|

| 113 |

+

|

| 114 |

+

self._action_to_label = {x: i for i, x in enumerate(keep_actions)}

|

| 115 |

+

self._label_to_action = {i: x for i, x in enumerate(keep_actions)}

|

| 116 |

+

self.num_classes = len(keep_actions)

|

| 117 |

+

|

| 118 |

+

self._train = []

|

| 119 |

+

self._test = []

|

| 120 |

+

|

| 121 |

+

self.info_actions = []

|

| 122 |

+

|

| 123 |

+

def get_rotation(view):

|

| 124 |

+

theta = - view * np.pi/4

|

| 125 |

+

axis = torch.tensor([0, 1, 0], dtype=torch.float)

|

| 126 |

+

axisangle = theta*axis

|

| 127 |

+

matrix = geometry.axis_angle_to_matrix(axisangle)

|

| 128 |

+

return matrix

|

| 129 |

+

|

| 130 |

+

# 0 is identity if needed

|

| 131 |

+

rotations = {key: get_rotation(key) for key in [0, 1, 2, 3, 4, 5, 6, 7]}

|

| 132 |

+

|

| 133 |

+

for index, video in enumerate(tqdm(videos, desc='Preparing UESTC data..')):

|

| 134 |

+

act, view, subject, side = self._get_action_view_subject_side(video)

|

| 135 |

+

self.info_actions.append({"action": act,

|

| 136 |

+

"view": view,

|

| 137 |

+

"subject": subject,

|

| 138 |

+

"side": side})

|

| 139 |

+

if self.view == "frontview":

|

| 140 |

+

if side != 1:

|

| 141 |

+

continue

|

| 142 |

+

# rotate to front view

|

| 143 |

+

if side != 1:

|

| 144 |

+

# don't take the view 8 in side 2

|

| 145 |

+

if view == 8:

|

| 146 |

+

continue

|

| 147 |

+

rotation = rotations[view]

|

| 148 |

+

global_matrix = geometry.axis_angle_to_matrix(torch.from_numpy(self._pose[index][:, :3]))

|

| 149 |

+

# rotate the global pose

|

| 150 |

+

self._pose[index][:, :3] = geometry.matrix_to_axis_angle(rotation @ global_matrix).numpy()

|

| 151 |

+

# rotate the joints

|

| 152 |

+

self._joints[index] = self._joints[index] @ rotation.T.numpy()

|

| 153 |

+

self._globtrans[index] = (self._globtrans[index] @ rotation.T.numpy())

|

| 154 |

+

|

| 155 |

+

# add the global translation to the joints

|

| 156 |

+

self._joints[index] = self._joints[index] + self._globtrans[index][:, None]

|

| 157 |

+

|

| 158 |

+

if subject in self._tr_subjects:

|

| 159 |

+

self._train.append(index)

|

| 160 |

+

elif subject in self._test_subjects:

|

| 161 |

+

self._test.append(index)

|

| 162 |

+

else:

|

| 163 |

+

raise ValueError("This subject doesn't belong to any set.")

|

| 164 |

+

|

| 165 |

+

# if index > 200:

|

| 166 |

+

# break

|

| 167 |

+

|

| 168 |

+

# Select only sequences which have a minimum number of frames

|

| 169 |

+

if self.num_frames > 0:

|

| 170 |

+

threshold = self.num_frames*3/4

|

| 171 |

+

else:

|

| 172 |

+

threshold = 0

|

| 173 |

+

|

| 174 |

+

method_extracted_ix = np.where(num_frames_video >= threshold)[0].tolist()

|

| 175 |

+

self._train = list(set(self._train) & set(method_extracted_ix))

|

| 176 |

+

# keep the test set without modification

|

| 177 |

+

self._test = list(set(self._test))

|

| 178 |

+

|

| 179 |

+

action_classes_file = os.path.join(datapath, "info/action_classes.txt")

|

| 180 |

+

with open(action_classes_file, 'r') as f:

|

| 181 |

+

self._action_classes = np.array(f.read().splitlines())

|

| 182 |

+

|

| 183 |

+

# with open(processd_path, 'wb') as file:

|

| 184 |

+

# pkl.dump(xxx, file)

|

| 185 |

+

|

| 186 |

+

def _load_joints3D(self, ind, frame_ix):

|

| 187 |

+

if len(self._joints[ind]) == 0:

|

| 188 |

+

raise ValueError(

|

| 189 |

+

f"Cannot load index {ind} in _load_joints3D function.")

|

| 190 |

+

if self._jointsIx is not None:

|

| 191 |

+

joints3D = self._joints[ind][frame_ix][:, self._jointsIx]

|

| 192 |

+

else:

|

| 193 |

+

joints3D = self._joints[ind][frame_ix]

|

| 194 |

+

|

| 195 |

+

return joints3D

|

| 196 |

+

|

| 197 |

+

def _load_rotvec(self, ind, frame_ix):

|

| 198 |

+

# 72 dim smpl

|

| 199 |

+

pose = self._pose[ind][frame_ix, :].reshape(-1, 24, 3)

|

| 200 |

+

return pose

|

| 201 |

+

|

| 202 |

+

def _get_action_view_subject_side(self, videopath):

|

| 203 |

+

# TODO: Can be moved to tools.py

|

| 204 |

+

spl = videopath.split('_')

|

| 205 |

+

action = int(spl[0][1:])

|

| 206 |

+

view = int(spl[1][1:])

|

| 207 |

+

subject = int(spl[2][1:])

|

| 208 |

+

side = int(spl[3][1:])

|

| 209 |

+

return action, view, subject, side

|

| 210 |

+

|

| 211 |

+

def _get_videopath(self, action, view, subject, side):

|

| 212 |

+

# Unused function

|

| 213 |

+

return 'a{:d}_d{:d}_p{:03d}_c{:d}_color.avi'.format(

|

| 214 |

+

action, view, subject, side)

|

| 215 |

+

|

| 216 |

+

def parse_action(self, path, return_int=True):

|

| 217 |

+

# Override parent method

|

| 218 |

+

info, _, _, _ = self._get_action_view_subject_side(path)

|

| 219 |

+

if return_int:

|

| 220 |

+

return int(info)

|

| 221 |

+

else:

|

| 222 |

+

return info

|

| 223 |

+

|

| 224 |

+

|

| 225 |

+

if __name__ == "__main__":

|

| 226 |

+

dataset = UESTC()

|

main/data_loaders/get_data.py

ADDED

|

@@ -0,0 +1,52 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|