Upload 98 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- LICENSE +21 -0

- MODEL_ZOO.md +20 -0

- README.md +0 -3

- augmentations/augmentations_cifar.py +190 -0

- augmentations/augmentations_stl.py +190 -0

- augmentations/augmentations_tiny.py +190 -0

- data_statistics.py +61 -0

- download_imagenet.sh +47 -0

- environment.yml +188 -0

- evaluate_imagenet.py +289 -0

- evaluate_transfer.py +168 -0

- figs/in-linear.png +0 -0

- figs/in-loss-bt.png +0 -0

- figs/in-loss-reg.png +3 -0

- figs/mix-bt.jpg +0 -0

- figs/mix-bt.svg +0 -0

- hubconf.py +19 -0

- linear.py +166 -0

- main.py +271 -0

- main_imagenet.py +463 -0

- model.py +40 -0

- preprocess_datasets/preprocess_tinyimagenet.sh +34 -0

- scripts-linear-resnet18/cifar10.sh +14 -0

- scripts-linear-resnet18/cifar100.sh +14 -0

- scripts-linear-resnet18/stl10.sh +14 -0

- scripts-linear-resnet18/tinyimagenet.sh +14 -0

- scripts-linear-resnet50/cifar10.sh +14 -0

- scripts-linear-resnet50/cifar100.sh +14 -0

- scripts-linear-resnet50/imagenet_sup.sh +11 -0

- scripts-linear-resnet50/stl10.sh +14 -0

- scripts-linear-resnet50/tinyimagenet.sh +14 -0

- scripts-pretrain-resnet18/cifar10.sh +21 -0

- scripts-pretrain-resnet18/cifar100.sh +20 -0

- scripts-pretrain-resnet18/stl10.sh +20 -0

- scripts-pretrain-resnet18/tinyimagenet.sh +20 -0

- scripts-pretrain-resnet50/cifar10.sh +20 -0

- scripts-pretrain-resnet50/cifar100.sh +20 -0

- scripts-pretrain-resnet50/imagenet.sh +15 -0

- scripts-pretrain-resnet50/stl10.sh +20 -0

- scripts-pretrain-resnet50/tinyimagenet.sh +20 -0

- scripts-transfer-resnet18/cifar10-to-x.sh +28 -0

- scripts-transfer-resnet18/cifar100-to-x.sh +28 -0

- scripts-transfer-resnet18/stl10-to-x-bt.sh +28 -0

- setup.sh +12 -0

- ssl-sota/README.md +87 -0

- ssl-sota/cfg.py +152 -0

- ssl-sota/datasets/__init__.py +22 -0

- ssl-sota/datasets/base.py +67 -0

- ssl-sota/datasets/cifar10.py +26 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

figs/in-loss-reg.png filter=lfs diff=lfs merge=lfs -text

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2023 Wele Gedara Chaminda Bandara

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

MODEL_ZOO.md

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

The following links provide pre-trained models:

|

| 2 |

+

# ResNet-18 Pre-trained Models

|

| 3 |

+

| Dataset | d | Lambda_BT | Lambda_Reg | Path to Pretrained Model | KNN Acc. | Linear Acc. |

|

| 4 |

+

| ---------- | --- | ---------- | ---------- | ------------------------ | -------- | ----------- |

|

| 5 |

+

| CIFAR-10 | 1024 | 0.0078125 | 4.0 | 4wdhbpcf_0.0078125_1024_256_cifar10_model.pth | 90.52 | 92.58 |

|

| 6 |

+

| CIFAR-100 | 1024 | 0.0078125 | 4.0 | 76kk7scz_0.0078125_1024_256_cifar100_model.pth | 61.25 | 69.31 |

|

| 7 |

+

| TinyImageNet | 1024 | 0.0009765 | 4.0 | 02azq6fs_0.0009765_1024_256_tiny_imagenet_model.pth | 38.11 | 51.67 |

|

| 8 |

+

| STL-10 | 1024 | 0.0078125 | 2.0 | i7det4xq_0.0078125_1024_256_stl10_model.pth | 88.94 | 91.02 |

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

# ResNet-50 Pre-trained Models

|

| 13 |

+

| Dataset | d | Lambda_BT | Lambda_Reg | Path to Pretrained Model | KNN Acc. | Linear Acc. |

|

| 14 |

+

| ---------- | --- | ---------- | ---------- | ------------------------ | -------- | ----------- |

|

| 15 |

+

| CIFAR-10 | 1024 | 0.0078125 | 4.0 | v3gwgusq_0.0078125_1024_256_cifar10_model.pth | 91.39 | 93.89 |

|

| 16 |

+

| CIFAR-100 | 1024 | 0.0078125 | 4.0 | z6ngefw7_0.0078125_1024_256_cifar100_model_2000.pth | 64.32 | 72.51 |

|

| 17 |

+

| TinyImageNet | 1024 | 0.0009765 | 4.0 | kxlkigsv_0.0009765_1024_256_tiny_imagenet_model_2000.pth | 42.21 | 51.84 |

|

| 18 |

+

| STL-10 | 1024 | 0.0078125 | 2.0 | pbknx38b_0.0078125_1024_256_stl10_model.pth | 87.79 | 91.70 |

|

| 19 |

+

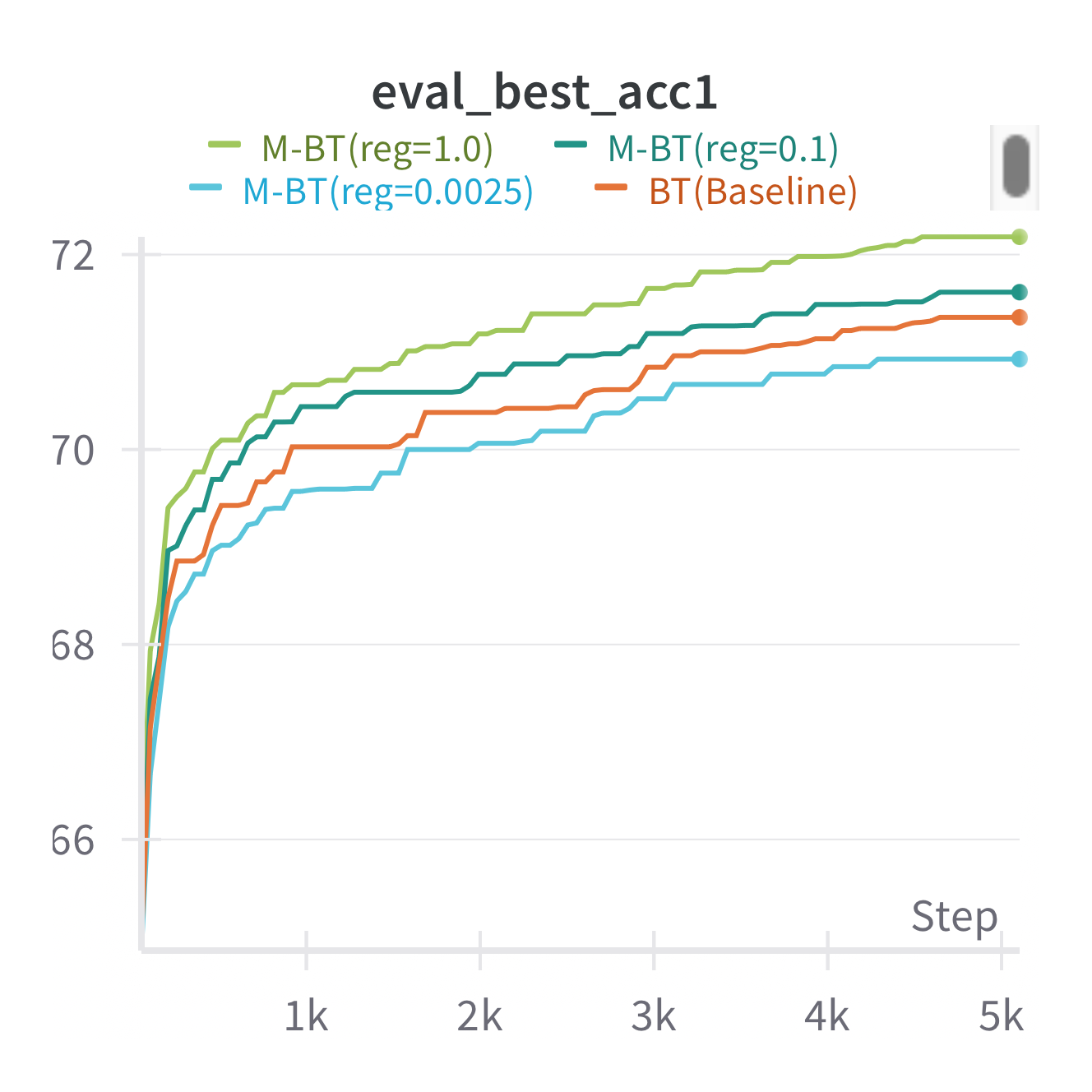

| ImageNet | 1024 | 0.0051 | 0.1 | 13awtq23_0.0051_8192_1024_imagenet_0.1_resnet50.pth | - | 72.1 |

|

| 20 |

+

|

README.md

CHANGED

|

@@ -1,6 +1,3 @@

|

|

| 1 |

-

---

|

| 2 |

-

license: mit

|

| 3 |

-

---

|

| 4 |

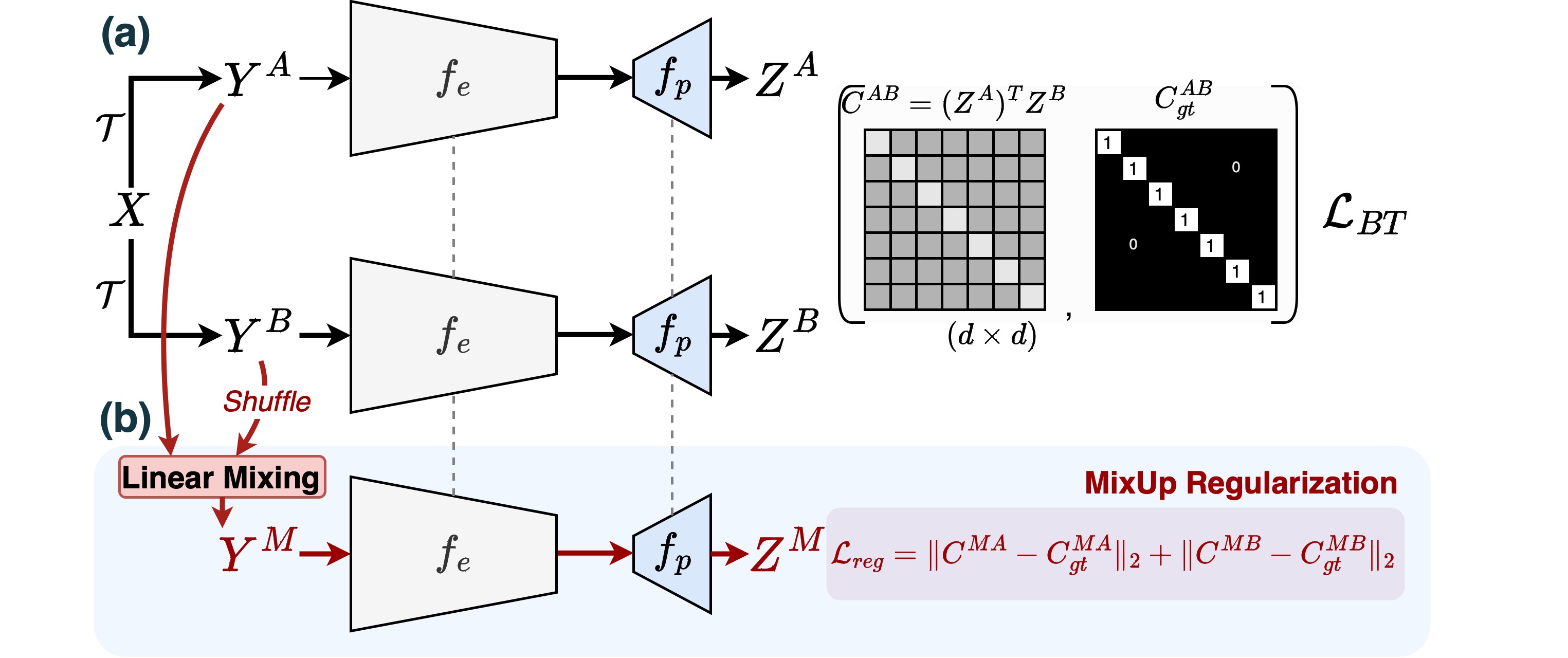

# Mixed Barlow Twins

|

| 5 |

[**Guarding Barlow Twins Against Overfitting with Mixed Samples**](https://arxiv.org/abs/2312.02151)<br>

|

| 6 |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

# Mixed Barlow Twins

|

| 2 |

[**Guarding Barlow Twins Against Overfitting with Mixed Samples**](https://arxiv.org/abs/2312.02151)<br>

|

| 3 |

|

augmentations/augmentations_cifar.py

ADDED

|

@@ -0,0 +1,190 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright 2019 Google LLC

|

| 2 |

+

#

|

| 3 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 4 |

+

# you may not use this file except in compliance with the License.

|

| 5 |

+

# You may obtain a copy of the License at

|

| 6 |

+

#

|

| 7 |

+

# https://www.apache.org/licenses/LICENSE-2.0

|

| 8 |

+

#

|

| 9 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 10 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 11 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 12 |

+

# See the License for the specific language governing permissions and

|

| 13 |

+

# limitations under the License.

|

| 14 |

+

# ==============================================================================

|

| 15 |

+

"""Base augmentations operators."""

|

| 16 |

+

|

| 17 |

+

import numpy as np

|

| 18 |

+

from PIL import Image, ImageOps, ImageEnhance

|

| 19 |

+

|

| 20 |

+

# ImageNet code should change this value

|

| 21 |

+

IMAGE_SIZE = 32

|

| 22 |

+

import torch

|

| 23 |

+

from torchvision import transforms

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def int_parameter(level, maxval):

|

| 27 |

+

"""Helper function to scale `val` between 0 and maxval .

|

| 28 |

+

|

| 29 |

+

Args:

|

| 30 |

+

level: Level of the operation that will be between [0, `PARAMETER_MAX`].

|

| 31 |

+

maxval: Maximum value that the operation can have. This will be scaled to

|

| 32 |

+

level/PARAMETER_MAX.

|

| 33 |

+

|

| 34 |

+

Returns:

|

| 35 |

+

An int that results from scaling `maxval` according to `level`.

|

| 36 |

+

"""

|

| 37 |

+

return int(level * maxval / 10)

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

def float_parameter(level, maxval):

|

| 41 |

+

"""Helper function to scale `val` between 0 and maxval.

|

| 42 |

+

|

| 43 |

+

Args:

|

| 44 |

+

level: Level of the operation that will be between [0, `PARAMETER_MAX`].

|

| 45 |

+

maxval: Maximum value that the operation can have. This will be scaled to

|

| 46 |

+

level/PARAMETER_MAX.

|

| 47 |

+

|

| 48 |

+

Returns:

|

| 49 |

+

A float that results from scaling `maxval` according to `level`.

|

| 50 |

+

"""

|

| 51 |

+

return float(level) * maxval / 10.

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

def sample_level(n):

|

| 55 |

+

return np.random.uniform(low=0.1, high=n)

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

def autocontrast(pil_img, _):

|

| 59 |

+

return ImageOps.autocontrast(pil_img)

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

def equalize(pil_img, _):

|

| 63 |

+

return ImageOps.equalize(pil_img)

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

def posterize(pil_img, level):

|

| 67 |

+

level = int_parameter(sample_level(level), 4)

|

| 68 |

+

return ImageOps.posterize(pil_img, 4 - level)

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

def rotate(pil_img, level):

|

| 72 |

+

degrees = int_parameter(sample_level(level), 30)

|

| 73 |

+

if np.random.uniform() > 0.5:

|

| 74 |

+

degrees = -degrees

|

| 75 |

+

return pil_img.rotate(degrees, resample=Image.BILINEAR)

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

def solarize(pil_img, level):

|

| 79 |

+

level = int_parameter(sample_level(level), 256)

|

| 80 |

+

return ImageOps.solarize(pil_img, 256 - level)

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

def shear_x(pil_img, level):

|

| 84 |

+

level = float_parameter(sample_level(level), 0.3)

|

| 85 |

+

if np.random.uniform() > 0.5:

|

| 86 |

+

level = -level

|

| 87 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 88 |

+

Image.AFFINE, (1, level, 0, 0, 1, 0),

|

| 89 |

+

resample=Image.BILINEAR)

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

def shear_y(pil_img, level):

|

| 93 |

+

level = float_parameter(sample_level(level), 0.3)

|

| 94 |

+

if np.random.uniform() > 0.5:

|

| 95 |

+

level = -level

|

| 96 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 97 |

+

Image.AFFINE, (1, 0, 0, level, 1, 0),

|

| 98 |

+

resample=Image.BILINEAR)

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

def translate_x(pil_img, level):

|

| 102 |

+

level = int_parameter(sample_level(level), IMAGE_SIZE / 3)

|

| 103 |

+

if np.random.random() > 0.5:

|

| 104 |

+

level = -level

|

| 105 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 106 |

+

Image.AFFINE, (1, 0, level, 0, 1, 0),

|

| 107 |

+

resample=Image.BILINEAR)

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

def translate_y(pil_img, level):

|

| 111 |

+

level = int_parameter(sample_level(level), IMAGE_SIZE / 3)

|

| 112 |

+

if np.random.random() > 0.5:

|

| 113 |

+

level = -level

|

| 114 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 115 |

+

Image.AFFINE, (1, 0, 0, 0, 1, level),

|

| 116 |

+

resample=Image.BILINEAR)

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

# operation that overlaps with ImageNet-C's test set

|

| 120 |

+

def color(pil_img, level):

|

| 121 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 122 |

+

return ImageEnhance.Color(pil_img).enhance(level)

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

# operation that overlaps with ImageNet-C's test set

|

| 126 |

+

def contrast(pil_img, level):

|

| 127 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 128 |

+

return ImageEnhance.Contrast(pil_img).enhance(level)

|

| 129 |

+

|

| 130 |

+

|

| 131 |

+

# operation that overlaps with ImageNet-C's test set

|

| 132 |

+

def brightness(pil_img, level):

|

| 133 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 134 |

+

return ImageEnhance.Brightness(pil_img).enhance(level)

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

# operation that overlaps with ImageNet-C's test set

|

| 138 |

+

def sharpness(pil_img, level):

|

| 139 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 140 |

+

return ImageEnhance.Sharpness(pil_img).enhance(level)

|

| 141 |

+

|

| 142 |

+

def random_resized_crop(pil_img, level):

|

| 143 |

+

return transforms.RandomResizedCrop(32)(pil_img)

|

| 144 |

+

|

| 145 |

+

def random_flip(pil_img, level):

|

| 146 |

+

return transforms.RandomHorizontalFlip(p=0.5)(pil_img)

|

| 147 |

+

|

| 148 |

+

def grayscale(pil_img, level):

|

| 149 |

+

return transforms.Grayscale(num_output_channels=3)(pil_img)

|

| 150 |

+

|

| 151 |

+

augmentations = [

|

| 152 |

+

autocontrast, equalize, posterize, rotate, solarize, shear_x, shear_y,

|

| 153 |

+

translate_x, translate_y, grayscale #random_resized_crop, random_flip

|

| 154 |

+

]

|

| 155 |

+

|

| 156 |

+

augmentations_all = [

|

| 157 |

+

autocontrast, equalize, posterize, rotate, solarize, shear_x, shear_y,

|

| 158 |

+

translate_x, translate_y, color, contrast, brightness, sharpness, grayscale #, random_resized_crop, random_flip

|

| 159 |

+

]

|

| 160 |

+

|

| 161 |

+

def aug_cifar(image, preprocess, mixture_width=3, mixture_depth=-1, aug_severity=3):

|

| 162 |

+

"""Perform AugMix augmentations and compute mixture.

|

| 163 |

+

|

| 164 |

+

Args:

|

| 165 |

+

image: PIL.Image input image

|

| 166 |

+

preprocess: Preprocessing function which should return a torch tensor.

|

| 167 |

+

|

| 168 |

+

Returns:

|

| 169 |

+

mixed: Augmented and mixed image.

|

| 170 |

+

"""

|

| 171 |

+

aug_list = augmentations_all

|

| 172 |

+

# if args.all_ops:

|

| 173 |

+

# aug_list = augmentations.augmentations_all

|

| 174 |

+

|

| 175 |

+

ws = np.float32(np.random.dirichlet([1] * mixture_width))

|

| 176 |

+

m = np.float32(np.random.beta(1, 1))

|

| 177 |

+

|

| 178 |

+

mix = torch.zeros_like(preprocess(image))

|

| 179 |

+

for i in range(mixture_width):

|

| 180 |

+

image_aug = image.copy()

|

| 181 |

+

depth = mixture_depth if mixture_depth > 0 else np.random.randint(

|

| 182 |

+

1, 4)

|

| 183 |

+

for _ in range(depth):

|

| 184 |

+

op = np.random.choice(aug_list)

|

| 185 |

+

image_aug = op(image_aug, aug_severity)

|

| 186 |

+

# Preprocessing commutes since all coefficients are convex

|

| 187 |

+

mix += ws[i] * preprocess(image_aug)

|

| 188 |

+

|

| 189 |

+

# mixed = (1 - m) * preprocess(image) + m * mix

|

| 190 |

+

return mix

|

augmentations/augmentations_stl.py

ADDED

|

@@ -0,0 +1,190 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright 2019 Google LLC

|

| 2 |

+

#

|

| 3 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 4 |

+

# you may not use this file except in compliance with the License.

|

| 5 |

+

# You may obtain a copy of the License at

|

| 6 |

+

#

|

| 7 |

+

# https://www.apache.org/licenses/LICENSE-2.0

|

| 8 |

+

#

|

| 9 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 10 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 11 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 12 |

+

# See the License for the specific language governing permissions and

|

| 13 |

+

# limitations under the License.

|

| 14 |

+

# ==============================================================================

|

| 15 |

+

"""Base augmentations operators."""

|

| 16 |

+

|

| 17 |

+

import numpy as np

|

| 18 |

+

from PIL import Image, ImageOps, ImageEnhance

|

| 19 |

+

|

| 20 |

+

# ImageNet code should change this value

|

| 21 |

+

IMAGE_SIZE = 64

|

| 22 |

+

import torch

|

| 23 |

+

from torchvision import transforms

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def int_parameter(level, maxval):

|

| 27 |

+

"""Helper function to scale `val` between 0 and maxval .

|

| 28 |

+

|

| 29 |

+

Args:

|

| 30 |

+

level: Level of the operation that will be between [0, `PARAMETER_MAX`].

|

| 31 |

+

maxval: Maximum value that the operation can have. This will be scaled to

|

| 32 |

+

level/PARAMETER_MAX.

|

| 33 |

+

|

| 34 |

+

Returns:

|

| 35 |

+

An int that results from scaling `maxval` according to `level`.

|

| 36 |

+

"""

|

| 37 |

+

return int(level * maxval / 10)

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

def float_parameter(level, maxval):

|

| 41 |

+

"""Helper function to scale `val` between 0 and maxval.

|

| 42 |

+

|

| 43 |

+

Args:

|

| 44 |

+

level: Level of the operation that will be between [0, `PARAMETER_MAX`].

|

| 45 |

+

maxval: Maximum value that the operation can have. This will be scaled to

|

| 46 |

+

level/PARAMETER_MAX.

|

| 47 |

+

|

| 48 |

+

Returns:

|

| 49 |

+

A float that results from scaling `maxval` according to `level`.

|

| 50 |

+

"""

|

| 51 |

+

return float(level) * maxval / 10.

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

def sample_level(n):

|

| 55 |

+

return np.random.uniform(low=0.1, high=n)

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

def autocontrast(pil_img, _):

|

| 59 |

+

return ImageOps.autocontrast(pil_img)

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

def equalize(pil_img, _):

|

| 63 |

+

return ImageOps.equalize(pil_img)

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

def posterize(pil_img, level):

|

| 67 |

+

level = int_parameter(sample_level(level), 4)

|

| 68 |

+

return ImageOps.posterize(pil_img, 4 - level)

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

def rotate(pil_img, level):

|

| 72 |

+

degrees = int_parameter(sample_level(level), 30)

|

| 73 |

+

if np.random.uniform() > 0.5:

|

| 74 |

+

degrees = -degrees

|

| 75 |

+

return pil_img.rotate(degrees, resample=Image.BILINEAR)

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

def solarize(pil_img, level):

|

| 79 |

+

level = int_parameter(sample_level(level), 256)

|

| 80 |

+

return ImageOps.solarize(pil_img, 256 - level)

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

def shear_x(pil_img, level):

|

| 84 |

+

level = float_parameter(sample_level(level), 0.3)

|

| 85 |

+

if np.random.uniform() > 0.5:

|

| 86 |

+

level = -level

|

| 87 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 88 |

+

Image.AFFINE, (1, level, 0, 0, 1, 0),

|

| 89 |

+

resample=Image.BILINEAR)

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

def shear_y(pil_img, level):

|

| 93 |

+

level = float_parameter(sample_level(level), 0.3)

|

| 94 |

+

if np.random.uniform() > 0.5:

|

| 95 |

+

level = -level

|

| 96 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 97 |

+

Image.AFFINE, (1, 0, 0, level, 1, 0),

|

| 98 |

+

resample=Image.BILINEAR)

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

def translate_x(pil_img, level):

|

| 102 |

+

level = int_parameter(sample_level(level), IMAGE_SIZE / 3)

|

| 103 |

+

if np.random.random() > 0.5:

|

| 104 |

+

level = -level

|

| 105 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 106 |

+

Image.AFFINE, (1, 0, level, 0, 1, 0),

|

| 107 |

+

resample=Image.BILINEAR)

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

def translate_y(pil_img, level):

|

| 111 |

+

level = int_parameter(sample_level(level), IMAGE_SIZE / 3)

|

| 112 |

+

if np.random.random() > 0.5:

|

| 113 |

+

level = -level

|

| 114 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 115 |

+

Image.AFFINE, (1, 0, 0, 0, 1, level),

|

| 116 |

+

resample=Image.BILINEAR)

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

# operation that overlaps with ImageNet-C's test set

|

| 120 |

+

def color(pil_img, level):

|

| 121 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 122 |

+

return ImageEnhance.Color(pil_img).enhance(level)

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

# operation that overlaps with ImageNet-C's test set

|

| 126 |

+

def contrast(pil_img, level):

|

| 127 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 128 |

+

return ImageEnhance.Contrast(pil_img).enhance(level)

|

| 129 |

+

|

| 130 |

+

|

| 131 |

+

# operation that overlaps with ImageNet-C's test set

|

| 132 |

+

def brightness(pil_img, level):

|

| 133 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 134 |

+

return ImageEnhance.Brightness(pil_img).enhance(level)

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

# operation that overlaps with ImageNet-C's test set

|

| 138 |

+

def sharpness(pil_img, level):

|

| 139 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 140 |

+

return ImageEnhance.Sharpness(pil_img).enhance(level)

|

| 141 |

+

|

| 142 |

+

def random_resized_crop(pil_img, level):

|

| 143 |

+

return transforms.RandomResizedCrop(32)(pil_img)

|

| 144 |

+

|

| 145 |

+

def random_flip(pil_img, level):

|

| 146 |

+

return transforms.RandomHorizontalFlip(p=0.5)(pil_img)

|

| 147 |

+

|

| 148 |

+

def grayscale(pil_img, level):

|

| 149 |

+

return transforms.Grayscale(num_output_channels=3)(pil_img)

|

| 150 |

+

|

| 151 |

+

augmentations = [

|

| 152 |

+

autocontrast, equalize, posterize, rotate, solarize, shear_x, shear_y,

|

| 153 |

+

translate_x, translate_y, grayscale #random_resized_crop, random_flip

|

| 154 |

+

]

|

| 155 |

+

|

| 156 |

+

augmentations_all = [

|

| 157 |

+

autocontrast, equalize, posterize, rotate, solarize, shear_x, shear_y,

|

| 158 |

+

translate_x, translate_y, color, contrast, brightness, sharpness, grayscale #, random_resized_crop, random_flip

|

| 159 |

+

]

|

| 160 |

+

|

| 161 |

+

def aug_stl(image, preprocess, mixture_width=3, mixture_depth=-1, aug_severity=3):

|

| 162 |

+

"""Perform AugMix augmentations and compute mixture.

|

| 163 |

+

|

| 164 |

+

Args:

|

| 165 |

+

image: PIL.Image input image

|

| 166 |

+

preprocess: Preprocessing function which should return a torch tensor.

|

| 167 |

+

|

| 168 |

+

Returns:

|

| 169 |

+

mixed: Augmented and mixed image.

|

| 170 |

+

"""

|

| 171 |

+

aug_list = augmentations

|

| 172 |

+

# if args.all_ops:

|

| 173 |

+

# aug_list = augmentations.augmentations_all

|

| 174 |

+

|

| 175 |

+

ws = np.float32(np.random.dirichlet([1] * mixture_width))

|

| 176 |

+

m = np.float32(np.random.beta(1, 1))

|

| 177 |

+

|

| 178 |

+

mix = torch.zeros_like(preprocess(image))

|

| 179 |

+

for i in range(mixture_width):

|

| 180 |

+

image_aug = image.copy()

|

| 181 |

+

depth = mixture_depth if mixture_depth > 0 else np.random.randint(

|

| 182 |

+

1, 4)

|

| 183 |

+

for _ in range(depth):

|

| 184 |

+

op = np.random.choice(aug_list)

|

| 185 |

+

image_aug = op(image_aug, aug_severity)

|

| 186 |

+

# Preprocessing commutes since all coefficients are convex

|

| 187 |

+

mix += ws[i] * preprocess(image_aug)

|

| 188 |

+

|

| 189 |

+

mixed = (1 - m) * preprocess(image) + m * mix

|

| 190 |

+

return mixed

|

augmentations/augmentations_tiny.py

ADDED

|

@@ -0,0 +1,190 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright 2019 Google LLC

|

| 2 |

+

#

|

| 3 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 4 |

+

# you may not use this file except in compliance with the License.

|

| 5 |

+

# You may obtain a copy of the License at

|

| 6 |

+

#

|

| 7 |

+

# https://www.apache.org/licenses/LICENSE-2.0

|

| 8 |

+

#

|

| 9 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 10 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 11 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 12 |

+

# See the License for the specific language governing permissions and

|

| 13 |

+

# limitations under the License.

|

| 14 |

+

# ==============================================================================

|

| 15 |

+

"""Base augmentations operators."""

|

| 16 |

+

|

| 17 |

+

import numpy as np

|

| 18 |

+

from PIL import Image, ImageOps, ImageEnhance

|

| 19 |

+

|

| 20 |

+

# ImageNet code should change this value

|

| 21 |

+

IMAGE_SIZE = 64

|

| 22 |

+

import torch

|

| 23 |

+

from torchvision import transforms

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def int_parameter(level, maxval):

|

| 27 |

+

"""Helper function to scale `val` between 0 and maxval .

|

| 28 |

+

|

| 29 |

+

Args:

|

| 30 |

+

level: Level of the operation that will be between [0, `PARAMETER_MAX`].

|

| 31 |

+

maxval: Maximum value that the operation can have. This will be scaled to

|

| 32 |

+

level/PARAMETER_MAX.

|

| 33 |

+

|

| 34 |

+

Returns:

|

| 35 |

+

An int that results from scaling `maxval` according to `level`.

|

| 36 |

+

"""

|

| 37 |

+

return int(level * maxval / 10)

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

def float_parameter(level, maxval):

|

| 41 |

+

"""Helper function to scale `val` between 0 and maxval.

|

| 42 |

+

|

| 43 |

+

Args:

|

| 44 |

+

level: Level of the operation that will be between [0, `PARAMETER_MAX`].

|

| 45 |

+

maxval: Maximum value that the operation can have. This will be scaled to

|

| 46 |

+

level/PARAMETER_MAX.

|

| 47 |

+

|

| 48 |

+

Returns:

|

| 49 |

+

A float that results from scaling `maxval` according to `level`.

|

| 50 |

+

"""

|

| 51 |

+

return float(level) * maxval / 10.

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

def sample_level(n):

|

| 55 |

+

return np.random.uniform(low=0.1, high=n)

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

def autocontrast(pil_img, _):

|

| 59 |

+

return ImageOps.autocontrast(pil_img)

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

def equalize(pil_img, _):

|

| 63 |

+

return ImageOps.equalize(pil_img)

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

def posterize(pil_img, level):

|

| 67 |

+

level = int_parameter(sample_level(level), 4)

|

| 68 |

+

return ImageOps.posterize(pil_img, 4 - level)

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

def rotate(pil_img, level):

|

| 72 |

+

degrees = int_parameter(sample_level(level), 30)

|

| 73 |

+

if np.random.uniform() > 0.5:

|

| 74 |

+

degrees = -degrees

|

| 75 |

+

return pil_img.rotate(degrees, resample=Image.BILINEAR)

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

def solarize(pil_img, level):

|

| 79 |

+

level = int_parameter(sample_level(level), 256)

|

| 80 |

+

return ImageOps.solarize(pil_img, 256 - level)

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

def shear_x(pil_img, level):

|

| 84 |

+

level = float_parameter(sample_level(level), 0.3)

|

| 85 |

+

if np.random.uniform() > 0.5:

|

| 86 |

+

level = -level

|

| 87 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 88 |

+

Image.AFFINE, (1, level, 0, 0, 1, 0),

|

| 89 |

+

resample=Image.BILINEAR)

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

def shear_y(pil_img, level):

|

| 93 |

+

level = float_parameter(sample_level(level), 0.3)

|

| 94 |

+

if np.random.uniform() > 0.5:

|

| 95 |

+

level = -level

|

| 96 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 97 |

+

Image.AFFINE, (1, 0, 0, level, 1, 0),

|

| 98 |

+

resample=Image.BILINEAR)

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

def translate_x(pil_img, level):

|

| 102 |

+

level = int_parameter(sample_level(level), IMAGE_SIZE / 3)

|

| 103 |

+

if np.random.random() > 0.5:

|

| 104 |

+

level = -level

|

| 105 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 106 |

+

Image.AFFINE, (1, 0, level, 0, 1, 0),

|

| 107 |

+

resample=Image.BILINEAR)

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

def translate_y(pil_img, level):

|

| 111 |

+

level = int_parameter(sample_level(level), IMAGE_SIZE / 3)

|

| 112 |

+

if np.random.random() > 0.5:

|

| 113 |

+

level = -level

|

| 114 |

+

return pil_img.transform((IMAGE_SIZE, IMAGE_SIZE),

|

| 115 |

+

Image.AFFINE, (1, 0, 0, 0, 1, level),

|

| 116 |

+

resample=Image.BILINEAR)

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

# operation that overlaps with ImageNet-C's test set

|

| 120 |

+

def color(pil_img, level):

|

| 121 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 122 |

+

return ImageEnhance.Color(pil_img).enhance(level)

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

# operation that overlaps with ImageNet-C's test set

|

| 126 |

+

def contrast(pil_img, level):

|

| 127 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 128 |

+

return ImageEnhance.Contrast(pil_img).enhance(level)

|

| 129 |

+

|

| 130 |

+

|

| 131 |

+

# operation that overlaps with ImageNet-C's test set

|

| 132 |

+

def brightness(pil_img, level):

|

| 133 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 134 |

+

return ImageEnhance.Brightness(pil_img).enhance(level)

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

# operation that overlaps with ImageNet-C's test set

|

| 138 |

+

def sharpness(pil_img, level):

|

| 139 |

+

level = float_parameter(sample_level(level), 1.8) + 0.1

|

| 140 |

+

return ImageEnhance.Sharpness(pil_img).enhance(level)

|

| 141 |

+

|

| 142 |

+

def random_resized_crop(pil_img, level):

|

| 143 |

+

return transforms.RandomResizedCrop(32)(pil_img)

|

| 144 |

+

|

| 145 |

+

def random_flip(pil_img, level):

|

| 146 |

+

return transforms.RandomHorizontalFlip(p=0.5)(pil_img)

|

| 147 |

+

|

| 148 |

+

def grayscale(pil_img, level):

|

| 149 |

+

return transforms.Grayscale(num_output_channels=3)(pil_img)

|

| 150 |

+

|

| 151 |

+

augmentations = [

|

| 152 |

+

autocontrast, equalize, posterize, rotate, solarize, shear_x, shear_y,

|

| 153 |

+

translate_x, translate_y, grayscale #random_resized_crop, random_flip

|

| 154 |

+

]

|

| 155 |

+

|

| 156 |

+

augmentations_all = [

|

| 157 |

+

autocontrast, equalize, posterize, rotate, solarize, shear_x, shear_y,

|

| 158 |

+

translate_x, translate_y, color, contrast, brightness, sharpness, grayscale #, random_resized_crop, random_flip

|

| 159 |

+

]

|

| 160 |

+

|

| 161 |

+

def aug_tiny(image, preprocess, mixture_width=3, mixture_depth=-1, aug_severity=3):

|

| 162 |

+

"""Perform AugMix augmentations and compute mixture.

|

| 163 |

+

|

| 164 |

+

Args:

|

| 165 |

+

image: PIL.Image input image

|

| 166 |

+

preprocess: Preprocessing function which should return a torch tensor.

|

| 167 |

+

|

| 168 |

+

Returns:

|

| 169 |

+

mixed: Augmented and mixed image.

|

| 170 |

+

"""

|

| 171 |

+

aug_list = augmentations

|

| 172 |

+

# if args.all_ops:

|

| 173 |

+

# aug_list = augmentations.augmentations_all

|

| 174 |

+

|

| 175 |

+

ws = np.float32(np.random.dirichlet([1] * mixture_width))

|

| 176 |

+

m = np.float32(np.random.beta(1, 1))

|

| 177 |

+

|

| 178 |

+

mix = torch.zeros_like(preprocess(image))

|

| 179 |

+

for i in range(mixture_width):

|

| 180 |

+

image_aug = image.copy()

|

| 181 |

+

depth = mixture_depth if mixture_depth > 0 else np.random.randint(

|

| 182 |

+

1, 4)

|

| 183 |

+

for _ in range(depth):

|

| 184 |

+

op = np.random.choice(aug_list)

|

| 185 |

+

image_aug = op(image_aug, aug_severity)

|

| 186 |

+

# Preprocessing commutes since all coefficients are convex

|

| 187 |

+

mix += ws[i] * preprocess(image_aug)

|

| 188 |

+

|

| 189 |

+

mixed = (1 - m) * preprocess(image) + m * mix

|

| 190 |

+

return mixed

|

data_statistics.py

ADDED

|

@@ -0,0 +1,61 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

def get_data_mean_and_stdev(dataset):

|

| 2 |

+

if dataset == 'CIFAR10' or dataset == 'CIFAR100':

|

| 3 |

+

mean = [0.5, 0.5, 0.5]

|

| 4 |

+

std = [0.5, 0.5, 0.5]

|

| 5 |

+

elif dataset == 'STL-10':

|

| 6 |

+

mean = [0.491, 0.482, 0.447]

|

| 7 |

+

std = [0.247, 0.244, 0.262]

|

| 8 |

+

elif dataset == 'ImageNet':

|

| 9 |

+

mean = [0.485, 0.456, 0.406]

|

| 10 |

+

std = [0.229, 0.224, 0.225]

|

| 11 |

+

elif dataset == 'aircraft':

|

| 12 |

+

mean = [0.486, 0.507, 0.525]

|

| 13 |

+

std = [0.266, 0.260, 0.276]

|

| 14 |

+

elif dataset == 'cu_birds':

|

| 15 |

+

mean = [0.483, 0.491, 0.424]

|

| 16 |

+

std = [0.228, 0.224, 0.259]

|

| 17 |

+

elif dataset == 'dtd':

|

| 18 |

+

mean = [0.533, 0.474, 0.426]

|

| 19 |

+

std = [0.261, 0.250, 0.259]

|

| 20 |

+

elif dataset == 'fashionmnist':

|

| 21 |

+

mean = [0.348, 0.348, 0.348]

|

| 22 |

+

std = [0.347, 0.347, 0.347]

|

| 23 |

+

elif dataset == 'mnist':

|

| 24 |

+

mean = [0.170, 0.170, 0.170]

|

| 25 |

+

std = [0.320, 0.320, 0.320]

|

| 26 |

+

elif dataset == 'traffic_sign':

|

| 27 |

+

mean = [0.335, 0.291, 0.295]

|

| 28 |

+

std = [0.267, 0.249, 0.251]

|

| 29 |

+

elif dataset == 'vgg_flower':

|

| 30 |

+

mean = [0.518, 0.410, 0.329]

|

| 31 |

+

std = [0.296, 0.249, 0.285]

|

| 32 |

+

else:

|

| 33 |

+

raise Exception('Dataset %s not supported.'%dataset)

|

| 34 |

+

return mean, std

|

| 35 |

+

|

| 36 |

+

def get_data_nclass(dataset):

|

| 37 |

+

if dataset == 'cifar10':

|

| 38 |

+

nclass = 10

|

| 39 |

+

elif dataset == 'cifar100cifar10':

|

| 40 |

+

nclass = 100

|

| 41 |

+

elif dataset == 'stl-10':

|

| 42 |

+

nclass = 10

|

| 43 |

+

elif dataset == 'ImageNet':

|

| 44 |

+

nclass = 1000

|

| 45 |

+

elif dataset == 'aircraft':

|

| 46 |

+

nclass = 102

|

| 47 |

+

elif dataset == 'cu_birds':

|

| 48 |

+

nclass = 200

|

| 49 |

+

elif dataset == 'dtd':

|

| 50 |

+

nclass = 47

|

| 51 |

+

elif dataset == 'fashionmnist':

|

| 52 |

+

nclass = 10

|

| 53 |

+

elif dataset == 'mnist':

|

| 54 |

+

nclass = 10

|

| 55 |

+

elif dataset == 'traffic_sign':

|

| 56 |

+

nclass = 43

|

| 57 |

+

elif dataset == 'vgg_flower':

|

| 58 |

+

nclass = 102

|

| 59 |

+

else:

|

| 60 |

+

raise Exception('Dataset %s not supported.'%dataset)

|

| 61 |

+

return nclass

|

download_imagenet.sh

ADDED

|

@@ -0,0 +1,47 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

# https://gist.github.com/BIGBALLON/8a71d225eff18d88e469e6ea9b39cef4

|

| 3 |

+

cd /mnt/store/wbandar1/datasets

|

| 4 |

+

wget https://image-net.org/data/ILSVRC/2012/ILSVRC2012_img_train.tar --no-check-certificate

|

| 5 |

+

wget https://image-net.org/data/ILSVRC/2012/ILSVRC2012_img_val.tar --no-check-certificate

|

| 6 |

+

|

| 7 |

+

#

|

| 8 |

+

# script to extract ImageNet dataset

|

| 9 |

+

# ILSVRC2012_img_train.tar (about 138 GB)

|

| 10 |

+

# ILSVRC2012_img_val.tar (about 6.3 GB)

|

| 11 |

+

# make sure ILSVRC2012_img_train.tar & ILSVRC2012_img_val.tar in your current directory

|

| 12 |

+

#

|

| 13 |

+

# https://github.com/facebook/fb.resnet.torch/blob/master/INSTALL.md

|

| 14 |

+

#

|

| 15 |

+

# train/

|

| 16 |

+

# ├── n01440764

|

| 17 |

+

# │ ├── n01440764_10026.JPEG

|

| 18 |

+

# │ ├── n01440764_10027.JPEG

|

| 19 |

+

# │ ├── ......

|

| 20 |

+

# ├── ......

|

| 21 |

+

# val/

|

| 22 |

+

# ├── n01440764

|

| 23 |

+

# │ ├── ILSVRC2012_val_00000293.JPEG

|

| 24 |

+

# │ ├── ILSVRC2012_val_00002138.JPEG

|

| 25 |

+

# │ ├── ......

|

| 26 |

+

# ├── ......

|

| 27 |

+

#

|

| 28 |

+

#

|

| 29 |

+

# Extract the training data:

|

| 30 |

+

#

|

| 31 |

+

mkdir train && mv ILSVRC2012_img_train.tar train/ && cd train

|

| 32 |

+

tar -xvf ILSVRC2012_img_train.tar && rm -f ILSVRC2012_img_train.tar

|

| 33 |

+

find . -name "*.tar" | while read NAME ; do mkdir -p "${NAME%.tar}"; tar -xvf "${NAME}" -C "${NAME%.tar}"; rm -f "${NAME}"; done

|

| 34 |

+

cd ..

|

| 35 |

+

#

|

| 36 |

+

# Extract the validation data and move images to subfolders:

|

| 37 |

+

#

|

| 38 |

+

mkdir val && mv ILSVRC2012_img_val.tar val/ && cd val && tar -xvf ILSVRC2012_img_val.tar

|

| 39 |

+

wget -qO- https://raw.githubusercontent.com/soumith/imagenetloader.torch/master/valprep.sh | bash

|

| 40 |

+

#

|

| 41 |

+

# Check total files after extract

|

| 42 |

+

#

|

| 43 |

+

# $ find train/ -name "*.JPEG" | wc -l

|

| 44 |

+

# 1281167

|

| 45 |

+

# $ find val/ -name "*.JPEG" | wc -l

|

| 46 |

+

# 50000

|

| 47 |

+

#

|

environment.yml

ADDED

|

@@ -0,0 +1,188 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: ssl-aug

|

| 2 |

+

channels:

|

| 3 |

+

- pytorch

|

| 4 |

+

- anaconda

|

| 5 |

+

- conda-forge

|

| 6 |

+

- defaults

|

| 7 |

+

dependencies:

|

| 8 |

+

- _libgcc_mutex=0.1=main

|

| 9 |

+

- _openmp_mutex=5.1=1_gnu

|

| 10 |

+

- blas=1.0=mkl

|

| 11 |

+

- bottleneck=1.3.4=py38hce1f21e_0

|

| 12 |

+

- brotlipy=0.7.0=py38h27cfd23_1003

|

| 13 |

+

- bzip2=1.0.8=h7b6447c_0

|

| 14 |

+

- ca-certificates=2022.6.15=ha878542_0

|

| 15 |

+

- cairo=1.16.0=hcf35c78_1003

|

| 16 |

+

- certifi=2022.6.15=py38h578d9bd_0

|

| 17 |

+

- cffi=1.15.0=py38h7f8727e_0

|

| 18 |

+

- charset-normalizer=2.0.4=pyhd3eb1b0_0

|

| 19 |

+

- cryptography=37.0.1=py38h9ce1e76_0

|

| 20 |

+

- cudatoolkit=11.3.1=h2bc3f7f_2

|

| 21 |

+

- dataclasses=0.8=pyh6d0b6a4_7

|

| 22 |

+

- dbus=1.13.18=hb2f20db_0

|

| 23 |

+

- expat=2.4.8=h27087fc_0

|

| 24 |

+

- ffmpeg=4.3.2=hca11adc_0

|

| 25 |

+

- fontconfig=2.14.0=h8e229c2_0

|

| 26 |

+

- freetype=2.11.0=h70c0345_0

|

| 27 |

+

- fvcore=0.1.5.post20220512=pyhd8ed1ab_0

|

| 28 |

+

- gettext=0.19.8.1=hd7bead4_3

|

| 29 |

+

- gh=2.12.1=ha8f183a_0

|

| 30 |

+

- giflib=5.2.1=h7b6447c_0

|

| 31 |

+

- glib=2.66.3=h58526e2_0

|

| 32 |

+

- gmp=6.2.1=h295c915_3

|

| 33 |

+

- gnutls=3.6.15=he1e5248_0

|

| 34 |

+

- graphite2=1.3.14=h295c915_1

|

| 35 |

+

- gst-plugins-base=1.14.5=h0935bb2_2

|

| 36 |

+

- gstreamer=1.14.5=h36ae1b5_2

|

| 37 |

+

- harfbuzz=2.4.0=h9f30f68_3

|

| 38 |

+

- hdf5=1.10.6=hb1b8bf9_0

|

| 39 |

+

- icu=64.2=he1b5a44_1

|

| 40 |

+

- idna=3.3=pyhd3eb1b0_0

|

| 41 |

+

- intel-openmp=2021.4.0=h06a4308_3561

|

| 42 |

+