add gen_compare

- gen_compare/.gitattributes +8 -0

- gen_compare/README.md +55 -0

- gen_compare/control_images/bird_512x512.png +0 -0

- gen_compare/control_images/converted/control_bird_canny.png +0 -0

- gen_compare/control_images/converted/control_bird_depth.png +0 -0

- gen_compare/control_images/converted/control_bird_hed.png +0 -0

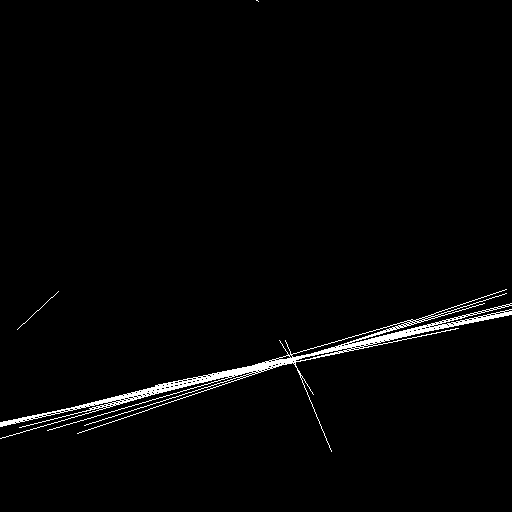

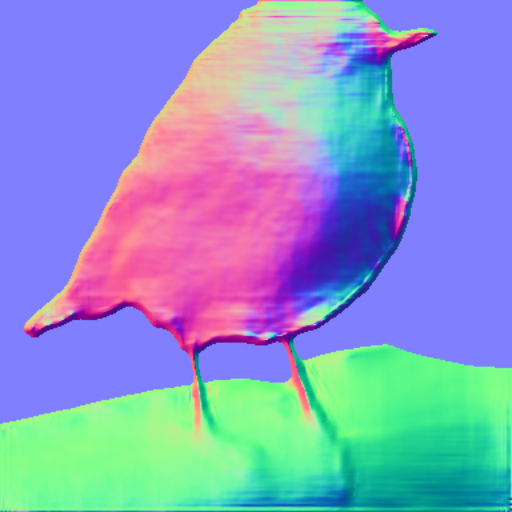

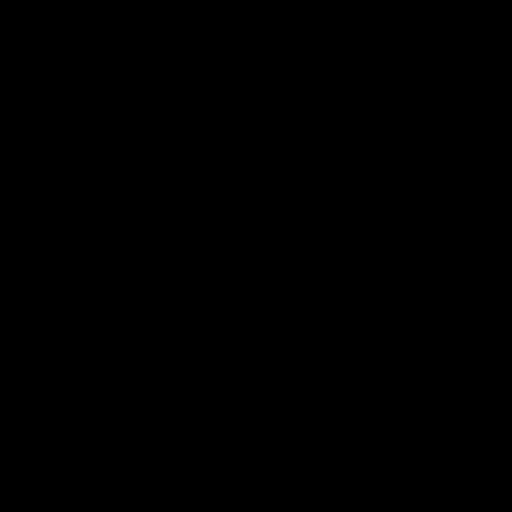

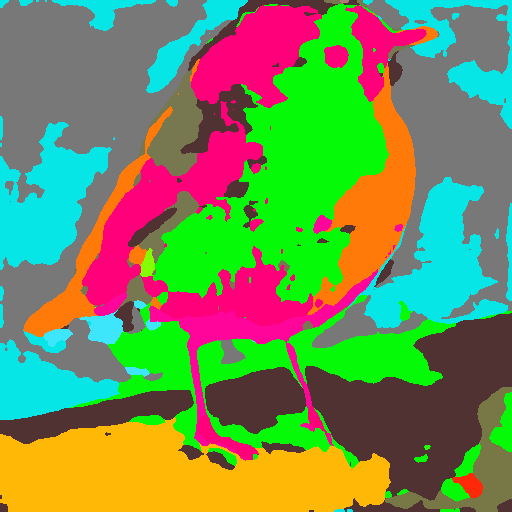

- gen_compare/control_images/converted/control_bird_mlsd.png +0 -0

- gen_compare/control_images/converted/control_bird_normal.png +0 -0

- gen_compare/control_images/converted/control_bird_openpose.png +0 -0

- gen_compare/control_images/converted/control_bird_scribble.png +0 -0

- gen_compare/control_images/converted/control_bird_seg.png +0 -0

- gen_compare/control_images/converted/control_human_canny.png +0 -0

- gen_compare/control_images/converted/control_human_depth.png +0 -0

- gen_compare/control_images/converted/control_human_hed.png +0 -0

- gen_compare/control_images/converted/control_human_mlsd.png +0 -0

- gen_compare/control_images/converted/control_human_normal.png +0 -0

- gen_compare/control_images/converted/control_human_openpose.png +0 -0

- gen_compare/control_images/converted/control_human_scribble.png +0 -0

- gen_compare/control_images/converted/control_human_seg.png +0 -0

- gen_compare/control_images/converted/control_room_canny.png +0 -0

- gen_compare/control_images/converted/control_room_depth.png +0 -0

- gen_compare/control_images/converted/control_room_hed.png +0 -0

- gen_compare/control_images/converted/control_room_mlsd.png +0 -0

- gen_compare/control_images/converted/control_room_normal.png +0 -0

- gen_compare/control_images/converted/control_room_openpose.png +0 -0

- gen_compare/control_images/converted/control_room_scribble.png +0 -0

- gen_compare/control_images/converted/control_room_seg.png +0 -0

- gen_compare/control_images/converted/control_vermeer_canny.png +0 -0

- gen_compare/control_images/converted/control_vermeer_depth.png +0 -0

- gen_compare/control_images/converted/control_vermeer_hed.png +0 -0

- gen_compare/control_images/converted/control_vermeer_mlsd.png +0 -0

- gen_compare/control_images/converted/control_vermeer_normal.png +0 -0

- gen_compare/control_images/converted/control_vermeer_openpose.png +0 -0

- gen_compare/control_images/converted/control_vermeer_scribble.png +0 -0

- gen_compare/control_images/converted/control_vermeer_seg.png +0 -0

- gen_compare/control_images/human_512x512.png +0 -0

- gen_compare/control_images/room_512x512.png +0 -0

- gen_compare/control_images/vermeer_512x512.png +0 -0

- gen_compare/create_control_images.py +29 -0

- gen_compare/create_plots.py +50 -0

- gen_compare/gen_diffusers_image.py +49 -0

- gen_compare/gen_diffusers_image.sh +9 -0

- gen_compare/gen_reference_image.py +60 -0

- gen_compare/gen_reference_image.sh +9 -0

- gen_compare/plots/figure_canny.png +3 -0

- gen_compare/plots/figure_depth.png +3 -0

- gen_compare/plots/figure_hed.png +3 -0

- gen_compare/plots/figure_mlsd.png +3 -0

- gen_compare/plots/figure_normal.png +3 -0

- gen_compare/plots/figure_openpose.png +3 -0

gen_compare/.gitattributes

ADDED

@@ -0,0 +1,8 @@

    plots/figure_seg.png filter=lfs diff=lfs merge=lfs -text
    plots/figure_canny.png filter=lfs diff=lfs merge=lfs -text
    plots/figure_depth.png filter=lfs diff=lfs merge=lfs -text
    plots/figure_hed.png filter=lfs diff=lfs merge=lfs -text
    plots/figure_mlsd.png filter=lfs diff=lfs merge=lfs -text
    plots/figure_normal.png filter=lfs diff=lfs merge=lfs -text
    plots/figure_openpose.png filter=lfs diff=lfs merge=lfs -text
    plots/figure_scribble.png filter=lfs diff=lfs merge=lfs -text
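The `.gitattributes` entries above mark each plot as tracked by Git LFS. As a rough illustration of how such lines can be read, here is a minimal sketch that lists the patterns carrying `filter=lfs`. The function name `lfs_tracked_paths` and the `SAMPLE` text are hypothetical, and the parser is a simplification: it assumes whitespace-separated fields and does not handle quoted patterns.

```python
def lfs_tracked_paths(gitattributes_text):
    """Return the path patterns whose attributes include filter=lfs.

    Simplified: assumes one pattern per line, whitespace-separated
    attributes, and no quoted patterns containing spaces.
    """
    tracked = []
    for line in gitattributes_text.splitlines():
        parts = line.split()
        # Skip blank lines and comment lines.
        if not parts or parts[0].startswith("#"):
            continue
        pattern, attrs = parts[0], parts[1:]
        if "filter=lfs" in attrs:
            tracked.append(pattern)
    return tracked


# Two of the eight entries from the file above, used as sample input.
SAMPLE = """\
plots/figure_seg.png filter=lfs diff=lfs merge=lfs -text
plots/figure_canny.png filter=lfs diff=lfs merge=lfs -text
"""

print(lfs_tracked_paths(SAMPLE))
```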

gen_compare/README.md

ADDED

|

@@ -0,0 +1,55 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

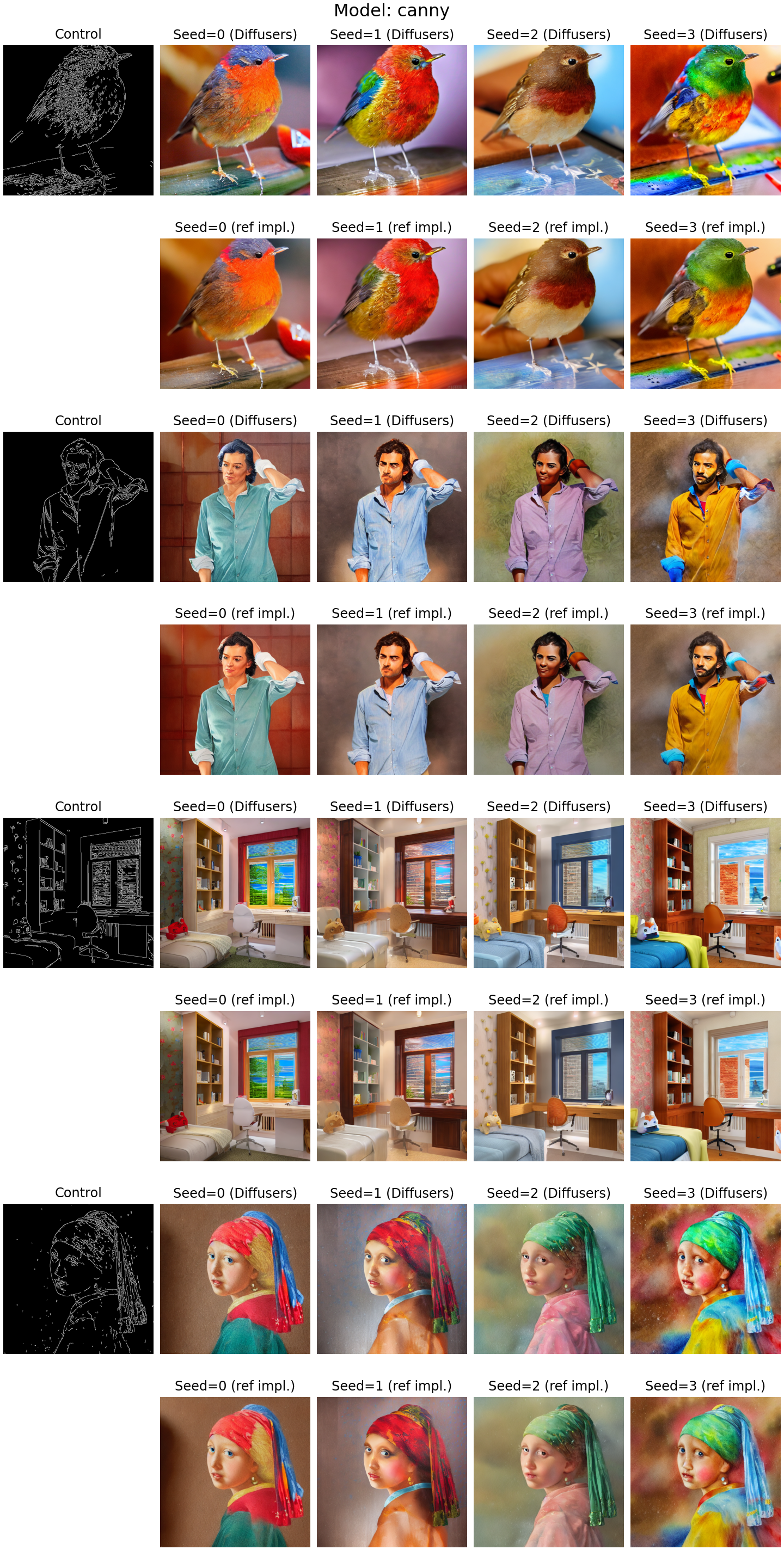

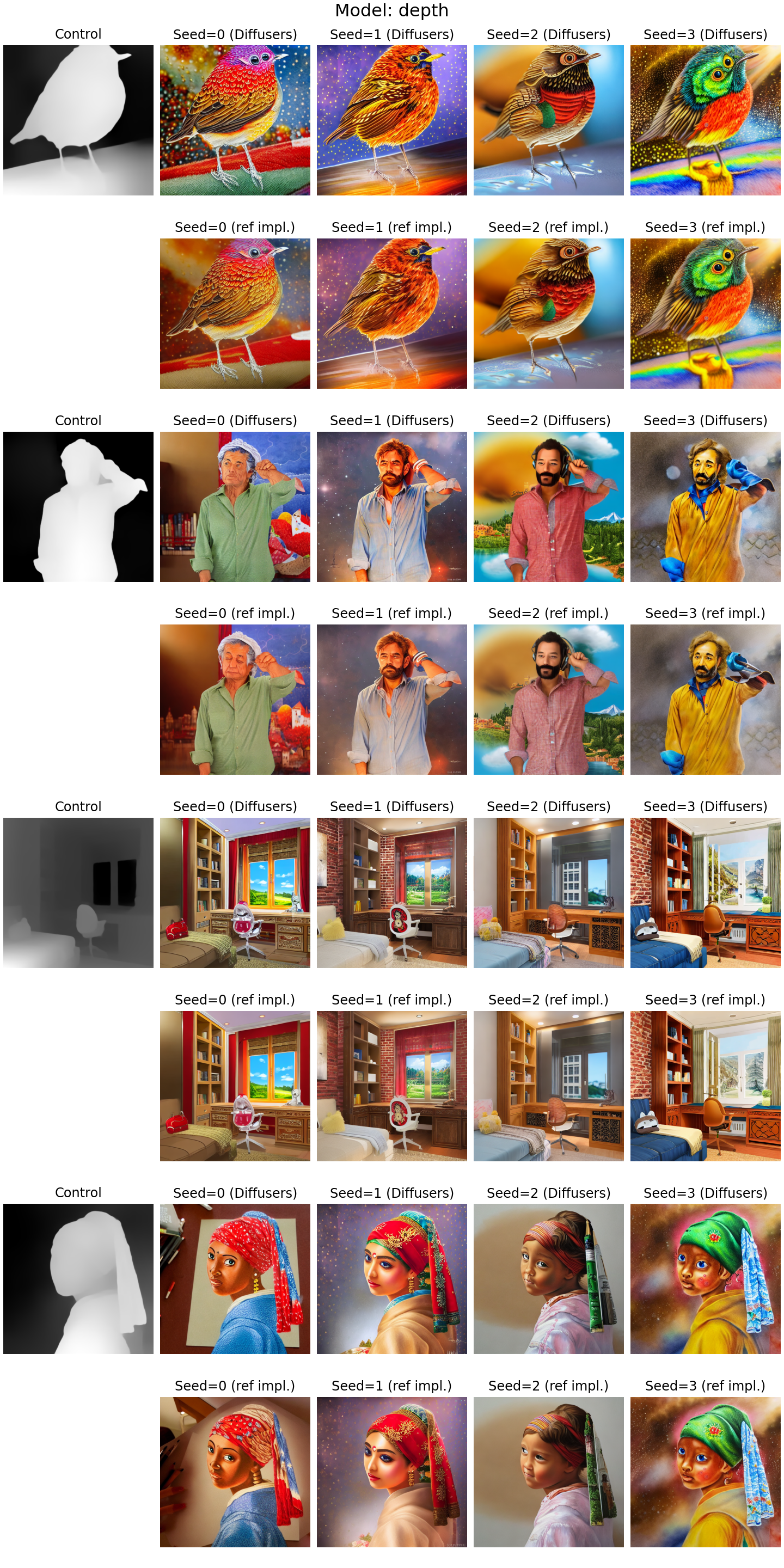

# Diffusers ControlNet Impl. & Reference Impl. generated image comparison

|

| 2 |

+

|

| 3 |

+

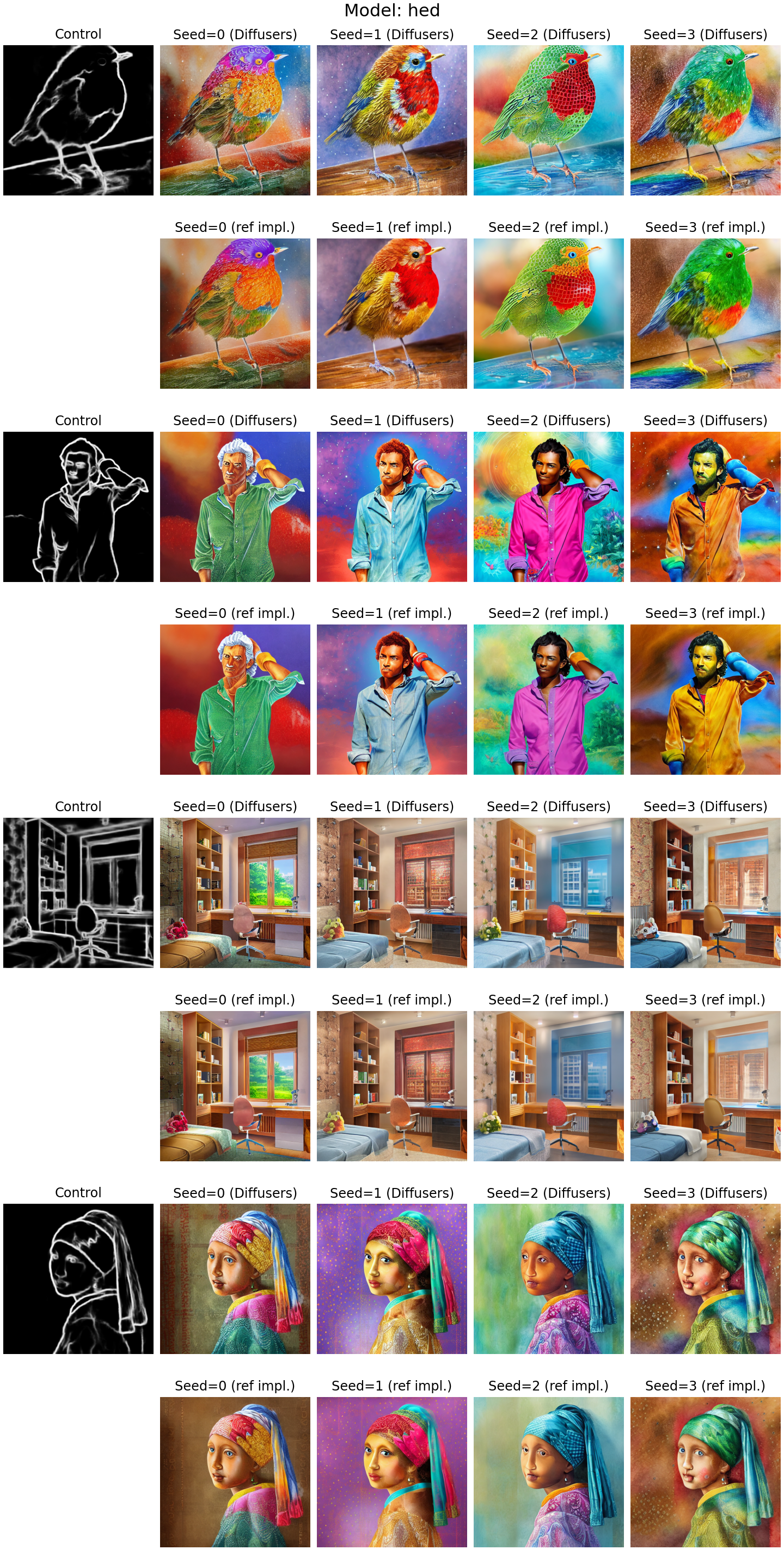

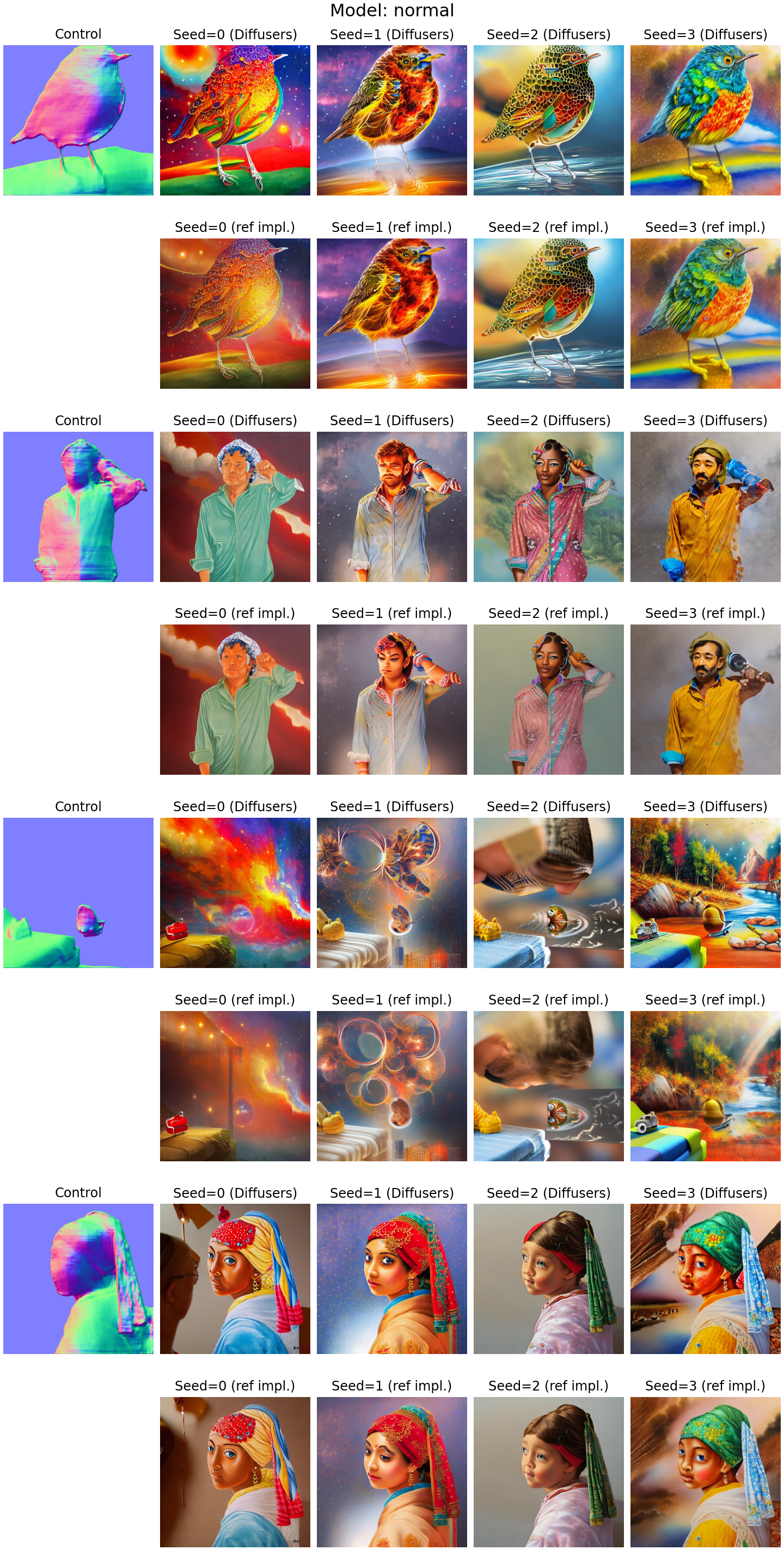

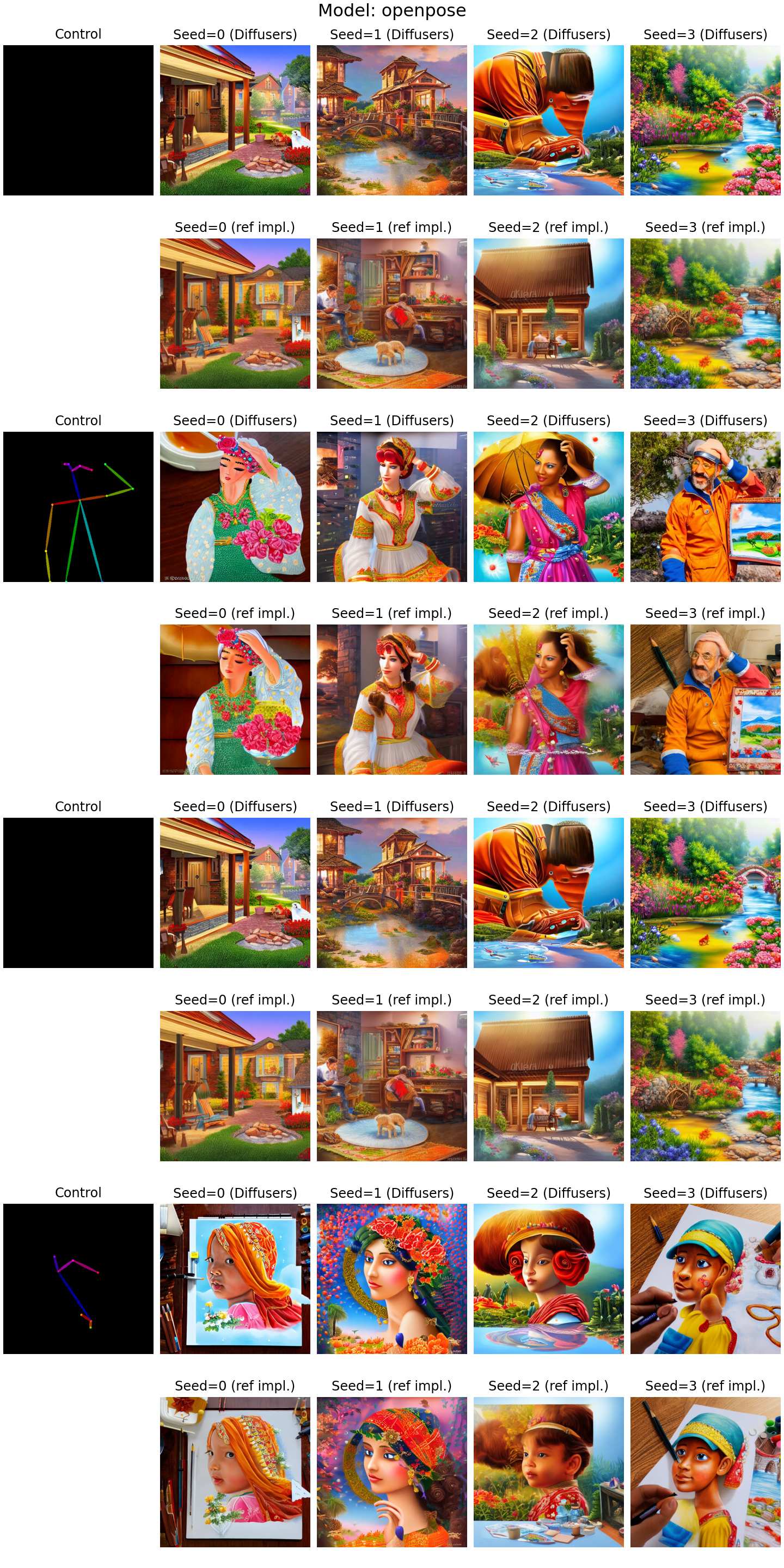

## Implementation Source Code & versions

|

| 4 |

+

- Diffusers (in development version): https://github.com/takuma104/diffusers/tree/e758682c00a7d23e271fd8c9fb7a48912838045c

|

| 5 |

+

- Reference Impl.: https://github.com/lllyasviel/ControlNet/tree/2f77609bf6a8a2243d9faa198365fc6222c5435f

|

| 6 |

+

|

| 7 |

+

## Environment

|

| 8 |

+

- OS: Ubuntu 22.04

|

| 9 |

+

- GPU: Nvidia RTX3060 (12GB)

|

| 10 |

+

|

| 11 |

+

## Scripts to generate plots:

|

| 12 |

+

- Create control image: [create_control_images.py](create_control_images.py)

|

| 13 |

+

- Diffusers generated image: [gen_diffusers_image.py](gen_diffusers_image.py)

|

| 14 |

+

- Reference generated image: [gen_reference_image.py](gen_reference_image.py)

|

| 15 |

+

- Create Plots: [create_plots.py](create_plots.py)

|

| 16 |

+

|

| 17 |

+

## Original image for control image:

|

| 18 |

+

- All images from [test_imgs](https://github.com/lllyasviel/ControlNet/test_imgs) excepts vermeer image. Croped and resized to 512x512px.

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

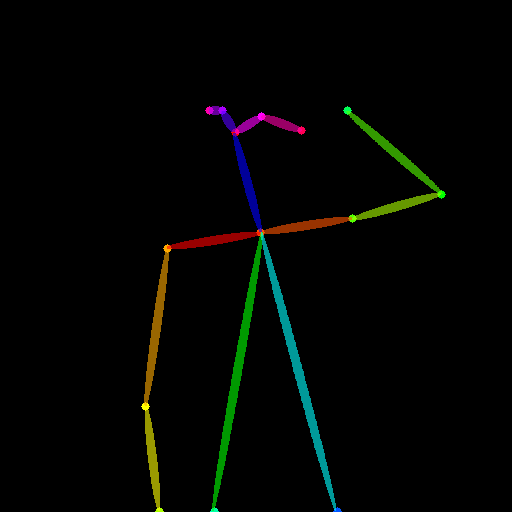

<img width="128" src="control_images/bird_512x512.png">

|

| 22 |

+

<img width="128" src="control_images/human_512x512.png">

|

| 23 |

+

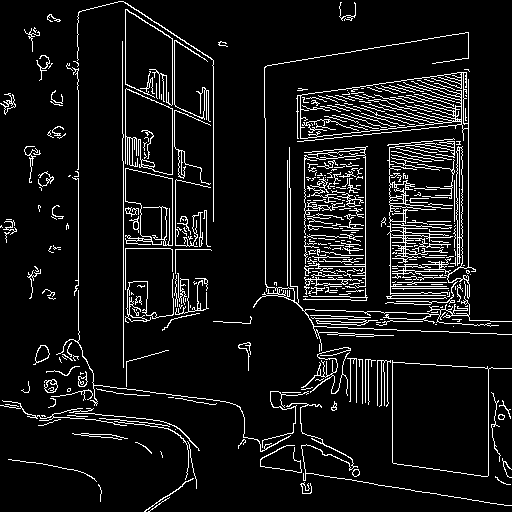

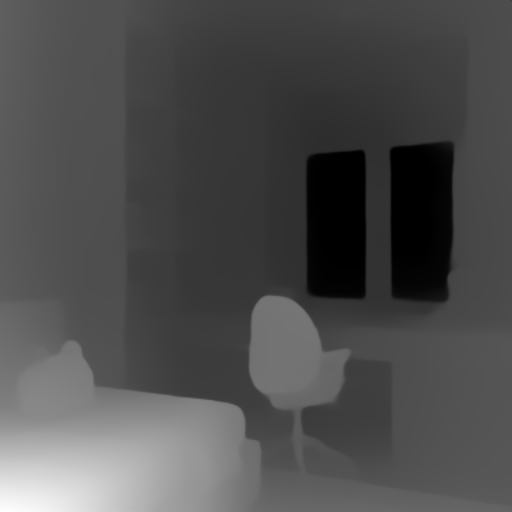

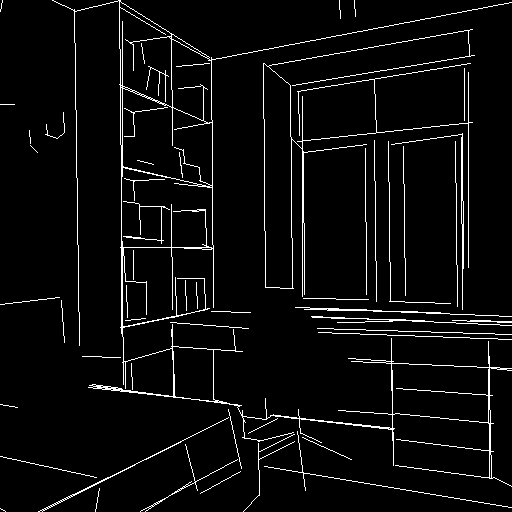

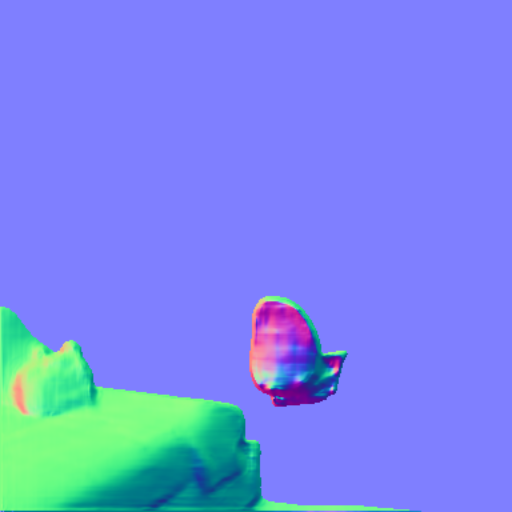

<img width="128" src="control_images/room_512x512.png">

|

| 24 |

+

<img width="128" src="control_images/vermeer_512x512.png">

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

## Generate Settings:

|

| 28 |

+

#### Control Images:

|

| 29 |

+

Converted above original images by [controlnet_hinter](https://github.com/takuma104/controlnet_hinter).

|

| 30 |

+

|

| 31 |

+

#### Prompts:

|

| 32 |

+

All images were generated with the same prompt.

|

| 33 |

+

|

| 34 |

+

- Prompt: `best quality, extremely detailed, illustration, looking at viewer`

|

| 35 |

+

- Negative Prompt: `monochrome, lowres, bad anatomy, worst quality, low quality`

|

| 36 |

+

|

| 37 |

+

#### Ohter setting (both common):

|

| 38 |

+

- sampler: DDIM

|

| 39 |

+

- guidance_scale: 9.0

|

| 40 |

+

- num_inference_steps: 20

|

| 41 |

+

- initial random latents: created on CPU using seed

|

| 42 |

+

|

| 43 |

+
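To make the `guidance_scale: 9.0` setting more concrete, the following is a minimal sketch of the usual classifier-free guidance combination, written with plain Python lists rather than tensors. The function name `apply_guidance` and the toy values are illustrative assumptions; this is the commonly used formula, not code taken from either implementation.

```python
def apply_guidance(noise_uncond, noise_cond, guidance_scale):
    """Combine unconditional and conditional noise predictions.

    Standard classifier-free guidance: start from the unconditional
    prediction and move toward the conditional one by guidance_scale.
    """
    return [
        u + guidance_scale * (c - u)
        for u, c in zip(noise_uncond, noise_cond)
    ]


# Toy predictions for two latent elements (hypothetical values).
uncond = [0.0, 1.0]
cond = [1.0, 1.0]

print(apply_guidance(uncond, cond, 9.0))
# With a scale of 1.0 the result equals the conditional prediction.
print(apply_guidance(uncond, cond, 1.0))
```

Because both scripts share the same scale, step count, sampler, and CPU-seeded initial latents, remaining differences in the output are more likely to come from the implementations themselves than from the settings.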

## Results:

[](plots/figure_canny.png)
[](plots/figure_depth.png)
[](plots/figure_hed.png)
[](plots/figure_mlsd.png)
[](plots/figure_normal.png)
[](plots/figure_openpose.png)
[](plots/figure_scribble.png)
[](plots/figure_seg.png)

- gen_compare/control_images/bird_512x512.png ADDED
- gen_compare/control_images/converted/control_bird_canny.png ADDED
- gen_compare/control_images/converted/control_bird_depth.png ADDED
- gen_compare/control_images/converted/control_bird_hed.png ADDED
- gen_compare/control_images/converted/control_bird_mlsd.png ADDED
- gen_compare/control_images/converted/control_bird_normal.png ADDED
- gen_compare/control_images/converted/control_bird_openpose.png ADDED
- gen_compare/control_images/converted/control_bird_scribble.png ADDED
- gen_compare/control_images/converted/control_bird_seg.png ADDED
- gen_compare/control_images/converted/control_human_canny.png ADDED
- gen_compare/control_images/converted/control_human_depth.png ADDED
- gen_compare/control_images/converted/control_human_hed.png ADDED
- gen_compare/control_images/converted/control_human_mlsd.png ADDED
- gen_compare/control_images/converted/control_human_normal.png ADDED
- gen_compare/control_images/converted/control_human_openpose.png ADDED
- gen_compare/control_images/converted/control_human_scribble.png ADDED
- gen_compare/control_images/converted/control_human_seg.png ADDED
- gen_compare/control_images/converted/control_room_canny.png ADDED
- gen_compare/control_images/converted/control_room_depth.png ADDED
- gen_compare/control_images/converted/control_room_hed.png ADDED
- gen_compare/control_images/converted/control_room_mlsd.png ADDED
- gen_compare/control_images/converted/control_room_normal.png ADDED
- gen_compare/control_images/converted/control_room_openpose.png ADDED
- gen_compare/control_images/converted/control_room_scribble.png ADDED
- gen_compare/control_images/converted/control_room_seg.png ADDED
- gen_compare/control_images/converted/control_vermeer_canny.png ADDED
- gen_compare/control_images/converted/control_vermeer_depth.png ADDED
- gen_compare/control_images/converted/control_vermeer_hed.png ADDED
- gen_compare/control_images/converted/control_vermeer_mlsd.png ADDED
- gen_compare/control_images/converted/control_vermeer_normal.png ADDED
- gen_compare/control_images/converted/control_vermeer_openpose.png ADDED
- gen_compare/control_images/converted/control_vermeer_scribble.png ADDED
- gen_compare/control_images/converted/control_vermeer_seg.png ADDED
- gen_compare/control_images/human_512x512.png ADDED
- gen_compare/control_images/room_512x512.png ADDED
- gen_compare/control_images/vermeer_512x512.png ADDED

gen_compare/create_control_images.py

ADDED

@@ -0,0 +1,29 @@

    from PIL import Image
    import controlnet_hinter


    #model_suffixes = {"canny","normal","depth","openpose","hed","scribble","mlsd","seg"}


    def write_converted_files(original_image, prefix):
        controlnet_hinter.hint_canny(original_image).save(prefix + '_canny.png')
        controlnet_hinter.hint_depth(original_image).save(prefix + '_depth.png')
        controlnet_hinter.hint_fake_scribble(original_image).save(
            prefix + '_scribble.png')
        controlnet_hinter.hint_hed(original_image).save(prefix + '_hed.png')
        controlnet_hinter.hint_hough(original_image).save(prefix + '_mlsd.png')
        controlnet_hinter.hint_normal(original_image).save(prefix + '_normal.png')
        controlnet_hinter.hint_openpose(
            original_image).save(prefix + '_openpose.png')
        # controlnet_hinter.hint_scribble(
        #     original_image).save(prefix + '_scribble.png')
        controlnet_hinter.hint_segmentation(
            original_image).save(prefix + '_seg.png')


    if __name__ == '__main__':
        image_types = {'bird', 'human', 'room', 'vermeer'}
        for itype in image_types:
            image = Image.open(f"control_images/{itype}_512x512.png")
            write_converted_files(
                image, prefix=f'control_images/converted/control_{itype}')
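The script above writes one converted control image per source image and hint type, following the naming pattern `control_{image}_{suffix}.png`. The sketch below enumerates the expected output paths without calling `controlnet_hinter`, which can help when checking that a run produced every file. The helper name `converted_paths` and the two constants are illustrative, not part of the commit.

```python
# Source images and hint suffixes as they appear in the script above.
IMAGE_TYPES = ["bird", "human", "room", "vermeer"]
SUFFIXES = ["canny", "depth", "scribble", "hed",
            "mlsd", "normal", "openpose", "seg"]


def converted_paths(image_types=IMAGE_TYPES, suffixes=SUFFIXES):
    """List every converted control image path the script is expected to write."""
    return [
        f"control_images/converted/control_{itype}_{suffix}.png"
        for itype in image_types
        for suffix in suffixes
    ]


paths = converted_paths()
print(len(paths))  # 4 source images x 8 hint types
print(paths[0])
```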

gen_compare/create_plots.py

ADDED

@@ -0,0 +1,50 @@


    import matplotlib.pyplot as plt
    from PIL import Image

    plt.rcParams["figure.figsize"] = (10, 5)
    plt.rcParams['figure.facecolor'] = 'white'


    def render_figure(model_name, fn):
        image_types = ['bird', 'human', 'room', 'vermeer']

        def plot_row(axs, control_fn_prefix, output_fn_prefix, name, show_control=False):
            for i, ax in enumerate(axs):
                if i == 0:
                    if show_control:
                        ax.set_title(f'Control')
                        ax.imshow(Image.open(f'{control_fn_prefix}.png'))
                else:
                    ax.set_title(f'Seed={i-1} ({name})')
                    ax.imshow(Image.open(f'{output_fn_prefix}_{i-1}.png'))

        fig, axs = plt.subplots(
            2 * len(image_types), 5, layout="constrained", figsize=(10, 5 * len(image_types)))
        for ax in axs.flatten():
            ax.set_aspect('equal', 'box')
            ax.axis('off')

        pair_axs = [list(pair) for pair in zip(axs[::2], axs[1::2])]
        for image_type, pair_ax in zip(image_types, pair_axs):
            plot_row(pair_ax[0],
                     f'./control_images/converted/control_{image_type}_{model_name}',
                     f'./output_images/diffusers/output_{image_type}_{model_name}',
                     'Diffusers', show_control=True)
            plot_row(pair_ax[1],
                     f'./control_images/converted/control_{image_type}_{model_name}',
                     f'./output_images/ref/output_{image_type}_{model_name}',
                     'ref impl.')

        fig.suptitle(f'Model: {model_name}', fontsize=16)
        # fig.tight_layout()
        fig.savefig(fn, dpi=144)


    if __name__ == '__main__':
        model_names = ["canny", "normal", "depth",
                       "openpose", "hed", "scribble", "mlsd", "seg"]
        for model in model_names:
            fn = f"plots/figure_{model}.png"
            render_figure(model, fn)
            print(fn)
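The figure layout in `render_figure` relies on pairing consecutive subplot rows, so that each source image gets a Diffusers row followed by a reference row. The pairing idiom `zip(axs[::2], axs[1::2])` can be seen in isolation with plain lists; in this sketch the strings stand in for rows of Matplotlib axes, and the helper name `pair_rows` is hypothetical.

```python
def pair_rows(rows):
    """Group rows as (even-indexed, odd-indexed) pairs: (0, 1), (2, 3), ..."""
    return [list(pair) for pair in zip(rows[::2], rows[1::2])]


image_types = ["bird", "human", "room", "vermeer"]
# Stand-ins for the 2 * len(image_types) subplot rows.
rows = [f"row{i}" for i in range(2 * len(image_types))]

for image_type, (diffusers_row, ref_row) in zip(image_types, pair_rows(rows)):
    print(image_type, diffusers_row, ref_row)
```

Within each row, column 0 holds the control image (drawn only on the Diffusers row) and columns 1 to 4 hold the outputs for seeds 0 to 3.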

gen_compare/gen_diffusers_image.py

ADDED

@@ -0,0 +1,49 @@

    # Diffusers' ControlNet Implementation Subjective Evaluation
    # https://github.com/takuma104/diffusers/tree/controlnet

    import einops
    import numpy as np
    import torch
    import sys

    from diffusers import StableDiffusionControlNetPipeline

    from PIL import Image

    test_prompt = "best quality, extremely detailed, illustration, looking at viewer"
    test_negative_prompt = "monochrome, lowres, bad anatomy, worst quality, low quality"

    def generate_image(seed, control):
        latent = torch.randn((1,4,64,64), device="cpu", generator=torch.Generator(device="cpu").manual_seed(seed)).cuda()
        image = pipe(
            prompt=test_prompt,
            negative_prompt=test_negative_prompt,
            guidance_scale=9.0,
            num_inference_steps=20,
            latents=latent,
            #generator=torch.Generator(device="cuda").manual_seed(seed),
            image=control,
        ).images[0]
        return image

    if __name__ == '__main__':
        model_name = sys.argv[1]
        control_image_folder = '../huggingface/controlnet_dev/gen_compare/control_images/converted/'
        output_image_folder = '../huggingface/controlnet_dev/gen_compare/output_images/diffusers/'
        model_id = f'../huggingface/control_sd15_{model_name}'

        pipe = StableDiffusionControlNetPipeline.from_pretrained(model_id).to("cuda")
        pipe.enable_attention_slicing(1)

        image_types = {'bird', 'human', 'room', 'vermeer'}

        for image_type in image_types:
            control_image = Image.open(f'{control_image_folder}control_{image_type}_{model_name}.png')
            control = np.array(control_image)[:,:,::-1].copy()
            control = torch.from_numpy(control).float().cuda() / 255.0
            control = torch.stack([control for _ in range(1)], dim=0)
            control = einops.rearrange(control, 'b h w c -> b c h w').clone()

            for seed in range(4):
                image = generate_image(seed=seed, control=control)
                image.save(f'{output_image_folder}output_{image_type}_{model_name}_{seed}.png')
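The control image preprocessing in the script above does three things: it reverses the channel order (`[:, :, ::-1]`), scales pixel values to the range 0 to 1, and rearranges the layout from `h w c` to `b c h w` with a batch of one. The sketch below reproduces those steps on nested Python lists so the effect on a single pixel is easy to follow. It is an illustration only: the function name `preprocess_control` is hypothetical and the real script works on NumPy arrays and PyTorch tensors.

```python
def preprocess_control(pixels_hwc):
    """Mimic the script's control preprocessing on nested lists.

    pixels_hwc: list of rows, each a list of [c0, c1, c2] ints in 0..255.
    Returns a nested list shaped [1][3][H][W] with floats in [0, 1].
    """
    # Reverse the channel order and scale to [0, 1].
    scaled = [
        [[channel / 255.0 for channel in reversed(pixel)] for pixel in row]
        for row in pixels_hwc
    ]
    height, width = len(scaled), len(scaled[0])
    # Rearrange 'h w c -> c h w'.
    chw = [
        [[scaled[y][x][ch] for x in range(width)] for y in range(height)]
        for ch in range(3)
    ]
    # Add a leading batch dimension of size 1.
    return [chw]


# A 1x1 image whose single pixel has channel values (255, 0, 51).
tiny = [[[255, 0, 51]]]
batch = preprocess_control(tiny)
# Channel 0 now holds the original last channel, scaled to [0, 1].
print(batch[0][0][0][0], batch[0][1][0][0], batch[0][2][0][0])
```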

gen_compare/gen_diffusers_image.sh

ADDED

@@ -0,0 +1,9 @@

    #!/bin/bash

    models=("canny" "normal" "depth" "openpose" "hed" "scribble" "mlsd" "seg")

    for model in "${models[@]}"
    do
        echo $model
        python gen_diffusers_image.py $model
    done
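The shell loop above runs the generation script once per model. For readers who prefer to drive it from Python, a rough equivalent can build the same command lines first and run them afterwards. This is a sketch under assumptions: the helper name `build_commands` is hypothetical, and the `subprocess.run` call is shown only in a comment so the example has no side effects.

```python
import sys

# Model names in the same order as the shell array above.
MODELS = ["canny", "normal", "depth", "openpose",
          "hed", "scribble", "mlsd", "seg"]


def build_commands(script, models=MODELS, python=sys.executable):
    """Return one argv list per model, e.g. [python, script, 'canny']."""
    return [[python, script, model] for model in models]


commands = build_commands("gen_diffusers_image.py", python="python")
for argv in commands:
    print(" ".join(argv))
    # To actually run each command, one could use:
    #   import subprocess
    #   subprocess.run(argv, check=True)
```

Passing the arguments as a list avoids the word-splitting issues that unquoted shell variables such as `$model` can cause.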

gen_compare/gen_reference_image.py

ADDED

@@ -0,0 +1,60 @@

    # from https://github.com/lllyasviel/ControlNet/blob/main/gradio_canny2image.py

    from share import *

    import einops
    import numpy as np
    import torch
    from PIL import Image
    import sys

    from pytorch_lightning import seed_everything
    from cldm.model import create_model, load_state_dict
    from ldm.models.diffusion.ddim import DDIMSampler
    from diffusers.utils import load_image

    test_prompt = "best quality, extremely detailed, illustration, looking at viewer"
    test_negative_prompt = "monochrome, lowres, bad anatomy, worst quality, low quality"

    @torch.no_grad()
    def generate(prompt, n_prompt, seed, control, ddim_steps=20, eta=0.0, scale=9.0, H=512, W=512):
        seed_everything(seed)

        cond = {"c_concat": [control], "c_crossattn": [model.get_learned_conditioning([prompt] * num_samples)]}
        un_cond = {"c_concat": [control], "c_crossattn": [model.get_learned_conditioning([n_prompt] * num_samples)]}
        shape = (4, H // 8, W // 8)

        latent = torch.randn((1,) + shape, device="cpu", generator=torch.Generator(device="cpu").manual_seed(seed)).cuda()
        samples, intermediates = ddim_sampler.sample(ddim_steps, num_samples,
                                                     shape, cond, x_T=latent,
                                                     verbose=False, eta=eta,
                                                     unconditional_guidance_scale=scale,
                                                     unconditional_conditioning=un_cond)
        x_samples = model.decode_first_stage(samples)
        x_samples = (einops.rearrange(x_samples, 'b c h w -> b h w c') * 127.5 + 127.5).cpu().numpy().clip(0, 255).astype(np.uint8)

        return Image.fromarray(x_samples[0])

    if __name__ == '__main__':
        model_name = sys.argv[1]
        control_image_folder = '../huggingface/controlnet_dev/gen_compare/control_images/converted/'
        output_image_folder = '../huggingface/controlnet_dev/gen_compare/output_images/ref/'

        num_samples = 1
        model = create_model('./models/cldm_v15.yaml').cpu()
        model.load_state_dict(load_state_dict(f'../huggingface/ControlNet/models/control_sd15_{model_name}.pth', location='cpu'))
        model = model.cuda()
        ddim_sampler = DDIMSampler(model)

        image_types = {'bird', 'human', 'room', 'vermeer'}

        for image_type in image_types:
            control_image = Image.open(f'{control_image_folder}control_{image_type}_{model_name}.png')
            control = np.array(control_image)[:,:,::-1].copy()
            control = torch.from_numpy(control).float().cuda() / 255.0
            control = torch.stack([control for _ in range(num_samples)], dim=0)
            control = einops.rearrange(control, 'b h w c -> b c h w').clone()

            for seed in range(4):
                image = generate(test_prompt, test_negative_prompt, seed=seed, control=control)
                image.save(f'{output_image_folder}output_{image_type}_{model_name}_{seed}.png')
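The last step of `generate` maps decoded samples, nominally in the range -1 to 1, to 8-bit pixel values via `x * 127.5 + 127.5`, clipping to 0..255 and casting to `uint8`. A scalar version of that mapping is sketched below so the edge cases are easy to check. The function name `to_uint8` is hypothetical, and `int()` is used here as a stand-in for the truncating `astype(np.uint8)` cast.

```python
def to_uint8(value):
    """Map a decoded sample value (nominally in [-1, 1]) to an 8-bit pixel.

    Applies x * 127.5 + 127.5, clips to [0, 255], then truncates toward
    zero, which is how the integer cast behaves on non-negative floats.
    """
    scaled = value * 127.5 + 127.5
    clipped = min(255.0, max(0.0, scaled))
    return int(clipped)


for sample in (-3.0, -1.0, 0.0, 1.0, 2.0):
    print(sample, to_uint8(sample))
```

Values outside the nominal range, which a decoder can produce, are clipped rather than wrapped around.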

gen_compare/gen_reference_image.sh

ADDED

@@ -0,0 +1,9 @@

    #!/bin/bash

    models=("canny" "normal" "depth" "openpose" "hed" "scribble" "mlsd" "seg")

    for model in "${models[@]}"
    do
        echo $model
        python gen_reference_image.py $model
    done

- gen_compare/plots/figure_canny.png ADDED (Git LFS)
- gen_compare/plots/figure_depth.png ADDED (Git LFS)
- gen_compare/plots/figure_hed.png ADDED (Git LFS)
- gen_compare/plots/figure_mlsd.png ADDED (Git LFS)
- gen_compare/plots/figure_normal.png ADDED (Git LFS)
- gen_compare/plots/figure_openpose.png ADDED (Git LFS)