Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +8 -0

- .github/ISSUE_TEMPLATE/bug_report.yaml +88 -0

- .github/ISSUE_TEMPLATE/config.yaml +1 -0

- .github/ISSUE_TEMPLATE/feature_request.yaml +78 -0

- .gitignore +11 -0

- 1.jpg +0 -0

- FAQ.md +55 -0

- FAQ_zh.md +52 -0

- LICENSE +53 -0

- NOTICE +52 -0

- README.md +675 -7

- README_CN.md +666 -0

- TUTORIAL.md +221 -0

- TUTORIAL_zh.md +216 -0

- assets/apple.jpeg +3 -0

- assets/apple_r.jpeg +0 -0

- assets/demo.jpeg +0 -0

- assets/demo_highfive.jpg +0 -0

- assets/demo_spotting_caption.jpg +0 -0

- assets/demo_vl.gif +3 -0

- assets/logo.jpg +0 -0

- assets/mm_tutorial/Beijing.jpeg +3 -0

- assets/mm_tutorial/Beijing_Small.jpeg +0 -0

- assets/mm_tutorial/Chongqing.jpeg +3 -0

- assets/mm_tutorial/Chongqing_Small.jpeg +0 -0

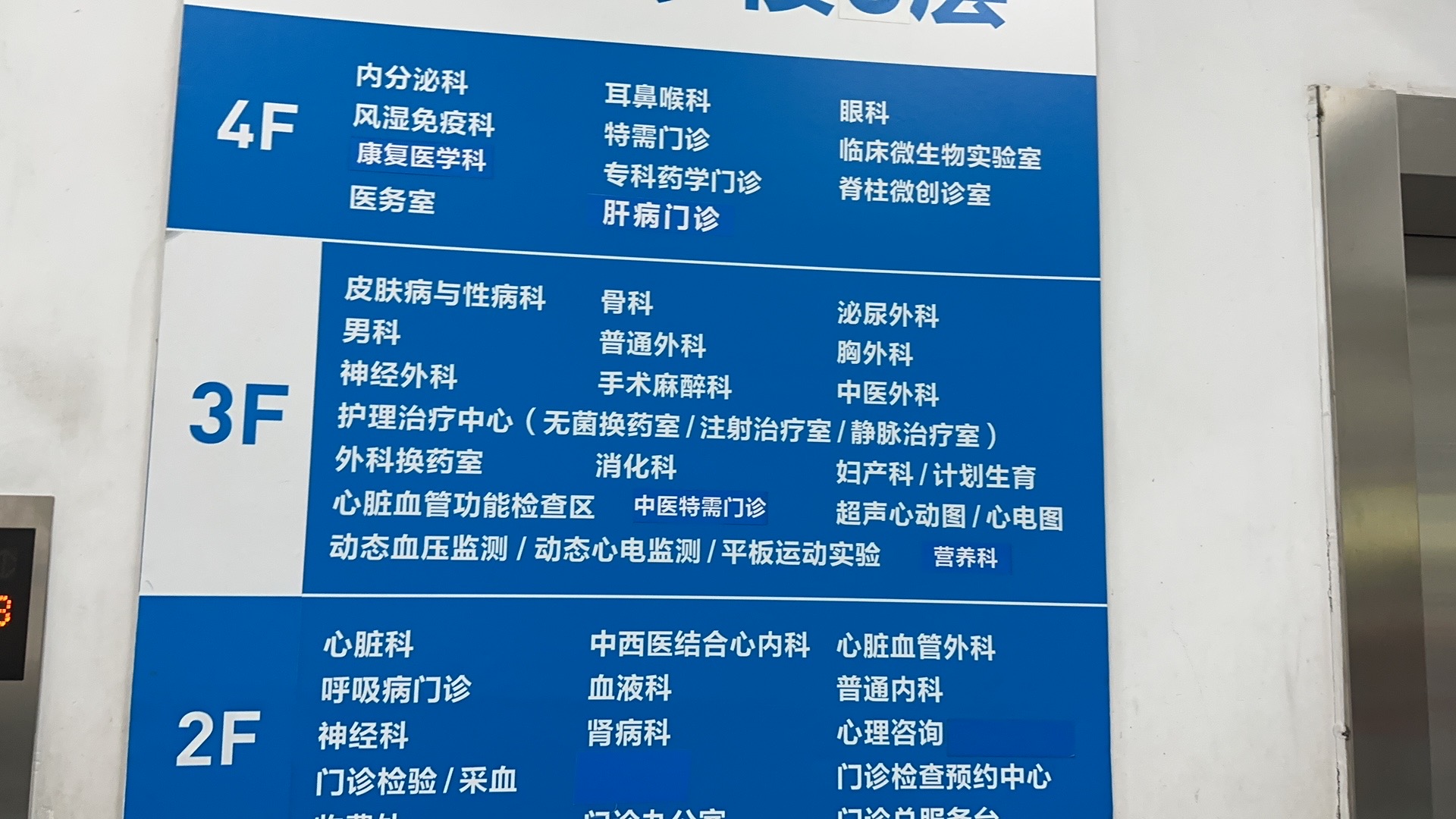

- assets/mm_tutorial/Hospital.jpg +0 -0

- assets/mm_tutorial/Hospital_Small.jpg +0 -0

- assets/mm_tutorial/Menu.jpeg +0 -0

- assets/mm_tutorial/Rebecca_(1939_poster).jpeg +3 -0

- assets/mm_tutorial/Rebecca_(1939_poster)_Small.jpeg +0 -0

- assets/mm_tutorial/Shanghai.jpg +0 -0

- assets/mm_tutorial/Shanghai_Output.jpg +3 -0

- assets/mm_tutorial/Shanghai_Output_Small.jpeg +0 -0

- assets/mm_tutorial/Shanghai_Small.jpeg +0 -0

- assets/mm_tutorial/TUTORIAL.ipynb +0 -0

- assets/qwenvl.jpeg +0 -0

- assets/radar.png +0 -0

- assets/touchstone_datasets.jpg +3 -0

- assets/touchstone_eval.png +0 -0

- assets/touchstone_logo.png +3 -0

- eval_mm/EVALUATION.md +1 -0

- eval_mm/evaluate_caption.py +193 -0

- eval_mm/evaluate_grounding.py +213 -0

- eval_mm/evaluate_multiple_choice.py +184 -0

- eval_mm/evaluate_vizwiz_testdev.py +167 -0

- eval_mm/evaluate_vqa.py +357 -0

- eval_mm/vqa.py +206 -0

- eval_mm/vqa_eval.py +330 -0

- requirements.txt +10 -0

- requirements_web_demo.txt +1 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,11 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

assets/apple.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

assets/demo_vl.gif filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

assets/mm_tutorial/Beijing.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

assets/mm_tutorial/Chongqing.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

assets/mm_tutorial/Rebecca_(1939_poster).jpeg filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

assets/mm_tutorial/Shanghai_Output.jpg filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

assets/touchstone_datasets.jpg filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

assets/touchstone_logo.png filter=lfs diff=lfs merge=lfs -text

|

.github/ISSUE_TEMPLATE/bug_report.yaml

ADDED

|

@@ -0,0 +1,88 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: 🐞 Bug

|

| 2 |

+

description: 提交错误报告 | File a bug/issue

|

| 3 |

+

title: "[BUG] <title>"

|

| 4 |

+

labels: []

|

| 5 |

+

body:

|

| 6 |

+

- type: checkboxes

|

| 7 |

+

attributes:

|

| 8 |

+

label: 是否已有关于该错误的issue或讨论? | Is there an existing issue / discussion for this?

|

| 9 |

+

description: |

|

| 10 |

+

请先搜索您遇到的错误是否在已有的issues或讨论中提到过。

|

| 11 |

+

Please search to see if an issue / discussion already exists for the bug you encountered.

|

| 12 |

+

[Issues](https://github.com/QwenLM/Qwen-7B/issues)

|

| 13 |

+

[Discussions](https://github.com/QwenLM/Qwen-7B/discussions)

|

| 14 |

+

options:

|

| 15 |

+

- label: 我已经搜索过已有的issues和讨论 | I have searched the existing issues / discussions

|

| 16 |

+

required: true

|

| 17 |

+

- type: checkboxes

|

| 18 |

+

attributes:

|

| 19 |

+

label: 该问题是否在FAQ中有解答? | Is there an existing answer for this in FAQ?

|

| 20 |

+

description: |

|

| 21 |

+

请先搜索您遇到的错误是否已在FAQ中有相关解答。

|

| 22 |

+

Please search to see if an answer already exists in FAQ for the bug you encountered.

|

| 23 |

+

[FAQ-en](https://github.com/QwenLM/Qwen-7B/blob/main/FAQ.md)

|

| 24 |

+

[FAQ-zh](https://github.com/QwenLM/Qwen-7B/blob/main/FAQ_zh.md)

|

| 25 |

+

options:

|

| 26 |

+

- label: 我已经搜索过FAQ | I have searched FAQ

|

| 27 |

+

required: true

|

| 28 |

+

- type: textarea

|

| 29 |

+

attributes:

|

| 30 |

+

label: 当前行为 | Current Behavior

|

| 31 |

+

description: |

|

| 32 |

+

准确描述遇到的行为。

|

| 33 |

+

A concise description of what you're experiencing.

|

| 34 |

+

validations:

|

| 35 |

+

required: false

|

| 36 |

+

- type: textarea

|

| 37 |

+

attributes:

|

| 38 |

+

label: 期望行为 | Expected Behavior

|

| 39 |

+

description: |

|

| 40 |

+

准确描述预期的行为。

|

| 41 |

+

A concise description of what you expected to happen.

|

| 42 |

+

validations:

|

| 43 |

+

required: false

|

| 44 |

+

- type: textarea

|

| 45 |

+

attributes:

|

| 46 |

+

label: 复现方法 | Steps To Reproduce

|

| 47 |

+

description: |

|

| 48 |

+

复现当前行为的详细步骤。

|

| 49 |

+

Steps to reproduce the behavior.

|

| 50 |

+

placeholder: |

|

| 51 |

+

1. In this environment...

|

| 52 |

+

2. With this config...

|

| 53 |

+

3. Run '...'

|

| 54 |

+

4. See error...

|

| 55 |

+

validations:

|

| 56 |

+

required: false

|

| 57 |

+

- type: textarea

|

| 58 |

+

attributes:

|

| 59 |

+

label: 运行环境 | Environment

|

| 60 |

+

description: |

|

| 61 |

+

examples:

|

| 62 |

+

- **OS**: Ubuntu 20.04

|

| 63 |

+

- **Python**: 3.8

|

| 64 |

+

- **Transformers**: 4.31.0

|

| 65 |

+

- **PyTorch**: 2.0.1

|

| 66 |

+

- **CUDA**: 11.4

|

| 67 |

+

value: |

|

| 68 |

+

- OS:

|

| 69 |

+

- Python:

|

| 70 |

+

- Transformers:

|

| 71 |

+

- PyTorch:

|

| 72 |

+

- CUDA (`python -c 'import torch; print(torch.version.cuda)'`):

|

| 73 |

+

render: Markdown

|

| 74 |

+

validations:

|

| 75 |

+

required: false

|

| 76 |

+

- type: textarea

|

| 77 |

+

attributes:

|

| 78 |

+

label: 备注 | Anything else?

|

| 79 |

+

description: |

|

| 80 |

+

您可以在这里补充其他关于该问题背景信息的描述、链接或引用等。

|

| 81 |

+

|

| 82 |

+

您可以通过点击高亮此区域然后拖动文件的方式上传图片或日志文件。

|

| 83 |

+

|

| 84 |

+

Links? References? Anything that will give us more context about the issue you are encountering!

|

| 85 |

+

|

| 86 |

+

Tip: You can attach images or log files by clicking this area to highlight it and then dragging files in.

|

| 87 |

+

validations:

|

| 88 |

+

required: false

|

.github/ISSUE_TEMPLATE/config.yaml

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

blank_issues_enabled: true

|

.github/ISSUE_TEMPLATE/feature_request.yaml

ADDED

|

@@ -0,0 +1,78 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: "💡 Feature Request"

|

| 2 |

+

description: 创建新功能请求 | Create a new ticket for a new feature request

|

| 3 |

+

title: "💡 [REQUEST] - <title>"

|

| 4 |

+

labels: [

|

| 5 |

+

"question"

|

| 6 |

+

]

|

| 7 |

+

body:

|

| 8 |

+

- type: input

|

| 9 |

+

id: start_date

|

| 10 |

+

attributes:

|

| 11 |

+

label: "起始日期 | Start Date"

|

| 12 |

+

description: |

|

| 13 |

+

起始开发日期

|

| 14 |

+

Start of development

|

| 15 |

+

placeholder: "month/day/year"

|

| 16 |

+

validations:

|

| 17 |

+

required: false

|

| 18 |

+

- type: textarea

|

| 19 |

+

id: implementation_pr

|

| 20 |

+

attributes:

|

| 21 |

+

label: "实现PR | Implementation PR"

|

| 22 |

+

description: |

|

| 23 |

+

实现该功能的Pull request

|

| 24 |

+

Pull request used

|

| 25 |

+

placeholder: "#Pull Request ID"

|

| 26 |

+

validations:

|

| 27 |

+

required: false

|

| 28 |

+

- type: textarea

|

| 29 |

+

id: reference_issues

|

| 30 |

+

attributes:

|

| 31 |

+

label: "相关Issues | Reference Issues"

|

| 32 |

+

description: |

|

| 33 |

+

与该功能相关的issues

|

| 34 |

+

Common issues

|

| 35 |

+

placeholder: "#Issues IDs"

|

| 36 |

+

validations:

|

| 37 |

+

required: false

|

| 38 |

+

- type: textarea

|

| 39 |

+

id: summary

|

| 40 |

+

attributes:

|

| 41 |

+

label: "摘要 | Summary"

|

| 42 |

+

description: |

|

| 43 |

+

简要描述新功能的特点

|

| 44 |

+

Provide a brief explanation of the feature

|

| 45 |

+

placeholder: |

|

| 46 |

+

Describe in a few lines your feature request

|

| 47 |

+

validations:

|

| 48 |

+

required: true

|

| 49 |

+

- type: textarea

|

| 50 |

+

id: basic_example

|

| 51 |

+

attributes:

|

| 52 |

+

label: "基本示例 | Basic Example"

|

| 53 |

+

description: Indicate here some basic examples of your feature.

|

| 54 |

+

placeholder: A few specific words about your feature request.

|

| 55 |

+

validations:

|

| 56 |

+

required: true

|

| 57 |

+

- type: textarea

|

| 58 |

+

id: drawbacks

|

| 59 |

+

attributes:

|

| 60 |

+

label: "缺陷 | Drawbacks"

|

| 61 |

+

description: |

|

| 62 |

+

该新功能有哪些缺陷/可能造成哪些影响?

|

| 63 |

+

What are the drawbacks/impacts of your feature request ?

|

| 64 |

+

placeholder: |

|

| 65 |

+

Identify the drawbacks and impacts while being neutral on your feature request

|

| 66 |

+

validations:

|

| 67 |

+

required: true

|

| 68 |

+

- type: textarea

|

| 69 |

+

id: unresolved_question

|

| 70 |

+

attributes:

|

| 71 |

+

label: "未解决问题 | Unresolved questions"

|

| 72 |

+

description: |

|

| 73 |

+

有哪些尚未解决的问题?

|

| 74 |

+

What questions still remain unresolved ?

|

| 75 |

+

placeholder: |

|

| 76 |

+

Identify any unresolved issues.

|

| 77 |

+

validations:

|

| 78 |

+

required: false

|

.gitignore

ADDED

|

@@ -0,0 +1,11 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

__pycache__

|

| 2 |

+

*.so

|

| 3 |

+

build

|

| 4 |

+

.coverage_*

|

| 5 |

+

*.egg-info

|

| 6 |

+

*~

|

| 7 |

+

.vscode/

|

| 8 |

+

.idea/

|

| 9 |

+

.DS_Store

|

| 10 |

+

|

| 11 |

+

/private/

|

1.jpg

ADDED

|

FAQ.md

ADDED

|

@@ -0,0 +1,55 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# FAQ

|

| 2 |

+

|

| 3 |

+

## Installation & Environment

|

| 4 |

+

|

| 5 |

+

#### Which version of transformers should I use?

|

| 6 |

+

|

| 7 |

+

4.31.0 is preferred.

|

| 8 |

+

|

| 9 |

+

#### I downloaded the codes and checkpoints but I can't load the model locally. What should I do?

|

| 10 |

+

|

| 11 |

+

Please check if you have updated the code to the latest, and correctly downloaded all the sharded checkpoint files.

|

| 12 |

+

|

| 13 |

+

#### `qwen.tiktoken` is not found. What is it?

|

| 14 |

+

|

| 15 |

+

This is the merge file of the tokenizer. You have to download it. Note that if you just git clone the repo without [git-lfs](https://git-lfs.com), you cannot download this file.

|

| 16 |

+

|

| 17 |

+

#### transformers_stream_generator/tiktoken/accelerate not found

|

| 18 |

+

|

| 19 |

+

Run the command `pip install -r requirements.txt`. You can find the file at [https://github.com/QwenLM/Qwen-VL/blob/main/requirements.txt](https://github.com/QwenLM/Qwen-VL/blob/main/requirements.txt).

|

| 20 |

+

<br><br>

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

## Demo & Inference

|

| 25 |

+

|

| 26 |

+

#### Is there any demo?

|

| 27 |

+

|

| 28 |

+

Yes, see `web_demo_mm.py` for web demo. See README for more information.

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

#### Can Qwen-VL support streaming?

|

| 33 |

+

|

| 34 |

+

No. We do not support streaming yet.

|

| 35 |

+

|

| 36 |

+

#### It seems that the generation is not related to the instruction...

|

| 37 |

+

|

| 38 |

+

Please check if you are loading Qwen-VL-Chat instead of Qwen-VL. Qwen-VL is the base model without alignment, which behaves differently from the SFT/Chat model.

|

| 39 |

+

|

| 40 |

+

#### Is quantization supported?

|

| 41 |

+

|

| 42 |

+

No. We would support quantization asap.

|

| 43 |

+

|

| 44 |

+

#### Unsatisfactory performance in processing long sequences

|

| 45 |

+

|

| 46 |

+

Please ensure that NTK is applied. `use_dynamc_ntk` and `use_logn_attn` in `config.json` should be set to `true` (`true` by default).

|

| 47 |

+

<br><br>

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

## Tokenizer

|

| 51 |

+

|

| 52 |

+

#### bos_id/eos_id/pad_id not found

|

| 53 |

+

|

| 54 |

+

In our training, we only use `<|endoftext|>` as the separator and padding token. You can set bos_id, eos_id, and pad_id to tokenizer.eod_id. Learn more about our tokenizer from our documents about the tokenizer.

|

| 55 |

+

|

FAQ_zh.md

ADDED

|

@@ -0,0 +1,52 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# FAQ

|

| 2 |

+

|

| 3 |

+

## 安装&环境

|

| 4 |

+

|

| 5 |

+

#### 我应该用哪个transformers版本?

|

| 6 |

+

|

| 7 |

+

建议使用4.31.0。

|

| 8 |

+

|

| 9 |

+

#### 我把模型和代码下到本地,按照教程无法使用,该怎么办?

|

| 10 |

+

|

| 11 |

+

答:别着急,先检查你的代码是不是更新到最新版本,然后确认你是否完整地将模型checkpoint下到本地。

|

| 12 |

+

|

| 13 |

+

#### `qwen.tiktoken`这个文件找不到,怎么办?

|

| 14 |

+

|

| 15 |

+

这个是我们的tokenizer的merge文件,你必须下载它才能使用我们的tokenizer。注意,如果你使用git clone却没有使用git-lfs,这个文件不会被下载。如果你不了解git-lfs,可点击[官网](https://git-lfs.com/)了解。

|

| 16 |

+

|

| 17 |

+

#### transformers_stream_generator/tiktoken/accelerate,这几个库提示找不到,怎么办?

|

| 18 |

+

|

| 19 |

+

运行如下命令:`pip install -r requirements.txt`。相关依赖库在[https://github.com/QwenLM/Qwen-VL/blob/main/requirements.txt](https://github.com/QwenLM/Qwen-VL/blob/main/requirements.txt) 可以找到。

|

| 20 |

+

<br><br>

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

## Demo & 推理

|

| 24 |

+

|

| 25 |

+

#### 是否提供Demo?

|

| 26 |

+

|

| 27 |

+

`web_demo_mm.py`提供了Web UI。请查看README相关内容了解更多。

|

| 28 |

+

|

| 29 |

+

#### Qwen-VL支持流式推理吗?

|

| 30 |

+

|

| 31 |

+

Qwen-VL当前不支持流式推理。

|

| 32 |

+

|

| 33 |

+

#### 模型的输出看起来与输入无关/没有遵循指令/看起来呆呆的

|

| 34 |

+

|

| 35 |

+

请检查是否加载的是Qwen-VL-Chat模型进行推理,Qwen-VL模型是未经align的预训练基模型,不期望具备响应用户指令的能力。我们在模型最新版本已经对`chat`接口内进行了检查,避免您误将预训练模型作为SFT/Chat模型使用。

|

| 36 |

+

|

| 37 |

+

#### 是否有量化版本模型

|

| 38 |

+

|

| 39 |

+

目前Qwen-VL不支持量化,后续我们将支持高效的量化推理实现。

|

| 40 |

+

|

| 41 |

+

#### 处理长序列时效果有问题

|

| 42 |

+

|

| 43 |

+

请确认是否开启ntk。若要启用这些技巧,请将`config.json`里的`use_dynamc_ntk`和`use_logn_attn`设置为`true`。最新代码默认为`true`。

|

| 44 |

+

<br><br>

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

## Tokenizer

|

| 48 |

+

|

| 49 |

+

#### bos_id/eos_id/pad_id,这些token id不存在,为什么?

|

| 50 |

+

|

| 51 |

+

在训练过程中,我们仅使用<|endoftext|>这一token作为sample/document之间的分隔符及padding位置占位符,你可以将bos_id, eos_id, pad_id均指向tokenizer.eod_id。请阅读我们关于tokenizer的文档,了解如何设置这些id。

|

| 52 |

+

|

LICENSE

ADDED

|

@@ -0,0 +1,53 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Tongyi Qianwen LICENSE AGREEMENT

|

| 2 |

+

|

| 3 |

+

Tongyi Qianwen Release Date: August 23, 2023

|

| 4 |

+

|

| 5 |

+

By clicking to agree or by using or distributing any portion or element of the Tongyi Qianwen Materials, you will be deemed to have recognized and accepted the content of this Agreement, which is effective immediately.

|

| 6 |

+

|

| 7 |

+

1. Definitions

|

| 8 |

+

a. This Tongyi Qianwen LICENSE AGREEMENT (this "Agreement") shall mean the terms and conditions for use, reproduction, distribution and modification of the Materials as defined by this Agreement.

|

| 9 |

+

b. "We"(or "Us") shall mean Alibaba Cloud.

|

| 10 |

+

c. "You" (or "Your") shall mean a natural person or legal entity exercising the rights granted by this Agreement and/or using the Materials for any purpose and in any field of use.

|

| 11 |

+

d. "Third Parties" shall mean individuals or legal entities that are not under common control with Us or You.

|

| 12 |

+

e. "Tongyi Qianwen" shall mean the large language models (including Qwen-VL model and Qwen-VL-Chat model), and software and algorithms, consisting of trained model weights, parameters (including optimizer states), machine-learning model code, inference-enabling code, training-enabling code, fine-tuning enabling code and other elements of the foregoing distributed by Us.

|

| 13 |

+

f. "Materials" shall mean, collectively, Alibaba Cloud's proprietary Tongyi Qianwen and Documentation (and any portion thereof) made available under this Agreement.

|

| 14 |

+

g. "Source" form shall mean the preferred form for making modifications, including but not limited to model source code, documentation source, and configuration files.

|

| 15 |

+

h. "Object" form shall mean any form resulting from mechanical transformation or translation of a Source form, including but not limited to compiled object code, generated documentation,

|

| 16 |

+

and conversions to other media types.

|

| 17 |

+

|

| 18 |

+

2. Grant of Rights

|

| 19 |

+

You are granted a non-exclusive, worldwide, non-transferable and royalty-free limited license under Alibaba Cloud's intellectual property or other rights owned by Us embodied in the Materials to use, reproduce, distribute, copy, create derivative works of, and make modifications to the Materials.

|

| 20 |

+

|

| 21 |

+

3. Redistribution

|

| 22 |

+

You may reproduce and distribute copies of the Materials or derivative works thereof in any medium, with or without modifications, and in Source or Object form, provided that You meet the following conditions:

|

| 23 |

+

a. You shall give any other recipients of the Materials or derivative works a copy of this Agreement;

|

| 24 |

+

b. You shall cause any modified files to carry prominent notices stating that You changed the files;

|

| 25 |

+

c. You shall retain in all copies of the Materials that You distribute the following attribution notices within a "Notice" text file distributed as a part of such copies: "Tongyi Qianwen is licensed under the Tongyi Qianwen LICENSE AGREEMENT, Copyright (c) Alibaba Cloud. All Rights Reserved."; and

|

| 26 |

+

d. You may add Your own copyright statement to Your modifications and may provide additional or different license terms and conditions for use, reproduction, or distribution of Your modifications, or for any such derivative works as a whole, provided Your use, reproduction, and distribution of the work otherwise complies with the terms and conditions of this Agreement.

|

| 27 |

+

|

| 28 |

+

4. Restrictions

|

| 29 |

+

If you are commercially using the Materials, and your product or service has more than 100 million monthly active users, You shall request a license from Us. You cannot exercise your rights under this Agreement without our express authorization.

|

| 30 |

+

|

| 31 |

+

5. Rules of use

|

| 32 |

+

a. The Materials may be subject to export controls or restrictions in China, the United States or other countries or regions. You shall comply with applicable laws and regulations in your use of the Materials.

|

| 33 |

+

b. You can not use the Materials or any output therefrom to improve any other large language model (excluding Tongyi Qianwen or derivative works thereof).

|

| 34 |

+

|

| 35 |

+

6. Intellectual Property

|

| 36 |

+

a. We retain ownership of all intellectual property rights in and to the Materials and derivatives made by or for Us. Conditioned upon compliance with the terms and conditions of this Agreement, with respect to any derivative works and modifications of the Materials that are made by you, you are and will be the owner of such derivative works and modifications.

|

| 37 |

+

b. No trademark license is granted to use the trade names, trademarks, service marks, or product names of Us, except as required to fulfill notice requirements under this Agreement or as required for reasonable and customary use in describing and redistributing the Materials.

|

| 38 |

+

c. If you commence a lawsuit or other proceedings (including a cross-claim or counterclaim in a lawsuit) against Us or any entity alleging that the Materials or any output therefrom, or any part of the foregoing, infringe any intellectual property or other right owned or licensable by you, then all licences granted to you under this Agreement shall terminate as of the date such lawsuit or other proceeding is commenced or brought.

|

| 39 |

+

|

| 40 |

+

7. Disclaimer of Warranty and Limitation of Liability

|

| 41 |

+

|

| 42 |

+

a. We are not obligated to support, update, provide training for, or develop any further version of the Tongyi Qianwen Materials or to grant any license thereto.

|

| 43 |

+

b. THE MATERIALS ARE PROVIDED "AS IS" WITHOUT ANY EXPRESS OR IMPLIED WARRANTY OF ANY KIND INCLUDING WARRANTIES OF MERCHANTABILITY, NONINFRINGEMENT, OR FITNESS FOR A PARTICULAR PURPOSE. WE MAKE NO WARRANTY AND ASSUME NO RESPONSIBILITY FOR THE SAFETY OR STABILITY OF THE MATERIALS AND ANY OUTPUT THEREFROM.

|

| 44 |

+

c. IN NO EVENT SHALL WE BE LIABLE TO YOU FOR ANY DAMAGES, INCLUDING, BUT NOT LIMITED TO ANY DIRECT, OR INDIRECT, SPECIAL OR CONSEQUENTIAL DAMAGES ARISING FROM YOUR USE OR INABILITY TO USE THE MATERIALS OR ANY OUTPUT OF IT, NO MATTER HOW IT’S CAUSED.

|

| 45 |

+

d. You will defend, indemnify and hold harmless Us from and against any claim by any third party arising out of or related to your use or distribution of the Materials.

|

| 46 |

+

|

| 47 |

+

8. Survival and Termination.

|

| 48 |

+

a. The term of this Agreement shall commence upon your acceptance of this Agreement or access to the Materials and will continue in full force and effect until terminated in accordance with the terms and conditions herein.

|

| 49 |

+

b. We may terminate this Agreement if you breach any of the terms or conditions of this Agreement. Upon termination of this Agreement, you must delete and cease use of the Materials. Sections 7 and 9 shall survive the termination of this Agreement.

|

| 50 |

+

|

| 51 |

+

9. Governing Law and Jurisdiction.

|

| 52 |

+

a. This Agreement and any dispute arising out of or relating to it will be governed by the laws of China, without regard to conflict of law principles, and the UN Convention on Contracts for the International Sale of Goods does not apply to this Agreement.

|

| 53 |

+

b. The People's Courts in Hangzhou City shall have exclusive jurisdiction over any dispute arising out of this Agreement.

|

NOTICE

ADDED

|

@@ -0,0 +1,52 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

------------- LICENSE FOR NVIDIA Megatron-LM code --------------

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2022, NVIDIA CORPORATION. All rights reserved.

|

| 4 |

+

|

| 5 |

+

Redistribution and use in source and binary forms, with or without

|

| 6 |

+

modification, are permitted provided that the following conditions

|

| 7 |

+

are met:

|

| 8 |

+

* Redistributions of source code must retain the above copyright

|

| 9 |

+

notice, this list of conditions and the following disclaimer.

|

| 10 |

+

* Redistributions in binary form must reproduce the above copyright

|

| 11 |

+

notice, this list of conditions and the following disclaimer in the

|

| 12 |

+

documentation and/or other materials provided with the distribution.

|

| 13 |

+

* Neither the name of NVIDIA CORPORATION nor the names of its

|

| 14 |

+

contributors may be used to endorse or promote products derived

|

| 15 |

+

from this software without specific prior written permission.

|

| 16 |

+

|

| 17 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS ``AS IS'' AND ANY

|

| 18 |

+

EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

| 19 |

+

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR

|

| 20 |

+

PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT OWNER OR

|

| 21 |

+

CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL,

|

| 22 |

+

EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO,

|

| 23 |

+

PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR

|

| 24 |

+

PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY

|

| 25 |

+

OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

|

| 26 |

+

(INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

|

| 27 |

+

OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

------------- LICENSE FOR OpenAI tiktoken code --------------

|

| 31 |

+

|

| 32 |

+

MIT License

|

| 33 |

+

|

| 34 |

+

Copyright (c) 2022 OpenAI, Shantanu Jain

|

| 35 |

+

|

| 36 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 37 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 38 |

+

in the Software without restriction, including without limitation the rights

|

| 39 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 40 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 41 |

+

furnished to do so, subject to the following conditions:

|

| 42 |

+

|

| 43 |

+

The above copyright notice and this permission notice shall be included in all

|

| 44 |

+

copies or substantial portions of the Software.

|

| 45 |

+

|

| 46 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 47 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 48 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 49 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 50 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 51 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 52 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,12 +1,680 @@

|

|

| 1 |

---

|

| 2 |

-

title: Qwen

|

| 3 |

-

|

| 4 |

-

colorFrom: yellow

|

| 5 |

-

colorTo: yellow

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 3.40.1

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 11 |

|

| 12 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

| 1 |

---

|

| 2 |

+

title: Qwen-VL

|

| 3 |

+

app_file: web_demo_mm.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

sdk_version: 3.40.1

|

|

|

|

|

|

|

| 6 |

---

|

| 7 |

+

<br>

|

| 8 |

+

|

| 9 |

+

<p align="center">

|

| 10 |

+

<img src="assets/logo.jpg" width="400"/>

|

| 11 |

+

<p>

|

| 12 |

+

<br>

|

| 13 |

+

|

| 14 |

+

<p align="center">

|

| 15 |

+

Qwen-VL <a href="https://modelscope.cn/models/qwen/Qwen-VL/summary">🤖 <a> | <a href="https://huggingface.co/Qwen/Qwen-VL">🤗</a>  | Qwen-VL-Chat <a href="https://modelscope.cn/models/qwen/Qwen-VL-Chat/summary">🤖 <a>| <a href="https://huggingface.co/Qwen/Qwen-VL-Chat">🤗</a>  |  <a href="https://modelscope.cn/studios/qwen/Qwen-VL-Chat-Demo/summary">Demo</a>  |  <a>Report</a>   |   <a href="https://discord.gg/z3GAxXZ9Ce">Discord</a>

|

| 16 |

+

|

| 17 |

+

</p>

|

| 18 |

+

<br>

|

| 19 |

+

|

| 20 |

+

<p align="center">

|

| 21 |

+

<a href="README_CN.md">中文</a>  |   English

|

| 22 |

+

</p>

|

| 23 |

+

<br><br>

|

| 24 |

+

|

| 25 |

+

**Qwen-VL** (Qwen Large Vision Language Model) is the multimodal version of the large model series, Qwen (abbr. Tongyi Qianwen), proposed by Alibaba Cloud. Qwen-VL accepts image, text, and bounding box as inputs, outputs text and bounding box. The features of Qwen-VL include:

|

| 26 |

+

- **Strong performance**: It significantly surpasses existing open-source Large Vision Language Models (LVLM) under similar model scale on multiple English evaluation benchmarks (including Zero-shot Captioning, VQA, DocVQA, and Grounding).

|

| 27 |

+

- **Multi-lingual LVLM supporting text recognition**: Qwen-VL naturally supports English, Chinese, and multi-lingual conversation, and it promotes end-to-end recognition of Chinese and English bi-lingual text in images.

|

| 28 |

+

- **Multi-image interleaved conversations**: This feature allows for the input and comparison of multiple images, as well as the ability to specify questions related to the images and engage in multi-image storytelling.

|

| 29 |

+

- **First generalist model supporting grounding in Chinese**: Detecting bounding boxes through open-domain language expression in both Chinese and English.

|

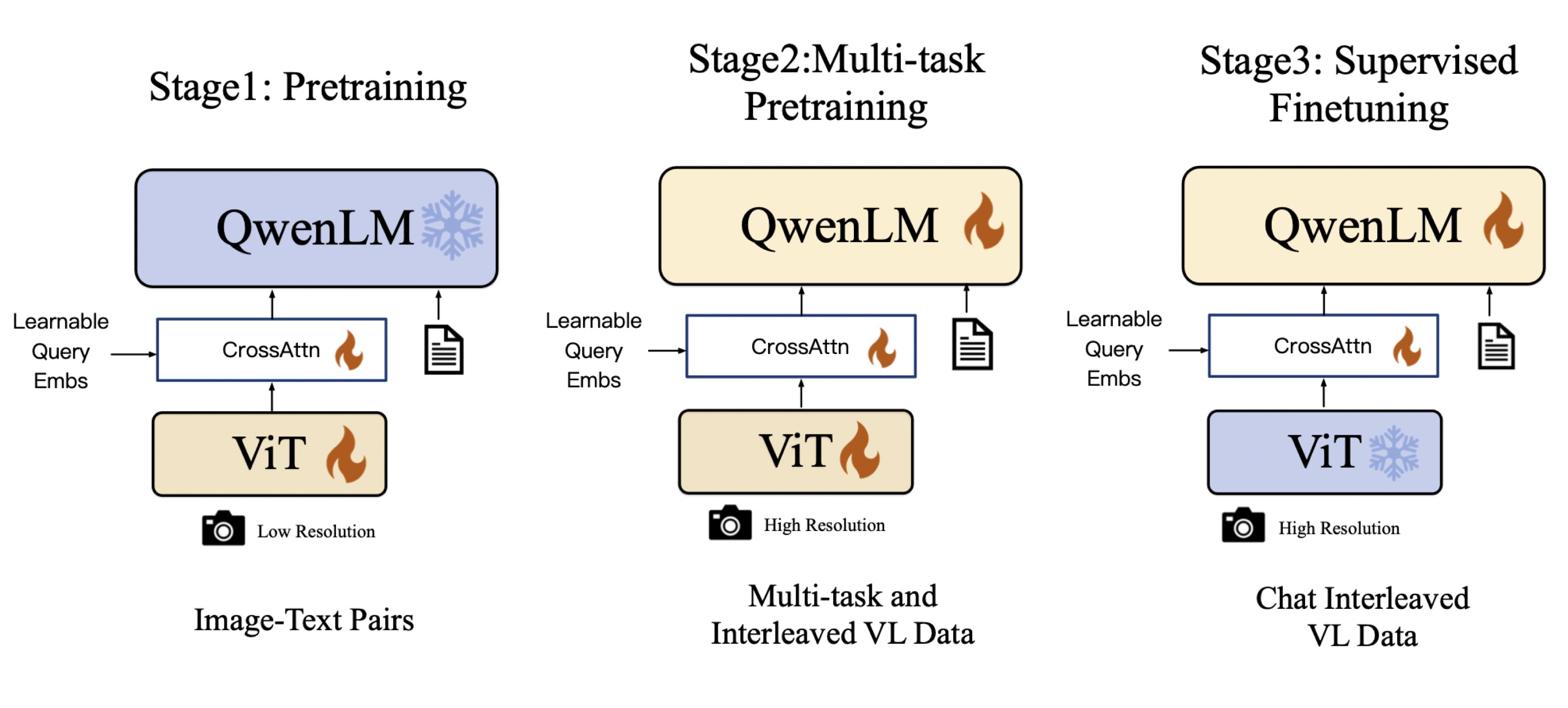

| 30 |

+

- **Fine-grained recognition and understanding**: Compared to the 224\*224 resolution currently used by other open-source LVLM, the 448\*448 resolution promotes fine-grained text recognition, document QA, and bounding box annotation.

|

| 31 |

+

|

| 32 |

+

<br>

|

| 33 |

+

<p align="center">

|

| 34 |

+

<img src="assets/demo_vl.gif" width="400"/>

|

| 35 |

+

<p>

|

| 36 |

+

<br>

|

| 37 |

+

|

| 38 |

+

We release two models of the Qwen-VL series:

|

| 39 |

+

- Qwen-VL: The pre-trained LVLM model uses Qwen-7B as the initialization of the LLM, and [Openclip ViT-bigG](https://github.com/mlfoundations/open_clip) as the initialization of the visual encoder. And connects them with a randomly initialized cross-attention layer.

|

| 40 |

+

- Qwen-VL-Chat: A multimodal LLM-based AI assistant, which is trained with alignment techniques. Qwen-VL-Chat supports more flexible interaction, such as multiple image inputs, multi-round question answering, and creative capabilities.

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

## Evaluation

|

| 44 |

+

|

| 45 |

+

We evaluated the model's abilities from two perspectives:

|

| 46 |

+

1. **Standard Benchmarks**: We evaluate the model's basic task capabilities on four major categories of multimodal tasks:

|

| 47 |

+

- Zero-shot Captioning: Evaluate model's zero-shot image captioning ability on unseen datasets;

|

| 48 |

+

- General VQA: Evaluate the general question-answering ability of pictures, such as the judgment, color, number, category, etc;

|

| 49 |

+

- Text-based VQA: Evaluate the model's ability to recognize text in pictures, such as document QA, chart QA, etc;

|

| 50 |

+

- Referring Expression Comprehension: Evaluate the ability to localize a target object in an image described by a referring expression.

|

| 51 |

+

|

| 52 |

+

2. **TouchStone**: To evaluate the overall text-image dialogue capability and alignment level with humans, we have constructed a benchmark called TouchStone, which is based on scoring with GPT4 to evaluate the LVLM model.

|

| 53 |

+

- The TouchStone benchmark covers a total of 300+ images, 800+ questions, and 27 categories. Such as attribute-based Q&A, celebrity recognition, writing poetry, summarizing multiple images, product comparison, math problem solving, etc;

|

| 54 |

+

- In order to break the current limitation of GPT4 in terms of direct image input, TouchStone provides fine-grained image annotations by human labeling. These detailed annotations, along with the questions and the model's output, are then presented to GPT4 for scoring.

|

| 55 |

+

- The benchmark includes both English and Chinese versions.

|

| 56 |

+

|

| 57 |

+

The results of the evaluation are as follows:

|

| 58 |

+

|

| 59 |

+

Qwen-VL outperforms current SOTA generalist models on multiple VL tasks and has a more comprehensive coverage in terms of capability range.

|

| 60 |

+

|

| 61 |

+

<p align="center">

|

| 62 |

+

<img src="assets/radar.png" width="600"/>

|

| 63 |

+

<p>

|

| 64 |

+

|

| 65 |

+

### Zero-shot Captioning & General VQA

|

| 66 |

+

<table>

|

| 67 |

+

<thead>

|

| 68 |

+

<tr>

|

| 69 |

+

<th rowspan="2">Model type</th>

|

| 70 |

+

<th rowspan="2">Model</th>

|

| 71 |

+

<th colspan="2">Zero-shot Captioning</th>

|

| 72 |

+

<th colspan="5">General VQA</th>

|

| 73 |

+

</tr>

|

| 74 |

+

<tr>

|

| 75 |

+

<th>NoCaps</th>

|

| 76 |

+

<th>Flickr30K</th>

|

| 77 |

+

<th>VQAv2<sup>dev</sup></th>

|

| 78 |

+

<th>OK-VQA</th>

|

| 79 |

+

<th>GQA</th>

|

| 80 |

+

<th>SciQA-Img<br>(0-shot)</th>

|

| 81 |

+

<th>VizWiz<br>(0-shot)</th>

|

| 82 |

+

</tr>

|

| 83 |

+

</thead>

|

| 84 |

+

<tbody align="center">

|

| 85 |

+

<tr>

|

| 86 |

+

<td rowspan="10">Generalist<br>Models</td>

|

| 87 |

+

<td>Flamingo-9B</td>

|

| 88 |

+

<td>-</td>

|

| 89 |

+

<td>61.5</td>

|

| 90 |

+

<td>51.8</td>

|

| 91 |

+

<td>44.7</td>

|

| 92 |

+

<td>-</td>

|

| 93 |

+

<td>-</td>

|

| 94 |

+

<td>28.8</td>

|

| 95 |

+

</tr>

|

| 96 |

+

<tr>

|

| 97 |

+

<td>Flamingo-80B</td>

|

| 98 |

+

<td>-</td>

|

| 99 |

+

<td>67.2</td>

|

| 100 |

+

<td>56.3</td>

|

| 101 |

+

<td>50.6</td>

|

| 102 |

+

<td>-</td>

|

| 103 |

+

<td>-</td>

|

| 104 |

+

<td>31.6</td>

|

| 105 |

+

</tr>

|

| 106 |

+

<tr>

|

| 107 |

+

<td>Unified-IO-XL</td>

|

| 108 |

+

<td>100.0</td>

|

| 109 |

+

<td>-</td>

|

| 110 |

+

<td>77.9</td>

|

| 111 |

+

<td>54.0</td>

|

| 112 |

+

<td>-</td>

|

| 113 |

+

<td>-</td>

|

| 114 |

+

<td>-</td>

|

| 115 |

+

</tr>

|

| 116 |

+

<tr>

|

| 117 |

+

<td>Kosmos-1</td>

|

| 118 |

+

<td>-</td>

|

| 119 |

+

<td>67.1</td>

|

| 120 |

+

<td>51.0</td>

|

| 121 |

+

<td>-</td>

|

| 122 |

+

<td>-</td>

|

| 123 |

+

<td>-</td>

|

| 124 |

+

<td>29.2</td>

|

| 125 |

+

</tr>

|

| 126 |

+

<tr>

|

| 127 |

+

<td>Kosmos-2</td>

|

| 128 |

+

<td>-</td>

|

| 129 |

+

<td>66.7</td>

|

| 130 |

+

<td>45.6</td>

|

| 131 |

+

<td>-</td>

|

| 132 |

+

<td>-</td>

|

| 133 |

+

<td>-</td>

|

| 134 |

+

<td>-</td>

|

| 135 |

+

</tr>

|

| 136 |

+

<tr>

|

| 137 |

+

<td>BLIP-2 (Vicuna-13B)</td>

|

| 138 |

+

<td>103.9</td>

|

| 139 |

+

<td>71.6</td>

|

| 140 |

+

<td>65.0</td>

|

| 141 |

+

<td>45.9</td>

|

| 142 |

+

<td>32.3</td>

|

| 143 |

+

<td>61.0</td>

|

| 144 |

+

<td>19.6</td>

|

| 145 |

+

</tr>

|

| 146 |

+

<tr>

|

| 147 |

+

<td>InstructBLIP (Vicuna-13B)</td>

|

| 148 |

+

<td><strong>121.9</strong></td>

|

| 149 |

+

<td>82.8</td>

|

| 150 |

+

<td>-</td>

|

| 151 |

+

<td>-</td>

|

| 152 |

+

<td>49.5</td>

|

| 153 |

+

<td>63.1</td>

|

| 154 |

+

<td>33.4</td>

|

| 155 |

+

</tr>

|

| 156 |

+

<tr>

|

| 157 |

+

<td>Shikra (Vicuna-13B)</td>

|

| 158 |

+

<td>-</td>

|

| 159 |

+

<td>73.9</td>

|

| 160 |

+

<td>77.36</td>

|

| 161 |

+

<td>47.16</td>

|

| 162 |

+

<td>-</td>

|

| 163 |

+

<td>-</td>

|

| 164 |

+

<td>-</td>

|

| 165 |

+

</tr>

|

| 166 |

+

<tr>

|

| 167 |

+

<td><strong>Qwen-VL (Qwen-7B)</strong></td>

|

| 168 |

+

<td>121.4</td>

|

| 169 |

+

<td><b>85.8</b></td>

|

| 170 |

+

<td><b>78.8</b></td>

|

| 171 |

+

<td><b>58.6</b></td>

|

| 172 |

+

<td><b>59.3</b></td>

|

| 173 |

+

<td>67.1</td>

|

| 174 |

+

<td>35.2</td>

|

| 175 |

+

</tr>

|

| 176 |

+

<!-- <tr>

|

| 177 |

+

<td>Qwen-VL (4-shot)</td>

|

| 178 |

+

<td>-</td>

|

| 179 |

+

<td>-</td>

|

| 180 |

+

<td>-</td>

|

| 181 |

+

<td>63.6</td>

|

| 182 |

+

<td>-</td>

|

| 183 |

+

<td>-</td>

|

| 184 |

+

<td>39.1</td>

|

| 185 |

+

</tr> -->

|

| 186 |

+

<tr>

|

| 187 |

+

<td>Qwen-VL-Chat</td>

|

| 188 |

+

<td>120.2</td>

|

| 189 |

+

<td>81.0</td>

|

| 190 |

+

<td>78.2</td>

|

| 191 |

+

<td>56.6</td>

|

| 192 |

+

<td>57.5</td>

|

| 193 |

+

<td><b>68.2</b></td>

|

| 194 |

+

<td><b>38.9</b></td>

|

| 195 |

+

</tr>

|

| 196 |

+

<!-- <tr>

|

| 197 |

+

<td>Qwen-VL-Chat (4-shot)</td>

|

| 198 |

+

<td>-</td>

|

| 199 |

+

<td>-</td>

|

| 200 |

+

<td>-</td>

|

| 201 |

+

<td>60.6</td>

|

| 202 |

+

<td>-</td>

|

| 203 |

+

<td>-</td>

|

| 204 |

+

<td>44.45</td>

|

| 205 |

+

</tr> -->

|

| 206 |

+

<tr>

|

| 207 |

+

<td>Previous SOTA<br>(Per Task Fine-tuning)</td>

|

| 208 |

+

<td>-</td>

|

| 209 |

+

<td>127.0<br>(PALI-17B)</td>

|

| 210 |

+

<td>84.5<br>(InstructBLIP<br>-FlanT5-XL)</td>

|

| 211 |

+

<td>86.1<br>(PALI-X<br>-55B)</td>

|

| 212 |

+

<td>66.1<br>(PALI-X<br>-55B)</td>

|

| 213 |

+

<td>72.1<br>(CFR)</td>

|

| 214 |

+

<td>92.53<br>(LLaVa+<br>GPT-4)</td>

|

| 215 |

+

<td>70.9<br>(PALI-X<br>-55B)</td>

|

| 216 |

+

</tr>

|

| 217 |

+

</tbody>

|

| 218 |

+

</table>

|

| 219 |

+

|

| 220 |

+

- For zero-shot image captioning, Qwen-VL achieves the **SOTA** on Flickr30K and competitive results on Nocaps with InstructBlip.

|

| 221 |

+

- For general VQA, Qwen-VL achieves the **SOTA** under the same generalist LVLM scale settings.

|

| 222 |

+

|

| 223 |

+

### Text-oriented VQA (focuse on text understanding capabilities in images)

|

| 224 |