Shri Jayaram

committed on

Commit

·

fdad24e

1

Parent(s):

c7d31dd

scale detection method

- FlowChart.png +0 -0

- app.py +398 -0

- requirements.txt +10 -0

FlowChart.png

ADDED

|

app.py

ADDED

|

@@ -0,0 +1,398 @@


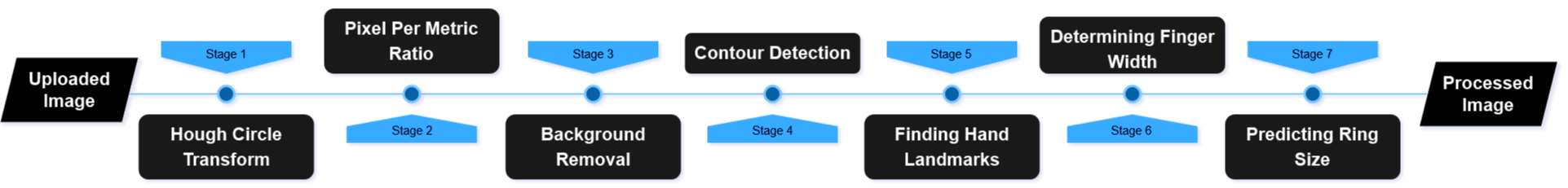


| 1 |

+

import streamlit as st

|

| 2 |

+

from PIL import Image, ImageDraw, ImageFont

|

| 3 |

+

import io

|

| 4 |

+

from io import BytesIO

|

| 5 |

+

import os

|

| 6 |

+

import cv2

|

| 7 |

+

import numpy as np

|

| 8 |

+

import matplotlib.pyplot as plt

|

| 9 |

+

from rembg import remove

|

| 10 |

+

import mediapipe as mp

|

| 11 |

+

import torch

|

| 12 |

+

from transformers import AutoProcessor, AutoModelForCausalLM

|

| 13 |

+

from transformers.dynamic_module_utils import get_imports

|

| 14 |

+

from unittest.mock import patch

|

| 15 |

+

from scipy.spatial import distance as dist

|

| 16 |

+

|

| 17 |

+

st.set_page_config(layout="wide", page_title="Ring Size Measurement")

|

| 18 |

+

ring_size_dict = {

|

| 19 |

+

14.0: 3,

|

| 20 |

+

14.4: 3.5,

|

| 21 |

+

14.8: 4,

|

| 22 |

+

15.2: 4.5,

|

| 23 |

+

15.6: 5,

|

| 24 |

+

16.0: 5.5,

|

| 25 |

+

16.45: 6,

|

| 26 |

+

16.9: 6.5,

|

| 27 |

+

17.3: 7,

|

| 28 |

+

17.7: 7.5,

|

| 29 |

+

18.2: 8,

|

| 30 |

+

18.6: 8.5,

|

| 31 |

+

19.0: 9,

|

| 32 |

+

19.4: 9.5,

|

| 33 |

+

19.8: 10,

|

| 34 |

+

20.2: 10.5,

|

| 35 |

+

20.6: 11,

|

| 36 |

+

21.0: 11.5,

|

| 37 |

+

21.4: 12,

|

| 38 |

+

21.8: 12.5,

|

| 39 |

+

22.2: 13,

|

| 40 |

+

22.6: 13.5

|

| 41 |

+

}

|

| 42 |

+

|

| 43 |

+

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

|

| 44 |

+

|

| 45 |

+

def fixed_get_imports(filename: str | os.PathLike) -> list[str]:

|

| 46 |

+

if not str(filename).endswith("modeling_florence2.py"):

|

| 47 |

+

return get_imports(filename)

|

| 48 |

+

imports = get_imports(filename)

|

| 49 |

+

imports.remove("flash_attn")

|

| 50 |

+

return imports

|

| 51 |

+

|

| 52 |

+

def load_model():

|

| 53 |

+

model_id = "microsoft/Florence-2-base-ft"

|

| 54 |

+

processor = AutoProcessor.from_pretrained(model_id, torch_dtype=torch.qint8, trust_remote_code=True)

|

| 55 |

+

|

| 56 |

+

try:

|

| 57 |

+

os.mkdir("temp")

|

| 58 |

+

except FileExistsError:

|

| 59 |

+

pass

|

| 60 |

+

|

| 61 |

+

with patch("transformers.dynamic_module_utils.get_imports", fixed_get_imports):

|

| 62 |

+

model = AutoModelForCausalLM.from_pretrained(model_id, attn_implementation="sdpa", trust_remote_code=True)

|

| 63 |

+

|

| 64 |

+

Qmodel = torch.quantization.quantize_dynamic(model, {torch.nn.Linear}, dtype=torch.qint8)

|

| 65 |

+

return Qmodel.to(device), processor

|

| 66 |

+

|

| 67 |

+

if 'model_loaded' not in st.session_state:

|

| 68 |

+

st.session_state.model_loaded = False

|

| 69 |

+

|

| 70 |

+

if not st.session_state.model_loaded:

|

| 71 |

+

with st.spinner('Loading model...'):

|

| 72 |

+

st.session_state.model, st.session_state.processor = load_model()

|

| 73 |

+

st.session_state.model_loaded = True

|

| 74 |

+

st.write("Model loading complete")

|

| 75 |

+

|

| 76 |

+

def calculate_pixel_per_metric(image, known_diameter_of_coin=25):

|

| 77 |

+

def generate_labels(model, processor, task_prompt, image, text_input=None):

|

| 78 |

+

if text_input is None:

|

| 79 |

+

prompt = task_prompt

|

| 80 |

+

else:

|

| 81 |

+

prompt = task_prompt + " " + text_input

|

| 82 |

+

|

| 83 |

+

inputs = processor(text=prompt, images=image, return_tensors="pt").to(device)

|

| 84 |

+

|

| 85 |

+

generated_ids = model.generate(

|

| 86 |

+

input_ids=inputs["input_ids"],

|

| 87 |

+

pixel_values=inputs["pixel_values"],

|

| 88 |

+

max_new_tokens=1024,

|

| 89 |

+

early_stopping=False,

|

| 90 |

+

do_sample=False,

|

| 91 |

+

num_beams=3,

|

| 92 |

+

)

|

| 93 |

+

|

| 94 |

+

generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]

|

| 95 |

+

|

| 96 |

+

output = processor.post_process_generation(

|

| 97 |

+

generated_text,

|

| 98 |

+

task=task_prompt,

|

| 99 |

+

image_size=(image.width, image.height)

|

| 100 |

+

)

|

| 101 |

+

|

| 102 |

+

return output

|

| 103 |

+

|

| 104 |

+

def plot_bbox(original_image, data):

|

| 105 |

+

# Create a copy of the original image to draw on

|

| 106 |

+

image_with_bboxes = original_image.copy()

|

| 107 |

+

|

| 108 |

+

# Use Pillow to draw bounding boxes and labels

|

| 109 |

+

draw = ImageDraw.Draw(image_with_bboxes)

|

| 110 |

+

def calculate_bbox_dimensions(bbox):

|

| 111 |

+

x1, y1, x2, y2 = bbox

|

| 112 |

+

width = x2 - x1

|

| 113 |

+

height = y2 - y1

|

| 114 |

+

return width, height

|

| 115 |

+

|

| 116 |

+

# Draw each bounding box with its label, its pixel dimensions, and the pixels-per-mm ratio

|

| 117 |

+

for bbox, label in zip(data['bboxes'], data['labels']):

|

| 118 |

+

x1, y1, x2, y2 = bbox

|

| 119 |

+

draw.rectangle([x1, y1, x2, y2], outline="red", width=2)

|

| 120 |

+

draw.text((x1, y1), label, fill="red", font=ImageFont.truetype("arial.ttf", 28))

|

| 121 |

+

|

| 122 |

+

# Calculate dimensions

|

| 123 |

+

width, height = calculate_bbox_dimensions(bbox)

|

| 124 |

+

print(f"Label: {label}, Width: {width}, Height: {height}")

|

| 125 |

+

dimension_text = f"W: {width}, H: {height}"

|

| 126 |

+

draw.text((x1, y1 + 20), dimension_text, fill="red", font=ImageFont.truetype("arial.ttf", 28))

|

| 127 |

+

|

| 128 |

+

real_world_dimension_mm = 160

|

| 129 |

+

largest_dimension = max(width, height)

|

| 130 |

+

pixels_per_mm = largest_dimension / real_world_dimension_mm

|

| 131 |

+

ratio_text = f"Pixels/mm: {pixels_per_mm:.2f}"

|

| 132 |

+

draw.text((x1, y1 + 40), ratio_text, fill="red", font=ImageFont.truetype("arial.ttf", 28))

|

| 133 |

+

|

| 134 |

+

# buf = BytesIO()

|

| 135 |

+

# image_with_bboxes.save(buf, format='PNG')

|

| 136 |

+

# buf.seek(0)

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

return image_with_bboxes, pixels_per_mm, 1 / pixels_per_mm  # second value: mm per pixel (reciprocal)

|

| 140 |

+

|

| 141 |

+

def detecting_ruler(model, processor, image, task_prompt, text_input=None):

|

| 142 |

+

results = generate_labels(model, processor, task_prompt, image, text_input=text_input)

|

| 143 |

+

image_with_bboxes, value_1, value_2 = plot_bbox(image, results['<CAPTION_TO_PHRASE_GROUNDING>'])

|

| 144 |

+

return value_1, value_2, image_with_bboxes

|

| 145 |

+

|

| 146 |

+

image_for_model = image.copy()

|

| 147 |

+

|

| 148 |

+

image_for_model = cv2.cvtColor(image_for_model, cv2.COLOR_BGR2RGB)

|

| 149 |

+

image_for_model = Image.fromarray(image_for_model)

|

| 150 |

+

# if image_for_model.mode != 'RGB':

|

| 151 |

+

# image_for_model = image_for_model.convert('RGB')

|

| 152 |

+

|

| 153 |

+

# Process the image

|

| 154 |

+

text_input = "ruler"

|

| 155 |

+

task_prompt = "<CAPTION_TO_PHRASE_GROUNDING>"

|

| 156 |

+

pixel_per_metric, mm_per_pixel, marked_image_buf = detecting_ruler(st.session_state.model, st.session_state.processor, image_for_model, task_prompt, text_input)

|

| 157 |

+

|

| 158 |

+

return pixel_per_metric, mm_per_pixel, marked_image_buf

|

| 159 |

+

|

| 160 |

+

def process_image(image):

|

| 161 |

+

return remove(image)

|

| 162 |

+

|

| 163 |

+

def calculate_pip_width(image, original_img, pixel_per_metric):

|

| 164 |

+

def calSize(xA, yA, xB, yB, color_circle, color_line, img):

|

| 165 |

+

d = dist.euclidean((xA, yA), (xB, yB))

|

| 166 |

+

cv2.circle(img, (int(xA), int(yA)), 5, color_circle, -1)

|

| 167 |

+

cv2.circle(img, (int(xB), int(yB)), 5, color_circle, -1)

|

| 168 |

+

cv2.line(img, (int(xA), int(yA)), (int(xB), int(yB)), color_line, 2)

|

| 169 |

+

d_mm = d / pixel_per_metric

|

| 170 |

+

d_mm = d_mm - 1.5

|

| 171 |

+

cv2.putText(img, "{:.1f}".format(d_mm), (int(xA - 15), int(yA - 10)), cv2.FONT_HERSHEY_SIMPLEX, 0.65, (255, 255, 255), 2)

|

| 172 |

+

print(d_mm)

|

| 173 |

+

return d_mm

|

| 174 |

+

|

| 175 |

+

def process_point(point, cnt, m1, b):

|

| 176 |

+

x1, x2 = point[0], point[0]

|

| 177 |

+

y1 = m1 * x1 + b

|

| 178 |

+

y2 = m1 * x2 + b

|

| 179 |

+

|

| 180 |

+

result = 1.0

|

| 181 |

+

while result > 0:

|

| 182 |

+

result = cv2.pointPolygonTest(cnt, (x1, y1), False)

|

| 183 |

+

x1 += 1

|

| 184 |

+

y1 = m1 * x1 + b

|

| 185 |

+

x1 -= 1

|

| 186 |

+

|

| 187 |

+

result = 1.0

|

| 188 |

+

while result > 0:

|

| 189 |

+

result = cv2.pointPolygonTest(cnt, (x2, y2), False)

|

| 190 |

+

x2 -= 1

|

| 191 |

+

y2 = m1 * x2 + b

|

| 192 |

+

x2 += 1

|

| 193 |

+

|

| 194 |

+

return x1, y1, x2, y2

|

| 195 |

+

|

| 196 |

+

og_img = original_img.copy()

|

| 197 |

+

imgH, imgW, _ = image.shape

|

| 198 |

+

imgcpy = image.copy()

|

| 199 |

+

image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

|

| 200 |

+

_, binary_image = cv2.threshold(image_gray, 1, 255, cv2.THRESH_BINARY)

|

| 201 |

+

contours, _ = cv2.findContours(binary_image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

|

| 202 |

+

contour_image = np.zeros_like(image_gray)

|

| 203 |

+

cv2.drawContours(contour_image, contours, -1, (255), thickness=cv2.FILLED)

|

| 204 |

+

cv2.drawContours(imgcpy, contours, -1, (0, 255, 0), 2)

|

| 205 |

+

# print("length : ",len(contours))

|

| 206 |

+

|

| 207 |

+

marked_img = image.copy()

|

| 208 |

+

|

| 209 |

+

if len(contours) > 0:

|

| 210 |

+

cnt = max(contours, key=cv2.contourArea)

|

| 211 |

+

frame2 = cv2.cvtColor(og_img, cv2.COLOR_BGR2RGB)

|

| 212 |

+

handsLM = mp.solutions.hands.Hands(max_num_hands=1, min_detection_confidence=0.8, min_tracking_confidence=0.8)

|

| 213 |

+

pr = handsLM.process(frame2)

|

| 214 |

+

print(pr.multi_hand_landmarks)

|

| 215 |

+

if pr.multi_hand_landmarks:

|

| 216 |

+

for hand_landmarks in pr.multi_hand_landmarks:

|

| 217 |

+

lmlist = []

|

| 218 |

+

for id, landMark in enumerate(hand_landmarks.landmark):

|

| 219 |

+

xPos, yPos = int(landMark.x * imgW), int(landMark.y * imgH)

|

| 220 |

+

lmlist.append([id, xPos, yPos])

|

| 221 |

+

|

| 222 |

+

if len(lmlist) != 0:

|

| 223 |

+

pip_joint = [lmlist[14][1], lmlist[14][2]]

|

| 224 |

+

mcp_joint = [lmlist[13][1], lmlist[13][2]]

|

| 225 |

+

|

| 226 |

+

midpoint_x = (pip_joint[0] + mcp_joint[0]) / 2

|

| 227 |

+

midpoint_y = (pip_joint[1] + mcp_joint[1]) / 2

|

| 228 |

+

midpoint = [midpoint_x, midpoint_y]

|

| 229 |

+

|

| 230 |

+

m2 = (pip_joint[1] - mcp_joint[1]) / (pip_joint[0] - mcp_joint[0])

|

| 231 |

+

m1 = -1 / m2

|

| 232 |

+

b = pip_joint[1] - m1 * pip_joint[0]

|

| 233 |

+

|

| 234 |

+

#pip_joint

|

| 235 |

+

x1_pip, y1_pip, x2_pip, y2_pip = process_point(pip_joint, cnt, m1, b)

|

| 236 |

+

|

| 237 |

+

m2 = (midpoint_y - mcp_joint[1]) / (midpoint_x - mcp_joint[0])

|

| 238 |

+

m1 = -1 / m2

|

| 239 |

+

b = midpoint_y - m1 * midpoint_x

|

| 240 |

+

|

| 241 |

+

#midpoint

|

| 242 |

+

x1_mid, y1_mid, x2_mid, y2_mid = process_point(midpoint, cnt, m1, b)

|

| 243 |

+

|

| 244 |

+

d_mm_pip = calSize(x1_pip, y1_pip, x2_pip, y2_pip, (255, 0, 0), (255, 0, 255), original_img)

|

| 245 |

+

d_mm_mid = calSize(x1_mid, y1_mid, x2_mid, y2_mid, (0, 255, 0), (0, 0, 255), original_img)

|

| 246 |

+

|

| 247 |

+

largest_d_mm = max(int(d_mm_mid),int(d_mm_pip))

|

| 248 |

+

return original_img, largest_d_mm, imgcpy, marked_img

|

| 249 |

+

|

| 250 |

+

def mark_hand_landmarks(image_path):

|

| 251 |

+

|

| 252 |

+

mp_hands = mp.solutions.hands

|

| 253 |

+

hands = mp_hands.Hands()

|

| 254 |

+

mp_draw = mp.solutions.drawing_utils

|

| 255 |

+

|

| 256 |

+

img = image_path

|

| 257 |

+

img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

|

| 258 |

+

|

| 259 |

+

results = hands.process(img_rgb)

|

| 260 |

+

|

| 261 |

+

if results.multi_hand_landmarks:

|

| 262 |

+

for hand_landmarks in results.multi_hand_landmarks:

|

| 263 |

+

mp_draw.draw_landmarks(img, hand_landmarks, mp_hands.HAND_CONNECTIONS)

|

| 264 |

+

|

| 265 |

+

mcp = hand_landmarks.landmark[13]

|

| 266 |

+

pip = hand_landmarks.landmark[14]

|

| 267 |

+

|

| 268 |

+

img_height, img_width, _ = img.shape

|

| 269 |

+

|

| 270 |

+

mcp_x, mcp_y = int(mcp.x * img_width), int(mcp.y * img_height)

|

| 271 |

+

pip_x, pip_y = int(pip.x * img_width), int(pip.y * img_height)

|

| 272 |

+

|

| 273 |

+

cv2.circle(img, (mcp_x, mcp_y), 10, (255, 0, 0), -1)

|

| 274 |

+

cv2.circle(img, (pip_x, pip_y), 10, (255, 0, 0), -1)

|

| 275 |

+

|

| 276 |

+

return img

|

| 277 |

+

|

| 278 |

+

def show_resized_image(images, titles, scale=0.5):

|

| 279 |

+

num_images = len(images)

|

| 280 |

+

|

| 281 |

+

fig, axes = plt.subplots(2, 3, figsize=(17, 13))

|

| 282 |

+

axes = axes.flatten()

|

| 283 |

+

|

| 284 |

+

for ax in axes[num_images:]:

|

| 285 |

+

ax.axis('off')

|

| 286 |

+

i = 0

|

| 287 |

+

for ax, img, title in zip(axes, images, titles):

|

| 288 |

+

i = i + 1

|

| 289 |

+

print(i)

|

| 290 |

+

resized_image = cv2.resize(img, None, fx=scale, fy=scale, interpolation=cv2.INTER_LINEAR)

|

| 291 |

+

ax.imshow(cv2.cvtColor(resized_image, cv2.COLOR_BGR2RGB))

|

| 292 |

+

ax.set_title(title)

|

| 293 |

+

ax.axis('off')

|

| 294 |

+

|

| 295 |

+

plt.tight_layout()

|

| 296 |

+

img_stream = BytesIO()

|

| 297 |

+

plt.savefig(img_stream, format='png')

|

| 298 |

+

img_stream.seek(0)

|

| 299 |

+

plt.close(fig)

|

| 300 |

+

return img_stream

|

| 301 |

+

|

| 302 |

+

def get_ring_size(mm_value):

|

| 303 |

+

if mm_value in ring_size_dict:

|

| 304 |

+

return ring_size_dict[mm_value]

|

| 305 |

+

else:

|

| 306 |

+

closest_mm = min(ring_size_dict.keys(), key=lambda x: abs(x - mm_value))

|

| 307 |

+

return ring_size_dict[closest_mm]

|

| 308 |

+

|

| 309 |

+

# st.set_page_config(layout="wide", page_title="Ring Size Measurement")

|

| 310 |

+

st.write("## Determine Your Ring Size")

|

| 311 |

+

st.write(

|

| 312 |

+

"📏 Upload an image of your hand to measure the finger width and determine your ring size. The measurement will be displayed along with a visual breakdown of the image processing flow."

|

| 313 |

+

)

|

| 314 |

+

st.sidebar.write("## Upload :gear:")

|

| 315 |

+

#~~

|

| 316 |

+

st.write("### Workflow Overview")

|

| 317 |

+

st.image("FlowChart.png", caption="Workflow Overview", use_column_width=True)

|

| 318 |

+

|

| 319 |

+

st.write("### Detailed Workflow")

|

| 320 |

+

st.write("1. **Hough Circle Transform:** The Hough Circle Transform is a technique used to detect circles in an image. It works by transforming the image into a parameter space, identifying circles based on their radius and center coordinates. This method is effective for locating circular objects, such as a coin, within the image.")

|

| 321 |

+

st.write("2. **Pixel Per Metric Ratio:** The Pixel Per Metric Ratio is used to convert pixel measurements into real-world units. By comparing the pixel length obtained from image analysis (i.e., Hough Circle) with the known real-world measurement of the reference object (coin), we get the ratio. This ratio then lets us scale pixel measurements and estimate the real-world size of objects within the image.")

|

| 322 |

+

st.write("3. **Background Removal:** Removing the background first ensures that only the relevant subject is highlighted. We start by converting the image to grayscale and applying thresholding to distinguish the subject from the background. Erosion and dilation then clean up the image, improving the detection of specific features like individual fingers.")

|

| 323 |

+

st.write("4. **Contour Detection:** We use Contour Detection to find the largest contour, which allows us to outline or draw a boundary around the subject (i.e., hand). This highlights the hand's shape and edges, which makes the later width measurement more precise.")

|

| 324 |

+

st.write("5. **Finding Hand Landmarks:** This involves using the MediaPipe library to identify key points on the hand, such as the PIP (Proximal Interphalangeal) and MCP (Metacarpophalangeal) joints of the ring finger. This enables precise tracking and analysis of finger positions and movements.")

|

| 325 |

+

st.write("6. **Determining Finger Width:** Here we use the slope-intercept form `y = mx + b` with the PIP and MCP points to measure the finger's width. We take the line perpendicular to the PIP-MCP segment, step outward along it in both directions, and apply a point-polygon test against the hand contour to find where the line leaves the finger, which gives the pixel width.")

|

| 326 |

+

st.write("7. **Predicting Ring Size:** Predicting Ring Size involves converting the finger’s pixel width to millimetres with the Pixel Per Metric Ratio, taking the larger of the widths measured at the PIP joint and at the midpoint between the PIP and MCP joints. This diameter is then matched to the closest entry in the ring size table.")

|

| 327 |

+

#~~

|

| 328 |

+

|

| 329 |

+

MAX_FILE_SIZE = 5 * 1024 * 1024 # 5MB

|

| 330 |

+

|

| 331 |

+

def process_image_and_get_results(upload):

|

| 332 |

+

image = Image.open(upload)

|

| 333 |

+

# image = cv2.imread(upload)

|

| 334 |

+

image_np = np.array(image)

|

| 335 |

+

image_np = cv2.cvtColor(image_np, cv2.COLOR_RGB2BGR)

|

| 336 |

+

original_img = image_np.copy()

|

| 337 |

+

og_img1 = image_np.copy()

|

| 338 |

+

og_img2 = image_np.copy()

|

| 339 |

+

img_1 = image_np.copy()

|

| 340 |

+

hand_lms = mark_hand_landmarks(img_1)

|

| 341 |

+

|

| 342 |

+

pixel_per_metric, mm_per_pixel, image_with_coin_info = calculate_pixel_per_metric(image_np)

|

| 343 |

+

processed_image = process_image(og_img1)

|

| 344 |

+

image_with_pip_width, width_mm, contour_image, pip_mark_img = calculate_pip_width(processed_image, original_img, pixel_per_metric)

|

| 345 |

+

image_with_coin_info = np.array(image_with_coin_info)

|

| 346 |

+

if image_with_coin_info is None:

|

| 347 |

+

print("inside1")

|

| 348 |

+

raise ValueError("Image is None, cannot resize.")

|

| 349 |

+

|

| 350 |

+

elif not isinstance(image_with_coin_info, (np.ndarray, cv2.UMat)):

|

| 351 |

+

print("inside2")

|

| 352 |

+

raise TypeError(f"Invalid image type: {type(image_with_coin_info)}. Expected numpy array or cv2.UMat.")

|

| 353 |

+

ring_size = get_ring_size(width_mm)

|

| 354 |

+

return {

|

| 355 |

+

"processed_image": image_with_pip_width,

|

| 356 |

+

"original_image": og_img2,

|

| 357 |

+

"hand_lm_marked_image": hand_lms,

|

| 358 |

+

"image_with_coin_info": image_with_coin_info,

|

| 359 |

+

"contour_image": contour_image,

|

| 360 |

+

"width_mm": width_mm,

|

| 361 |

+

"ring_size": ring_size

|

| 362 |

+

}

|

| 363 |

+

|

| 364 |

+

def show_how_it_works(processed_image):

|

| 365 |

+

st.write("## How It Works")

|

| 366 |

+

st.write("Here's a step-by-step breakdown of how your image is processed to determine your ring size:")

|

| 367 |

+

st.image(processed_image, caption="Image Processing Flow", use_column_width=True)

|

| 368 |

+

|

| 369 |

+

col1, col2 = st.columns(2)

|

| 370 |

+

my_upload = st.sidebar.file_uploader("Upload an image", type=["png", "jpg", "jpeg"])

|

| 371 |

+

|

| 372 |

+

if my_upload is not None:

|

| 373 |

+

if my_upload.size > MAX_FILE_SIZE:

|

| 374 |

+

st.error("The uploaded file is too large. Please upload an image smaller than 5MB.")

|

| 375 |

+

else:

|

| 376 |

+

st.write("## Image Processing Flow")

|

| 377 |

+

results = process_image_and_get_results(my_upload)

|

| 378 |

+

|

| 379 |

+

col1.write("Uploaded Image :camera:")

|

| 380 |

+

col1.image(cv2.cvtColor(results["original_image"], cv2.COLOR_BGR2RGB), caption="Uploaded Image")

|

| 381 |

+

|

| 382 |

+

col2.write("Processed Image :wrench:")

|

| 383 |

+

col2.image(cv2.cvtColor(results["processed_image"], cv2.COLOR_BGR2RGB), caption="Processed Image with PIP Width")

|

| 384 |

+

|

| 385 |

+

st.write(f"📏 The width of your finger is {results['width_mm']:.2f} mm, and the estimated ring size is {results['ring_size']:.1f}.")

|

| 386 |

+

|

| 387 |

+

if st.button("How it Works"):

|

| 388 |

+

st.write("## How It Works")

|

| 389 |

+

st.write("Here's a step-by-step breakdown of how your image is processed to determine your ring size:")

|

| 390 |

+

print("here")

|

| 391 |

+

img_stream = show_resized_image(

|

| 392 |

+

[results["original_image"], results["image_with_coin_info"], results["contour_image"], results["hand_lm_marked_image"], results["processed_image"]],

|

| 393 |

+

['Original Image', 'Image with Scale Info', 'Contour Boundary Image', 'Hand Landmarks', 'Ring Finger Width'],

|

| 394 |

+

scale=0.5

|

| 395 |

+

)

|

| 396 |

+

st.image(img_stream, caption="Processing Flow", use_column_width=True)

|

| 397 |

+

else:

|

| 398 |

+

st.info("Please upload an image to get started.")

|
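The scale and lookup steps in `app.py` can be restated in isolation. The block below is a minimal sketch, not the committed code: the function names `pixels_per_mm` and `nearest_ring_size` are hypothetical, the 160 mm reference length mirrors the `real_world_dimension_mm` constant hard-coded in `plot_bbox`, and the table is only a subset of `ring_size_dict`.

```python
# Hypothetical, simplified restatement of the scale and lookup steps in app.py.
RULER_LENGTH_MM = 160  # assumed real-world length of the detected ruler, as hard-coded in app.py

# Subset of the diameter (mm) -> ring size table from app.py.
RING_SIZES = {15.6: 5, 16.0: 5.5, 16.45: 6, 16.9: 6.5, 17.3: 7, 17.7: 7.5, 18.2: 8}


def pixels_per_mm(bbox, reference_mm=RULER_LENGTH_MM):
    """Return pixels per millimetre from a reference object's bounding box."""
    x1, y1, x2, y2 = bbox
    longest_side_px = max(x2 - x1, y2 - y1)
    if longest_side_px <= 0:
        raise ValueError("bounding box has no extent")
    return longest_side_px / reference_mm


def nearest_ring_size(width_mm, table=RING_SIZES):
    """Return the ring size whose tabulated diameter is closest to width_mm."""
    closest = min(table, key=lambda diameter: abs(diameter - width_mm))
    return table[closest]


if __name__ == "__main__":
    scale = pixels_per_mm((100, 50, 900, 90))  # ruler bounding box spans 800 px
    finger_mm = 86.5 / scale                   # finger width measured as 86.5 px
    print(scale, finger_mm, nearest_ring_size(finger_mm))
```

Keeping the width as a float until the lookup, rather than truncating it with `int()` as `calculate_pip_width` does, avoids losing up to a millimetre before the nearest-diameter match; whether that matters in practice depends on how accurate the detected scale is.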

requirements.txt

ADDED

|

@@ -0,0 +1,10 @@


| 1 |

+

streamlit

|

| 2 |

+

pillow

|

| 3 |

+

opencv-python

|

| 4 |

+

numpy

|

| 5 |

+

matplotlib

|

| 6 |

+

rembg

|

| 7 |

+

mediapipe

|

| 8 |

+

torch

|

| 9 |

+

transformers

|

| 10 |

+

scipy

|
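One fragile spot in the `app.py` diff above is the finger-width step: it computes `m2` as a rise-over-run slope and then `m1 = -1 / m2`, so a perfectly vertical or horizontal ring finger raises `ZeroDivisionError`. The sketch below shows one possible alternative that walks along a perpendicular unit vector instead of a slope. It is an illustration under assumptions, not the author's method: `finger_width_px` and the `is_inside` predicate are hypothetical names, with `is_inside(x, y)` standing in for a contour test such as `cv2.pointPolygonTest(cnt, (x, y), False) > 0`.

```python
import math


def finger_width_px(center, joint_a, joint_b, is_inside, max_steps=2000):
    """Measure the width through `center`, perpendicular to the joint_a -> joint_b axis.

    `is_inside(x, y)` is a hypothetical predicate; in the app it would wrap a
    point-polygon test against the hand contour.
    Returns the distance in pixels between the last inside points on each side.
    """
    ax, ay = joint_a
    bx, by = joint_b
    length = math.hypot(bx - ax, by - ay)
    if length == 0:
        raise ValueError("joints coincide; finger direction is undefined")
    # Unit vector perpendicular to the finger axis (axis rotated by 90 degrees).
    px, py = -(by - ay) / length, (bx - ax) / length

    def steps_to_edge(sign):
        # Count 1-pixel steps along the perpendicular while still inside the shape.
        steps = 0
        while steps < max_steps:
            x = center[0] + sign * (steps + 1) * px
            y = center[1] + sign * (steps + 1) * py
            if not is_inside(x, y):
                break
            steps += 1
        return steps

    if not is_inside(*center):
        return 0.0
    return float(steps_to_edge(+1) + steps_to_edge(-1))


if __name__ == "__main__":
    # A vertical "finger" occupying 40 < x < 60: the slope-based version would divide by zero here.
    vertical_finger = lambda x, y: 40 < x < 60 and 0 < y < 200
    print(finger_width_px((50, 100), (50, 150), (50, 50), vertical_finger))
```

The `max_steps` cap is a design choice to guarantee termination if the predicate never reports an outside point; the committed loop has no such bound.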