Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .gitattributes +5 -0

- .gitignore +18 -0

- .gitlab-ci.yml +33 -0

- .gitmodules +0 -0

- .vscode/settings.json +2 -0

- Dockerfile +55 -0

- README.md +113 -7

- abstractClass.py +59 -0

- app.py +406 -0

- cropped_table.png +0 -0

- cropped_table_0.png +0 -0

- cropped_table_1.png +0 -0

- deepdoc/README.md +122 -0

- deepdoc/__init__.py +8 -0

- deepdoc/models/.gitattributes +35 -0

- deepdoc/models/README.md +3 -0

- deepdoc/models/det.onnx +3 -0

- deepdoc/models/layout.laws.onnx +3 -0

- deepdoc/models/layout.manual.onnx +3 -0

- deepdoc/models/layout.onnx +3 -0

- deepdoc/models/layout.paper.onnx +3 -0

- deepdoc/models/ocr.res +6623 -0

- deepdoc/models/rec.onnx +3 -0

- deepdoc/models/tsr.onnx +3 -0

- deepdoc/vision/__init__.py +3 -0

- deepdoc/vision/ocr.res +6623 -0

- deepdoc/vision/operators.py +711 -0

- deepdoc/vision/postprocess.py +353 -0

- deepdoc/vision/ragFlow.py +313 -0

- detectionAndOcrTable1.py +425 -0

- detectionAndOcrTable2.py +306 -0

- detectionAndOcrTable3.py +267 -0

- detectionAndOcrTable4.py +112 -0

- doctrfiles/__init__.py +4 -0

- doctrfiles/doctr_recognizer.py +183 -0

- doctrfiles/models/config-multi2.json +21 -0

- doctrfiles/models/db_mobilenet_v3_large-81e9b152.pt +3 -0

- doctrfiles/models/db_resnet34-cb6aed9e.pt +3 -0

- doctrfiles/models/db_resnet50-79bd7d70.pt +3 -0

- doctrfiles/models/db_resnet50_config.json +20 -0

- doctrfiles/models/doctr-multilingual-parseq.bin +3 -0

- doctrfiles/models/master-fde31e4a.pt +3 -0

- doctrfiles/models/master.json +21 -0

- doctrfiles/models/multi2.bin +3 -0

- doctrfiles/models/multilingual-parseq-config.json +21 -0

- doctrfiles/word_detector.py +282 -0

- image-1.png +0 -0

- image-2.png +0 -0

- image.png +0 -0

- june11.jpg +0 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,8 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

res0.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

table_drawn_bbox_with_extra.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

unitable/website/unitable-demo.gif filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

unitable/website/unitable-demo.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

unitable/website/wandb_screenshot.png filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,18 @@

|

| 1 |

+

|

| 2 |

+

unitable/experiments/unitable_weights/**

|

| 3 |

+

|

| 4 |

+

res/**

|

| 5 |

+

|

| 6 |

+

TestingFiles/**

|

| 7 |

+

TestingFilesImages/**

|

| 8 |

+

|

| 9 |

+

# python generated files

|

| 10 |

+

__pycache__/

|

| 11 |

+

*.py[oc]

|

| 12 |

+

build/

|

| 13 |

+

dist/

|

| 14 |

+

wheels/

|

| 15 |

+

*.egg-info

|

| 16 |

+

|

| 17 |

+

# venv

|

| 18 |

+

.venv

|

.gitlab-ci.yml

ADDED

|

@@ -0,0 +1,33 @@

|

| 1 |

+

variables:

|

| 2 |

+

GIT_STRATEGY: fetch

|

| 3 |

+

GIT_SSL_NO_VERIFY: "true"

|

| 4 |

+

GIT_LFS_SKIP_SMUDGE: 1

|

| 5 |

+

DOCKER_BUILDKIT: 1

|

| 6 |

+

|

| 7 |

+

stages:

|

| 8 |

+

- build

|

| 9 |

+

|

| 10 |

+

image_build:

|

| 11 |

+

stage: build

|

| 12 |

+

image: docker:stable

|

| 13 |

+

before_script:

|

| 14 |

+

- docker login -u gitlab-ci-token -p $CI_JOB_TOKEN http://$CI_REGISTRY

|

| 15 |

+

script: |

|

| 16 |

+

CI_COMMIT_SHA_7=$(echo $CI_COMMIT_SHA | cut -c1-7)

|

| 17 |

+

DATE=$(date +%Y-%m-%d)

|

| 18 |

+

docker build --tag $CI_REGISTRY_IMAGE/$CI_COMMIT_BRANCH:latest \

|

| 19 |

+

--tag $CI_REGISTRY_IMAGE/$CI_COMMIT_BRANCH:$CI_COMMIT_SHA_7 \

|

| 20 |

+

--tag $CI_REGISTRY_IMAGE/$CI_COMMIT_BRANCH:$DATE \

|

| 21 |

+

-f Dockerfile .

|

| 22 |

+

docker push $CI_REGISTRY_IMAGE/$CI_COMMIT_BRANCH:latest

|

| 23 |

+

docker push $CI_REGISTRY_IMAGE/$CI_COMMIT_BRANCH:$CI_COMMIT_SHA_7

|

| 24 |

+

docker push $CI_REGISTRY_IMAGE/$CI_COMMIT_BRANCH:$DATE

|

| 25 |

+

# Run only when Dockerfile has changed

|

| 26 |

+

rules:

|

| 27 |

+

- if: $CI_PIPELINE_SOURCE == "push"

|

| 28 |

+

changes:

|

| 29 |

+

- Dockerfile

|

| 30 |

+

# Set to `on_success` to automatically rebuild

|

| 31 |

+

# Set to `manual` to trigger the build manually using Gitlab UI

|

| 32 |

+

when: on_success

|

| 33 |

+

allow_failure: true

|

.gitmodules

ADDED

|

File without changes

|

.vscode/settings.json

ADDED

|

@@ -0,0 +1,2 @@

|

| 1 |

+

{

|

| 2 |

+

}

|

Dockerfile

ADDED

|

@@ -0,0 +1,55 @@

|

| 1 |

+

ARG BASE_IMAGE="nvidia/cuda:12.2.2-devel-ubuntu22.04"

|

| 2 |

+

|

| 3 |

+

FROM ${BASE_IMAGE}

|

| 4 |

+

ARG HOMEDIRECTORY="/myhome"

|

| 5 |

+

ENV HOMEDIRECTORY=$HOMEDIRECTORY

|

| 6 |

+

|

| 7 |

+

USER root

|

| 8 |

+

RUN apt-get update && \

|

| 9 |

+

apt-get install -y --no-install-recommends \

|

| 10 |

+

curl \

|

| 11 |

+

python3 \

|

| 12 |

+

python3-pip \

|

| 13 |

+

python3-dev \

|

| 14 |

+

poppler-utils \

|

| 15 |

+

gcc \

|

| 16 |

+

git \

|

| 17 |

+

git-lfs \

|

| 18 |

+

htop \

|

| 19 |

+

libgl1 \

|

| 20 |

+

libglib2.0-0 \

|

| 21 |

+

ncdu \

|

| 22 |

+

openssh-client \

|

| 23 |

+

openssh-server \

|

| 24 |

+

psmisc \

|

| 25 |

+

rsync \

|

| 26 |

+

screen \

|

| 27 |

+

sudo \

|

| 28 |

+

tmux \

|

| 29 |

+

unzip \

|

| 30 |

+

vim \

|

| 31 |

+

wget && \

|

| 32 |

+

wget -q https://github.com/justjanne/powerline-go/releases/download/v1.24/powerline-go-linux-"$(dpkg --print-architecture)" -O /usr/local/bin/powerline-shell && \

|

| 33 |

+

chmod a+x /usr/local/bin/powerline-shell

|

| 34 |

+

|

| 35 |

+

RUN ln -s /usr/bin/python3 /usr/bin/python

|

| 36 |

+

COPY requirements.txt .

|

| 37 |

+

RUN pip install --no-cache-dir -r requirements.txt --extra-index-url https://download.pytorch.org/whl/cu117

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

# setup ssh

|

| 41 |

+

RUN ssh-keygen -A

|

| 42 |

+

RUN sed -i 's/#*PermitRootLogin prohibit-password/PermitRootLogin yes/' /etc/ssh/sshd_config

|

| 43 |

+

EXPOSE 22

|

| 44 |

+

|

| 45 |

+

# Make the root user's home directory /myhome (the default for run.ai),

|

| 46 |

+

# and allow to login with password 'root'.

|

| 47 |

+

RUN echo 'root:root' | chpasswd

|

| 48 |

+

RUN sed -i 's|:root:/root:|:root:/myhome:|' /etc/passwd

|

| 49 |

+

|

| 50 |

+

ENTRYPOINT sudo service ssh start && /bin/bash

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

|

README.md

CHANGED

|

@@ -1,12 +1,118 @@

|

|

| 1 |

---

|

| 2 |

-

title:

|

| 3 |

-

|

| 4 |

-

colorFrom: purple

|

| 5 |

-

colorTo: green

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 4.44.0

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

| 11 |

|

| 12 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

| 1 |

---

|

| 2 |

+

title: alps

|

| 3 |

+

app_file: app.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

sdk_version: 4.44.0

|

|

|

|

|

|

|

| 6 |

---

|

| 7 |

+

# Alps

|

| 8 |

+

|

| 9 |

+

Pipeline for OCRing PDFs and tables

|

| 10 |

+

|

| 11 |

+

This repository contains different OCR methods using various libraries/models.

|

| 12 |

+

|

| 13 |

+

## Running Gradio

|

| 14 |

+

Run `python app.py` in a terminal.

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

## Installation

|

| 18 |

+

Build the Docker image and run the container.

|

| 19 |

+

|

| 20 |

+

Clone this repository and install the required dependencies:

|

| 21 |

+

```

|

| 22 |

+

pip install -r requirements.txt --extra-index-url https://download.pytorch.org/whl/cu117

|

| 23 |

+

|

| 24 |

+

apt install weasyprint

|

| 25 |

+

|

| 26 |

+

```

|

| 27 |

+

Note: You need a GPU to run this code.

|

| 28 |

+

|

| 29 |

+

## Example Usage

|

| 30 |

+

|

| 31 |

+

Run `python main.py` inside the repository directory. Provide the path to the test file (the file must be placed inside the repository, and the path must be relative to the repository root, `alps`). Next, provide the path to a folder for intermediate outputs from the run (cell bounding boxes drawn on the table, table detection results for the PDF), and specify which component to run.

|

| 32 |

+

|

| 33 |

+

Outputs are printed to the terminal.

|

| 34 |

+

|

| 35 |

+

```

|

| 36 |

+

usage: main.py [-h] [--test_file TEST_FILE] [--debug_folder DEBUG_FOLDER] [--englishFlag ENGLISHFLAG] [--denoise DENOISE] ocr

|

| 37 |

+

|

| 38 |

+

```
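The usage line above passes `--englishFlag` and `--denoise` as values (for example `--englishFlag True`). With `argparse`, declaring such an option with `type=bool` converts any non-empty string, including `False`, to `True`. The following is a minimal sketch of one way to parse these flags so that `False` really means false. The `str2bool` helper and `build_parser` function are hypothetical names introduced for illustration; they are not part of this repository.

```python
import argparse


def str2bool(value: str) -> bool:
    """Hypothetical helper: map common true/false spellings to a bool."""
    lowered = value.strip().lower()
    if lowered in {"true", "t", "yes", "y", "1"}:
        return True
    if lowered in {"false", "f", "no", "n", "0"}:
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser() -> argparse.ArgumentParser:
    """Build a parser that mirrors the usage line shown in the README."""
    parser = argparse.ArgumentParser(prog="main.py")
    parser.add_argument("ocr", type=str, help="id of the component to run")
    parser.add_argument("--test_file", type=str, help="path to the test file")
    parser.add_argument("--debug_folder", type=str, help="folder for intermediate outputs")
    parser.add_argument("--englishFlag", type=str2bool, default=False)
    parser.add_argument("--denoise", type=str2bool, default=False)
    return parser


# Parse an explicit argument list instead of sys.argv so the sketch runs anywhere.
args = build_parser().parse_args(
    ["ocr1", "--test_file", "TestingFiles/OCRTest3English.pdf", "--englishFlag", "False"]
)
print(args.ocr, args.englishFlag, args.denoise)  # prints: ocr1 False False
```

Keeping the flags value-based (rather than switching to `action="store_true"`) means the example commands in this README, which pass `--englishFlag True`, would keep working unchanged.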

|

| 39 |

+

Description of the components:

|

| 40 |

+

|

| 41 |

+

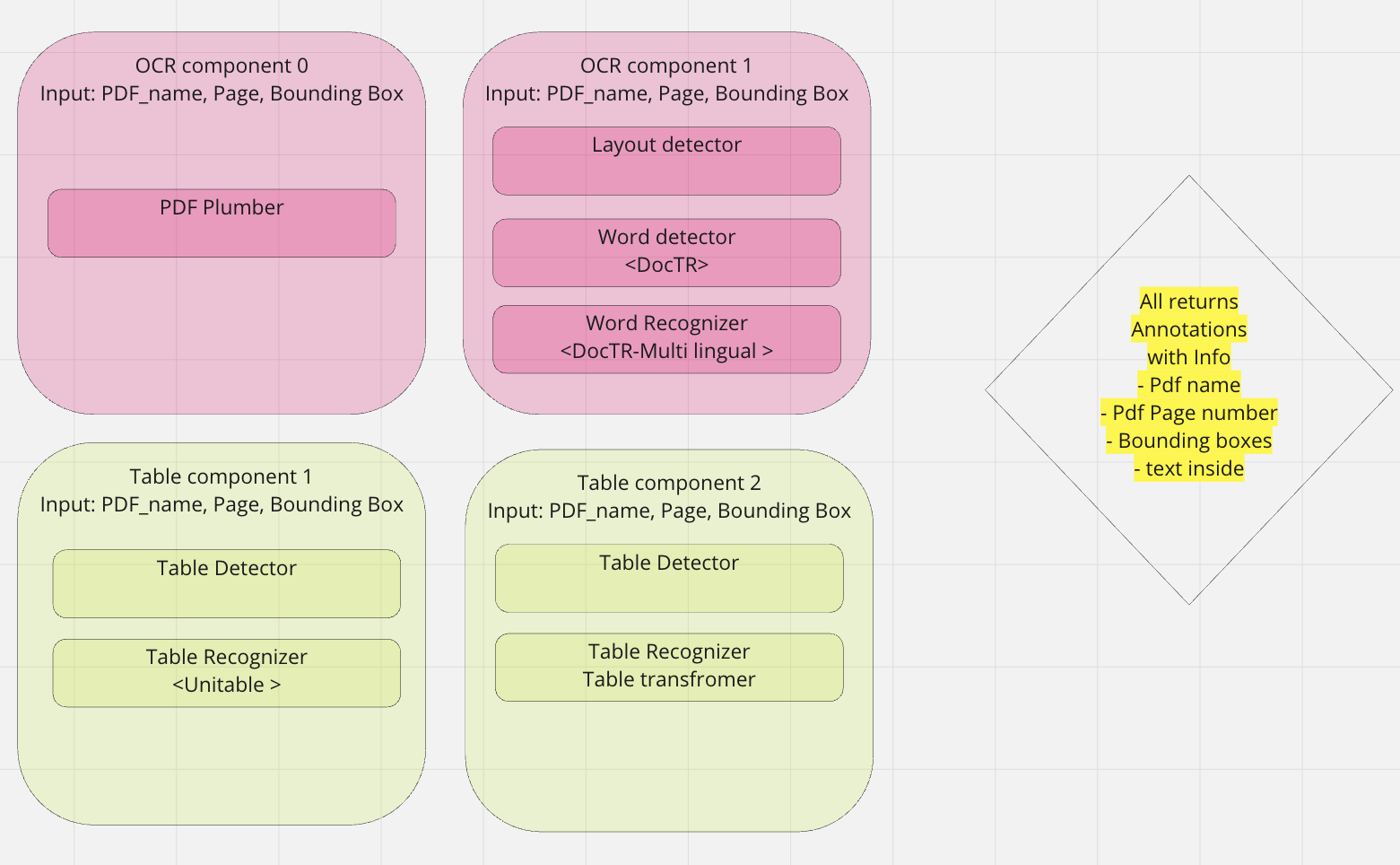

### ocr1

|

| 42 |

+

|

| 43 |

+

ocr1

|

| 44 |

+

Input: Path to a PDF file

|

| 45 |

+

Output: A dictionary mapping each page to its line annotations. Each line annotation contains the bounding box of the line and a list of its child word annotations. Each word annotation contains a bounding box and the recognized text.

|

| 46 |

+

What it does: Runs Ragflow textline detector + OCR with DocTR

|

| 47 |

+

|

| 48 |

+

Example:

|

| 49 |

+

```

|

| 50 |

+

python main.py ocr1 --test_file TestingFiles/OCRTest1German.pdf --debug_folder ./res/ocrdebug1/

|

| 51 |

+

python main.py ocr1 --test_file TestingFiles/OCRTest3English.pdf --debug_folder ./res/ocrdebug1/ --englishFlag True

|

| 52 |

+

```
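The nested output described for `ocr1` (pages, then lines, then words) can be pictured with a small sketch. The class names `LineAnnotation` and `WordAnnotation` and the fields `box`, `words`, and `text` follow the names used in this README and in `app.py`, but the exact class definitions here are assumptions made for illustration, not the repository's implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class WordAnnotation:
    box: List[float]  # assumed to be [xmin, ymin, xmax, ymax]
    text: str


@dataclass
class LineAnnotation:
    box: List[float]  # bounding box of the whole line
    words: List[WordAnnotation] = field(default_factory=list)


def page_text(lines: Dict[int, LineAnnotation]) -> str:
    """Join the words of each line with spaces, one output line per annotation."""
    return "\n".join(" ".join(w.text for w in line.words) for line in lines.values())


# Hand-written sample data: page number -> line index -> line annotation.
ocr_results: Dict[int, Dict[int, LineAnnotation]] = {
    0: {
        0: LineAnnotation(
            [10, 10, 200, 30],
            [WordAnnotation([10, 10, 90, 30], "Hello"),
             WordAnnotation([100, 10, 200, 30], "world")],
        ),
        1: LineAnnotation([10, 40, 120, 60], [WordAnnotation([10, 40, 120, 60], "again")]),
    }
}

print(page_text(ocr_results[0]))
```

Walking the structure this way mirrors how the page text is assembled from `ann.words` and `wordann.text` in `app.py`.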

|

| 53 |

+

|

| 54 |

+

### table1

|

| 55 |

+

Input: File path to an image of a cropped table

|

| 56 |

+

Output: Parsed table in HTML form

|

| 57 |

+

What it does: Uses Unitable + DocTR

|

| 58 |

+

|

| 59 |

+

```

|

| 60 |

+

python main.py table1 --test_file cropped_table.png --debug_folder ./res/table1/

|

| 61 |

+

|

| 62 |

+

```

|

| 63 |

+

|

| 64 |

+

### table2

|

| 65 |

+

Input: File path to an image of a cropped table

|

| 66 |

+

Output: Parsed table in HTML form

|

| 67 |

+

What it does: Uses Unitable

|

| 68 |

+

|

| 69 |

+

```

|

| 70 |

+

python main.py table2 --test_file cropped_table.png --debug_folder ./res/table2/

|

| 71 |

+

|

| 72 |

+

```

|

| 73 |

+

### pdftable1

|

| 74 |

+

Input: PDF file path

|

| 75 |

+

Output: Parsed table in HTML form

|

| 76 |

+

What it does: Uses Unitable + DocTR

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

```

|

| 80 |

+

python main.py pdftable1 --test_file TestingFiles/OCRTest5English.pdf --debug_folder ./res/table_debug1/

|

| 81 |

+

|

| 82 |

+

python main.py pdftable3 --test_file TestingFiles/TableOCRTestEnglish.pdf --debug_folder ./res/poor_relief2

|

| 83 |

+

```

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

### pdftable2

|

| 87 |

+

Input: PDF file path

|

| 88 |

+

Output: Parsed table in HTML form

|

| 89 |

+

What it does: Detects tables and parses them; runs full Unitable table detection

|

| 90 |

+

|

| 91 |

+

```

|

| 92 |

+

python main.py pdftable2 --test_file TestingFiles/OCRTest5English.pdf --debug_folder ./res/table_debug2/

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

|

| 96 |

+

### pdftable3

|

| 97 |

+

Input: PDF file path

|

| 98 |

+

Output: Parsed table in HTML form

|

| 99 |

+

What it does: Detects tables with YOLO, then uses Unitable + DocTR

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

### pdftable4

|

| 104 |

+

Input: PDF file path

|

| 105 |

+

Output: Parsed table in HTML form

|

| 106 |

+

What it does: Detects tables with YOLO; runs full DocTR table detection

|

| 107 |

+

|

| 108 |

+

python main.py pdftable4 --test_file TestingFiles/TableOCRTestEasier.pdf --debug_folder ./res/table_debug3/

|

| 109 |

+

|

| 110 |

+

|

| 111 |

+

## bbox

|

| 112 |

+

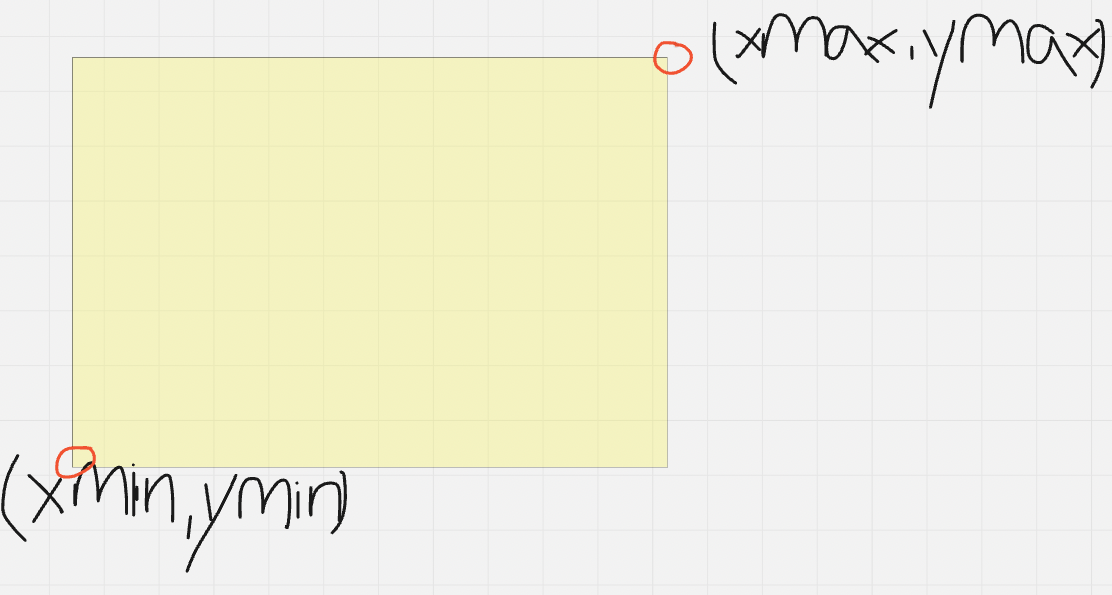

Bounding boxes are ordered as [xmin, ymin, xmax, ymax], because image coordinates start at (0,0) in the upper-left corner of the image.

|

| 113 |

+

|

| 114 |

+

xmin, ymin - upper-left corner

|

| 115 |

+

xmax, ymax - lower-right corner
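As a minimal sketch of the bounding box convention above: because the origin is the upper-left corner and y grows downward, `ymin` is the top edge and `ymax` is the bottom edge. The helper names `quad_to_bbox` and `bbox_size` are hypothetical and introduced only for illustration; the four-point input format is an assumption modelled on the four-corner boxes that `drawTextDetRes` in `app.py` converts.

```python
from typing import List, Sequence, Tuple

Point = Sequence[float]


def quad_to_bbox(quad: Sequence[Point]) -> List[float]:
    """Convert a four-corner box into [xmin, ymin, xmax, ymax]."""
    xs = [p[0] for p in quad]
    ys = [p[1] for p in quad]
    return [min(xs), min(ys), max(xs), max(ys)]


def bbox_size(bbox: Sequence[float]) -> Tuple[float, float]:
    """Return (width, height) of an [xmin, ymin, xmax, ymax] box."""
    xmin, ymin, xmax, ymax = bbox
    return xmax - xmin, ymax - ymin


# Corners listed clockwise starting from the upper-left corner.
quad = [(12, 8), (110, 8), (110, 40), (12, 40)]
bbox = quad_to_bbox(quad)
print(bbox)             # [12, 8, 110, 40]
print(bbox_size(bbox))  # (98, 32)
```

Taking the minimum and maximum over all corners, rather than reading fixed corner positions, keeps the result valid even when the corners arrive in a different order.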

|

| 116 |

+

|

| 117 |

+

|

| 118 |

|

|

|

abstractClass.py

ADDED

|

@@ -0,0 +1,59 @@

|

| 1 |

+

|

| 2 |

+

from typing import Any, List, Literal, Mapping, Optional, Tuple

|

| 3 |

+

from abc import ABC, abstractmethod

|

| 4 |

+

|

| 5 |

+

import numpy as np

|

| 6 |

+

import cv2

|

| 7 |

+

from PIL import Image

|

| 8 |

+

from abc import ABC, abstractmethod

|

| 9 |

+

|

| 10 |

+

from utils import cropImage

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

class OCRComponent:

|

| 14 |

+

"""

|

| 15 |

+

Wrapper class for cropping images and giving it to OCR Predictor

|

| 16 |

+

"""

|

| 17 |

+

def predict_pdf(self, pdf_name:str="", page:int=None, bbx:List[List[float]]=None)-> List[List[float]]:

|

| 18 |

+

#TODO: Preprocessing to crop interest region

|

| 19 |

+

pass

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

class TextDetector(ABC):

|

| 23 |

+

"""

|

| 24 |

+

Abstract base class for text detectors that takes in bounding boxes, pdf name, and page

|

| 25 |

+

and returns bounding boxes results on them.

|

| 26 |

+

"""

|

| 27 |

+

|

| 28 |

+

def __init__(self):

|

| 29 |

+

|

| 30 |

+

pass

|

| 31 |

+

|

| 32 |

+

"""

|

| 33 |

+

This is for predicting given an already cropped image

|

| 34 |

+

"""

|

| 35 |

+

@abstractmethod

|

| 36 |

+

def predict_img(self, img:np.ndarray=None)-> List[List[float]]:

|

| 37 |

+

# do something with self.input and return bbx

|

| 38 |

+

pass

|

| 39 |

+

|

| 40 |

+

class textRecognizer(ABC):

|

| 41 |

+

"""

|

| 42 |

+

class of textRecognizer that takes in bounding boxes, pdf name and page and returns

|

| 43 |

+

OCR results on them

|

| 44 |

+

"""

|

| 45 |

+

|

| 46 |

+

def __init__(self):

|

| 47 |

+

|

| 48 |

+

pass

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

"""

|

| 52 |

+

This is for predicting given the text line detection result from the text line detector

|

| 53 |

+

"""

|

| 54 |

+

@abstractmethod

|

| 55 |

+

def predict_img(self, bxs:List[List[float]], img:Image.Image)-> List[List[float]]:

|

| 56 |

+

# do something with self.input and return bbx

|

| 57 |

+

pass

|

| 58 |

+

|

| 59 |

+

|

app.py

ADDED

|

@@ -0,0 +1,406 @@

|

| 1 |

+

|

| 2 |

+

import os

|

| 3 |

+

import traceback

|

| 4 |

+

import argparse

|

| 5 |

+

from typing import List, Tuple, Set, Dict

|

| 6 |

+

|

| 7 |

+

import time

|

| 8 |

+

from PIL import Image

|

| 9 |

+

import numpy as np

|

| 10 |

+

from doctr.models import ocr_predictor

|

| 11 |

+

import logging

|

| 12 |

+

import pandas as pd

|

| 13 |

+

from bs4 import BeautifulSoup

|

| 14 |

+

import gradio

|

| 15 |

+

|

| 16 |

+

from utils import cropImages

|

| 17 |

+

from utils import draw_only_box,draw_box_with_text,getlogger,Annotation

|

| 18 |

+

from ocr_component1 import OCRComponent1

|

| 19 |

+

from detectionAndOcrTable1 import DetectionAndOcrTable1

|

| 20 |

+

from detectionAndOcrTable2 import DetectionAndOcrTable2

|

| 21 |

+

from detectionAndOcrTable3 import DetectionAndOcrTable3

|

| 22 |

+

from detectionAndOcrTable4 import DetectionAndOcrTable4

|

| 23 |

+

from ocrTable1 import OcrTable1

|

| 24 |

+

from ocrTable2 import OcrTable2

|

| 25 |

+

from pdf2image import convert_from_path

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

def convertHTMLToCSV(html:str,output_path:str)->None:

|

| 29 |

+

|

| 30 |

+

# empty list

|

| 31 |

+

data = []

|

| 32 |

+

|

| 33 |

+

# for getting the header from

|

| 34 |

+

# the HTML file

|

| 35 |

+

list_header = []

|

| 36 |

+

soup = BeautifulSoup(html,'html.parser')

|

| 37 |

+

header = soup.find_all("table")[0].find("tr")

|

| 38 |

+

|

| 39 |

+

for items in header:

|

| 40 |

+

try:

|

| 41 |

+

list_header.append(items.get_text())

|

| 42 |

+

except:

|

| 43 |

+

continue

|

| 44 |

+

|

| 45 |

+

# for getting the data

|

| 46 |

+

HTML_data = soup.find_all("table")[0].find_all("tr")[1:]

|

| 47 |

+

|

| 48 |

+

for element in HTML_data:

|

| 49 |

+

sub_data = []

|

| 50 |

+

for sub_element in element:

|

| 51 |

+

try:

|

| 52 |

+

sub_data.append(sub_element.get_text())

|

| 53 |

+

except:

|

| 54 |

+

continue

|

| 55 |

+

data.append(sub_data)

|

| 56 |

+

|

| 57 |

+

# Storing the data into Pandas

|

| 58 |

+

# DataFrame

|

| 59 |

+

dataFrame = pd.DataFrame(data = data, columns = list_header)

|

| 60 |

+

|

| 61 |

+

# Converting Pandas DataFrame

|

| 62 |

+

# into CSV file

|

| 63 |

+

dataFrame.to_csv(output_path)

|

| 64 |

+

|

| 65 |

+

def saveResults(image_list, results, labels, output_dir='output/', threshold=0.5):

|

| 66 |

+

if not os.path.exists(output_dir):

|

| 67 |

+

os.makedirs(output_dir)

|

| 68 |

+

for idx, im in enumerate(image_list):

|

| 69 |

+

im = draw_only_box(im, results[idx], labels, threshold=threshold)

|

| 70 |

+

|

| 71 |

+

out_path = os.path.join(output_dir, f"{idx}.jpg")

|

| 72 |

+

im.save(out_path, quality=95)

|

| 73 |

+

print("save result to: " + out_path)

|

| 74 |

+

|

| 75 |

+

def InputToImages(input_path:str,resolution=300)-> List[Image.Image]:

|

| 76 |

+

"""

|

| 77 |

+

input is file location to image

|

| 78 |

+

return : List of Pillow image objects

|

| 79 |

+

"""

|

| 80 |

+

images=[]

|

| 81 |

+

try:

|

| 82 |

+

img =Image.open(input_path)

|

| 83 |

+

if img.mode == 'RGBA':

|

| 84 |

+

img = img.convert('RGB')

|

| 85 |

+

images.append(img)

|

| 86 |

+

except Exception as e:

|

| 87 |

+

traceback.print_exc()

|

| 88 |

+

return images

|

| 89 |

+

|

| 90 |

+

def drawTextDetRes(bxs :List[List[float]],img:Image.Image,output_path:str):

|

| 91 |

+

"""

|

| 92 |

+

draw layout analysis results

|

| 93 |

+

"""

|

| 94 |

+

"""bxs_draw is xmin, ymin, xmax, ymax"""

|

| 95 |

+

bxs_draw = [[b[0][0], b[0][1], b[1][0], b[-1][1]] for b in bxs if b[0][0] <= b[1][0] and b[0][1] <= b[-1][1]]

|

| 96 |

+

|

| 97 |

+

#images_to_recognizer = cropImage(bxs, img)

|

| 98 |

+

img_to_save = draw_only_box(img, bxs_draw)

|

| 99 |

+

img_to_save.save(output_path, quality=95)

|

| 100 |

+

|

| 101 |

+

def test_ocr_component1(test_file="TestingFiles/OCRTest1German.pdf", debug_folder = './res/table1/',englishFlag = False):

|

| 102 |

+

#Takes as input image of a single page and returns the detected lines and words

|

| 103 |

+

|

| 104 |

+

images = convert_from_path(test_file)

|

| 105 |

+

ocr = OCRComponent1(englishFlag)

|

| 106 |

+

ocr_results = {}

|

| 107 |

+

|

| 108 |

+

all_text_in_pages = {}

|

| 109 |

+

for page_number,img in enumerate(images):

|

| 110 |

+

text_in_page = ""

|

| 111 |

+

|

| 112 |

+

line_annotations= ocr.predict(img = np.array(img))

|

| 113 |

+

ocr_results[page_number] = line_annotations

|

| 114 |

+

|

| 115 |

+

"""

|

| 116 |

+

boxes_to_draw =[]

|

| 117 |

+

for list_of_ann in word_annotations:

|

| 118 |

+

for ann in list_of_ann:

|

| 119 |

+

logger.info(ann.text)

|

| 120 |

+

b = ann.box

|

| 121 |

+

boxes_to_draw.append(b)

|

| 122 |

+

|

| 123 |

+

img_to_save = draw_only_box(img,boxes_to_draw)

|

| 124 |

+

img_to_save.save("res/12June_2_lines.png", quality=95)

|

| 125 |

+

"""

|

| 126 |

+

|

| 127 |

+

line_boxes_to_draw =[]

|

| 128 |

+

#print("Detected lines are ")

|

| 129 |

+

#print(len(line_annotations.items()))

|

| 130 |

+

for index,ann in line_annotations.items():

|

| 131 |

+

|

| 132 |

+

b = ann.box

|

| 133 |

+

line_boxes_to_draw.append(b)

|

| 134 |

+

line_words = ""

|

| 135 |

+

#print("detected words per line")

|

| 136 |

+

#print(len(ann.words))

|

| 137 |

+

for wordann in ann.words:

|

| 138 |

+

line_words += wordann.text +" "

|

| 139 |

+

print(line_words)

|

| 140 |

+

text_in_page += line_words +"\n"

|

| 141 |

+

|

| 142 |

+

img_to_save1 = draw_only_box(img,line_boxes_to_draw)

|

| 143 |

+

imgname = test_file.split("/")[-1][:-4]

|

| 144 |

+

img_to_save1.save(debug_folder+imgname+"_"+str(page_number)+"_bbox_detection.png", quality=95)

|

| 145 |

+

|

| 146 |

+

all_text_in_pages[page_number] = text_in_page

|

| 147 |

+

|

| 148 |

+

return ocr_results, all_text_in_pages

|

| 149 |

+

|

| 150 |

+

|

| 151 |

+

def test_tableOcrOnly1(test_file :Image.Image , debug_folder = './res/table1/',denoise = False,englishFlag = False):

|

| 152 |

+

#Hybrid Unitable +DocTR

|

| 153 |

+

#Good at tables with a lot of text

|

| 154 |

+

table = OcrTable1(englishFlag)

|

| 155 |

+

image = test_file.convert("RGB")

|

| 156 |

+

"""

|

| 157 |

+

parts = test_file.split("/")

|

| 158 |

+

filename = parts[-1][:-4]

|

| 159 |

+

debugfolder_filename_page_name= debug_folder+filename+"_"

|

| 160 |

+

|

| 161 |

+

table_code = table.predict([image],debugfolder_filename_page_name,denoise = denoise)

|

| 162 |

+

with open(debugfolder_filename_page_name+'output.txt', 'w') as file:

|

| 163 |

+

file.write(table_code)

|

| 164 |

+

"""

|

| 165 |

+

|

| 166 |

+

table_code = table.predict([image],denoise = denoise)

|

| 167 |

+

return table_code

|

| 168 |

+

|

| 169 |

+

|

| 170 |

+

def test_tableOcrOnly2(test_file:Image.Image, debug_folder = './res/table2/'):

|

| 171 |

+

table = OcrTable2()

|

| 172 |

+

#FullUnitable

|

| 173 |

+

#Good at tables with little text

|

| 174 |

+

|

| 175 |

+

image = test_file.convert("RGB")

|

| 176 |

+

table.predict([image],debug_folder)

|

| 177 |

+

|

| 178 |

+

def test_table_component1(test_file = 'TestingFiles/TableOCRTestEnglish.pdf', debug_folder ='./res/table_debug2/',denoise = False,englishFlag = True):

|

| 179 |

+

table_predictor = DetectionAndOcrTable1(englishFlag)

|

| 180 |

+

|

| 181 |

+

images = convert_from_path(test_file)

|

| 182 |

+

for page_number,img in enumerate(images):

|

| 183 |

+

|

| 184 |

+

#print(img.mode)

|

| 185 |

+

print("Looking at page:")

|

| 186 |

+

print(page_number)

|

| 187 |

+

parts = test_file.split("/")

|

| 188 |

+

filename = parts[-1][:-4]

|

| 189 |

+

debugfolder_filename_page_name= debug_folder+filename+"_"+ str(page_number)+'_'

|

| 190 |

+

table_codes = table_predictor.predict(img,debugfolder_filename_page_name=debugfolder_filename_page_name,denoise = denoise)

|

| 191 |

+

for index, table_code in enumerate(table_codes):

|

| 192 |

+

with open(debugfolder_filename_page_name+str(index)+'output.xls', 'w') as file:

|

| 193 |

+

file.write(table_code)

|

| 194 |

+

return table_codes

|

| 195 |

+

|

| 196 |

+

def test_table_component2(test_file = 'TestingFiles/TableOCRTestEnglish.pdf', debug_folder ='./res/table_debug2/'):

|

| 197 |

+

#This component takes an entire PDF page as input, scans it for tables and returns each table in HTML format

|

| 198 |

+

#Uses the full unitable model

|

| 199 |

+

|

| 200 |

+

table_predictor = DetectionAndOcrTable2()

|

| 201 |

+

|

| 202 |

+

images = convert_from_path(test_file)

|

| 203 |

+

for page_number,img in enumerate(images):

|

| 204 |

+

print("Looking at page:")

|

| 205 |

+

print(page_number)

|

| 206 |

+

parts = test_file.split("/")

|

| 207 |

+

filename = parts[-1][:-4]

|

| 208 |

+

debugfolder_filename_page_name= debug_folder+filename+"_"+ str(page_number)+'_'

|

| 209 |

+

table_codes = table_predictor.predict(img,debugfolder_filename_page_name=debugfolder_filename_page_name)

|

| 210 |

+

for index, table_code in enumerate(table_codes):

|

| 211 |

+

with open(debugfolder_filename_page_name+str(index)+'output.xls', 'w') as file:

|

| 212 |

+

file.write(table_code)

|

| 213 |

+

return table_codes

|

| 214 |

+

|

| 215 |

+

def test_table_component3(test_file = 'TestingFiles/TableOCRTestEnglish.pdf',debug_folder ='./res/table_debug3/',denoise = False,englishFlag = True):

|

| 216 |

+

table_predictor = DetectionAndOcrTable3(englishFlag)

|

| 217 |

+

|

| 218 |

+

images = convert_from_path(test_file)

|

| 219 |

+

for page_number,img in enumerate(images):

|

| 220 |

+

#print(img.mode)

|

| 221 |

+

print("Looking at page:")

|

| 222 |

+

print(page_number)

|

| 223 |

+

parts = test_file.split("/")

|

| 224 |

+

filename = parts[-1][:-4]

|

| 225 |

+

debugfolder_filename_page_name= debug_folder+filename+"_"+ str(page_number)+'_'

|

| 226 |

+

table_codes = table_predictor.predict(img,debugfolder_filename_page_name=debugfolder_filename_page_name)

|

| 227 |

+

for index, table_code in enumerate(table_codes):

|

| 228 |

+

with open(debugfolder_filename_page_name+str(index)+'output.xls', 'w') as file:

|

| 229 |

+

file.write(table_code)

|

| 230 |

+

return table_codes

|

| 231 |

+

|

| 232 |

+

|

| 233 |

+

|

| 234 |

+

def test_table_component4(test_file = 'TestingFiles/TableOCRTestEnglish.pdf',debug_folder ='./res/table_debug3/'):

|

| 235 |

+

table_predictor = DetectionAndOcrTable4()

|

| 236 |

+

|

| 237 |

+

images = convert_from_path(test_file)

|

| 238 |

+

for page_number,img in enumerate(images):

|

| 239 |

+

#print(img.mode)

|

| 240 |

+

print("Looking at page:")

|

| 241 |

+

print(page_number)

|

| 242 |

+

parts = test_file.split("/")

|

| 243 |

+

filename = parts[-1][:-4]

|

| 244 |

+

debugfolder_filename_page_name= debug_folder+filename+"_"+ str(page_number)+'_'

|

| 245 |

+

table_codes = table_predictor.predict(img,debugfolder_filename_page_name=debugfolder_filename_page_name)

|

| 246 |

+

for index, table_code in enumerate(table_codes):

|

| 247 |

+

with open(debugfolder_filename_page_name+str(index)+'output.xls', 'w') as file:

|

| 248 |

+

file.write(table_code)

|

| 249 |

+

return table_codes

|

| 250 |

+

|

| 251 |

+

|

| 252 |

+

"""

|

| 253 |

+

parser = argparse.ArgumentParser(description='Process some strings.')

|

| 254 |

+

parser.add_argument('ocr', type=str, help='type in id of the component to test')

|

| 255 |

+

parser.add_argument('--test_file',type=str, help='path to the testing file')

|

| 256 |

+

parser.add_argument('--debug_folder',type=str, help='path to the folder you want to save your results in')

|

| 257 |

+

parser.add_argument('--englishFlag',action='store_true', help='Set if the PDF is in English; this can lead to better results')

|

| 258 |

+

parser.add_argument('--denoise',action='store_true', help='Apply denoising preprocessing to noisy scans')

|

| 259 |

+

|

| 260 |

+

args = parser.parse_args()

|

| 261 |

+

start = time.time()

|

| 262 |

+

if args.ocr == "ocr1":

|

| 263 |

+

test_ocr_component1(args.test_file,args.debug_folder, args.englishFlag)

|

| 264 |

+

elif args.ocr == "table1":

|

| 265 |

+

test_tableOcrOnly1(args.test_file,args.debug_folder,args.englishFlag,args.denoise)

|

| 266 |

+

elif args.ocr == "table2":

|

| 267 |

+

test_tableOcrOnly2(args.test_file,args.debug_folder)

|

| 268 |

+

elif args.ocr =="pdftable1":

|

| 269 |

+

test_table_component1(args.test_file,args.debug_folder,args.englishFlag,args.denoise)

|

| 270 |

+

elif args.ocr =="pdftable2":

|

| 271 |

+

test_table_component2(args.test_file,args.debug_folder)

|

| 272 |

+

elif args.ocr =="pdftable3":

|

| 273 |

+

test_table_component3(args.test_file,args.debug_folder,args.englishFlag,args.denoise)

|

| 274 |

+

elif args.ocr =="pdftable4":

|

| 275 |

+

test_table_component4(args.test_file,args.debug_folder)

|

| 276 |

+

|

| 277 |

+

"""

|

| 278 |

+

import gradio as gr

|

| 279 |

+

from gradio_pdf import PDF

|

| 280 |

+

|

| 281 |

+

with gr.Blocks() as demo:

|

| 282 |

+

gr.Markdown("# OCR component")

|

| 283 |

+

inputs_for_ocr = [PDF(label="Document"), gr.Textbox(label="internal debug folder",placeholder = "./res/table1/"),gr.Checkbox(label ="English Document?",value =False)]

|

| 284 |

+

ocr_btn = gr.Button("Run ocr")

|

| 285 |

+

|

| 286 |

+

gr.Examples(

|

| 287 |

+

examples=[["TestingFiles/OCRTest1German.pdf",'./res/table1/',False]],

|

| 288 |

+

inputs=inputs_for_ocr

|

| 289 |

+

)

|

| 290 |

+

|

| 291 |

+

outputs_for_ocr = [gr.Textbox(label="List of annotation objects"), gr.Textbox(label="Text in page")]

|

| 292 |

+

|

| 293 |

+

ocr_btn.click(fn=test_ocr_component1,

|

| 294 |

+

inputs = inputs_for_ocr,

|

| 295 |

+

outputs = outputs_for_ocr,

|

| 296 |

+

api_name="OCR"

|

| 297 |

+

)

|

| 298 |

+

|

| 299 |

+

gr.Markdown("# Table OCR components that take a PDF, extract its tables and return their HTML code")

|

| 300 |

+

gr.Markdown("## Component 1 uses table transformer and doctr + Unitable")

|

| 301 |

+

inputs_for_pdftable1 = [PDF(label="Document"), gr.Textbox(label="internal debug folder",placeholder = "./res/table1/"),gr.Checkbox(label ="Denoise?",value =False),gr.Checkbox(label ="English Document?",value =False)]

|

| 302 |

+

table1_btn = gr.Button("Run pdftable1")

|

| 303 |

+

|

| 304 |

+

gr.Examples(

|

| 305 |

+

examples=[["TestingFiles/OCRTest5English.pdf",'./res/table1/',False,False]],

|

| 306 |

+

inputs=inputs_for_pdftable1

|

| 307 |

+

)

|

| 308 |

+

outputs_for_pdftable1 = [gr.Textbox(label="Table code")]

|

| 309 |

+

|

| 310 |

+

table1_btn.click(fn=test_table_component1,

|

| 311 |

+

inputs = inputs_for_pdftable1,

|

| 312 |

+

outputs = outputs_for_pdftable1,

|

| 313 |

+

api_name="pdfTable1"

|

| 314 |

+

)

|

| 315 |

+

|

| 316 |

+

gr.Markdown("## Component 2 uses table transformer and Unitable")

|

| 317 |

+

inputs_for_pdftable2 = [PDF(label="Document"), gr.Textbox(label="internal debug folder",placeholder = "./res/table1/")]

|

| 318 |

+

table2_btn = gr.Button("Run pdftable2")

|

| 319 |

+

|

| 320 |

+

gr.Examples(

|

| 321 |

+

examples=[["TestingFiles/OCRTest5English.pdf",'./res/table1/']],

|

| 322 |

+

inputs=inputs_for_pdftable2

|

| 323 |

+

)

|

| 324 |

+

outputs_for_pdftable2 = [gr.Textbox(label="Table code")]

|

| 325 |

+

|

| 326 |

+

table2_btn.click(fn=test_table_component2,

|

| 327 |

+

inputs = inputs_for_pdftable2,

|

| 328 |

+

outputs = outputs_for_pdftable2,

|

| 329 |

+

api_name="pdfTable2"

|

| 330 |

+

)

|

| 331 |

+

|

| 332 |

+

gr.Markdown("## Component 3 uses Yolo and Unitable+doctr")

|

| 333 |

+

inputs_for_pdftable3 = [PDF(label="Document"), gr.Textbox(label="internal debug folder",placeholder = "./res/table1/"),gr.Checkbox(label ="Denoise?",value =False),gr.Checkbox(label ="English Document?",value =False)]

|

| 334 |

+

table3_btn = gr.Button("Run pdftable3")

|

| 335 |

+

|

| 336 |

+

|

| 337 |

+

gr.Examples(

|

| 338 |

+

examples=[["TestingFiles/TableOCRTestEnglish.pdf",'./res/table1/',False,False]],

|

| 339 |

+

inputs=inputs_for_pdftable3

|

| 340 |

+

)

|

| 341 |

+

outputs_for_pdftable3 = [gr.Textbox(label="Table code")]

|

| 342 |

+

|

| 343 |

+

table3_btn.click(fn=test_table_component3,

|

| 344 |

+

inputs = inputs_for_pdftable3,

|

| 345 |

+

outputs = outputs_for_pdftable3,

|

| 346 |

+

api_name="pdfTable3"

|

| 347 |

+

)

|

| 348 |

+

|

| 349 |

+

gr.Markdown("## Component 4 uses Yolo and Unitable")

|

| 350 |

+

inputs_for_pdftable4 = [PDF(label="Document"), gr.Textbox(label="internal debug folder",placeholder = "./res/table1/")]

|

| 351 |

+

table4_btn = gr.Button("Run pdftable4")

|

| 352 |

+

|

| 353 |

+

gr.Examples(

|

| 354 |

+

examples=[["TestingFiles/TableOCRTestEasier.pdf",'./res/table1/']],

|

| 355 |

+

inputs=inputs_for_pdftable4

|

| 356 |

+

)

|

| 357 |

+

outputs_for_pdftable4 = [gr.Textbox(label="Table code")]

|

| 358 |

+

|

| 359 |

+

|

| 360 |

+

table4_btn.click(fn=test_table_component4,

|

| 361 |

+

inputs = inputs_for_pdftable4,

|

| 362 |

+

outputs = outputs_for_pdftable4,

|

| 363 |

+

api_name="pdfTable4"

|

| 364 |

+

)

|

| 365 |

+

|

| 366 |

+

|

| 367 |

+

gr.Markdown("# Table OCR component that takes an image of a cropped table, extracts the table and returns its HTML code")

|

| 368 |

+

|

| 369 |

+

inputs_for_table1 = [gr.Image(label="Image of cropped table",type='pil'), gr.Textbox(label="internal debug folder",placeholder = "./res/table1/"),gr.Checkbox(label ="Denoise?",value =False),gr.Checkbox(label ="English Document?",value =False)]

|

| 370 |

+

onlytable1_btn = gr.Button("Run table1")

|

| 371 |

+

|

| 372 |

+

gr.Examples(

|

| 373 |

+

examples=[[Image.open("cropped_table.png"),'./res/table1/',False,False]],

|

| 374 |

+

inputs=inputs_for_table1

|

| 375 |

+

)

|

| 376 |

+

outputs_for_table1 = [gr.HTML(label="Table code")]

|

| 377 |

+

|

| 378 |

+

|

| 379 |

+

onlytable1_btn.click(fn=test_tableOcrOnly1,

|

| 380 |

+

inputs = inputs_for_table1,

|

| 381 |

+

outputs = outputs_for_table1,

|

| 382 |

+

api_name="table1"

|

| 383 |

+

)

|

| 384 |

+

|

| 385 |

+

gr.Markdown("## Another Table OCR component that takes an image of a cropped table, extracts the table and returns its HTML code")

|

| 386 |

+

|

| 387 |

+

inputs_for_table2 = [gr.Image(label="Image of cropped table",type='pil'), gr.Textbox(label="internal debug folder",placeholder = "./res/table1/")]

|

| 388 |

+

onlytable2_btn = gr.Button("Run table2")

|

| 389 |

+

|

| 390 |

+

|

| 391 |

+

gr.Examples(

|

| 392 |

+

examples=[[Image.open("cropped_table.png"),'./res/table1/']],

|

| 393 |

+

inputs=inputs_for_table2

|

| 394 |

+

)

|

| 395 |

+

outputs_for_table2 = [gr.HTML(label="Table code")]

|

| 396 |

+

|

| 397 |

+

onlytable2_btn.click(fn=test_tableOcrOnly2,

|

| 398 |

+

inputs = inputs_for_table2,

|

| 399 |

+

outputs = outputs_for_table2,

|

| 400 |

+

api_name="table2"

|

| 401 |

+

)

|

| 402 |

+

|

| 403 |

+

|

| 404 |

+

|

| 405 |

+

|

| 406 |

+

demo.launch(share=True)

|

cropped_table.png

ADDED

|

cropped_table_0.png

ADDED

|

cropped_table_1.png

ADDED

|

deepdoc/README.md

ADDED

|

@@ -0,0 +1,122 @@

|

| 1 |

+

English | [简体中文](./README_zh.md)

|

| 2 |

+

|

| 3 |

+

# *Deep*Doc

|

| 4 |

+

|

| 5 |

+

- [1. Introduction](#1)

|

| 6 |

+

- [2. Vision](#2)

|

| 7 |

+

- [3. Parser](#3)

|

| 8 |

+

|

| 9 |

+

<a name="1"></a>

|

| 10 |

+

## 1. Introduction

|

| 11 |

+

|

| 12 |

+

With documents from various domains, in various formats, and with diverse retrieval requirements,

|

| 13 |

+

accurate analysis becomes a very challenging task. *Deep*Doc was built for that purpose.

|

| 14 |

+

*Deep*Doc has two parts so far: vision and parser.

|

| 15 |

+

You can run the following test programs if you're interested in our OCR, layout recognition and TSR results.

|

| 16 |

+

```bash

|

| 17 |

+

python deepdoc/vision/t_ocr.py -h

|

| 18 |

+

usage: t_ocr.py [-h] --inputs INPUTS [--output_dir OUTPUT_DIR]

|

| 19 |

+

|

| 20 |

+

options:

|

| 21 |

+

-h, --help show this help message and exit

|

| 22 |

+

--inputs INPUTS Directory where to store images or PDFs, or a file path to a single image or PDF

|

| 23 |

+

--output_dir OUTPUT_DIR

|

| 24 |

+

Directory where to store the output images. Default: './ocr_outputs'

|

| 25 |

+

```

|

| 26 |

+

```bash

|

| 27 |

+

python deepdoc/vision/t_recognizer.py -h

|

| 28 |

+

usage: t_recognizer.py [-h] --inputs INPUTS [--output_dir OUTPUT_DIR] [--threshold THRESHOLD] [--mode {layout,tsr}]

|

| 29 |

+

|

| 30 |

+

options:

|

| 31 |

+

-h, --help show this help message and exit

|

| 32 |

+

--inputs INPUTS Directory where to store images or PDFs, or a file path to a single image or PDF

|

| 33 |

+

--output_dir OUTPUT_DIR

|

| 34 |

+

Directory where to store the output images. Default: './layouts_outputs'

|

| 35 |

+

--threshold THRESHOLD

|

| 36 |

+

A threshold to filter out detections. Default: 0.5

|

| 37 |

+

--mode {layout,tsr} Task mode: layout recognition or table structure recognition

|

| 38 |

+

```

|

| 39 |

+

|

| 40 |

+

Our models are hosted on Hugging Face. If you have trouble downloading them, setting a mirror endpoint might help:

|

| 41 |

+

```bash

|

| 42 |

+

export HF_ENDPOINT=https://hf-mirror.com

|

| 43 |

+

```
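
The same setting can be applied from Python. The following is a minimal sketch, assuming the models are fetched through `huggingface_hub`, which reads `HF_ENDPOINT` when it is first imported, so the variable has to be set before that import. `use_hf_mirror` is a hypothetical helper name, not part of *Deep*Doc.

```python
import os


def use_hf_mirror(endpoint: str = "https://hf-mirror.com") -> str:
    """Point Hugging Face downloads at a mirror unless one is already configured."""
    # setdefault keeps any endpoint that was already exported in the shell.
    return os.environ.setdefault("HF_ENDPOINT", endpoint)


# Call this before importing huggingface_hub (or anything that imports it).
active_endpoint = use_hf_mirror()
print(f"HF_ENDPOINT = {active_endpoint}")
```

Using `setdefault` rather than plain assignment means an endpoint chosen on the command line still takes precedence.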

|

| 44 |

+

|

| 45 |

+

<a name="2"></a>

|

| 46 |

+

## 2. Vision

|

| 47 |

+

|

| 48 |

+

We use visual information to solve problems, as humans do.

|

| 49 |

+

- OCR. Since many documents are presented as images, or can at least be converted to images,

|

| 50 |

+

OCR is an essential, fundamental and nearly universal solution for text extraction.

|

| 51 |

+

```bash

|

| 52 |

+

python deepdoc/vision/t_ocr.py --inputs=path_to_images_or_pdfs --output_dir=path_to_store_result

|

| 53 |

+

```

|

| 54 |

+

The input can be a directory of images or PDFs, or a single image or PDF.

|

| 55 |

+

In the folder 'path_to_store_result' you will find images that show the positions of the results,

|

| 56 |

+

and txt files that contain the OCR text.

|

| 57 |

+

<div align="center" style="margin-top:20px;margin-bottom:20px;">

|

| 58 |

+

<img src="https://github.com/infiniflow/ragflow/assets/12318111/f25bee3d-aaf7-4102-baf5-d5208361d110" width="900"/>

|

| 59 |

+

</div>
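
To check what a run produced, the output folder can be scanned from Python. This is only a sketch: the helper name `collect_ocr_outputs` and the image suffixes are assumptions, since the text above states only that the folder holds images and txt files.

```python
from pathlib import Path


def collect_ocr_outputs(output_dir: str) -> dict[str, list[Path]]:
    """Group the files in an OCR output folder into images and text files."""
    image_suffixes = {".jpg", ".jpeg", ".png"}  # assumed image formats
    grouped: dict[str, list[Path]] = {"images": [], "texts": []}
    root = Path(output_dir)
    if not root.is_dir():
        # Nothing has been written yet, so report empty groups.
        return grouped
    for path in sorted(root.iterdir()):
        suffix = path.suffix.lower()
        if suffix in image_suffixes:
            grouped["images"].append(path)
        elif suffix == ".txt":
            grouped["texts"].append(path)
    return grouped


if __name__ == "__main__":
    outputs = collect_ocr_outputs("./ocr_outputs")
    print(len(outputs["images"]), "images,", len(outputs["texts"]), "text files")
```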

|

| 60 |

+

|

| 61 |

+

- Layout recognition. Documents from different domains may have different layouts,

|

| 62 |

+

for example, newspapers, magazines, books and résumés each have a distinct layout.

|

| 63 |

+

Only with an accurate layout analysis can a machine decide whether text parts are successive,

|

| 64 |

+

whether a part needs Table Structure Recognition (TSR), or whether a part is a figure described by a given caption.

|

| 65 |

+

We have 10 basic layout components which cover most cases:

|

| 66 |

+

- Text

|

| 67 |

+

- Title

|

| 68 |

+

- Figure

|

| 69 |

+

- Figure caption

|

| 70 |

+

- Table

|

| 71 |

+

- Table caption

|

| 72 |

+

- Header

|

| 73 |

+

- Footer

|

| 74 |

+

- Reference

|

| 75 |

+

- Equation

|

| 76 |

+

|

| 77 |

+

Try the following command to see the layout detection results.

|

| 78 |

+

```bash

|

| 79 |

+

python deepdoc/vision/t_recognizer.py --inputs=path_to_images_or_pdfs --threshold=0.2 --mode=layout --output_dir=path_to_store_result

|

| 80 |

+

```

|

| 81 |

+

The input can be a directory of images or PDFs, or a single image or PDF.

|

| 82 |

+

In the folder 'path_to_store_result' you will find images that show the detection results, as follows:

|

| 83 |

+

<div align="center" style="margin-top:20px;margin-bottom:20px;">

|

| 84 |

+

<img src="https://github.com/infiniflow/ragflow/assets/12318111/07e0f625-9b28-43d0-9fbb-5bf586cd286f" width="1000"/>

|

| 85 |

+

</div>
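
The effect of `--threshold` can be pictured as a simple score filter over labelled detections. The sketch below is illustrative only: the `Detection` structure, the `filter_detections` helper and the inclusive `>=` comparison are assumptions, not the recognizer's actual data model.

```python
from dataclasses import dataclass

# The ten layout components listed above.
LAYOUT_LABELS = (
    "Text", "Title", "Figure", "Figure caption", "Table",
    "Table caption", "Header", "Footer", "Reference", "Equation",
)


@dataclass
class Detection:
    """Hypothetical detection record: label, confidence score and bounding box."""
    label: str
    score: float
    bbox: tuple[float, float, float, float]  # (x0, y0, x1, y1)


def filter_detections(detections: list[Detection], threshold: float = 0.5) -> list[Detection]:
    """Keep detections with a known label whose score reaches the threshold."""
    return [
        d for d in detections
        if d.label in LAYOUT_LABELS and d.score >= threshold
    ]


sample = [
    Detection("Title", 0.93, (40, 30, 560, 70)),
    Detection("Table", 0.35, (40, 200, 560, 480)),
    Detection("Figure", 0.15, (40, 500, 300, 700)),
]
kept = filter_detections(sample, threshold=0.2)
print([d.label for d in kept])
```

A lower threshold, such as the 0.2 used in the command above, keeps more low-confidence regions at the cost of more false positives.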

|

| 86 |

+

|

| 87 |

+

- Table Structure Recognition (TSR). A data table is a frequently used structure for presenting data, including numbers and text.

|

| 88 |

+

The structure of a table can be very complex, with hierarchical headers, spanning cells and projected row headers.

|

| 89 |

+

Along with TSR, we also reassemble the content into sentences that an LLM can readily comprehend.

|

| 90 |

+

We have five labels for the TSR task:

|

| 91 |

+

- Column

|

| 92 |

+

- Row

|

| 93 |

+

- Column header

|

| 94 |

+

- Projected row header

|

| 95 |

+

- Spanning cell
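
To illustrate what reassembling table content into sentences can look like, here is a minimal sketch for the simplest case: a single column-header row and plain cells. It is not *Deep*Doc's actual procedure, and `table_to_sentences` is a hypothetical helper; spanning cells and projected row headers would need extra handling.

```python
def table_to_sentences(headers: list[str], rows: list[list[str]]) -> list[str]:
    """Turn each table row into one 'header: value' sentence."""
    sentences = []
    for row in rows:
        # Pair every cell with its column header and skip empty cells.
        pairs = [f"{h}: {c}" for h, c in zip(headers, row) if c.strip()]
        if pairs:
            sentences.append("; ".join(pairs) + ".")
    return sentences


example = table_to_sentences(
    ["Year", "Revenue"],
    [["2022", "10"], ["2023", ""]],
)
print(example)
```

Repeating the header next to every value keeps each sentence self-contained, which helps when rows end up in different retrieval chunks.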

|

| 96 |

+

|

| 97 |

+

Try the following command to see the table structure recognition results.

|

| 98 |

+

```bash

|