Upload files

- .gitattributes +2 -0

- .gitignore +6 -0

- README.md +139 -13

- contents/alpha_scale.gif +3 -0

- contents/alpha_scale.mp4 +3 -0

- contents/disney_lora.jpg +0 -0

- contents/pop_art.jpg +0 -0

- lora_diffusion/__init__.py +1 -0

- lora_diffusion/cli_lora_add.py +49 -0

- lora_diffusion/lora.py +166 -0

- lora_disney.pt +3 -0

- lora_illust.pt +3 -0

- lora_pop.pt +3 -0

- requirements.txt +3 -3

- run_lora_db.sh +17 -0

- scripts/make_alpha_gifs.ipynb +0 -0

- scripts/run_inference.ipynb +0 -0

- setup.py +25 -0

- train_lora_dreambooth.py +964 -0

.gitattributes

CHANGED

@@ -32,3 +32,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+contents/alpha_scale.gif filter=lfs diff=lfs merge=lfs -text
+contents/alpha_scale.mp4 filter=lfs diff=lfs merge=lfs -text

.gitignore

ADDED

@@ -0,0 +1,6 @@
+data_*
+output_*
+__pycache__
+*.pyc
+__test*
+merged_lora*

README.md

CHANGED

@@ -1,13 +1,139 @@
-
-
-
-
-
-
-
-
-
-
-
-
-

| 1 |

+

# Low-rank Adaptation for Fast Text-to-Image Diffusion Fine-tuning

|

| 2 |

+

|

| 3 |

+

<!-- #region -->

|

| 4 |

+

<p align="center">

|

| 5 |

+

<img src="contents/alpha_scale.gif">

|

| 6 |

+

</p>

|

| 7 |

+

<!-- #endregion -->

|

| 8 |

+

|

| 9 |

+

> Using LORA to fine tune on illustration dataset : $W = W_0 + \alpha \Delta W$, where $\alpha$ is the merging ratio. Above gif is scaling alpha from 0 to 1. Setting alpha to 0 is same as using the original model, and setting alpha to 1 is same as using the fully fine-tuned model.

|

| 10 |

+

|

| 11 |

+

<!-- #region -->

|

| 12 |

+

<p align="center">

|

| 13 |

+

<img src="contents/disney_lora.jpg">

|

| 14 |

+

</p>

|

| 15 |

+

<!-- #endregion -->

|

| 16 |

+

|

| 17 |

+

> "style of sks, baby lion", with disney-style LORA model.

|

| 18 |

+

|

| 19 |

+

<!-- #region -->

|

| 20 |

+

<p align="center">

|

| 21 |

+

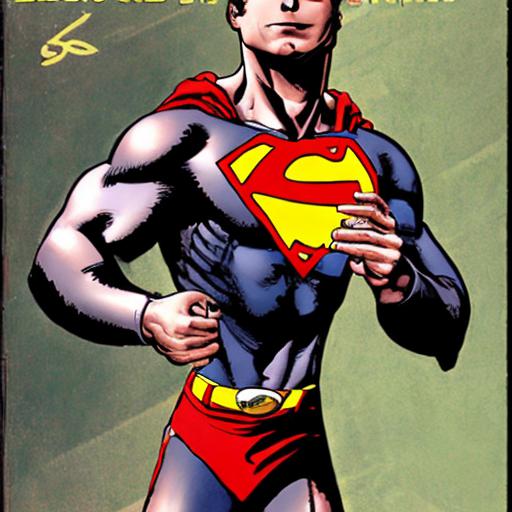

<img src="contents/pop_art.jpg">

|

| 22 |

+

</p>

|

| 23 |

+

<!-- #endregion -->

|

| 24 |

+

|

| 25 |

+

> "style of sks, superman", with pop-art style LORA model.

|

| 26 |

+

|

| 27 |

+

## Main Features

|

| 28 |

+

|

| 29 |

+

- Fine-tune Stable diffusion models twice as faster than dreambooth method, by Low-rank Adaptation

|

| 30 |

+

- Get insanely small end result, easy to share and download.

|

| 31 |

+

- Easy to use, compatible with diffusers

|

| 32 |

+

- Sometimes even better performance than full fine-tuning (but left as future work for extensive comparisons)

|

| 33 |

+

- Merge checkpoints by merging LORA

|

| 34 |

+

|

| 35 |

+

# Lengthy Introduction

|

| 36 |

+

|

| 37 |

+

Thanks to the generous work of Stability AI and Huggingface, so many people have enjoyed fine-tuning stable diffusion models to fit their needs and generate higher fidelity images. **However, the fine-tuning process is very slow, and it is not easy to find a good balance between the number of steps and the quality of the results.**

|

| 38 |

+

|

| 39 |

+

Also, the final results (fully fined-tuned model) is very large. Some people instead works with textual-inversion as an alternative for this. But clearly this is suboptimal: textual inversion only creates a small word-embedding, and the final image is not as good as a fully fine-tuned model.

|

| 40 |

+

|

| 41 |

+

Well, what's the alternative? In the domain of LLM, researchers have developed Efficient fine-tuning methods. LORA, especially, tackles the very problem the community currently has: end users with Open-sourced stable-diffusion model want to try various other fine-tuned model that is created by the community, but the model is too large to download and use. LORA instead attempts to fine-tune the "residual" of the model instead of the entire model: i.e., train the $\Delta W$ instead of $W$.

|

| 42 |

+

|

| 43 |

+

$$

|

| 44 |

+

W' = W + \Delta W

|

| 45 |

+

$$

|

| 46 |

+

|

| 47 |

+

Where we can further decompose $\Delta W$ into low-rank matrices : $\Delta W = A B^T $, where $A, \in \mathbb{R}^{n \times d}, B \in \mathbb{R}^{m \times d}, d << n$.

|

| 48 |

+

This is the key idea of LORA. We can then fine-tune $A$ and $B$ instead of $W$. In the end, you get an insanely small model as $A$ and $B$ are much smaller than $W$.

|

| 49 |

+

|

| 50 |

+

Also, not all of the parameters need tuning: they found that often, $Q, K, V, O$ (i.e., attention layer) of the transformer model is enough to tune. (This is also the reason why the end result is so small). This repo will follow the same idea.

|

| 51 |

+

|

| 52 |

+

Enough of the lengthy introduction, let's get to the code.

|

| 53 |

+

|
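
The size argument in the introduction above can be made concrete with a small sketch. This is plain Python rather than code from this repo: `matmul_abt` and `lora_param_counts` are hypothetical helpers, and the 320x320 layer size is an illustrative assumption. The rank of 4 matches the value hard-coded in `lora_diffusion/lora.py`.

```python
import random


def matmul_abt(A, B):
    """Return A @ B^T for A of shape (n, d) and B of shape (m, d), as nested lists."""
    return [[sum(a * b for a, b in zip(row_a, row_b)) for row_b in B] for row_a in A]


def lora_param_counts(n, m, d):
    """Number of values stored for a full n x m update vs. the two rank-d factors."""
    return n * m, d * (n + m)


random.seed(0)
n, m, d = 6, 5, 2  # tiny illustrative sizes so the product is cheap to build
A = [[random.gauss(0, 1) for _ in range(d)] for _ in range(n)]
B = [[random.gauss(0, 1) for _ in range(d)] for _ in range(m)]

delta_w = matmul_abt(A, B)  # shape (n, m), rank at most d
print(len(delta_w), len(delta_w[0]))  # 6 5

# Assumed layer size of 320 x 320 with rank 4.
full, low_rank = lora_param_counts(320, 320, 4)
print(full, low_rank)  # 102400 2560
```

Under these assumptions the two factors hold 40 times fewer values than the full update, which is where the small file size comes from.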

+# Installation
+
+```bash
+pip install git+https://github.com/cloneofsimo/lora.git
+```
+
+# Getting Started
+
+## Fine-tuning Stable diffusion with LORA.
+
+Basic usage is as follows: prepare sets of $A, B$ matrices in a unet model, and fine-tune them.
+
+```python
+from lora_diffusion import inject_trainable_lora, extract_lora_ups_down
+
+...
+
+unet = UNet2DConditionModel.from_pretrained(
+    pretrained_model_name_or_path,
+    subfolder="unet",
+)
+unet.requires_grad_(False)
+unet_lora_params, train_names = inject_trainable_lora(unet)  # This will
+# turn off all of the gradients of unet, except for the trainable LORA params.
+optimizer = optim.Adam(
+    itertools.chain(*unet_lora_params, text_encoder.parameters()), lr=1e-4
+)
+```
+
+An example of this can be found in `train_lora_dreambooth.py`. Run this example with
+
+```bash
+bash run_lora_db.sh
+```
+
+## Loading, merging, and interpolating trained LORAs.
+
+We've seen that people have been merging different checkpoints with different ratios, and this seems to be very useful to the community. LORA is extremely easy to merge.
+
+By the nature of LORA, one can interpolate between different fine-tuned models by adding different $A, B$ matrices.
+
+Currently, the LORA CLI has two options: merge unet with LORA, or merge LORA with LORA.
+
+### Merging unet with LORA
+
+```bash
+$ lora_add --path_1 PATH_TO_DIFFUSER_FORMAT_MODEL --path_2 PATH_TO_LORA.PT --mode upl --alpha 1.0 --output_path OUTPUT_PATH
+```
+
+`path_1` can be either a local path or a Hugging Face model name. When adding LORA to unet, alpha is the constant below:
+
+$$
+W' = W + \alpha \Delta W
+$$
+
+So, set alpha to 1.0 to fully add LORA. If the LORA seems to have too much effect (i.e., overfitted), set alpha to a lower value. If the LORA seems to have too little effect, set alpha higher than 1.0. You can tune these values to your needs.
+
+**Example**
+
+```bash
+$ lora_add --path_1 stabilityai/stable-diffusion-2-base --path_2 lora_illust.pt --mode upl --alpha 1.0 --output_path merged_model
+```
+
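
As a rough illustration of the role of alpha in $W' = W + \alpha \Delta W$, the sketch below applies the formula to a toy 2x2 weight using nested lists. `merge_weight` is a hypothetical helper written for this note and the numbers are made up; the repo itself does this on tensors in `weight_apply_lora`.

```python
def merge_weight(W, delta_W, alpha=1.0):
    """Return W + alpha * delta_W element-wise for equally shaped nested lists."""
    return [
        [w + alpha * dw for w, dw in zip(row_w, row_dw)]
        for row_w, row_dw in zip(W, delta_W)
    ]


W = [[1.0, 2.0], [3.0, 4.0]]          # toy base weights
delta_W = [[0.5, -1.0], [0.0, 2.0]]   # toy LoRA update

print(merge_weight(W, delta_W, alpha=0.0))  # [[1.0, 2.0], [3.0, 4.0]]  (base model)
print(merge_weight(W, delta_W, alpha=1.0))  # [[1.5, 1.0], [3.0, 6.0]]  (update fully applied)
print(merge_weight(W, delta_W, alpha=0.5))  # [[1.25, 1.5], [3.0, 5.0]] (half strength)
```

Values above 1.0 simply push further along the same direction, which is why a weak LoRA can be strengthened by raising alpha.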

+### Merging LORA with LORA
+
+```bash
+$ lora_add --path_1 PATH_TO_LORA.PT --path_2 PATH_TO_LORA.PT --mode lpl --alpha 0.5 --output_path OUTPUT_PATH.PT
+```
+
+alpha is the weight of the first model relative to the second, i.e.,
+
+$$
+\Delta W = (\alpha A_1 + (1 - \alpha) A_2) (\alpha B_1 + (1 - \alpha) B_2)^T
+$$
+
+Set alpha to 0.5 to get the average of the two models. Set alpha close to 1.0 to get more effect of the first model, and set alpha close to 0.0 to get more effect of the second model.
+
+**Example**
+
+```bash
+$ lora_add --path_1 lora_illust.pt --path_2 lora_pop.pt --alpha 0.3 --output_path lora_merged.pt
+```
+
+### Making Inference with trained LORA
+
+Check out `scripts/run_inference.ipynb` for an example of how to make inference with LORA.
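
One property of the LORA-with-LORA formula is worth seeing in numbers: interpolating the factors $A$ and $B$ separately is generally not the same as interpolating the two $\Delta W$ matrices, because the product introduces cross terms such as $A_1 B_2^T$. The sketch below uses 1x1 "matrices" to keep the arithmetic visible. `matmul_abt` and `lerp` are hypothetical helpers and the values are made up.

```python
def matmul_abt(A, B):
    """Return A @ B^T for nested-list matrices A (n x d) and B (m x d)."""
    return [[sum(a * b for a, b in zip(row_a, row_b)) for row_b in B] for row_a in A]


def lerp(X, Y, alpha):
    """Element-wise alpha * X + (1 - alpha) * Y."""
    return [
        [alpha * x + (1 - alpha) * y for x, y in zip(row_x, row_y)]
        for row_x, row_y in zip(X, Y)
    ]


A1, B1 = [[2.0]], [[3.0]]  # first LoRA:  delta_W1 = 2 * 3 = 6
A2, B2 = [[4.0]], [[1.0]]  # second LoRA: delta_W2 = 4 * 1 = 4
alpha = 0.5

# Factor-wise merge, as in the formula above.
factor_merge = matmul_abt(lerp(A1, A2, alpha), lerp(B1, B2, alpha))
# Interpolating the full updates instead.
delta_merge = lerp(matmul_abt(A1, B1), matmul_abt(A2, B2), alpha)

print(factor_merge, delta_merge)  # [[6.0]] [[5.0]]
```

The two agree at alpha = 0 and alpha = 1 and can differ in between, so intermediate alpha values are best treated as a knob to tune by eye rather than an exact blend of the two fine-tuned models.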

contents/alpha_scale.gif

ADDED

contents/alpha_scale.mp4

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:1ad74f5f69d99bfcbeee1d4d2b3900ac1ca7ff83fba5ddf8269ffed8a56c9c6e
+size 5247140

contents/disney_lora.jpg

ADDED

contents/pop_art.jpg

ADDED

lora_diffusion/__init__.py

ADDED

@@ -0,0 +1 @@
+from .lora import *

lora_diffusion/cli_lora_add.py

ADDED

@@ -0,0 +1,49 @@
+from typing import Literal, Union, Dict
+
+import fire
+from diffusers import StableDiffusionPipeline
+
+import torch
+from .lora import tune_lora_scale, weight_apply_lora
+
+
+def add(
+    path_1: str,
+    path_2: str,
+    output_path: str = "./merged_lora.pt",
+    alpha: float = 0.5,
+    mode: Literal["lpl", "upl"] = "lpl",
+):
+    if mode == "lpl":
+        out_list = []
+        l1 = torch.load(path_1)
+        l2 = torch.load(path_2)
+
+        l1pairs = zip(l1[::2], l1[1::2])
+        l2pairs = zip(l2[::2], l2[1::2])
+
+        for (x1, y1), (x2, y2) in zip(l1pairs, l2pairs):
+            x1.data = alpha * x1.data + (1 - alpha) * x2.data
+            y1.data = alpha * y1.data + (1 - alpha) * y2.data
+
+            out_list.append(x1)
+            out_list.append(y1)
+
+        torch.save(out_list, output_path)
+
+    elif mode == "upl":
+
+        loaded_pipeline = StableDiffusionPipeline.from_pretrained(
+            path_1,
+        ).to("cpu")
+
+        weight_apply_lora(loaded_pipeline.unet, torch.load(path_2), alpha=alpha)
+
+        if output_path.endswith(".pt"):
+            output_path = output_path[:-3]
+
+        loaded_pipeline.save_pretrained(output_path)
+
+
+def main():
+    fire.Fire(add)
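
The `lpl` branch above relies on the saved file being a flat list laid out as `[up_0, down_0, up_1, down_1, ...]`, which is the order `save_lora_weight` writes. A minimal sketch of that pairing and merge, with plain floats standing in for tensors, might look like this. `pair_up_down` and `merge_flat` are hypothetical names, and the even-length check is an addition that the CLI itself does not perform.

```python
def pair_up_down(flat):
    """Group a flat [up_0, down_0, up_1, down_1, ...] list into (up, down) pairs."""
    if len(flat) % 2 != 0:
        raise ValueError("expected an even number of entries (up/down pairs)")
    return list(zip(flat[::2], flat[1::2]))


def merge_flat(l1, l2, alpha):
    """Interpolate two flat LoRA lists pair by pair, keeping the flat layout."""
    out = []
    for (up1, down1), (up2, down2) in zip(pair_up_down(l1), pair_up_down(l2)):
        out.append(alpha * up1 + (1 - alpha) * up2)
        out.append(alpha * down1 + (1 - alpha) * down2)
    return out


l1 = [1.0, 2.0, 3.0, 4.0]  # floats standing in for weight tensors
l2 = [5.0, 6.0, 7.0, 8.0]

print(pair_up_down(l1))               # [(1.0, 2.0), (3.0, 4.0)]
print(merge_flat(l1, l2, alpha=0.5))  # [3.0, 4.0, 5.0, 6.0]
```

Because `zip` stops at the shorter input, merging two files with different numbers of layers would silently drop the extra entries, so both files should come from the same model architecture.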

lora_diffusion/lora.py

ADDED

@@ -0,0 +1,166 @@
+import math
+from typing import Callable, Dict, List, Optional, Tuple
+
+import numpy as np
+import PIL
+import torch
+import torch.nn.functional as F
+
+import torch.nn as nn
+
+
+class LoraInjectedLinear(nn.Module):
+    def __init__(self, in_features, out_features, bias=False):
+        super().__init__()
+        self.linear = nn.Linear(in_features, out_features, bias)
+        self.lora_down = nn.Linear(in_features, 4, bias=False)
+        self.lora_up = nn.Linear(4, out_features, bias=False)
+        self.scale = 1.0
+
+        nn.init.normal_(self.lora_down.weight, std=1 / 16)
+        nn.init.zeros_(self.lora_up.weight)
+
+    def forward(self, input):
+        return self.linear(input) + self.lora_up(self.lora_down(input)) * self.scale
+
+
+def inject_trainable_lora(
+    model: nn.Module, target_replace_module: List[str] = ["CrossAttention", "Attention"]
+):
+    """
+    inject lora into model, and returns lora parameter groups.
+    """
+
+    require_grad_params = []
+    names = []
+
+    for _module in model.modules():
+        if _module.__class__.__name__ in target_replace_module:
+
+            for name, _child_module in _module.named_modules():
+                if _child_module.__class__.__name__ == "Linear":
+
+                    weight = _child_module.weight
+                    bias = _child_module.bias
+                    _tmp = LoraInjectedLinear(
+                        _child_module.in_features,
+                        _child_module.out_features,
+                        _child_module.bias is not None,
+                    )
+                    _tmp.linear.weight = weight
+                    if bias is not None:
+                        _tmp.linear.bias = bias
+
+                    # switch the module
+                    _module._modules[name] = _tmp
+
+                    require_grad_params.append(
+                        _module._modules[name].lora_up.parameters()
+                    )
+                    require_grad_params.append(
+                        _module._modules[name].lora_down.parameters()
+                    )
+
+                    _module._modules[name].lora_up.weight.requires_grad = True
+                    _module._modules[name].lora_down.weight.requires_grad = True
+                    names.append(name)
+
+    return require_grad_params, names
+
+
+def extract_lora_ups_down(model, target_replace_module=["CrossAttention", "Attention"]):
+
+    loras = []
+
+    for _module in model.modules():
+        if _module.__class__.__name__ in target_replace_module:
+            for _child_module in _module.modules():
+                if _child_module.__class__.__name__ == "LoraInjectedLinear":
+                    loras.append((_child_module.lora_up, _child_module.lora_down))
+    if len(loras) == 0:
+        raise ValueError("No lora injected.")
+    return loras
+
+
+def save_lora_weight(model, path="./lora.pt"):
+    weights = []
+    for _up, _down in extract_lora_ups_down(model):
+        weights.append(_up.weight)
+        weights.append(_down.weight)
+
+    torch.save(weights, path)
+
+
+def save_lora_as_json(model, path="./lora.json"):
+    weights = []
+    for _up, _down in extract_lora_ups_down(model):
+        weights.append(_up.weight.detach().cpu().numpy().tolist())
+        weights.append(_down.weight.detach().cpu().numpy().tolist())
+
+    import json
+
+    with open(path, "w") as f:
+        json.dump(weights, f)
+
+
+def weight_apply_lora(
+    model, loras, target_replace_module=["CrossAttention", "Attention"], alpha=1.0
+):
+
+    for _module in model.modules():
+        if _module.__class__.__name__ in target_replace_module:
+            for _child_module in _module.modules():
+                if _child_module.__class__.__name__ == "Linear":
+
+                    weight = _child_module.weight
+
+                    up_weight = loras.pop(0).detach().to(weight.device)
+                    down_weight = loras.pop(0).detach().to(weight.device)
+
+                    # W <- W + U * D
+                    weight = weight + alpha * (up_weight @ down_weight).type(
+                        weight.dtype
+                    )
+                    _child_module.weight = nn.Parameter(weight)
+
+
+def monkeypatch_lora(
+    model, loras, target_replace_module=["CrossAttention", "Attention"]
+):
+    for _module in model.modules():
+        if _module.__class__.__name__ in target_replace_module:
+            for name, _child_module in _module.named_modules():
+                if _child_module.__class__.__name__ == "Linear":
+
+                    weight = _child_module.weight
+                    bias = _child_module.bias
+                    _tmp = LoraInjectedLinear(
+                        _child_module.in_features,
+                        _child_module.out_features,
+                        _child_module.bias is not None,
+                    )
+                    _tmp.linear.weight = weight
+
+                    if bias is not None:
+                        _tmp.linear.bias = bias
+
+                    # switch the module
+                    _module._modules[name] = _tmp
+
+                    up_weight = loras.pop(0)
+                    down_weight = loras.pop(0)
+
+                    _module._modules[name].lora_up.weight = nn.Parameter(
+                        up_weight.type(weight.dtype)
+                    )
+                    _module._modules[name].lora_down.weight = nn.Parameter(
+                        down_weight.type(weight.dtype)
+                    )
+
+                    _module._modules[name].to(weight.device)
+
+
+def tune_lora_scale(model, alpha: float = 1.0):
+    for _module in model.modules():
+        if _module.__class__.__name__ == "LoraInjectedLinear":
+            _module.scale = alpha
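
To show why `LoraInjectedLinear` above does not change the model at the start of training, here is a framework-free sketch of its forward pass, `linear(x) + lora_up(lora_down(x)) * scale`, with `lora_up` initialised to zeros. `TinyLoraLinear` and `matvec` are hypothetical stand-ins written for this note (bias omitted, nested lists instead of tensors), not part of the package.

```python
import random


def matvec(M, x):
    """Multiply a nested-list matrix M (rows x cols) by a vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


class TinyLoraLinear:
    """Pure-Python stand-in for W x + scale * up(down(x)), without bias."""

    def __init__(self, weight, rank=4, seed=0):
        rng = random.Random(seed)
        out_features, in_features = len(weight), len(weight[0])
        self.weight = weight
        # Same initialisation scheme as the class above: small normal `down`, zero `up`.
        self.down = [[rng.gauss(0, 1 / 16) for _ in range(in_features)] for _ in range(rank)]
        self.up = [[0.0] * rank for _ in range(out_features)]
        self.scale = 1.0

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.up, matvec(self.down, x))
        return [b + self.scale * u for b, u in zip(base, update)]


layer = TinyLoraLinear([[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]])
x = [1.0, 2.0, 3.0]

# With `up` at zero the LoRA branch contributes nothing, so the output equals W x.
print(layer.forward(x) == matvec(layer.weight, x))  # True
```

Setting `scale` to 0 after training has the same effect of switching the LoRA branch off, which is what `tune_lora_scale` exposes for the real module.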

lora_disney.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:72f687f810b86bb8cc64d2ece59886e2e96d29e3f57f97340ee147d168b8a5fe
+size 3397249

lora_illust.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:7f6acb0bc0cd5f96299be7839f89f58727e2666e58861e55866ea02125c97aba
+size 3397249

lora_pop.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:18a1565852a08cfcff63e90670286c9427e3958f57de9b84e3f8b2c9a3a14b6c
+size 3397249

requirements.txt

CHANGED

@@ -1,4 +1,4 @@
-diffusers
+diffusers>=0.9.0
 transformers
-
-
+scipy
+ftfy

run_lora_db.sh

ADDED

@@ -0,0 +1,17 @@
+#https://github.com/huggingface/diffusers/tree/main/examples/dreambooth
+export MODEL_NAME="stabilityai/stable-diffusion-2-1-base"
+export INSTANCE_DIR="./data_example"
+export OUTPUT_DIR="./output_example"
+
+accelerate launch train_lora_dreambooth.py \
+  --pretrained_model_name_or_path=$MODEL_NAME \
+  --instance_data_dir=$INSTANCE_DIR \
+  --output_dir=$OUTPUT_DIR \
+  --instance_prompt="style of sks" \
+  --resolution=512 \
+  --train_batch_size=1 \
+  --gradient_accumulation_steps=1 \
+  --learning_rate=1e-4 \
+  --lr_scheduler="constant" \
+  --lr_warmup_steps=0 \
+  --max_train_steps=30000

scripts/make_alpha_gifs.ipynb

ADDED

The diff for this file is too large to render.

scripts/run_inference.ipynb

ADDED

The diff for this file is too large to render.

setup.py

ADDED

@@ -0,0 +1,25 @@
+import os
+
+import pkg_resources
+from setuptools import find_packages, setup
+
+setup(
+    name="lora_diffusion",
+    py_modules=["lora_diffusion"],
+    version="0.0.1",
+    description="Low Rank Adaptation for Diffusion Models. Works with Stable Diffusion out-of-the-box.",
+    author="Simo Ryu",
+    packages=find_packages(),
+    entry_points={
+        "console_scripts": [
+            "lora_add = lora_diffusion.cli_lora_add:main",
+        ],
+    },
+    install_requires=[
+        str(r)
+        for r in pkg_resources.parse_requirements(
+            open(os.path.join(os.path.dirname(__file__), "requirements.txt"))
+        )
+    ],
+    include_package_data=True,
+)

train_lora_dreambooth.py

ADDED

|

@@ -0,0 +1,964 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

+# Bootstrapped from:
+# https://github.com/huggingface/diffusers/blob/main/examples/dreambooth/train_dreambooth.py
+
+import argparse
+import hashlib
+import itertools
+import math
+import os
+from pathlib import Path
+from typing import Optional
+
+import torch
+import torch.nn.functional as F
+import torch.utils.checkpoint
+
+
+from accelerate import Accelerator
+from accelerate.logging import get_logger
+from accelerate.utils import set_seed
+from diffusers import (
+    AutoencoderKL,
+    DDPMScheduler,
+    StableDiffusionPipeline,
+    UNet2DConditionModel,
+)
+from diffusers.optimization import get_scheduler
+from huggingface_hub import HfFolder, Repository, whoami
+
+from tqdm.auto import tqdm
+from transformers import CLIPTextModel, CLIPTokenizer
+
+from lora_diffusion import (
+    inject_trainable_lora,
+    save_lora_weight,
+    extract_lora_ups_down,
+)
+
+from torch.utils.data import Dataset
+from PIL import Image
+from torchvision import transforms
+
+import random
+import re
+
+
+class DreamBoothDataset(Dataset):
+    """
+    A dataset to prepare the instance and class images with the prompts for fine-tuning the model.
+    It pre-processes the images and tokenizes the prompts.
+    """
+
+    def __init__(
+        self,
+        instance_data_root,
+        instance_prompt,
+        tokenizer,
+        class_data_root=None,
+        class_prompt=None,
+        size=512,
+        center_crop=False,
+    ):
+        self.size = size
+        self.center_crop = center_crop
+        self.tokenizer = tokenizer
+
+        self.instance_data_root = Path(instance_data_root)
+        if not self.instance_data_root.exists():
+            raise ValueError("Instance images root doesn't exist.")
+
+        self.instance_images_path = list(Path(instance_data_root).iterdir())
+        self.num_instance_images = len(self.instance_images_path)
+        self.instance_prompt = instance_prompt
+        self._length = self.num_instance_images
+
+        if class_data_root is not None:
+            self.class_data_root = Path(class_data_root)
+            self.class_data_root.mkdir(parents=True, exist_ok=True)
+            self.class_images_path = list(self.class_data_root.iterdir())
+            self.num_class_images = len(self.class_images_path)
+            # The dataset is as long as the larger of the two image sets.
+            self._length = max(self.num_class_images, self.num_instance_images)
+            self.class_prompt = class_prompt
+        else:
+            self.class_data_root = None
+
+        self.image_transforms = transforms.Compose(
+            [
+                transforms.Resize(
+                    size, interpolation=transforms.InterpolationMode.BILINEAR
+                ),
+                transforms.CenterCrop(size)
+                if center_crop
+                else transforms.RandomCrop(size),
+                transforms.ToTensor(),
+                transforms.Normalize([0.5], [0.5]),
+            ]
+        )
+
+    def __len__(self):
+        return self._length
+
+    def __getitem__(self, index):
+        example = {}
+        # Wrap the index so the smaller image set is cycled.
+        instance_image = Image.open(
+            self.instance_images_path[index % self.num_instance_images]
+        )
+        if not instance_image.mode == "RGB":
+            instance_image = instance_image.convert("RGB")
+        example["instance_images"] = self.image_transforms(instance_image)
+        # Token ids are returned unpadded (padding="do_not_pad").
+        example["instance_prompt_ids"] = self.tokenizer(
+            self.instance_prompt,
+            padding="do_not_pad",
+            truncation=True,
+            max_length=self.tokenizer.model_max_length,
+        ).input_ids
+
+        if self.class_data_root:
+            class_image = Image.open(
+                self.class_images_path[index % self.num_class_images]
+            )
+            if not class_image.mode == "RGB":
+                class_image = class_image.convert("RGB")
+            example["class_images"] = self.image_transforms(class_image)
+            example["class_prompt_ids"] = self.tokenizer(
+                self.class_prompt,
+                padding="do_not_pad",
+                truncation=True,
+                max_length=self.tokenizer.model_max_length,
+            ).input_ids
+
+        return example
+
+
+class DreamBoothLabled(Dataset):
+    """
+    A dataset to prepare the instance and class images with the prompts for fine-tuning the model.
+    It pre-processes the images and tokenizes the prompts.
+    """
+
+    def __init__(
+        self,
+        instance_data_root,
+        instance_prompt,
+        tokenizer,
+        class_data_root=None,
+        class_prompt=None,
+        size=512,
+        center_crop=False,
+    ):
+        self.size = size
+        self.center_crop = center_crop
+        self.tokenizer = tokenizer
+
+        self.instance_data_root = Path(instance_data_root)
+        if not self.instance_data_root.exists():
+            raise ValueError("Instance images root doesn't exist.")
+
+        self.instance_images_path = list(Path(instance_data_root).iterdir())
+        self.num_instance_images = len(self.instance_images_path)
+        self.instance_prompt = instance_prompt
+        self._length = self.num_instance_images
+
+        if class_data_root is not None:
+            self.class_data_root = Path(class_data_root)
+            self.class_data_root.mkdir(parents=True, exist_ok=True)
+            self.class_images_path = list(self.class_data_root.iterdir())
+            self.num_class_images = len(self.class_images_path)
+            # The dataset is as long as the larger of the two image sets.
+            self._length = max(self.num_class_images, self.num_instance_images)
+            self.class_prompt = class_prompt
+        else:
+            self.class_data_root = None
+
+        self.image_transforms = transforms.Compose(
+            [
+                transforms.Resize(
+                    size, interpolation=transforms.InterpolationMode.BILINEAR
+                ),
+                transforms.CenterCrop(size)
+                if center_crop
+                else transforms.RandomCrop(size),
+                transforms.ToTensor(),
+                transforms.Normalize([0.5], [0.5]),
+            ]
+        )
+
+    def __len__(self):
+        return self._length
+
| 190 |

+