Space status: Configuration error

Commit 52a1665
Parent(s): b4324e8
Message: test

Changed files:

- README.md +32 -9
- added_tokens.json +2 -0
- config.json +48 -0
- gitattributes.txt +16 -0
- merges.txt +0 -0
- pytorch_model.bin +3 -0
- special_tokens_map.json +2 -0
- summ.png +0 -0
- tokenizer.json +0 -0
- vocab.json +0 -0

README.md (CHANGED)

    @@ -1,13 +1,36 @@
     ---
    -
    -
    -
    -colorTo: purple
    -sdk: streamlit
    -sdk_version: 1.21.0
    -app_file: app.py
    -pinned: false
    +language: ko
    +tags:
    +- bart
     license: mit
     ---
     
    -
    +# Korean News Summarization Model
    +
    +## Demo
    +
    +https://huggingface.co/spaces/gogamza/kobart-summarization
    +
    +## How to use
    +
    +```python
    +import torch
    +from transformers import PreTrainedTokenizerFast
    +from transformers import BartForConditionalGeneration
    +
    +tokenizer = PreTrainedTokenizerFast.from_pretrained('gogamza/kobart-summarization')
    +model = BartForConditionalGeneration.from_pretrained('gogamza/kobart-summarization')
    +
    +text = "과거를 떠올려보자. 방송을 보던 우리의 모습을. 독보적인 매체는 TV였다. 온 가족이 둘러앉아 TV를 봤다. 간혹 가족들끼리 뉴스와 드라마, 예능 프로그램을 둘러싸고 리모컨 쟁탈전이 벌어지기도 했다. 각자 선호하는 프로그램을 ‘본방’으로 보기 위한 싸움이었다. TV가 한 대인지 두 대인지 여부도 그래서 중요했다. 지금은 어떤가. ‘안방극장’이라는 말은 옛말이 됐다. TV가 없는 집도 많다. 미디어의 혜택을 누릴 수 있는 방법은 늘어났다. 각자의 방에서 각자의 휴대폰으로, 노트북으로, 태블릿으로 콘텐츠를 즐긴다."
    +
    +raw_input_ids = tokenizer.encode(text)
    +input_ids = [tokenizer.bos_token_id] + raw_input_ids + [tokenizer.eos_token_id]
    +
    +summary_ids = model.generate(torch.tensor([input_ids]))
    +tokenizer.decode(summary_ids.squeeze().tolist(), skip_special_tokens=True)
    +```
    +
    +
    +
    +
    +

In English, the Korean sample `text` says that families once gathered around a single TV and fought over the remote to watch their favorite programs live, whereas today many homes have no TV and people enjoy content individually on phones, laptops, and tablets.
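The README's snippet prepends `bos_token_id` and appends `eos_token_id` by hand, then passes the whole article to `generate`. A long article can exceed the model's position limit, so the minimal sketch below (a hypothetical helper, `build_input_ids`, not part of the model card) also truncates the body before wrapping it. The defaults `bos_id=0` and `eos_id=1` come from this commit's config.json; using `max_position_embeddings` (1026) as the length cap is an assumption, and a smaller cap such as 1024 is the cautious choice.

```python
from typing import List


def build_input_ids(raw_ids: List[int], bos_id: int = 0, eos_id: int = 1,
                    max_len: int = 1026) -> List[int]:
    """Wrap token ids in BOS/EOS, truncating the body so the result fits max_len.

    Defaults mirror config.json (bos_token_id=0, eos_token_id=1); treating
    max_position_embeddings (1026) as the usable length is an assumption.
    """
    if max_len < 2:
        raise ValueError("max_len must leave room for BOS and EOS")
    body = raw_ids[: max_len - 2]  # reserve two slots for the special tokens
    return [bos_id] + body + [eos_id]


if __name__ == "__main__":
    print(build_input_ids([10, 11, 12]))            # [0, 10, 11, 12, 1]
    print(len(build_input_ids(list(range(5000)))))  # 1026
```

With the README's objects this would be `input_ids = build_input_ids(tokenizer.encode(text), tokenizer.bos_token_id, tokenizer.eos_token_id)`, followed by the same `model.generate(torch.tensor([input_ids]))` call.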

added_tokens.json (ADDED)

    @@ -0,0 +1,2 @@
    +{}
    +

config.json (ADDED)

    @@ -0,0 +1,48 @@
    +{
    +  "activation_dropout": 0.0,
    +  "activation_function": "gelu",
    +  "add_bias_logits": false,
    +  "add_final_layer_norm": false,
    +  "architectures": [
    +    "BartForConditionalGeneration"
    +  ],
    +  "attention_dropout": 0.0,
    +  "bos_token_id": 0,
    +  "classif_dropout": 0.1,
    +  "classifier_dropout": 0.1,
    +  "d_model": 768,
    +  "decoder_attention_heads": 16,
    +  "decoder_ffn_dim": 3072,
    +  "decoder_layerdrop": 0.0,
    +  "decoder_layers": 6,
    +  "do_blenderbot_90_layernorm": false,
    +  "dropout": 0.1,
    +  "encoder_attention_heads": 16,
    +  "encoder_ffn_dim": 3072,
    +  "encoder_layerdrop": 0.0,
    +  "encoder_layers": 6,
    +  "eos_token_id": 1,
    +  "extra_pos_embeddings": 2,
    +  "force_bos_token_to_be_generated": false,
    +  "id2label": {
    +    "0": "NEGATIVE",
    +    "1": "POSITIVE"
    +  },
    +  "init_std": 0.02,
    +  "is_encoder_decoder": true,
    +  "label2id": {
    +    "NEGATIVE": 0,
    +    "POSITIVE": 1
    +  },
    +  "max_position_embeddings": 1026,
    +  "model_type": "bart",
    +  "normalize_before": false,
    +  "normalize_embedding": true,
    +  "num_hidden_layers": 6,
    +  "pad_token_id": 3,
    +  "scale_embedding": false,
    +  "static_position_embeddings": false,
    +  "use_cache": true,
    +  "vocab_size": 30000,
    +  "author": "Heewon Jeon(madjakarta@gmail.com)"
    +}
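As a sanity check on config.json, the sketch below derives the per-head dimension and a rough parameter count. It assumes the standard BART layout, none of which the commit states: biased attention and feed-forward projections, one token-embedding matrix shared by encoder, decoder, and LM head, and per-stack learned position tables with `max_position_embeddings + extra_pos_embeddings` rows. At 4 bytes per float32 weight, the estimate lands within 0.1% of the 495,659,091-byte `pytorch_model.bin` recorded in the LFS pointer; the small remainder is plausibly buffers and serialization overhead.

```python
import json

# A subset of the config.json fields added in this commit.
CONFIG = json.loads("""
{
  "d_model": 768,
  "encoder_layers": 6,
  "decoder_layers": 6,
  "encoder_ffn_dim": 3072,
  "decoder_ffn_dim": 3072,
  "encoder_attention_heads": 16,
  "decoder_attention_heads": 16,
  "max_position_embeddings": 1026,
  "extra_pos_embeddings": 2,
  "vocab_size": 30000
}
""")


def attention_params(d: int) -> int:
    # Query, key, value and output projections: each a d x d weight plus a bias.
    return 4 * (d * d + d)


def ffn_params(d: int, ffn_dim: int) -> int:
    # fc1 (d -> ffn_dim) and fc2 (ffn_dim -> d), both with biases.
    return (d * ffn_dim + ffn_dim) + (ffn_dim * d + d)


def layer_norm_params(d: int) -> int:
    # One scale vector and one shift vector.
    return 2 * d


def estimate_bart_params(cfg: dict) -> int:
    d = cfg["d_model"]
    # Encoder layer: self-attention and FFN, each followed by a LayerNorm.
    enc_layer = (attention_params(d) + ffn_params(d, cfg["encoder_ffn_dim"])
                 + 2 * layer_norm_params(d))
    # Decoder layer: self-attention, cross-attention and FFN, each with a LayerNorm.
    dec_layer = (2 * attention_params(d) + ffn_params(d, cfg["decoder_ffn_dim"])
                 + 3 * layer_norm_params(d))
    # Assumption: a single token-embedding matrix shared by encoder, decoder, LM head.
    token_embeddings = cfg["vocab_size"] * d
    # Assumption: encoder and decoder each own a learned position table with offset rows.
    positions = 2 * (cfg["max_position_embeddings"] + cfg["extra_pos_embeddings"]) * d
    # "normalize_embedding": true -> one LayerNorm after each stack's embeddings.
    embedding_norms = 2 * layer_norm_params(d)
    return (token_embeddings + positions + embedding_norms
            + cfg["encoder_layers"] * enc_layer
            + cfg["decoder_layers"] * dec_layer)


head_dim = CONFIG["d_model"] // CONFIG["encoder_attention_heads"]
params = estimate_bart_params(CONFIG)
print(head_dim)    # 48
print(params)      # 123859968
print(params * 4)  # 495439872 (bytes at float32)
```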

gitattributes.txt (ADDED)

    @@ -0,0 +1,16 @@
    +*.bin.* filter=lfs diff=lfs merge=lfs -text
    +*.lfs.* filter=lfs diff=lfs merge=lfs -text
    +*.bin filter=lfs diff=lfs merge=lfs -text
    +*.h5 filter=lfs diff=lfs merge=lfs -text
    +*.tflite filter=lfs diff=lfs merge=lfs -text
    +*.tar.gz filter=lfs diff=lfs merge=lfs -text
    +*.ot filter=lfs diff=lfs merge=lfs -text
    +*.onnx filter=lfs diff=lfs merge=lfs -text
    +*.arrow filter=lfs diff=lfs merge=lfs -text
    +*.ftz filter=lfs diff=lfs merge=lfs -text
    +*.joblib filter=lfs diff=lfs merge=lfs -text
    +*.model filter=lfs diff=lfs merge=lfs -text
    +*.msgpack filter=lfs diff=lfs merge=lfs -text
    +*.pb filter=lfs diff=lfs merge=lfs -text
    +*.pt filter=lfs diff=lfs merge=lfs -text
    +*.pth filter=lfs diff=lfs merge=lfs -text
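Git reads attributes only from files named `.gitattributes` (plus `.git/info/attributes`), so a file committed as `gitattributes.txt` has no effect by itself; presumably its patterns were meant for the repository's `.gitattributes`. Assuming that, the sketch below checks which files in this commit the patterns would route to Git LFS. It approximates Git's matching with `fnmatch.fnmatchcase` on each file's basename, which should agree with Git for simple slash-free globs like these but does not implement Git's other pattern rules.

```python
from fnmatch import fnmatchcase

# Patterns copied from gitattributes.txt in this commit (all carry filter=lfs).
LFS_PATTERNS = [
    "*.bin.*", "*.lfs.*", "*.bin", "*.h5", "*.tflite", "*.tar.gz", "*.ot",
    "*.onnx", "*.arrow", "*.ftz", "*.joblib", "*.model", "*.msgpack",
    "*.pb", "*.pt", "*.pth",
]

# Files added or changed in this commit.
COMMIT_FILES = [
    "README.md", "added_tokens.json", "config.json", "gitattributes.txt",
    "merges.txt", "pytorch_model.bin", "special_tokens_map.json",
    "summ.png", "tokenizer.json", "vocab.json",
]


def lfs_tracked(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate check: does the basename match any LFS pattern?"""
    name = path.rsplit("/", 1)[-1]  # slash-free patterns match at any depth
    return any(fnmatchcase(name, p) for p in patterns)


print([f for f in COMMIT_FILES if lfs_tracked(f)])  # ['pytorch_model.bin']
```

Only `pytorch_model.bin` matches, which is consistent with it being committed as an LFS pointer while the JSON and text files are stored directly.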

merges.txt (ADDED)

The diff for this file is too large to render.

pytorch_model.bin (ADDED)

    @@ -0,0 +1,3 @@
    +version https://git-lfs.github.com/spec/v1
    +oid sha256:0f59dd27e0e73b4b5cf45a6c70513cc5af667df248be097874be71bc51fd5f53
    +size 495659091
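The three lines above are a Git LFS pointer, not the weights themselves: `version` names the pointer spec, `oid` is the SHA-256 of the real file, and `size` is its length in bytes. A minimal sketch for parsing such a pointer and verifying a downloaded copy against it (the function names are illustrative, not part of any library):

```python
import hashlib
from pathlib import Path

# The pointer committed in place of pytorch_model.bin.
POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:0f59dd27e0e73b4b5cf45a6c70513cc5af667df248be097874be71bc51fd5f53
size 495659091
"""


def parse_lfs_pointer(text: str) -> dict:
    """Parse the 'key value' lines of a Git LFS pointer file."""
    fields = {}
    for line in text.splitlines():
        if line.strip():
            key, _, value = line.partition(" ")
            fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    if algo != "sha256":
        raise ValueError(f"unsupported oid algorithm: {algo}")
    return {"version": fields["version"], "sha256": digest, "size": int(fields["size"])}


def matches_pointer(path: Path, pointer: dict, chunk_size: int = 1 << 20) -> bool:
    """Check a local file's size, then its SHA-256, against a parsed pointer."""
    if path.stat().st_size != pointer["size"]:
        return False  # cheap check first; avoids hashing a truncated download
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == pointer["sha256"]


ptr = parse_lfs_pointer(POINTER)
print(ptr["size"])  # 495659091
```

After downloading the weights, `matches_pointer(Path("pytorch_model.bin"), ptr)` should return `True` for an intact copy. Reading in 1 MiB chunks keeps memory flat for a file of roughly 496 MB.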

special_tokens_map.json (ADDED)

    @@ -0,0 +1,2 @@
    +{"bos_token": "<s>", "eos_token": "</s>", "unk_token": "<unk>", "pad_token": "<pad>", "mask_token": "<mask>"}
    +

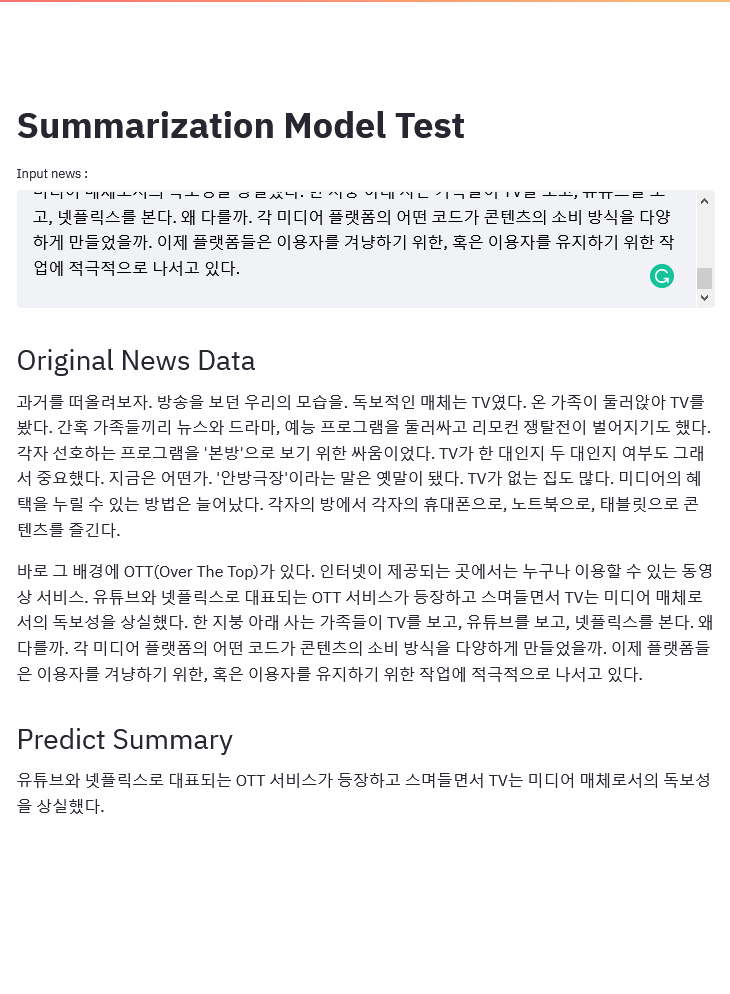

summ.png

ADDED

|

tokenizer.json (ADDED)

The diff for this file is too large to render.

vocab.json (ADDED)

The diff for this file is too large to render.