Spaces:

Running

Running

Vincentqyw

commited on

Commit

·

2673dcd

1

Parent(s):

45354a0

add: lightglue

Browse files- pre-requirements.txt +0 -3

- third_party/LightGlue/.gitattributes +1 -0

- third_party/LightGlue/.gitignore +10 -0

- third_party/LightGlue/LICENSE +201 -0

- third_party/LightGlue/README.md +134 -0

- third_party/LightGlue/assets/DSC_0410.JPG +0 -0

- third_party/LightGlue/assets/DSC_0411.JPG +0 -0

- third_party/LightGlue/assets/architecture.svg +0 -0

- third_party/LightGlue/assets/easy_hard.jpg +0 -0

- third_party/LightGlue/assets/sacre_coeur1.jpg +0 -0

- third_party/LightGlue/assets/sacre_coeur2.jpg +0 -0

- third_party/LightGlue/assets/teaser.svg +1499 -0

- third_party/LightGlue/demo.ipynb +0 -0

- third_party/LightGlue/lightglue/__init__.py +4 -0

- third_party/LightGlue/lightglue/disk.py +70 -0

- third_party/LightGlue/lightglue/lightglue.py +466 -0

- third_party/LightGlue/lightglue/superpoint.py +230 -0

- third_party/LightGlue/lightglue/utils.py +135 -0

- third_party/LightGlue/lightglue/viz2d.py +161 -0

- third_party/LightGlue/requirements.txt +6 -0

- third_party/LightGlue/setup.py +27 -0

pre-requirements.txt

CHANGED

|

@@ -1,4 +1,3 @@

|

|

| 1 |

-

# python>=3.10.4

|

| 2 |

torch>=1.12.1

|

| 3 |

torchvision>=0.13.1

|

| 4 |

torchmetrics>=0.6.0

|

|

@@ -9,5 +8,3 @@ einops>=0.3.0

|

|

| 9 |

kornia>=0.6

|

| 10 |

gradio

|

| 11 |

gradio_client==0.2.7

|

| 12 |

-

# datasets[vision]>=2.4.0

|

| 13 |

-

|

|

|

|

|

|

|

| 1 |

torch>=1.12.1

|

| 2 |

torchvision>=0.13.1

|

| 3 |

torchmetrics>=0.6.0

|

|

|

|

| 8 |

kornia>=0.6

|

| 9 |

gradio

|

| 10 |

gradio_client==0.2.7

|

|

|

|

|

|

third_party/LightGlue/.gitattributes

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

*.ipynb linguist-documentation

|

third_party/LightGlue/.gitignore

ADDED

|

@@ -0,0 +1,10 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

*.egg-info

|

| 2 |

+

*.pyc

|

| 3 |

+

/.idea/

|

| 4 |

+

/data/

|

| 5 |

+

/outputs/

|

| 6 |

+

__pycache__

|

| 7 |

+

/lightglue/weights/

|

| 8 |

+

lightglue/_flash/

|

| 9 |

+

*-checkpoint.ipynb

|

| 10 |

+

*.pth

|

third_party/LightGlue/LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

third_party/LightGlue/README.md

ADDED

|

@@ -0,0 +1,134 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<p align="center">

|

| 2 |

+

<h1 align="center"><ins>LightGlue ⚡️</ins><br>Local Feature Matching at Light Speed</h1>

|

| 3 |

+

<p align="center">

|

| 4 |

+

<a href="https://www.linkedin.com/in/philipplindenberger/">Philipp Lindenberger</a>

|

| 5 |

+

·

|

| 6 |

+

<a href="https://psarlin.com/">Paul-Edouard Sarlin</a>

|

| 7 |

+

·

|

| 8 |

+

<a href="https://www.microsoft.com/en-us/research/people/mapoll/">Marc Pollefeys</a>

|

| 9 |

+

</p>

|

| 10 |

+

<!-- <p align="center">

|

| 11 |

+

<img src="assets/larchitecture.svg" alt="Logo" height="40">

|

| 12 |

+

</p> -->

|

| 13 |

+

<!-- <h2 align="center">PrePrint 2023</h2> -->

|

| 14 |

+

<h2 align="center"><p>

|

| 15 |

+

<a href="https://arxiv.org/pdf/2306.13643.pdf" align="center">Paper</a> |

|

| 16 |

+

<a href="https://colab.research.google.com/github/cvg/LightGlue/blob/main/demo.ipynb" align="center">Colab</a>

|

| 17 |

+

</p></h2>

|

| 18 |

+

<div align="center"></div>

|

| 19 |

+

</p>

|

| 20 |

+

<p align="center">

|

| 21 |

+

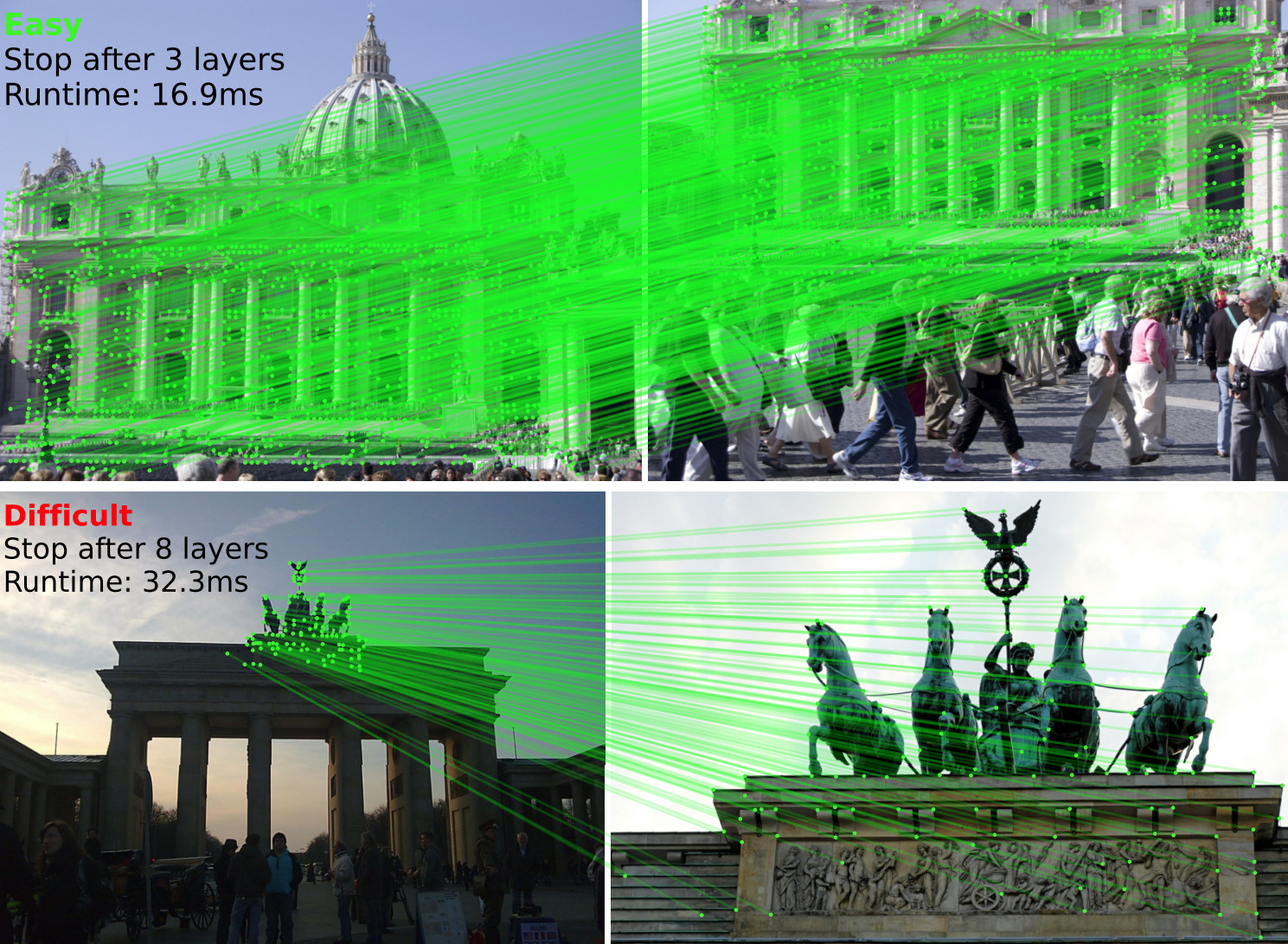

<a href="https://arxiv.org/abs/2306.13643"><img src="assets/easy_hard.jpg" alt="example" width=80%></a>

|

| 22 |

+

<br>

|

| 23 |

+

<em>LightGlue is a deep neural network that matches sparse local features across image pairs.<br>An adaptive mechanism makes it fast for easy pairs (top) and reduces the computational complexity for difficult ones (bottom).</em>

|

| 24 |

+

</p>

|

| 25 |

+

|

| 26 |

+

##

|

| 27 |

+

|

| 28 |

+

This repository hosts the inference code of LightGlue, a lightweight feature matcher with high accuracy and blazing fast inference. It takes as input a set of keypoints and descriptors for each image and returns the indices of corresponding points. The architecture is based on adaptive pruning techniques, in both network width and depth - [check out the paper for more details](https://arxiv.org/pdf/2306.13643.pdf).

|

| 29 |

+

|

| 30 |

+

We release pretrained weights of LightGlue with [SuperPoint](https://arxiv.org/abs/1712.07629) and [DISK](https://arxiv.org/abs/2006.13566) local features.

|

| 31 |

+

The training end evaluation code will be released in July in a separate repo. To be notified, subscribe to [issue #6](https://github.com/cvg/LightGlue/issues/6).

|

| 32 |

+

|

| 33 |

+

## Installation and demo [](https://colab.research.google.com/github/cvg/LightGlue/blob/main/demo.ipynb)

|

| 34 |

+

|

| 35 |

+

Install this repo using pip:

|

| 36 |

+

|

| 37 |

+

```bash

|

| 38 |

+

git clone https://github.com/cvg/LightGlue.git && cd LightGlue

|

| 39 |

+

python -m pip install -e .

|

| 40 |

+

```

|

| 41 |

+

|

| 42 |

+

We provide a [demo notebook](demo.ipynb) which shows how to perform feature extraction and matching on an image pair.

|

| 43 |

+

|

| 44 |

+

Here is a minimal script to match two images:

|

| 45 |

+

|

| 46 |

+

```python

|

| 47 |

+

from lightglue import LightGlue, SuperPoint, DISK

|

| 48 |

+

from lightglue.utils import load_image, rbd

|

| 49 |

+

|

| 50 |

+

# SuperPoint+LightGlue

|

| 51 |

+

extractor = SuperPoint(max_num_keypoints=2048).eval().cuda() # load the extractor

|

| 52 |

+

matcher = LightGlue(features='superpoint').eval().cuda() # load the matcher

|

| 53 |

+

|

| 54 |

+

# or DISK+LightGlue

|

| 55 |

+

extractor = DISK(max_num_keypoints=2048).eval().cuda() # load the extractor

|

| 56 |

+

matcher = LightGlue(features='disk').eval().cuda() # load the matcher

|

| 57 |

+

|

| 58 |

+

# load each image as a torch.Tensor on GPU with shape (3,H,W), normalized in [0,1]

|

| 59 |

+

image0 = load_image('path/to/image_0.jpg').cuda()

|

| 60 |

+

image1 = load_image('path/to/image_1.jpg').cuda()

|

| 61 |

+

|

| 62 |

+

# extract local features

|

| 63 |

+

feats0 = extractor.extract(image0) # auto-resize the image, disable with resize=None

|

| 64 |

+

feats1 = extractor.extract(image1)

|

| 65 |

+

|

| 66 |

+

# match the features

|

| 67 |

+

matches01 = matcher({'image0': feats0, 'image1': feats1})

|

| 68 |

+

feats0, feats1, matches01 = [rbd(x) for x in [feats0, feats1, matches01]] # remove batch dimension

|

| 69 |

+

matches = matches01['matches'] # indices with shape (K,2)

|

| 70 |

+

points0 = feats0['keypoints'][matches[..., 0]] # coordinates in image #0, shape (K,2)

|

| 71 |

+

points1 = feats1['keypoints'][matches[..., 1]] # coordinates in image #1, shape (K,2)

|

| 72 |

+

```

|

| 73 |

+

|

| 74 |

+

We also provide a convenience method to match a pair of images:

|

| 75 |

+

|

| 76 |

+

```python

|

| 77 |

+

from lightglue import match_pair

|

| 78 |

+

feats0, feats1, matches01 = match_pair(extractor, matcher, image0, image1)

|

| 79 |

+

```

|

| 80 |

+

|

| 81 |

+

##

|

| 82 |

+

|

| 83 |

+

<p align="center">

|

| 84 |

+

<a href="https://arxiv.org/abs/2306.13643"><img src="assets/teaser.svg" alt="Logo" width=50%></a>

|

| 85 |

+

<br>

|

| 86 |

+

<em>LightGlue can adjust its depth (number of layers) and width (number of keypoints) per image pair, with a marginal impact on accuracy.</em>

|

| 87 |

+

</p>

|

| 88 |

+

|

| 89 |

+

## Advanced configuration

|

| 90 |

+

|

| 91 |

+

The default values give a good trade-off between speed and accuracy. To maximize the accuracy, use all keypoints and disable the adaptive mechanisms:

|

| 92 |

+

```python

|

| 93 |

+

extractor = SuperPoint(max_num_keypoints=None)

|

| 94 |

+

matcher = LightGlue(features='superpoint', depth_confidence=-1, width_confidence=-1)

|

| 95 |

+

```

|

| 96 |

+

|

| 97 |

+

To increase the speed with a small drop of accuracy, decrease the number of keypoints and lower the adaptive thresholds:

|

| 98 |

+

```python

|

| 99 |

+

extractor = SuperPoint(max_num_keypoints=1024)

|

| 100 |

+

matcher = LightGlue(features='superpoint', depth_confidence=0.9, width_confidence=0.95)

|

| 101 |

+

```

|

| 102 |

+

The maximum speed is obtained with [FlashAttention](https://arxiv.org/abs/2205.14135), which is automatically used when ```torch >= 2.0``` or if it is [installed from source](https://github.com/HazyResearch/flash-attention#installation-and-features).

|

| 103 |

+

|

| 104 |

+

<details>

|

| 105 |

+

<summary>[Detail of all parameters - click to expand]</summary>

|

| 106 |

+

|

| 107 |

+

- [```n_layers```](https://github.com/cvg/LightGlue/blob/main/lightglue/lightglue.py#L261): Number of stacked self+cross attention layers. Reduce this value for faster inference at the cost of accuracy (continuous red line in the plot above). Default: 9 (all layers).

|

| 108 |

+

- [```flash```](https://github.com/cvg/LightGlue/blob/main/lightglue/lightglue.py#L263): Enable FlashAttention. Significantly increases the speed and reduces the memory consumption without any impact on accuracy. Default: True (LightGlue automatically detects if FlashAttention is available).

|

| 109 |

+

- [```mp```](https://github.com/cvg/LightGlue/blob/main/lightglue/lightglue.py#L264): Enable mixed precision inference. Default: False (off)

|

| 110 |

+

- [```depth_confidence```](https://github.com/cvg/LightGlue/blob/main/lightglue/lightglue.py#L265): Controls the early stopping. A lower values stops more often at earlier layers. Default: 0.95, disable with -1.

|

| 111 |

+

- [```width_confidence```](https://github.com/cvg/LightGlue/blob/main/lightglue/lightglue.py#L266): Controls the iterative point pruning. A lower value prunes more points earlier. Default: 0.99, disable with -1.

|

| 112 |

+

- [```filter_threshold```](https://github.com/cvg/LightGlue/blob/main/lightglue/lightglue.py#L267): Match confidence. Increase this value to obtain less, but stronger matches. Default: 0.1

|

| 113 |

+

|

| 114 |

+

</details>

|

| 115 |

+

|

| 116 |

+

## Other links

|

| 117 |

+

- [hloc - the visual localization toolbox](https://github.com/cvg/Hierarchical-Localization/): run LightGlue for Structure-from-Motion and visual localization.

|

| 118 |

+

- [LightGlue-ONNX](https://github.com/fabio-sim/LightGlue-ONNX): export LightGlue to the Open Neural Network Exchange format.

|

| 119 |

+

- [Image Matching WebUI](https://github.com/Vincentqyw/image-matching-webui): a web GUI to easily compare different matchers, including LightGlue.

|

| 120 |

+

- [kornia](kornia.readthedocs.io/) now exposes LightGlue via the interfaces [`LightGlue`](https://kornia.readthedocs.io/en/latest/feature.html#kornia.feature.LightGlue) and [`LightGlueMatcher`](https://kornia.readthedocs.io/en/latest/feature.html#kornia.feature.LightGlueMatcher).

|

| 121 |

+

|

| 122 |

+

## BibTeX Citation

|

| 123 |

+

If you use any ideas from the paper or code from this repo, please consider citing:

|

| 124 |

+

|

| 125 |

+

```txt

|

| 126 |

+

@inproceedings{lindenberger23lightglue,

|

| 127 |

+

author = {Philipp Lindenberger and

|

| 128 |

+

Paul-Edouard Sarlin and

|

| 129 |

+

Marc Pollefeys},

|

| 130 |

+

title = {{LightGlue: Local Feature Matching at Light Speed}},

|

| 131 |

+

booktitle = {ICCV},

|

| 132 |

+

year = {2023}

|

| 133 |

+

}

|

| 134 |

+

```

|

third_party/LightGlue/assets/DSC_0410.JPG

ADDED

|

|

third_party/LightGlue/assets/DSC_0411.JPG

ADDED

|

|

third_party/LightGlue/assets/architecture.svg

ADDED

|

|

third_party/LightGlue/assets/easy_hard.jpg

ADDED

|

third_party/LightGlue/assets/sacre_coeur1.jpg

ADDED

|

third_party/LightGlue/assets/sacre_coeur2.jpg

ADDED

|

third_party/LightGlue/assets/teaser.svg

ADDED

|

|

third_party/LightGlue/demo.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

third_party/LightGlue/lightglue/__init__.py

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from .lightglue import LightGlue

|

| 2 |

+

from .superpoint import SuperPoint

|

| 3 |

+

from .disk import DISK

|

| 4 |

+

from .utils import match_pair

|

third_party/LightGlue/lightglue/disk.py

ADDED

|

@@ -0,0 +1,70 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch

|

| 2 |

+

import torch.nn as nn

|

| 3 |

+

import kornia

|

| 4 |

+

from types import SimpleNamespace

|

| 5 |

+

from .utils import ImagePreprocessor

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

class DISK(nn.Module):

|

| 9 |

+

default_conf = {

|

| 10 |

+

'weights': 'depth',

|

| 11 |

+

'max_num_keypoints': None,

|

| 12 |

+

'desc_dim': 128,

|

| 13 |

+

'nms_window_size': 5,

|

| 14 |

+

'detection_threshold': 0.0,

|

| 15 |

+

'pad_if_not_divisible': True,

|

| 16 |

+

}

|

| 17 |

+

|

| 18 |

+

preprocess_conf = {

|

| 19 |

+

**ImagePreprocessor.default_conf,

|

| 20 |

+

'resize': 1024,

|

| 21 |

+

'grayscale': False,

|

| 22 |

+

}

|

| 23 |

+

|

| 24 |

+

required_data_keys = ['image']

|

| 25 |

+

|

| 26 |

+

def __init__(self, **conf) -> None:

|

| 27 |

+

super().__init__()

|

| 28 |

+

self.conf = {**self.default_conf, **conf}

|

| 29 |

+

self.conf = SimpleNamespace(**self.conf)

|

| 30 |

+

self.model = kornia.feature.DISK.from_pretrained(self.conf.weights)

|

| 31 |

+

|

| 32 |

+

def forward(self, data: dict) -> dict:

|

| 33 |

+

""" Compute keypoints, scores, descriptors for image """

|

| 34 |

+

for key in self.required_data_keys:

|

| 35 |

+

assert key in data, f'Missing key {key} in data'

|

| 36 |

+

image = data['image']

|

| 37 |

+

features = self.model(

|

| 38 |

+

image,

|

| 39 |

+

n=self.conf.max_num_keypoints,

|

| 40 |

+

window_size=self.conf.nms_window_size,

|

| 41 |

+

score_threshold=self.conf.detection_threshold,

|

| 42 |

+

pad_if_not_divisible=self.conf.pad_if_not_divisible

|

| 43 |

+

)

|

| 44 |

+

keypoints = [f.keypoints for f in features]

|

| 45 |

+

scores = [f.detection_scores for f in features]

|

| 46 |

+

descriptors = [f.descriptors for f in features]

|

| 47 |

+

del features

|

| 48 |

+

|

| 49 |

+

keypoints = torch.stack(keypoints, 0)

|

| 50 |

+

scores = torch.stack(scores, 0)

|

| 51 |

+

descriptors = torch.stack(descriptors, 0)

|

| 52 |

+

|

| 53 |

+

return {

|

| 54 |

+

'keypoints': keypoints.to(image),

|

| 55 |

+

'keypoint_scores': scores.to(image),

|

| 56 |

+

'descriptors': descriptors.to(image),

|

| 57 |

+

}

|

| 58 |

+

|

| 59 |

+

def extract(self, img: torch.Tensor, **conf) -> dict:

|

| 60 |

+

""" Perform extraction with online resizing"""

|

| 61 |

+

if img.dim() == 3:

|

| 62 |

+

img = img[None] # add batch dim

|

| 63 |

+

assert img.dim() == 4 and img.shape[0] == 1

|

| 64 |

+

shape = img.shape[-2:][::-1]

|

| 65 |

+

img, scales = ImagePreprocessor(

|

| 66 |

+

**{**self.preprocess_conf, **conf})(img)

|

| 67 |

+

feats = self.forward({'image': img})

|

| 68 |

+

feats['image_size'] = torch.tensor(shape)[None].to(img).float()

|

| 69 |

+

feats['keypoints'] = (feats['keypoints'] + .5) / scales[None] - .5

|

| 70 |

+

return feats

|

third_party/LightGlue/lightglue/lightglue.py

ADDED

|

@@ -0,0 +1,466 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from pathlib import Path

|

| 2 |

+

from types import SimpleNamespace

|

| 3 |

+

import warnings

|

| 4 |

+

import numpy as np

|

| 5 |

+

import torch

|

| 6 |

+

from torch import nn

|

| 7 |

+

import torch.nn.functional as F

|

| 8 |

+

from typing import Optional, List, Callable

|

| 9 |

+

|

| 10 |

+

try:

|

| 11 |

+

from flash_attn.modules.mha import FlashCrossAttention

|

| 12 |

+

except ModuleNotFoundError:

|

| 13 |

+

FlashCrossAttention = None

|

| 14 |

+

|

| 15 |

+

if FlashCrossAttention or hasattr(F, 'scaled_dot_product_attention'):

|

| 16 |

+

FLASH_AVAILABLE = True

|

| 17 |

+

else:

|

| 18 |

+

FLASH_AVAILABLE = False

|

| 19 |

+

|

| 20 |

+

torch.backends.cudnn.deterministic = True

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

@torch.cuda.amp.custom_fwd(cast_inputs=torch.float32)

|

| 24 |

+

def normalize_keypoints(

|

| 25 |

+

kpts: torch.Tensor,

|

| 26 |

+

size: torch.Tensor) -> torch.Tensor:

|

| 27 |

+

if isinstance(size, torch.Size):

|

| 28 |

+

size = torch.tensor(size)[None]

|

| 29 |

+

shift = size.float().to(kpts) / 2

|

| 30 |

+

scale = size.max(1).values.float().to(kpts) / 2

|

| 31 |

+

kpts = (kpts - shift[:, None]) / scale[:, None, None]

|

| 32 |

+

return kpts

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

def rotate_half(x: torch.Tensor) -> torch.Tensor:

|

| 36 |

+

x = x.unflatten(-1, (-1, 2))

|

| 37 |

+

x1, x2 = x.unbind(dim=-1)

|

| 38 |

+

return torch.stack((-x2, x1), dim=-1).flatten(start_dim=-2)

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

def apply_cached_rotary_emb(

|

| 42 |

+

freqs: torch.Tensor, t: torch.Tensor) -> torch.Tensor:

|

| 43 |

+

return (t * freqs[0]) + (rotate_half(t) * freqs[1])

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

class LearnableFourierPositionalEncoding(nn.Module):

|

| 47 |

+

def __init__(self, M: int, dim: int, F_dim: int = None,

|

| 48 |

+

gamma: float = 1.0) -> None:

|

| 49 |

+

super().__init__()

|

| 50 |

+

F_dim = F_dim if F_dim is not None else dim

|

| 51 |

+

self.gamma = gamma

|

| 52 |

+

self.Wr = nn.Linear(M, F_dim // 2, bias=False)

|

| 53 |

+

nn.init.normal_(self.Wr.weight.data, mean=0, std=self.gamma ** -2)

|

| 54 |

+

|

| 55 |

+

def forward(self, x: torch.Tensor) -> torch.Tensor:

|

| 56 |

+

""" encode position vector """

|

| 57 |

+

projected = self.Wr(x)

|

| 58 |

+

cosines, sines = torch.cos(projected), torch.sin(projected)

|

| 59 |

+

emb = torch.stack([cosines, sines], 0).unsqueeze(-3)

|

| 60 |

+

return emb.repeat_interleave(2, dim=-1)

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

class TokenConfidence(nn.Module):

|

| 64 |

+

def __init__(self, dim: int) -> None:

|

| 65 |

+

super().__init__()

|

| 66 |

+

self.token = nn.Sequential(

|

| 67 |

+

nn.Linear(dim, 1),

|

| 68 |

+

nn.Sigmoid()

|

| 69 |

+

)

|

| 70 |

+

|

| 71 |

+

def forward(self, desc0: torch.Tensor, desc1: torch.Tensor):

|

| 72 |

+

""" get confidence tokens """

|

| 73 |

+

return (

|

| 74 |

+

self.token(desc0.detach().float()).squeeze(-1),

|

| 75 |

+

self.token(desc1.detach().float()).squeeze(-1))

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

class Attention(nn.Module):

|

| 79 |

+

def __init__(self, allow_flash: bool) -> None:

|

| 80 |

+

super().__init__()

|

| 81 |

+

if allow_flash and not FLASH_AVAILABLE:

|

| 82 |

+

warnings.warn(

|

| 83 |

+

'FlashAttention is not available. For optimal speed, '

|

| 84 |

+

'consider installing torch >= 2.0 or flash-attn.',

|

| 85 |

+

stacklevel=2,

|

| 86 |

+

)

|

| 87 |

+

self.enable_flash = allow_flash and FLASH_AVAILABLE

|

| 88 |

+

if allow_flash and FlashCrossAttention:

|

| 89 |

+

self.flash_ = FlashCrossAttention()

|

| 90 |

+

|

| 91 |

+

def forward(self, q, k, v) -> torch.Tensor:

|

| 92 |

+

if self.enable_flash and q.device.type == 'cuda':

|

| 93 |

+

if FlashCrossAttention:

|

| 94 |

+

q, k, v = [x.transpose(-2, -3) for x in [q, k, v]]

|

| 95 |

+

m = self.flash_(q.half(), torch.stack([k, v], 2).half())

|

| 96 |

+

return m.transpose(-2, -3).to(q.dtype)

|

| 97 |

+

else: # use torch 2.0 scaled_dot_product_attention with flash

|

| 98 |

+

args = [x.half().contiguous() for x in [q, k, v]]

|

| 99 |

+

with torch.backends.cuda.sdp_kernel(enable_flash=True):

|

| 100 |

+

return F.scaled_dot_product_attention(*args).to(q.dtype)

|

| 101 |

+

elif hasattr(F, 'scaled_dot_product_attention'):

|

| 102 |

+

args = [x.contiguous() for x in [q, k, v]]

|

| 103 |

+

return F.scaled_dot_product_attention(*args).to(q.dtype)

|

| 104 |

+

else:

|

| 105 |

+

s = q.shape[-1] ** -0.5

|

| 106 |

+

attn = F.softmax(torch.einsum('...id,...jd->...ij', q, k) * s, -1)

|

| 107 |

+

return torch.einsum('...ij,...jd->...id', attn, v)

|

| 108 |

+

|

| 109 |

+

|

| 110 |

+

class Transformer(nn.Module):

|

| 111 |

+

def __init__(self, embed_dim: int, num_heads: int,

|

| 112 |

+

flash: bool = False, bias: bool = True) -> None:

|

| 113 |

+

super().__init__()

|

| 114 |

+

self.embed_dim = embed_dim

|

| 115 |

+

self.num_heads = num_heads

|

| 116 |

+

assert self.embed_dim % num_heads == 0

|

| 117 |

+

self.head_dim = self.embed_dim // num_heads

|

| 118 |

+

self.Wqkv = nn.Linear(embed_dim, 3*embed_dim, bias=bias)

|

| 119 |

+

self.inner_attn = Attention(flash)

|

| 120 |

+

self.out_proj = nn.Linear(embed_dim, embed_dim, bias=bias)

|

| 121 |

+

self.ffn = nn.Sequential(

|

| 122 |

+

nn.Linear(2*embed_dim, 2*embed_dim),

|

| 123 |

+

nn.LayerNorm(2*embed_dim, elementwise_affine=True),

|

| 124 |

+

nn.GELU(),

|

| 125 |

+

nn.Linear(2*embed_dim, embed_dim)

|

| 126 |

+

)

|

| 127 |

+

|

| 128 |

+

def _forward(self, x: torch.Tensor,

|

| 129 |

+

encoding: Optional[torch.Tensor] = None):

|

| 130 |

+

qkv = self.Wqkv(x)

|

| 131 |

+

qkv = qkv.unflatten(-1, (self.num_heads, -1, 3)).transpose(1, 2)

|

| 132 |

+

q, k, v = qkv[..., 0], qkv[..., 1], qkv[..., 2]

|

| 133 |

+

if encoding is not None:

|

| 134 |

+

q = apply_cached_rotary_emb(encoding, q)

|

| 135 |

+

k = apply_cached_rotary_emb(encoding, k)

|

| 136 |

+

context = self.inner_attn(q, k, v)

|

| 137 |

+

message = self.out_proj(

|

| 138 |

+

context.transpose(1, 2).flatten(start_dim=-2))

|

| 139 |

+

return x + self.ffn(torch.cat([x, message], -1))

|

| 140 |

+

|

| 141 |

+

def forward(self, x0, x1, encoding0=None, encoding1=None):

|

| 142 |

+

return self._forward(x0, encoding0), self._forward(x1, encoding1)

|

| 143 |

+

|

| 144 |

+

|

| 145 |

+

class CrossTransformer(nn.Module):

|

| 146 |

+

def __init__(self, embed_dim: int, num_heads: int,

|

| 147 |

+

flash: bool = False, bias: bool = True) -> None:

|

| 148 |

+

super().__init__()

|

| 149 |

+

self.heads = num_heads

|

| 150 |

+

dim_head = embed_dim // num_heads

|

| 151 |

+

self.scale = dim_head ** -0.5

|

| 152 |

+

inner_dim = dim_head * num_heads

|

| 153 |

+

self.to_qk = nn.Linear(embed_dim, inner_dim, bias=bias)

|

| 154 |

+

self.to_v = nn.Linear(embed_dim, inner_dim, bias=bias)

|

| 155 |

+

self.to_out = nn.Linear(inner_dim, embed_dim, bias=bias)

|

| 156 |

+

self.ffn = nn.Sequential(

|

| 157 |

+

nn.Linear(2*embed_dim, 2*embed_dim),

|

| 158 |

+

nn.LayerNorm(2*embed_dim, elementwise_affine=True),

|

| 159 |

+

nn.GELU(),

|

| 160 |

+

nn.Linear(2*embed_dim, embed_dim)

|

| 161 |

+

)

|

| 162 |

+

|

| 163 |

+

if flash and FLASH_AVAILABLE:

|

| 164 |

+

self.flash = Attention(True)

|

| 165 |

+

else:

|

| 166 |

+

self.flash = None

|

| 167 |

+

|

| 168 |

+

def map_(self, func: Callable, x0: torch.Tensor, x1: torch.Tensor):

|

| 169 |

+

return func(x0), func(x1)

|

| 170 |

+

|

| 171 |

+

def forward(self, x0: torch.Tensor, x1: torch.Tensor) -> List[torch.Tensor]:

|

| 172 |

+

qk0, qk1 = self.map_(self.to_qk, x0, x1)

|

| 173 |

+

v0, v1 = self.map_(self.to_v, x0, x1)

|

| 174 |

+

qk0, qk1, v0, v1 = map(

|

| 175 |

+

lambda t: t.unflatten(-1, (self.heads, -1)).transpose(1, 2),

|

| 176 |

+

(qk0, qk1, v0, v1))

|

| 177 |

+

if self.flash is not None:

|

| 178 |

+

m0 = self.flash(qk0, qk1, v1)

|

| 179 |

+

m1 = self.flash(qk1, qk0, v0)

|

| 180 |

+

else:

|

| 181 |

+

qk0, qk1 = qk0 * self.scale**0.5, qk1 * self.scale**0.5

|

| 182 |

+

sim = torch.einsum('b h i d, b h j d -> b h i j', qk0, qk1)

|

| 183 |

+

attn01 = F.softmax(sim, dim=-1)

|

| 184 |

+

attn10 = F.softmax(sim.transpose(-2, -1).contiguous(), dim=-1)

|

| 185 |

+

m0 = torch.einsum('bhij, bhjd -> bhid', attn01, v1)

|

| 186 |

+

m1 = torch.einsum('bhji, bhjd -> bhid', attn10.transpose(-2, -1), v0)

|

| 187 |

+

m0, m1 = self.map_(lambda t: t.transpose(1, 2).flatten(start_dim=-2),

|

| 188 |

+

m0, m1)

|

| 189 |

+

m0, m1 = self.map_(self.to_out, m0, m1)

|

| 190 |

+

x0 = x0 + self.ffn(torch.cat([x0, m0], -1))

|

| 191 |

+

x1 = x1 + self.ffn(torch.cat([x1, m1], -1))

|

| 192 |

+

return x0, x1

|

| 193 |

+

|

| 194 |

+

|

| 195 |

+

def sigmoid_log_double_softmax(

|

| 196 |

+

sim: torch.Tensor, z0: torch.Tensor, z1: torch.Tensor) -> torch.Tensor:

|

| 197 |

+

""" create the log assignment matrix from logits and similarity"""

|

| 198 |

+

b, m, n = sim.shape

|

| 199 |

+

certainties = F.logsigmoid(z0) + F.logsigmoid(z1).transpose(1, 2)

|

| 200 |

+

scores0 = F.log_softmax(sim, 2)

|

| 201 |

+

scores1 = F.log_softmax(

|

| 202 |

+

sim.transpose(-1, -2).contiguous(), 2).transpose(-1, -2)

|

| 203 |

+

scores = sim.new_full((b, m+1, n+1), 0)

|

| 204 |

+

scores[:, :m, :n] = (scores0 + scores1 + certainties)

|

| 205 |

+

scores[:, :-1, -1] = F.logsigmoid(-z0.squeeze(-1))

|

| 206 |

+

scores[:, -1, :-1] = F.logsigmoid(-z1.squeeze(-1))

|

| 207 |

+

return scores

|

| 208 |

+

|

| 209 |

+

|

| 210 |

+

class MatchAssignment(nn.Module):

|

| 211 |

+

def __init__(self, dim: int) -> None:

|

| 212 |

+

super().__init__()

|

| 213 |

+

self.dim = dim

|

| 214 |

+

self.matchability = nn.Linear(dim, 1, bias=True)

|

| 215 |

+

self.final_proj = nn.Linear(dim, dim, bias=True)

|

| 216 |

+

|

| 217 |

+

def forward(self, desc0: torch.Tensor, desc1: torch.Tensor):

|

| 218 |

+

""" build assignment matrix from descriptors """

|

| 219 |

+

mdesc0, mdesc1 = self.final_proj(desc0), self.final_proj(desc1)

|

| 220 |

+

_, _, d = mdesc0.shape

|

| 221 |

+

mdesc0, mdesc1 = mdesc0 / d**.25, mdesc1 / d**.25

|

| 222 |

+

sim = torch.einsum('bmd,bnd->bmn', mdesc0, mdesc1)

|

| 223 |

+

z0 = self.matchability(desc0)

|

| 224 |

+

z1 = self.matchability(desc1)

|

| 225 |

+

scores = sigmoid_log_double_softmax(sim, z0, z1)

|

| 226 |

+

return scores, sim

|

| 227 |

+

|

| 228 |

+

def scores(self, desc0: torch.Tensor, desc1: torch.Tensor):

|

| 229 |

+

m0 = torch.sigmoid(self.matchability(desc0)).squeeze(-1)

|

| 230 |

+

m1 = torch.sigmoid(self.matchability(desc1)).squeeze(-1)

|

| 231 |

+

return m0, m1

|

| 232 |

+

|

| 233 |

+

|

| 234 |

+

def filter_matches(scores: torch.Tensor, th: float):

|

| 235 |

+

""" obtain matches from a log assignment matrix [Bx M+1 x N+1]"""

|

| 236 |

+

max0, max1 = scores[:, :-1, :-1].max(2), scores[:, :-1, :-1].max(1)

|

| 237 |

+

m0, m1 = max0.indices, max1.indices

|

| 238 |

+

mutual0 = torch.arange(m0.shape[1]).to(m0)[None] == m1.gather(1, m0)

|

| 239 |

+

mutual1 = torch.arange(m1.shape[1]).to(m1)[None] == m0.gather(1, m1)

|

| 240 |

+

max0_exp = max0.values.exp()

|

| 241 |

+

zero = max0_exp.new_tensor(0)

|

| 242 |

+

mscores0 = torch.where(mutual0, max0_exp, zero)

|

| 243 |

+

mscores1 = torch.where(mutual1, mscores0.gather(1, m1), zero)

|

| 244 |

+

if th is not None:

|

| 245 |

+

valid0 = mutual0 & (mscores0 > th)

|

| 246 |

+

else:

|

| 247 |

+

valid0 = mutual0

|