xdite

commited on

Commit

•

28a91ba

1

Parent(s):

f29bdf0

init

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +8 -34

- .gitignore +1 -0

- Dockerfile +9 -0

- LICENSE.md +14 -0

- LICENSE_Lavis.md +14 -0

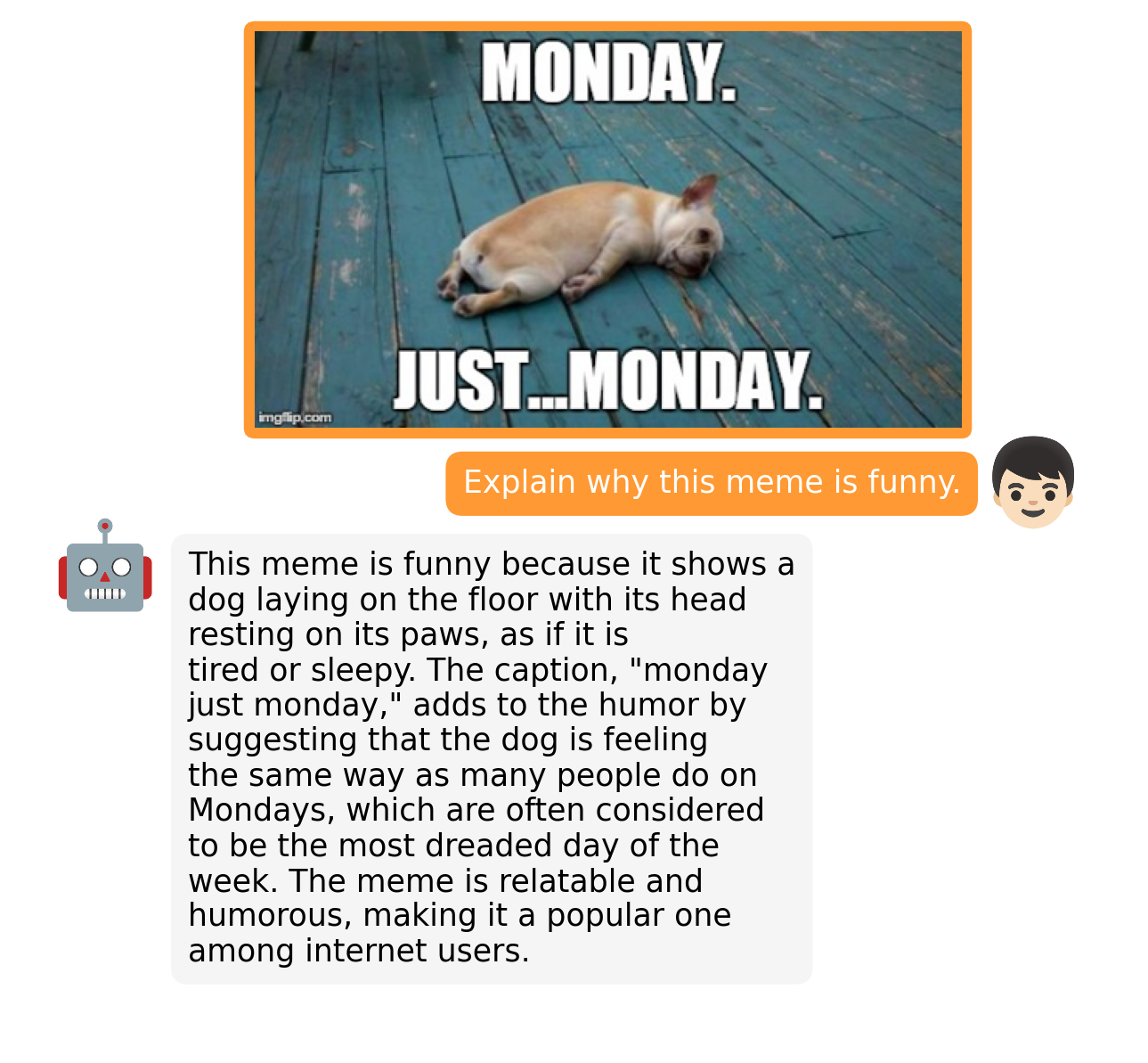

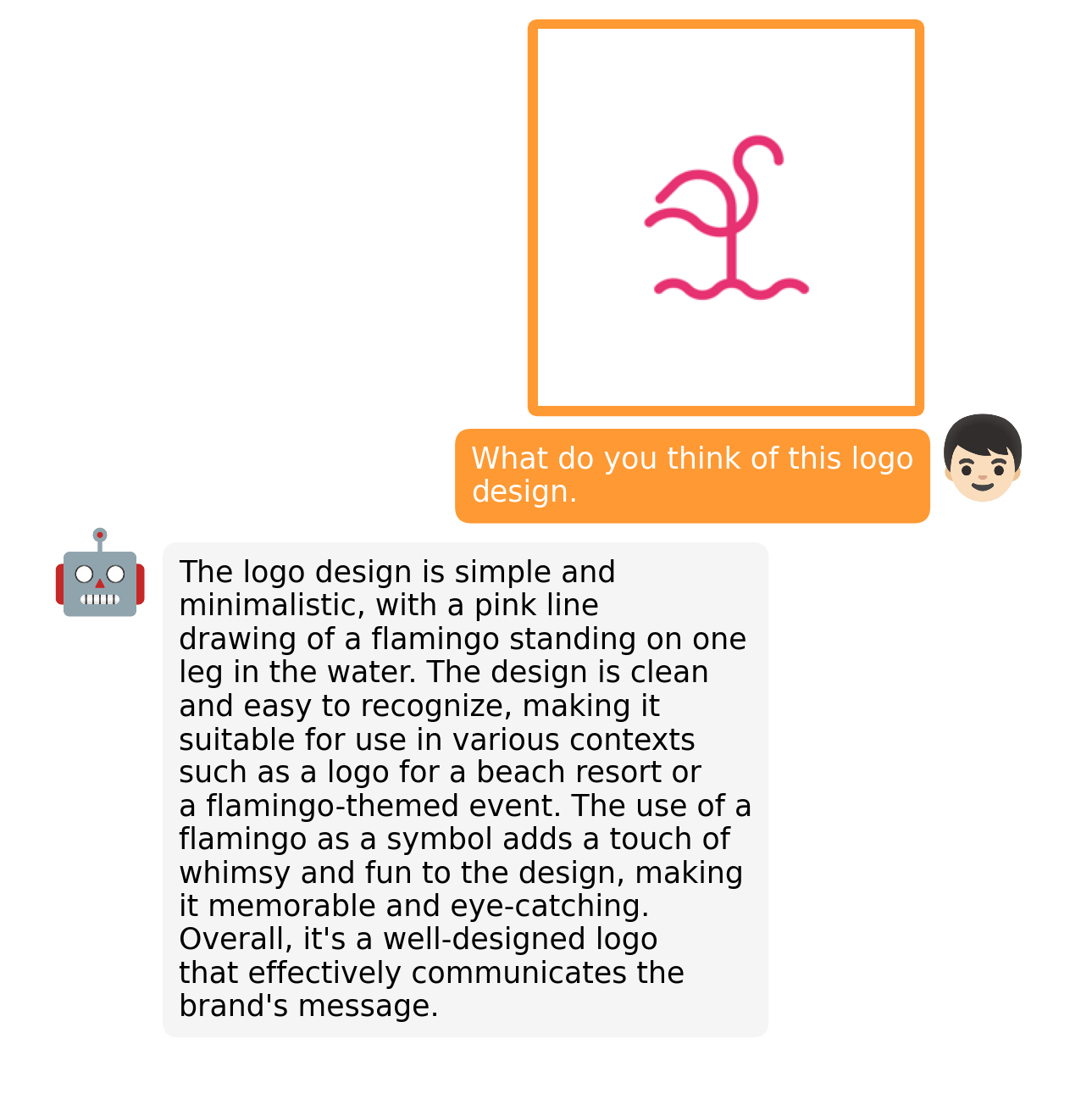

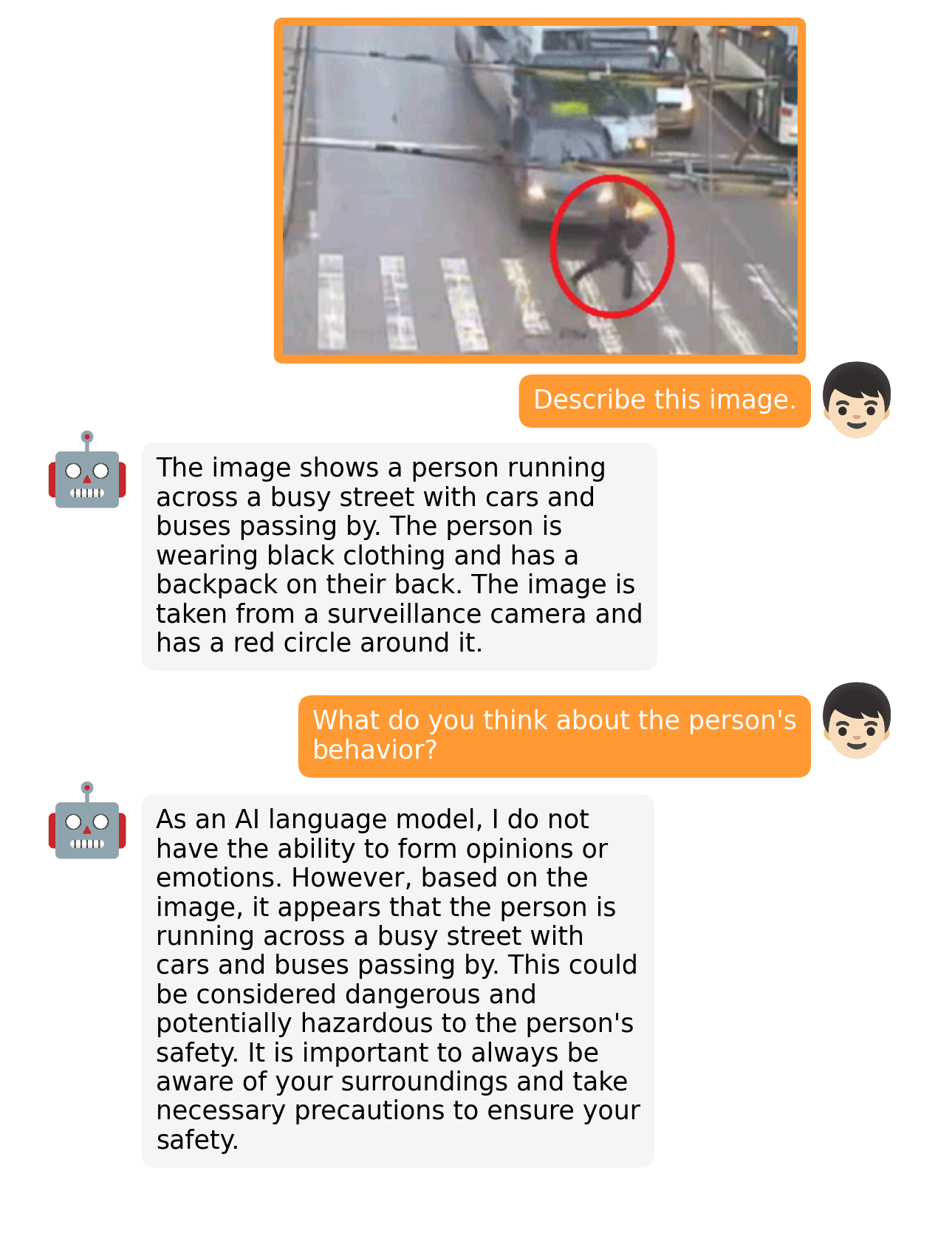

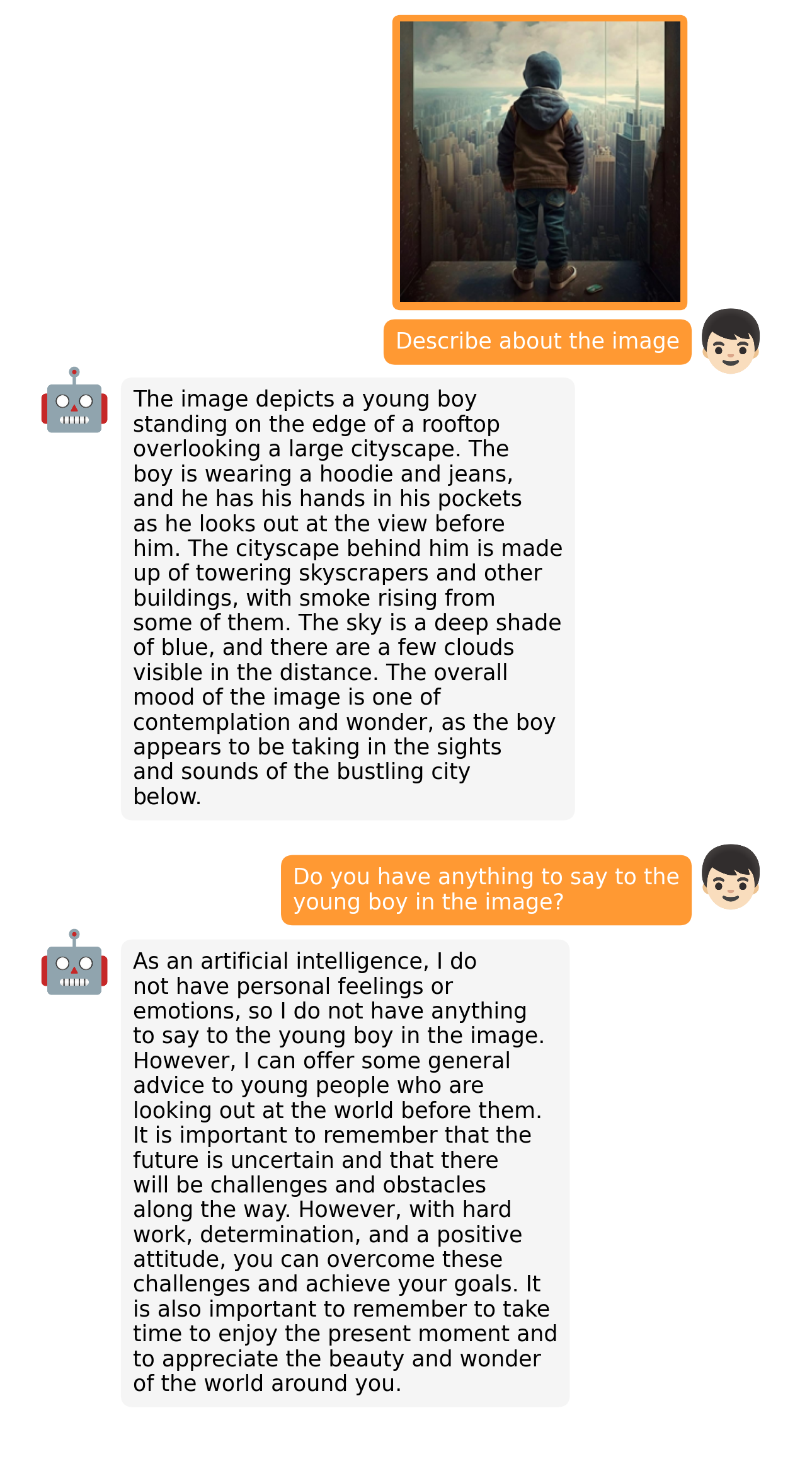

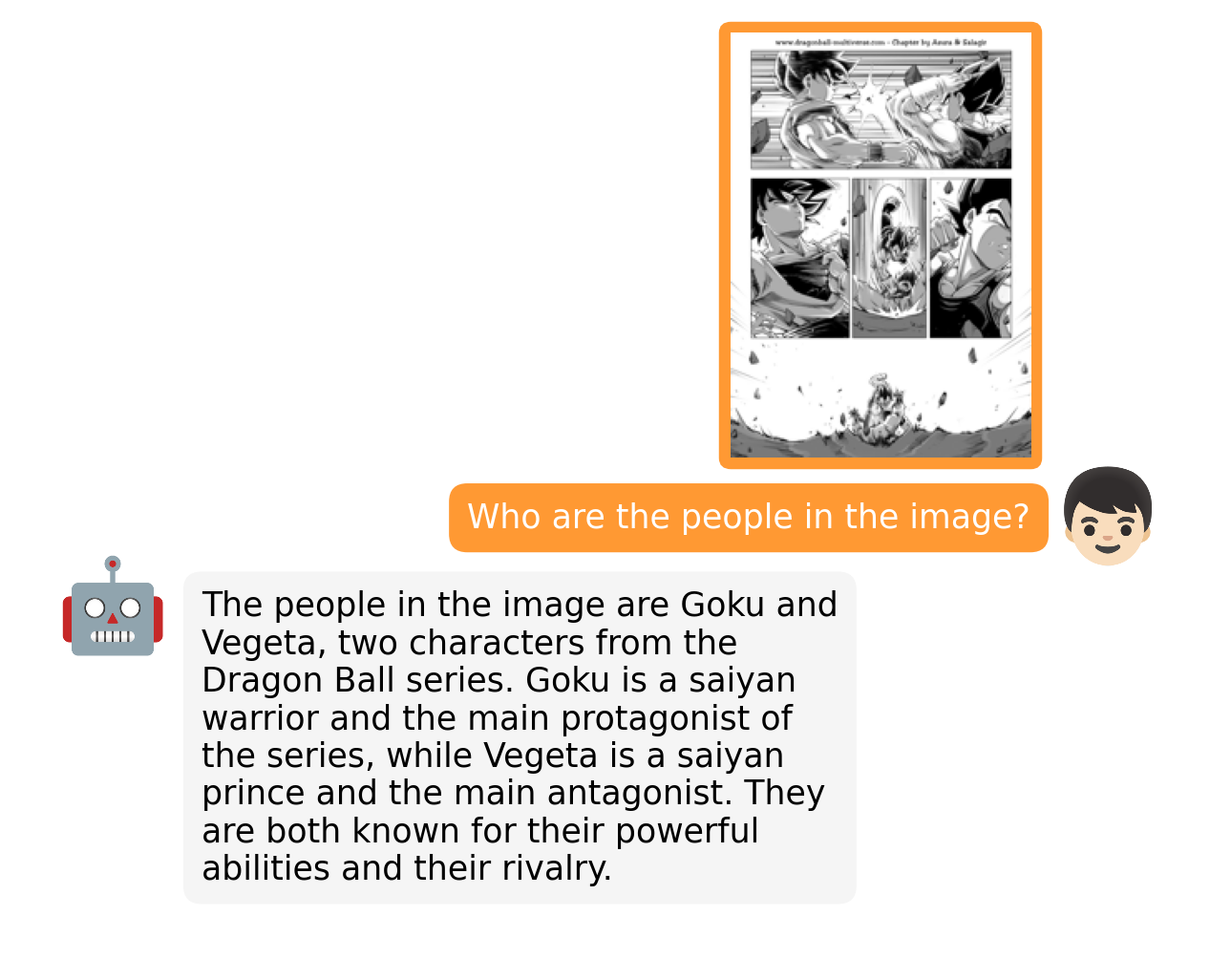

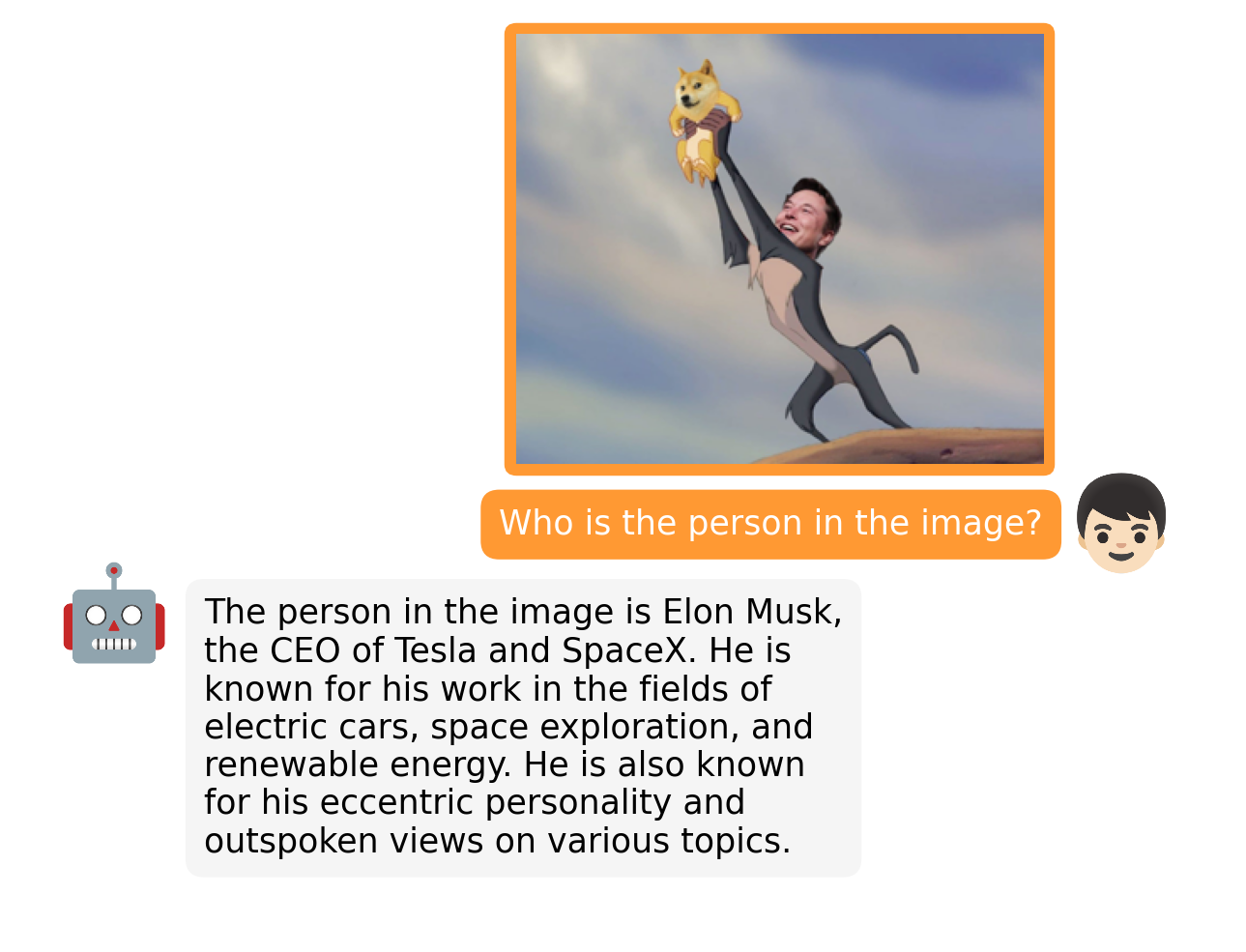

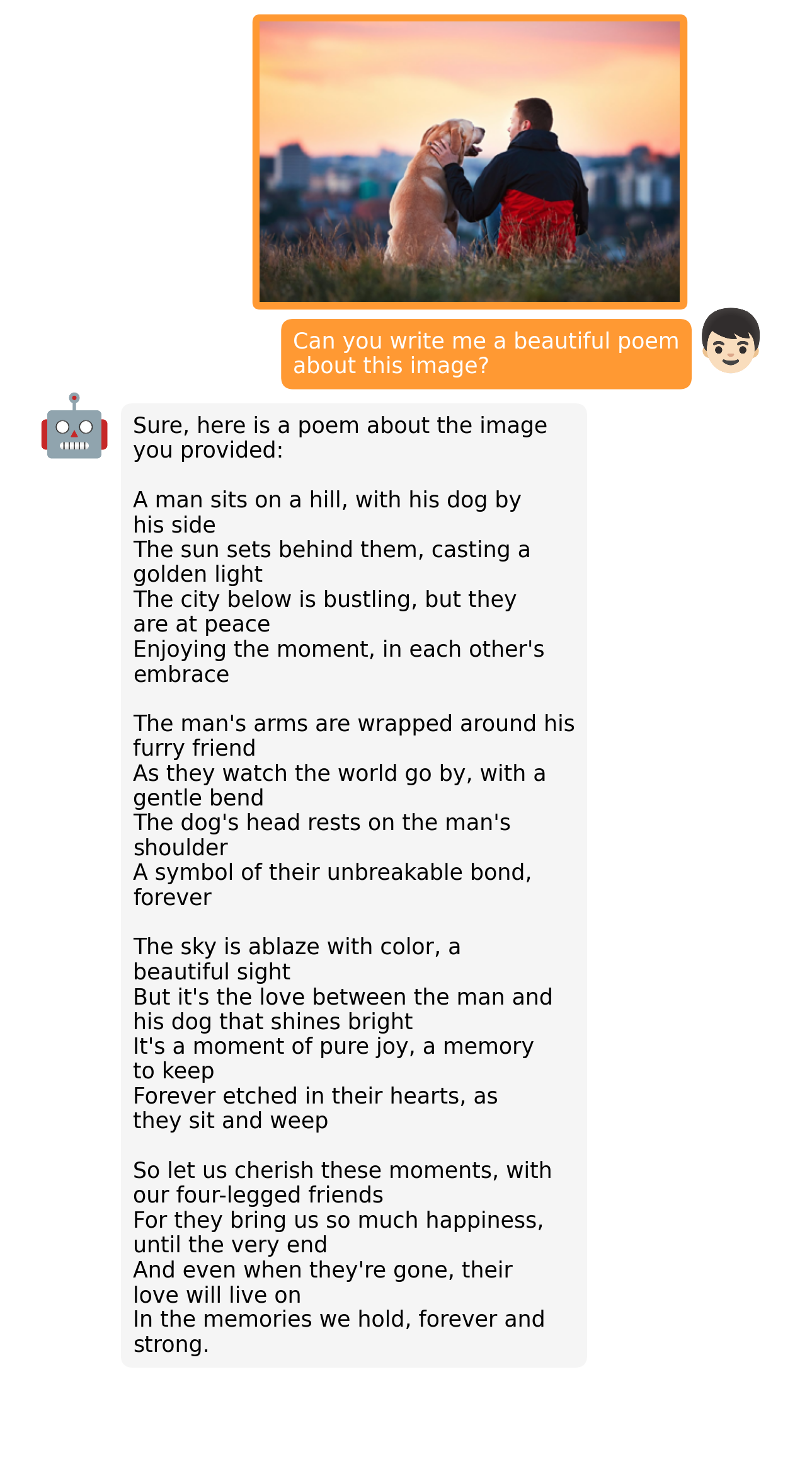

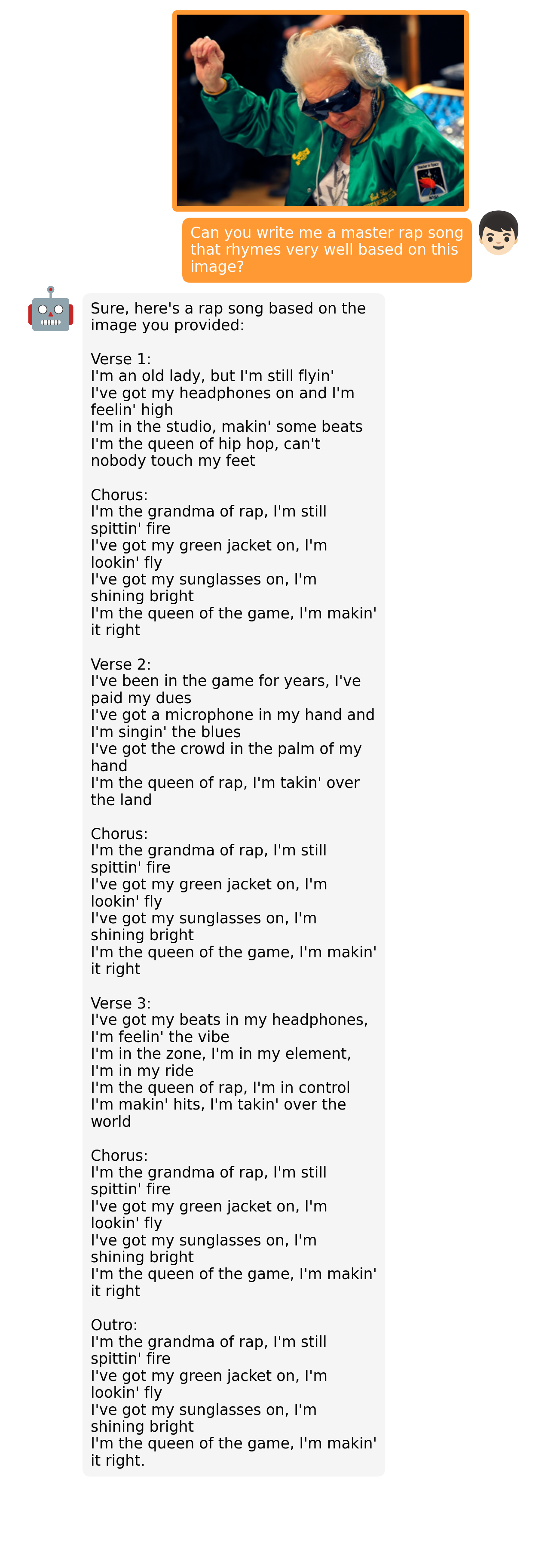

- PrepareVicuna.md +30 -0

- README.md +154 -11

- convert_llama_weights_to_hf.py +278 -0

- dataset/README_1_STAGE.md +96 -0

- dataset/README_2_STAGE.md +19 -0

- dataset/convert_cc_sbu.py +20 -0

- dataset/convert_laion.py +20 -0

- dataset/download_cc_sbu.sh +6 -0

- dataset/download_laion.sh +6 -0

- demo.py +150 -0

- environment.yml +63 -0

- eval_configs/minigpt4_eval.yaml +25 -0

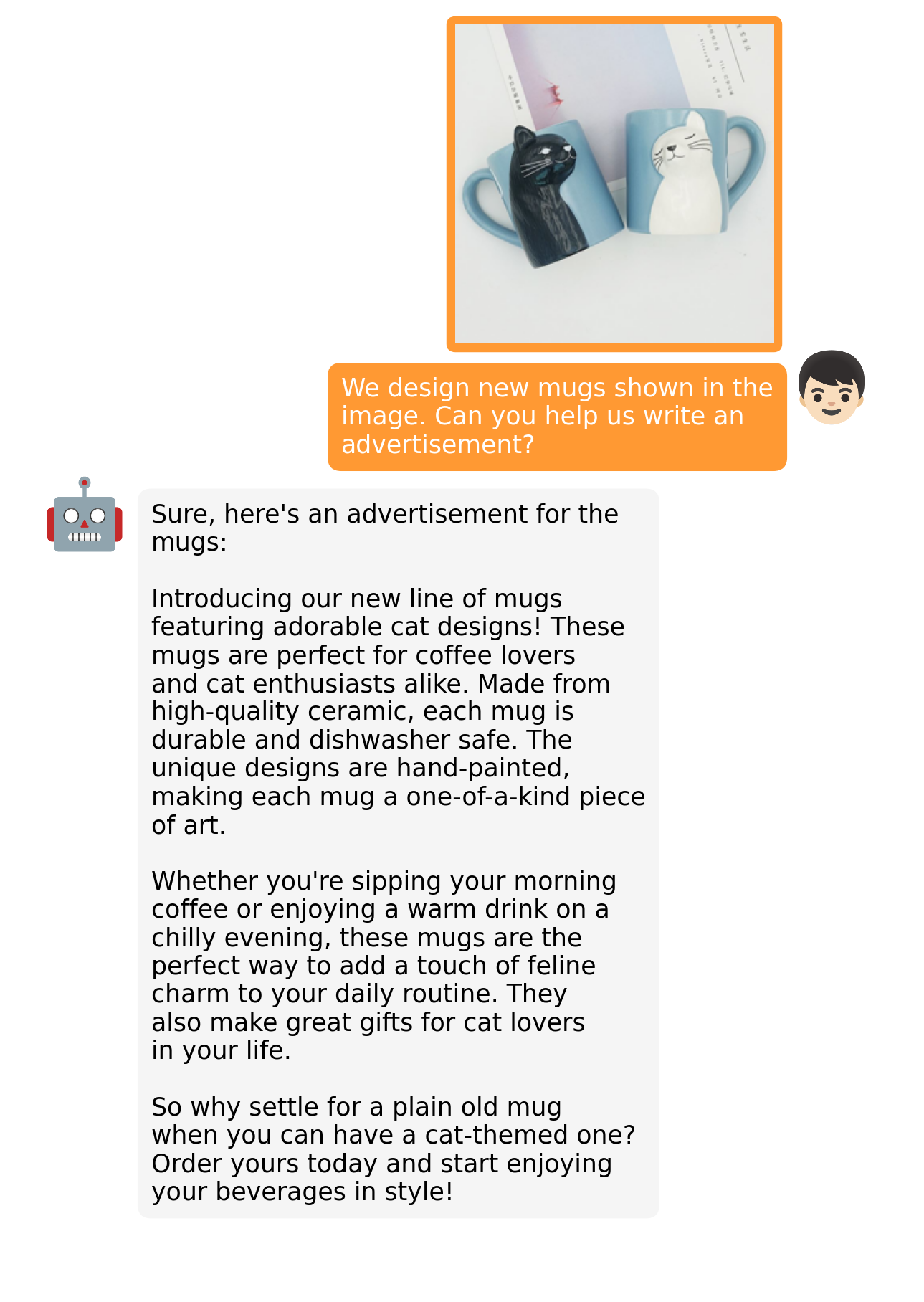

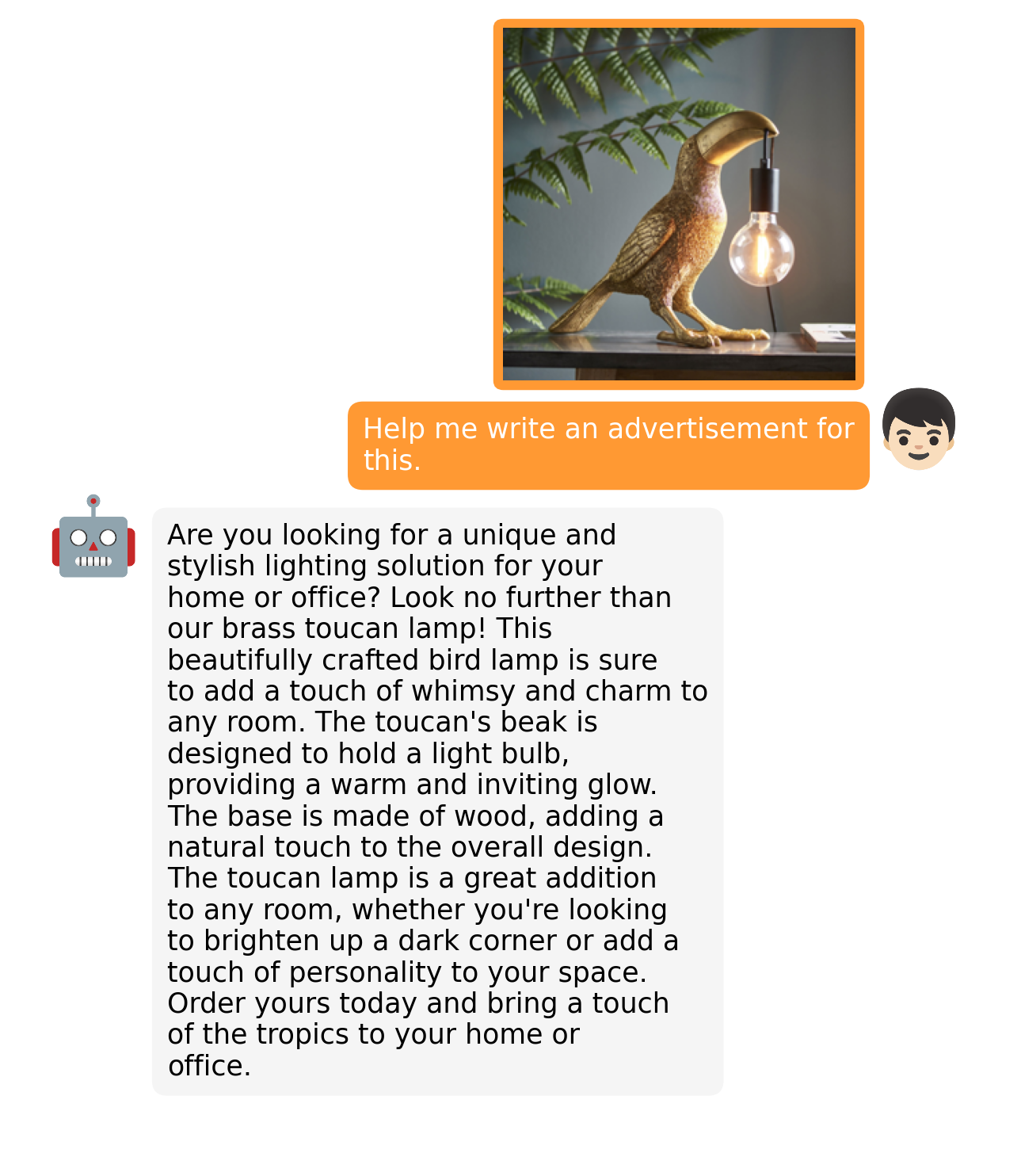

- examples/ad_1.png +0 -0

- examples/ad_2.png +0 -0

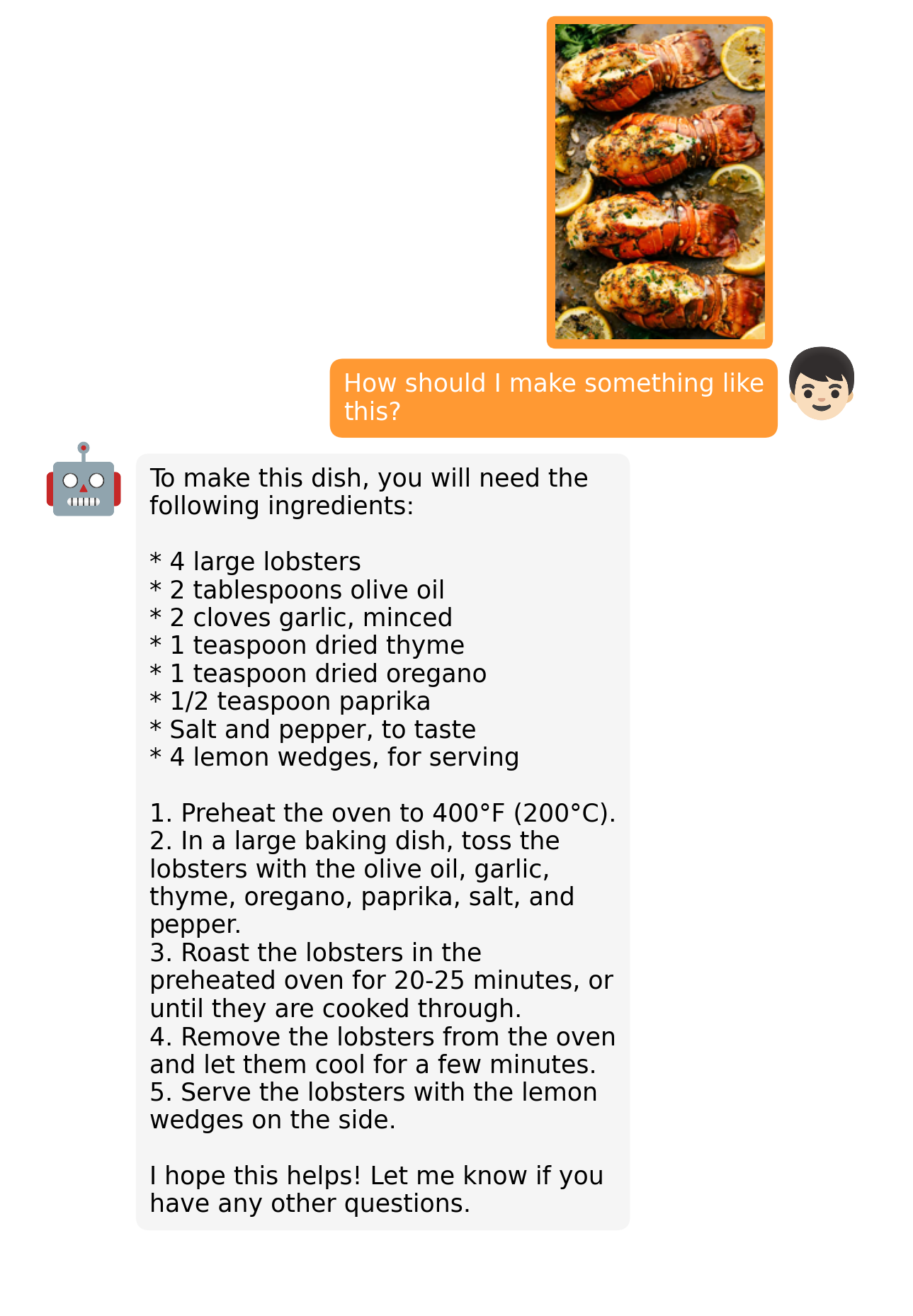

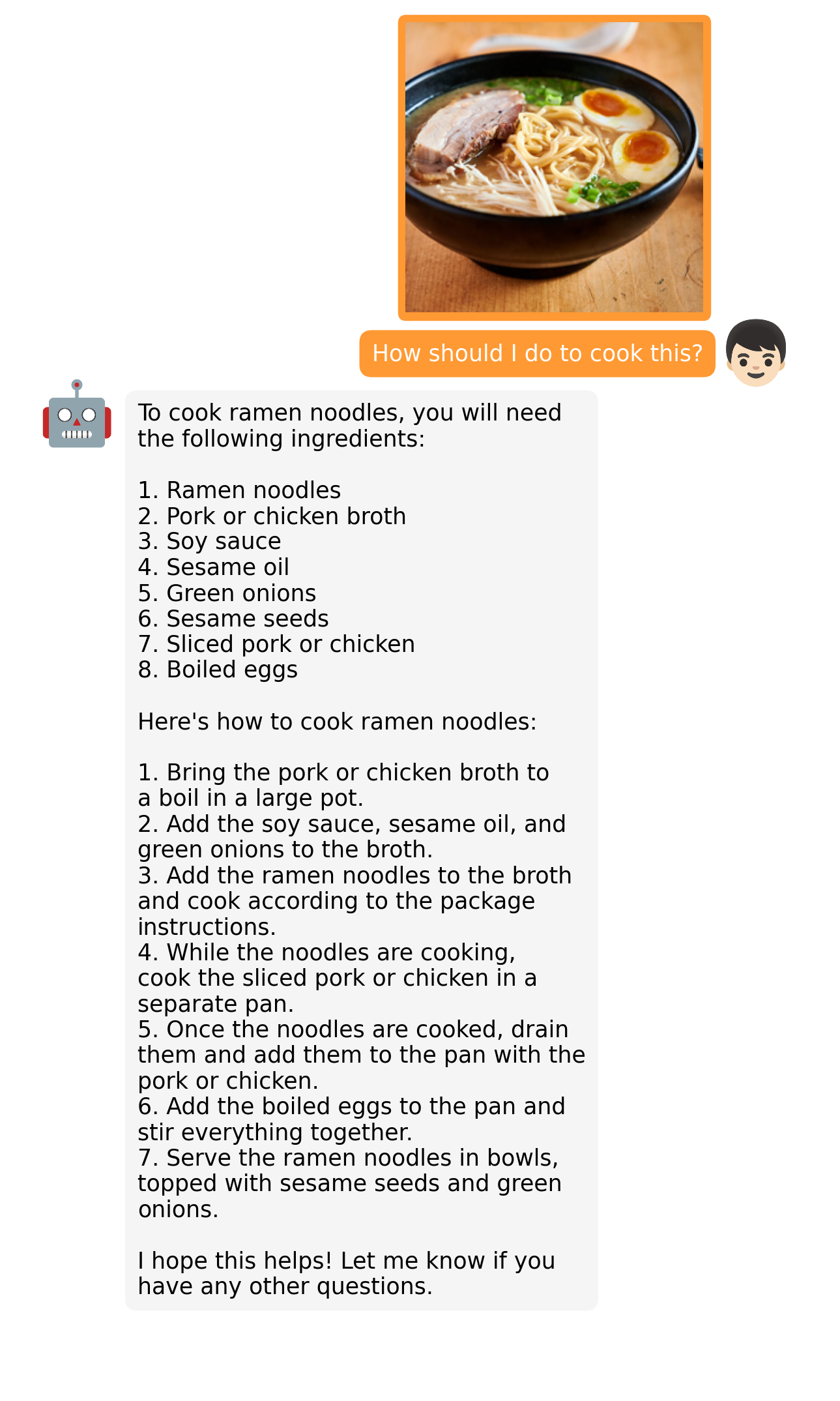

- examples/cook_1.png +0 -0

- examples/cook_2.png +0 -0

- examples/describe_1.png +0 -0

- examples/describe_2.png +0 -0

- examples/fact_1.png +0 -0

- examples/fact_2.png +0 -0

- examples/fix_1.png +0 -0

- examples/fix_2.png +0 -0

- examples/fun_1.png +0 -0

- examples/fun_2.png +0 -0

- examples/logo_1.png +0 -0

- examples/op_1.png +0 -0

- examples/op_2.png +0 -0

- examples/people_1.png +0 -0

- examples/people_2.png +0 -0

- examples/rhyme_1.png +0 -0

- examples/rhyme_2.png +0 -0

- examples/story_1.png +0 -0

- examples/story_2.png +0 -0

- examples/web_1.png +0 -0

- examples/wop_1.png +0 -0

- examples/wop_2.png +0 -0

- figs/examples/ad_1.png +0 -0

- figs/examples/ad_2.png +0 -0

- figs/examples/cook_1.png +0 -0

- figs/examples/cook_2.png +0 -0

- figs/examples/describe_1.png +0 -0

- figs/examples/describe_2.png +0 -0

- figs/examples/fact_1.png +0 -0

- figs/examples/fact_2.png +0 -0

- figs/examples/fix_1.png +0 -0

.gitattributes

CHANGED

|

@@ -1,34 +1,8 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

-

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

-

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 12 |

-

*.model filter=lfs diff=lfs merge=lfs -text

|

| 13 |

-

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 14 |

-

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 15 |

-

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 16 |

-

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 17 |

-

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 18 |

-

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 19 |

-

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 20 |

-

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 21 |

-

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 22 |

-

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 23 |

-

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 24 |

-

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 25 |

-

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 26 |

-

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 27 |

-

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

-

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 29 |

-

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 30 |

-

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 31 |

-

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 32 |

-

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

-

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

-

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

| 1 |

+

pretrained_minigpt4.pth filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

vicuna/*.bin filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

vicuna/weight/config.json filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

vicuna/weight/generation_config.json filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

vicuna/weight/pytorch_model.bin.index.json filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

vicuna/weight/special_tokens_map.json filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

vicuna/weight/tokenizer.model filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

vicuna/weight/tokenizer_config.json filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

.gitignore

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

__pycache__/

|

Dockerfile

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

FROM deepnote/python:3.9-conda AS build

|

| 2 |

+

RUN apt-get update && apt-get install -y zsh && zsh

|

| 3 |

+

RUN /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install.sh)"

|

| 4 |

+

RUN (echo; echo 'eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"') >> /root/.profile

|

| 5 |

+

RUN eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"

|

| 6 |

+

RUN brew install git-lfs

|

| 7 |

+

RUN git lfs install

|

| 8 |

+

RUN pip install fschat

|

| 9 |

+

|

LICENSE.md

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

BSD 3-Clause License

|

| 2 |

+

|

| 3 |

+

Copyright 2023 Deyao Zhu

|

| 4 |

+

All rights reserved.

|

| 5 |

+

|

| 6 |

+

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

|

| 7 |

+

|

| 8 |

+

1. Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

|

| 9 |

+

|

| 10 |

+

2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

|

| 11 |

+

|

| 12 |

+

3. Neither the name of the copyright holder nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

|

| 13 |

+

|

| 14 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

LICENSE_Lavis.md

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

BSD 3-Clause License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2022 Salesforce, Inc.

|

| 4 |

+

All rights reserved.

|

| 5 |

+

|

| 6 |

+

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

|

| 7 |

+

|

| 8 |

+

1. Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

|

| 9 |

+

|

| 10 |

+

2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

|

| 11 |

+

|

| 12 |

+

3. Neither the name of Salesforce.com nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

|

| 13 |

+

|

| 14 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

PrepareVicuna.md

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## How to Prepare Vicuna Weight

|

| 2 |

+

Vicuna is an open-source LLAMA-based LLM that has a performance close to ChatGPT.

|

| 3 |

+

We currently use the v0 version of Vicuna-13B.

|

| 4 |

+

|

| 5 |

+

To prepare Vicuna’s weight, first download Vicuna’s **delta** weight from [https://huggingface.co/lmsys/vicuna-13b-delta-v0](https://huggingface.co/lmsys/vicuna-13b-delta-v0). In case you have git-lfs installed (https://git-lfs.com), this can be done by

|

| 6 |

+

|

| 7 |

+

```

|

| 8 |

+

git lfs install

|

| 9 |

+

git clone https://huggingface.co/lmsys/vicuna-13b-delta-v0

|

| 10 |

+

```

|

| 11 |

+

|

| 12 |

+

Note that this is not directly the working weight, but the difference between the working weight and the original weight of LLAMA-13B. (Due to LLAMA’s rules, we cannot distribute the weight of LLAMA.)

|

| 13 |

+

|

| 14 |

+

Then, you need to obtain the original LLAMA-13B weights in the HuggingFace format either following the instruction provided by HuggingFace [here](https://huggingface.co/docs/transformers/main/model_doc/llama) or from the Internet.

|

| 15 |

+

|

| 16 |

+

When these two weights are ready, we can use tools from Vicuna’s team to create the real working weight.

|

| 17 |

+

First, Install their library that is compatible with v0 Vicuna by

|

| 18 |

+

|

| 19 |

+

```

|

| 20 |

+

pip install git+https://github.com/lm-sys/FastChat.git@v0.1.10

|

| 21 |

+

```

|

| 22 |

+

|

| 23 |

+

Then, run the following command to create the final working weight

|

| 24 |

+

|

| 25 |

+

```

|

| 26 |

+

python -m fastchat.model.apply_delta --base /path/to/llama-13b-hf/ --target /path/to/save/working/vicuna/weight/ --delta /path/to/vicuna-13b-delta-v0/

|

| 27 |

+

```

|

| 28 |

+

|

| 29 |

+

Now you are good to go!

|

| 30 |

+

|

README.md

CHANGED

|

@@ -1,11 +1,154 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# MiniGPT-4: Enhancing Vision-language Understanding with Advanced Large Language Models

|

| 2 |

+

[Deyao Zhu](https://tsutikgiau.github.io/)* (On Job Market!), [Jun Chen](https://junchen14.github.io/)* (On Job Market!), [Xiaoqian Shen](https://xiaoqian-shen.github.io), [Xiang Li](https://xiangli.ac.cn), and [Mohamed Elhoseiny](https://www.mohamed-elhoseiny.com/). *Equal Contribution

|

| 3 |

+

|

| 4 |

+

**King Abdullah University of Science and Technology**

|

| 5 |

+

|

| 6 |

+

<a href='https://minigpt-4.github.io'><img src='https://img.shields.io/badge/Project-Page-Green'></a> <a href='MiniGPT_4.pdf'><img src='https://img.shields.io/badge/Paper-PDF-red'></a>

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

## Online Demo

|

| 10 |

+

|

| 11 |

+

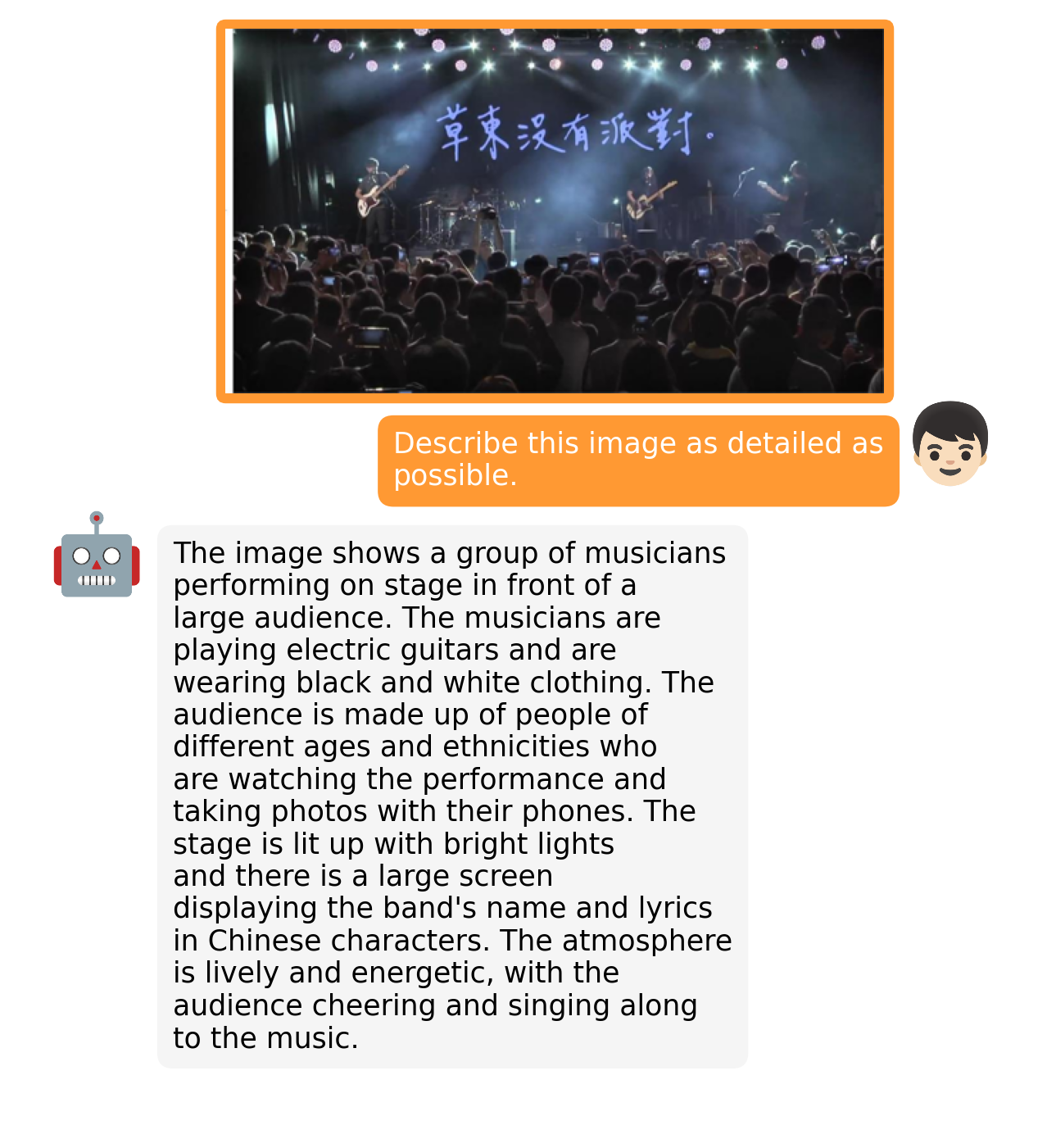

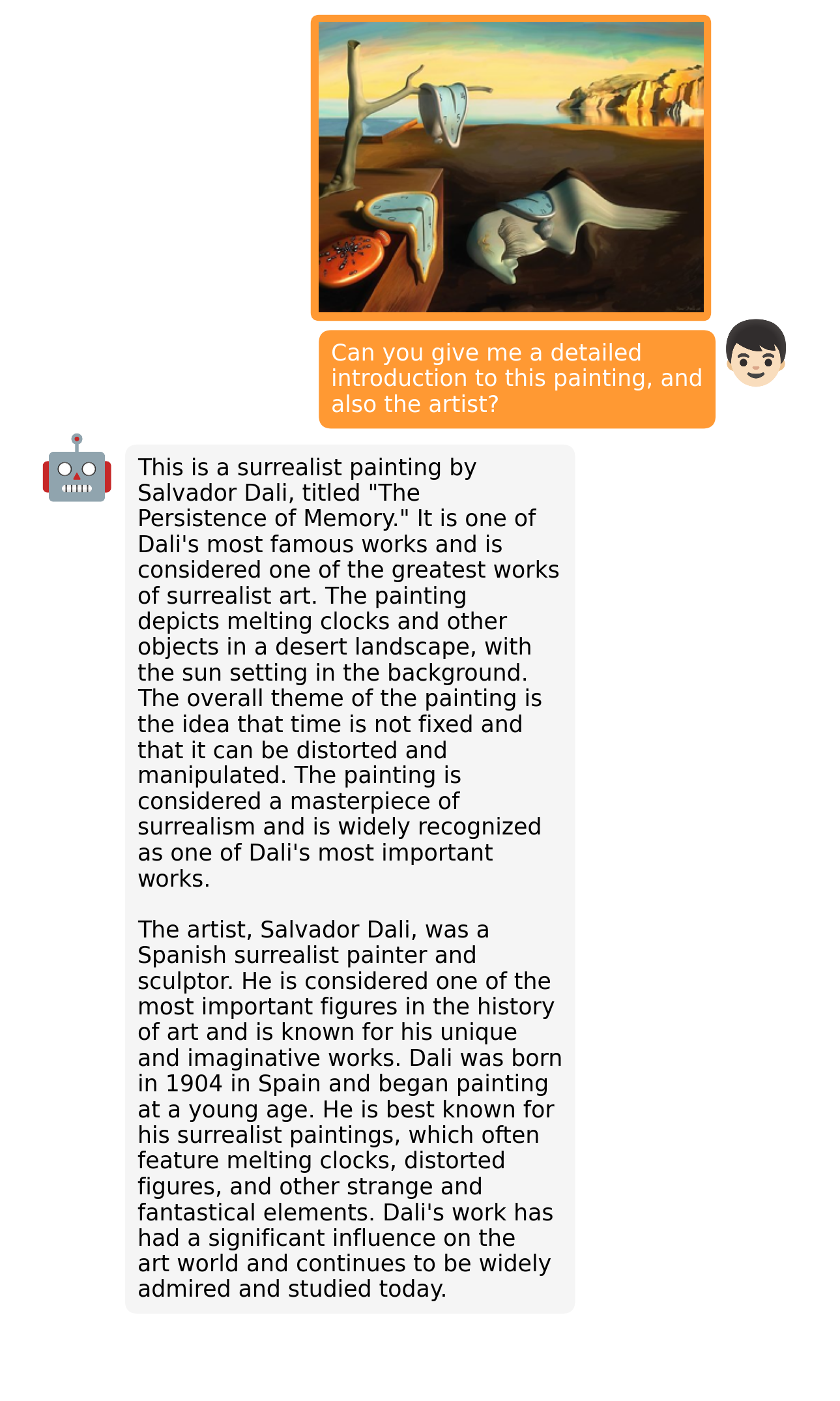

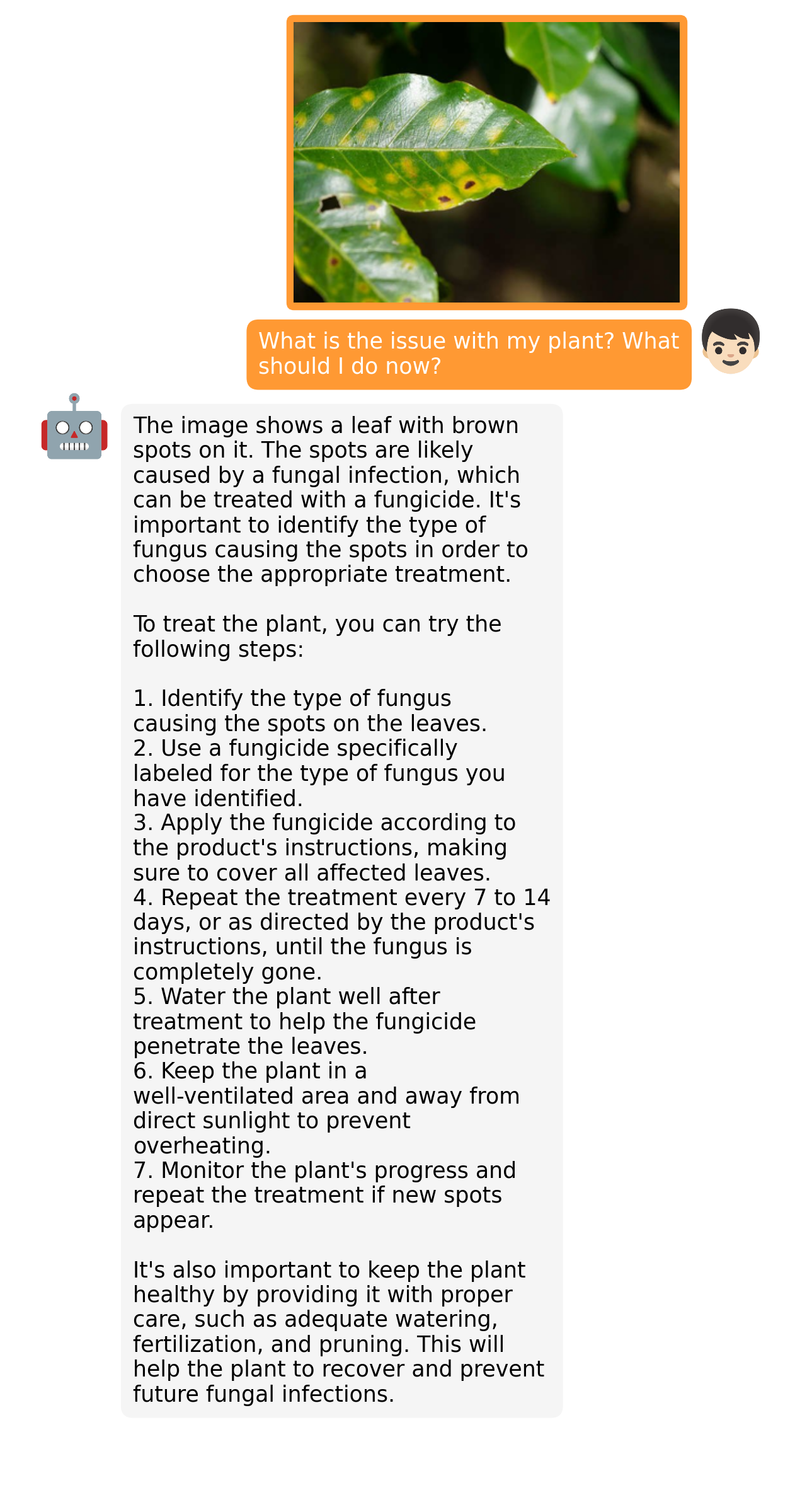

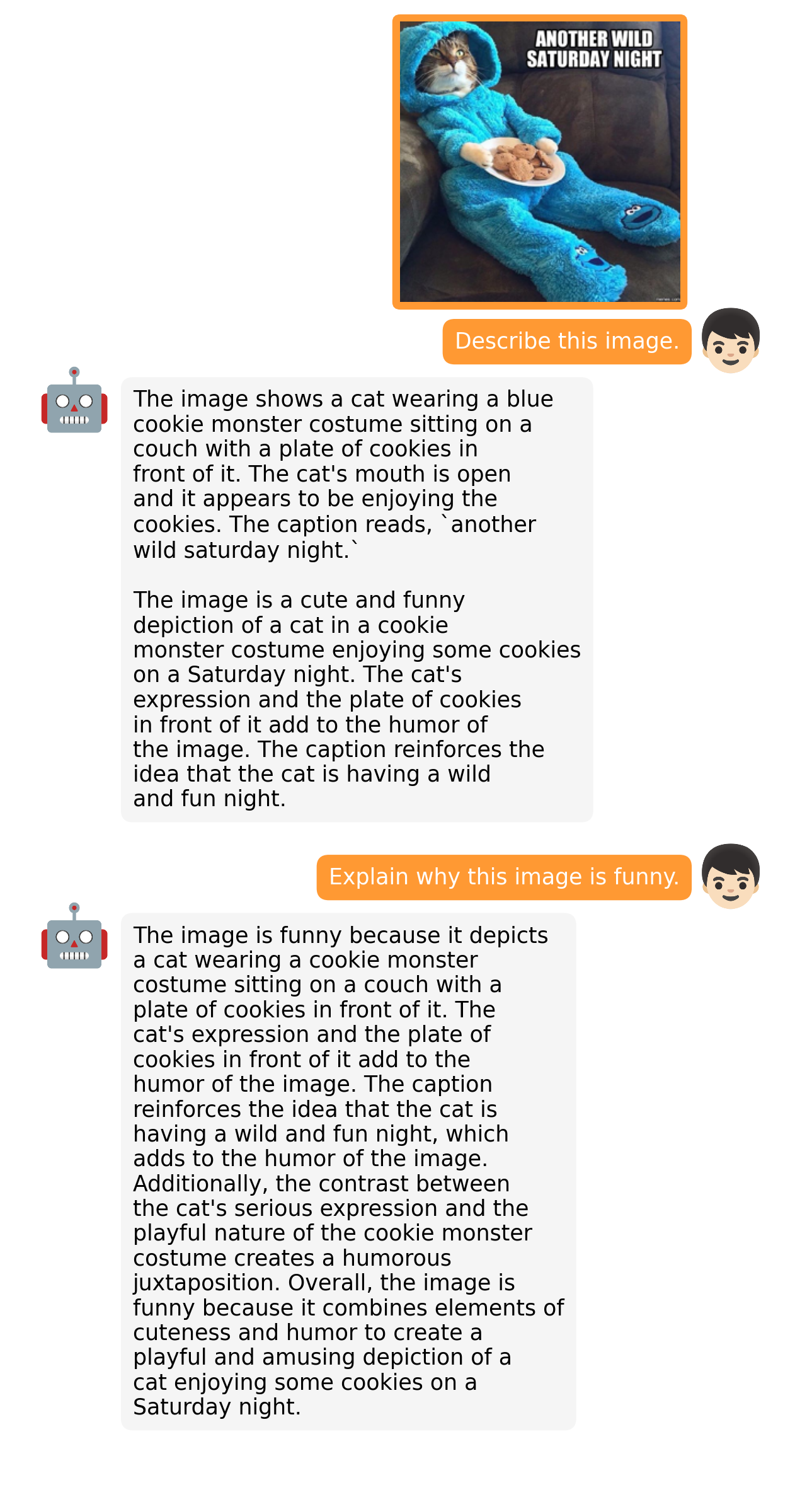

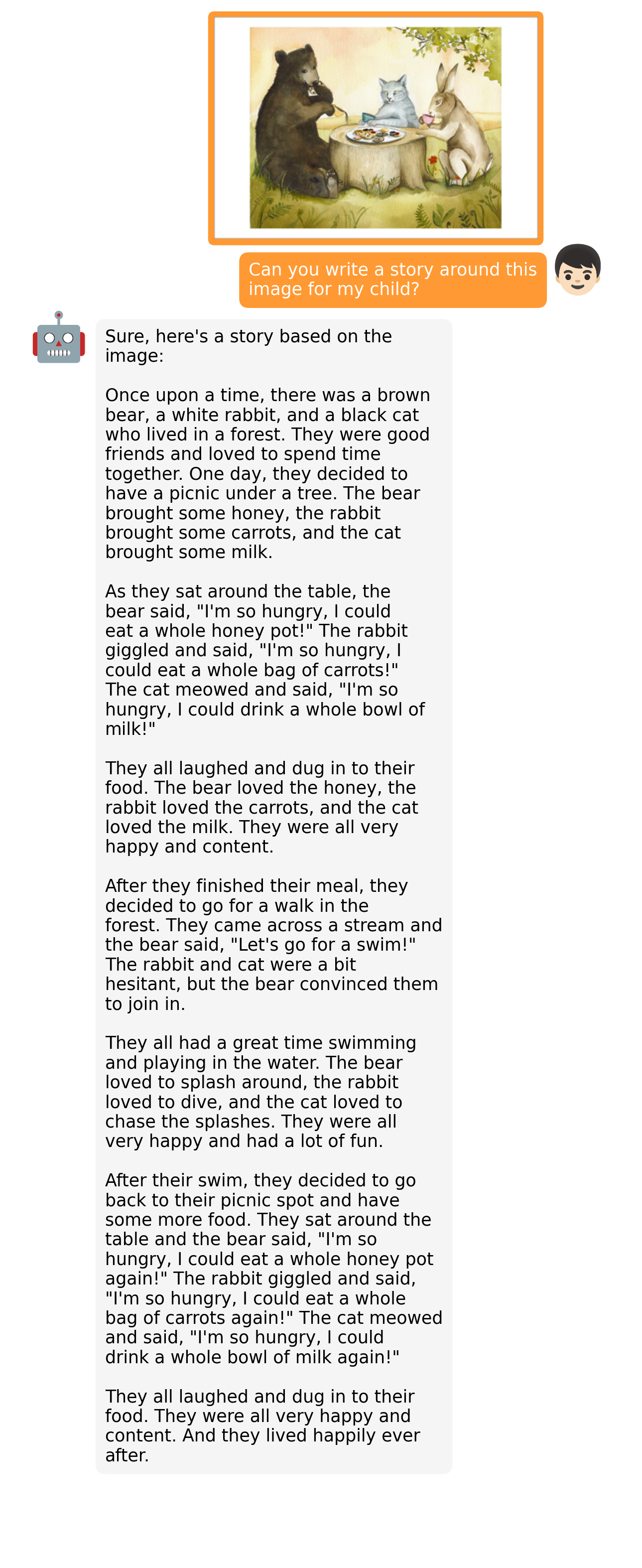

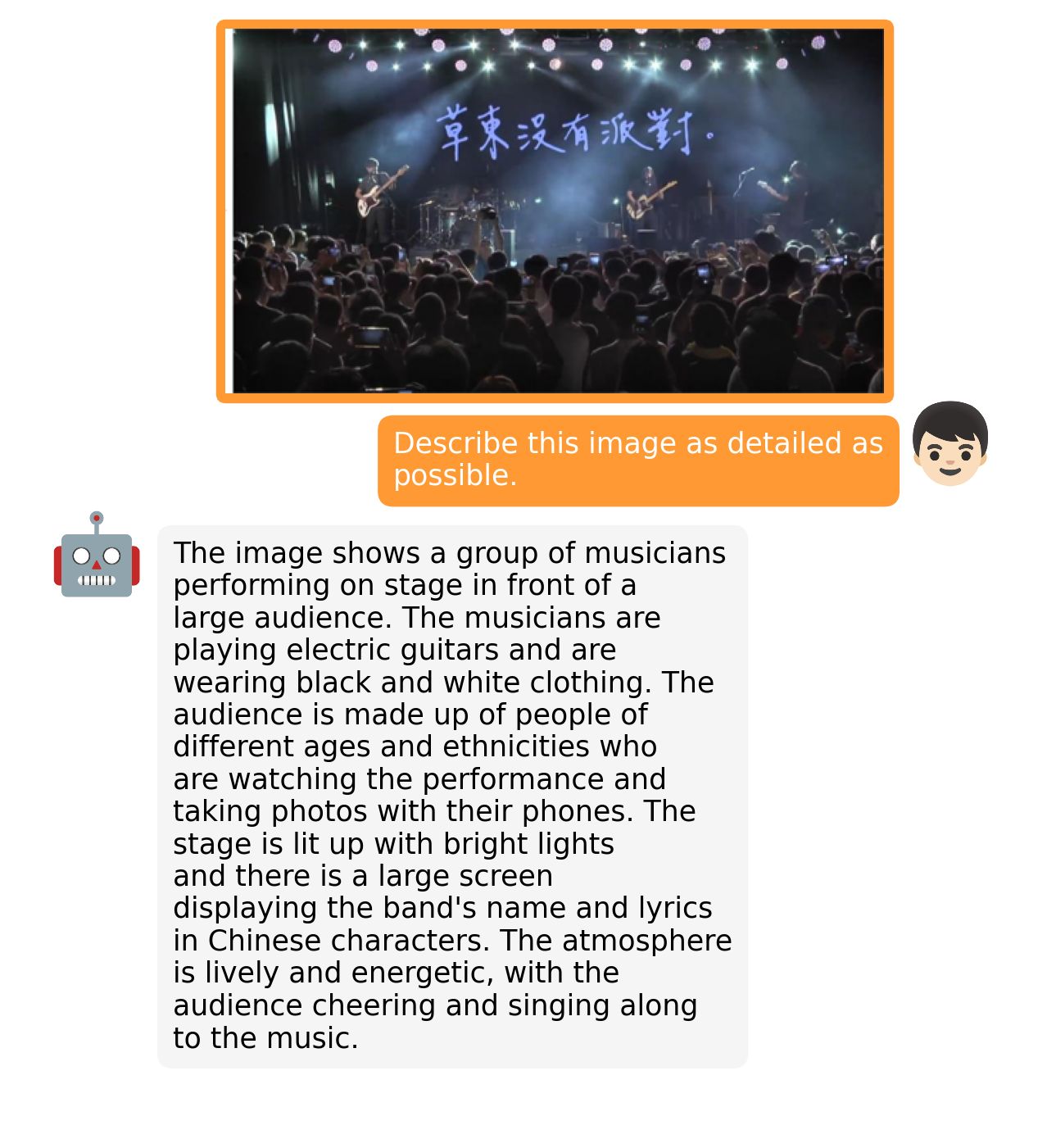

Click the image to chat with MiniGPT-4 around your images

|

| 12 |

+

[](https://minigpt-4.github.io)

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

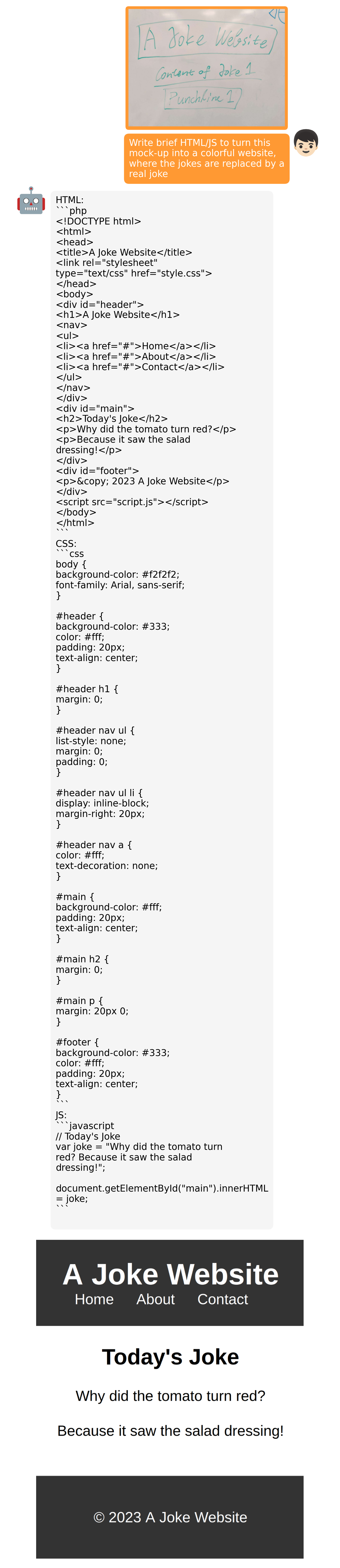

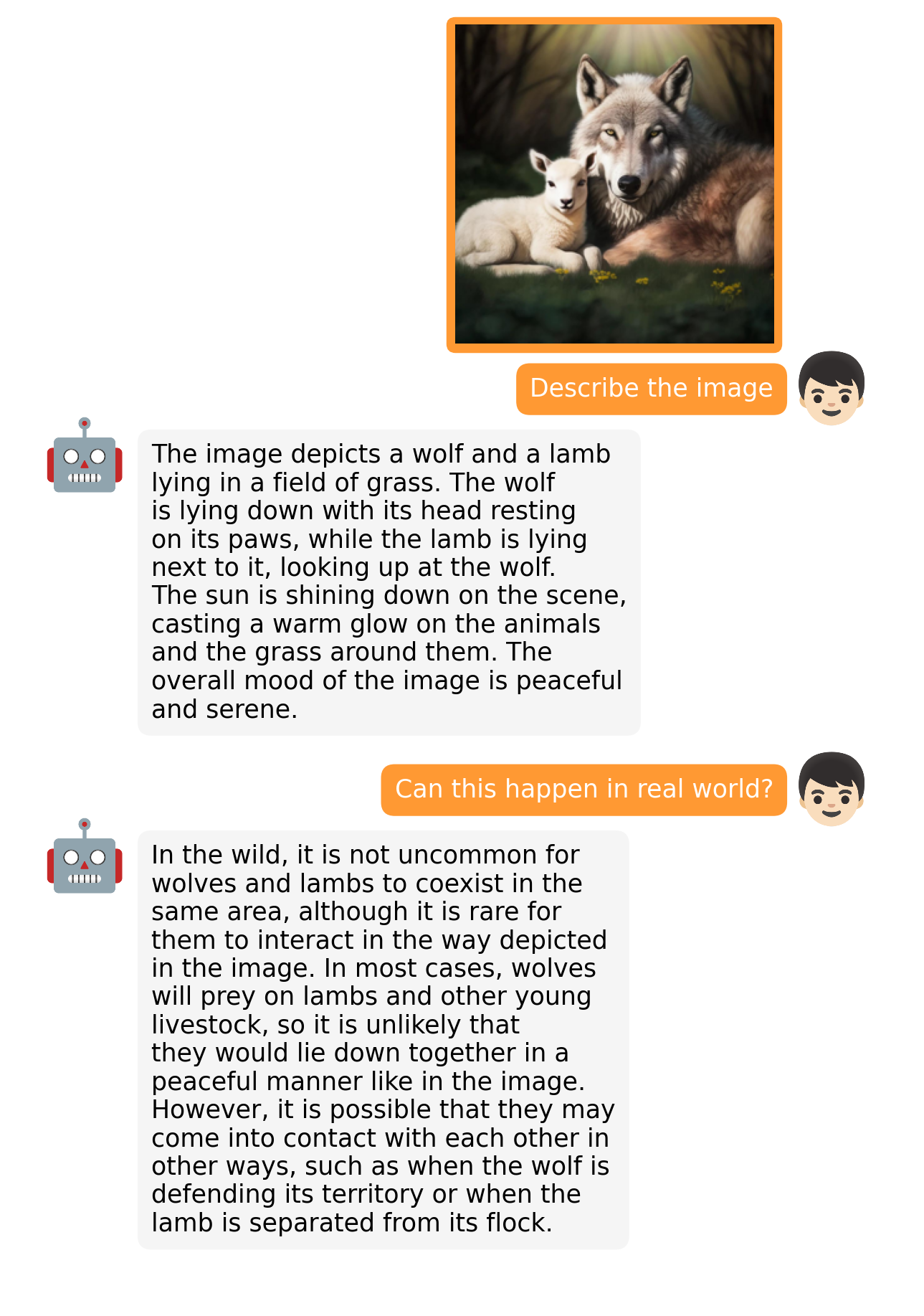

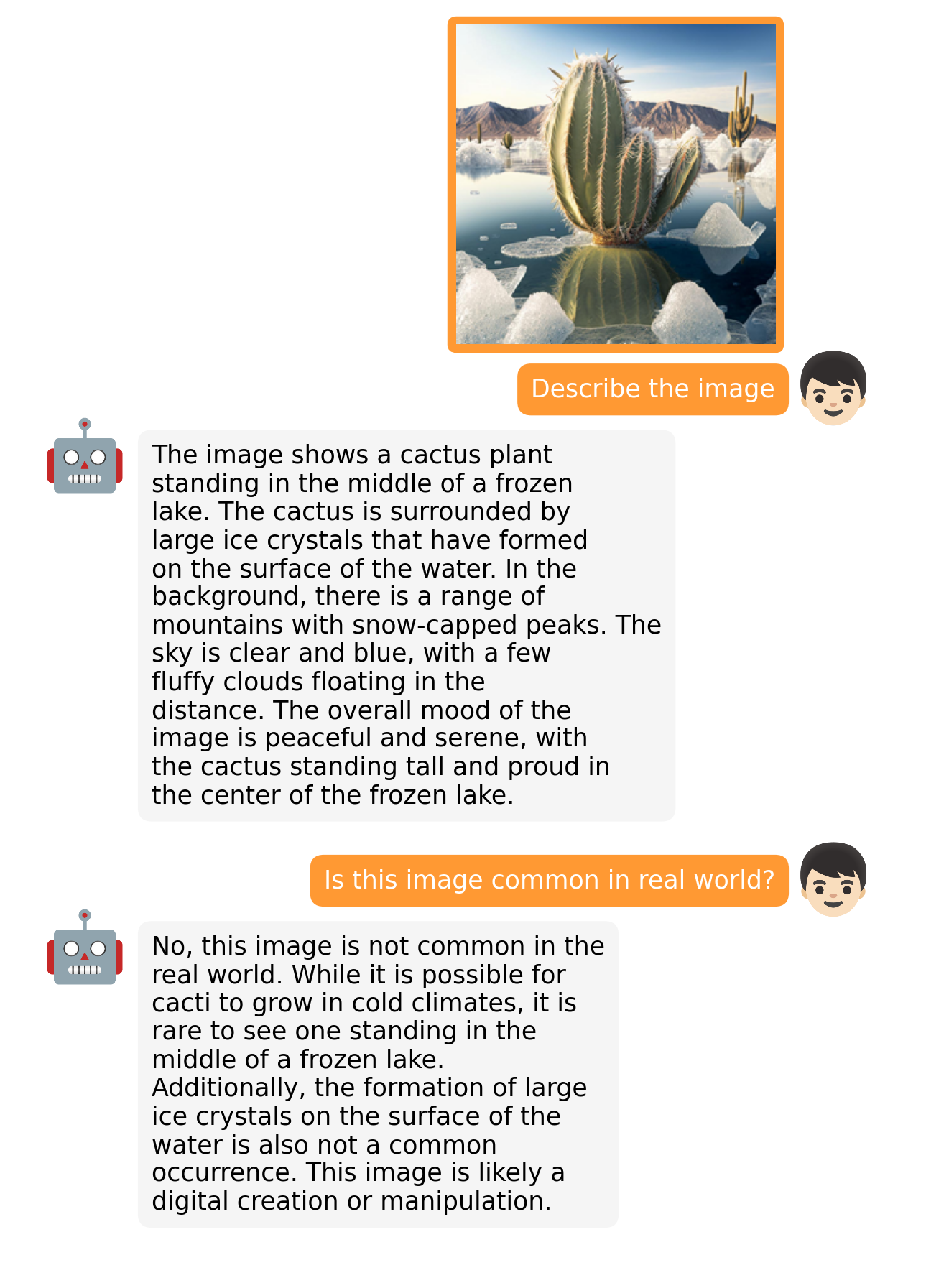

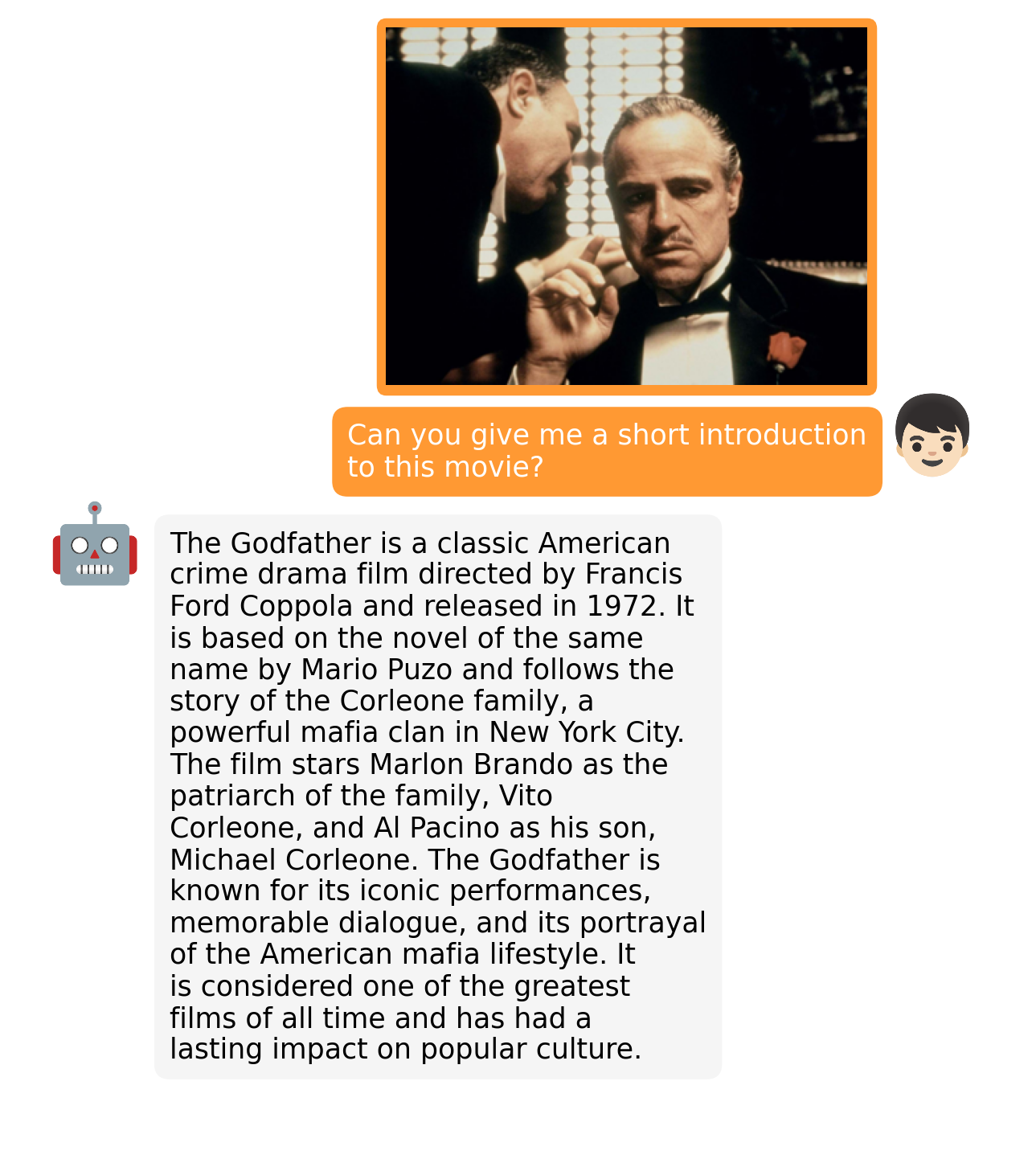

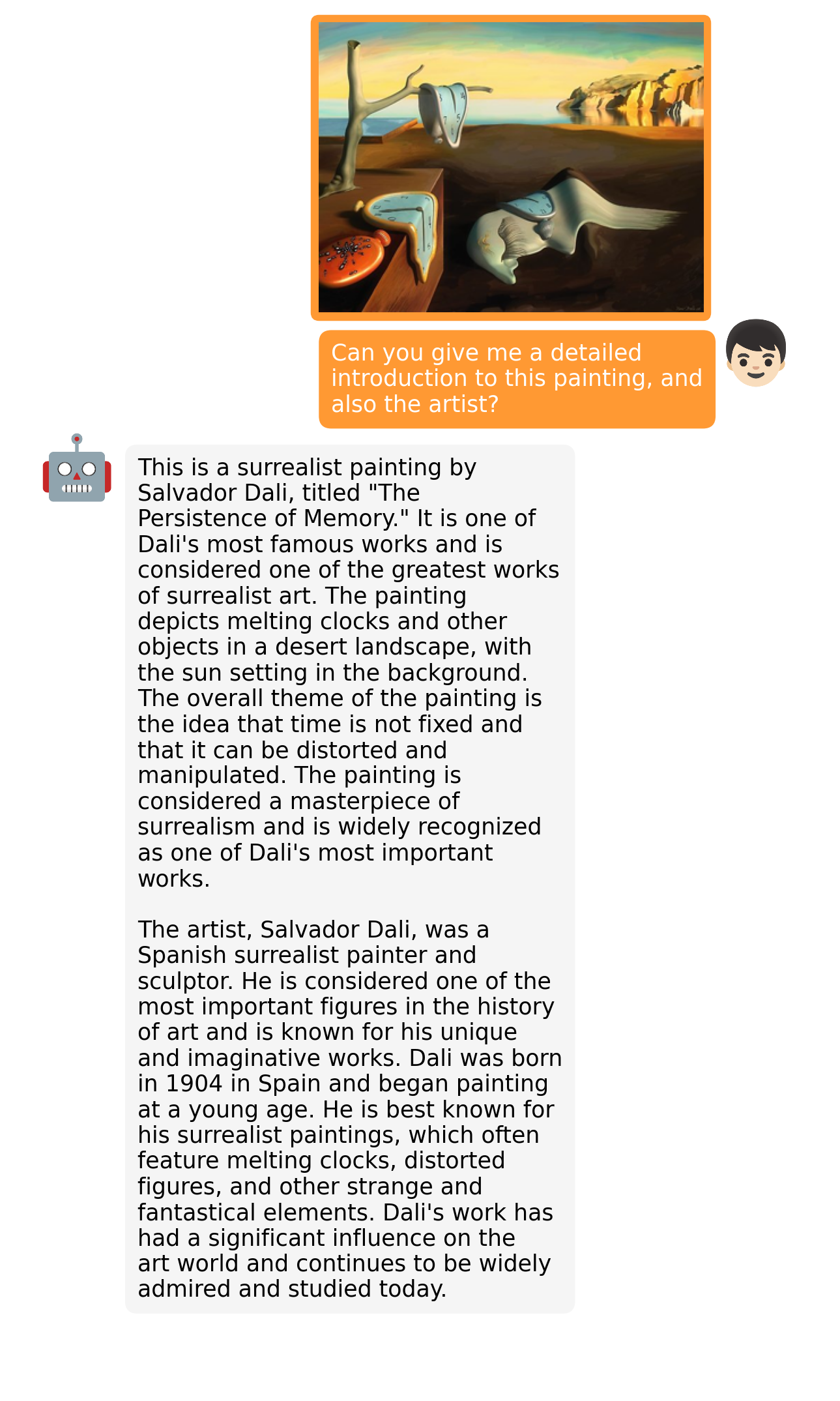

## Examples

|

| 16 |

+

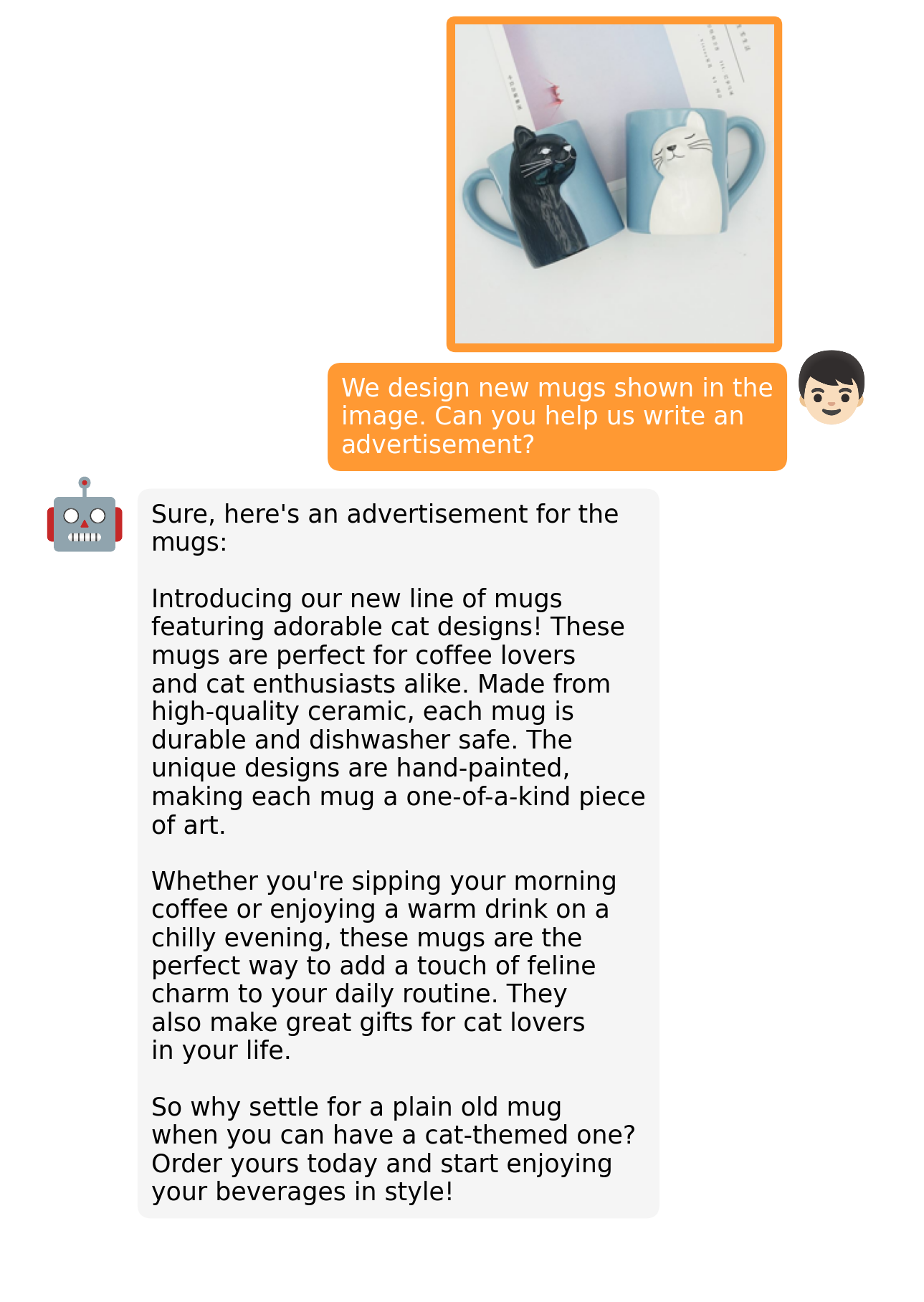

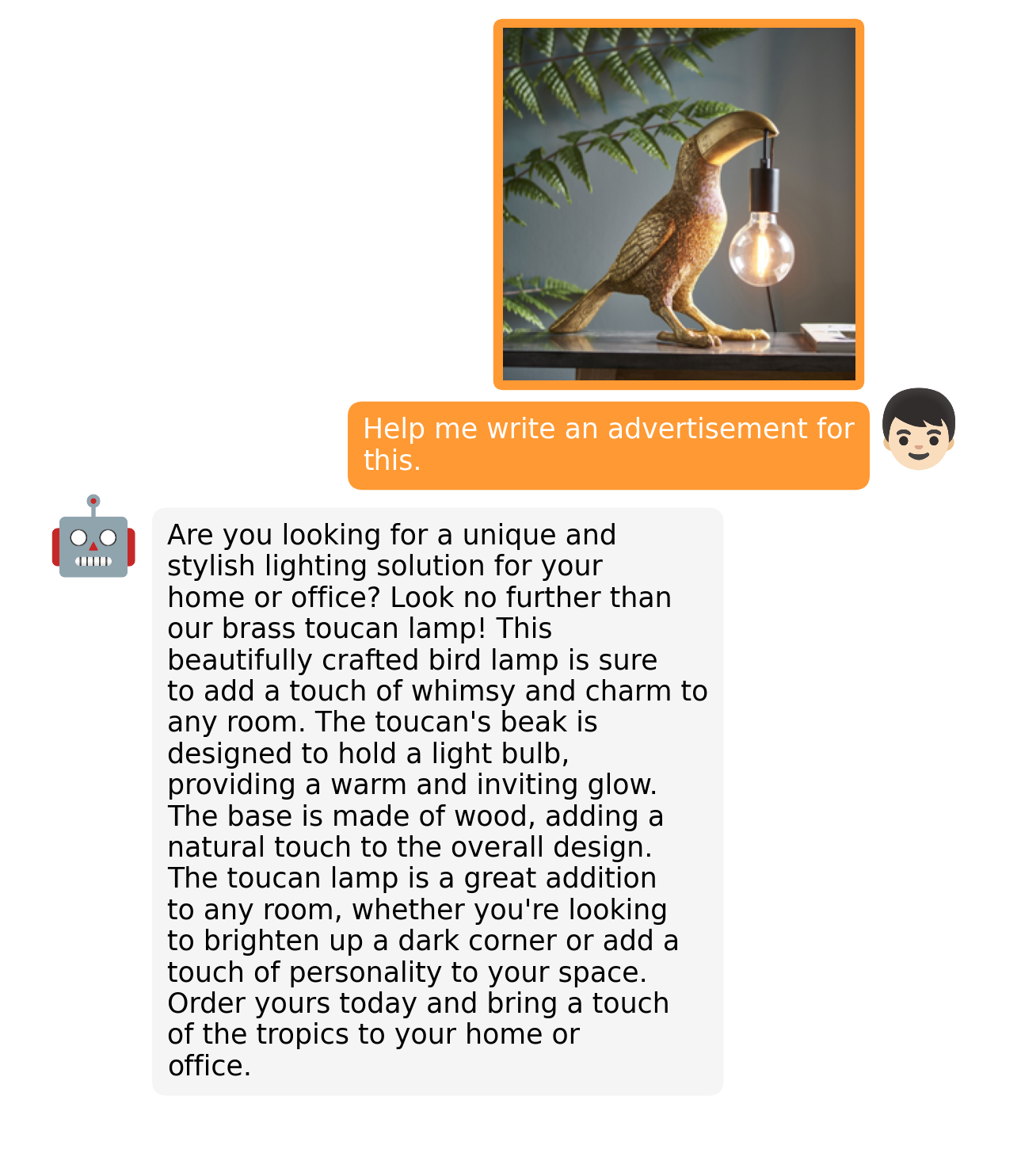

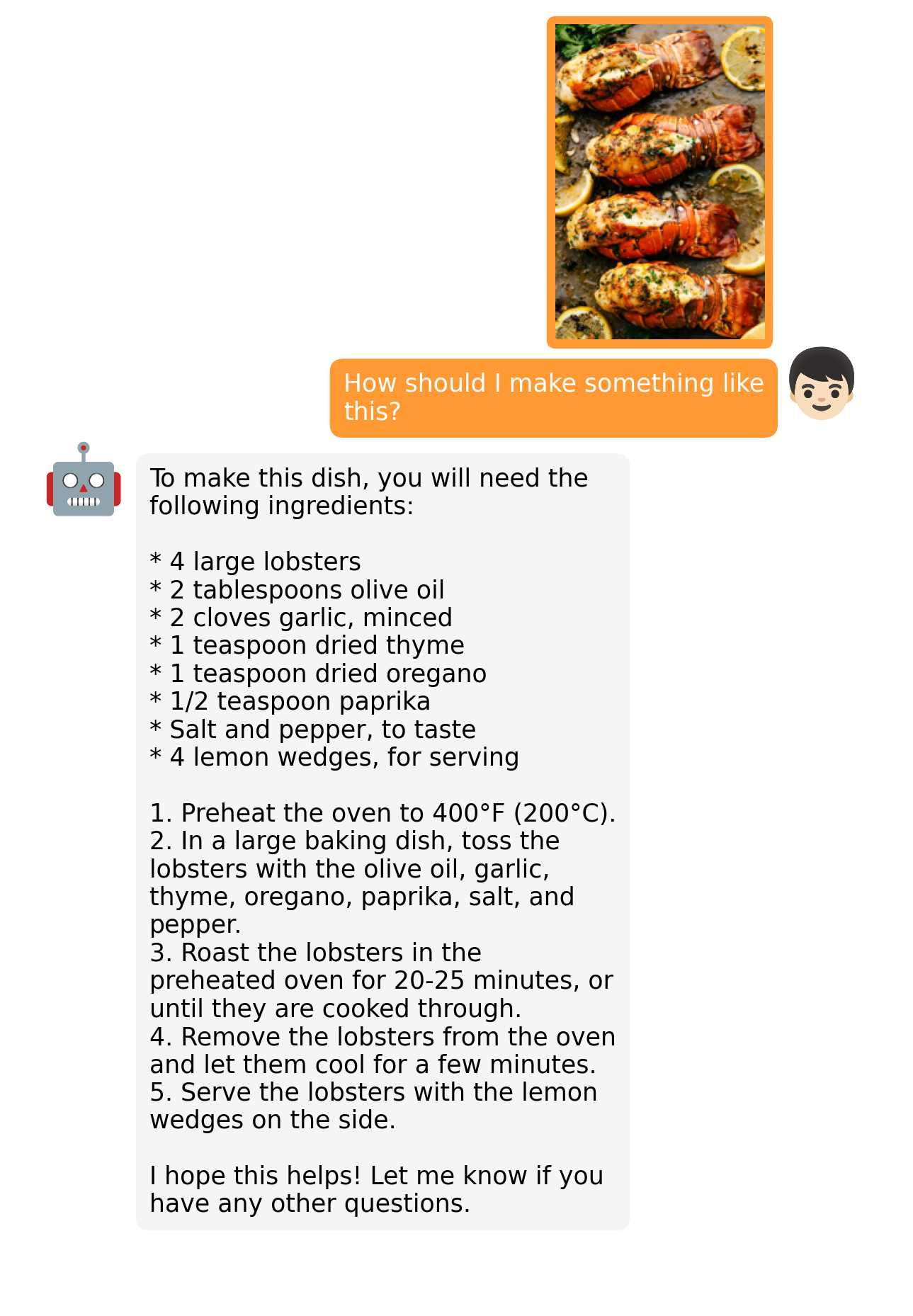

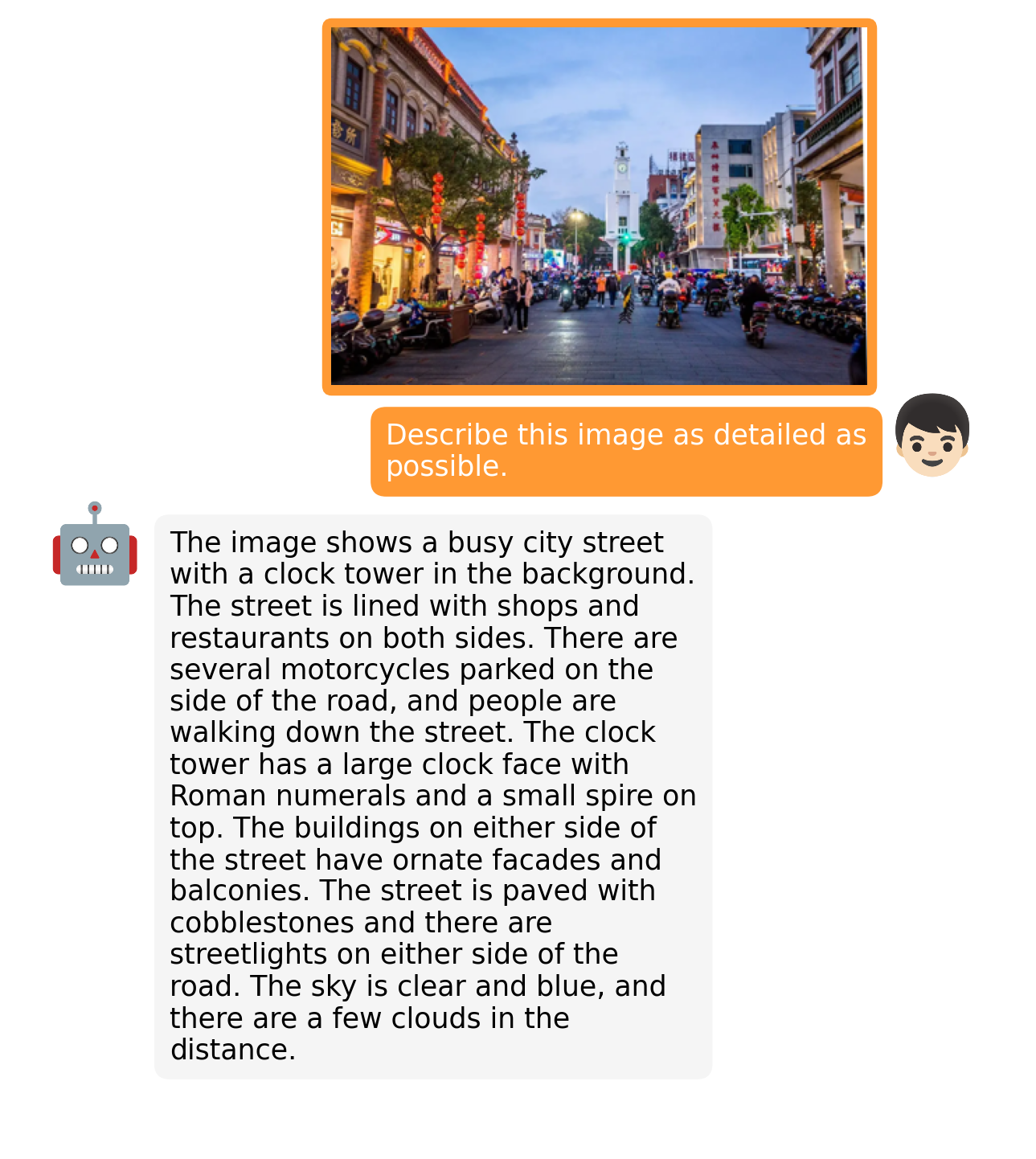

| | |

|

| 17 |

+

:-------------------------:|:-------------------------:

|

| 18 |

+

|

|

| 19 |

+

|

|

| 20 |

+

|

| 21 |

+

More examples can be found in the [project page](https://minigpt-4.github.io).

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

## Introduction

|

| 26 |

+

- MiniGPT-4 aligns a frozen visual encoder from BLIP-2 with a frozen LLM, Vicuna, using just one projection layer.

|

| 27 |

+

- We train MiniGPT-4 with two stages. The first traditional pretraining stage is trained using roughly 5 million aligned image-text pairs in 10 hours using 4 A100s. After the first stage, Vicuna is able to understand the image. But the generation ability of Vicuna is heavilly impacted.

|

| 28 |

+

- To address this issue and improve usability, we propose a novel way to create high-quality image-text pairs by the model itself and ChatGPT together. Based on this, we then create a small (3500 pairs in total) yet high-quality dataset.

|

| 29 |

+

- The second finetuning stage is trained on this dataset in a conversation template to significantly improve its generation reliability and overall usability. To our surprise, this stage is computationally efficient and takes only around 7 minutes with a single A100.

|

| 30 |

+

- MiniGPT-4 yields many emerging vision-language capabilities similar to those demonstrated in GPT-4.

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

## Getting Started

|

| 37 |

+

### Installation

|

| 38 |

+

|

| 39 |

+

**1. Prepare the code and the environment**

|

| 40 |

+

|

| 41 |

+

Git clone our repository, creating a python environment and ativate it via the following command

|

| 42 |

+

|

| 43 |

+

```bash

|

| 44 |

+

git clone https://github.com/Vision-CAIR/MiniGPT-4.git

|

| 45 |

+

cd MiniGPT-4

|

| 46 |

+

conda env create -f environment.yml

|

| 47 |

+

conda activate minigpt4

|

| 48 |

+

```

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

**2. Prepare the pretrained Vicuna weights**

|

| 52 |

+

|

| 53 |

+

The current version of MiniGPT-4 is built on the v0 versoin of Vicuna-13B.

|

| 54 |

+

Please refer to our instruction [here](PrepareVicuna.md)

|

| 55 |

+

to prepare the Vicuna weights.

|

| 56 |

+

The final weights would be in a single folder with the following structure:

|

| 57 |

+

|

| 58 |

+

```

|

| 59 |

+

vicuna_weights

|

| 60 |

+

├── config.json

|

| 61 |

+

├── generation_config.json

|

| 62 |

+

├── pytorch_model.bin.index.json

|

| 63 |

+

├── pytorch_model-00001-of-00003.bin

|

| 64 |

+

...

|

| 65 |

+

```

|

| 66 |

+

|

| 67 |

+

Then, set the path to the vicuna weight in the model config file

|

| 68 |

+

[here](minigpt4/configs/models/minigpt4.yaml#L16) at Line 16.

|

| 69 |

+

|

| 70 |

+

**3. Prepare the pretrained MiniGPT-4 checkpoint**

|

| 71 |

+

|

| 72 |

+

To play with our pretrained model, download the pretrained checkpoint

|

| 73 |

+

[here](https://drive.google.com/file/d/1a4zLvaiDBr-36pasffmgpvH5P7CKmpze/view?usp=share_link).

|

| 74 |

+

Then, set the path to the pretrained checkpoint in the evaluation config file

|

| 75 |

+

in [eval_configs/minigpt4_eval.yaml](eval_configs/minigpt4_eval.yaml#L10) at Line 11.

|

| 76 |

+

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

### Launching Demo Locally

|

| 80 |

+

|

| 81 |

+

Try out our demo [demo.py](demo.py) on your local machine by running

|

| 82 |

+

|

| 83 |

+

```

|

| 84 |

+

python demo.py --cfg-path eval_configs/minigpt4_eval.yaml

|

| 85 |

+

```

|

| 86 |

+

|

| 87 |

+

Here, we load Vicuna as 8 bit by default to save some GPU memory usage.

|

| 88 |

+

Besides, the default beam search width is 1.

|

| 89 |

+

Under this setting, the demo cost about 23G GPU memory.

|

| 90 |

+

If you have a more powerful GPU with larger GPU memory, you can run the model

|

| 91 |

+

in 16 bit by setting low_resource to False in the config file

|

| 92 |

+

[minigpt4_eval.yaml](eval_configs/minigpt4_eval.yaml) and use a larger beam search width.

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

### Training

|

| 96 |

+

The training of MiniGPT-4 contains two alignment stages.

|

| 97 |

+

|

| 98 |

+

**1. First pretraining stage**

|

| 99 |

+

|

| 100 |

+

In the first pretrained stage, the model is trained using image-text pairs from Laion and CC datasets

|

| 101 |

+

to align the vision and language model. To download and prepare the datasets, please check

|

| 102 |

+

our [first stage dataset preparation instruction](dataset/README_1_STAGE.md).

|

| 103 |

+

After the first stage, the visual features are mapped and can be understood by the language

|

| 104 |

+

model.

|

| 105 |

+

To launch the first stage training, run the following command. In our experiments, we use 4 A100.

|

| 106 |

+

You can change the save path in the config file

|

| 107 |

+

[train_configs/minigpt4_stage1_pretrain.yaml](train_configs/minigpt4_stage1_pretrain.yaml)

|

| 108 |

+

|

| 109 |

+

```bash

|

| 110 |

+

torchrun --nproc-per-node NUM_GPU train.py --cfg-path train_configs/minigpt4_stage1_pretrain.yaml

|

| 111 |

+

```

|

| 112 |

+

|

| 113 |

+

**2. Second finetuning stage**

|

| 114 |

+

|

| 115 |

+

In the second stage, we use a small high quality image-text pair dataset created by ourselves

|

| 116 |

+

and convert it to a conversation format to further align MiniGPT-4.

|

| 117 |

+

To download and prepare our second stage dataset, please check our

|

| 118 |

+

[second stage dataset preparation instruction](dataset/README_2_STAGE.md).

|

| 119 |

+

To launch the second stage alignment,

|

| 120 |

+

first specify the path to the checkpoint file trained in stage 1 in

|

| 121 |

+

[train_configs/minigpt4_stage1_pretrain.yaml](train_configs/minigpt4_stage2_finetune.yaml).

|

| 122 |

+

You can also specify the output path there.

|

| 123 |

+

Then, run the following command. In our experiments, we use 1 A100.

|

| 124 |

+

|

| 125 |

+

```bash

|

| 126 |

+

torchrun --nproc-per-node NUM_GPU train.py --cfg-path train_configs/minigpt4_stage2_finetune.yaml

|

| 127 |

+

```

|

| 128 |

+

|

| 129 |

+

After the second stage alignment, MiniGPT-4 is able to talk about the image coherently and user-friendly.

|

| 130 |

+

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

## Acknowledgement

|

| 135 |

+

|

| 136 |

+

+ [BLIP2](https://huggingface.co/docs/transformers/main/model_doc/blip-2) The model architecture of MiniGPT-4 follows BLIP-2. Don't forget to check this great open-source work if you don't know it before!

|

| 137 |

+

+ [Lavis](https://github.com/salesforce/LAVIS) This repository is built upon Lavis!

|

| 138 |

+

+ [Vicuna](https://github.com/lm-sys/FastChat) The fantastic language ability of Vicuna with only 13B parameters is just amazing. And it is open-source!

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

If you're using MiniGPT-4 in your research or applications, please cite using this BibTeX:

|

| 142 |

+

```bibtex

|

| 143 |

+

@misc{zhu2022minigpt4,

|

| 144 |

+

title={MiniGPT-4: Enhancing Vision-language Understanding with Advanced Large Language Models},

|

| 145 |

+

author={Deyao Zhu and Jun Chen and Xiaoqian Shen and xiang Li and Mohamed Elhoseiny},

|

| 146 |

+

year={2023},

|

| 147 |

+

}

|

| 148 |

+

```

|

| 149 |

+

|

| 150 |

+

|

| 151 |

+

## License

|

| 152 |

+

This repository is under [BSD 3-Clause License](LICENSE.md).

|

| 153 |

+

Many codes are based on [Lavis](https://github.com/salesforce/LAVIS) with

|

| 154 |

+

BSD 3-Clause License [here](LICENSE_Lavis.md).

|

convert_llama_weights_to_hf.py

ADDED

|

@@ -0,0 +1,278 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright 2022 EleutherAI and The HuggingFace Inc. team. All rights reserved.

|

| 2 |

+

#

|

| 3 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 4 |

+

# you may not use this file except in compliance with the License.

|

| 5 |

+

# You may obtain a copy of the License at

|

| 6 |

+

#

|

| 7 |

+

# http://www.apache.org/licenses/LICENSE-2.0

|

| 8 |

+

#

|

| 9 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 10 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 11 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 12 |

+

# See the License for the specific language governing permissions and

|

| 13 |

+

# limitations under the License.

|

| 14 |

+

import argparse

|

| 15 |

+

import gc

|

| 16 |

+

import json

|

| 17 |

+

import math

|

| 18 |

+

import os

|

| 19 |

+

import shutil

|

| 20 |

+

import warnings

|

| 21 |

+

|

| 22 |

+

import torch

|

| 23 |

+

|

| 24 |

+

from transformers import LlamaConfig, LlamaForCausalLM, LlamaTokenizer

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

try:

|

| 28 |

+

from transformers import LlamaTokenizerFast

|

| 29 |

+

except ImportError as e:

|

| 30 |

+

warnings.warn(e)

|

| 31 |

+

warnings.warn(

|

| 32 |

+

"The converted tokenizer will be the `slow` tokenizer. To use the fast, update your `tokenizers` library and re-run the tokenizer conversion"

|

| 33 |

+

)

|

| 34 |

+

LlamaTokenizerFast = None

|

| 35 |

+

|

| 36 |

+

"""

|

| 37 |

+

Sample usage:

|

| 38 |

+

|

| 39 |

+

```

|

| 40 |

+

python src/transformers/models/llama/convert_llama_weights_to_hf.py \

|

| 41 |

+

--input_dir /path/to/downloaded/llama/weights --model_size 7B --output_dir /output/path

|

| 42 |

+

```

|

| 43 |

+

|

| 44 |

+

Thereafter, models can be loaded via:

|

| 45 |

+

|

| 46 |

+

```py

|

| 47 |

+

from transformers import LlamaForCausalLM, LlamaTokenizer

|

| 48 |

+

|

| 49 |

+

model = LlamaForCausalLM.from_pretrained("/output/path")

|

| 50 |

+

tokenizer = LlamaTokenizer.from_pretrained("/output/path")

|

| 51 |

+

```

|

| 52 |

+

|

| 53 |

+

Important note: you need to be able to host the whole model in RAM to execute this script (even if the biggest versions

|

| 54 |

+

come in several checkpoints they each contain a part of each weight of the model, so we need to load them all in RAM).

|

| 55 |

+

"""

|

| 56 |

+

|

| 57 |

+

INTERMEDIATE_SIZE_MAP = {

|

| 58 |

+

"7B": 11008,

|

| 59 |

+

"13B": 13824,

|

| 60 |

+

"30B": 17920,

|

| 61 |

+

"65B": 22016,

|

| 62 |

+

}

|

| 63 |

+

NUM_SHARDS = {

|

| 64 |

+

"7B": 1,

|

| 65 |

+

"13B": 2,

|

| 66 |

+

"30B": 4,

|

| 67 |

+

"65B": 8,

|

| 68 |

+

}

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

def compute_intermediate_size(n):

|

| 72 |

+

return int(math.ceil(n * 8 / 3) + 255) // 256 * 256

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

def read_json(path):

|

| 76 |

+

with open(path, "r") as f:

|

| 77 |

+

return json.load(f)

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

def write_json(text, path):

|

| 81 |

+

with open(path, "w") as f:

|

| 82 |

+

json.dump(text, f)

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

def write_model(model_path, input_base_path, model_size):

|

| 86 |

+

os.makedirs(model_path, exist_ok=True)

|

| 87 |

+

tmp_model_path = os.path.join(model_path, "tmp")

|

| 88 |

+

os.makedirs(tmp_model_path, exist_ok=True)

|

| 89 |

+

|

| 90 |

+

params = read_json(os.path.join(input_base_path, "params.json"))

|

| 91 |

+

num_shards = NUM_SHARDS[model_size]

|

| 92 |

+

n_layers = params["n_layers"]

|

| 93 |

+

n_heads = params["n_heads"]

|

| 94 |

+

n_heads_per_shard = n_heads // num_shards

|

| 95 |

+

dim = params["dim"]

|

| 96 |

+

dims_per_head = dim // n_heads

|

| 97 |

+

base = 10000.0

|

| 98 |

+

inv_freq = 1.0 / (base ** (torch.arange(0, dims_per_head, 2).float() / dims_per_head))

|

| 99 |

+

|

| 100 |

+

# permute for sliced rotary

|

| 101 |

+

def permute(w):

|

| 102 |

+

return w.view(n_heads, dim // n_heads // 2, 2, dim).transpose(1, 2).reshape(dim, dim)

|

| 103 |

+

|

| 104 |

+

print(f"Fetching all parameters from the checkpoint at {input_base_path}.")

|

| 105 |

+

# Load weights

|

| 106 |

+

if model_size == "7B":

|

| 107 |

+

# Not sharded

|

| 108 |

+

# (The sharded implementation would also work, but this is simpler.)

|

| 109 |

+

loaded = torch.load(os.path.join(input_base_path, "consolidated.00.pth"), map_location="cpu")

|

| 110 |

+

else:

|

| 111 |

+

# Sharded

|

| 112 |

+

loaded = [

|

| 113 |

+

torch.load(os.path.join(input_base_path, f"consolidated.{i:02d}.pth"), map_location="cpu")

|

| 114 |

+

for i in range(num_shards)

|

| 115 |

+

]

|

| 116 |

+

param_count = 0

|

| 117 |

+

index_dict = {"weight_map": {}}

|

| 118 |

+

for layer_i in range(n_layers):

|

| 119 |

+

filename = f"pytorch_model-{layer_i + 1}-of-{n_layers + 1}.bin"

|

| 120 |

+

if model_size == "7B":

|

| 121 |

+

# Unsharded

|

| 122 |

+

state_dict = {

|

| 123 |

+

f"model.layers.{layer_i}.self_attn.q_proj.weight": permute(

|

| 124 |

+

loaded[f"layers.{layer_i}.attention.wq.weight"]

|

| 125 |

+

),

|

| 126 |

+

f"model.layers.{layer_i}.self_attn.k_proj.weight": permute(

|

| 127 |

+

loaded[f"layers.{layer_i}.attention.wk.weight"]

|

| 128 |

+

),

|

| 129 |

+

f"model.layers.{layer_i}.self_attn.v_proj.weight": loaded[f"layers.{layer_i}.attention.wv.weight"],

|

| 130 |

+

f"model.layers.{layer_i}.self_attn.o_proj.weight": loaded[f"layers.{layer_i}.attention.wo.weight"],

|

| 131 |

+

f"model.layers.{layer_i}.mlp.gate_proj.weight": loaded[f"layers.{layer_i}.feed_forward.w1.weight"],

|

| 132 |

+

f"model.layers.{layer_i}.mlp.down_proj.weight": loaded[f"layers.{layer_i}.feed_forward.w2.weight"],

|

| 133 |

+

f"model.layers.{layer_i}.mlp.up_proj.weight": loaded[f"layers.{layer_i}.feed_forward.w3.weight"],

|

| 134 |

+

f"model.layers.{layer_i}.input_layernorm.weight": loaded[f"layers.{layer_i}.attention_norm.weight"],

|

| 135 |

+

f"model.layers.{layer_i}.post_attention_layernorm.weight": loaded[f"layers.{layer_i}.ffn_norm.weight"],

|

| 136 |

+

}

|

| 137 |

+

else:

|

| 138 |

+

# Sharded

|

| 139 |

+

# Note that in the 13B checkpoint, not cloning the two following weights will result in the checkpoint

|

| 140 |

+

# becoming 37GB instead of 26GB for some reason.

|

| 141 |

+

state_dict = {

|

| 142 |

+

f"model.layers.{layer_i}.input_layernorm.weight": loaded[0][

|

| 143 |

+

f"layers.{layer_i}.attention_norm.weight"

|

| 144 |

+

].clone(),

|

| 145 |

+

f"model.layers.{layer_i}.post_attention_layernorm.weight": loaded[0][

|

| 146 |

+

f"layers.{layer_i}.ffn_norm.weight"

|

| 147 |

+

].clone(),

|

| 148 |

+

}

|

| 149 |

+

state_dict[f"model.layers.{layer_i}.self_attn.q_proj.weight"] = permute(

|

| 150 |

+

torch.cat(

|

| 151 |

+

[

|

| 152 |

+

loaded[i][f"layers.{layer_i}.attention.wq.weight"].view(n_heads_per_shard, dims_per_head, dim)

|

| 153 |

+

for i in range(num_shards)

|

| 154 |

+

],

|

| 155 |

+

dim=0,

|

| 156 |

+

).reshape(dim, dim)

|

| 157 |

+

)

|

| 158 |

+

state_dict[f"model.layers.{layer_i}.self_attn.k_proj.weight"] = permute(

|

| 159 |

+

torch.cat(

|

| 160 |

+

[

|

| 161 |

+

loaded[i][f"layers.{layer_i}.attention.wk.weight"].view(n_heads_per_shard, dims_per_head, dim)

|

| 162 |

+

for i in range(num_shards)

|

| 163 |

+

],

|

| 164 |

+

dim=0,

|

| 165 |

+

).reshape(dim, dim)

|

| 166 |

+

)

|

| 167 |

+

state_dict[f"model.layers.{layer_i}.self_attn.v_proj.weight"] = torch.cat(

|

| 168 |

+

[

|

| 169 |

+

loaded[i][f"layers.{layer_i}.attention.wv.weight"].view(n_heads_per_shard, dims_per_head, dim)

|

| 170 |

+

for i in range(num_shards)

|

| 171 |

+

],

|

| 172 |

+

dim=0,

|

| 173 |

+

).reshape(dim, dim)

|

| 174 |

+

|

| 175 |

+

state_dict[f"model.layers.{layer_i}.self_attn.o_proj.weight"] = torch.cat(

|

| 176 |

+

[loaded[i][f"layers.{layer_i}.attention.wo.weight"] for i in range(num_shards)], dim=1

|

| 177 |

+

)

|

| 178 |

+

state_dict[f"model.layers.{layer_i}.mlp.gate_proj.weight"] = torch.cat(

|

| 179 |

+

[loaded[i][f"layers.{layer_i}.feed_forward.w1.weight"] for i in range(num_shards)], dim=0

|

| 180 |

+

)

|

| 181 |

+

state_dict[f"model.layers.{layer_i}.mlp.down_proj.weight"] = torch.cat(

|

| 182 |

+

[loaded[i][f"layers.{layer_i}.feed_forward.w2.weight"] for i in range(num_shards)], dim=1

|

| 183 |

+

)

|

| 184 |

+

state_dict[f"model.layers.{layer_i}.mlp.up_proj.weight"] = torch.cat(

|

| 185 |

+

[loaded[i][f"layers.{layer_i}.feed_forward.w3.weight"] for i in range(num_shards)], dim=0

|

| 186 |

+

)

|

| 187 |

+

|

| 188 |

+

state_dict[f"model.layers.{layer_i}.self_attn.rotary_emb.inv_freq"] = inv_freq

|

| 189 |

+

for k, v in state_dict.items():

|

| 190 |

+

index_dict["weight_map"][k] = filename

|

| 191 |

+

param_count += v.numel()

|

| 192 |

+

torch.save(state_dict, os.path.join(tmp_model_path, filename))

|

| 193 |

+

|

| 194 |

+

filename = f"pytorch_model-{n_layers + 1}-of-{n_layers + 1}.bin"

|

| 195 |

+

if model_size == "7B":

|

| 196 |

+

# Unsharded

|

| 197 |

+

state_dict = {

|

| 198 |

+

"model.embed_tokens.weight": loaded["tok_embeddings.weight"],

|

| 199 |

+

"model.norm.weight": loaded["norm.weight"],

|

| 200 |

+

"lm_head.weight": loaded["output.weight"],

|

| 201 |

+

}

|

| 202 |

+

else:

|

| 203 |

+

state_dict = {

|

| 204 |

+

"model.norm.weight": loaded[0]["norm.weight"],

|

| 205 |

+

"model.embed_tokens.weight": torch.cat(

|

| 206 |

+

[loaded[i]["tok_embeddings.weight"] for i in range(num_shards)], dim=1

|

| 207 |

+

),

|

| 208 |

+

"lm_head.weight": torch.cat([loaded[i]["output.weight"] for i in range(num_shards)], dim=0),

|

| 209 |

+

}

|

| 210 |

+

|

| 211 |

+

for k, v in state_dict.items():

|

| 212 |

+

index_dict["weight_map"][k] = filename

|

| 213 |

+

param_count += v.numel()

|

| 214 |

+

torch.save(state_dict, os.path.join(tmp_model_path, filename))

|

| 215 |

+

|

| 216 |

+

# Write configs

|

| 217 |

+

index_dict["metadata"] = {"total_size": param_count * 2}

|

| 218 |

+

write_json(index_dict, os.path.join(tmp_model_path, "pytorch_model.bin.index.json"))

|

| 219 |

+

|

| 220 |

+

config = LlamaConfig(

|

| 221 |

+

hidden_size=dim,

|

| 222 |

+

intermediate_size=compute_intermediate_size(dim),

|

| 223 |

+

num_attention_heads=params["n_heads"],

|

| 224 |

+

num_hidden_layers=params["n_layers"],

|

| 225 |

+

rms_norm_eps=params["norm_eps"],

|

| 226 |

+

)

|

| 227 |

+

config.save_pretrained(tmp_model_path)

|

| 228 |

+

|

| 229 |

+

# Make space so we can load the model properly now.

|

| 230 |

+

del state_dict

|

| 231 |

+

del loaded

|

| 232 |

+

gc.collect()

|

| 233 |

+

|

| 234 |

+

print("Loading the checkpoint in a Llama model.")

|

| 235 |

+

model = LlamaForCausalLM.from_pretrained(tmp_model_path, torch_dtype=torch.float16, low_cpu_mem_usage=True)

|

| 236 |

+

# Avoid saving this as part of the config.

|

| 237 |

+

del model.config._name_or_path

|

| 238 |

+

|

| 239 |

+

print("Saving in the Transformers format.")

|

| 240 |

+

model.save_pretrained(model_path)

|

| 241 |

+

shutil.rmtree(tmp_model_path)

|

| 242 |

+

|

| 243 |

+

|

| 244 |

+

def write_tokenizer(tokenizer_path, input_tokenizer_path):

|

| 245 |

+

# Initialize the tokenizer based on the `spm` model

|

| 246 |

+

tokenizer_class = LlamaTokenizer if LlamaTokenizerFast is None else LlamaTokenizerFast

|

| 247 |

+

print(f"Saving a {tokenizer_class.__name__} to {tokenizer_path}.")

|

| 248 |

+

tokenizer = tokenizer_class(input_tokenizer_path)

|

| 249 |

+

tokenizer.save_pretrained(tokenizer_path)

|

| 250 |

+

|

| 251 |

+

|

| 252 |

+

def main():

|

| 253 |

+

parser = argparse.ArgumentParser()

|

| 254 |

+

parser.add_argument(

|

| 255 |

+

"--input_dir",

|

| 256 |

+

help="Location of LLaMA weights, which contains tokenizer.model and model folders",

|

| 257 |

+

)

|

| 258 |

+

parser.add_argument(

|

| 259 |

+

"--model_size",

|

| 260 |

+

choices=["7B", "13B", "30B", "65B", "tokenizer_only"],

|

| 261 |

+

)

|

| 262 |

+

parser.add_argument(

|

| 263 |

+

"--output_dir",

|

| 264 |

+

help="Location to write HF model and tokenizer",

|

| 265 |

+

)

|

| 266 |

+

args = parser.parse_args()

|

| 267 |

+

if args.model_size != "tokenizer_only":

|

| 268 |

+

write_model(

|

| 269 |

+

model_path=args.output_dir,

|

| 270 |

+

input_base_path=os.path.join(args.input_dir, args.model_size),

|

| 271 |

+

model_size=args.model_size,

|

| 272 |

+

)

|

| 273 |

+

spm_path = os.path.join(args.input_dir, "tokenizer.model")

|

| 274 |

+

write_tokenizer(args.output_dir, spm_path)

|

| 275 |

+

|

| 276 |

+

|

| 277 |

+

if __name__ == "__main__":

|

| 278 |

+

main()

|

dataset/README_1_STAGE.md

ADDED

|

@@ -0,0 +1,96 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## Download the filtered Conceptual Captions, SBU, LAION datasets

|

| 2 |

+

|

| 3 |

+

### Pre-training datasets download:

|

| 4 |

+

We use the filtered synthetic captions prepared by BLIP. For more details about the dataset, please refer to [BLIP](https://github.com/salesforce/BLIP).

|

| 5 |

+

|

| 6 |

+

It requires ~2.3T to store LAION and CC3M+CC12M+SBU datasets

|

| 7 |

+

|

| 8 |

+

Image source | Filtered synthetic caption by ViT-L

|

| 9 |

+

--- | :---:

|

| 10 |

+

CC3M+CC12M+SBU | <a href="https://storage.googleapis.com/sfr-vision-language-research/BLIP/datasets/ccs_synthetic_filtered_large.json">Download</a>

|

| 11 |

+

LAION115M | <a href="https://storage.googleapis.com/sfr-vision-language-research/BLIP/datasets/laion_synthetic_filtered_large.json">Download</a>

|

| 12 |

+

|

| 13 |

+

This will download two json files

|

| 14 |

+

```

|

| 15 |

+

ccs_synthetic_filtered_large.json

|

| 16 |

+

laion_synthetic_filtered_large.json

|

| 17 |

+

```

|

| 18 |

+

|

| 19 |

+

## prepare the data step-by-step

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

### setup the dataset folder and move the annotation file to the data storage folder

|

| 23 |

+

```

|

| 24 |

+

export MINIGPT4_DATASET=/YOUR/PATH/FOR/LARGE/DATASET/

|

| 25 |

+

mkdir ${MINIGPT4_DATASET}/cc_sbu

|

| 26 |

+

mkdir ${MINIGPT4_DATASET}/laion

|

| 27 |

+

mv ccs_synthetic_filtered_large.json ${MINIGPT4_DATASET}/cc_sbu

|

| 28 |

+

mv laion_synthetic_filtered_large.json ${MINIGPT4_DATASET}/laion

|

| 29 |

+

```

|

| 30 |

+

|

| 31 |

+

### Convert the scripts to data storate folder

|

| 32 |

+

```

|

| 33 |

+

cp convert_cc_sbu.py ${MINIGPT4_DATASET}/cc_sbu

|

| 34 |

+

cp download_cc_sbu.sh ${MINIGPT4_DATASET}/cc_sbu

|

| 35 |

+

cp convert_laion.py ${MINIGPT4_DATASET}/laion

|

| 36 |

+

cp download_laion.sh ${MINIGPT4_DATASET}/laion

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

### Convert the laion and cc_sbu annotation file format to be img2dataset format

|

| 41 |

+

```

|

| 42 |

+

cd ${MINIGPT4_DATASET}/cc_sbu

|

| 43 |

+

python convert_cc_sbu.py

|

| 44 |

+

|

| 45 |

+

cd ${MINIGPT4_DATASET}/laion

|

| 46 |

+

python convert_laion.py

|

| 47 |

+

```

|

| 48 |

+

|

| 49 |

+

### Download the datasets with img2dataset

|

| 50 |

+

```

|

| 51 |

+

cd ${MINIGPT4_DATASET}/cc_sbu

|

| 52 |

+

sh download_cc_sbu.sh

|

| 53 |

+

cd ${MINIGPT4_DATASET}/laion

|

| 54 |

+

sh download_laion.sh

|

| 55 |

+

```

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

The final dataset structure

|

| 59 |

+

|

| 60 |

+

```

|

| 61 |

+

.

|

| 62 |

+

├── ${MINIGPT4_DATASET}

|

| 63 |

+

│ ├── cc_sbu

|

| 64 |

+

│ ├── convert_cc_sbu.py

|

| 65 |

+

│ ├── download_cc_sbu.sh

|

| 66 |

+

│ ├── ccs_synthetic_filtered_large.json

|

| 67 |

+

│ ├── ccs_synthetic_filtered_large.tsv

|

| 68 |

+

│ └── cc_sbu_dataset

|

| 69 |

+

│ ├── 00000.tar

|

| 70 |

+

│ ├── 00000.parquet

|

| 71 |

+

│ ...

|

| 72 |

+

│ ├── laion

|

| 73 |

+

│ ├── convert_laion.py

|

| 74 |

+

│ ├── download_laion.sh

|

| 75 |

+

│ ├── laion_synthetic_filtered_large.json

|

| 76 |

+

│ ├── laion_synthetic_filtered_large.tsv

|

| 77 |

+

│ └── laion_dataset

|

| 78 |

+

│ ├── 00000.tar

|

| 79 |

+

│ ├── 00000.parquet

|

| 80 |

+

│ ...

|

| 81 |

+

...

|

| 82 |

+

```

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

## Set up the dataset configuration files

|

| 86 |

+

|

| 87 |

+

Then, set up the LAION dataset loading path in

|

| 88 |

+

[here](../minigpt4/configs/datasets/laion/defaults.yaml#L5) at Line 5 as

|

| 89 |

+

${MINIGPT4_DATASET}/laion/laion_dataset/{00000..10488}.tar

|

| 90 |

+

|

| 91 |

+

and the Conceptual Captoin and SBU datasets loading path in

|

| 92 |

+

[here](../minigpt4/configs/datasets/cc_sbu/defaults.yaml#L5) at Line 5 as

|

| 93 |

+

${MINIGPT4_DATASET}/cc_sbu/cc_sbu_dataset/{00000..01255}.tar

|

| 94 |

+

|

| 95 |

+

|

| 96 |

+

|

dataset/README_2_STAGE.md

ADDED

|

@@ -0,0 +1,19 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## Second Stage Data Preparation

|

| 2 |

+

|

| 3 |

+

Our second stage dataset can be downloaded from

|

| 4 |

+

[here](https://drive.google.com/file/d/1nJXhoEcy3KTExr17I7BXqY5Y9Lx_-n-9/view?usp=share_link)

|

| 5 |

+

After extraction, you will get a data follder with the following structure:

|

| 6 |

+

|

| 7 |

+

```

|

| 8 |

+

cc_sbu_align

|

| 9 |

+

├── filter_cap.json

|

| 10 |

+

└── image

|

| 11 |

+

├── 2.jpg

|

| 12 |

+

├── 3.jpg

|

| 13 |

+

...

|

| 14 |

+

```

|

| 15 |

+

|

| 16 |

+

Put the folder to any path you want.

|

| 17 |

+

Then, set up the dataset path in the dataset config file

|

| 18 |

+

[here](../minigpt4/configs/datasets/cc_sbu/align.yaml#L5) at Line 5.

|

| 19 |

+

|

dataset/convert_cc_sbu.py

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

+

import csv

|

| 3 |

+

|

| 4 |

+

# specify input and output file paths

|

| 5 |

+

input_file = 'ccs_synthetic_filtered_large.json'

|

| 6 |

+

output_file = 'ccs_synthetic_filtered_large.tsv'

|

| 7 |

+

|

| 8 |

+

# load JSON data from input file

|

| 9 |

+

with open(input_file, 'r') as f:

|

| 10 |

+

data = json.load(f)

|

| 11 |

+

|

| 12 |

+

# extract header and data from JSON

|

| 13 |

+

header = data[0].keys()

|

| 14 |

+

rows = [x.values() for x in data]

|

| 15 |

+

|

| 16 |

+

# write data to TSV file

|

| 17 |

+

with open(output_file, 'w') as f:

|

| 18 |

+

writer = csv.writer(f, delimiter='\t')

|

| 19 |

+

writer.writerow(header)

|

| 20 |

+

writer.writerows(rows)

|

dataset/convert_laion.py

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

+

import csv

|

| 3 |

+

|

| 4 |

+

# specify input and output file paths

|

| 5 |

+

input_file = 'laion_synthetic_filtered_large.json'

|

| 6 |

+

output_file = 'laion_synthetic_filtered_large.tsv'

|

| 7 |

+

|

| 8 |

+

# load JSON data from input file

|

| 9 |

+

with open(input_file, 'r') as f:

|

| 10 |

+

data = json.load(f)

|

| 11 |

+

|

| 12 |

+

# extract header and data from JSON

|

| 13 |

+

header = data[0].keys()

|

| 14 |

+

rows = [x.values() for x in data]

|

| 15 |

+

|

| 16 |

+

# write data to TSV file

|

| 17 |

+

with open(output_file, 'w') as f:

|

| 18 |

+

writer = csv.writer(f, delimiter='\t')

|

| 19 |

+

writer.writerow(header)

|

| 20 |

+

writer.writerows(rows)

|

dataset/download_cc_sbu.sh

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

|

| 3 |

+

img2dataset --url_list ccs_synthetic_filtered_large.tsv --input_format "tsv"\

|

| 4 |

+

--url_col "url" --caption_col "caption" --output_format webdataset\

|

| 5 |

+

--output_folder cc_sbu_dataset --processes_count 16 --thread_count 128 --image_size 256 \

|

| 6 |

+

--enable_wandb True

|

dataset/download_laion.sh

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

|

| 3 |

+

img2dataset --url_list laion_synthetic_filtered_large.tsv --input_format "tsv"\

|

| 4 |

+

--url_col "url" --caption_col "caption" --output_format webdataset\

|

| 5 |

+

--output_folder laion_dataset --processes_count 16 --thread_count 128 --image_size 256 \

|

| 6 |

+

--enable_wandb True

|

demo.py

ADDED

|

@@ -0,0 +1,150 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import os

|

| 3 |

+

import random

|

| 4 |

+

|

| 5 |

+

import numpy as np

|

| 6 |

+

import torch

|

| 7 |

+

import torch.backends.cudnn as cudnn

|

| 8 |

+

import gradio as gr

|

| 9 |

+

|

| 10 |

+

from minigpt4.common.config import Config

|

| 11 |

+

from minigpt4.common.dist_utils import get_rank

|

| 12 |

+

from minigpt4.common.registry import registry

|

| 13 |

+

from minigpt4.conversation.conversation import Chat, CONV_VISION

|

| 14 |

+

|

| 15 |

+

# imports modules for registration

|

| 16 |

+

from minigpt4.datasets.builders import *

|

| 17 |

+

from minigpt4.models import *

|

| 18 |

+

from minigpt4.processors import *

|

| 19 |

+

from minigpt4.runners import *

|

| 20 |

+

from minigpt4.tasks import *

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

def parse_args():

|

| 24 |

+

parser = argparse.ArgumentParser(description="Demo")

|

| 25 |