Spaces:

Runtime error

Runtime error

Shiaoming

commited on

Commit

·

64abe77

1

Parent(s):

da0591b

release code

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- README.md +111 -2

- alike.py +143 -0

- alnet.py +164 -0

- assets/alike.png +0 -0

- assets/kitti/000100.png +0 -0

- assets/kitti/000101.png +0 -0

- assets/kitti/000102.png +0 -0

- assets/kitti/000103.png +0 -0

- assets/kitti/000104.png +0 -0

- assets/kitti/000105.png +0 -0

- assets/kitti/000106.png +0 -0

- assets/kitti/000107.png +0 -0

- assets/kitti/000108.png +0 -0

- assets/kitti/000109.png +0 -0

- assets/kitti/000110.png +0 -0

- assets/kitti/000111.png +0 -0

- assets/kitti/000112.png +0 -0

- assets/kitti/000113.png +0 -0

- assets/kitti/000114.png +0 -0

- assets/kitti/000115.png +0 -0

- assets/kitti/000116.png +0 -0

- assets/kitti/000117.png +0 -0

- assets/kitti/000118.png +0 -0

- assets/kitti/000119.png +0 -0

- assets/tum/1311868169.163498.png +0 -0

- assets/tum/1311868169.263274.png +0 -0

- assets/tum/1311868169.363470.png +0 -0

- assets/tum/1311868169.463229.png +0 -0

- assets/tum/1311868169.563501.png +0 -0

- assets/tum/1311868169.663240.png +0 -0

- assets/tum/1311868169.763417.png +0 -0

- assets/tum/1311868169.863396.png +0 -0

- assets/tum/1311868169.963415.png +0 -0

- assets/tum/1311868170.063469.png +0 -0

- assets/tum/1311868170.163416.png +0 -0

- assets/tum/1311868170.263521.png +0 -0

- assets/tum/1311868170.363400.png +0 -0

- assets/tum/1311868170.463383.png +0 -0

- assets/tum/1311868170.563345.png +0 -0

- assets/tum/1311868170.663430.png +0 -0

- assets/tum/1311868170.763453.png +0 -0

- assets/tum/1311868170.863446.png +0 -0

- assets/tum/1311868170.963440.png +0 -0

- assets/tum/1311868171.063438.png +0 -0

- demo.py +167 -0

- hseq/cache/alike-l-ms.npy +0 -0

- hseq/cache/alike-l.npy +0 -0

- hseq/cache/alike-n-ms.npy +0 -0

- hseq/cache/alike-n.npy +0 -0

- hseq/cache/aslfeat.npy +0 -0

README.md

CHANGED

|

@@ -1,3 +1,112 @@

|

|

| 1 |

-

# ALIKE

|

| 2 |

|

| 3 |

-

The

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# ALIKE: Accurate and Lightweight Keypoint Detection and Descriptor Extraction

|

| 2 |

|

| 3 |

+

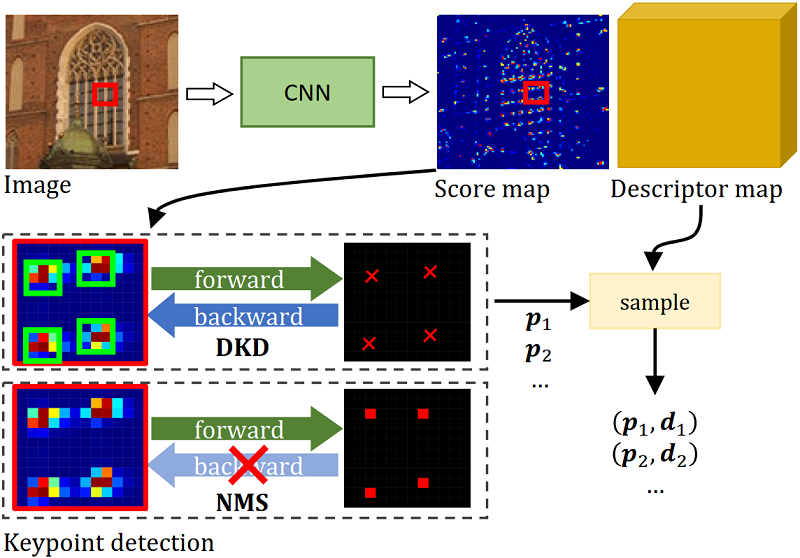

ALIKE applies a differentiable keypoint detection module to detect accurate sub-pixel keypoints. The network can run at 95 frames per second for 640 x 480 images on NVIDIA Titan RTX GPU and achieve equivalent performance with the state-of-the-arts. ALIKE benefits real-time applications in resource-limited platforms/devices. Technical details are described in [this paper](https://arxiv.org/pdf/2112.02906.pdf).

|

| 4 |

+

|

| 5 |

+

> ```

|

| 6 |

+

> Xiaoming Zhao, Xingming Wu, Jinyu Miao, Weihai Chen, Peter C. Y. Chen, Zhengguo Li, "ALIKE: Accurate and Lightweight Keypoint

|

| 7 |

+

> Detection and Descriptor Extraction," IEEE Transactions on Multimedia, 2022.

|

| 8 |

+

> ```

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

If you use ALIKE in an academic work, please cite:

|

| 14 |

+

|

| 15 |

+

```

|

| 16 |

+

@article{Zhao2022ALIKE,

|

| 17 |

+

title={ALIKE: Accurate and Lightweight Keypoint Detection and Descriptor Extraction},

|

| 18 |

+

author={Xiaoming Zhao and Xingming Wu and Jinyu Miao and Weihai Chen and Peter C. Y. Chen and Zhengguo Li},

|

| 19 |

+

journal={IEEE Transactions on Multimedia},

|

| 20 |

+

year={2022}

|

| 21 |

+

}

|

| 22 |

+

```

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

## 1. Prerequisites

|

| 27 |

+

|

| 28 |

+

The required packages are listed in the `requirements.txt` :

|

| 29 |

+

|

| 30 |

+

```shell

|

| 31 |

+

pip install -r requirements.txt

|

| 32 |

+

```

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

## 2. Models

|

| 37 |

+

|

| 38 |

+

The off-the-shelf weights of four variant ALIKE models are provided in `models/` .

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

## 3. Run demo

|

| 43 |

+

|

| 44 |

+

```shell

|

| 45 |

+

$ python demo.py -h

|

| 46 |

+

usage: demo.py [-h] [--model {alike-t,alike-s,alike-n,alike-l}]

|

| 47 |

+

[--device DEVICE] [--top_k TOP_K] [--scores_th SCORES_TH]

|

| 48 |

+

[--n_limit N_LIMIT] [--no_display] [--no_sub_pixel]

|

| 49 |

+

input

|

| 50 |

+

|

| 51 |

+

ALike Demo.

|

| 52 |

+

|

| 53 |

+

positional arguments:

|

| 54 |

+

input Image directory or movie file or "camera0" (for

|

| 55 |

+

webcam0).

|

| 56 |

+

|

| 57 |

+

optional arguments:

|

| 58 |

+

-h, --help show this help message and exit

|

| 59 |

+

--model {alike-t,alike-s,alike-n,alike-l}

|

| 60 |

+

The model configuration

|

| 61 |

+

--device DEVICE Running device (default: cuda).

|

| 62 |

+

--top_k TOP_K Detect top K keypoints. -1 for threshold based mode,

|

| 63 |

+

>0 for top K mode. (default: -1)

|

| 64 |

+

--scores_th SCORES_TH

|

| 65 |

+

Detector score threshold (default: 0.2).

|

| 66 |

+

--n_limit N_LIMIT Maximum number of keypoints to be detected (default:

|

| 67 |

+

5000).

|

| 68 |

+

--no_display Do not display images to screen. Useful if running

|

| 69 |

+

remotely (default: False).

|

| 70 |

+

--no_sub_pixel Do not detect sub-pixel keypoints (default: False).

|

| 71 |

+

```

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

## 4. Examples

|

| 76 |

+

|

| 77 |

+

### KITTI example

|

| 78 |

+

```shell

|

| 79 |

+

python demo.py assets/kitti

|

| 80 |

+

```

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

### TUM example

|

| 84 |

+

```shell

|

| 85 |

+

python demo.py assets/tum

|

| 86 |

+

```

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

## 5. Efficiency and performance

|

| 90 |

+

|

| 91 |

+

| Models | Parameters | GFLOPs(640x480) | MHA@3 on Hpatches | mAA(10°) on [IMW2020-test](https://www.cs.ubc.ca/research/image-matching-challenge/2021/leaderboard) (Stereo) |

|

| 92 |

+

|:---:|:---:|:---:|:-----------------:|:-------------------------------------------------------------------------------------------------------------:|

|

| 93 |

+

| D2-Net(MS) | 7653KB | 889.40 | 38.33% | 12.27% |

|

| 94 |

+

| LF-Net(MS) | 2642KB | 24.37 | 57.78% | 23.44% |

|

| 95 |

+

| SuperPoint | 1301KB | 26.11 | 70.19% | 28.97% |

|

| 96 |

+

| R2D2(MS) | 484KB | 464.55 | 71.48% | 39.02% |

|

| 97 |

+

| ASLFeat(MS) | 823KB | 77.58 | 73.52% | 33.65% |

|

| 98 |

+

| DISK | 1092KB | 98.97 | 70.56% | 51.22% |

|

| 99 |

+

| ALike-N | 318KB | 7.909 | 75.74% | 47.18% |

|

| 100 |

+

| ALike-L | 653KB | 19.685 | 76.85% | 49.58% |

|

| 101 |

+

|

| 102 |

+

### Evaluation on Hpatches

|

| 103 |

+

|

| 104 |

+

- Download [hpatches-sequences-release](https://hpatches.github.io/) and put it into `hseq/hpatches-sequences-release`.

|

| 105 |

+

- Remove the unrelaiable sequences as D2-Net.

|

| 106 |

+

- Run the following command to evaluate the performance:

|

| 107 |

+

```shell

|

| 108 |

+

python hseq/eval.py

|

| 109 |

+

```

|

| 110 |

+

|

| 111 |

+

|

| 112 |

+

For more details, please refer to the [paper](https://arxiv.org/abs/2112.02906).

|

alike.py

ADDED

|

@@ -0,0 +1,143 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import logging

|

| 2 |

+

import os

|

| 3 |

+

import cv2

|

| 4 |

+

import torch

|

| 5 |

+

from copy import deepcopy

|

| 6 |

+

import torch.nn.functional as F

|

| 7 |

+

from torchvision.transforms import ToTensor

|

| 8 |

+

import math

|

| 9 |

+

|

| 10 |

+

from alnet import ALNet

|

| 11 |

+

from soft_detect import DKD

|

| 12 |

+

import time

|

| 13 |

+

|

| 14 |

+

configs = {

|

| 15 |

+

'alike-t': {'c1': 8, 'c2': 16, 'c3': 32, 'c4': 64, 'dim': 64, 'single_head': True, 'radius': 2,

|

| 16 |

+

'model_path': os.path.join(os.path.split(__file__)[0], 'models', 'alike-t.pth')},

|

| 17 |

+

'alike-s': {'c1': 8, 'c2': 16, 'c3': 48, 'c4': 96, 'dim': 96, 'single_head': True, 'radius': 2,

|

| 18 |

+

'model_path': os.path.join(os.path.split(__file__)[0], 'models', 'alike-s.pth')},

|

| 19 |

+

'alike-n': {'c1': 16, 'c2': 32, 'c3': 64, 'c4': 128, 'dim': 128, 'single_head': True, 'radius': 2,

|

| 20 |

+

'model_path': os.path.join(os.path.split(__file__)[0], 'models', 'alike-n.pth')},

|

| 21 |

+

'alike-l': {'c1': 32, 'c2': 64, 'c3': 128, 'c4': 128, 'dim': 128, 'single_head': False, 'radius': 2,

|

| 22 |

+

'model_path': os.path.join(os.path.split(__file__)[0], 'models', 'alike-l.pth')},

|

| 23 |

+

}

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

class ALike(ALNet):

|

| 27 |

+

def __init__(self,

|

| 28 |

+

# ================================== feature encoder

|

| 29 |

+

c1: int = 32, c2: int = 64, c3: int = 128, c4: int = 128, dim: int = 128,

|

| 30 |

+

single_head: bool = False,

|

| 31 |

+

# ================================== detect parameters

|

| 32 |

+

radius: int = 2,

|

| 33 |

+

top_k: int = 500, scores_th: float = 0.5,

|

| 34 |

+

n_limit: int = 5000,

|

| 35 |

+

device: str = 'cpu',

|

| 36 |

+

model_path: str = ''

|

| 37 |

+

):

|

| 38 |

+

super().__init__(c1, c2, c3, c4, dim, single_head)

|

| 39 |

+

self.radius = radius

|

| 40 |

+

self.top_k = top_k

|

| 41 |

+

self.n_limit = n_limit

|

| 42 |

+

self.scores_th = scores_th

|

| 43 |

+

self.dkd = DKD(radius=self.radius, top_k=self.top_k,

|

| 44 |

+

scores_th=self.scores_th, n_limit=self.n_limit)

|

| 45 |

+

self.device = device

|

| 46 |

+

|

| 47 |

+

if model_path != '':

|

| 48 |

+

state_dict = torch.load(model_path, self.device)

|

| 49 |

+

self.load_state_dict(state_dict)

|

| 50 |

+

self.to(self.device)

|

| 51 |

+

self.eval()

|

| 52 |

+

logging.info(f'Loaded model parameters from {model_path}')

|

| 53 |

+

logging.info(

|

| 54 |

+

f"Number of model parameters: {sum(p.numel() for p in self.parameters() if p.requires_grad) / 1e3}KB")

|

| 55 |

+

|

| 56 |

+

def extract_dense_map(self, image, ret_dict=False):

|

| 57 |

+

# ====================================================

|

| 58 |

+

# check image size, should be integer multiples of 2^5

|

| 59 |

+

# if it is not a integer multiples of 2^5, padding zeros

|

| 60 |

+

device = image.device

|

| 61 |

+

b, c, h, w = image.shape

|

| 62 |

+

h_ = math.ceil(h / 32) * 32 if h % 32 != 0 else h

|

| 63 |

+

w_ = math.ceil(w / 32) * 32 if w % 32 != 0 else w

|

| 64 |

+

if h_ != h:

|

| 65 |

+

h_padding = torch.zeros(b, c, h_ - h, w, device=device)

|

| 66 |

+

image = torch.cat([image, h_padding], dim=2)

|

| 67 |

+

if w_ != w:

|

| 68 |

+

w_padding = torch.zeros(b, c, h_, w_ - w, device=device)

|

| 69 |

+

image = torch.cat([image, w_padding], dim=3)

|

| 70 |

+

# ====================================================

|

| 71 |

+

|

| 72 |

+

scores_map, descriptor_map = super().forward(image)

|

| 73 |

+

|

| 74 |

+

# ====================================================

|

| 75 |

+

if h_ != h or w_ != w:

|

| 76 |

+

descriptor_map = descriptor_map[:, :, :h, :w]

|

| 77 |

+

scores_map = scores_map[:, :, :h, :w] # Bx1xHxW

|

| 78 |

+

# ====================================================

|

| 79 |

+

|

| 80 |

+

# BxCxHxW

|

| 81 |

+

descriptor_map = torch.nn.functional.normalize(descriptor_map, p=2, dim=1)

|

| 82 |

+

|

| 83 |

+

if ret_dict:

|

| 84 |

+

return {'descriptor_map': descriptor_map, 'scores_map': scores_map, }

|

| 85 |

+

else:

|

| 86 |

+

return descriptor_map, scores_map

|

| 87 |

+

|

| 88 |

+

def forward(self, img, image_size_max=99999, sort=False, sub_pixel=False):

|

| 89 |

+

"""

|

| 90 |

+

:param img: np.array HxWx3, RGB

|

| 91 |

+

:param image_size_max: maximum image size, otherwise, the image will be resized

|

| 92 |

+

:param sort: sort keypoints by scores

|

| 93 |

+

:param sub_pixel: whether to use sub-pixel accuracy

|

| 94 |

+

:return: a dictionary with 'keypoints', 'descriptors', 'scores', and 'time'

|

| 95 |

+

"""

|

| 96 |

+

H, W, three = img.shape

|

| 97 |

+

assert three == 3, "input image shape should be [HxWx3]"

|

| 98 |

+

|

| 99 |

+

# ==================== image size constraint

|

| 100 |

+

image = deepcopy(img)

|

| 101 |

+

max_hw = max(H, W)

|

| 102 |

+

if max_hw > image_size_max:

|

| 103 |

+

ratio = float(image_size_max / max_hw)

|

| 104 |

+

image = cv2.resize(image, dsize=None, fx=ratio, fy=ratio)

|

| 105 |

+

|

| 106 |

+

# ==================== convert image to tensor

|

| 107 |

+

image = torch.from_numpy(image).to(self.device).to(torch.float32).permute(2, 0, 1)[None] / 255.0

|

| 108 |

+

|

| 109 |

+

# ==================== extract keypoints

|

| 110 |

+

start = time.time()

|

| 111 |

+

|

| 112 |

+

with torch.no_grad():

|

| 113 |

+

descriptor_map, scores_map = self.extract_dense_map(image)

|

| 114 |

+

keypoints, descriptors, scores, _ = self.dkd(scores_map, descriptor_map,

|

| 115 |

+

sub_pixel=sub_pixel)

|

| 116 |

+

keypoints, descriptors, scores = keypoints[0], descriptors[0], scores[0]

|

| 117 |

+

keypoints = (keypoints + 1) / 2 * keypoints.new_tensor([[W - 1, H - 1]])

|

| 118 |

+

|

| 119 |

+

if sort:

|

| 120 |

+

indices = torch.argsort(scores, descending=True)

|

| 121 |

+

keypoints = keypoints[indices]

|

| 122 |

+

descriptors = descriptors[indices]

|

| 123 |

+

scores = scores[indices]

|

| 124 |

+

|

| 125 |

+

end = time.time()

|

| 126 |

+

|

| 127 |

+

return {'keypoints': keypoints.cpu().numpy(),

|

| 128 |

+

'descriptors': descriptors.cpu().numpy(),

|

| 129 |

+

'scores': scores.cpu().numpy(),

|

| 130 |

+

'scores_map': scores_map.cpu().numpy(),

|

| 131 |

+

'time': end - start, }

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

if __name__ == '__main__':

|

| 135 |

+

import numpy as np

|

| 136 |

+

from thop import profile

|

| 137 |

+

|

| 138 |

+

net = ALike(c1=32, c2=64, c3=128, c4=128, dim=128, single_head=False)

|

| 139 |

+

|

| 140 |

+

image = np.random.random((640, 480, 3)).astype(np.float32)

|

| 141 |

+

flops, params = profile(net, inputs=(image, 9999, False), verbose=False)

|

| 142 |

+

print('{:<30} {:<8} GFLops'.format('Computational complexity: ', flops / 1e9))

|

| 143 |

+

print('{:<30} {:<8} KB'.format('Number of parameters: ', params / 1e3))

|

alnet.py

ADDED

|

@@ -0,0 +1,164 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch

|

| 2 |

+

from torch import nn

|

| 3 |

+

from torchvision.models import resnet

|

| 4 |

+

from typing import Optional, Callable

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

class ConvBlock(nn.Module):

|

| 8 |

+

def __init__(self, in_channels, out_channels,

|

| 9 |

+

gate: Optional[Callable[..., nn.Module]] = None,

|

| 10 |

+

norm_layer: Optional[Callable[..., nn.Module]] = None):

|

| 11 |

+

super().__init__()

|

| 12 |

+

if gate is None:

|

| 13 |

+

self.gate = nn.ReLU(inplace=True)

|

| 14 |

+

else:

|

| 15 |

+

self.gate = gate

|

| 16 |

+

if norm_layer is None:

|

| 17 |

+

norm_layer = nn.BatchNorm2d

|

| 18 |

+

self.conv1 = resnet.conv3x3(in_channels, out_channels)

|

| 19 |

+

self.bn1 = norm_layer(out_channels)

|

| 20 |

+

self.conv2 = resnet.conv3x3(out_channels, out_channels)

|

| 21 |

+

self.bn2 = norm_layer(out_channels)

|

| 22 |

+

|

| 23 |

+

def forward(self, x):

|

| 24 |

+

x = self.gate(self.bn1(self.conv1(x))) # B x in_channels x H x W

|

| 25 |

+

x = self.gate(self.bn2(self.conv2(x))) # B x out_channels x H x W

|

| 26 |

+

return x

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

# copied from torchvision\models\resnet.py#27->BasicBlock

|

| 30 |

+

class ResBlock(nn.Module):

|

| 31 |

+

expansion: int = 1

|

| 32 |

+

|

| 33 |

+

def __init__(

|

| 34 |

+

self,

|

| 35 |

+

inplanes: int,

|

| 36 |

+

planes: int,

|

| 37 |

+

stride: int = 1,

|

| 38 |

+

downsample: Optional[nn.Module] = None,

|

| 39 |

+

groups: int = 1,

|

| 40 |

+

base_width: int = 64,

|

| 41 |

+

dilation: int = 1,

|

| 42 |

+

gate: Optional[Callable[..., nn.Module]] = None,

|

| 43 |

+

norm_layer: Optional[Callable[..., nn.Module]] = None

|

| 44 |

+

) -> None:

|

| 45 |

+

super(ResBlock, self).__init__()

|

| 46 |

+

if gate is None:

|

| 47 |

+

self.gate = nn.ReLU(inplace=True)

|

| 48 |

+

else:

|

| 49 |

+

self.gate = gate

|

| 50 |

+

if norm_layer is None:

|

| 51 |

+

norm_layer = nn.BatchNorm2d

|

| 52 |

+

if groups != 1 or base_width != 64:

|

| 53 |

+

raise ValueError('ResBlock only supports groups=1 and base_width=64')

|

| 54 |

+

if dilation > 1:

|

| 55 |

+

raise NotImplementedError("Dilation > 1 not supported in ResBlock")

|

| 56 |

+

# Both self.conv1 and self.downsample layers downsample the input when stride != 1

|

| 57 |

+

self.conv1 = resnet.conv3x3(inplanes, planes, stride)

|

| 58 |

+

self.bn1 = norm_layer(planes)

|

| 59 |

+

self.conv2 = resnet.conv3x3(planes, planes)

|

| 60 |

+

self.bn2 = norm_layer(planes)

|

| 61 |

+

self.downsample = downsample

|

| 62 |

+

self.stride = stride

|

| 63 |

+

|

| 64 |

+

def forward(self, x: torch.Tensor) -> torch.Tensor:

|

| 65 |

+

identity = x

|

| 66 |

+

|

| 67 |

+

out = self.conv1(x)

|

| 68 |

+

out = self.bn1(out)

|

| 69 |

+

out = self.gate(out)

|

| 70 |

+

|

| 71 |

+

out = self.conv2(out)

|

| 72 |

+

out = self.bn2(out)

|

| 73 |

+

|

| 74 |

+

if self.downsample is not None:

|

| 75 |

+

identity = self.downsample(x)

|

| 76 |

+

|

| 77 |

+

out += identity

|

| 78 |

+

out = self.gate(out)

|

| 79 |

+

|

| 80 |

+

return out

|

| 81 |

+

|

| 82 |

+

|

| 83 |

+

class ALNet(nn.Module):

|

| 84 |

+

def __init__(self, c1: int = 32, c2: int = 64, c3: int = 128, c4: int = 128, dim: int = 128,

|

| 85 |

+

single_head: bool = True,

|

| 86 |

+

):

|

| 87 |

+

super().__init__()

|

| 88 |

+

|

| 89 |

+

self.gate = nn.ReLU(inplace=True)

|

| 90 |

+

|

| 91 |

+

self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2)

|

| 92 |

+

self.pool4 = nn.MaxPool2d(kernel_size=4, stride=4)

|

| 93 |

+

|

| 94 |

+

self.block1 = ConvBlock(3, c1, self.gate, nn.BatchNorm2d)

|

| 95 |

+

|

| 96 |

+

self.block2 = ResBlock(inplanes=c1, planes=c2, stride=1,

|

| 97 |

+

downsample=nn.Conv2d(c1, c2, 1),

|

| 98 |

+

gate=self.gate,

|

| 99 |

+

norm_layer=nn.BatchNorm2d)

|

| 100 |

+

self.block3 = ResBlock(inplanes=c2, planes=c3, stride=1,

|

| 101 |

+

downsample=nn.Conv2d(c2, c3, 1),

|

| 102 |

+

gate=self.gate,

|

| 103 |

+

norm_layer=nn.BatchNorm2d)

|

| 104 |

+

self.block4 = ResBlock(inplanes=c3, planes=c4, stride=1,

|

| 105 |

+

downsample=nn.Conv2d(c3, c4, 1),

|

| 106 |

+

gate=self.gate,

|

| 107 |

+

norm_layer=nn.BatchNorm2d)

|

| 108 |

+

|

| 109 |

+

# ================================== feature aggregation

|

| 110 |

+

self.conv1 = resnet.conv1x1(c1, dim // 4)

|

| 111 |

+

self.conv2 = resnet.conv1x1(c2, dim // 4)

|

| 112 |

+

self.conv3 = resnet.conv1x1(c3, dim // 4)

|

| 113 |

+

self.conv4 = resnet.conv1x1(dim, dim // 4)

|

| 114 |

+

self.upsample2 = nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True)

|

| 115 |

+

self.upsample4 = nn.Upsample(scale_factor=4, mode='bilinear', align_corners=True)

|

| 116 |

+

self.upsample8 = nn.Upsample(scale_factor=8, mode='bilinear', align_corners=True)

|

| 117 |

+

self.upsample32 = nn.Upsample(scale_factor=32, mode='bilinear', align_corners=True)

|

| 118 |

+

|

| 119 |

+

# ================================== detector and descriptor head

|

| 120 |

+

self.single_head = single_head

|

| 121 |

+

if not self.single_head:

|

| 122 |

+

self.convhead1 = resnet.conv1x1(dim, dim)

|

| 123 |

+

self.convhead2 = resnet.conv1x1(dim, dim + 1)

|

| 124 |

+

|

| 125 |

+

def forward(self, image):

|

| 126 |

+

# ================================== feature encoder

|

| 127 |

+

x1 = self.block1(image) # B x c1 x H x W

|

| 128 |

+

x2 = self.pool2(x1)

|

| 129 |

+

x2 = self.block2(x2) # B x c2 x H/2 x W/2

|

| 130 |

+

x3 = self.pool4(x2)

|

| 131 |

+

x3 = self.block3(x3) # B x c3 x H/8 x W/8

|

| 132 |

+

x4 = self.pool4(x3)

|

| 133 |

+

x4 = self.block4(x4) # B x dim x H/32 x W/32

|

| 134 |

+

|

| 135 |

+

# ================================== feature aggregation

|

| 136 |

+

x1 = self.gate(self.conv1(x1)) # B x dim//4 x H x W

|

| 137 |

+

x2 = self.gate(self.conv2(x2)) # B x dim//4 x H//2 x W//2

|

| 138 |

+

x3 = self.gate(self.conv3(x3)) # B x dim//4 x H//8 x W//8

|

| 139 |

+

x4 = self.gate(self.conv4(x4)) # B x dim//4 x H//32 x W//32

|

| 140 |

+

x2_up = self.upsample2(x2) # B x dim//4 x H x W

|

| 141 |

+

x3_up = self.upsample8(x3) # B x dim//4 x H x W

|

| 142 |

+

x4_up = self.upsample32(x4) # B x dim//4 x H x W

|

| 143 |

+

x1234 = torch.cat([x1, x2_up, x3_up, x4_up], dim=1)

|

| 144 |

+

|

| 145 |

+

# ================================== detector and descriptor head

|

| 146 |

+

if not self.single_head:

|

| 147 |

+

x1234 = self.gate(self.convhead1(x1234))

|

| 148 |

+

x = self.convhead2(x1234) # B x dim+1 x H x W

|

| 149 |

+

|

| 150 |

+

descriptor_map = x[:, :-1, :, :]

|

| 151 |

+

scores_map = torch.sigmoid(x[:, -1, :, :]).unsqueeze(1)

|

| 152 |

+

|

| 153 |

+

return scores_map, descriptor_map

|

| 154 |

+

|

| 155 |

+

|

| 156 |

+

if __name__ == '__main__':

|

| 157 |

+

from thop import profile

|

| 158 |

+

|

| 159 |

+

net = ALNet(c1=16, c2=32, c3=64, c4=128, dim=128, single_head=True)

|

| 160 |

+

|

| 161 |

+

image = torch.randn(1, 3, 640, 480)

|

| 162 |

+

flops, params = profile(net, inputs=(image,), verbose=False)

|

| 163 |

+

print('{:<30} {:<8} GFLops'.format('Computational complexity: ', flops / 1e9))

|

| 164 |

+

print('{:<30} {:<8} KB'.format('Number of parameters: ', params / 1e3))

|

assets/alike.png

ADDED

|

assets/kitti/000100.png

ADDED

|

assets/kitti/000101.png

ADDED

|

assets/kitti/000102.png

ADDED

|

assets/kitti/000103.png

ADDED

|

assets/kitti/000104.png

ADDED

|

assets/kitti/000105.png

ADDED

|

assets/kitti/000106.png

ADDED

|

assets/kitti/000107.png

ADDED

|

assets/kitti/000108.png

ADDED

|

assets/kitti/000109.png

ADDED

|

assets/kitti/000110.png

ADDED

|

assets/kitti/000111.png

ADDED

|

assets/kitti/000112.png

ADDED

|

assets/kitti/000113.png

ADDED

|

assets/kitti/000114.png

ADDED

|

assets/kitti/000115.png

ADDED

|

assets/kitti/000116.png

ADDED

|

assets/kitti/000117.png

ADDED

|

assets/kitti/000118.png

ADDED

|

assets/kitti/000119.png

ADDED

|

assets/tum/1311868169.163498.png

ADDED

|

assets/tum/1311868169.263274.png

ADDED

|

assets/tum/1311868169.363470.png

ADDED

|

assets/tum/1311868169.463229.png

ADDED

|

assets/tum/1311868169.563501.png

ADDED

|

assets/tum/1311868169.663240.png

ADDED

|

assets/tum/1311868169.763417.png

ADDED

|

assets/tum/1311868169.863396.png

ADDED

|

assets/tum/1311868169.963415.png

ADDED

|

assets/tum/1311868170.063469.png

ADDED

|

assets/tum/1311868170.163416.png

ADDED

|

assets/tum/1311868170.263521.png

ADDED

|

assets/tum/1311868170.363400.png

ADDED

|

assets/tum/1311868170.463383.png

ADDED

|

assets/tum/1311868170.563345.png

ADDED

|

assets/tum/1311868170.663430.png

ADDED

|

assets/tum/1311868170.763453.png

ADDED

|

assets/tum/1311868170.863446.png

ADDED

|

assets/tum/1311868170.963440.png

ADDED

|

assets/tum/1311868171.063438.png

ADDED

|

demo.py

ADDED

|

@@ -0,0 +1,167 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import copy

|

| 2 |

+

import os

|

| 3 |

+

import cv2

|

| 4 |

+

import glob

|

| 5 |

+

import logging

|

| 6 |

+

import argparse

|

| 7 |

+

import numpy as np

|

| 8 |

+

from tqdm import tqdm

|

| 9 |

+

from alike import ALike, configs

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

class ImageLoader(object):

|

| 13 |

+

def __init__(self, filepath: str):

|

| 14 |

+

self.N = 3000

|

| 15 |

+

if filepath.startswith('camera'):

|

| 16 |

+

camera = int(filepath[6:])

|

| 17 |

+

self.cap = cv2.VideoCapture(camera)

|

| 18 |

+

if not self.cap.isOpened():

|

| 19 |

+

raise IOError(f"Can't open camera {camera}!")

|

| 20 |

+

logging.info(f'Opened camera {camera}')

|

| 21 |

+

self.mode = 'camera'

|

| 22 |

+

elif os.path.exists(filepath):

|

| 23 |

+

if os.path.isfile(filepath):

|

| 24 |

+

self.cap = cv2.VideoCapture(filepath)

|

| 25 |

+

if not self.cap.isOpened():

|

| 26 |

+

raise IOError(f"Can't open video {filepath}!")

|

| 27 |

+

rate = self.cap.get(cv2.CAP_PROP_FPS)

|

| 28 |

+

self.N = int(self.cap.get(cv2.CAP_PROP_FRAME_COUNT)) - 1

|

| 29 |

+

duration = self.N / rate

|

| 30 |

+

logging.info(f'Opened video {filepath}')

|

| 31 |

+

logging.info(f'Frames: {self.N}, FPS: {rate}, Duration: {duration}s')

|

| 32 |

+

self.mode = 'video'

|

| 33 |

+

else:

|

| 34 |

+

self.images = glob.glob(os.path.join(filepath, '*.png')) + \

|

| 35 |

+

glob.glob(os.path.join(filepath, '*.jpg')) + \

|

| 36 |

+

glob.glob(os.path.join(filepath, '*.ppm'))

|

| 37 |

+

self.images.sort()

|

| 38 |

+

self.N = len(self.images)

|

| 39 |

+

logging.info(f'Loading {self.N} images')

|

| 40 |

+

self.mode = 'images'

|

| 41 |

+

else:

|

| 42 |

+

raise IOError('Error filepath (camerax/path of images/path of videos): ', filepath)

|

| 43 |

+

|

| 44 |

+

def __getitem__(self, item):

|

| 45 |

+

if self.mode == 'camera' or self.mode == 'video':

|

| 46 |

+

if item > self.N:

|

| 47 |

+

return None

|

| 48 |

+

ret, img = self.cap.read()

|

| 49 |

+

if not ret:

|

| 50 |

+

raise "Can't read image from camera"

|

| 51 |

+

if self.mode == 'video':

|

| 52 |

+

self.cap.set(cv2.CAP_PROP_POS_FRAMES, item)

|

| 53 |

+

elif self.mode == 'images':

|

| 54 |

+

filename = self.images[item]

|

| 55 |

+

img = cv2.imread(filename)

|

| 56 |

+

if img is None:

|

| 57 |

+

raise Exception('Error reading image %s' % filename)

|

| 58 |

+

|

| 59 |

+

return img

|

| 60 |

+

|

| 61 |

+

def __len__(self):

|

| 62 |

+

return self.N

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

class SimpleTracker(object):

|

| 66 |

+

def __init__(self):

|

| 67 |

+

self.pts_prev = None

|

| 68 |

+

self.desc_prev = None

|

| 69 |

+

|

| 70 |

+

def update(self, img, pts, desc):

|

| 71 |

+

N_matches = 0

|

| 72 |

+

if self.pts_prev is None:

|

| 73 |

+

self.pts_prev = pts

|

| 74 |

+

self.desc_prev = desc

|

| 75 |

+

|

| 76 |

+

out = copy.deepcopy(img)

|

| 77 |

+

for pt1 in pts:

|

| 78 |

+

p1 = (int(round(pt1[0])), int(round(pt1[1])))

|

| 79 |

+

cv2.circle(out, p1, 1, (0, 0, 255), -1, lineType=16)

|

| 80 |

+

else:

|

| 81 |

+

matches = self.mnn_mather(self.desc_prev, desc)

|

| 82 |

+

mpts1, mpts2 = self.pts_prev[matches[:, 0]], pts[matches[:, 1]]

|

| 83 |

+

N_matches = len(matches)

|

| 84 |

+

|

| 85 |

+

out = copy.deepcopy(img)

|

| 86 |

+

for pt1, pt2 in zip(mpts1, mpts2):

|

| 87 |

+

p1 = (int(round(pt1[0])), int(round(pt1[1])))

|

| 88 |

+

p2 = (int(round(pt2[0])), int(round(pt2[1])))

|

| 89 |

+

cv2.line(out, p1, p2, (0, 255, 0), lineType=16)

|

| 90 |

+

cv2.circle(out, p2, 1, (0, 0, 255), -1, lineType=16)

|

| 91 |

+

|

| 92 |

+

self.pts_prev = pts

|

| 93 |

+

self.desc_prev = desc

|

| 94 |

+

|

| 95 |

+

return out, N_matches

|

| 96 |

+

|

| 97 |

+

def mnn_mather(self, desc1, desc2):

|

| 98 |

+

sim = desc1 @ desc2.transpose()

|

| 99 |

+

sim[sim < 0.9] = 0

|

| 100 |

+

nn12 = np.argmax(sim, axis=1)

|

| 101 |

+

nn21 = np.argmax(sim, axis=0)

|

| 102 |

+

ids1 = np.arange(0, sim.shape[0])

|

| 103 |

+

mask = (ids1 == nn21[nn12])

|

| 104 |

+

matches = np.stack([ids1[mask], nn12[mask]])

|

| 105 |

+

return matches.transpose()

|

| 106 |

+

|

| 107 |

+

|

| 108 |

+

if __name__ == '__main__':

|

| 109 |

+

parser = argparse.ArgumentParser(description='ALike Demo.')

|

| 110 |

+

parser.add_argument('input', type=str, default='',

|

| 111 |

+

help='Image directory or movie file or "camera0" (for webcam0).')

|

| 112 |

+

parser.add_argument('--model', choices=['alike-t', 'alike-s', 'alike-n', 'alike-l'], default="alike-t",

|

| 113 |

+

help="The model configuration")

|

| 114 |

+

parser.add_argument('--device', type=str, default='cuda', help="Running device (default: cuda).")

|

| 115 |

+

parser.add_argument('--top_k', type=int, default=-1,

|

| 116 |

+

help='Detect top K keypoints. -1 for threshold based mode, >0 for top K mode. (default: -1)')

|

| 117 |

+

parser.add_argument('--scores_th', type=float, default=0.2,

|

| 118 |

+

help='Detector score threshold (default: 0.2).')

|

| 119 |

+

parser.add_argument('--n_limit', type=int, default=5000,

|

| 120 |

+

help='Maximum number of keypoints to be detected (default: 5000).')

|

| 121 |

+

parser.add_argument('--no_display', action='store_true',

|

| 122 |

+

help='Do not display images to screen. Useful if running remotely (default: False).')

|

| 123 |

+

parser.add_argument('--no_sub_pixel', action='store_true',

|

| 124 |

+

help='Do not detect sub-pixel keypoints (default: False).')

|

| 125 |

+

args = parser.parse_args()

|

| 126 |

+

|

| 127 |

+

logging.basicConfig(level=logging.INFO)

|

| 128 |

+

|

| 129 |

+

image_loader = ImageLoader(args.input)

|

| 130 |

+

model = ALike(**configs[args.model],

|

| 131 |

+

device=args.device,

|

| 132 |

+

top_k=args.top_k,

|

| 133 |

+

scores_th=args.scores_th,

|

| 134 |

+

n_limit=args.n_limit)

|

| 135 |

+

tracker = SimpleTracker()

|

| 136 |

+

|

| 137 |

+

if not args.no_display:

|

| 138 |

+

logging.info("Press 'q' to stop!")

|

| 139 |

+

cv2.namedWindow(args.model)

|

| 140 |

+

|

| 141 |

+

runtime = []

|

| 142 |

+

progress_bar = tqdm(image_loader)

|

| 143 |

+

for img in progress_bar:

|

| 144 |

+

if img is None:

|

| 145 |

+

break

|

| 146 |

+

|

| 147 |

+

pred = model(img, sub_pixel=not args.no_sub_pixel)

|

| 148 |

+

kpts = pred['keypoints']

|

| 149 |

+

desc = pred['descriptors']

|

| 150 |

+

runtime.append(pred['time'])

|

| 151 |

+

|

| 152 |

+

out, N_matches = tracker.update(img, kpts, desc)

|

| 153 |

+

|

| 154 |

+

ave_fps = (1. / np.stack(runtime)).mean()

|

| 155 |

+

status = f"Fps:{ave_fps:.1f}, Keypoints/Matches: {len(kpts)}/{N_matches}"

|

| 156 |

+

progress_bar.set_description(status)

|

| 157 |

+

|

| 158 |

+

if not args.no_display:

|

| 159 |

+

cv2.setWindowTitle(args.model, args.model + ': ' + status)

|

| 160 |

+

cv2.imshow(args.model, out)

|

| 161 |

+

if cv2.waitKey(1) == ord('q'):

|

| 162 |

+

break

|

| 163 |

+

|

| 164 |

+

logging.info('Finished!')

|

| 165 |

+

if not args.no_display:

|

| 166 |

+

logging.info('Press any key to exit!')

|

| 167 |

+

cv2.waitKey()

|

hseq/cache/alike-l-ms.npy

ADDED

|

Binary file (13.1 kB). View file

|

|

|

hseq/cache/alike-l.npy

ADDED

|

Binary file (13.1 kB). View file

|

|

|

hseq/cache/alike-n-ms.npy

ADDED

|

Binary file (13.1 kB). View file

|

|

|

hseq/cache/alike-n.npy

ADDED

|

Binary file (13.1 kB). View file

|

|

|

hseq/cache/aslfeat.npy

ADDED

|

Binary file (15.4 kB). View file

|

|

|