Spaces:

Sleeping

Sleeping

First commit.

Browse files- app.py +137 -0

- compo.jpg +0 -0

- requirements.txt +3 -0

app.py

ADDED

|

@@ -0,0 +1,137 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Reference:

|

| 2 |

+

# https://huggingface.co/spaces/Sagar23p/mistralAI_chatBoat

|

| 3 |

+

|

| 4 |

+

import gradio as gr

|

| 5 |

+

from paddleocr import PaddleOCR, draw_ocr

|

| 6 |

+

import asyncio

|

| 7 |

+

import requests

|

| 8 |

+

from huggingface_hub import InferenceClient

|

| 9 |

+

import os

|

| 10 |

+

|

| 11 |

+

API_TOKEN = os.environ.get('HUGGINGFACE_API_KEY')

|

| 12 |

+

|

| 13 |

+

API_URL = "https://api-inference.huggingface.co/models/meta-llama/Meta-Llama-3-8B-Instruct"

|

| 14 |

+

headers = {"Authorization": "Bearer " +API_TOKEN}

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

def query(question):

|

| 18 |

+

client = InferenceClient("meta-llama/Meta-Llama-3-8B-Instruct", headers=headers)

|

| 19 |

+

messages = [

|

| 20 |

+

{

|

| 21 |

+

"role": "system",

|

| 22 |

+

"content": "You are a helpful and honest assistant. Please, respond concisely and truthfully.",

|

| 23 |

+

},

|

| 24 |

+

{

|

| 25 |

+

"role": "user",

|

| 26 |

+

"content": question,

|

| 27 |

+

},

|

| 28 |

+

]

|

| 29 |

+

output = client.chat_completion(messages, model="meta-llama/Meta-Llama-3-8B-Instruct", max_tokens=1000)

|

| 30 |

+

if output.choices[0].message['content'].find('Yes')>=0:

|

| 31 |

+

messages+=[output.choices[0].message]

|

| 32 |

+

messages+=[{"role": "user",

|

| 33 |

+

"content": "What is the mistake and what is the correct sentence?"}]

|

| 34 |

+

output = client.chat_completion(messages, model="meta-llama/Meta-Llama-3-8B-Instruct", max_tokens=1000)

|

| 35 |

+

return output.choices[0].message['content']

|

| 36 |

+

|

| 37 |

+

def image2Text(image:str, langChoice:str):

|

| 38 |

+

ocr = PaddleOCR(use_angle_cls=True, lang=langChoice) # need to run only once to download and load model into memory

|

| 39 |

+

img_path = image

|

| 40 |

+

result = ocr.ocr(img_path, cls=True)

|

| 41 |

+

text = ""

|

| 42 |

+

for idx in range(len(result)):

|

| 43 |

+

res = result[idx]

|

| 44 |

+

for line in res:

|

| 45 |

+

import re

|

| 46 |

+

# remove pinyin if it's Chinese

|

| 47 |

+

if langChoice=="ch":

|

| 48 |

+

#t = re.sub('[a-z0-9.]', '', line[1][0])

|

| 49 |

+

t = re.sub('[a-z]', '', line[1][0])

|

| 50 |

+

t = re.sub('[0-9]\.', '', t)

|

| 51 |

+

t = t.replace(" ", "")

|

| 52 |

+

t = t.replace("()", "")

|

| 53 |

+

t = t.replace("()", "")

|

| 54 |

+

t = t.replace("( )", "")

|

| 55 |

+

t = t.replace("()", "")

|

| 56 |

+

if t!="":

|

| 57 |

+

text +=((t) + "\n")

|

| 58 |

+

else:

|

| 59 |

+

print(line)

|

| 60 |

+

t = line[1][0]

|

| 61 |

+

t = re.sub('Term [0-9] Spelling', '', t)

|

| 62 |

+

t = re.sub('Page [0-9]', '', t)

|

| 63 |

+

if t!="":

|

| 64 |

+

text += (t + "\n")

|

| 65 |

+

|

| 66 |

+

text = text.replace("\n"," ").replace(".",".\n")

|

| 67 |

+

|

| 68 |

+

return text

|

| 69 |

+

|

| 70 |

+

def text2PrevMistake(recognized_text, langChoice:str, current_line, session_data):

|

| 71 |

+

if len(session_data) == 0 or session_data[0] == 0 or session_data[0] == 1:

|

| 72 |

+

session_data = []

|

| 73 |

+

else:

|

| 74 |

+

session_data = [session_data[0]-2]

|

| 75 |

+

|

| 76 |

+

return text2NextMistake(recognized_text, langChoice, current_line, session_data)

|

| 77 |

+

|

| 78 |

+

def text2NextMistake(recognized_text, langChoice:str, current_line, session_data):

|

| 79 |

+

|

| 80 |

+

lines = recognized_text.split("\n")

|

| 81 |

+

while 1:

|

| 82 |

+

if len(lines) == 0:

|

| 83 |

+

return current_line, "No mistake. Empty text.", session_data

|

| 84 |

+

elif len(session_data) == 0:

|

| 85 |

+

session_data = [0]

|

| 86 |

+

current_line = lines[session_data[0]]

|

| 87 |

+

elif session_data[0] + 1 >= len(lines):

|

| 88 |

+

session_data = []

|

| 89 |

+

return current_line, "No more mistake. End of text", session_data

|

| 90 |

+

else:

|

| 91 |

+

session_data = [session_data[0]+1]

|

| 92 |

+

current_line = lines[session_data[0]]

|

| 93 |

+

|

| 94 |

+

question = f"Only answer Yes or No. Is there grammatical or logical mistake in the sentence: {current_line}"

|

| 95 |

+

correction_text = query(question)

|

| 96 |

+

|

| 97 |

+

if correction_text.find("No") == 0:

|

| 98 |

+

continue

|

| 99 |

+

else:

|

| 100 |

+

break

|

| 101 |

+

|

| 102 |

+

return current_line, correction_text, session_data

|

| 103 |

+

|

| 104 |

+

with gr.Blocks() as demo:

|

| 105 |

+

gr.HTML("""<h1 align="center">Composition Corrector</h1>""")

|

| 106 |

+

session_data = gr.State([])

|

| 107 |

+

|

| 108 |

+

with gr.Row():

|

| 109 |

+

with gr.Column(scale=1):

|

| 110 |

+

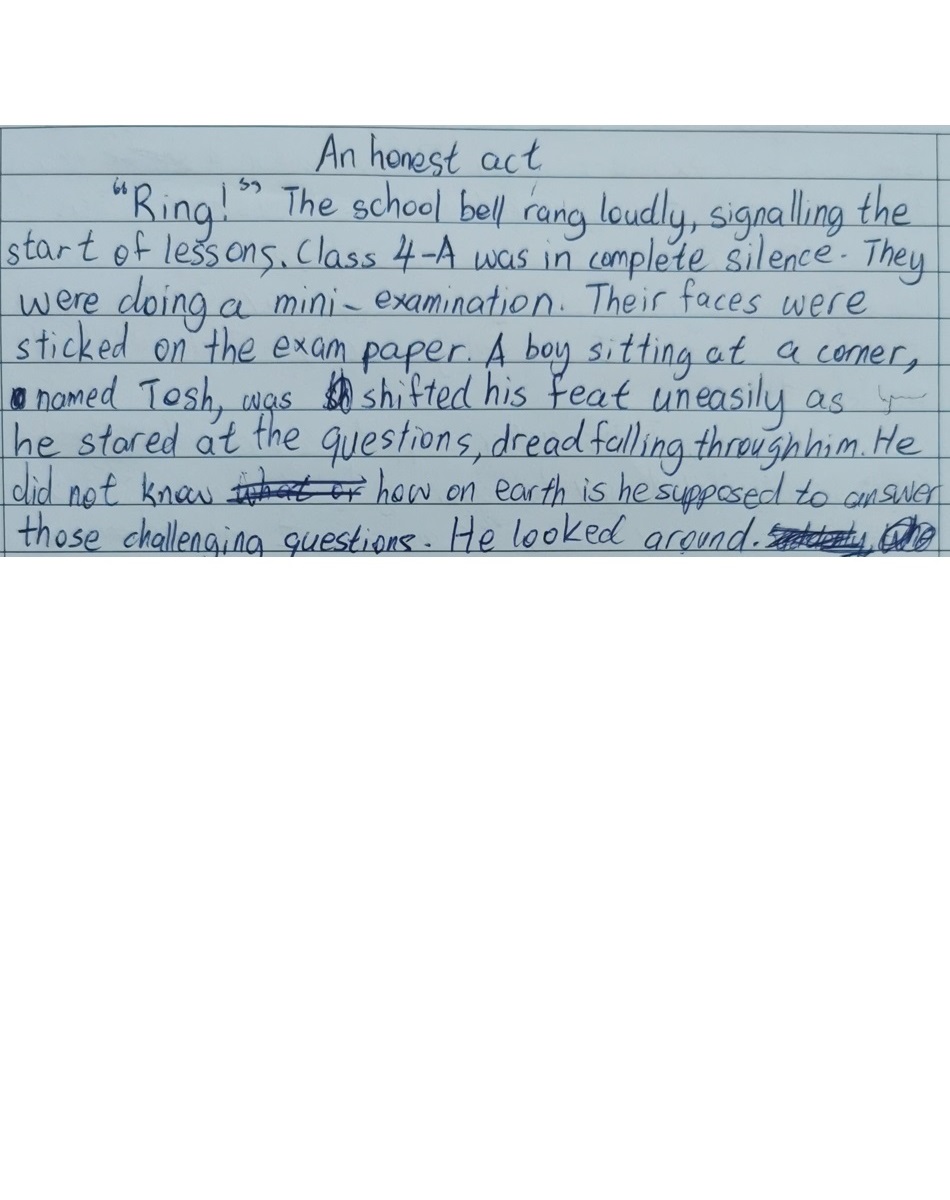

upload_image = gr.Image(height=400,width=400, value = "compo.jpg")

|

| 111 |

+

langChoice = gr.Radio(["en", "ch"], value="en", label="Select lanaguage: 'ch' for Chinese, 'en' for English", info="")

|

| 112 |

+

with gr.Column(scale=3):

|

| 113 |

+

recognized_text = gr.Textbox(show_label=False, placeholder="composition", lines=15)

|

| 114 |

+

toText = gr.Button("Convert image to text")

|

| 115 |

+

|

| 116 |

+

current_line = gr.Textbox(show_label=False, placeholder="current line", lines=1)

|

| 117 |

+

correction_text = gr.Textbox(show_label=False, placeholder="corrections...", lines=15)

|

| 118 |

+

|

| 119 |

+

with gr.Row():

|

| 120 |

+

with gr.Column(scale=1):

|

| 121 |

+

toPrevMistake = gr.Button("Find prev mistake", variant="primary")

|

| 122 |

+

with gr.Column(scale=1):

|

| 123 |

+

toNextMistake = gr.Button("Find next mistake", variant="primary")

|

| 124 |

+

|

| 125 |

+

|

| 126 |

+

|

| 127 |

+

toText.click(

|

| 128 |

+

image2Text,

|

| 129 |

+

[upload_image, langChoice],

|

| 130 |

+

[recognized_text],

|

| 131 |

+

#show_progress=True,

|

| 132 |

+

|

| 133 |

+

)

|

| 134 |

+

toNextMistake.click(text2NextMistake , [recognized_text, langChoice, current_line, session_data], [current_line, correction_text, session_data])

|

| 135 |

+

toPrevMistake.click(text2PrevMistake , [recognized_text, langChoice, current_line, session_data], [current_line, correction_text, session_data])

|

| 136 |

+

|

| 137 |

+

demo.queue().launch(share=False, inbrowser=True)

|

compo.jpg

ADDED

|

requirements.txt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

gradio

|

| 2 |

+

paddlepaddle

|

| 3 |

+

paddleocr

|