adding_note (#2)

- Adding timeline info of the autocast dataset (2a62f64d92e0501bd4d1944baa4248aa67c82dae)

- Merge branch 'main' into pr/2 (1cd55fe729552dab909b3de1c9c7ae992766ed61)

- Small adjustment on the about benchmark (f8a7cbc3fc511b4cc1790aa843bf930ccb86c96a)

- images/autocast_dataset_timeline.png +0 -0

- tabs/faq.py +7 -2

images/autocast_dataset_timeline.png ADDED

tabs/faq.py CHANGED

@@ -1,10 +1,15 @@

|

|

| 1 |

about_olas_predict_benchmark = """\

|

| 2 |

How good are LLMs at making predictions about events in the future? This is a topic that hasn't been well explored to date.

|

| 3 |

[Olas Predict](https://olas.network/services/prediction-agents) aims to rectify this by incentivizing the creation of agents that make predictions about future events (through prediction markets).

|

| 4 |

-

These agents are tested in the wild on real-time prediction market data, which you can see on [here](https://huggingface.co/datasets/valory/prediction_market_data) on HuggingFace (updated weekly)

|

| 5 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

| 6 |

This HF Space showcases the performance of the various models and workflows (called tools in the Olas ecosystem) for making predictions, in terms of accuracy and cost.\

|

| 7 |

|

|

|

|

| 8 |

🤗 Pick a tool and run it on the benchmark using the "🔥 Run the Benchmark" page!

|

| 9 |

"""

|

| 10 |

|

|

|

|

| 1 |

about_olas_predict_benchmark = """\

|

| 2 |

How good are LLMs at making predictions about events in the future? This is a topic that hasn't been well explored to date.

|

| 3 |

[Olas Predict](https://olas.network/services/prediction-agents) aims to rectify this by incentivizing the creation of agents that make predictions about future events (through prediction markets).

|

| 4 |

+

These agents are tested in the wild on real-time prediction market data, which you can see on [here](https://huggingface.co/datasets/valory/prediction_market_data) on HuggingFace (updated weekly).\

|

| 5 |

+

|

| 6 |

+

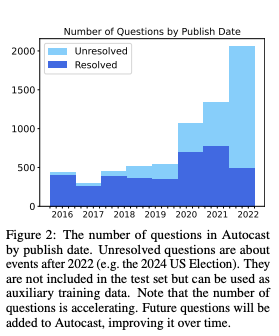

However, if you want to create an agent with new tools, waiting for real-time results to arrive is slow. This is where the Olas Predict Benchmark comes in. It allows devs to backtest new approaches on a historical event forecasting dataset (refined from [Autocast](https://arxiv.org/abs/2206.15474)) with high iteration speed.

|

| 7 |

+

|

| 8 |

+

🗓 🧐 The autocast dataset resolved-questions are from a timeline ending in 2022, so the models might be trained on some of these data. Thus the current reported accuracy measure might be an in-sample forecasting one.

|

| 9 |

+

However, we can learn about the relative strengths of the different approaches (e.g models and logic), before testing the most promising ones on real-time unseen data.

|

| 10 |

This HF Space showcases the performance of the various models and workflows (called tools in the Olas ecosystem) for making predictions, in terms of accuracy and cost.\

|

| 11 |

|

| 12 |

+

|

| 13 |

🤗 Pick a tool and run it on the benchmark using the "🔥 Run the Benchmark" page!

|

| 14 |

"""

|

| 15 |

|
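The in-sample caveat in the added note can be made concrete by splitting accuracy at a model's training cutoff. The following is a minimal sketch under stated assumptions: `ResolvedQuestion`, `split_accuracy`, and the `training_cutoff` argument are hypothetical names for illustration, and none of them are part of this change or of the benchmark's actual code.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class ResolvedQuestion:
    """Hypothetical record for one resolved benchmark question."""
    resolved_on: date  # date the question resolved
    predicted: str     # answer produced by the tool
    actual: str        # resolved outcome


def split_accuracy(
    questions: List[ResolvedQuestion], training_cutoff: date
) -> Tuple[Optional[float], Optional[float]]:
    """Return (in_sample, out_of_sample) accuracy; None for an empty bucket."""

    def accuracy(bucket: List[ResolvedQuestion]) -> Optional[float]:
        if not bucket:
            return None
        return sum(q.predicted == q.actual for q in bucket) / len(bucket)

    # Questions resolved on or before the cutoff may have been seen in training.
    in_sample = [q for q in questions if q.resolved_on <= training_cutoff]
    out_of_sample = [q for q in questions if q.resolved_on > training_cutoff]
    return accuracy(in_sample), accuracy(out_of_sample)


if __name__ == "__main__":
    # Made-up example data, not taken from the Autocast dataset.
    sample = [
        ResolvedQuestion(date(2021, 5, 1), "yes", "yes"),
        ResolvedQuestion(date(2021, 9, 1), "no", "yes"),
        ResolvedQuestion(date(2022, 3, 1), "yes", "yes"),
    ]
    print(split_accuracy(sample, date(2021, 12, 31)))  # (0.5, 1.0)
```

If every question resolves before the assumed cutoff, the second value is `None`, which matches the situation the note describes: the reported accuracy would then be entirely in-sample.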