Add application file

- README.md +0 -13

- app.py +76 -0

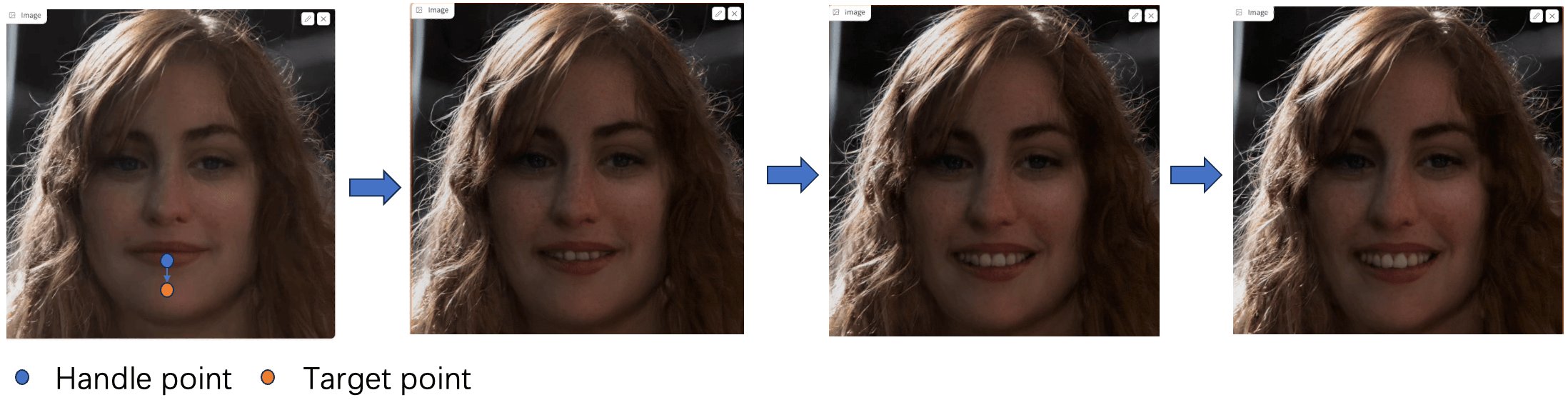

- assets/demo.png +0 -0

- drag_gan.py +243 -0

- requirements.txt +4 -0

- stylegan2/_init__.py +0 -0

- stylegan2/model.py +699 -0

- stylegan2/op/__init__.py +2 -0

- stylegan2/op/conv2d_gradfix.py +227 -0

- stylegan2/op/fused_act.py +127 -0

- stylegan2/op/fused_bias_act.cpp +32 -0

- stylegan2/op/fused_bias_act_kernel.cu +105 -0

- stylegan2/op/upfirdn2d.cpp +31 -0

- stylegan2/op/upfirdn2d.py +209 -0

- stylegan2/op/upfirdn2d_kernel.cu +369 -0

README.md

DELETED

@@ -1,13 +0,0 @@
----
-title: DragGAN Unofficial
-emoji: 💻
-colorFrom: indigo
-colorTo: yellow
-sdk: gradio
-sdk_version: 3.29.0
-app_file: app.py
-pinned: false
-license: apache-2.0
----
-
-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

app.py

ADDED

@@ -0,0 +1,76 @@
+import gradio as gr
+import torch
+from drag_gan import stylegan2, drag_gan
+from PIL import Image
+
+device = 'cuda'
+g_ema = stylegan2().to(device)
+
+
+def to_image(tensor):
+    tensor = tensor.squeeze(0).permute(1, 2, 0)
+    arr = tensor.detach().cpu().numpy()
+    arr = (arr - arr.min()) / (arr.max() - arr.min())
+    arr = arr * 255
+    return arr.astype('uint8')
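The `to_image` helper rescales the generator output to 8-bit pixel values with min-max normalization. The following is a minimal pure-Python sketch of that arithmetic, not the committed code: `to_uint8` and its mid-grey fallback are illustrative assumptions. The fallback covers a case the committed version does not guard against, a constant input, where `arr.max() - arr.min()` is zero.

```python
def to_uint8(values):
    """Min-max scale a flat list of floats to integers in [0, 255]."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # Assumption: map a constant input to mid-grey instead of dividing by zero.
        return [128] * len(values)
    # int() truncates toward zero, like casting non-negative floats to uint8.
    return [int((v - lo) / span * 255) for v in values]


pixels = to_uint8([-1.0, 0.0, 1.0])
```

Because the scaling uses the minimum and maximum of each image, the same generator value can map to different pixel values from one frame to the next.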

+
+
+def on_click(image, target_point, points, evt: gr.SelectData):
+    x = evt.index[1]
+    y = evt.index[0]
+    if target_point:
+        image[x:x + 5, y:y + 5, :] = 255
+        points['target'].append([evt.index[1], evt.index[0]])
+        return image, str(evt.index)
+    points['handle'].append([evt.index[1], evt.index[0]])
+    image[x:x + 5, y:y + 5, :] = 0
+    return image, str(evt.index)
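`on_click` swaps the two components of `evt.index` before indexing the image array and storing the point. The sketch below assumes the click event reports `(x, y)` as (column, row), which would explain the swap, because arrays are indexed `[row, column]`. `paint_marker` is a hypothetical helper working on a plain list of rows. It clips the marker at the image border, much as array slicing does.

```python
def paint_marker(image, click_xy, value, size=5):
    """Paint a size x size square whose top-left corner is the clicked pixel.

    image is a list of rows (image[row][col]); click_xy is (x, y) = (col, row).
    """
    col, row = click_xy
    height, width = len(image), len(image[0])
    for r in range(row, min(row + size, height)):
        for c in range(col, min(col + size, width)):
            image[r][c] = value
    return image


canvas = [[0] * 8 for _ in range(6)]  # 6 rows, 8 columns
paint_marker(canvas, (6, 4), 255)     # click near the bottom-right corner
```

Only the four pixels that fall inside the 6x8 canvas are painted; the rest of the 5x5 square is outside the image.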

+
+
+def on_drag(points, max_iters, state):
+    max_iters = int(max_iters)
+    latent = state['latent']
+    noise = state['noise']
+    F = state['F']
+
+    handle_points = [torch.tensor(p).float() for p in points['handle']]
+    target_points = [torch.tensor(p).float() for p in points['target']]
+    mask = torch.zeros((1, 1, 1024, 1024)).to(device)
+    mask[..., 720:820, 390:600] = 1
+    for sample2, latent, F in drag_gan(g_ema, latent, noise, F,
+                                       handle_points, target_points, mask,
+                                       max_iters=max_iters):
+        points = {'target': [], 'handle': []}
+        image = to_image(sample2)
+
+        state['F'] = F
+        state['latent'] = latent
+        yield points, image, state
+
+
+def main():
+    torch.cuda.manual_seed(25)
+    sample_z = torch.randn([1, 512], device=device)
+    latent, noise = g_ema.prepare([sample_z])
+    sample, F = g_ema.generate(latent, noise)
+
+    with gr.Blocks() as demo:
+        state = gr.State({
+            'latent': latent,
+            'noise': noise,
+            'F': F,
+        })
+        max_iters = gr.Slider(1, 100, 5, label='Max Iterations')
+        image = gr.Image(to_image(sample)).style(height=512, width=512)
+        text = gr.Textbox()
+        btn = gr.Button('Drag it')
+        points = gr.State({'target': [], 'handle': []})
+        target_point = gr.Checkbox(label='Target Point')
+        image.select(on_click, [image, target_point, points], [image, text])
+        btn.click(on_drag, inputs=[points, max_iters, state], outputs=[points, image, state])
+
+    demo.queue(concurrency_count=5, max_size=20).launch()
+
+
+if __name__ == '__main__':
+    main()

assets/demo.png

ADDED

drag_gan.py

ADDED

@@ -0,0 +1,243 @@
+import copy
+import os
+import random
+import urllib.request
+
+import numpy as np
+import torch
+import torch.nn.functional as FF
+import torch.optim
+from torchvision import utils
+from tqdm import tqdm
+
+from stylegan2.model import Generator
+
+
+class DownloadProgressBar(tqdm):
+    def update_to(self, b=1, bsize=1, tsize=None):
+        if tsize is not None:
+            self.total = tsize
+        self.update(b * bsize - self.n)
+
+
+def get_path(base_path):
+    BASE_DIR = os.path.join('checkpoints')
+
+    save_path = os.path.join(BASE_DIR, base_path)
+    if not os.path.exists(save_path):
+        url = f"https://huggingface.co/aaronb/StyleGAN2/resolve/main/{base_path}"
+        print(f'{base_path} not found')
+        print('Try to download from huggingface: ', url)
+        os.makedirs(os.path.dirname(save_path), exist_ok=True)
+        download_url(url, save_path)
+        print('Downloaded to ', save_path)
+    return save_path
+
+
+def download_url(url, output_path):
+    with DownloadProgressBar(unit='B', unit_scale=True,
+                             miniters=1, desc=url.split('/')[-1]) as t:
+        urllib.request.urlretrieve(url, filename=output_path, reporthook=t.update_to)
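`get_path` follows a download-if-missing pattern: it returns the cached checkpoint path and fetches the file only when it is not yet on disk. The sketch below shows the same pattern with an injectable fetch function so it runs without network access. `cached_path`, `fake_fetch`, and the `.part` temporary file are illustrative assumptions, not part of the commit.

```python
import os
import tempfile


def cached_path(name, cache_dir, fetch):
    """Return the local path for name, calling fetch(name, path) only on a cache miss."""
    path = os.path.join(cache_dir, name)
    if not os.path.exists(path):
        os.makedirs(os.path.dirname(path), exist_ok=True)
        tmp = path + ".part"
        fetch(name, tmp)       # write to a temporary file first
        os.replace(tmp, path)  # publish it only once the fetch has finished
    return path


calls = []


def fake_fetch(name, dest):
    """Stand-in for a real download: records the call and writes a few bytes."""
    calls.append(name)
    with open(dest, "wb") as fh:
        fh.write(b"weights")


with tempfile.TemporaryDirectory() as cache:
    first = cached_path("demo/model.pt", cache, fake_fetch)
    second = cached_path("demo/model.pt", cache, fake_fetch)  # cache hit, no fetch
    same_path = first == second
```

Writing to a temporary name and renaming afterwards is one way to keep an interrupted download from being mistaken for a complete checkpoint on the next start. The committed `get_path` writes directly to the final path.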

+
+
+class CustomGenerator(Generator):
+    def prepare(
+        self,
+        styles,
+        inject_index=None,
+        truncation=1,
+        truncation_latent=None,
+        input_is_latent=False,
+        noise=None,
+        randomize_noise=True,
+    ):
+        if not input_is_latent:
+            styles = [self.style(s) for s in styles]
+
+        if noise is None:
+            if randomize_noise:
+                noise = [None] * self.num_layers
+            else:
+                noise = [
+                    getattr(self.noises, f"noise_{i}") for i in range(self.num_layers)
+                ]
+
+        if truncation < 1:
+            style_t = []
+
+            for style in styles:
+                style_t.append(
+                    truncation_latent + truncation * (style - truncation_latent)
+                )
+
+            styles = style_t
+
+        if len(styles) < 2:
+            inject_index = self.n_latent
+
+            if styles[0].ndim < 3:
+                latent = styles[0].unsqueeze(1).repeat(1, inject_index, 1)
+
+            else:
+                latent = styles[0]
+
+        else:
+            if inject_index is None:
+                inject_index = random.randint(1, self.n_latent - 1)
+
+            latent = styles[0].unsqueeze(1).repeat(1, inject_index, 1)
+            latent2 = styles[1].unsqueeze(1).repeat(1, self.n_latent - inject_index, 1)
+
+            latent = torch.cat([latent, latent2], 1)
+
+        return latent, noise
+
+    def generate(
+        self,
+        latent,
+        noise,
+    ):
+        out = self.input(latent)
+        out = self.conv1(out, latent[:, 0], noise=noise[0])
+
+        skip = self.to_rgb1(out, latent[:, 1])
+        i = 1
+        for conv1, conv2, noise1, noise2, to_rgb in zip(
+            self.convs[::2], self.convs[1::2], noise[1::2], noise[2::2], self.to_rgbs
+        ):
+            out = conv1(out, latent[:, i], noise=noise1)
+            out = conv2(out, latent[:, i + 1], noise=noise2)
+            skip = to_rgb(out, latent[:, i + 2], skip)
+            if out.shape[-1] == 256: F = out
+            i += 2
+
+        image = skip
+        F = FF.interpolate(F, image.shape[-2:], mode='bilinear')
+        return image, F
+
+
+def stylegan2(
+    size=1024,
+    channel_multiplier=2,
+    latent=512,
+    n_mlp=8,
+    ckpt='stylegan2-ffhq-config-f.pt'
+):
+    g_ema = CustomGenerator(size, latent, n_mlp, channel_multiplier=channel_multiplier)
+    checkpoint = torch.load(get_path(ckpt))
+    g_ema.load_state_dict(checkpoint["g_ema"], strict=False)
+    g_ema.requires_grad_(False)
+    g_ema.eval()
+    return g_ema
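When `truncation < 1`, `prepare` moves each style vector toward `truncation_latent` using `truncation_latent + truncation * (style - truncation_latent)`. The sketch below works through that formula on plain lists. `truncate`, `w`, and `w_mean` are illustrative names, not part of the commit.

```python
def truncate(style, mean_style, psi):
    """Interpolate each component of style toward mean_style by factor psi."""
    return [m + psi * (s - m) for s, m in zip(style, mean_style)]


w = [2.0, -4.0]
w_mean = [0.0, 0.0]
half = truncate(w, w_mean, 0.5)       # halfway between the mean and w
unchanged = truncate(w, w_mean, 1.0)  # psi = 1 leaves the style as it is
```

With the default `truncation=1` the branch is skipped entirely. Note that the default `truncation_latent=None` cannot be used in this arithmetic, so a caller passing a value below 1 also has to supply the mean latent.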

+
+
+def bilinear_interpolate_torch(im, y, x):
+    """
+    im : B,C,H,W
+    y : 1,numPoints -- pixel location y float
+    x : 1,numPOints -- pixel location y float
+    """
+
+    x0 = torch.floor(x).long()
+    x1 = x0 + 1
+
+    y0 = torch.floor(y).long()
+    y1 = y0 + 1
+
+    wa = (x1.float() - x) * (y1.float() - y)
+    wb = (x1.float() - x) * (y - y0.float())
+    wc = (x - x0.float()) * (y1.float() - y)
+    wd = (x - x0.float()) * (y - y0.float())
+    # Instead of clamp
+    x1 = x1 - torch.floor(x1 / im.shape[3]).int()
+    y1 = y1 - torch.floor(y1 / im.shape[2]).int()
+    Ia = im[:, :, y0, x0]
+    Ib = im[:, :, y1, x0]
+    Ic = im[:, :, y0, x1]
+    Id = im[:, :, y1, x1]
+
+    return Ia * wa + Ib * wb + Ic * wc + Id * wd
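`bilinear_interpolate_torch` samples a feature map at a fractional position by weighting the four surrounding pixels by their overlap with the query point. The sketch below reproduces that weighting for a single-channel grid stored as a list of rows. `bilinear` is an illustrative name, and the explicit `min` clamp is an assumption standing in for the "Instead of clamp" index adjustment in the committed code.

```python
import math


def bilinear(grid, y, x):
    """Sample grid (a list of rows) at fractional (y, x), clamping the far neighbours."""
    h, w = len(grid), len(grid[0])
    y0, x0 = math.floor(y), math.floor(x)
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    fy, fx = y - y0, x - x0  # fractional offsets inside the cell
    top = grid[y0][x0] * (1 - fx) + grid[y0][x1] * fx
    bottom = grid[y1][x0] * (1 - fx) + grid[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


grid = [[0.0, 10.0],
        [20.0, 30.0]]
center = bilinear(grid, 0.5, 0.5)  # equal weight on all four corners
```

At integer coordinates the fractional offsets are zero, so the result is the pixel value itself. Like the committed function, this sketch does not handle negative coordinates.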

+
+
+def drag_gan(g_ema, latent: torch.Tensor, noise, F, handle_points, target_points, mask, max_iters=1000):
+    handle_points0 = copy.deepcopy(handle_points)
+    n = len(handle_points)
+    r1, r2, lam, d = 3, 12, 20, 1
+
+    def neighbor(x, y, d):
+        points = []
+        for i in range(x - d, x + d):
+            for j in range(y - d, y + d):
+                points.append(torch.tensor([i, j]).float().cuda())
+        return points
+
+    F0 = F.detach().clone()
+    # latent = latent.detach().clone().requires_grad_(True)
+    latent_trainable = latent[:, :6, :].detach().clone().requires_grad_(True)
+    latent_untrainable = latent[:, 6:, :].detach().clone().requires_grad_(False)
+    optimizer = torch.optim.Adam([latent_trainable], lr=2e-3)
+    for iter in range(max_iters):
+        for s in range(1):
+            optimizer.zero_grad()
+            latent = torch.cat([latent_trainable, latent_untrainable], dim=1)
+            sample2, F2 = g_ema.generate(latent, noise)
+
+            # motion supervision
+            loss = 0
+            for i in range(n):
+                pi, ti = handle_points[i], target_points[i]
+                di = (ti - pi) / torch.sum((ti - pi)**2)
+
+                for qi in neighbor(int(pi[0]), int(pi[1]), r1):
+                    # f1 = F[..., int(qi[0]), int(qi[1])]
+                    # f2 = F2[..., int(qi[0] + di[0]), int(qi[1] + di[1])]
+                    f1 = bilinear_interpolate_torch(F2, qi[0], qi[1]).detach()
+                    f2 = bilinear_interpolate_torch(F2, qi[0] + di[0], qi[1] + di[1])
+                    loss += FF.l1_loss(f2, f1)
+
+            # loss += ((F-F0) * (1-mask)).abs().mean() * lam
+
+            loss.backward()
+            optimizer.step()
+
+            print(latent_trainable[0, 0, :10])
+            # if s % 10 ==0:
+            # utils.save_image(sample2, "test2.png", normalize=True, range=(-1, 1))
+
+        # point tracking
+        with torch.no_grad():
+            sample2, F2 = g_ema.generate(latent, noise)
+            for i in range(n):
+                pi = handle_points0[i]
+                # f = F0[..., int(pi[0]), int(pi[1])]
+                f0 = bilinear_interpolate_torch(F0, pi[0], pi[1])
+                minv = 1e9
+                minx = 1e9
+                miny = 1e9
+                for qi in neighbor(int(handle_points[i][0]), int(handle_points[i][1]), r2):
+                    # f2 = F2[..., int(qi[0]), int(qi[1])]
+                    try:
+                        f2 = bilinear_interpolate_torch(F2, qi[0], qi[1])
+                    except:
+                        import ipdb
+                        ipdb.set_trace()
+                    v = torch.norm(f2 - f0, p=1)
+                    if v < minv:
+                        minv = v
+                        minx = int(qi[0])
+                        miny = int(qi[1])
+                handle_points[i][0] = minx
+                handle_points[i][1] = miny
+
+        F = F2.detach().clone()
+        if iter % 1 == 0:
+            print(iter, loss.item(), handle_points, target_points)
+            # p = handle_points[0].int()
+            # sample2[0, :, p[0] - 5:p[0] + 5, p[1] - 5:p[1] + 5] = sample2[0, :, p[0] - 5:p[0] + 5, p[1] - 5:p[1] + 5] * 0
+            # t = target_points[0].int()
+            # sample2[0, :, t[0] - 5:t[0] + 5, t[1] - 5:t[1] + 5] = sample2[0, :, t[0] - 5:t[0] + 5, t[1] - 5:t[1] + 5] * 255
+
+            # sample2[0, :, 210, 134] = sample2[0, :, 210, 134] * 0
+            utils.save_image(sample2, "test2.png", normalize=True, range=(-1, 1))
+
+        yield sample2, latent, F2
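The point-tracking step in `drag_gan` relocates each handle by searching a window around its current position for the feature vector closest, in L1 distance, to the handle's original feature. The sketch below shows that search on a small nested-list feature map. `neighbors`, `l1`, and `track` are illustrative names, and the bounds check is an added assumption. The window is half-open, matching `range(x - d, x + d)` in the committed `neighbor` helper, so it spans `2 * radius` positions per axis and excludes `x + d`.

```python
def neighbors(row, col, radius):
    """Half-open window: rows and columns from (centre - radius) up to (centre + radius - 1)."""
    return [(r, c)
            for r in range(row - radius, row + radius)
            for c in range(col - radius, col + radius)]


def l1(a, b):
    """L1 distance between two feature vectors."""
    return sum(abs(p - q) for p, q in zip(a, b))


def track(features, reference, row, col, radius):
    """Return the in-bounds neighbour whose feature vector is closest to reference."""
    h, w = len(features), len(features[0])
    best, best_dist = (row, col), float("inf")
    for r, c in neighbors(row, col, radius):
        if 0 <= r < h and 0 <= c < w:  # assumption: skip positions outside the map
            dist = l1(features[r][c], reference)
            if dist < best_dist:
                best, best_dist = (r, c), dist
    return best


# Toy 6x6 feature map whose feature at (r, c) is simply [r, c].
feature_map = [[[float(r), float(c)] for c in range(6)] for r in range(6)]
moved_to = track(feature_map, [3.0, 1.0], 2, 2, 2)
```

In this toy map the reference feature `[3.0, 1.0]` occurs exactly at position `(3, 1)`, which lies inside the window around `(2, 2)`, so the handle moves there.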

requirements.txt

ADDED

@@ -0,0 +1,4 @@
+torch
+torchvision
+gradio
+tqdm

stylegan2/_init__.py

ADDED

File without changes

stylegan2/model.py

ADDED

@@ -0,0 +1,699 @@
+import math
+import random
+import functools
+import operator
+
+import torch
+from torch import nn
+from torch.nn import functional as F
+from torch.autograd import Function
+
+from .op import FusedLeakyReLU, fused_leaky_relu, upfirdn2d, conv2d_gradfix
+
+
+class PixelNorm(nn.Module):
+    def __init__(self):
+        super().__init__()
+
+    def forward(self, input):
+        return input * torch.rsqrt(torch.mean(input ** 2, dim=1, keepdim=True) + 1e-8)
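`PixelNorm.forward` divides the input by the root of its mean squared value along dimension 1, with a small epsilon for numerical safety. The sketch below applies the same arithmetic to a single feature vector. `pixel_norm` is an illustrative pure-Python name, not part of the commit.

```python
import math


def pixel_norm(vector, eps=1e-8):
    """Divide a feature vector by the root of its mean squared value."""
    mean_sq = sum(v * v for v in vector) / len(vector)
    scale = 1.0 / math.sqrt(mean_sq + eps)
    return [v * scale for v in vector]


normed = pixel_norm([3.0, 4.0])
# After normalization the root mean square of the vector is approximately 1.
rms = math.sqrt(sum(v * v for v in normed) / len(normed))
```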

+
+
+def make_kernel(k):
+    k = torch.tensor(k, dtype=torch.float32)
+
+    if k.ndim == 1:
+        k = k[None, :] * k[:, None]
+
+    k /= k.sum()
+
+    return k
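For a 1-D input, `make_kernel` builds a separable 2-D filter as the outer product of the taps with themselves, then normalizes it to sum to 1. The sketch below does the same with nested lists, using the `[1, 3, 3, 1]` taps that appear in this file as the default `blur_kernel`. `make_kernel_2d` is an illustrative name, not part of the commit.

```python
def make_kernel_2d(taps):
    """Outer product of 1-D taps with itself, scaled so all entries sum to 1."""
    outer = [[a * b for b in taps] for a in taps]
    total = sum(sum(row) for row in outer)
    return [[v / total for v in row] for row in outer]


kernel = make_kernel_2d([1, 3, 3, 1])
```

The unnormalized outer product sums to 64 here, so the corner weights are 1/64 and the four central weights are 9/64.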

+
+
+class Upsample(nn.Module):
+    def __init__(self, kernel, factor=2):
+        super().__init__()
+
+        self.factor = factor
+        kernel = make_kernel(kernel) * (factor ** 2)
+        self.register_buffer("kernel", kernel)
+
+        p = kernel.shape[0] - factor
+
+        pad0 = (p + 1) // 2 + factor - 1
+        pad1 = p // 2
+
+        self.pad = (pad0, pad1)
+
+    def forward(self, input):
+        out = upfirdn2d(input, self.kernel, up=self.factor, down=1, pad=self.pad)
+
+        return out
+
+
+class Downsample(nn.Module):
+    def __init__(self, kernel, factor=2):
+        super().__init__()
+
+        self.factor = factor
+        kernel = make_kernel(kernel)
+        self.register_buffer("kernel", kernel)
+
+        p = kernel.shape[0] - factor
+
+        pad0 = (p + 1) // 2
+        pad1 = p // 2
+
+        self.pad = (pad0, pad1)
+
+    def forward(self, input):
+        out = upfirdn2d(input, self.kernel, up=1, down=self.factor, pad=self.pad)
+
+        return out
+
+
+class Blur(nn.Module):
+    def __init__(self, kernel, pad, upsample_factor=1):
+        super().__init__()
+
+        kernel = make_kernel(kernel)
+
+        if upsample_factor > 1:
+            kernel = kernel * (upsample_factor ** 2)
+
+        self.register_buffer("kernel", kernel)
+
+        self.pad = pad
+
+    def forward(self, input):
+        out = upfirdn2d(input, self.kernel, pad=self.pad)
+
+        return out
+
+
+class EqualConv2d(nn.Module):
+    def __init__(
+        self, in_channel, out_channel, kernel_size, stride=1, padding=0, bias=True
+    ):
+        super().__init__()
+
+        self.weight = nn.Parameter(
+            torch.randn(out_channel, in_channel, kernel_size, kernel_size)
+        )
+        self.scale = 1 / math.sqrt(in_channel * kernel_size ** 2)
+
+        self.stride = stride
+        self.padding = padding
+
+        if bias:
+            self.bias = nn.Parameter(torch.zeros(out_channel))
+
+        else:
+            self.bias = None
+
+    def forward(self, input):
+        out = conv2d_gradfix.conv2d(
+            input,
+            self.weight * self.scale,
+            bias=self.bias,
+            stride=self.stride,
+            padding=self.padding,
+        )
+
+        return out
+
+    def __repr__(self):
+        return (
+            f"{self.__class__.__name__}({self.weight.shape[1]}, {self.weight.shape[0]},"
+            f" {self.weight.shape[2]}, stride={self.stride}, padding={self.padding})"
+        )
+
+
+class EqualLinear(nn.Module):
+    def __init__(
+        self, in_dim, out_dim, bias=True, bias_init=0, lr_mul=1, activation=None
+    ):
+        super().__init__()
+
+        self.weight = nn.Parameter(torch.randn(out_dim, in_dim).div_(lr_mul))
+
+        if bias:
+            self.bias = nn.Parameter(torch.zeros(out_dim).fill_(bias_init))
+
+        else:
+            self.bias = None
+
+        self.activation = activation
+
+        self.scale = (1 / math.sqrt(in_dim)) * lr_mul
+        self.lr_mul = lr_mul
+
+    def forward(self, input):
+        if self.activation:
+            out = F.linear(input, self.weight * self.scale)
+            out = fused_leaky_relu(out, self.bias * self.lr_mul)
+
+        else:
+            out = F.linear(
+                input, self.weight * self.scale, bias=self.bias * self.lr_mul
+            )
+
+        return out
+
+    def __repr__(self):
+        return (
+            f"{self.__class__.__name__}({self.weight.shape[1]}, {self.weight.shape[0]})"
+        )
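`EqualLinear` stores unscaled weights and applies `scale = (1 / sqrt(in_dim)) * lr_mul` at call time, together with `bias * lr_mul`. The sketch below reproduces the non-activated branch of its `forward` with plain lists. `equal_linear` is an illustrative name, not part of the commit.

```python
import math


def equal_linear(x, weight, bias, lr_mul=1.0):
    """Compute (weight * scale) @ x + bias * lr_mul with scale = lr_mul / sqrt(in_dim)."""
    in_dim = len(x)
    scale = (1 / math.sqrt(in_dim)) * lr_mul
    return [
        sum(w * scale * v for w, v in zip(row, x)) + b * lr_mul
        for row, b in zip(weight, bias)
    ]


# One output unit, four inputs: scale = 1 / sqrt(4) = 0.5.
out = equal_linear([1.0, 1.0, 1.0, 1.0], [[2.0, 2.0, 2.0, 2.0]], [0.5])
```

Each of the four products is `2.0 * 0.5 * 1.0 = 1.0`, so the output is `4.0 + 0.5 = 4.5`.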

|

| 167 |

+

|

| 168 |

+

|

| 169 |

+

class ModulatedConv2d(nn.Module):

|

| 170 |

+

def __init__(

|

| 171 |

+

self,

|

| 172 |

+

in_channel,

|

| 173 |

+

out_channel,

|

| 174 |

+

kernel_size,

|

| 175 |

+

style_dim,

|

| 176 |

+

demodulate=True,

|

| 177 |

+

upsample=False,

|

| 178 |

+

downsample=False,

|

| 179 |

+

blur_kernel=[1, 3, 3, 1],

|

| 180 |

+

fused=True,

|

| 181 |

+

):

|

| 182 |

+

super().__init__()

|

| 183 |

+

|

| 184 |

+

self.eps = 1e-8

|

| 185 |

+

self.kernel_size = kernel_size

|

| 186 |

+

self.in_channel = in_channel

|

| 187 |

+

self.out_channel = out_channel

|

| 188 |

+

self.upsample = upsample

|

| 189 |

+

self.downsample = downsample

|

| 190 |

+

|

| 191 |

+

if upsample:

|

| 192 |

+

factor = 2

|

| 193 |

+

p = (len(blur_kernel) - factor) - (kernel_size - 1)

|

| 194 |

+

pad0 = (p + 1) // 2 + factor - 1

|

| 195 |

+

pad1 = p // 2 + 1

|

| 196 |

+

|

| 197 |

+

self.blur = Blur(blur_kernel, pad=(pad0, pad1), upsample_factor=factor)

|

| 198 |

+

|

| 199 |

+

if downsample:

|

| 200 |

+

factor = 2

|

| 201 |

+

p = (len(blur_kernel) - factor) + (kernel_size - 1)

|

| 202 |

+

pad0 = (p + 1) // 2

|

| 203 |

+

pad1 = p // 2

|

| 204 |

+

|

| 205 |

+

self.blur = Blur(blur_kernel, pad=(pad0, pad1))

|

| 206 |

+

|

| 207 |

+

fan_in = in_channel * kernel_size ** 2

|

| 208 |

+

self.scale = 1 / math.sqrt(fan_in)

|

| 209 |

+

self.padding = kernel_size // 2

|

| 210 |

+

|

| 211 |

+

self.weight = nn.Parameter(

|

| 212 |

+

torch.randn(1, out_channel, in_channel, kernel_size, kernel_size)

|

| 213 |

+

)

|

| 214 |

+

|

| 215 |

+

self.modulation = EqualLinear(style_dim, in_channel, bias_init=1)

|

| 216 |

+

|

| 217 |

+

self.demodulate = demodulate

|

| 218 |

+

self.fused = fused

    def __repr__(self):
        return (
            f"{self.__class__.__name__}({self.in_channel}, {self.out_channel}, {self.kernel_size}, "
            f"upsample={self.upsample}, downsample={self.downsample})"
        )

    def forward(self, input, style):
        batch, in_channel, height, width = input.shape

        if not self.fused:
            weight = self.scale * self.weight.squeeze(0)
            style = self.modulation(style)

            if self.demodulate:
                w = weight.unsqueeze(0) * style.view(batch, 1, in_channel, 1, 1)
                dcoefs = (w.square().sum((2, 3, 4)) + 1e-8).rsqrt()

            input = input * style.reshape(batch, in_channel, 1, 1)

            if self.upsample:
                weight = weight.transpose(0, 1)
                out = conv2d_gradfix.conv_transpose2d(
                    input, weight, padding=0, stride=2
                )
                out = self.blur(out)

            elif self.downsample:
                input = self.blur(input)
                out = conv2d_gradfix.conv2d(input, weight, padding=0, stride=2)

            else:
                out = conv2d_gradfix.conv2d(input, weight, padding=self.padding)

            if self.demodulate:
                out = out * dcoefs.view(batch, -1, 1, 1)

            return out

        style = self.modulation(style).view(batch, 1, in_channel, 1, 1)
        weight = self.scale * self.weight * style

        if self.demodulate:
            demod = torch.rsqrt(weight.pow(2).sum([2, 3, 4]) + 1e-8)
            weight = weight * demod.view(batch, self.out_channel, 1, 1, 1)

        weight = weight.view(
            batch * self.out_channel, in_channel, self.kernel_size, self.kernel_size
        )

        if self.upsample:
            input = input.view(1, batch * in_channel, height, width)
            weight = weight.view(
                batch, self.out_channel, in_channel, self.kernel_size, self.kernel_size
            )
            weight = weight.transpose(1, 2).reshape(
                batch * in_channel, self.out_channel, self.kernel_size, self.kernel_size
            )
            out = conv2d_gradfix.conv_transpose2d(
                input, weight, padding=0, stride=2, groups=batch
            )
            _, _, height, width = out.shape
            out = out.view(batch, self.out_channel, height, width)
            out = self.blur(out)

        elif self.downsample:
            input = self.blur(input)
            _, _, height, width = input.shape
            input = input.view(1, batch * in_channel, height, width)
            out = conv2d_gradfix.conv2d(
                input, weight, padding=0, stride=2, groups=batch
            )
            _, _, height, width = out.shape
            out = out.view(batch, self.out_channel, height, width)

        else:
            input = input.view(1, batch * in_channel, height, width)
            out = conv2d_gradfix.conv2d(
                input, weight, padding=self.padding, groups=batch
            )
            _, _, height, width = out.shape
            out = out.view(batch, self.out_channel, height, width)

        return out
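The two branches of `ModulatedConv2d.forward` above compute the same result in different orders: the non-fused branch scales the input by the style and rescales the output by `dcoefs`, while the fused branch folds both factors into the weights. The sketch below illustrates that equivalence for a single pixel and a 1x1 kernel, using plain Python lists instead of tensors. The function names are hypothetical, and the constant `self.scale` factor is omitted for brevity.

```python
import math


def demod_coefs(weight, style, eps=1e-8):
    # weight[o][i] is a 1x1 kernel; style[i] scales input channel i.
    # One coefficient per output channel: rsqrt of the squared norm
    # of the modulated weights.
    return [
        1.0 / math.sqrt(sum((w * s) ** 2 for w, s in zip(row, style)) + eps)
        for row in weight
    ]


def fused_path(x, weight, style):
    # Modulate and demodulate the weights first, then apply them.
    d = demod_coefs(weight, style)
    w_mod = [
        [w * s * d_o for w, s in zip(row, style)]
        for row, d_o in zip(weight, d)
    ]
    return [sum(w * xi for w, xi in zip(row, x)) for row in w_mod]


def unfused_path(x, weight, style):
    # Scale the input, apply the shared weights, then rescale the output.
    d = demod_coefs(weight, style)
    x_mod = [xi * s for xi, s in zip(x, style)]
    out = [sum(w * xi for w, xi in zip(row, x_mod)) for row in weight]
    return [o * d_o for o, d_o in zip(out, d)]


x = [0.5, -1.0, 2.0]                          # one pixel, three input channels
weight = [[1.0, 2.0, -1.0], [0.0, 0.5, 3.0]]  # two output channels
style = [1.5, 0.5, 2.0]                       # per-input-channel modulation

fused = fused_path(x, weight, style)
unfused = unfused_path(x, weight, style)
print(all(abs(a - b) < 1e-9 for a, b in zip(fused, unfused)))
```

One practical difference is that the non-fused branch keeps a single weight tensor shared across the batch, whereas the fused branch builds per-sample weights and relies on a grouped convolution (`groups=batch`).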


class NoiseInjection(nn.Module):
    def __init__(self):
        super().__init__()

        self.weight = nn.Parameter(torch.zeros(1))

    def forward(self, image, noise=None):
        if noise is None:
            batch, _, height, width = image.shape
            noise = image.new_empty(batch, 1, height, width).normal_()

        return image + self.weight * noise


class ConstantInput(nn.Module):
    def __init__(self, channel, size=4):
        super().__init__()

        self.input = nn.Parameter(torch.randn(1, channel, size, size))

    def forward(self, input):
        batch = input.shape[0]
        out = self.input.repeat(batch, 1, 1, 1)

        return out


class StyledConv(nn.Module):
    def __init__(
        self,
        in_channel,
        out_channel,
        kernel_size,
        style_dim,
        upsample=False,
        blur_kernel=[1, 3, 3, 1],
        demodulate=True,
    ):
        super().__init__()

        self.conv = ModulatedConv2d(
            in_channel,
            out_channel,
            kernel_size,
            style_dim,
            upsample=upsample,
            blur_kernel=blur_kernel,
            demodulate=demodulate,
        )

        self.noise = NoiseInjection()
        # self.bias = nn.Parameter(torch.zeros(1, out_channel, 1, 1))
        # self.activate = ScaledLeakyReLU(0.2)
        self.activate = FusedLeakyReLU(out_channel)

    def forward(self, input, style, noise=None):
        out = self.conv(input, style)
        out = self.noise(out, noise=noise)
        # out = out + self.bias
        out = self.activate(out)

        return out


class ToRGB(nn.Module):
    def __init__(self, in_channel, style_dim, upsample=True, blur_kernel=[1, 3, 3, 1]):
        super().__init__()

        if upsample:
            self.upsample = Upsample(blur_kernel)

        self.conv = ModulatedConv2d(in_channel, 3, 1, style_dim, demodulate=False)
        self.bias = nn.Parameter(torch.zeros(1, 3, 1, 1))

    def forward(self, input, style, skip=None):
        out = self.conv(input, style)
        out = out + self.bias

        if skip is not None:
            skip = self.upsample(skip)

            out = out + skip

        return out


class Generator(nn.Module):
    def __init__(
        self,
        size,
        style_dim,
        n_mlp,
        channel_multiplier=2,
        blur_kernel=[1, 3, 3, 1],
        lr_mlp=0.01,
    ):
        super().__init__()

        self.size = size

        self.style_dim = style_dim

        layers = [PixelNorm()]

        for i in range(n_mlp):
            layers.append(
                EqualLinear(
                    style_dim, style_dim, lr_mul=lr_mlp, activation="fused_lrelu"
                )
            )

        self.style = nn.Sequential(*layers)

        self.channels = {
            4: 512,
            8: 512,
            16: 512,
            32: 512,
            64: 256 * channel_multiplier,
            128: 128 * channel_multiplier,
            256: 64 * channel_multiplier,
            512: 32 * channel_multiplier,
            1024: 16 * channel_multiplier,
        }

        self.input = ConstantInput(self.channels[4])
        self.conv1 = StyledConv(
            self.channels[4], self.channels[4], 3, style_dim, blur_kernel=blur_kernel
        )
        self.to_rgb1 = ToRGB(self.channels[4], style_dim, upsample=False)

        self.log_size = int(math.log(size, 2))
        self.num_layers = (self.log_size - 2) * 2 + 1

        self.convs = nn.ModuleList()
        self.upsamples = nn.ModuleList()
        self.to_rgbs = nn.ModuleList()
        self.noises = nn.Module()

        in_channel = self.channels[4]

        for layer_idx in range(self.num_layers):
            res = (layer_idx + 5) // 2
            shape = [1, 1, 2 ** res, 2 ** res]
            self.noises.register_buffer(f"noise_{layer_idx}", torch.randn(*shape))

        for i in range(3, self.log_size + 1):
            out_channel = self.channels[2 ** i]

            self.convs.append(
                StyledConv(
                    in_channel,
                    out_channel,
                    3,
                    style_dim,
                    upsample=True,
                    blur_kernel=blur_kernel,
                )
            )

            self.convs.append(
                StyledConv(
                    out_channel, out_channel, 3, style_dim, blur_kernel=blur_kernel
                )
            )

            self.to_rgbs.append(ToRGB(out_channel, style_dim))

            in_channel = out_channel

        self.n_latent = self.log_size * 2 - 2
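The layer bookkeeping in the constructor above (`log_size`, `num_layers`, `n_latent`, and the per-layer noise resolution) is plain integer arithmetic and can be reproduced without PyTorch. The sketch below is illustrative only: `generator_layout` is a hypothetical helper, and it uses `math.log2` in place of the original `math.log(size, 2)`, assuming `size` is a power of two.

```python
import math


def generator_layout(size):
    """Reproduce the constructor's layer counts for a power-of-two image size."""
    log_size = int(math.log2(size))
    num_layers = (log_size - 2) * 2 + 1
    n_latent = log_size * 2 - 2
    # Same rule as the noise-buffer loop: layer 0 runs at 4x4, and each
    # later resolution is used by two consecutive layers.
    noise_sizes = [2 ** ((layer_idx + 5) // 2) for layer_idx in range(num_layers)]
    return {
        "log_size": log_size,
        "num_layers": num_layers,
        "n_latent": n_latent,
        "noise_sizes": noise_sizes,
    }


layout = generator_layout(1024)
print(layout["log_size"], layout["num_layers"], layout["n_latent"])
print(layout["noise_sizes"])
```

For a 1024-pixel generator this gives 17 noise-consuming layers (one at 4x4 plus two per resolution from 8x8 up to 1024x1024) and 18 latent slots.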


    def make_noise(self):
        device = self.input.input.device

        noises = [torch.randn(1, 1, 2 ** 2, 2 ** 2, device=device)]

        for i in range(3, self.log_size + 1):
            for _ in range(2):
                noises.append(torch.randn(1, 1, 2 ** i, 2 ** i, device=device))

        return noises

    def mean_latent(self, n_latent):
        latent_in = torch.randn(
            n_latent, self.style_dim, device=self.input.input.device
        )
        latent = self.style(latent_in).mean(0, keepdim=True)

        return latent

    def get_latent(self, input):
        return self.style(input)


    def forward(
        self,
        styles,
        return_latents=False,
        inject_index=None,
        truncation=1,
        truncation_latent=None,
        input_is_latent=False,
        noise=None,
        randomize_noise=True,
    ):
        if not input_is_latent:
            styles = [self.style(s) for s in styles]

        if noise is None:
            if randomize_noise:
                noise = [None] * self.num_layers
            else:
                noise = [
                    getattr(self.noises, f"noise_{i}") for i in range(self.num_layers)
                ]

        if truncation < 1:
            style_t = []

|

| 523 |

+