Spaces:

Build error

Build error

Add application file

Browse files- LICENSE +25 -0

- README.md +138 -13

- app.py +34 -0

- callbacks.py +360 -0

- configs/pretrain_language_model.yaml +45 -0

- configs/pretrain_vision_model.yaml +58 -0

- configs/pretrain_vision_model_sv.yaml +58 -0

- configs/template.yaml +67 -0

- configs/train_abinet.yaml +71 -0

- configs/train_abinet_sv.yaml +71 -0

- configs/train_abinet_wo_iter.yaml +68 -0

- data/charset_36.txt +36 -0

- data/charset_62.txt +62 -0

- dataset.py +278 -0

- demo.py +109 -0

- docker/Dockerfile +25 -0

- figs/cases.png +0 -0

- figs/framework.png +0 -0

- figs/test/CANDY.png +0 -0

- figs/test/ESPLANADE.png +0 -0

- figs/test/GLOBE.png +0 -0

- figs/test/KAPPA.png +0 -0

- figs/test/MANDARIN.png +0 -0

- figs/test/MEETS.png +0 -0

- figs/test/MONTHLY.png +0 -0

- figs/test/RESTROOM.png +0 -0

- losses.py +72 -0

- main.py +246 -0

- modules/__init__.py +0 -0

- modules/attention.py +97 -0

- modules/backbone.py +36 -0

- modules/model.py +50 -0

- modules/model_abinet.py +30 -0

- modules/model_abinet_iter.py +34 -0

- modules/model_alignment.py +34 -0

- modules/model_language.py +67 -0

- modules/model_vision.py +47 -0

- modules/resnet.py +104 -0

- modules/transformer.py +901 -0

- notebooks/dataset-text.ipynb +159 -0

- notebooks/dataset.ipynb +298 -0

- notebooks/prepare_wikitext103.ipynb +468 -0

- notebooks/transforms.ipynb +0 -0

- requirements.txt +9 -0

- tools/create_lmdb_dataset.py +87 -0

- tools/crop_by_word_bb_syn90k.py +153 -0

- transforms.py +329 -0

- utils.py +304 -0

LICENSE

ADDED

|

@@ -0,0 +1,25 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

ABINet for non-commercial purposes

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2021, USTC

|

| 4 |

+

All rights reserved.

|

| 5 |

+

|

| 6 |

+

Redistribution and use in source and binary forms, with or without

|

| 7 |

+

modification, are permitted provided that the following conditions are met:

|

| 8 |

+

|

| 9 |

+

1. Redistributions of source code must retain the above copyright notice, this

|

| 10 |

+

list of conditions and the following disclaimer.

|

| 11 |

+

|

| 12 |

+

2. Redistributions in binary form must reproduce the above copyright notice,

|

| 13 |

+

this list of conditions and the following disclaimer in the documentation

|

| 14 |

+

and/or other materials provided with the distribution.

|

| 15 |

+

|

| 16 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

| 17 |

+

AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

| 18 |

+

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

|

| 19 |

+

DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

|

| 20 |

+

FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

|

| 21 |

+

DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

|

| 22 |

+

SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

|

| 23 |

+

CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

|

| 24 |

+

OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

|

| 25 |

+

OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

README.md

CHANGED

|

@@ -1,13 +1,138 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Read Like Humans: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Recognition

|

| 2 |

+

|

| 3 |

+

The official code of [ABINet](https://arxiv.org/pdf/2103.06495.pdf) (CVPR 2021, Oral).

|

| 4 |

+

|

| 5 |

+

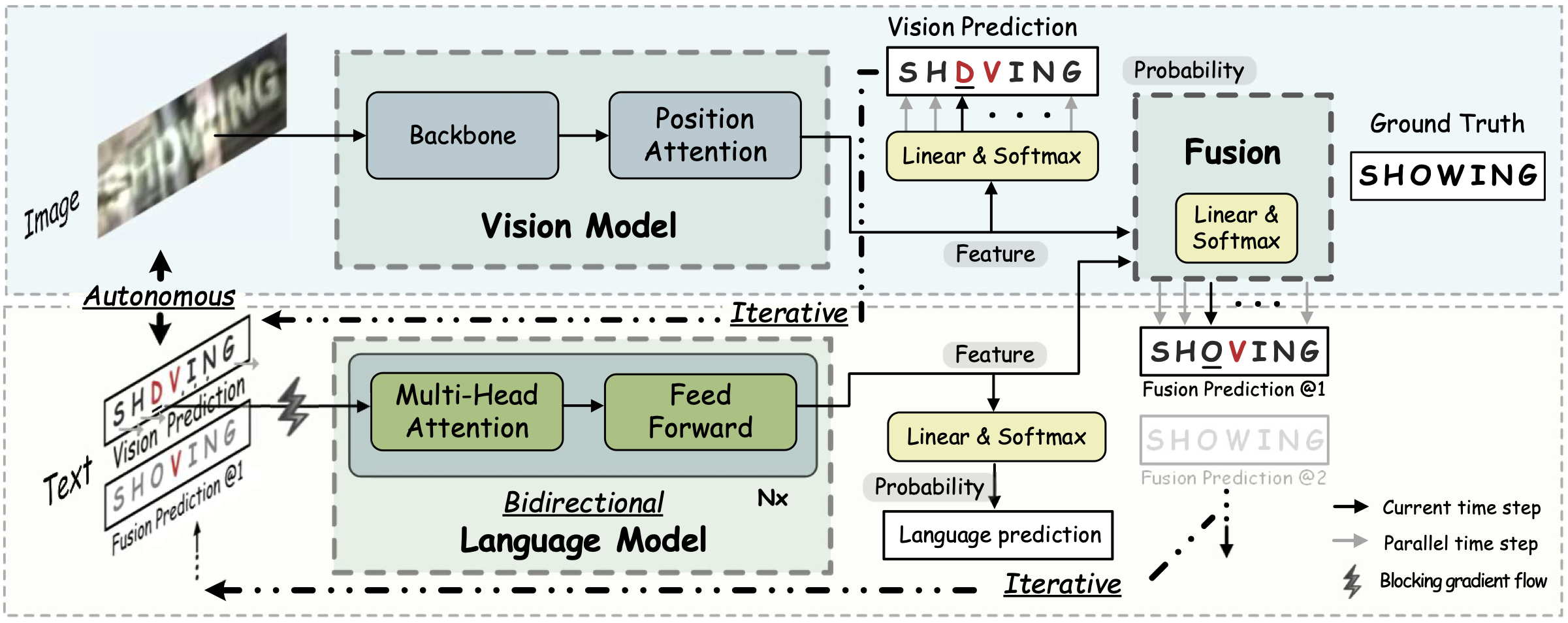

ABINet uses a vision model and an explicit language model to recognize text in the wild, which are trained in end-to-end way. The language model (BCN) achieves bidirectional language representation in simulating cloze test, additionally utilizing iterative correction strategy.

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

## Runtime Environment

|

| 10 |

+

|

| 11 |

+

- We provide a pre-built docker image using the Dockerfile from `docker/Dockerfile`

|

| 12 |

+

|

| 13 |

+

- Running in Docker

|

| 14 |

+

```

|

| 15 |

+

$ git@github.com:FangShancheng/ABINet.git

|

| 16 |

+

$ docker run --gpus all --rm -ti --ipc=host -v $(pwd)/ABINet:/app fangshancheng/fastai:torch1.1 /bin/bash

|

| 17 |

+

```

|

| 18 |

+

- (Untested) Or using the dependencies

|

| 19 |

+

```

|

| 20 |

+

pip install -r requirements.txt

|

| 21 |

+

```

|

| 22 |

+

|

| 23 |

+

## Datasets

|

| 24 |

+

|

| 25 |

+

- Training datasets

|

| 26 |

+

|

| 27 |

+

1. [MJSynth](http://www.robots.ox.ac.uk/~vgg/data/text/) (MJ):

|

| 28 |

+

- Use `tools/create_lmdb_dataset.py` to convert images into LMDB dataset

|

| 29 |

+

- [LMDB dataset BaiduNetdisk(passwd:n23k)](https://pan.baidu.com/s/1mgnTiyoR8f6Cm655rFI4HQ)

|

| 30 |

+

2. [SynthText](http://www.robots.ox.ac.uk/~vgg/data/scenetext/) (ST):

|

| 31 |

+

- Use `tools/crop_by_word_bb.py` to crop images from original [SynthText](http://www.robots.ox.ac.uk/~vgg/data/scenetext/) dataset, and convert images into LMDB dataset by `tools/create_lmdb_dataset.py`

|

| 32 |

+

- [LMDB dataset BaiduNetdisk(passwd:n23k)](https://pan.baidu.com/s/1mgnTiyoR8f6Cm655rFI4HQ)

|

| 33 |

+

3. [WikiText103](https://s3.amazonaws.com/research.metamind.io/wikitext/wikitext-103-v1.zip), which is only used for pre-trainig language models:

|

| 34 |

+

- Use `notebooks/prepare_wikitext103.ipynb` to convert text into CSV format.

|

| 35 |

+

- [CSV dataset BaiduNetdisk(passwd:dk01)](https://pan.baidu.com/s/1yabtnPYDKqhBb_Ie9PGFXA)

|

| 36 |

+

|

| 37 |

+

- Evaluation datasets, LMDB datasets can be downloaded from [BaiduNetdisk(passwd:1dbv)](https://pan.baidu.com/s/1RUg3Akwp7n8kZYJ55rU5LQ), [GoogleDrive](https://drive.google.com/file/d/1dTI0ipu14Q1uuK4s4z32DqbqF3dJPdkk/view?usp=sharing).

|

| 38 |

+

1. ICDAR 2013 (IC13)

|

| 39 |

+

2. ICDAR 2015 (IC15)

|

| 40 |

+

3. IIIT5K Words (IIIT)

|

| 41 |

+

4. Street View Text (SVT)

|

| 42 |

+

5. Street View Text-Perspective (SVTP)

|

| 43 |

+

6. CUTE80 (CUTE)

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

- The structure of `data` directory is

|

| 47 |

+

```

|

| 48 |

+

data

|

| 49 |

+

├── charset_36.txt

|

| 50 |

+

├── evaluation

|

| 51 |

+

│ ├── CUTE80

|

| 52 |

+

│ ├── IC13_857

|

| 53 |

+

│ ├── IC15_1811

|

| 54 |

+

│ ├── IIIT5k_3000

|

| 55 |

+

│ ├── SVT

|

| 56 |

+

│ └── SVTP

|

| 57 |

+

├── training

|

| 58 |

+

│ ├── MJ

|

| 59 |

+

│ │ ├── MJ_test

|

| 60 |

+

│ │ ├── MJ_train

|

| 61 |

+

│ │ └── MJ_valid

|

| 62 |

+

│ └── ST

|

| 63 |

+

├── WikiText-103.csv

|

| 64 |

+

└── WikiText-103_eval_d1.csv

|

| 65 |

+

```

|

| 66 |

+

|

| 67 |

+

### Pretrained Models

|

| 68 |

+

|

| 69 |

+

Get the pretrained models from [BaiduNetdisk(passwd:kwck)](https://pan.baidu.com/s/1b3vyvPwvh_75FkPlp87czQ), [GoogleDrive](https://drive.google.com/file/d/1mYM_26qHUom_5NU7iutHneB_KHlLjL5y/view?usp=sharing). Performances of the pretrained models are summaried as follows:

|

| 70 |

+

|

| 71 |

+

|Model|IC13|SVT|IIIT|IC15|SVTP|CUTE|AVG|

|

| 72 |

+

|-|-|-|-|-|-|-|-|

|

| 73 |

+

|ABINet-SV|97.1|92.7|95.2|84.0|86.7|88.5|91.4|

|

| 74 |

+

|ABINet-LV|97.0|93.4|96.4|85.9|89.5|89.2|92.7|

|

| 75 |

+

|

| 76 |

+

## Training

|

| 77 |

+

|

| 78 |

+

1. Pre-train vision model

|

| 79 |

+

```

|

| 80 |

+

CUDA_VISIBLE_DEVICES=0,1,2,3 python main.py --config=configs/pretrain_vision_model.yaml

|

| 81 |

+

```

|

| 82 |

+

2. Pre-train language model

|

| 83 |

+

```

|

| 84 |

+

CUDA_VISIBLE_DEVICES=0,1,2,3 python main.py --config=configs/pretrain_language_model.yaml

|

| 85 |

+

```

|

| 86 |

+

3. Train ABINet

|

| 87 |

+

```

|

| 88 |

+

CUDA_VISIBLE_DEVICES=0,1,2,3 python main.py --config=configs/train_abinet.yaml

|

| 89 |

+

```

|

| 90 |

+

Note:

|

| 91 |

+

- You can set the `checkpoint` path for vision and language models separately for specific pretrained model, or set to `None` to train from scratch

|

| 92 |

+

|

| 93 |

+

|

| 94 |

+

## Evaluation

|

| 95 |

+

|

| 96 |

+

```

|

| 97 |

+

CUDA_VISIBLE_DEVICES=0 python main.py --config=configs/train_abinet.yaml --phase test --image_only

|

| 98 |

+

```

|

| 99 |

+

Additional flags:

|

| 100 |

+

- `--checkpoint /path/to/checkpoint` set the path of evaluation model

|

| 101 |

+

- `--test_root /path/to/dataset` set the path of evaluation dataset

|

| 102 |

+

- `--model_eval [alignment|vision]` which sub-model to evaluate

|

| 103 |

+

- `--image_only` disable dumping visualization of attention masks

|

| 104 |

+

|

| 105 |

+

## Run Demo

|

| 106 |

+

|

| 107 |

+

```

|

| 108 |

+

python demo.py --config=configs/train_abinet.yaml --input=figs/test

|

| 109 |

+

```

|

| 110 |

+

Additional flags:

|

| 111 |

+

- `--config /path/to/config` set the path of configuration file

|

| 112 |

+

- `--input /path/to/image-directory` set the path of image directory or wildcard path, e.g, `--input='figs/test/*.png'`

|

| 113 |

+

- `--checkpoint /path/to/checkpoint` set the path of trained model

|

| 114 |

+

- `--cuda [-1|0|1|2|3...]` set the cuda id, by default -1 is set and stands for cpu

|

| 115 |

+

- `--model_eval [alignment|vision]` which sub-model to use

|

| 116 |

+

- `--image_only` disable dumping visualization of attention masks

|

| 117 |

+

|

| 118 |

+

## Visualization

|

| 119 |

+

Successful and failure cases on low-quality images:

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

|

| 123 |

+

## Citation

|

| 124 |

+

If you find our method useful for your reserach, please cite

|

| 125 |

+

```bash

|

| 126 |

+

@article{fang2021read,

|

| 127 |

+

title={Read Like Humans: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Recognition},

|

| 128 |

+

author={Fang, Shancheng and Xie, Hongtao and Wang, Yuxin and Mao, Zhendong and Zhang, Yongdong},

|

| 129 |

+

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

|

| 130 |

+

year={2021}

|

| 131 |

+

}

|

| 132 |

+

```

|

| 133 |

+

|

| 134 |

+

## License

|

| 135 |

+

|

| 136 |

+

This project is only free for academic research purposes, licensed under the 2-clause BSD License - see the LICENSE file for details.

|

| 137 |

+

|

| 138 |

+

Feel free to contact fangsc@ustc.edu.cn if you have any questions.

|

app.py

ADDED

|

@@ -0,0 +1,34 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import gdown

|

| 3 |

+

gdown.download(id='1mYM_26qHUom_5NU7iutHneB_KHlLjL5y', output='workdir.zip')

|

| 4 |

+

os.system('unzip workdir.zip')

|

| 5 |

+

|

| 6 |

+

import glob

|

| 7 |

+

import gradio as gr

|

| 8 |

+

from demo import get_model, preprocess, postprocess, load

|

| 9 |

+

from utils import Config, Logger, CharsetMapper

|

| 10 |

+

|

| 11 |

+

def process_image(image):

|

| 12 |

+

config = Config('configs/train_abinet.yaml')

|

| 13 |

+

config.model_vision_checkpoint = None

|

| 14 |

+

model = get_model(config)

|

| 15 |

+

model = load(model, 'workdir/train-abinet/best-train-abinet.pth')

|

| 16 |

+

charset = CharsetMapper(filename=config.dataset_charset_path, max_length=config.dataset_max_length + 1)

|

| 17 |

+

|

| 18 |

+

img = image.convert('RGB')

|

| 19 |

+

img = preprocess(img, config.dataset_image_width, config.dataset_image_height)

|

| 20 |

+

res = model(img)

|

| 21 |

+

return postprocess(res, charset, 'alignment')[0][0]

|

| 22 |

+

|

| 23 |

+

title = "Interactive demo: ABINet"

|

| 24 |

+

description = "Demo for ABINet, ABINet uses a vision model and an explicit language model to recognize text in the wild, which are trained in end-to-end way. The language model (BCN) achieves bidirectional language representation in simulating cloze test, additionally utilizing iterative correction strategy. To use it, simply upload a (single-text line) image or use one of the example images below and click 'submit'. Results will show up in a few seconds."

|

| 25 |

+

article = "<p style='text-align: center'><a href='https://arxiv.org/pdf/2103.06495.pdf'>Read Like Humans: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Recognition</a> | <a href='https://github.com/FangShancheng/ABINet'>Github Repo</a></p>"

|

| 26 |

+

|

| 27 |

+

iface = gr.Interface(fn=process_image,

|

| 28 |

+

inputs=gr.inputs.Image(type="pil"),

|

| 29 |

+

outputs=gr.outputs.Textbox(),

|

| 30 |

+

title=title,

|

| 31 |

+

description=description,

|

| 32 |

+

article=article,

|

| 33 |

+

examples=glob.glob('figs/test/*.png'))

|

| 34 |

+

iface.launch(debug=True)

|

callbacks.py

ADDED

|

@@ -0,0 +1,360 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import logging

|

| 2 |

+

import shutil

|

| 3 |

+

import time

|

| 4 |

+

|

| 5 |

+

import editdistance as ed

|

| 6 |

+

import torchvision.utils as vutils

|

| 7 |

+

from fastai.callbacks.tensorboard import (LearnerTensorboardWriter,

|

| 8 |

+

SummaryWriter, TBWriteRequest,

|

| 9 |

+

asyncTBWriter)

|

| 10 |

+

from fastai.vision import *

|

| 11 |

+

from torch.nn.parallel import DistributedDataParallel

|

| 12 |

+

from torchvision import transforms

|

| 13 |

+

|

| 14 |

+

import dataset

|

| 15 |

+

from utils import CharsetMapper, Timer, blend_mask

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

class IterationCallback(LearnerTensorboardWriter):

|

| 19 |

+

"A `TrackerCallback` that monitor in each iteration."

|

| 20 |

+

def __init__(self, learn:Learner, name:str='model', checpoint_keep_num=5,

|

| 21 |

+

show_iters:int=50, eval_iters:int=1000, save_iters:int=20000,

|

| 22 |

+

start_iters:int=0, stats_iters=20000):

|

| 23 |

+

#if self.learn.rank is not None: time.sleep(self.learn.rank) # keep all event files

|

| 24 |

+

super().__init__(learn, base_dir='.', name=learn.path, loss_iters=show_iters,

|

| 25 |

+

stats_iters=stats_iters, hist_iters=stats_iters)

|

| 26 |

+

self.name, self.bestname = Path(name).name, f'best-{Path(name).name}'

|

| 27 |

+

self.show_iters = show_iters

|

| 28 |

+

self.eval_iters = eval_iters

|

| 29 |

+

self.save_iters = save_iters

|

| 30 |

+

self.start_iters = start_iters

|

| 31 |

+

self.checpoint_keep_num = checpoint_keep_num

|

| 32 |

+

self.metrics_root = 'metrics/' # rewrite

|

| 33 |

+

self.timer = Timer()

|

| 34 |

+

self.host = self.learn.rank is None or self.learn.rank == 0

|

| 35 |

+

|

| 36 |

+

def _write_metrics(self, iteration:int, names:List[str], last_metrics:MetricsList)->None:

|

| 37 |

+

"Writes training metrics to Tensorboard."

|

| 38 |

+

for i, name in enumerate(names):

|

| 39 |

+

if last_metrics is None or len(last_metrics) < i+1: return

|

| 40 |

+

scalar_value = last_metrics[i]

|

| 41 |

+

self._write_scalar(name=name, scalar_value=scalar_value, iteration=iteration)

|

| 42 |

+

|

| 43 |

+

def _write_sub_loss(self, iteration:int, last_losses:dict)->None:

|

| 44 |

+

"Writes sub loss to Tensorboard."

|

| 45 |

+

for name, loss in last_losses.items():

|

| 46 |

+

scalar_value = to_np(loss)

|

| 47 |

+

tag = self.metrics_root + name

|

| 48 |

+

self.tbwriter.add_scalar(tag=tag, scalar_value=scalar_value, global_step=iteration)

|

| 49 |

+

|

| 50 |

+

def _save(self, name):

|

| 51 |

+

if isinstance(self.learn.model, DistributedDataParallel):

|

| 52 |

+

tmp = self.learn.model

|

| 53 |

+

self.learn.model = self.learn.model.module

|

| 54 |

+

self.learn.save(name)

|

| 55 |

+

self.learn.model = tmp

|

| 56 |

+

else: self.learn.save(name)

|

| 57 |

+

|

| 58 |

+

def _validate(self, dl=None, callbacks=None, metrics=None, keeped_items=False):

|

| 59 |

+

"Validate on `dl` with potential `callbacks` and `metrics`."

|

| 60 |

+

dl = ifnone(dl, self.learn.data.valid_dl)

|

| 61 |

+

metrics = ifnone(metrics, self.learn.metrics)

|

| 62 |

+

cb_handler = CallbackHandler(ifnone(callbacks, []), metrics)

|

| 63 |

+

cb_handler.on_train_begin(1, None, metrics); cb_handler.on_epoch_begin()

|

| 64 |

+

if keeped_items: cb_handler.state_dict.update(dict(keeped_items=[]))

|

| 65 |

+

val_metrics = validate(self.learn.model, dl, self.loss_func, cb_handler)

|

| 66 |

+

cb_handler.on_epoch_end(val_metrics)

|

| 67 |

+

if keeped_items: return cb_handler.state_dict['keeped_items']

|

| 68 |

+

else: return cb_handler.state_dict['last_metrics']

|

| 69 |

+

|

| 70 |

+

def jump_to_epoch_iter(self, epoch:int, iteration:int)->None:

|

| 71 |

+

try:

|

| 72 |

+

self.learn.load(f'{self.name}_{epoch}_{iteration}', purge=False)

|

| 73 |

+

logging.info(f'Loaded {self.name}_{epoch}_{iteration}')

|

| 74 |

+

except: logging.info(f'Model {self.name}_{epoch}_{iteration} not found.')

|

| 75 |

+

|

| 76 |

+

def on_train_begin(self, n_epochs, **kwargs):

|

| 77 |

+

# TODO: can not write graph here

|

| 78 |

+

# super().on_train_begin(**kwargs)

|

| 79 |

+

self.best = -float('inf')

|

| 80 |

+

self.timer.tic()

|

| 81 |

+

if self.host:

|

| 82 |

+

checkpoint_path = self.learn.path/'checkpoint.yaml'

|

| 83 |

+

if checkpoint_path.exists():

|

| 84 |

+

os.remove(checkpoint_path)

|

| 85 |

+

open(checkpoint_path, 'w').close()

|

| 86 |

+

return {'skip_validate': True, 'iteration':self.start_iters} # disable default validate

|

| 87 |

+

|

| 88 |

+

def on_batch_begin(self, **kwargs:Any)->None:

|

| 89 |

+

self.timer.toc_data()

|

| 90 |

+

super().on_batch_begin(**kwargs)

|

| 91 |

+

|

| 92 |

+

def on_batch_end(self, iteration, epoch, last_loss, smooth_loss, train, **kwargs):

|

| 93 |

+

super().on_batch_end(last_loss, iteration, train, **kwargs)

|

| 94 |

+

if iteration == 0: return

|

| 95 |

+

|

| 96 |

+

if iteration % self.loss_iters == 0:

|

| 97 |

+

last_losses = self.learn.loss_func.last_losses

|

| 98 |

+

self._write_sub_loss(iteration=iteration, last_losses=last_losses)

|

| 99 |

+

self.tbwriter.add_scalar(tag=self.metrics_root + 'lr',

|

| 100 |

+

scalar_value=self.opt.lr, global_step=iteration)

|

| 101 |

+

|

| 102 |

+

if iteration % self.show_iters == 0:

|

| 103 |

+

log_str = f'epoch {epoch} iter {iteration}: loss = {last_loss:6.4f}, ' \

|

| 104 |

+

f'smooth loss = {smooth_loss:6.4f}'

|

| 105 |

+

logging.info(log_str)

|

| 106 |

+

# log_str = f'data time = {self.timer.data_diff:.4f}s, runing time = {self.timer.running_diff:.4f}s'

|

| 107 |

+

# logging.info(log_str)

|

| 108 |

+

|

| 109 |

+

if iteration % self.eval_iters == 0:

|

| 110 |

+

# TODO: or remove time to on_epoch_end

|

| 111 |

+

# 1. Record time

|

| 112 |

+

log_str = f'average data time = {self.timer.average_data_time():.4f}s, ' \

|

| 113 |

+

f'average running time = {self.timer.average_running_time():.4f}s'

|

| 114 |

+

logging.info(log_str)

|

| 115 |

+

|

| 116 |

+

# 2. Call validate

|

| 117 |

+

last_metrics = self._validate()

|

| 118 |

+

self.learn.model.train()

|

| 119 |

+

log_str = f'epoch {epoch} iter {iteration}: eval loss = {last_metrics[0]:6.4f}, ' \

|

| 120 |

+

f'ccr = {last_metrics[1]:6.4f}, cwr = {last_metrics[2]:6.4f}, ' \

|

| 121 |

+

f'ted = {last_metrics[3]:6.4f}, ned = {last_metrics[4]:6.4f}, ' \

|

| 122 |

+

f'ted/w = {last_metrics[5]:6.4f}, '

|

| 123 |

+

logging.info(log_str)

|

| 124 |

+

names = ['eval_loss', 'ccr', 'cwr', 'ted', 'ned', 'ted/w']

|

| 125 |

+

self._write_metrics(iteration, names, last_metrics)

|

| 126 |

+

|

| 127 |

+

# 3. Save best model

|

| 128 |

+

current = last_metrics[2]

|

| 129 |

+

if current is not None and current > self.best:

|

| 130 |

+

logging.info(f'Better model found at epoch {epoch}, '\

|

| 131 |

+

f'iter {iteration} with accuracy value: {current:6.4f}.')

|

| 132 |

+

self.best = current

|

| 133 |

+

self._save(f'{self.bestname}')

|

| 134 |

+

|

| 135 |

+

if iteration % self.save_iters == 0 and self.host:

|

| 136 |

+

logging.info(f'Save model {self.name}_{epoch}_{iteration}')

|

| 137 |

+

filename = f'{self.name}_{epoch}_{iteration}'

|

| 138 |

+

self._save(filename)

|

| 139 |

+

|

| 140 |

+

checkpoint_path = self.learn.path/'checkpoint.yaml'

|

| 141 |

+

if not checkpoint_path.exists():

|

| 142 |

+

open(checkpoint_path, 'w').close()

|

| 143 |

+

with open(checkpoint_path, 'r') as file:

|

| 144 |

+

checkpoints = yaml.load(file, Loader=yaml.FullLoader) or dict()

|

| 145 |

+

checkpoints['all_checkpoints'] = (

|

| 146 |

+

checkpoints.get('all_checkpoints') or list())

|

| 147 |

+

checkpoints['all_checkpoints'].insert(0, filename)

|

| 148 |

+

if len(checkpoints['all_checkpoints']) > self.checpoint_keep_num:

|

| 149 |

+

removed_checkpoint = checkpoints['all_checkpoints'].pop()

|

| 150 |

+

removed_checkpoint = self.learn.path/self.learn.model_dir/f'{removed_checkpoint}.pth'

|

| 151 |

+

os.remove(removed_checkpoint)

|

| 152 |

+

checkpoints['current_checkpoint'] = filename

|

| 153 |

+

with open(checkpoint_path, 'w') as file:

|

| 154 |

+

yaml.dump(checkpoints, file)

|

| 155 |

+

|

| 156 |

+

|

| 157 |

+

self.timer.toc_running()

|

| 158 |

+

|

| 159 |

+

def on_train_end(self, **kwargs):

|

| 160 |

+

#self.learn.load(f'{self.bestname}', purge=False)

|

| 161 |

+

pass

|

| 162 |

+

|

| 163 |

+

def on_epoch_end(self, last_metrics:MetricsList, iteration:int, **kwargs)->None:

|

| 164 |

+

self._write_embedding(iteration=iteration)

|

| 165 |

+

|

| 166 |

+

|

| 167 |

+

class TextAccuracy(Callback):

|

| 168 |

+

_names = ['ccr', 'cwr', 'ted', 'ned', 'ted/w']

|

| 169 |

+

def __init__(self, charset_path, max_length, case_sensitive, model_eval):

|

| 170 |

+

self.charset_path = charset_path

|

| 171 |

+

self.max_length = max_length

|

| 172 |

+

self.case_sensitive = case_sensitive

|

| 173 |

+

self.charset = CharsetMapper(charset_path, self.max_length)

|

| 174 |

+

self.names = self._names

|

| 175 |

+

|

| 176 |

+

self.model_eval = model_eval or 'alignment'

|

| 177 |

+

assert self.model_eval in ['vision', 'language', 'alignment']

|

| 178 |

+

|

| 179 |

+

def on_epoch_begin(self, **kwargs):

|

| 180 |

+

self.total_num_char = 0.

|

| 181 |

+

self.total_num_word = 0.

|

| 182 |

+

self.correct_num_char = 0.

|

| 183 |

+

self.correct_num_word = 0.

|

| 184 |

+

self.total_ed = 0.

|

| 185 |

+

self.total_ned = 0.

|

| 186 |

+

|

| 187 |

+

def _get_output(self, last_output):

|

| 188 |

+

if isinstance(last_output, (tuple, list)):

|

| 189 |

+

for res in last_output:

|

| 190 |

+

if res['name'] == self.model_eval: output = res

|

| 191 |

+

else: output = last_output

|

| 192 |

+

return output

|

| 193 |

+

|

| 194 |

+

def _update_output(self, last_output, items):

|

| 195 |

+

if isinstance(last_output, (tuple, list)):

|

| 196 |

+

for res in last_output:

|

| 197 |

+

if res['name'] == self.model_eval: res.update(items)

|

| 198 |

+

else: last_output.update(items)

|

| 199 |

+

return last_output

|

| 200 |

+

|

| 201 |

+

def on_batch_end(self, last_output, last_target, **kwargs):

|

| 202 |

+

output = self._get_output(last_output)

|

| 203 |

+

logits, pt_lengths = output['logits'], output['pt_lengths']

|

| 204 |

+

pt_text, pt_scores, pt_lengths_ = self.decode(logits)

|

| 205 |

+

assert (pt_lengths == pt_lengths_).all(), f'{pt_lengths} != {pt_lengths_} for {pt_text}'

|

| 206 |

+

last_output = self._update_output(last_output, {'pt_text':pt_text, 'pt_scores':pt_scores})

|

| 207 |

+

|

| 208 |

+

pt_text = [self.charset.trim(t) for t in pt_text]

|

| 209 |

+

label = last_target[0]

|

| 210 |

+

if label.dim() == 3: label = label.argmax(dim=-1) # one-hot label

|

| 211 |

+

gt_text = [self.charset.get_text(l, trim=True) for l in label]

|

| 212 |

+

|

| 213 |

+

for i in range(len(gt_text)):

|

| 214 |

+

if not self.case_sensitive:

|

| 215 |

+

gt_text[i], pt_text[i] = gt_text[i].lower(), pt_text[i].lower()

|

| 216 |

+

distance = ed.eval(gt_text[i], pt_text[i])

|

| 217 |

+

self.total_ed += distance

|

| 218 |

+

self.total_ned += float(distance) / max(len(gt_text[i]), 1)

|

| 219 |

+

|

| 220 |

+

if gt_text[i] == pt_text[i]:

|

| 221 |

+

self.correct_num_word += 1

|

| 222 |

+

self.total_num_word += 1

|

| 223 |

+

|

| 224 |

+

for j in range(min(len(gt_text[i]), len(pt_text[i]))):

|

| 225 |

+

if gt_text[i][j] == pt_text[i][j]:

|

| 226 |

+

self.correct_num_char += 1

|

| 227 |

+

self.total_num_char += len(gt_text[i])

|

| 228 |

+

|

| 229 |

+

return {'last_output': last_output}

|

| 230 |

+

|

| 231 |

+

def on_epoch_end(self, last_metrics, **kwargs):

|

| 232 |

+

mets = [self.correct_num_char / self.total_num_char,

|

| 233 |

+

self.correct_num_word / self.total_num_word,

|

| 234 |

+

self.total_ed,

|

| 235 |

+

self.total_ned,

|

| 236 |

+

self.total_ed / self.total_num_word]

|

| 237 |

+

return add_metrics(last_metrics, mets)

|

| 238 |

+

|

| 239 |

+

def decode(self, logit):

|

| 240 |

+

""" Greed decode """

|

| 241 |

+

# TODO: test running time and decode on GPU

|

| 242 |

+

out = F.softmax(logit, dim=2)

|

| 243 |

+

pt_text, pt_scores, pt_lengths = [], [], []

|

| 244 |

+

for o in out:

|

| 245 |

+

text = self.charset.get_text(o.argmax(dim=1), padding=False, trim=False)

|

| 246 |

+

text = text.split(self.charset.null_char)[0] # end at end-token

|

| 247 |

+

pt_text.append(text)

|

| 248 |

+

pt_scores.append(o.max(dim=1)[0])

|

| 249 |

+

pt_lengths.append(min(len(text) + 1, self.max_length)) # one for end-token

|

| 250 |

+

pt_scores = torch.stack(pt_scores)

|

| 251 |

+

pt_lengths = pt_scores.new_tensor(pt_lengths, dtype=torch.long)

|

| 252 |

+

return pt_text, pt_scores, pt_lengths

|

| 253 |

+

|

| 254 |

+

|

| 255 |

+

class TopKTextAccuracy(TextAccuracy):

|

| 256 |

+

_names = ['ccr', 'cwr']

|

| 257 |

+

def __init__(self, k, charset_path, max_length, case_sensitive, model_eval):

|

| 258 |

+

self.k = k

|

| 259 |

+

self.charset_path = charset_path

|

| 260 |

+

self.max_length = max_length

|

| 261 |

+

self.case_sensitive = case_sensitive

|

| 262 |

+

self.charset = CharsetMapper(charset_path, self.max_length)

|

| 263 |

+

self.names = self._names

|

| 264 |

+

|

| 265 |

+

def on_epoch_begin(self, **kwargs):

|

| 266 |

+

self.total_num_char = 0.

|

| 267 |

+

self.total_num_word = 0.

|

| 268 |

+

self.correct_num_char = 0.

|

| 269 |

+

self.correct_num_word = 0.

|

| 270 |

+

|

| 271 |

+

def on_batch_end(self, last_output, last_target, **kwargs):

|

| 272 |

+

logits, pt_lengths = last_output['logits'], last_output['pt_lengths']

|

| 273 |

+

gt_labels, gt_lengths = last_target[:]

|

| 274 |

+

|

| 275 |

+

for logit, pt_length, label, length in zip(logits, pt_lengths, gt_labels, gt_lengths):

|

| 276 |

+

word_flag = True

|

| 277 |

+

for i in range(length):

|

| 278 |

+

char_logit = logit[i].topk(self.k)[1]

|

| 279 |

+

char_label = label[i].argmax(-1)

|

| 280 |

+

if char_label in char_logit: self.correct_num_char += 1

|

| 281 |

+

else: word_flag = False

|

| 282 |

+

self.total_num_char += 1

|

| 283 |

+

if pt_length == length and word_flag:

|

| 284 |

+

self.correct_num_word += 1

|

| 285 |

+

self.total_num_word += 1

|

| 286 |

+

|

| 287 |

+

def on_epoch_end(self, last_metrics, **kwargs):

|

| 288 |

+

mets = [self.correct_num_char / self.total_num_char,

|

| 289 |

+

self.correct_num_word / self.total_num_word,

|

| 290 |

+

0., 0., 0.]

|

| 291 |

+

return add_metrics(last_metrics, mets)

|

| 292 |

+

|

| 293 |

+

|

| 294 |

+

class DumpPrediction(LearnerCallback):

|

| 295 |

+

|

| 296 |

+

def __init__(self, learn, dataset, charset_path, model_eval, image_only=False, debug=False):

|

| 297 |

+

super().__init__(learn=learn)

|

| 298 |

+

self.debug = debug

|

| 299 |

+

self.model_eval = model_eval or 'alignment'

|

| 300 |

+

self.image_only = image_only

|

| 301 |

+

assert self.model_eval in ['vision', 'language', 'alignment']

|

| 302 |

+

|

| 303 |

+

self.dataset, self.root = dataset, Path(self.learn.path)/f'{dataset}-{self.model_eval}'

|

| 304 |

+

self.attn_root = self.root/'attn'

|

| 305 |

+

self.charset = CharsetMapper(charset_path)

|

| 306 |

+

if self.root.exists(): shutil.rmtree(self.root)

|

| 307 |

+

self.root.mkdir(), self.attn_root.mkdir()

|

| 308 |

+

|

| 309 |

+

self.pil = transforms.ToPILImage()

|

| 310 |

+

self.tensor = transforms.ToTensor()

|

| 311 |

+

size = self.learn.data.img_h, self.learn.data.img_w

|

| 312 |

+

self.resize = transforms.Resize(size=size, interpolation=0)

|

| 313 |

+

self.c = 0

|

| 314 |

+

|

| 315 |

+

def on_batch_end(self, last_input, last_output, last_target, **kwargs):

|

| 316 |

+

if isinstance(last_output, (tuple, list)):

|

| 317 |

+

for res in last_output:

|

| 318 |

+

if res['name'] == self.model_eval: pt_text = res['pt_text']

|

| 319 |

+

if res['name'] == 'vision': attn_scores = res['attn_scores'].detach().cpu()

|

| 320 |

+

if res['name'] == self.model_eval: logits = res['logits']

|

| 321 |

+

else:

|

| 322 |

+

pt_text = last_output['pt_text']

|

| 323 |

+

attn_scores = last_output['attn_scores'].detach().cpu()

|

| 324 |

+

logits = last_output['logits']

|

| 325 |

+

|

| 326 |

+

images = last_input[0] if isinstance(last_input, (tuple, list)) else last_input

|

| 327 |

+

images = images.detach().cpu()

|

| 328 |

+

pt_text = [self.charset.trim(t) for t in pt_text]

|

| 329 |

+

gt_label = last_target[0]

|

| 330 |

+

if gt_label.dim() == 3: gt_label = gt_label.argmax(dim=-1) # one-hot label

|

| 331 |

+

gt_text = [self.charset.get_text(l, trim=True) for l in gt_label]

|

| 332 |

+

|

| 333 |

+

prediction, false_prediction = [], []

|

| 334 |

+

for gt, pt, image, attn, logit in zip(gt_text, pt_text, images, attn_scores, logits):

|

| 335 |

+

prediction.append(f'{gt}\t{pt}\n')

|

| 336 |

+

if gt != pt:

|

| 337 |

+

if self.debug:

|

| 338 |

+

scores = torch.softmax(logit, dim=-1)[:max(len(pt), len(gt)) + 1]

|

| 339 |

+

logging.info(f'{self.c} gt {gt}, pt {pt}, logit {logit.shape}, scores {scores.topk(5, dim=-1)}')

|

| 340 |

+

false_prediction.append(f'{gt}\t{pt}\n')

|

| 341 |

+

|

| 342 |

+

image = self.learn.data.denorm(image)

|

| 343 |

+

if not self.image_only:

|

| 344 |

+

image_np = np.array(self.pil(image))

|

| 345 |

+

attn_pil = [self.pil(a) for a in attn[:, None, :, :]]

|

| 346 |

+

attn = [self.tensor(self.resize(a)).repeat(3, 1, 1) for a in attn_pil]

|

| 347 |

+

attn_sum = np.array([np.array(a) for a in attn_pil[:len(pt)]]).sum(axis=0)

|

| 348 |

+

blended_sum = self.tensor(blend_mask(image_np, attn_sum))

|

| 349 |

+

blended = [self.tensor(blend_mask(image_np, np.array(a))) for a in attn_pil]

|

| 350 |

+

save_image = torch.stack([image] + attn + [blended_sum] + blended)

|

| 351 |

+

save_image = save_image.view(2, -1, *save_image.shape[1:])

|

| 352 |

+

save_image = save_image.permute(1, 0, 2, 3, 4).flatten(0, 1)

|

| 353 |

+

vutils.save_image(save_image, self.attn_root/f'{self.c}_{gt}_{pt}.jpg',

|

| 354 |

+

nrow=2, normalize=True, scale_each=True)

|

| 355 |

+

else:

|

| 356 |

+

self.pil(image).save(self.attn_root/f'{self.c}_{gt}_{pt}.jpg')

|

| 357 |

+

self.c += 1

|

| 358 |

+

|

| 359 |

+

with open(self.root/f'{self.model_eval}.txt', 'a') as f: f.writelines(prediction)

|

| 360 |

+

with open(self.root/f'{self.model_eval}-false.txt', 'a') as f: f.writelines(false_prediction)

|

configs/pretrain_language_model.yaml

ADDED

|

@@ -0,0 +1,45 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

global:

|

| 2 |

+

name: pretrain-language-model

|

| 3 |

+

phase: train

|

| 4 |

+

stage: pretrain-language

|

| 5 |

+

workdir: workdir

|

| 6 |

+

seed: ~

|

| 7 |

+

|

| 8 |

+

dataset:

|

| 9 |

+

train: {

|

| 10 |

+

roots: ['data/WikiText-103.csv'],

|

| 11 |

+

batch_size: 4096

|

| 12 |

+

}

|

| 13 |

+

test: {

|

| 14 |

+

roots: ['data/WikiText-103_eval_d1.csv'],

|

| 15 |

+

batch_size: 4096

|

| 16 |

+

}

|

| 17 |

+

|

| 18 |

+

training:

|

| 19 |

+

epochs: 80

|

| 20 |

+

show_iters: 50

|

| 21 |

+

eval_iters: 6000

|

| 22 |

+

save_iters: 3000

|

| 23 |

+

|

| 24 |

+

optimizer:

|

| 25 |

+

type: Adam

|

| 26 |

+

true_wd: False

|

| 27 |

+

wd: 0.0

|

| 28 |

+

bn_wd: False

|

| 29 |

+

clip_grad: 20

|

| 30 |

+

lr: 0.0001

|

| 31 |

+

args: {

|

| 32 |

+

betas: !!python/tuple [0.9, 0.999], # for default Adam

|

| 33 |

+

}

|

| 34 |

+

scheduler: {

|

| 35 |

+

periods: [70, 10],

|

| 36 |

+

gamma: 0.1,

|

| 37 |

+

}

|

| 38 |

+

|

| 39 |

+

model:

|

| 40 |

+

name: 'modules.model_language.BCNLanguage'

|

| 41 |

+

language: {

|

| 42 |

+

num_layers: 4,

|

| 43 |

+

loss_weight: 1.,

|

| 44 |

+

use_self_attn: False

|

| 45 |

+

}

|

configs/pretrain_vision_model.yaml

ADDED

|

@@ -0,0 +1,58 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

global:

|

| 2 |

+

name: pretrain-vision-model

|

| 3 |

+

phase: train

|

| 4 |

+

stage: pretrain-vision

|

| 5 |

+

workdir: workdir

|

| 6 |

+

seed: ~

|

| 7 |

+

|

| 8 |

+

dataset:

|

| 9 |

+

train: {

|

| 10 |

+

roots: ['data/training/MJ/MJ_train/',

|

| 11 |

+

'data/training/MJ/MJ_test/',

|

| 12 |

+

'data/training/MJ/MJ_valid/',

|

| 13 |

+

'data/training/ST'],

|

| 14 |

+

batch_size: 384

|

| 15 |

+

}

|

| 16 |

+

test: {

|

| 17 |

+

roots: ['data/evaluation/IIIT5k_3000',

|

| 18 |

+

'data/evaluation/SVT',

|

| 19 |

+

'data/evaluation/SVTP',

|

| 20 |

+

'data/evaluation/IC13_857',

|

| 21 |

+

'data/evaluation/IC15_1811',

|

| 22 |

+

'data/evaluation/CUTE80'],

|

| 23 |

+

batch_size: 384

|

| 24 |

+

}

|

| 25 |

+

data_aug: True

|

| 26 |

+

multiscales: False

|

| 27 |

+

num_workers: 14

|

| 28 |

+

|

| 29 |

+

training:

|

| 30 |

+

epochs: 8

|

| 31 |

+

show_iters: 50

|

| 32 |

+

eval_iters: 3000

|

| 33 |

+

save_iters: 3000

|

| 34 |

+

|

| 35 |

+

optimizer:

|

| 36 |

+

type: Adam

|

| 37 |

+

true_wd: False

|

| 38 |

+

wd: 0.0

|

| 39 |

+

bn_wd: False

|

| 40 |

+

clip_grad: 20

|

| 41 |

+

lr: 0.0001

|

| 42 |

+

args: {

|

| 43 |

+

betas: !!python/tuple [0.9, 0.999], # for default Adam

|

| 44 |

+

}

|

| 45 |

+

scheduler: {

|

| 46 |

+

periods: [6, 2],

|

| 47 |

+

gamma: 0.1,

|

| 48 |

+

}

|

| 49 |

+

|

| 50 |

+

model:

|

| 51 |

+

name: 'modules.model_vision.BaseVision'

|

| 52 |

+

checkpoint: ~

|

| 53 |

+

vision: {

|

| 54 |

+

loss_weight: 1.,

|

| 55 |

+

attention: 'position',

|

| 56 |

+

backbone: 'transformer',

|

| 57 |

+

backbone_ln: 3,

|

| 58 |

+

}

|

configs/pretrain_vision_model_sv.yaml

ADDED

|

@@ -0,0 +1,58 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

global:

|

| 2 |

+

name: pretrain-vision-model-sv

|

| 3 |

+

phase: train

|

| 4 |

+

stage: pretrain-vision

|

| 5 |

+

workdir: workdir

|

| 6 |

+

seed: ~

|

| 7 |

+

|

| 8 |

+

dataset:

|

| 9 |

+

train: {

|

| 10 |

+

roots: ['data/training/MJ/MJ_train/',

|

| 11 |

+

'data/training/MJ/MJ_test/',

|

| 12 |

+

'data/training/MJ/MJ_valid/',

|

| 13 |

+

'data/training/ST'],

|

| 14 |

+

batch_size: 384

|

| 15 |

+

}

|

| 16 |

+

test: {

|

| 17 |

+

roots: ['data/evaluation/IIIT5k_3000',

|

| 18 |

+

'data/evaluation/SVT',

|

| 19 |

+

'data/evaluation/SVTP',

|

| 20 |

+

'data/evaluation/IC13_857',

|

| 21 |

+

'data/evaluation/IC15_1811',

|

| 22 |

+

'data/evaluation/CUTE80'],

|

| 23 |

+

batch_size: 384

|

| 24 |

+

}

|

| 25 |

+

data_aug: True

|

| 26 |

+

multiscales: False

|

| 27 |

+

num_workers: 14

|

| 28 |

+

|

| 29 |

+

training:

|

| 30 |

+

epochs: 8

|

| 31 |

+

show_iters: 50

|

| 32 |

+

eval_iters: 3000

|

| 33 |

+

save_iters: 3000

|

| 34 |

+

|

| 35 |

+

optimizer:

|

| 36 |

+

type: Adam

|

| 37 |

+

true_wd: False

|

| 38 |

+

wd: 0.0

|

| 39 |

+

bn_wd: False

|

| 40 |

+

clip_grad: 20

|

| 41 |

+

lr: 0.0001

|

| 42 |

+

args: {

|

| 43 |

+

betas: !!python/tuple [0.9, 0.999], # for default Adam

|

| 44 |

+

}

|

| 45 |

+

scheduler: {

|

| 46 |

+

periods: [6, 2],

|

| 47 |

+

gamma: 0.1,

|

| 48 |

+

}

|

| 49 |

+

|

| 50 |

+

model:

|

| 51 |

+

name: 'modules.model_vision.BaseVision'

|

| 52 |

+

checkpoint: ~

|

| 53 |

+

vision: {

|

| 54 |

+

loss_weight: 1.,

|

| 55 |

+

attention: 'attention',

|

| 56 |

+

backbone: 'transformer',

|

| 57 |

+

backbone_ln: 2,

|

| 58 |

+

}

|

configs/template.yaml

ADDED

|

@@ -0,0 +1,67 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

global:

|

| 2 |

+

name: exp

|

| 3 |

+

phase: train

|

| 4 |

+

stage: pretrain-vision

|

| 5 |

+

workdir: /tmp/workdir

|

| 6 |

+

seed: ~

|

| 7 |

+

|

| 8 |

+

dataset:

|

| 9 |

+

train: {

|

| 10 |

+

roots: ['data/training/MJ/MJ_train/',

|

| 11 |

+

'data/training/MJ/MJ_test/',

|

| 12 |

+

'data/training/MJ/MJ_valid/',

|

| 13 |

+

'data/training/ST'],

|

| 14 |

+

batch_size: 128

|

| 15 |

+

}

|

| 16 |

+

test: {

|

| 17 |

+

roots: ['data/evaluation/IIIT5k_3000',

|

| 18 |

+

'data/evaluation/SVT',

|

| 19 |

+

'data/evaluation/SVTP',

|

| 20 |

+

'data/evaluation/IC13_857',

|

| 21 |

+

'data/evaluation/IC15_1811',

|

| 22 |

+

'data/evaluation/CUTE80'],

|

| 23 |

+

batch_size: 128

|

| 24 |

+

}

|

| 25 |

+

charset_path: data/charset_36.txt

|

| 26 |

+

num_workers: 4

|

| 27 |

+

max_length: 25 # 30

|

| 28 |

+

image_height: 32

|

| 29 |

+

image_width: 128

|

| 30 |

+

case_sensitive: False

|

| 31 |

+

eval_case_sensitive: False

|

| 32 |

+

data_aug: True

|

| 33 |

+

multiscales: False

|

| 34 |

+

pin_memory: True

|

| 35 |

+

smooth_label: False

|

| 36 |

+

smooth_factor: 0.1

|

| 37 |

+

one_hot_y: True

|

| 38 |

+

use_sm: False

|

| 39 |

+

|

| 40 |

+

training:

|

| 41 |

+

epochs: 6

|

| 42 |

+

show_iters: 50

|

| 43 |

+

eval_iters: 3000

|

| 44 |

+

save_iters: 20000

|

| 45 |

+

start_iters: 0

|

| 46 |

+

stats_iters: 100000

|

| 47 |

+

|

| 48 |

+

optimizer:

|

| 49 |

+

type: Adadelta # Adadelta, Adam

|

| 50 |

+

true_wd: False

|

| 51 |

+

wd: 0. # 0.001

|

| 52 |

+

bn_wd: False

|

| 53 |

+

args: {

|

| 54 |

+

# betas: !!python/tuple [0.9, 0.99], # betas=(0.9,0.99) for AdamW

|

| 55 |

+

# betas: !!python/tuple [0.9, 0.999], # for default Adam

|

| 56 |

+

}

|

| 57 |

+

clip_grad: 20

|

| 58 |

+

lr: [1.0, 1.0, 1.0] # lr: [0.005, 0.005, 0.005]

|

| 59 |

+

scheduler: {

|

| 60 |

+

periods: [3, 2, 1],

|

| 61 |

+

gamma: 0.1,

|

| 62 |

+

}

|

| 63 |

+

|

| 64 |

+

model:

|

| 65 |

+

name: 'modules.model_abinet.ABINetModel'

|

| 66 |

+

checkpoint: ~

|

| 67 |

+

strict: True

|

configs/train_abinet.yaml

ADDED

|

@@ -0,0 +1,71 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|