Spaces:

Running

on

T4

Running

on

T4

Robin Rombach

commited on

Commit

•

1fe8a15

1

Parent(s):

677e3db

stable diffusion

Browse filesFormer-commit-id: 2ff270f4e0c884d9684fa038f6d84d8600a94b39

- LICENSE +6 -13

- README.md +105 -144

- Stable_Diffusion_v1_Model_Card.md +140 -0

- assets/a-painting-of-a-fire.png +0 -0

- assets/a-photograph-of-a-fire.png +0 -0

- assets/a-shirt-with-a-fire-printed-on-it.png +0 -0

- assets/a-shirt-with-the-inscription-'fire'.png +0 -0

- assets/a-watercolor-painting-of-a-fire.png +0 -0

- assets/birdhouse.png +0 -0

- assets/fire.png +0 -0

- assets/rdm-preview.jpg +0 -0

- assets/stable-samples/img2img/mountains-1.png +0 -0

- assets/stable-samples/img2img/mountains-2.png +0 -0

- assets/stable-samples/img2img/mountains-3.png +0 -0

- assets/stable-samples/img2img/sketch-mountains-input.jpg +0 -0

- assets/stable-samples/img2img/upscaling-in.png.REMOVED.git-id +1 -0

- assets/stable-samples/img2img/upscaling-out.png.REMOVED.git-id +1 -0

- assets/stable-samples/txt2img/000002025.png +0 -0

- assets/stable-samples/txt2img/000002035.png +0 -0

- assets/stable-samples/txt2img/merged-0005.png.REMOVED.git-id +1 -0

- assets/stable-samples/txt2img/merged-0006.png.REMOVED.git-id +1 -0

- assets/stable-samples/txt2img/merged-0007.png.REMOVED.git-id +1 -0

- assets/the-earth-is-on-fire,-oil-on-canvas.png +0 -0

- assets/txt2img-convsample.png +0 -0

- assets/txt2img-preview.png.REMOVED.git-id +1 -0

- assets/v1-variants-scores.jpg +0 -0

- configs/latent-diffusion/cin256-v2.yaml +68 -0

- configs/latent-diffusion/txt2img-1p4B-eval.yaml +71 -0

- configs/retrieval-augmented-diffusion/768x768.yaml +68 -0

- configs/stable-diffusion/v1-inference.yaml +70 -0

- data/imagenet_clsidx_to_label.txt +1000 -0

- environment.yaml +5 -5

- ldm/models/diffusion/ddim.py +62 -6

- ldm/models/diffusion/plms.py +236 -0

- ldm/modules/diffusionmodules/openaimodel.py +39 -14

- ldm/modules/encoders/modules.py +103 -0

- ldm/modules/x_transformer.py +1 -1

- ldm/util.py +120 -3

- scripts/img2img.py +293 -0

- scripts/knn2img.py +398 -0

- scripts/latent_imagenet_diffusion.ipynb.REMOVED.git-id +1 -0

- scripts/train_searcher.py +147 -0

- scripts/txt2img.py +279 -0

LICENSE

CHANGED

|

@@ -1,16 +1,9 @@

|

|

| 1 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

| 2 |

|

| 3 |

-

Copyright (c) 2022 Machine Vision and Learning Group, LMU Munich

|

| 4 |

-

|

| 5 |

-

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

-

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

-

in the Software without restriction, including without limitation the rights

|

| 8 |

-

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

-

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

-

furnished to do so, subject to the following conditions:

|

| 11 |

-

|

| 12 |

-

The above copyright notice and this permission notice shall be included in all

|

| 13 |

-

copies or substantial portions of the Software.

|

| 14 |

|

| 15 |

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

|

@@ -18,4 +11,4 @@ FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

|

| 18 |

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

-

SOFTWARE.

|

|

|

|

| 1 |

+

All rights reserved by the authors.

|

| 2 |

+

You must not distribute the weights provided to you directly or indirectly without explicit consent of the authors.

|

| 3 |

+

You must not distribute harmful, offensive, dehumanizing content or otherwise harmful representations of people or their environments, cultures, religions, etc. produced with the model weights

|

| 4 |

+

or other generated content described in the "Misuse and Malicious Use" section in the model card.

|

| 5 |

+

The model weights are provided for research purposes only.

|

| 6 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 7 |

|

| 8 |

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 9 |

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

|

|

|

| 11 |

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 12 |

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 13 |

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 14 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,11 +1,5 @@

|

|

| 1 |

-

#

|

| 2 |

-

[

|

| 3 |

-

|

| 4 |

-

<p align="center">

|

| 5 |

-

<img src=assets/results.gif />

|

| 6 |

-

</p>

|

| 7 |

-

|

| 8 |

-

|

| 9 |

|

| 10 |

[**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752)<br/>

|

| 11 |

[Robin Rombach](https://github.com/rromb)\*,

|

|

@@ -13,12 +7,19 @@

|

|

| 13 |

[Dominik Lorenz](https://github.com/qp-qp)\,

|

| 14 |

[Patrick Esser](https://github.com/pesser),

|

| 15 |

[Björn Ommer](https://hci.iwr.uni-heidelberg.de/Staff/bommer)<br/>

|

| 16 |

-

\* equal contribution

|

| 17 |

|

| 18 |

-

|

| 19 |

-

|

| 20 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 21 |

|

|

|

|

| 22 |

## Requirements

|

| 23 |

A suitable [conda](https://conda.io/) environment named `ldm` can be created

|

| 24 |

and activated with:

|

|

@@ -28,176 +29,135 @@ conda env create -f environment.yaml

|

|

| 28 |

conda activate ldm

|

| 29 |

```

|

| 30 |

|

| 31 |

-

|

| 32 |

-

|

| 33 |

-

## Pretrained Autoencoding Models

|

| 34 |

-

|

| 35 |

-

|

| 36 |

-

All models were trained until convergence (no further substantial improvement in rFID).

|

| 37 |

-

|

| 38 |

-

| Model | rFID vs val | train steps |PSNR | PSIM | Link | Comments

|

| 39 |

-

|-------------------------|------------|----------------|----------------|---------------|-------------------------------------------------------------------------------------------------------------------------------------------------------|-----------------------|

|

| 40 |

-

| f=4, VQ (Z=8192, d=3) | 0.58 | 533066 | 27.43 +/- 4.26 | 0.53 +/- 0.21 | https://ommer-lab.com/files/latent-diffusion/vq-f4.zip | |

|

| 41 |

-

| f=4, VQ (Z=8192, d=3) | 1.06 | 658131 | 25.21 +/- 4.17 | 0.72 +/- 0.26 | https://heibox.uni-heidelberg.de/f/9c6681f64bb94338a069/?dl=1 | no attention |

|

| 42 |

-

| f=8, VQ (Z=16384, d=4) | 1.14 | 971043 | 23.07 +/- 3.99 | 1.17 +/- 0.36 | https://ommer-lab.com/files/latent-diffusion/vq-f8.zip | |

|

| 43 |

-

| f=8, VQ (Z=256, d=4) | 1.49 | 1608649 | 22.35 +/- 3.81 | 1.26 +/- 0.37 | https://ommer-lab.com/files/latent-diffusion/vq-f8-n256.zip |

|

| 44 |

-

| f=16, VQ (Z=16384, d=8) | 5.15 | 1101166 | 20.83 +/- 3.61 | 1.73 +/- 0.43 | https://heibox.uni-heidelberg.de/f/0e42b04e2e904890a9b6/?dl=1 | |

|

| 45 |

-

| | | | | | | |

|

| 46 |

-

| f=4, KL | 0.27 | 176991 | 27.53 +/- 4.54 | 0.55 +/- 0.24 | https://ommer-lab.com/files/latent-diffusion/kl-f4.zip | |

|

| 47 |

-

| f=8, KL | 0.90 | 246803 | 24.19 +/- 4.19 | 1.02 +/- 0.35 | https://ommer-lab.com/files/latent-diffusion/kl-f8.zip | |

|

| 48 |

-

| f=16, KL (d=16) | 0.87 | 442998 | 24.08 +/- 4.22 | 1.07 +/- 0.36 | https://ommer-lab.com/files/latent-diffusion/kl-f16.zip | |

|

| 49 |

-

| f=32, KL (d=64) | 2.04 | 406763 | 22.27 +/- 3.93 | 1.41 +/- 0.40 | https://ommer-lab.com/files/latent-diffusion/kl-f32.zip | |

|

| 50 |

|

| 51 |

-

### Get the models

|

| 52 |

-

|

| 53 |

-

Running the following script downloads und extracts all available pretrained autoencoding models.

|

| 54 |

-

```shell script

|

| 55 |

-

bash scripts/download_first_stages.sh

|

| 56 |

```

|

|

|

|

|

|

|

|

|

|

|

|

|

| 57 |

|

| 58 |

-

The first stage models can then be found in `models/first_stage_models/<model_spec>`

|

| 59 |

|

|

|

|

| 60 |

|

|

|

|

|

|

|

|

|

|

|

|

|

| 61 |

|

| 62 |

-

|

| 63 |

-

|

| 64 |

-

|

| 65 |

-

|

| 66 |

-

|

| 67 |

-

| LSUN-Churches | Unconditional Image Synthesis | LDM-KL-8 (400 DDIM steps, eta=0)| 4.02 (4.02) | 2.72 | 0.64 | 0.52 | https://ommer-lab.com/files/latent-diffusion/lsun_churches.zip | |

|

| 68 |

-

| LSUN-Bedrooms | Unconditional Image Synthesis | LDM-VQ-4 (200 DDIM steps, eta=1)| 2.95 (3.0) | 2.22 (2.23)| 0.66 | 0.48 | https://ommer-lab.com/files/latent-diffusion/lsun_bedrooms.zip | |

|

| 69 |

-

| ImageNet | Class-conditional Image Synthesis | LDM-VQ-8 (200 DDIM steps, eta=1) | 7.77(7.76)* /15.82** | 201.56(209.52)* /78.82** | 0.84* / 0.65** | 0.35* / 0.63** | https://ommer-lab.com/files/latent-diffusion/cin.zip | *: w/ guiding, classifier_scale 10 **: w/o guiding, scores in bracket calculated with script provided by [ADM](https://github.com/openai/guided-diffusion) |

|

| 70 |

-

| Conceptual Captions | Text-conditional Image Synthesis | LDM-VQ-f4 (100 DDIM steps, eta=0) | 16.79 | 13.89 | N/A | N/A | https://ommer-lab.com/files/latent-diffusion/text2img.zip | finetuned from LAION |

|

| 71 |

-

| OpenImages | Super-resolution | LDM-VQ-4 | N/A | N/A | N/A | N/A | https://ommer-lab.com/files/latent-diffusion/sr_bsr.zip | BSR image degradation |

|

| 72 |

-

| OpenImages | Layout-to-Image Synthesis | LDM-VQ-4 (200 DDIM steps, eta=0) | 32.02 | 15.92 | N/A | N/A | https://ommer-lab.com/files/latent-diffusion/layout2img_model.zip | |

|

| 73 |

-

| Landscapes | Semantic Image Synthesis | LDM-VQ-4 | N/A | N/A | N/A | N/A | https://ommer-lab.com/files/latent-diffusion/semantic_synthesis256.zip | |

|

| 74 |

-

| Landscapes | Semantic Image Synthesis | LDM-VQ-4 | N/A | N/A | N/A | N/A | https://ommer-lab.com/files/latent-diffusion/semantic_synthesis.zip | finetuned on resolution 512x512 |

|

| 75 |

|

|

|

|

| 76 |

|

| 77 |

-

###

|

| 78 |

|

| 79 |

-

|

|

|

|

| 80 |

|

| 81 |

-

|

| 82 |

-

|

| 83 |

-

|

|

|

|

|

|

|

|

|

|

| 84 |

|

| 85 |

-

|

|

|

|

|

|

|

|

|

|

| 86 |

|

| 87 |

-

### Sampling with unconditional models

|

| 88 |

|

| 89 |

-

We provide a first script for sampling from our unconditional models. Start it via

|

| 90 |

|

| 91 |

-

|

| 92 |

-

|

| 93 |

-

|

| 94 |

|

| 95 |

-

|

| 96 |

-

|

| 97 |

|

| 98 |

-

|

| 99 |

```

|

| 100 |

-

|

|

|

|

| 101 |

```

|

| 102 |

-

|

| 103 |

and sample with

|

| 104 |

```

|

| 105 |

-

python scripts/

|

| 106 |

```

|

| 107 |

-

|

| 108 |

-

|

| 109 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 110 |

|

| 111 |

-

# Train your own LDMs

|

| 112 |

-

|

| 113 |

-

## Data preparation

|

| 114 |

-

|

| 115 |

-

### Faces

|

| 116 |

-

For downloading the CelebA-HQ and FFHQ datasets, proceed as described in the [taming-transformers](https://github.com/CompVis/taming-transformers#celeba-hq)

|

| 117 |

-

repository.

|

| 118 |

-

|

| 119 |

-

### LSUN

|

| 120 |

-

|

| 121 |

-

The LSUN datasets can be conveniently downloaded via the script available [here](https://github.com/fyu/lsun).

|

| 122 |

-

We performed a custom split into training and validation images, and provide the corresponding filenames

|

| 123 |

-

at [https://ommer-lab.com/files/lsun.zip](https://ommer-lab.com/files/lsun.zip).

|

| 124 |

-

After downloading, extract them to `./data/lsun`. The beds/cats/churches subsets should

|

| 125 |

-

also be placed/symlinked at `./data/lsun/bedrooms`/`./data/lsun/cats`/`./data/lsun/churches`, respectively.

|

| 126 |

-

|

| 127 |

-

### ImageNet

|

| 128 |

-

The code will try to download (through [Academic

|

| 129 |

-

Torrents](http://academictorrents.com/)) and prepare ImageNet the first time it

|

| 130 |

-

is used. However, since ImageNet is quite large, this requires a lot of disk

|

| 131 |

-

space and time. If you already have ImageNet on your disk, you can speed things

|

| 132 |

-

up by putting the data into

|

| 133 |

-

`${XDG_CACHE}/autoencoders/data/ILSVRC2012_{split}/data/` (which defaults to

|

| 134 |

-

`~/.cache/autoencoders/data/ILSVRC2012_{split}/data/`), where `{split}` is one

|

| 135 |

-

of `train`/`validation`. It should have the following structure:

|

| 136 |

-

|

| 137 |

-

```

|

| 138 |

-

${XDG_CACHE}/autoencoders/data/ILSVRC2012_{split}/data/

|

| 139 |

-

├── n01440764

|

| 140 |

-

│ ├── n01440764_10026.JPEG

|

| 141 |

-

│ ├── n01440764_10027.JPEG

|

| 142 |

-

│ ├── ...

|

| 143 |

-

├── n01443537

|

| 144 |

-

│ ├── n01443537_10007.JPEG

|

| 145 |

-

│ ├── n01443537_10014.JPEG

|

| 146 |

-

│ ├── ...

|

| 147 |

-

├── ...

|

| 148 |

```

|

|

|

|

|

|

|

|

|

|

|

|

|

| 149 |

|

| 150 |

-

If you haven't extracted the data, you can also place

|

| 151 |

-

`ILSVRC2012_img_train.tar`/`ILSVRC2012_img_val.tar` (or symlinks to them) into

|

| 152 |

-

`${XDG_CACHE}/autoencoders/data/ILSVRC2012_train/` /

|

| 153 |

-

`${XDG_CACHE}/autoencoders/data/ILSVRC2012_validation/`, which will then be

|

| 154 |

-

extracted into above structure without downloading it again. Note that this

|

| 155 |

-

will only happen if neither a folder

|

| 156 |

-

`${XDG_CACHE}/autoencoders/data/ILSVRC2012_{split}/data/` nor a file

|

| 157 |

-

`${XDG_CACHE}/autoencoders/data/ILSVRC2012_{split}/.ready` exist. Remove them

|

| 158 |

-

if you want to force running the dataset preparation again.

|

| 159 |

|

|

|

|

| 160 |

|

| 161 |

-

|

|

|

|

|

|

|

| 162 |

|

| 163 |

-

|

| 164 |

-

|

| 165 |

-

### Training autoencoder models

|

| 166 |

-

|

| 167 |

-

Configs for training a KL-regularized autoencoder on ImageNet are provided at `configs/autoencoder`.

|

| 168 |

-

Training can be started by running

|

| 169 |

```

|

| 170 |

-

|

| 171 |

```

|

| 172 |

-

|

| 173 |

-

|

| 174 |

-

|

| 175 |

-

For training VQ-regularized models, see the [taming-transformers](https://github.com/CompVis/taming-transformers)

|

| 176 |

-

repository.

|

| 177 |

|

| 178 |

-

|

| 179 |

|

| 180 |

-

|

| 181 |

-

Training can be started by running

|

| 182 |

|

| 183 |

-

|

| 184 |

-

CUDA_VISIBLE_DEVICES=<GPU_ID> python main.py --base configs/latent-diffusion/<config_spec>.yaml -t --gpus 0,

|

| 185 |

-

```

|

| 186 |

-

|

| 187 |

-

where ``<config_spec>`` is one of {`celebahq-ldm-vq-4`(f=4, VQ-reg. autoencoder, spatial size 64x64x3),`ffhq-ldm-vq-4`(f=4, VQ-reg. autoencoder, spatial size 64x64x3),

|

| 188 |

-

`lsun_bedrooms-ldm-vq-4`(f=4, VQ-reg. autoencoder, spatial size 64x64x3),

|

| 189 |

-

`lsun_churches-ldm-vq-4`(f=8, KL-reg. autoencoder, spatial size 32x32x4),`cin-ldm-vq-8`(f=8, VQ-reg. autoencoder, spatial size 32x32x4)}.

|

| 190 |

|

| 191 |

-

|

|

|

|

| 192 |

|

| 193 |

-

|

| 194 |

-

* In the meantime, you can play with our colab notebook https://colab.research.google.com/drive/1xqzUi2iXQXDqXBHQGP9Mqt2YrYW6cx-J?usp=sharing

|

| 195 |

-

* We will also release some further pretrained models.

|

| 196 |

|

| 197 |

|

| 198 |

## Comments

|

| 199 |

|

| 200 |

-

- Our codebase for the diffusion models builds heavily on [OpenAI's codebase](https://github.com/openai/guided-diffusion)

|

| 201 |

and [https://github.com/lucidrains/denoising-diffusion-pytorch](https://github.com/lucidrains/denoising-diffusion-pytorch).

|

| 202 |

Thanks for open-sourcing!

|

| 203 |

|

|

@@ -215,6 +175,7 @@ Thanks for open-sourcing!

|

|

| 215 |

archivePrefix={arXiv},

|

| 216 |

primaryClass={cs.CV}

|

| 217 |

}

|

|

|

|

| 218 |

```

|

| 219 |

|

| 220 |

|

|

|

|

| 1 |

+

# Stable Diffusion

|

| 2 |

+

*Stable Diffusion was made possible thanks to a collaboration with [Stability AI](https://stability.ai/) and [Runway](https://runwayml.com/) and builds upon our previous work:*

|

|

|

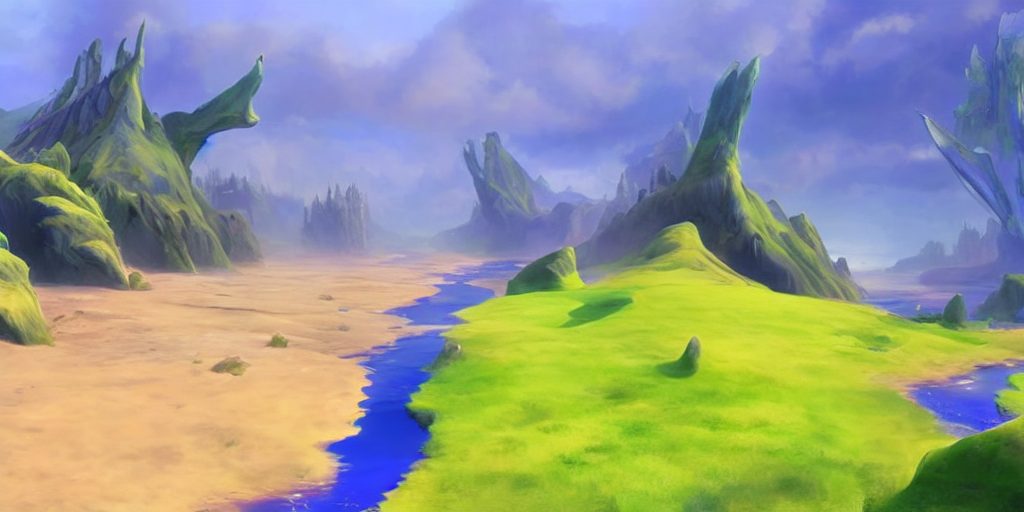

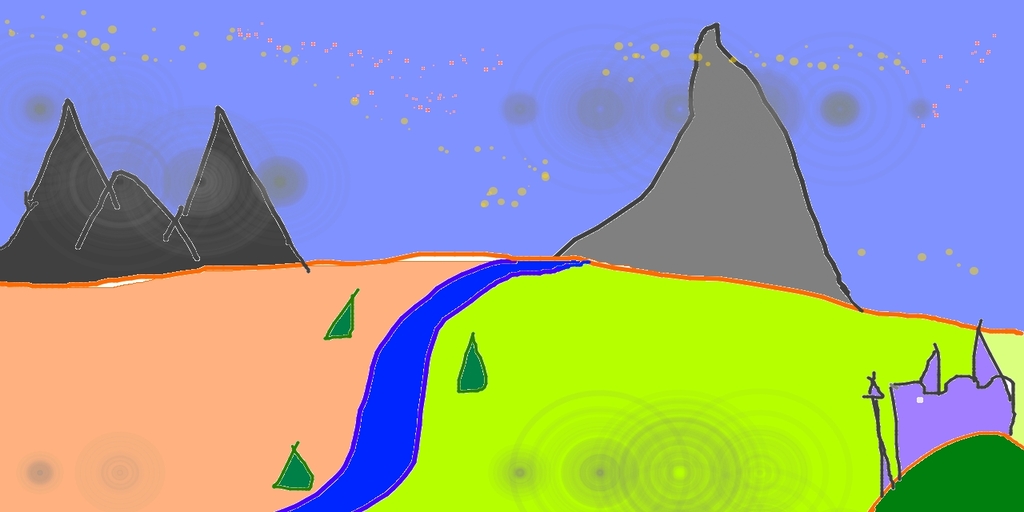

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 3 |

|

| 4 |

[**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752)<br/>

|

| 5 |

[Robin Rombach](https://github.com/rromb)\*,

|

|

|

|

| 7 |

[Dominik Lorenz](https://github.com/qp-qp)\,

|

| 8 |

[Patrick Esser](https://github.com/pesser),

|

| 9 |

[Björn Ommer](https://hci.iwr.uni-heidelberg.de/Staff/bommer)<br/>

|

|

|

|

| 10 |

|

| 11 |

+

which is available on [GitHub](https://github.com/CompVis/latent-diffusion).

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

[Stable Diffusion](#stable-diffusion-v1) is a latent text-to-image diffusion

|

| 15 |

+

model.

|

| 16 |

+

Thanks to a generous compute donation from [Stability AI](https://stability.ai/) and support from [LAION](https://laion.ai/), we were able to train a Latent Diffusion Model on 512x512 images from a subset of the [LAION-5B](https://laion.ai/blog/laion-5b/) database.

|

| 17 |

+

Similar to Google's [Imagen](https://arxiv.org/abs/2205.11487),

|

| 18 |

+

this model uses a frozen CLIP ViT-L/14 text encoder to condition the model on text prompts.

|

| 19 |

+

With its 860M UNet and 123M text encoder, the model is relatively lightweight and runs on a GPU with at least 10GB VRAM.

|

| 20 |

+

See [this section](#stable-diffusion-v1) below and the [model card](https://huggingface.co/CompVis/stable-diffusion).

|

| 21 |

|

| 22 |

+

|

| 23 |

## Requirements

|

| 24 |

A suitable [conda](https://conda.io/) environment named `ldm` can be created

|

| 25 |

and activated with:

|

|

|

|

| 29 |

conda activate ldm

|

| 30 |

```

|

| 31 |

|

| 32 |

+

You can also update an existing [latent diffusion](https://github.com/CompVis/latent-diffusion) environment by running

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 34 |

```

|

| 35 |

+

conda install pytorch torchvision -c pytorch

|

| 36 |

+

pip install transformers==4.19.2

|

| 37 |

+

pip install -e .

|

| 38 |

+

```

|

| 39 |

|

|

|

|

| 40 |

|

| 41 |

+

## Stable Diffusion v1

|

| 42 |

|

| 43 |

+

Stable Diffusion v1 refers to a specific configuration of the model

|

| 44 |

+

architecture that uses a downsampling-factor 8 autoencoder with an 860M UNet

|

| 45 |

+

and CLIP ViT-L/14 text encoder for the diffusion model. The model was pretrained on 256x256 images and

|

| 46 |

+

then finetuned on 512x512 images.

|

| 47 |

|

| 48 |

+

*Note: Stable Diffusion v1 is a general text-to-image diffusion model and therefore mirrors biases and (mis-)conceptions that are present

|

| 49 |

+

in its training data.

|

| 50 |

+

Details on the training procedure and data, as well as the intended use of the model can be found in the corresponding [model card](https://huggingface.co/CompVis/stable-diffusion).

|

| 51 |

+

Research into the safe deployment of general text-to-image models is an ongoing effort. To prevent misuse and harm, we currently provide access to the checkpoints only for [academic research purposes upon request](TODO).

|

| 52 |

+

**This is an experiment in safe and community-driven publication of a capable and general text-to-image model. We are working on a public release with a more permissive license that also incorporates ethical considerations.***

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 53 |

|

| 54 |

+

[Request access to Stable Diffusion v1 checkpoints for academic research](TODO)

|

| 55 |

|

| 56 |

+

### Weights

|

| 57 |

|

| 58 |

+

We currently provide three checkpoints, `sd-v1-1.ckpt`, `sd-v1-2.ckpt` and `sd-v1-3.ckpt`,

|

| 59 |

+

which were trained as follows,

|

| 60 |

|

| 61 |

+

- `sd-v1-1.ckpt`: 237k steps at resolution `256x256` on [laion2B-en](https://huggingface.co/datasets/laion/laion2B-en).

|

| 62 |

+

194k steps at resolution `512x512` on [laion-high-resolution](https://huggingface.co/datasets/laion/laion-high-resolution) (170M examples from LAION-5B with resolution `>= 1024x1024`).

|

| 63 |

+

- `sd-v1-2.ckpt`: Resumed from `sd-v1-1.ckpt`.

|

| 64 |

+

515k steps at resolution `512x512` on "laion-improved-aesthetics" (a subset of laion2B-en,

|

| 65 |

+

filtered to images with an original size `>= 512x512`, estimated aesthetics score `> 5.0`, and an estimated watermark probability `< 0.5`. The watermark estimate is from the LAION-5B metadata, the aesthetics score is estimated using an [improved aesthetics estimator](https://github.com/christophschuhmann/improved-aesthetic-predictor)).

|

| 66 |

+

- `sd-v1-3.ckpt`: Resumed from `sd-v1-2.ckpt`. 195k steps at resolution `512x512` on "laion-improved-aesthetics" and 10\% dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).

|

| 67 |

|

| 68 |

+

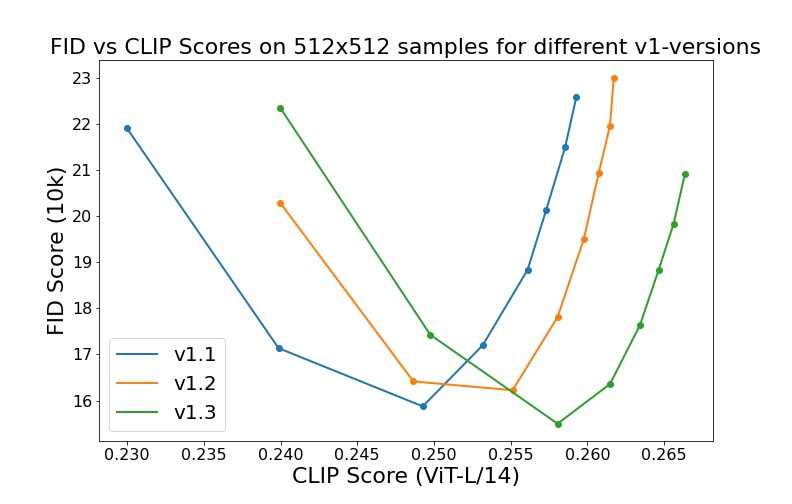

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0,

|

| 69 |

+

5.0, 6.0, 7.0, 8.0) and 50 PLMS sampling

|

| 70 |

+

steps show the relative improvements of the checkpoints:

|

| 71 |

+

|

| 72 |

|

|

|

|

| 73 |

|

|

|

|

| 74 |

|

| 75 |

+

### Text-to-Image with Stable Diffusion

|

| 76 |

+

|

| 77 |

+

|

| 78 |

|

| 79 |

+

Stable Diffusion is a latent diffusion model conditioned on the (non-pooled) text embeddings of a CLIP ViT-L/14 text encoder.

|

|

|

|

| 80 |

|

| 81 |

+

After [obtaining the weights](#weights), link them

|

| 82 |

```

|

| 83 |

+

mkdir -p models/ldm/stable-diffusion-v1/

|

| 84 |

+

ln -s <path/to/model.ckpt> models/ldm/stable-diffusion-v1/model.ckpt

|

| 85 |

```

|

|

|

|

| 86 |

and sample with

|

| 87 |

```

|

| 88 |

+

python scripts/txt2img.py --prompt "a photograph of an astronaut riding a horse" --plms

|

| 89 |

```

|

| 90 |

+

By default, this uses a guidance scale of `--scale 7.5`, [Katherine Crowson's implementation](https://github.com/CompVis/latent-diffusion/pull/51) of the [PLMS](https://arxiv.org/abs/2202.09778) sampler,

|

| 91 |

+

and renders images of size 512x512 (which it was trained on) in 50 steps. All supported arguments are listed below (type `python scripts/txt2img.py --help`).

|

| 92 |

+

|

| 93 |

+

```commandline

|

| 94 |

+

usage: txt2img.py [-h] [--prompt [PROMPT]] [--outdir [OUTDIR]] [--skip_grid] [--skip_save] [--ddim_steps DDIM_STEPS] [--plms] [--laion400m] [--fixed_code] [--ddim_eta DDIM_ETA] [--n_iter N_ITER] [--H H] [--W W] [--C C] [--f F] [--n_samples N_SAMPLES] [--n_rows N_ROWS]

|

| 95 |

+

[--scale SCALE] [--from-file FROM_FILE] [--config CONFIG] [--ckpt CKPT] [--seed SEED] [--precision {full,autocast}]

|

| 96 |

+

|

| 97 |

+

optional arguments:

|

| 98 |

+

-h, --help show this help message and exit

|

| 99 |

+

--prompt [PROMPT] the prompt to render

|

| 100 |

+

--outdir [OUTDIR] dir to write results to

|

| 101 |

+

--skip_grid do not save a grid, only individual samples. Helpful when evaluating lots of samples

|

| 102 |

+

--skip_save do not save individual samples. For speed measurements.

|

| 103 |

+

--ddim_steps DDIM_STEPS

|

| 104 |

+

number of ddim sampling steps

|

| 105 |

+

--plms use plms sampling

|

| 106 |

+

--laion400m uses the LAION400M model

|

| 107 |

+

--fixed_code if enabled, uses the same starting code across samples

|

| 108 |

+

--ddim_eta DDIM_ETA ddim eta (eta=0.0 corresponds to deterministic sampling

|

| 109 |

+

--n_iter N_ITER sample this often

|

| 110 |

+

--H H image height, in pixel space

|

| 111 |

+

--W W image width, in pixel space

|

| 112 |

+

--C C latent channels

|

| 113 |

+

--f F downsampling factor

|

| 114 |

+

--n_samples N_SAMPLES

|

| 115 |

+

how many samples to produce for each given prompt. A.k.a. batch size

|

| 116 |

+

--n_rows N_ROWS rows in the grid (default: n_samples)

|

| 117 |

+

--scale SCALE unconditional guidance scale: eps = eps(x, empty) + scale * (eps(x, cond) - eps(x, empty))

|

| 118 |

+

--from-file FROM_FILE

|

| 119 |

+

if specified, load prompts from this file

|

| 120 |

+

--config CONFIG path to config which constructs model

|

| 121 |

+

--ckpt CKPT path to checkpoint of model

|

| 122 |

+

--seed SEED the seed (for reproducible sampling)

|

| 123 |

+

--precision {full,autocast}

|

| 124 |

+

evaluate at this precision

|

| 125 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 126 |

```

|

| 127 |

+

Note: The inference config for all v1 versions is designed to be used with EMA-only checkpoints.

|

| 128 |

+

For this reason `use_ema=False` is set in the configuration, otherwise the code will try to switch from

|

| 129 |

+

non-EMA to EMA weights. If you want to examine the effect of EMA vs no EMA, we provide "full" checkpoints

|

| 130 |

+

which contain both types of weights. For these, `use_ema=False` will load and use the non-EMA weights.

|

| 131 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 132 |

|

| 133 |

+

### Image Modification with Stable Diffusion

|

| 134 |

|

| 135 |

+

By using a diffusion-denoising mechanism as first proposed by [SDEdit](https://arxiv.org/abs/2108.01073), the model can be used for different

|

| 136 |

+

tasks such as text-guided image-to-image translation and upscaling. Similar to the txt2img sampling script,

|

| 137 |

+

we provide a script to perform image modification with Stable Diffusion.

|

| 138 |

|

| 139 |

+

The following describes an example where a rough sketch made in [Pinta](https://www.pinta-project.com/) is converted into a detailed artwork.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 140 |

```

|

| 141 |

+

python scripts/img2img.py --prompt "A fantasy landscape, trending on artstation" --init-img <path-to-img.jpg> --strength 0.8

|

| 142 |

```

|

| 143 |

+

Here, strength is a value between 0.0 and 1.0, that controls the amount of noise that is added to the input image.

|

| 144 |

+

Values that approach 1.0 allow for lots of variations but will also produce images that are not semantically consistent with the input. See the following example.

|

|

|

|

|

|

|

|

|

|

| 145 |

|

| 146 |

+

**Input**

|

| 147 |

|

| 148 |

+

|

|

|

|

| 149 |

|

| 150 |

+

**Outputs**

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 151 |

|

| 152 |

+

|

| 153 |

+

|

| 154 |

|

| 155 |

+

This procedure can, for example, also be used to upscale samples from the base model.

|

|

|

|

|

|

|

| 156 |

|

| 157 |

|

| 158 |

## Comments

|

| 159 |

|

| 160 |

+

- Our codebase for the diffusion models builds heavily on [OpenAI's ADM codebase](https://github.com/openai/guided-diffusion)

|

| 161 |

and [https://github.com/lucidrains/denoising-diffusion-pytorch](https://github.com/lucidrains/denoising-diffusion-pytorch).

|

| 162 |

Thanks for open-sourcing!

|

| 163 |

|

|

|

|

| 175 |

archivePrefix={arXiv},

|

| 176 |

primaryClass={cs.CV}

|

| 177 |

}

|

| 178 |

+

|

| 179 |

```

|

| 180 |

|

| 181 |

|

Stable_Diffusion_v1_Model_Card.md

ADDED

|

@@ -0,0 +1,140 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Stable Diffusion v1 Model Card

|

| 2 |

+

This model card focuses on the model associated with the Stable Diffusion model, available [here](https://github.com/CompVis/stable-diffusion).

|

| 3 |

+

|

| 4 |

+

## Model Details

|

| 5 |

+

- **Developed by:** Robin Rombach, Patrick Esser

|

| 6 |

+

- **Model type:** Diffusion-based text-to-image generation model

|

| 7 |

+

- **Language(s):** English

|

| 8 |

+

- **License:** [Proprietary](LICENSE)

|

| 9 |

+

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([CLIP ViT-L/14](https://arxiv.org/abs/2103.00020)) as suggested in the [Imagen paper](https://arxiv.org/abs/2205.11487).

|

| 10 |

+

- **Resources for more information:** [GitHub Repository](https://github.com/CompVis/stable-diffusion), [Paper](https://arxiv.org/abs/2112.10752).

|

| 11 |

+

- **Cite as:**

|

| 12 |

+

|

| 13 |

+

@InProceedings{Rombach_2022_CVPR,

|

| 14 |

+

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

|

| 15 |

+

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

|

| 16 |

+

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

|

| 17 |

+

month = {June},

|

| 18 |

+

year = {2022},

|

| 19 |

+

pages = {10684-10695}

|

| 20 |

+

}

|

| 21 |

+

|

| 22 |

+

# Uses

|

| 23 |

+

|

| 24 |

+

## Direct Use

|

| 25 |

+

The model is intended for research purposes only. Possible research areas and

|

| 26 |

+

tasks include

|

| 27 |

+

|

| 28 |

+

- Safe deployment of models which have the potential to generate harmful content.

|

| 29 |

+

- Probing and understanding the limitations and biases of generative models.

|

| 30 |

+

- Generation of artworks and use in design and other artistic processes.

|

| 31 |

+

- Applications in educational or creative tools.

|

| 32 |

+

- Research on generative models.

|

| 33 |

+

|

| 34 |

+

Excluded uses are described below.

|

| 35 |

+

|

| 36 |

+

### Misuse, Malicious Use, and Out-of-Scope Use

|

| 37 |

+

_Note: This section is taken from the [DALLE-MINI model card](https://huggingface.co/dalle-mini/dalle-mini), but applies in the same way to Stable Diffusion v1_.

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.

|

| 41 |

+

#### Out-of-Scope Use

|

| 42 |

+

The model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

|

| 43 |

+

#### Misuse and Malicious Use

|

| 44 |

+

Using the model to generate content that is cruel to individuals is a misuse of this model. This includes, but is not limited to:

|

| 45 |

+

|

| 46 |

+

- Generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc.

|

| 47 |

+

- Intentionally promoting or propagating discriminatory content or harmful stereotypes.

|

| 48 |

+

- Impersonating individuals without their consent.

|

| 49 |

+

- Sexual content without consent of the people who might see it.

|

| 50 |

+

- Mis- and disinformation

|

| 51 |

+

- Representations of egregious violence and gore

|

| 52 |

+

- Sharing of copyrighted or licensed material in violation of its terms of use.

|

| 53 |

+

- Sharing content that is an alteration of copyrighted or licensed material in violation of its terms of use.

|

| 54 |

+

|

| 55 |

+

## Limitations and Bias

|

| 56 |

+

|

| 57 |

+

### Limitations

|

| 58 |

+

|

| 59 |

+

- The model does not achieve perfect photorealism

|

| 60 |

+

- The model cannot render legible text

|

| 61 |

+

- The model does not perform well on more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

|

| 62 |

+

- Faces and people in general may not be generated properly.

|

| 63 |

+

- The model was trained mainly with English captions and will not work as well in other languages.

|

| 64 |

+

- The autoencoding part of the model is lossy

|

| 65 |

+

- The model was trained on a large-scale dataset

|

| 66 |

+

[LAION-5B](https://laion.ai/blog/laion-5b/) which contains adult material

|

| 67 |

+

and is not fit for product use without additional safety mechanisms and

|

| 68 |

+

considerations.

|

| 69 |

+

|

| 70 |

+

### Bias

|

| 71 |

+

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

|

| 72 |

+

Stable Diffusion v1 was trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/),

|

| 73 |

+

which consists of images that are primarily limited to English descriptions.

|

| 74 |

+

Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for.

|

| 75 |

+

This affects the overall output of the model, as white and western cultures are often set as the default. Further, the

|

| 76 |

+

ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

## Training

|

| 80 |

+

|

| 81 |

+

**Training Data**

|

| 82 |

+

The model developers used the following dataset for training the model:

|

| 83 |

+

|

| 84 |

+

- LAION-2B (en) and subsets thereof (see next section)

|

| 85 |

+

|

| 86 |

+

**Training Procedure**

|

| 87 |

+

Stable Diffusion v1 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training,

|

| 88 |

+

|

| 89 |

+

- Images are encoded through an encoder, which turns images into latent representations. The autoencoder uses a relative downsampling factor of 8 and maps images of shape H x W x 3 to latents of shape H/f x W/f x 4

|

| 90 |

+

- Text prompts are encoded through a ViT-L/14 text-encoder.

|

| 91 |

+

- The non-pooled output of the text encoder is fed into the UNet backbone of the latent diffusion model via cross-attention.

|

| 92 |

+

- The loss is a reconstruction objective between the noise that was added to the latent and the prediction made by the UNet.

|

| 93 |

+

|

| 94 |

+

We currently provide three checkpoints, `sd-v1-1.ckpt`, `sd-v1-2.ckpt` and `sd-v1-3.ckpt`,

|

| 95 |

+

which were trained as follows,

|

| 96 |

+

|

| 97 |

+

- `sd-v1-1.ckpt`: 237k steps at resolution `256x256` on [laion2B-en](https://huggingface.co/datasets/laion/laion2B-en).

|

| 98 |

+

194k steps at resolution `512x512` on [laion-high-resolution](https://huggingface.co/datasets/laion/laion-high-resolution) (170M examples from LAION-5B with resolution `>= 1024x1024`).

|

| 99 |

+

- `sd-v1-2.ckpt`: Resumed from `sd-v1-1.ckpt`.

|

| 100 |

+

515k steps at resolution `512x512` on "laion-improved-aesthetics" (a subset of laion2B-en,

|

| 101 |

+

filtered to images with an original size `>= 512x512`, estimated aesthetics score `> 5.0`, and an estimated watermark probability `< 0.5`. The watermark estimate is from the LAION-5B metadata, the aesthetics score is estimated using an [improved aesthetics estimator](https://github.com/christophschuhmann/improved-aesthetic-predictor)).

|

| 102 |

+

- `sd-v1-3.ckpt`: Resumed from `sd-v1-2.ckpt`. 195k steps at resolution `512x512` on "laion-improved-aesthetics" and 10\% dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

- **Hardware:** 32 x 8 x A100 GPUs

|

| 106 |

+

- **Optimizer:** AdamW

|

| 107 |

+

- **Gradient Accumulations**: 2

|

| 108 |

+

- **Batch:** 32 x 8 x 2 x 4 = 2048

|

| 109 |

+

- **Learning rate:** warmup to 0.0001 for 10,000 steps and then kept constant

|

| 110 |

+

|

| 111 |

+

## Evaluation Results

|

| 112 |

+

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0,

|

| 113 |

+

5.0, 6.0, 7.0, 8.0) and 50 PLMS sampling

|

| 114 |

+

steps show the relative improvements of the checkpoints:

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

|

| 118 |

+

Evaluated using 50 PLMS steps and 10000 random prompts from the COCO2017 validation set, evaluated at 512x512 resolution. Not optimized for FID scores.

|

| 119 |

+

## Environmental Impact

|

| 120 |

+

|

| 121 |

+

**Stable Diffusion v1** **Estimated Emissions**

|

| 122 |

+

Based on that information, we estimate the following CO2 emissions using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). The hardware, runtime, cloud provider, and compute region were utilized to estimate the carbon impact.

|

| 123 |

+

|

| 124 |

+

- **Hardware Type:** A100 PCIe 40GB

|

| 125 |

+

- **Hours used:** 150000

|

| 126 |

+

- **Cloud Provider:** AWS

|

| 127 |

+

- **Compute Region:** US-east

|

| 128 |

+

- **Carbon Emitted (Power consumption x Time x Carbon produced based on location of power grid):** 11250 kg CO2 eq.

|

| 129 |

+

## Citation

|

| 130 |

+

@InProceedings{Rombach_2022_CVPR,

|

| 131 |

+

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

|

| 132 |

+

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

|

| 133 |

+

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

|

| 134 |

+

month = {June},

|

| 135 |

+

year = {2022},

|

| 136 |

+

pages = {10684-10695}

|

| 137 |

+

}

|

| 138 |

+

|

| 139 |

+

*This model card was written by: Robin Rombach and Patrick Esser and is based on the [DALL-E Mini model card](https://huggingface.co/dalle-mini/dalle-mini).*

|

| 140 |

+

|

assets/a-painting-of-a-fire.png

ADDED

|

assets/a-photograph-of-a-fire.png

ADDED

|

assets/a-shirt-with-a-fire-printed-on-it.png

ADDED

|

assets/a-shirt-with-the-inscription-'fire'.png

ADDED

|

assets/a-watercolor-painting-of-a-fire.png

ADDED

|

assets/birdhouse.png

ADDED

|

assets/fire.png

ADDED

|

assets/rdm-preview.jpg

ADDED

|

assets/stable-samples/img2img/mountains-1.png

ADDED

|

assets/stable-samples/img2img/mountains-2.png

ADDED

|

assets/stable-samples/img2img/mountains-3.png

ADDED

|

assets/stable-samples/img2img/sketch-mountains-input.jpg

ADDED

|

assets/stable-samples/img2img/upscaling-in.png.REMOVED.git-id

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

501c31c21751664957e69ce52cad1818b6d2f4ce

|

assets/stable-samples/img2img/upscaling-out.png.REMOVED.git-id

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

1c4bb25a779f34d86b2d90e584ac67af91bb1303

|

assets/stable-samples/txt2img/000002025.png

ADDED

|

assets/stable-samples/txt2img/000002035.png

ADDED

|

assets/stable-samples/txt2img/merged-0005.png.REMOVED.git-id

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

ca0a1af206555f0f208a1ab879e95efedc1b1c5b

|

assets/stable-samples/txt2img/merged-0006.png.REMOVED.git-id

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

999f3703230580e8c89e9081abd6a1f8f50896d4

|

assets/stable-samples/txt2img/merged-0007.png.REMOVED.git-id

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

af390acaf601283782d6f479d4cade4d78e30b26

|

assets/the-earth-is-on-fire,-oil-on-canvas.png

ADDED

|

assets/txt2img-convsample.png

ADDED

|

assets/txt2img-preview.png.REMOVED.git-id

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

51ee1c235dfdc63d4c41de7d303d03730e43c33c

|

assets/v1-variants-scores.jpg

ADDED

|

configs/latent-diffusion/cin256-v2.yaml

ADDED

|

@@ -0,0 +1,68 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

model:

|

| 2 |

+

base_learning_rate: 0.0001

|

| 3 |

+

target: ldm.models.diffusion.ddpm.LatentDiffusion

|

| 4 |

+

params:

|

| 5 |

+

linear_start: 0.0015

|

| 6 |

+

linear_end: 0.0195

|

| 7 |

+

num_timesteps_cond: 1

|

| 8 |

+

log_every_t: 200

|

| 9 |

+

timesteps: 1000

|

| 10 |

+

first_stage_key: image

|

| 11 |

+

cond_stage_key: class_label

|

| 12 |

+

image_size: 64

|

| 13 |

+

channels: 3

|

| 14 |

+

cond_stage_trainable: true

|

| 15 |

+

conditioning_key: crossattn

|

| 16 |

+

monitor: val/loss

|

| 17 |

+

use_ema: False

|

| 18 |

+

|

| 19 |

+

unet_config:

|

| 20 |

+

target: ldm.modules.diffusionmodules.openaimodel.UNetModel

|

| 21 |

+

params:

|

| 22 |

+

image_size: 64

|

| 23 |

+

in_channels: 3

|

| 24 |

+

out_channels: 3

|

| 25 |

+

model_channels: 192

|

| 26 |

+

attention_resolutions:

|

| 27 |

+

- 8

|

| 28 |

+

- 4

|

| 29 |

+

- 2

|

| 30 |

+

num_res_blocks: 2

|

| 31 |

+

channel_mult:

|

| 32 |

+

- 1

|

| 33 |

+

- 2

|

| 34 |

+

- 3

|

| 35 |

+

- 5

|

| 36 |

+

num_heads: 1

|

| 37 |

+

use_spatial_transformer: true

|

| 38 |

+

transformer_depth: 1

|

| 39 |

+

context_dim: 512

|

| 40 |

+

|

| 41 |

+

first_stage_config:

|

| 42 |

+

target: ldm.models.autoencoder.VQModelInterface

|

| 43 |

+

params:

|

| 44 |

+

embed_dim: 3

|

| 45 |

+

n_embed: 8192

|

| 46 |

+

ddconfig:

|

| 47 |

+

double_z: false

|

| 48 |

+

z_channels: 3

|

| 49 |

+

resolution: 256

|

| 50 |

+

in_channels: 3

|

| 51 |

+

out_ch: 3

|

| 52 |

+

ch: 128

|

| 53 |

+

ch_mult:

|

| 54 |

+

- 1

|

| 55 |

+

- 2

|

| 56 |

+

- 4

|

| 57 |

+

num_res_blocks: 2

|

| 58 |

+

attn_resolutions: []

|

| 59 |

+

dropout: 0.0

|

| 60 |

+

lossconfig:

|

| 61 |

+

target: torch.nn.Identity

|

| 62 |

+

|

| 63 |

+

cond_stage_config:

|

| 64 |

+

target: ldm.modules.encoders.modules.ClassEmbedder

|

| 65 |

+

params:

|

| 66 |

+

n_classes: 1001

|

| 67 |

+

embed_dim: 512

|

| 68 |

+

key: class_label

|

configs/latent-diffusion/txt2img-1p4B-eval.yaml

ADDED

|

@@ -0,0 +1,71 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

model:

|

| 2 |

+

base_learning_rate: 5.0e-05

|

| 3 |

+

target: ldm.models.diffusion.ddpm.LatentDiffusion

|

| 4 |

+

params:

|

| 5 |

+

linear_start: 0.00085

|

| 6 |

+

linear_end: 0.012

|

| 7 |

+

num_timesteps_cond: 1

|

| 8 |

+

log_every_t: 200

|

| 9 |

+

timesteps: 1000

|

| 10 |

+

first_stage_key: image

|

| 11 |

+

cond_stage_key: caption

|

| 12 |

+

image_size: 32

|

| 13 |

+

channels: 4

|

| 14 |

+

cond_stage_trainable: true

|

| 15 |

+

conditioning_key: crossattn

|

| 16 |

+

monitor: val/loss_simple_ema

|

| 17 |

+

scale_factor: 0.18215

|

| 18 |

+

use_ema: False

|

| 19 |

+

|

| 20 |

+

unet_config:

|

| 21 |

+

target: ldm.modules.diffusionmodules.openaimodel.UNetModel

|

| 22 |

+

params:

|

| 23 |

+

image_size: 32

|

| 24 |

+

in_channels: 4

|

| 25 |

+

out_channels: 4

|

| 26 |

+

model_channels: 320

|

| 27 |

+

attention_resolutions:

|

| 28 |

+

- 4

|

| 29 |

+

- 2

|

| 30 |

+

- 1

|

| 31 |

+

num_res_blocks: 2

|

| 32 |

+

channel_mult:

|

| 33 |

+

- 1

|

| 34 |

+

- 2

|

| 35 |

+

- 4

|

| 36 |

+

- 4

|

| 37 |

+

num_heads: 8

|

| 38 |

+

use_spatial_transformer: true

|

| 39 |

+

transformer_depth: 1

|

| 40 |

+

context_dim: 1280

|

| 41 |

+

use_checkpoint: true

|

| 42 |

+

legacy: False

|

| 43 |

+

|

| 44 |

+

first_stage_config:

|

| 45 |

+

target: ldm.models.autoencoder.AutoencoderKL

|

| 46 |

+

params:

|

| 47 |

+

embed_dim: 4

|

| 48 |

+

monitor: val/rec_loss

|

| 49 |

+

ddconfig:

|

| 50 |

+

double_z: true

|

| 51 |

+

z_channels: 4

|

| 52 |

+

resolution: 256

|

| 53 |

+

in_channels: 3

|

| 54 |

+

out_ch: 3

|

| 55 |

+

ch: 128

|

| 56 |

+

ch_mult:

|

| 57 |

+

- 1

|

| 58 |

+

- 2

|

| 59 |

+

- 4

|

| 60 |

+

- 4

|

| 61 |

+

num_res_blocks: 2

|

| 62 |

+

attn_resolutions: []

|

| 63 |

+

dropout: 0.0

|

| 64 |

+

lossconfig:

|

| 65 |

+

target: torch.nn.Identity

|

| 66 |

+

|

| 67 |

+

cond_stage_config:

|

| 68 |

+

target: ldm.modules.encoders.modules.BERTEmbedder

|

| 69 |

+

params:

|

| 70 |

+

n_embed: 1280

|

| 71 |

+

n_layer: 32

|

configs/retrieval-augmented-diffusion/768x768.yaml

ADDED

|

@@ -0,0 +1,68 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

model:

|

| 2 |

+

base_learning_rate: 0.0001

|

| 3 |

+

target: ldm.models.diffusion.ddpm.LatentDiffusion

|

| 4 |

+

params:

|

| 5 |

+

linear_start: 0.0015

|

| 6 |

+

linear_end: 0.015

|

| 7 |

+

num_timesteps_cond: 1

|

| 8 |

+

log_every_t: 200

|

| 9 |

+

timesteps: 1000

|

| 10 |

+

first_stage_key: jpg

|

| 11 |

+

cond_stage_key: nix

|

| 12 |

+

image_size: 48

|

| 13 |

+

channels: 16

|

| 14 |

+

cond_stage_trainable: false

|

| 15 |

+

conditioning_key: crossattn

|

| 16 |

+

monitor: val/loss_simple_ema

|

| 17 |

+

scale_by_std: false

|

| 18 |

+

scale_factor: 0.22765929

|

| 19 |

+

unet_config:

|

| 20 |

+

target: ldm.modules.diffusionmodules.openaimodel.UNetModel

|

| 21 |

+

params:

|

| 22 |

+

image_size: 48

|

| 23 |

+

in_channels: 16

|

| 24 |

+

out_channels: 16

|

| 25 |

+

model_channels: 448

|

| 26 |

+

attention_resolutions:

|

| 27 |

+

- 4

|

| 28 |

+

- 2

|

| 29 |

+

- 1

|

| 30 |

+

num_res_blocks: 2

|

| 31 |

+

channel_mult:

|

| 32 |

+

- 1

|

| 33 |

+

- 2

|

| 34 |

+

- 3

|

| 35 |

+

- 4

|

| 36 |

+

use_scale_shift_norm: false

|

| 37 |

+

resblock_updown: false

|

| 38 |

+

num_head_channels: 32

|

| 39 |

+

use_spatial_transformer: true

|

| 40 |

+

transformer_depth: 1

|

| 41 |

+

context_dim: 768

|

| 42 |

+

use_checkpoint: true

|

| 43 |

+

first_stage_config:

|

| 44 |

+

target: ldm.models.autoencoder.AutoencoderKL

|

| 45 |

+

params:

|

| 46 |

+

monitor: val/rec_loss

|

| 47 |

+

embed_dim: 16

|

| 48 |

+

ddconfig:

|

| 49 |

+

double_z: true

|

| 50 |

+

z_channels: 16

|

| 51 |

+

resolution: 256

|

| 52 |

+

in_channels: 3

|

| 53 |

+

out_ch: 3

|

| 54 |

+

ch: 128

|

| 55 |

+

ch_mult:

|

| 56 |

+

- 1

|

| 57 |

+

- 1

|

| 58 |

+

- 2

|

| 59 |

+

- 2

|

| 60 |

+

- 4

|

| 61 |

+

num_res_blocks: 2

|

| 62 |

+

attn_resolutions:

|

| 63 |

+

- 16

|

| 64 |

+

dropout: 0.0

|

| 65 |

+

lossconfig:

|

| 66 |

+

target: torch.nn.Identity

|

| 67 |

+

cond_stage_config:

|

| 68 |

+

target: torch.nn.Identity

|

configs/stable-diffusion/v1-inference.yaml

ADDED

|

@@ -0,0 +1,70 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

model:

|

| 2 |

+

base_learning_rate: 1.0e-04

|

| 3 |

+

target: ldm.models.diffusion.ddpm.LatentDiffusion

|

| 4 |

+

params:

|

| 5 |

+

linear_start: 0.00085

|

| 6 |

+

linear_end: 0.0120

|

| 7 |

+

num_timesteps_cond: 1

|

| 8 |

+

log_every_t: 200

|

| 9 |

+

timesteps: 1000

|

| 10 |

+

first_stage_key: "jpg"

|

| 11 |

+

cond_stage_key: "txt"

|

| 12 |

+

image_size: 64

|

| 13 |

+

channels: 4

|

| 14 |

+

cond_stage_trainable: false # Note: different from the one we trained before

|

| 15 |

+

conditioning_key: crossattn

|

| 16 |

+

monitor: val/loss_simple_ema

|

| 17 |

+

scale_factor: 0.18215

|

| 18 |

+

use_ema: False

|

| 19 |

+

|

| 20 |

+

scheduler_config: # 10000 warmup steps

|

| 21 |

+

target: ldm.lr_scheduler.LambdaLinearScheduler

|

| 22 |

+

params:

|

| 23 |

+

warm_up_steps: [ 10000 ]

|

| 24 |

+