Thiago Hersan committed on

Commit · 7bb7f6b

1 Parent(s): 15625a2

adds examples

- README.md +1 -1
- app.py +22 -7
- examples/map-000.jpg +0 -0
- examples/map-010.jpg +0 -0
- examples/map-018.jpg +0 -0
- examples/map-114.jpg +0 -0
- requirements.txt +3 -2

README.md

CHANGED

@@ -4,7 +4,7 @@ emoji: 🥦
 colorFrom: pink
 colorTo: indigo
 sdk: gradio
-sdk_version: 3.16.
+sdk_version: 3.16.2
 app_file: app.py
 models:
 - "facebook/maskformer-swin-large-coco"

app.py

CHANGED

@@ -1,5 +1,7 @@
+import glob
 import gradio as gr
 import numpy as np
+from PIL import Image
 from transformers import MaskFormerFeatureExtractor, MaskFormerForInstanceSegmentation
 
 
@@ -8,6 +10,8 @@ from transformers import MaskFormerFeatureExtractor, MaskFormerForInstanceSegmen
 feature_extractor = MaskFormerFeatureExtractor.from_pretrained("facebook/maskformer-swin-large-coco")
 model = MaskFormerForInstanceSegmentation.from_pretrained("facebook/maskformer-swin-large-coco")
 
+example_images = sorted(glob.glob('examples/map*.jpg'))
+
 def visualize_instance_seg_mask(img_in, mask, id2label):
     img_out = np.zeros((mask.shape[0], mask.shape[1], 3))
     image_total_pixels = mask.shape[0] * mask.shape[1]
@@ -28,7 +32,7 @@ def visualize_instance_seg_mask(img_in, mask, id2label):
             img_out[i, j, :] = id2color[mask[i, j]]
             id2count[mask[i, j]] = id2count[mask[i, j]] + 1
 
-    image_res = (0.5 * img_in + 0.5 * img_out)
+    image_res = (0.5 * img_in + 0.5 * img_out).astype(np.uint8)
 
     vegetation_count = sum([id2count[id] for id in label_ids if id2label[id] in vegetation_labels])
 
@@ -47,28 +51,39 @@ def visualize_instance_seg_mask(img_in, mask, id2label):
         f"{(100 * vegetation_count / image_total_pixels):.2f} %",
         f"{np.sqrt(vegetation_count / image_total_pixels):.2f} m"]]
 
-
+    dataframe = dataframe_vegetation_total
+    if len(dataframe) < 1:
+        dataframe = [[
+            f"",
+            f"{(0):.2f} %",
+            f"{(0):.2f} m"
+        ]]
+
+    return image_res, dataframe
 
 
-def query_image(
+def query_image(image_path):
+    img = np.array(Image.open(image_path))
     img_size = (img.shape[0], img.shape[1])
     inputs = feature_extractor(images=img, return_tensors="pt")
     outputs = model(**inputs)
     results = feature_extractor.post_process_semantic_segmentation(outputs=outputs, target_sizes=[img_size])[0]
-
-    return
+    mask_img, dataframe = visualize_instance_seg_mask(img, results.numpy(), model.config.id2label)
+    return mask_img, dataframe
 
 
 demo = gr.Interface(
     query_image,
-    inputs=[gr.Image(label="Input Image")],
+    inputs=[gr.Image(type="filepath", label="Input Image")],
     outputs=[
         gr.Image(label="Vegetation"),
         gr.DataFrame(label="Info", headers=["Object Label", "Pixel Percent", "Square Length"])
     ],
     title="Maskformer (large-coco)",
     allow_flagging="never",
-    analytics_enabled=None
+    analytics_enabled=None,
+    examples=example_images,
+    cache_examples=True
 )
 
 demo.launch(show_api=False)
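The overlay and vegetation-percentage steps touched by this diff can be sketched in isolation. This is only an illustration, not the code from app.py: `blend_and_measure`, its `vegetation_labels` parameter, and the random palette are hypothetical, since the diff does not show how `id2color` or `vegetation_labels` are defined. It counts pixels with `np.unique` instead of the per-pixel loop and keeps the `.astype(np.uint8)` cast that the commit adds, presumably so the output image carries 8-bit pixel values.

```python
import numpy as np


def blend_and_measure(img_in, mask, id2label, vegetation_labels):
    """Blend a per-label color overlay onto img_in and report vegetation coverage.

    vegetation_labels is a hypothetical parameter: the diff does not show
    which labels app.py treats as vegetation.
    """
    # Count pixels per label in one pass instead of looping over every pixel.
    label_ids, counts = np.unique(mask, return_counts=True)

    # Placeholder palette (an assumption; the app's real colors are not shown).
    rng = np.random.default_rng(0)
    palette = {int(i): rng.integers(0, 256, size=3) for i in label_ids}

    # Paint each label's region with its color.
    img_out = np.zeros((*mask.shape, 3))
    for i in label_ids:
        img_out[mask == i] = palette[int(i)]

    # Same 50/50 blend as the diff, cast to uint8 as in the new line 35.
    image_res = (0.5 * img_in + 0.5 * img_out).astype(np.uint8)

    vegetation_count = sum(
        int(c) for i, c in zip(label_ids, counts)
        if id2label[int(i)] in vegetation_labels
    )
    percent = 100 * vegetation_count / mask.size
    return image_res, percent


# Tiny usage example with made-up labels.
mask = np.array([[0, 0], [1, 2]])
img = np.full((2, 2, 3), 200, dtype=np.uint8)
res, pct = blend_and_measure(
    img, mask, {0: "tree", 1: "road", 2: "grass"}, {"tree", "grass"}
)
```

In this toy input three of the four pixels carry a vegetation label, so `pct` comes out as 75.0.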

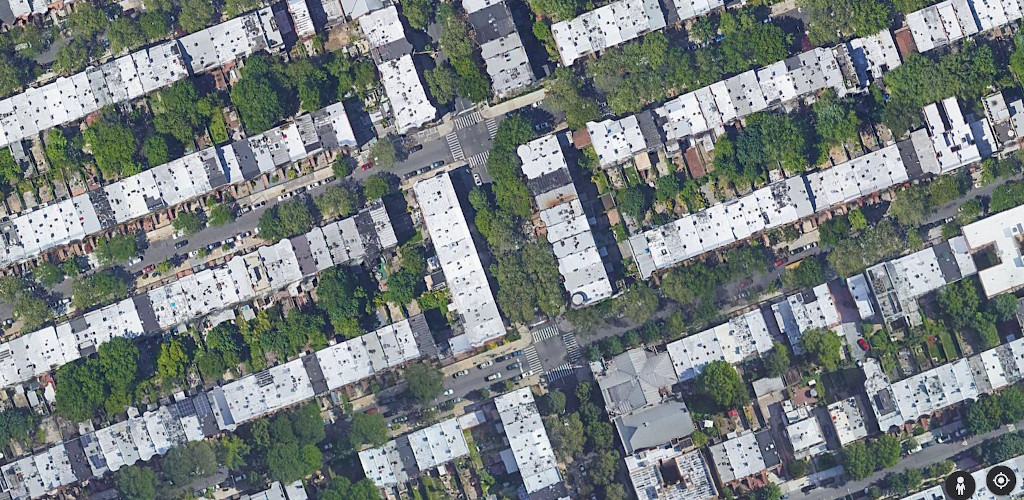

examples/map-000.jpg ADDED

examples/map-010.jpg ADDED

examples/map-018.jpg ADDED

examples/map-114.jpg ADDED
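For reference, here is a small sketch of how a pattern like `examples/map*.jpg` picks up these files. The helper `find_examples` and the temporary directory are hypothetical and exist only to make the sketch runnable; app.py simply calls `sorted(glob.glob('examples/map*.jpg'))` relative to the working directory.

```python
import glob
import os
import tempfile


def find_examples(root, pattern="map*.jpg"):
    """Return matching image paths under root/examples in sorted order."""
    # glob makes no ordering guarantee, so sort for a stable example list.
    return sorted(glob.glob(os.path.join(root, "examples", pattern)))


with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "examples"))
    # Create empty stand-ins for the added files, plus one non-matching file.
    for name in ["map-114.jpg", "map-000.jpg", "map-018.jpg", "map-010.jpg", "notes.txt"]:
        open(os.path.join(root, "examples", name), "w").close()
    names = [os.path.basename(p) for p in find_examples(root)]

# A missing directory yields an empty list rather than an error.
missing = find_examples(os.path.join(tempfile.gettempdir(), "no-such-dir-7bb7f6b"))
```

Only the four `map-*.jpg` names end up in `names`, in ascending order, and `missing` is empty.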

requirements.txt

CHANGED

@@ -1,3 +1,4 @@
-
+Pillow
+scipy
 torch
-
+transformers