Runtime error

Commit eca77db

Parent(s): b314b18

first commit

- .gitignore +133 -0

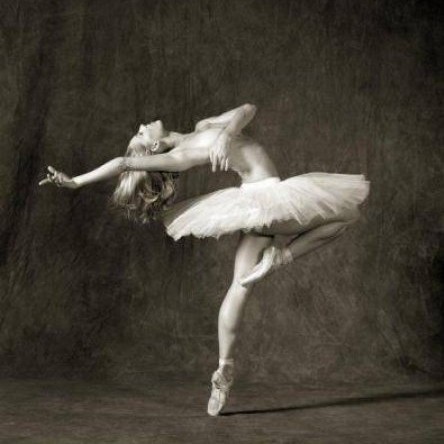

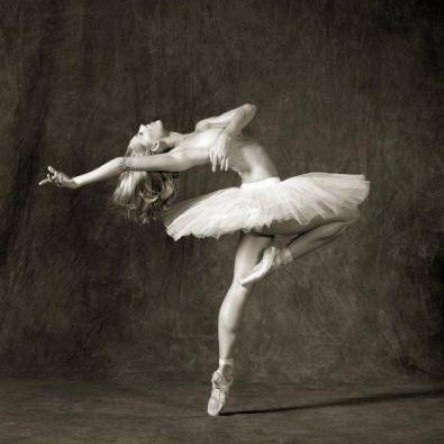

- dancing_content.jpg +0 -0

- gradio_cached_examples/14/log.csv +2 -0

- gradio_cached_examples/14/output/b3bac432e10f60f0fc74d6dd737f3e5b190aff2f/tmpftrz8no9.png +0 -0

- images/content/dancing.jpg +0 -0

- images/content/neckarfront.jpg +0 -0

- images/style/gogh.jpg +0 -0

- images/style/kandinsky.jpg +0 -0

- images/style/picasso.jpg +0 -0

- images/style/turner.jpg +0 -0

- misc/result_collage.png +0 -0

- nst/__init__.py +0 -0

- nst/losses.py +42 -0

- nst/models/__init__.py +0 -0

- nst/models/vgg19.py +94 -0

- nst/train.py +229 -0

- paper/neural_style_transfer.pdf +0 -0

- picasso_style.jpg +0 -0

- requirements.txt +7 -0

- setup.cfg +43 -0

- setup.py +14 -0

.gitignore

ADDED

```gitignore
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class
.github

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# Lightning /research
test_tube_exp/
tests/tests_tt_dir/
tests/save_dir
default/
data/
test_tube_logs/
test_tube_data/
datasets/
model_weights/
tests/save_dir
tests/tests_tt_dir/
processed/
raw/

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
.hypothesis/
.pytest_cache/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
target/

# Jupyter Notebook
.ipynb_checkpoints

# pyenv
.python-version

# celery beat schedule file
celerybeat-schedule

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/

# IDEs
.idea
.vscode

# seed project
lightning_logs/
MNIST
.DS_Store

# ignore CI testing for now
.github/
tests/
```

dancing_content.jpg

ADDED

gradio_cached_examples/14/log.csv

ADDED

```csv
output,flag,username,timestamp
/Users/abhiroop/Developer/neural-style-transfer/gradio_cached_examples/14/output/b3bac432e10f60f0fc74d6dd737f3e5b190aff2f/tmpftrz8no9.png,,,2023-09-07 19:03:10.521984
```

gradio_cached_examples/14/output/b3bac432e10f60f0fc74d6dd737f3e5b190aff2f/tmpftrz8no9.png

ADDED

images/content/dancing.jpg

ADDED

images/content/neckarfront.jpg

ADDED

images/style/gogh.jpg

ADDED

images/style/kandinsky.jpg

ADDED

images/style/picasso.jpg

ADDED

images/style/turner.jpg

ADDED

misc/result_collage.png

ADDED

nst/__init__.py

ADDED

File without changes

nst/losses.py

ADDED

```python
import torch
from torch import nn
import torch.nn.functional as F

class ContentLoss(nn.Module):
    """
    Content Loss for the neural style transfer algorithm.
    """
    def __init__(self, target: torch.Tensor, device: torch.device) -> None:
        super(ContentLoss, self).__init__()
        batch_size, channels, height, width = target.size()
        # flatten each feature map into a row: (B*C, H*W)
        target = target.view(batch_size * channels, height * width)
        # detach so the target is treated as a constant during optimization
        self.target = target.detach().to(device)

    def __str__(self) -> str:
        return "Content loss"

    def forward(self, input: torch.Tensor) -> torch.Tensor:
        batch_size, channels, height, width = input.size()
        input = input.view(batch_size * channels, height * width)
        return F.mse_loss(input, self.target)


class StyleLoss(nn.Module):
    """
    Style loss for the neural style transfer algorithm.
    """
    def __init__(self, target: torch.Tensor, device: torch.device) -> None:
        super(StyleLoss, self).__init__()
        self.target = self.compute_gram_matrix(target).detach().to(device)

    def __str__(self) -> str:
        return "Style loss"

    def forward(self, input: torch.Tensor) -> torch.Tensor:
        input = self.compute_gram_matrix(input)
        return F.mse_loss(input, self.target)

    def compute_gram_matrix(self, input: torch.Tensor) -> torch.Tensor:
        batch_size, channels, height, width = input.size()
        # (B*C, H*W) feature rows; their pairwise inner products form the Gram matrix,
        # normalised by the total number of elements B*C*H*W
        input = input.view(batch_size * channels, height * width)
        return torch.matmul(input, input.T).div(batch_size * channels * height * width)
```
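`StyleLoss.compute_gram_matrix` flattens each feature map into a row, multiplies the rows by their transpose, and divides by the element count B·C·H·W; `forward` then takes the mean squared error against the style target's Gram matrix. The dependency-free sketch below reproduces that arithmetic on a tiny made-up feature map (one image, two channels, 1×2 pixels) so the numbers can be checked by hand. The names `gram_matrix` and `mse` are illustrative only, not part of the repository.

```python
from typing import List

Matrix = List[List[float]]


def gram_matrix(features: Matrix, height: int, width: int) -> Matrix:
    """Gram matrix of (B*C, H*W) feature rows, scaled like compute_gram_matrix."""
    rows = len(features)          # B * C flattened feature maps
    norm = rows * height * width  # B * C * H * W, the total element count
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) / norm for j in range(rows)]
        for i in range(rows)
    ]


def mse(a: Matrix, b: Matrix) -> float:
    """Mean squared error over all entries (F.mse_loss with its default 'mean' reduction)."""
    diffs = [(x - y) ** 2 for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


# style target: one image, two channels of size 1x2, already flattened to (2, 2)
style_features = [[1.0, 2.0],
                  [3.0, 4.0]]
# features of the image being optimized at the same layer
input_features = [[1.0, 2.0],
                  [3.0, 2.0]]

target_gram = gram_matrix(style_features, height=1, width=2)
print(target_gram)  # [[1.25, 2.75], [2.75, 6.25]]
print(mse(gram_matrix(input_features, height=1, width=2), target_gram))  # 2.75
```

Dividing by the element count keeps the per-layer style losses on comparable scales even though deeper layers have more channels and fewer pixels; the `beta` and `style_layer_weight` arguments in `train.py` then set the overall balance.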

nst/models/__init__.py

ADDED

File without changes

nst/models/vgg19.py

ADDED

```python
import torch
from torch import nn
import torch.nn.functional as F

from typing import Tuple, Dict, List, Optional, Union

class Normalization(nn.Module):
    """
    Normalization module for VGG19.
    """
    def __init__(self, mean: torch.Tensor, std: torch.Tensor) -> None:
        super(Normalization, self).__init__()
        # (C,) -> (C, 1, 1) so the statistics broadcast over (B, C, H, W) inputs;
        # registered as buffers so that model.to(device) moves them with the weights
        self.register_buffer("mean", mean.view(-1, 1, 1))
        self.register_buffer("std", std.view(-1, 1, 1))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return (x - self.mean) / self.std


class ConvBlock1(nn.Module):
    """
    Convolution block for VGG19 [conv2d, conv2d, maxpool2d].
    """
    def __init__(self, in_channels: int, out_channels: int) -> None:
        super(ConvBlock1, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=3, padding=1)
        self.relu1 = nn.ReLU(inplace=False)
        self.conv2 = nn.Conv2d(in_channels=out_channels, out_channels=out_channels, kernel_size=3, padding=1)
        self.relu2 = nn.ReLU(inplace=False)
        self.max_pool2d = nn.MaxPool2d(kernel_size=2)

    def forward(self, x: torch.Tensor) -> Tuple[torch.Tensor, ...]:
        conv1 = self.relu1(self.conv1(x))
        conv2 = self.relu2(self.conv2(conv1))
        out = self.max_pool2d(conv2)
        return conv1, conv2, out


class ConvBlock2(nn.Module):
    """
    Convolution block for VGG19 [conv2d, conv2d, conv2d, conv2d, maxpool2d].
    """
    def __init__(self, in_channels: int, out_channels: int) -> None:
        super(ConvBlock2, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=3, padding=1)
        self.relu1 = nn.ReLU(inplace=False)
        self.conv2 = nn.Conv2d(in_channels=out_channels, out_channels=out_channels, kernel_size=3, padding=1)
        self.relu2 = nn.ReLU(inplace=False)
        self.conv3 = nn.Conv2d(in_channels=out_channels, out_channels=out_channels, kernel_size=3, padding=1)
        self.relu3 = nn.ReLU(inplace=False)
        self.conv4 = nn.Conv2d(in_channels=out_channels, out_channels=out_channels, kernel_size=3, padding=1)
        self.relu4 = nn.ReLU(inplace=False)
        self.max_pool2d = nn.MaxPool2d(kernel_size=2)

    def forward(self, x: torch.Tensor) -> Tuple[torch.Tensor, ...]:
        conv1 = self.relu1(self.conv1(x))
        conv2 = self.relu2(self.conv2(conv1))
        conv3 = self.relu3(self.conv3(conv2))
        conv4 = self.relu4(self.conv4(conv3))
        # pool the output of the last convolution (pooling conv2 would skip conv3 and conv4)
        out = self.max_pool2d(conv4)
        return conv1, conv2, conv3, conv4, out


class VGG19(nn.Module):
    """
    VGG19 module with only the feature extractor.
    """
    def __init__(self, in_channels: int=3, out_channels: int=64,
                 mean: Optional[torch.Tensor]=None, std: Optional[torch.Tensor]=None) -> None:
        super(VGG19, self).__init__()
        # fall back to the ImageNet statistics that the pretrained weights expect
        if mean is None:
            mean = torch.tensor([0.485, 0.456, 0.406])
        if std is None:
            std = torch.tensor([0.229, 0.224, 0.225])
        self.norm = Normalization(mean=mean, std=std)
        self.conv1 = ConvBlock1(in_channels=in_channels, out_channels=out_channels)
        self.conv2 = ConvBlock1(in_channels=out_channels, out_channels=out_channels * 2)
        self.conv3 = ConvBlock2(in_channels=out_channels * 2, out_channels=out_channels * 4)
        self.conv4 = ConvBlock2(in_channels=out_channels * 4, out_channels=out_channels * 8)
        self.conv5 = ConvBlock2(in_channels=out_channels * 8, out_channels=out_channels * 8)

    def forward(self, x: torch.Tensor) -> Dict[str, Union[List[torch.Tensor], torch.Tensor]]:
        x = self.norm(x)
        conv1_1, conv1_2, out = self.conv1(x)
        conv2_1, conv2_2, out = self.conv2(out)
        conv3_1, conv3_2, conv3_3, conv3_4, out = self.conv3(out)
        conv4_1, conv4_2, conv4_3, conv4_4, out = self.conv4(out)
        conv5_1, conv5_2, conv5_3, conv5_4, out = self.conv5(out)
        outputs = {
            "conv1": [conv1_1, conv1_2],
            "conv2": [conv2_1, conv2_2],
            "conv3": [conv3_1, conv3_2, conv3_3, conv3_4],
            "conv4": [conv4_1, conv4_2, conv4_3, conv4_4],
            "conv5": [conv5_1, conv5_2, conv5_3, conv5_4],
            "out": out
        }

        return outputs
```
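The `matching_keys` table in `load_vgg19_weights` (in `nst/train.py`) hard-codes where each convolution of this module sits inside torchvision's flat `vgg19().features` sequence. Assuming torchvision builds that sequence from the VGG19 configuration list shown below, with each number expanding to a `Conv2d` followed by a `ReLU` and each `"M"` to a single `MaxPool2d` (no batch norm), the table can be derived rather than typed by hand. `build_matching_keys` and `VGG19_CFG` are hypothetical names for this sketch; it is pure Python and does not import torch.

```python
from typing import Dict, List, Union

# VGG19 layer configuration (assumed to mirror torchvision's): an int is a 3x3 conv
# with that many output channels, "M" is a 2x2 max-pool that ends a block
VGG19_CFG: List[Union[int, str]] = [64, 64, "M",
                                    128, 128, "M",
                                    256, 256, 256, 256, "M",
                                    512, 512, 512, 512, "M",
                                    512, 512, 512, 512, "M"]


def build_matching_keys(cfg: List[Union[int, str]]) -> Dict[str, str]:
    """Map this repo's 'convB.convJ.<param>' names to indices in vgg19().features."""
    mapping: Dict[str, str] = {}
    index = 0          # position inside the flat nn.Sequential
    block, conv = 1, 1
    for layer in cfg:
        if layer == "M":
            index += 1                  # MaxPool2d occupies one slot
            block, conv = block + 1, 1  # the pool closes the current block
            continue
        for param in ("weight", "bias"):
            mapping[f"conv{block}.conv{conv}.{param}"] = f"{index}.{param}"
        index += 2                      # Conv2d + ReLU
        conv += 1
    return mapping


keys = build_matching_keys(VGG19_CFG)
print(len(keys))                   # 32 (16 conv layers x weight/bias)
print(keys["conv3.conv4.weight"])  # 16.weight
print(keys["conv5.conv1.bias"])    # 28.bias
```

The derived indices (0, 2, 5, 7, 10 ... 34) agree with the hand-written table, which is a cheap consistency check if the block layout is ever changed.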

nst/train.py

ADDED

```python
import os
import sys
sys.path.append(os.path.abspath(os.path.pardir))
from argparse import ArgumentParser

import torch
from torch import nn
import torch.nn.functional as F
import torch.optim as optim

from torchvision import transforms
from torchvision.models import vgg19

from PIL import Image
import matplotlib.pyplot as plt

from nst.models.vgg19 import VGG19
from nst.losses import ContentLoss, StyleLoss

from tqdm import tqdm
from typing import List, Union

def main() -> None:
    # command line args
    parser = ArgumentParser()
    # type=bool would map any non-empty string (even "False") to True, so compare the text explicitly
    parser.add_argument("--use_gpu", default=True, type=lambda s: s.lower() in ("true", "1", "yes"))
    parser.add_argument("--content_dir", default="../images/content/dancing.jpg", type=str)
    parser.add_argument("--style_dir", default="../images/style/picasso.jpg", type=str)
    parser.add_argument("--input_image", default="content", type=str)
    parser.add_argument("--output_dir", default="../result/result.jpg", type=str)
    parser.add_argument("--iterations", default=100, type=int)
    parser.add_argument("--alpha", default=1.0, type=float)
    parser.add_argument("--beta", default=1e6, type=float)
    parser.add_argument("--style_layer_weight", default=1.0, type=float)
    args = parser.parse_args()

    device = torch.device("cuda") if (torch.cuda.is_available() and args.use_gpu) else torch.device("cpu")
    print(f"training on device {device}")

    # content and style images
    content = image_loader(args.content_dir, device)
    style = image_loader(args.style_dir, device)

    # input image: start from the content image, the style image, or random noise
    if args.input_image == "content":
        x = content.clone()
    elif args.input_image == "style":
        x = style.clone()
    else:
        x = torch.randn(content.data.size(), device=device)

    # mean and std for vgg19
    mean = torch.tensor([0.485, 0.456, 0.406]).to(device)
    std = torch.tensor([0.229, 0.224, 0.225]).to(device)

    # vgg19 model
    model = VGG19(mean=mean, std=std).to(device=device)
    model = load_vgg19_weights(model, device)
    # freeze the network: only the pixels of x are optimized
    model = model.eval().requires_grad_(False)
    # LBFGS optimizer like in paper
    optimizer = optim.LBFGS([x.requires_grad_()])

    # computing content and style representations
    content_outputs = model(content)
    style_outputs = model(style)

    # defining content and style losses (conv4_2 for content, conv1_1 ... conv5_1 for style)
    content_loss = ContentLoss(content_outputs["conv4"][1], device)
    style_losses = []
    for i in range(1, 6):
        style_losses.append(StyleLoss(style_outputs[f"conv{i}"][0], device))

    # run style transfer
    output = train(model, optimizer, content_loss, style_losses, x,
                   iterations=args.iterations, alpha=args.alpha, beta=args.beta,
                   style_weight=args.style_layer_weight)
    output = output.detach().to("cpu")

    # save result, creating the output folder if it does not exist yet
    os.makedirs(os.path.dirname(args.output_dir) or ".", exist_ok=True)
    plt.imsave(args.output_dir, output[0].permute(1, 2, 0).numpy())

def image_loader(path: str, device: torch.device=torch.device("cuda")) -> torch.Tensor:
    """
    Loads and resizes the image.

    Args:
        path (str): Path to the image.
        device (torch.device): Device to load the image onto.

    Returns:
        img (torch.Tensor): Loaded image as a (1, 3, 512, 512) torch.Tensor in the 0-1 range.
    """
    transform = transforms.Compose([
        transforms.Resize((512, 512)),
        transforms.ToTensor(),
    ])
    # convert to RGB so grayscale or RGBA files also yield 3 channels
    img = Image.open(path).convert("RGB")
    img = transform(img)
    img = img.unsqueeze(0).to(device=device)
    return img

def load_vgg19_weights(model: nn.Module, device: torch.device) -> nn.Module:
    """
    Loads VGG19 pretrained weights from ImageNet for style transfer.

    Args:
        model (nn.Module): VGG19 feature module with randomized weights.
        device (torch.device): The device to load the model onto.

    Returns:
        model (nn.Module): VGG19 module with pretrained ImageNet weights loaded.
    """
    # torchvision >= 0.13 deprecates pretrained=True in favour of weights=VGG19_Weights.IMAGENET1K_V1
    pretrained_model = vgg19(pretrained=True).features.to(device).eval()

    # parameter names of our VGG19 -> indices of the conv layers inside torchvision's vgg19().features
    matching_keys = {
        "conv1.conv1.weight": "0.weight",
        "conv1.conv1.bias": "0.bias",
        "conv1.conv2.weight": "2.weight",
        "conv1.conv2.bias": "2.bias",

        "conv2.conv1.weight": "5.weight",
        "conv2.conv1.bias": "5.bias",
        "conv2.conv2.weight": "7.weight",
        "conv2.conv2.bias": "7.bias",

        "conv3.conv1.weight": "10.weight",
        "conv3.conv1.bias": "10.bias",
        "conv3.conv2.weight": "12.weight",
        "conv3.conv2.bias": "12.bias",
        "conv3.conv3.weight": "14.weight",
        "conv3.conv3.bias": "14.bias",
        "conv3.conv4.weight": "16.weight",
        "conv3.conv4.bias": "16.bias",

        "conv4.conv1.weight": "19.weight",
        "conv4.conv1.bias": "19.bias",
        "conv4.conv2.weight": "21.weight",
        "conv4.conv2.bias": "21.bias",
        "conv4.conv3.weight": "23.weight",
        "conv4.conv3.bias": "23.bias",
        "conv4.conv4.weight": "25.weight",
        "conv4.conv4.bias": "25.bias",

        "conv5.conv1.weight": "28.weight",
        "conv5.conv1.bias": "28.bias",
        "conv5.conv2.weight": "30.weight",
        "conv5.conv2.bias": "30.bias",
        "conv5.conv3.weight": "32.weight",
        "conv5.conv3.bias": "32.bias",
        "conv5.conv4.weight": "34.weight",
        "conv5.conv4.bias": "34.bias",
    }

    pretrained_dict = pretrained_model.state_dict()
    model_dict = model.state_dict()

    for key, value in matching_keys.items():
        model_dict[key] = pretrained_dict[value]

    model.load_state_dict(model_dict)

    return model


def train(model: nn.Module, optimizer: optim.Optimizer, content_loss: ContentLoss, style_losses: List[StyleLoss],
          x: torch.Tensor, iterations: int=100, alpha: float=1.0, beta: float=1e6,
          style_weight: Union[int, float]=1.0) -> torch.Tensor:
    """
    Train the neural style transfer algorithm.

    Args:
        model (nn.Module): The VGG19 feature extractor for training the style transfer algorithm.
        optimizer (optim.Optimizer): The optimizer to use; its step() is called with a closure, as LBFGS requires.
        content_loss (ContentLoss): The content loss to preserve the content representation during style transfer.
        style_losses (List[StyleLoss]): A list of style loss objects to preserve the style representation across
            different layers during style transfer.
        x (torch.Tensor): The input image for style transfer.
        iterations (int): Number of optimizer steps to run.
        alpha (float): The weight given to content loss while computing the total loss.
        beta (float): The weight given to style loss while computing the total loss.
        style_weight (Union[int, float]): The weight given to the style loss of each layer while computing the
            total style loss.

    Returns:
        x (torch.Tensor): The input image with the content and style transferred.
    """

    with tqdm(range(iterations)) as pbar:
        for iteration in pbar:
            pbar.set_description(f"Iteration: {iteration}")

            def closure():
                optimizer.zero_grad()

                # correcting to 0-1 range
                x.data.clamp_(0, 1)
                outputs = model(x)

                # input content and style representations
                content_feature_maps = outputs["conv4"][1]
                style_feature_maps = []
                for i in range(1, 6):
                    style_feature_maps.append(outputs[f"conv{i}"][0])

                # input content and style losses
                total_content_loss = content_loss(content_feature_maps)

                total_style_loss = 0
                for feature_map, style_loss in zip(style_feature_maps, style_losses):
                    total_style_loss += (style_weight * style_loss(feature_map))

                # total loss
                loss = (alpha * total_content_loss) + (beta * total_style_loss)
                loss.backward()

                pbar.set_postfix({
                    "content loss": total_content_loss.item(),
                    "style loss": total_style_loss.item(),
                    "total loss": loss.item()
                })

                return loss

            # LBFGS may evaluate the closure several times within a single step
            optimizer.step(closure)

    # final correction
    x.data.clamp_(0, 1)
    return x


if __name__ == "__main__":
    main()
```
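argparse applies `type` to the raw command-line string, and `bool("False")` is `True` because any non-empty string is truthy, so a `--use_gpu` option declared with `type=bool` cannot be switched off from the command line. A minimal sketch of an explicit converter follows; `str2bool` is a hypothetical helper name, and the accepted spellings are a choice made for this example.

```python
from argparse import ArgumentParser, ArgumentTypeError


def str2bool(text: str) -> bool:
    """Parse common true/false spellings; reject anything else with a usage error."""
    value = text.strip().lower()
    if value in ("true", "1", "yes", "y"):
        return True
    if value in ("false", "0", "no", "n"):
        return False
    raise ArgumentTypeError(f"expected a boolean, got {text!r}")


# the pitfall: bool() only checks whether the string is empty
print(bool("False"))  # True

parser = ArgumentParser()
parser.add_argument("--use_gpu", default=True, type=str2bool)
print(parser.parse_args(["--use_gpu", "False"]).use_gpu)  # False
print(parser.parse_args([]).use_gpu)                      # True (non-string default is used as-is)
```

On Python 3.9+, `action=argparse.BooleanOptionalAction` is an alternative that generates a `--use_gpu` / `--no-use_gpu` switch pair instead of taking a value.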

paper/neural_style_transfer.pdf

ADDED

The diff for this file is too large to render.

picasso_style.jpg

ADDED

requirements.txt

ADDED

```text
torch
torchvision
numpy
matplotlib
tqdm
pillow
gradio
```

setup.cfg

ADDED

```ini
[tool:pytest]
norecursedirs =
    .git
    dist
    build
addopts =
    --strict
    --doctest-modules
    --durations=0

[coverage:report]
exclude_lines =
    pragma: no-cover
    pass

[flake8]
max-line-length = 120
exclude = .tox,*.egg,build,temp
select = E,W,F
doctests = True
verbose = 2
# https://pep8.readthedocs.io/en/latest/intro.html#error-codes
format = pylint
# see: https://www.flake8rules.com/
# (comments sit on their own lines: inline comments after codes are read as part of the value)
ignore =
    # Do not assign a lambda expression, use a def
    E731
    # Line break occurred after a binary operator
    W504
    # Module imported but unused
    F401
    # Local variable name is assigned to but never used
    F841
    # Invalid escape sequence 'x'
    W605

# setup.cfg or tox.ini
[check-manifest]
ignore =
    *.yml
    .github
    .github/*

[metadata]
license_file = LICENSE
description-file = README.md
# long_description = file:README.md
# long_description_content_type = text/markdown
```
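flake8 reads its `[flake8]` section with Python's `configparser`, which by default does not strip inline `#` comments from values, so an entry such as `E731 # Do not assign a lambda expression, use a def` reaches flake8 with the comment text attached. As far as we know, flake8 6.0 and later reject such entries as invalid error codes, whereas comments placed on their own lines are skipped by the parser. The stdlib-only sketch below shows just the parsing difference; it does not run flake8, and `ignore_entries` is a hypothetical helper.

```python
import configparser
from typing import List

# each code followed by an inline comment on the same line
INLINE = """
[flake8]
ignore =
    E731 # Do not assign a lambda expression, use a def
    W504 # Line break occurred after a binary operator
"""

# the same codes with every comment moved onto its own line
OWN_LINE = """
[flake8]
ignore =
    # Do not assign a lambda expression, use a def
    E731
    # Line break occurred after a binary operator
    W504
"""


def ignore_entries(text: str) -> List[str]:
    """Return the non-empty lines of the [flake8] ignore value as configparser sees them."""
    parser = configparser.RawConfigParser()
    parser.read_string(text)
    raw = parser.get("flake8", "ignore")
    return [line.strip() for line in raw.splitlines() if line.strip()]


print(ignore_entries(INLINE))    # comment text is still attached to each code
print(ignore_entries(OWN_LINE))  # ['E731', 'W504']
```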

setup.py

ADDED

```python
#!/usr/bin/env python

from setuptools import setup, find_packages

setup(
    name='nst',
    version='0.1.0',
    description='Implementation of Neural Style Transfer using PyTorch',
    author='Abhiroop Tejomay',
    author_email='abhirooptejomay@gmail.com',
    url='https://github.com/visualCalculus/neural-style-transfer',
    packages=find_packages(include=["nst", "nst.*"]),
)
```