update

- 5kstbz-0001.png +0 -0
- Blue_Jay_0044_62759.jpg +0 -0
- ILSVRC2012_val_00000008.JPEG +0 -0
- app.py +19 -5

5kstbz-0001.png ADDED

Blue_Jay_0044_62759.jpg ADDED

ILSVRC2012_val_00000008.JPEG ADDED

app.py CHANGED

@@ -58,9 +58,8 @@ def clear_chat(history):

|

|

| 58 |

|

| 59 |

|

| 60 |

with gr.Blocks() as demo:

|

| 61 |

-

gr.Markdown("# BLIP-2")

|

| 62 |

gr.Markdown(

|

| 63 |

-

"## Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models"

|

| 64 |

)

|

| 65 |

gr.Markdown(

|

| 66 |

"This demo uses `OPT2.7B` weights. For more information please see [Github](https://github.com/salesforce/LAVIS/tree/main/projects/blip2) or [Paper](https://arxiv.org/abs/2301.12597)."

|

|

@@ -71,7 +70,7 @@ with gr.Blocks() as demo:

|

|

| 71 |

input_image = gr.Image(label="Image", type="pil")

|

| 72 |

caption_type = gr.Radio(

|

| 73 |

["Beam Search", "Nucleus Sampling"],

|

| 74 |

-

label="Caption

|

| 75 |

value="Beam Search",

|

| 76 |

)

|

| 77 |

btn_caption = gr.Button("Generate Caption")

|

|

@@ -98,8 +97,23 @@ with gr.Blocks() as demo:

|

|

| 98 |

btn_clear.click(clear_chat, inputs=[chat_state], outputs=[chatbot, chat_state])

|

| 99 |

|

| 100 |

gr.Examples(

|

| 101 |

-

[

|

| 102 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 103 |

)

|

| 104 |

|

|

|

|

|

|

|

| 105 |

demo.launch()

|

|

|

|

| 58 |

|

| 59 |

|

| 60 |

with gr.Blocks() as demo:

|

|

|

|

| 61 |

gr.Markdown(

|

| 62 |

+

"## BLIP-2 - Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models"

|

| 63 |

)

|

| 64 |

gr.Markdown(

|

| 65 |

"This demo uses `OPT2.7B` weights. For more information please see [Github](https://github.com/salesforce/LAVIS/tree/main/projects/blip2) or [Paper](https://arxiv.org/abs/2301.12597)."

|

|

|

|

| 70 |

input_image = gr.Image(label="Image", type="pil")

|

| 71 |

caption_type = gr.Radio(

|

| 72 |

["Beam Search", "Nucleus Sampling"],

|

| 73 |

+

label="Caption Decoding Strategy",

|

| 74 |

value="Beam Search",

|

| 75 |

)

|

| 76 |

btn_caption = gr.Button("Generate Caption")

|

|

|

|

| 97 |

btn_clear.click(clear_chat, inputs=[chat_state], outputs=[chatbot, chat_state])

|

| 98 |

|

| 99 |

gr.Examples(

|

| 100 |

+

[

|

| 101 |

+

["./merlion.png", "Beam Search", "which city is this?"],

|

| 102 |

+

[

|

| 103 |

+

"./Blue_Jay_0044_62759.jpg",

|

| 104 |

+

"Beam Search",

|

| 105 |

+

"what is the name of this bird?",

|

| 106 |

+

],

|

| 107 |

+

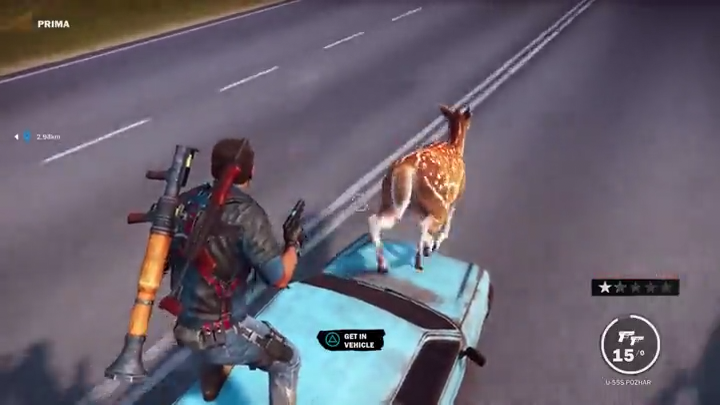

["./5kstbz-0001.png", "Beam Search", "where is the man standing?"],

|

| 108 |

+

[

|

| 109 |

+

"ILSVRC2012_val_00000008.JPEG",

|

| 110 |

+

"eam Search",

|

| 111 |

+

"Name the colors of macarons you see in the image.",

|

| 112 |

+

],

|

| 113 |

+

],

|

| 114 |

+

inputs=[input_image, caption_type, question_txt],

|

| 115 |

)

|

| 116 |

|

| 117 |

+

gr.Markdown("Sample images are taken from ImageNet, CUB and GamePhysics datasets.")

|

| 118 |

+

|

| 119 |

demo.launch()

|
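Each row passed to `gr.Examples` has to line up with `inputs=[input_image, caption_type, question_txt]`: an image path that exists next to `app.py`, a caption type that is one of the `gr.Radio` choices, and a question. A mistyped choice (for example "eam Search" instead of "Beam Search") or a missing image file is easy to overlook in review. The following is a minimal sketch of a pre-flight check, assuming rows are plain `[image_path, caption_type, question]` lists; `find_bad_examples` and `CAPTION_CHOICES` are hypothetical names and are not part of `app.py` or of Gradio.

```python
from pathlib import Path

# Hypothetical constant: mirrors the choices given to gr.Radio for caption_type.
CAPTION_CHOICES = ["Beam Search", "Nucleus Sampling"]


def find_bad_examples(examples, choices=CAPTION_CHOICES,
                      exists=lambda p: Path(p).is_file()):
    """Return (row_index, reason) pairs for example rows that look broken.

    Hypothetical helper. Each row is assumed to be
    [image_path, caption_type, question]. By default, paths are checked
    relative to the current working directory; pass a different `exists`
    callable to change that (or to test without touching the filesystem).
    """
    problems = []
    for i, row in enumerate(examples):
        # A row must supply exactly one value per input component.
        if len(row) != 3:
            problems.append((i, f"expected 3 values, got {len(row)}"))
            continue
        image_path, caption_type, question = row
        # The caption type must be one of the Radio choices.
        if caption_type not in choices:
            problems.append((i, f"unknown caption type {caption_type!r}"))
        # The image file must be present.
        if not exists(image_path):
            problems.append((i, f"missing image {image_path!r}"))
        # A blank question gives the model nothing to answer.
        if not question.strip():
            problems.append((i, "empty question"))
    return problems
```

One could run it on the examples list before handing the list to `gr.Examples` and stop if it returns anything. The `exists` parameter is injectable so the check can be unit-tested against a fixed set of file names instead of the real directory.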