Alex Strick van Linschoten

committed on

Commit 64c717a

1 Parent(s): ef4decc

upload app

- README.md +6 -5
- app.py +107 -0
- article.md +45 -0
- packages.txt +1 -0
- requirements.txt +10 -0
- test1.jpg +0 -0
- test1.pdf +0 -0
- test2.pdf +0 -0

README.md

CHANGED

@@ -1,13 +1,14 @@
 ---
 title: Redaction Detector
-emoji:
-colorFrom:
-colorTo:
+emoji: 📄
+colorFrom: blue
+colorTo: yellow
 sdk: gradio
 sdk_version: 2.9.4
 app_file: app.py
-pinned:
+pinned: true
 license: apache-2.0
 ---
 
-Check out the configuration reference at
+Check out the configuration reference at
+https://huggingface.co/docs/hub/spaces#reference

app.py

ADDED

@@ -0,0 +1,107 @@
+import gradio as gr
+import skimage
+from fastai.learner import load_learner
+from fastai.vision.all import *
+from huggingface_hub import hf_hub_download
+import fitz
+import tempfile
+import os
+from fpdf import FPDF
+
+learn = load_learner(
+    hf_hub_download("strickvl/redaction-classifier-fastai", "model.pkl")
+)
+
+labels = learn.dls.vocab
+
+
+def predict(pdf, confidence, generate_file):
+    document = fitz.open(pdf.name)
+    results = []
+    images = []
+    tmp_dir = tempfile.gettempdir()
+    for page_num, page in enumerate(document, start=1):
+        image_pixmap = page.get_pixmap()
+        image = image_pixmap.tobytes()
+        _, _, probs = learn.predict(image)
+        results.append(
+            {labels[i]: float(probs[i]) for i in range(len(labels))}
+        )
+        if probs[0] > (confidence / 100):
+            redaction_count = len(images)
+            image_pixmap.save(os.path.join(tmp_dir, f"page-{page_num}.png"))
+            images.append(
+                [
+                    f"Redacted page #{redaction_count + 1} on page {page_num}",
+                    os.path.join(tmp_dir, f"page-{page_num}.png"),
+                ]
+            )
+
+    redacted_pages = [
+        str(page + 1)
+        for page in range(len(results))
+        if results[page]["redacted"] > (confidence / 100)
+    ]
+    report = os.path.join(tmp_dir, "redacted_pages.pdf")
+    if generate_file:
+        pdf = FPDF()
+        pdf.set_auto_page_break(0)
+        imagelist = sorted(
+            [i for i in os.listdir(tmp_dir) if i.endswith("png")]
+        )
+        for image in imagelist:
+            pdf.add_page()
+            pdf.image(os.path.join(tmp_dir, image), w=190, h=280)
+        pdf.output(report, "F")
+    text_output = f"A total of {len(redacted_pages)} pages were redacted. \n\n The redacted page numbers were: {', '.join(redacted_pages)}."
+    if generate_file:
+        return text_output, images, report
+    else:
+        return text_output, images, None
+
+
+title = "Redaction Detector"
+
+description = "A classifier trained on publicly released redacted (and unredacted) FOIA documents, using [fastai](https://github.com/fastai/fastai)."
+
+with open("article.md") as f:
+    article = f.read()
+
+examples = [["test1.pdf", 80, False], ["test2.pdf", 80, False]]
+interpretation = "default"
+enable_queue = True
+theme = "grass"
+allow_flagging = "never"
+
+demo = gr.Interface(
+    fn=predict,
+    inputs=[
+        "file",
+        gr.inputs.Slider(
+            minimum=0,
+            maximum=100,
+            step=None,
+            default=80,
+            label="Confidence",
+            optional=False,
+        ),
+        "checkbox",
+    ],
+    outputs=[
+        gr.outputs.Textbox(label="Document Analysis"),
+        gr.outputs.Carousel(["text", "image"], label="Redacted pages"),
+        gr.outputs.File(label="Download redacted pages"),
+    ],
+    title=title,
+    description=description,
+    article=article,
+    theme=theme,
+    allow_flagging=allow_flagging,
+    examples=examples,
+    interpretation=interpretation,
+)
+
+demo.launch(
+    cache_examples=True,
+    enable_queue=enable_queue,
+)
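Note on the page selection and report ordering in `predict`: the function turns the slider value into a probability threshold (`confidence / 100`), keeps the pages whose "redacted" probability exceeds it, and later collects the saved `page-<n>.png` files with `sorted(os.listdir(tmp_dir))`. A plain string sort places `page-10.png` before `page-2.png`, and a shared temp directory may also hold PNGs from earlier runs. The sketch below is one possible way to handle both steps using only the standard library. It is not part of this commit; the helper names `pages_over_threshold` and `order_page_images` are hypothetical, and the sample probabilities are made up for illustration.

```python
import re

# Hypothetical helpers for illustration only; they are not in app.py.

PAGE_FILE = re.compile(r"^page-(\d+)\.png$")


def pages_over_threshold(results, confidence, label="redacted"):
    """Return 1-based page numbers whose `label` probability exceeds the threshold.

    `results` is a list of per-page dicts mapping label -> probability,
    and `confidence` is a percentage between 0 and 100 (as on the slider).
    """
    threshold = confidence / 100
    return [
        page_num
        for page_num, probs in enumerate(results, start=1)
        if probs[label] > threshold
    ]


def order_page_images(filenames):
    """Keep only 'page-<n>.png' names and sort them by numeric page number."""
    matched = []
    for name in filenames:
        match = PAGE_FILE.match(name)
        if match:
            matched.append((int(match.group(1)), name))
    return [name for _, name in sorted(matched)]


if __name__ == "__main__":
    # Made-up probabilities standing in for the classifier output.
    sample_results = [
        {"redacted": 0.95, "unredacted": 0.05},
        {"redacted": 0.10, "unredacted": 0.90},
        {"redacted": 0.81, "unredacted": 0.19},
    ]
    print(pages_over_threshold(sample_results, 80))
    print(order_page_images(["page-10.png", "page-2.png", "notes.png", "page-1.png"]))
```

Filtering on the exact `page-<n>.png` pattern is a design choice made here so that unrelated PNG files are skipped; it would not, on its own, distinguish pages left over from a previous document, which would need a per-request directory (for example via `tempfile.mkdtemp()`).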

article.md

ADDED

@@ -0,0 +1,45 @@
+I've been working through the first two lessons of
+[the fastai course](https://course.fast.ai/). For lesson one I trained a model
+to recognise my cat, Mr Blupus. For lesson two the emphasis is on getting those
+models out in the world as some kind of demo or application.
+[Gradio](https://gradio.app) and
+[Huggingface Spaces](https://huggingface.co/spaces) makes it super easy to get a
+prototype of your model on the internet.
+
+This model has an accuracy of ~96% on the validation dataset.
+
+## The Dataset
+
+I downloaded a few thousand publicly-available FOIA documents from a government
+website. I split the PDFs up into individual `.jpg` files and then used
+[Prodigy](https://prodi.gy/) to annotate the data. (This process was described
+in
+[a blogpost written last year](https://mlops.systems/fastai/redactionmodel/computervision/datalabelling/2021/09/06/redaction-classification-chapter-2.html).)
+
+## Training the model
+
+I trained the model with fastai's flexible `vision_learner`, fine-tuning
+`resnet18` which was both smaller than `resnet34` (no surprises there) and less
+liable to early overfitting. I trained the model for 10 epochs.
+
+## Further Reading
+
+This initial dataset spurred an ongoing interest in the domain and I've since
+been working on the problem of object detection, i.e. identifying exactly which
+parts of the image contain redactions.
+
+Some of the key blogs I've written about this project:
+
+- How to annotate data for an object detection problem with Prodigy
+([link](https://mlops.systems/redactionmodel/computervision/datalabelling/2021/11/29/prodigy-object-detection-training.html))
+- How to create synthetic images to supplement a small dataset
+([link](https://mlops.systems/redactionmodel/computervision/python/tools/2022/02/10/synthetic-image-data.html))
+- How to use error analysis and visual tools like FiftyOne to improve model
+performance
+([link](https://mlops.systems/redactionmodel/computervision/tools/debugging/jupyter/2022/03/12/fiftyone-computervision.html))
+- Creating more synthetic data focused on the tasks my model finds hard
+([link](https://mlops.systems/tools/redactionmodel/computervision/2022/04/06/synthetic-data-results.html))
+- Data validation for object detection / computer vision (a three part series —
+[part 1](https://mlops.systems/tools/redactionmodel/computervision/datavalidation/2022/04/19/data-validation-great-expectations-part-1.html),
+[part 2](https://mlops.systems/tools/redactionmodel/computervision/datavalidation/2022/04/26/data-validation-great-expectations-part-2.html),
+[part 3](https://mlops.systems/tools/redactionmodel/computervision/datavalidation/2022/04/28/data-validation-great-expectations-part-3.html))

packages.txt

ADDED

@@ -0,0 +1 @@
+python3-opencv

requirements.txt

ADDED

@@ -0,0 +1,10 @@
+--find-links https://download.openmmlab.com/mmcv/dist/cpu/torch1.10.0/index.html
+mmcv-full==1.3.17
+mmdet==2.17.0
+gradio==2.7.5
+icevision[all]==0.12.0
+
+fastai
+scikit-image
+pymupdf
+fpdf

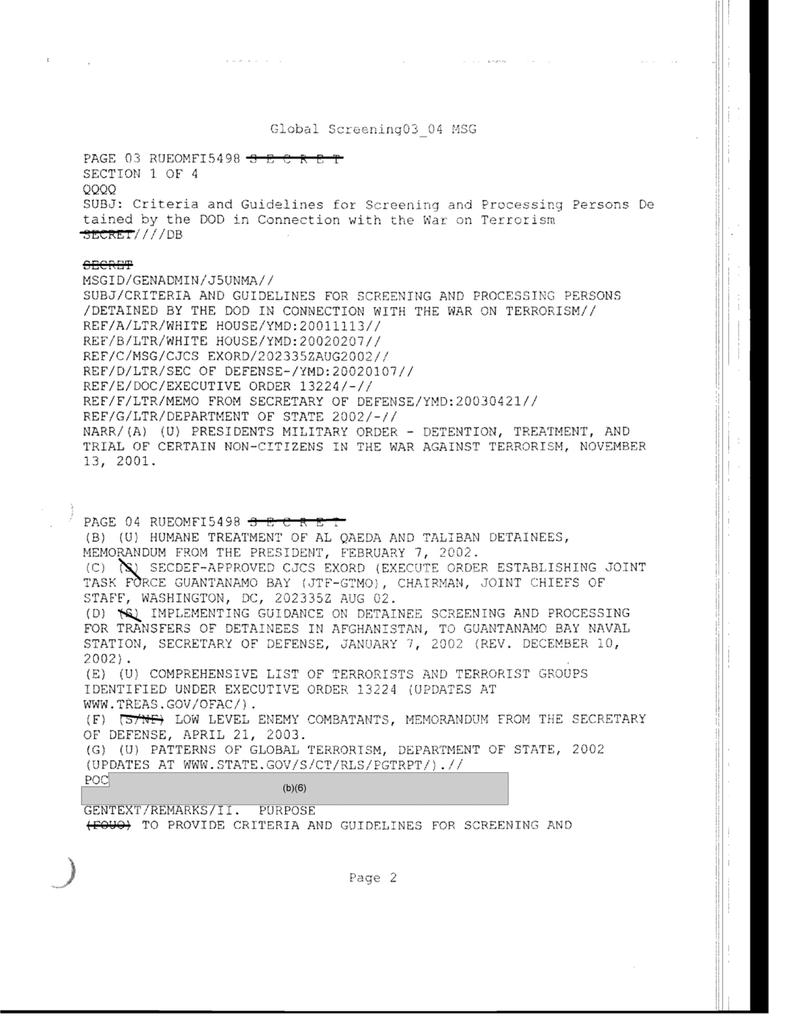

test1.jpg

ADDED

|

test1.pdf

ADDED

|

Binary file (921 kB). View file

|

test2.pdf

ADDED

|

Binary file (740 kB). View file

|