Spaces:

Running

on

T4

Running

on

T4

stevengrove

commited on

Commit

·

186701e

1

Parent(s):

d912a42

initial commit

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitignore +127 -0

- README.md +141 -11

- app.py +61 -0

- assets/yolo_arch.png +0 -0

- assets/yolo_logo.png +0 -0

- configs/deploy/detection_onnxruntime-fp16_dynamic.py +18 -0

- configs/deploy/detection_onnxruntime-int8_dynamic.py +20 -0

- configs/deploy/detection_onnxruntime_static.py +18 -0

- configs/deploy/detection_tensorrt-fp16_static-640x640.py +38 -0

- configs/deploy/detection_tensorrt-int8_static-640x640.py +30 -0

- configs/finetune_coco/yolo_world_l_t2i_bn_2e-4_100e_4x8gpus_coco_finetune.py +183 -0

- configs/finetune_coco/yolo_world_m_t2i_bn_2e-4_100e_4x8gpus_coco_finetune.py +183 -0

- configs/finetune_coco/yolo_world_s_t2i_bn_2e-4_100e_4x8gpus_coco_finetune.py +183 -0

- configs/pretrain/yolo_world_l_dual_3block_l2norm_2e-4_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +173 -0

- configs/pretrain/yolo_world_l_t2i_bn_2e-4_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +182 -0

- configs/pretrain/yolo_world_m_dual_3block_l2norm_2e-4_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +173 -0

- configs/pretrain/yolo_world_m_t2i_bn_2e-4_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +171 -0

- configs/pretrain/yolo_world_s_dual_l2norm_3block_2e-4_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +173 -0

- configs/pretrain/yolo_world_s_t2i_bn_2e-4_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py +172 -0

- configs/scaleup/yolo_world_l_t2i_bn_2e-4_20e_4x8gpus_obj365v1_goldg_train_lvis_minival_s1024.py +216 -0

- configs/scaleup/yolo_world_l_t2i_bn_2e-4_20e_4x8gpus_obj365v1_goldg_train_lvis_minival_s1280.py +216 -0

- configs/scaleup/yolo_world_l_t2i_bn_2e-4_20e_4x8gpus_obj365v1_goldg_train_lvis_minival_s1280_v2.py +216 -0

- deploy/__init__.py +1 -0

- deploy/models/__init__.py +4 -0

- docs/data.md +19 -0

- docs/deploy.md +0 -0

- docs/install.md +0 -0

- docs/training.md +0 -0

- requirements.txt +1 -0

- setup.py +190 -0

- taiji/drun +35 -0

- taiji/erun +23 -0

- taiji/etorchrun +51 -0

- taiji/jizhi_run_vanilla +105 -0

- third_party/mmyolo/.circleci/config.yml +34 -0

- third_party/mmyolo/.circleci/docker/Dockerfile +11 -0

- third_party/mmyolo/.circleci/test.yml +213 -0

- third_party/mmyolo/.dev_scripts/gather_models.py +312 -0

- third_party/mmyolo/.dev_scripts/print_registers.py +448 -0

- third_party/mmyolo/.github/CODE_OF_CONDUCT.md +76 -0

- third_party/mmyolo/.github/CONTRIBUTING.md +1 -0

- third_party/mmyolo/.github/ISSUE_TEMPLATE/1-bug-report.yml +67 -0

- third_party/mmyolo/.github/ISSUE_TEMPLATE/2-feature-request.yml +32 -0

- third_party/mmyolo/.github/ISSUE_TEMPLATE/3-new-model.yml +30 -0

- third_party/mmyolo/.github/ISSUE_TEMPLATE/4-documentation.yml +22 -0

- third_party/mmyolo/.github/ISSUE_TEMPLATE/5-reimplementation.yml +87 -0

- third_party/mmyolo/.github/ISSUE_TEMPLATE/config.yml +9 -0

- third_party/mmyolo/.github/pull_request_template.md +25 -0

- third_party/mmyolo/.github/workflows/deploy.yml +28 -0

- third_party/mmyolo/.gitignore +126 -0

.gitignore

ADDED

|

@@ -0,0 +1,127 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Byte-compiled / optimized / DLL files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[cod]

|

| 4 |

+

*$py.class

|

| 5 |

+

|

| 6 |

+

# C extensions

|

| 7 |

+

*.so

|

| 8 |

+

|

| 9 |

+

# Distribution / packaging

|

| 10 |

+

.Python

|

| 11 |

+

build/

|

| 12 |

+

develop-eggs/

|

| 13 |

+

dist/

|

| 14 |

+

downloads/

|

| 15 |

+

eggs/

|

| 16 |

+

.eggs/

|

| 17 |

+

lib/

|

| 18 |

+

lib64/

|

| 19 |

+

parts/

|

| 20 |

+

sdist/

|

| 21 |

+

var/

|

| 22 |

+

wheels/

|

| 23 |

+

*.egg-info/

|

| 24 |

+

.installed.cfg

|

| 25 |

+

*.egg

|

| 26 |

+

MANIFEST

|

| 27 |

+

|

| 28 |

+

# PyInstaller

|

| 29 |

+

# Usually these files are written by a python script from a template

|

| 30 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 31 |

+

*.manifest

|

| 32 |

+

*.spec

|

| 33 |

+

|

| 34 |

+

# Installer logs

|

| 35 |

+

pip-log.txt

|

| 36 |

+

pip-delete-this-directory.txt

|

| 37 |

+

|

| 38 |

+

# Unit test / coverage reports

|

| 39 |

+

htmlcov/

|

| 40 |

+

.tox/

|

| 41 |

+

.coverage

|

| 42 |

+

.coverage.*

|

| 43 |

+

.cache

|

| 44 |

+

nosetests.xml

|

| 45 |

+

coverage.xml

|

| 46 |

+

*.cover

|

| 47 |

+

.hypothesis/

|

| 48 |

+

.pytest_cache/

|

| 49 |

+

|

| 50 |

+

# Translations

|

| 51 |

+

*.mo

|

| 52 |

+

*.pot

|

| 53 |

+

|

| 54 |

+

# Django stuff:

|

| 55 |

+

*.log

|

| 56 |

+

local_settings.py

|

| 57 |

+

db.sqlite3

|

| 58 |

+

|

| 59 |

+

# Flask stuff:

|

| 60 |

+

instance/

|

| 61 |

+

.webassets-cache

|

| 62 |

+

|

| 63 |

+

# Scrapy stuff:

|

| 64 |

+

.scrapy

|

| 65 |

+

|

| 66 |

+

# Sphinx documentation

|

| 67 |

+

docs/en/_build/

|

| 68 |

+

docs/zh_cn/_build/

|

| 69 |

+

|

| 70 |

+

# PyBuilder

|

| 71 |

+

target/

|

| 72 |

+

|

| 73 |

+

# Jupyter Notebook

|

| 74 |

+

.ipynb_checkpoints

|

| 75 |

+

|

| 76 |

+

# pyenv

|

| 77 |

+

.python-version

|

| 78 |

+

|

| 79 |

+

# celery beat schedule file

|

| 80 |

+

celerybeat-schedule

|

| 81 |

+

|

| 82 |

+

# SageMath parsed files

|

| 83 |

+

*.sage.py

|

| 84 |

+

|

| 85 |

+

# Environments

|

| 86 |

+

.env

|

| 87 |

+

.venv

|

| 88 |

+

env/

|

| 89 |

+

venv/

|

| 90 |

+

ENV/

|

| 91 |

+

env.bak/

|

| 92 |

+

venv.bak/

|

| 93 |

+

|

| 94 |

+

# Spyder project settings

|

| 95 |

+

.spyderproject

|

| 96 |

+

.spyproject

|

| 97 |

+

|

| 98 |

+

# Rope project settings

|

| 99 |

+

.ropeproject

|

| 100 |

+

|

| 101 |

+

# mkdocs documentation

|

| 102 |

+

/site

|

| 103 |

+

|

| 104 |

+

# mypy

|

| 105 |

+

.mypy_cache/

|

| 106 |

+

data/

|

| 107 |

+

data

|

| 108 |

+

.vscode

|

| 109 |

+

.idea

|

| 110 |

+

.DS_Store

|

| 111 |

+

|

| 112 |

+

# custom

|

| 113 |

+

*.pkl

|

| 114 |

+

*.pkl.json

|

| 115 |

+

*.log.json

|

| 116 |

+

docs/modelzoo_statistics.md

|

| 117 |

+

mmdet/.mim

|

| 118 |

+

work_dirs

|

| 119 |

+

|

| 120 |

+

# Pytorch

|

| 121 |

+

*.pth

|

| 122 |

+

*.py~

|

| 123 |

+

*.sh~

|

| 124 |

+

|

| 125 |

+

# venus

|

| 126 |

+

venus_run.sh

|

| 127 |

+

|

README.md

CHANGED

|

@@ -1,11 +1,141 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<div align="center">

|

| 2 |

+

<center>

|

| 3 |

+

<img width=500px src="./assets/yolo_logo.png">

|

| 4 |

+

</center>

|

| 5 |

+

<br>

|

| 6 |

+

<a href="https://scholar.google.com/citations?hl=zh-CN&user=PH8rJHYAAAAJ">Tianheng Cheng*</a><sup><span>2,3</span></sup>,

|

| 7 |

+

<a href="https://linsong.info/">Lin Song*</a><sup><span>1</span></sup>,

|

| 8 |

+

<a href="">Yixiao Ge</a><sup><span>1,2</span></sup>,

|

| 9 |

+

<a href="">Xinggang Wang</a><sup><span>3</span></sup>,

|

| 10 |

+

<a href="http://eic.hust.edu.cn/professor/liuwenyu/"> Wenyu Liu</a><sup><span>3</span></sup>,

|

| 11 |

+

<a href="">Ying Shan</a><sup><span>1,2</span></sup>

|

| 12 |

+

</br>

|

| 13 |

+

|

| 14 |

+

<sup>1</sup> Tencent AI Lab, <sup>2</sup> ARC Lab, Tencent PCG

|

| 15 |

+

<sup>3</sup> Huazhong University of Science and Technology

|

| 16 |

+

<br>

|

| 17 |

+

<div>

|

| 18 |

+

|

| 19 |

+

[](https://arxiv.org/abs/)

|

| 20 |

+

[](https://huggingface.co/)

|

| 21 |

+

[](LICENSE)

|

| 22 |

+

|

| 23 |

+

</div>

|

| 24 |

+

</div>

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

## Updates

|

| 28 |

+

|

| 29 |

+

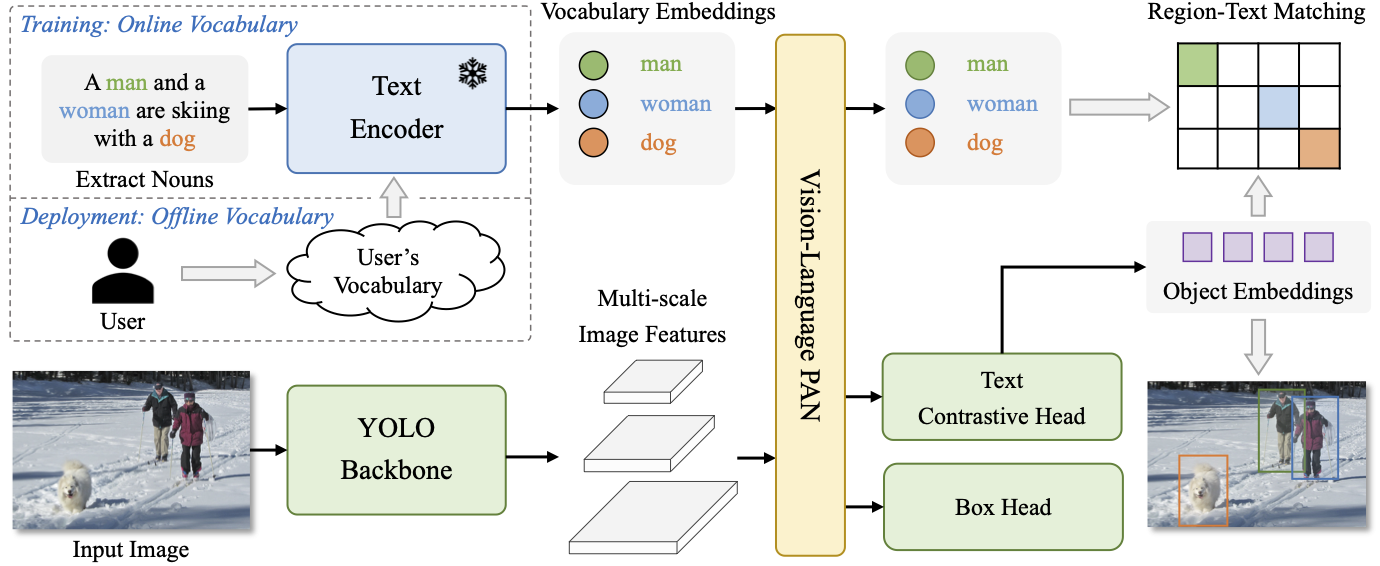

`[2024-1-25]:` We are excited to launch **YOLO-World**, a cutting-edge real-time open-vocabulary object detector.

|

| 30 |

+

|

| 31 |

+

## Highlights

|

| 32 |

+

|

| 33 |

+

This repo contains the PyTorch implementation, pre-trained weights, and pre-training/fine-tuning code for YOLO-World.

|

| 34 |

+

|

| 35 |

+

* YOLO-World is pre-trained on large-scale datasets, including detection, grounding, and image-text datasets.

|

| 36 |

+

|

| 37 |

+

* YOLO-World is the next-generation YOLO detector, with a strong open-vocabulary detection capability and grounding ability.

|

| 38 |

+

|

| 39 |

+

* YOLO-World presents a *prompt-then-detect* paradigm for efficient user-vocabulary inference, which re-parameterizes vocabulary embeddings as parameters into the model and achieve superior inference speed. You can try to export your own detection model without extra training or fine-tuning in our [online demo]()!

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

<center>

|

| 43 |

+

<img width=800px src="./assets/yolo_arch.png">

|

| 44 |

+

</center>

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

## Abstract

|

| 48 |

+

|

| 49 |

+

The You Only Look Once (YOLO) series of detectors have established themselves as efficient and practical tools. However, their reliance on predefined and trained object categories limits their applicability in open scenarios. Addressing this limitation, we introduce YOLO-World, an innovative approach that enhances YOLO with open-vocabulary detection capabilities through vision-language modeling and pre-training on large-scale datasets. Specifically, we propose a new Re-parameterizable Vision-Language Path Aggregation Network (RepVL-PAN) and region-text contrastive loss to facilitate the interaction between visual and linguistic information. Our method excels in detecting a wide range of objects in a zero-shot manner with high efficiency. On the challenging LVIS dataset, YOLO-World achieves 35.4 AP with 52.0 FPS on V100, which outperforms many state-of-the-art methods in terms of both accuracy and speed. Furthermore, the fine-tuned YOLO-World achieves remarkable performance on several downstream tasks, including object detection and open-vocabulary instance segmentation.

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

## Demo

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

## Main Results

|

| 56 |

+

|

| 57 |

+

We've pre-trained YOLO-World-S/M/L from scratch and evaluate on the `LVIS val-1.0` and `LVIS minival`. We provide the pre-trained model weights and training logs for applications/research or re-producing the results.

|

| 58 |

+

|

| 59 |

+

### Zero-shot Inference on LVIS dataset

|

| 60 |

+

|

| 61 |

+

| model | Pre-train Data | AP | AP<sub>r</sub> | AP<sub>c</sub> | AP<sub>f</sub> | FPS(V100) | weights | log |

|

| 62 |

+

| :---- | :------------- | :-:| :------------: |:-------------: | :-------: | :-----: | :---: | :---: |

|

| 63 |

+

| [YOLO-World-S](./configs/pretrain/yolo_world_s_t2i_bn_2e-4_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py) | O365+GoldG | 17.6 | 11.9 | 14.5 | 23.2 | - | [wecom](https://drive.weixin.qq.com/s?k=AJEAIQdfAAoREsieRl) | [log]() |

|

| 64 |

+

| [YOLO-World-M](./configs/pretrain/yolo_world_m_t2i_bn_2e-4_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py) | O365+GoldG | 23.5 | 17.2 | 20.4 | 29.6 | - | [wecom](https://drive.weixin.qq.com/s?k=AJEAIQdfAAoj0byBC0) | [log]() |

|

| 65 |

+

| [YOLO-World-L](./configs/pretrain/yolo_world_l_t2i_bn_2e-4_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py) | O365+GoldG | 25.7 | 18.7 | 22.6 | 32.2 | - | [wecom](https://drive.weixin.qq.com/s?k=AJEAIQdfAAoK06oxO2) | [log]() |

|

| 66 |

+

|

| 67 |

+

**NOTE:**

|

| 68 |

+

1. The evaluation results are tested on LVIS minival in a zero-shot manner.

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

## Getting started

|

| 72 |

+

|

| 73 |

+

### 1. Installation

|

| 74 |

+

|

| 75 |

+

YOLO-World is developed based on `torch==1.11.0` `mmyolo==0.6.0` and `mmdetection==3.0.0`.

|

| 76 |

+

|

| 77 |

+

```bash

|

| 78 |

+

# install key dependencies

|

| 79 |

+

pip install mmdetection==3.0.0 mmengine transformers

|

| 80 |

+

|

| 81 |

+

# clone the repo

|

| 82 |

+

git clone https://xxxx.YOLO-World.git

|

| 83 |

+

cd YOLO-World

|

| 84 |

+

|

| 85 |

+

# install mmyolo

|

| 86 |

+

mkdir third_party

|

| 87 |

+

git clone https://github.com/open-mmlab/mmyolo.git

|

| 88 |

+

cd ..

|

| 89 |

+

|

| 90 |

+

```

|

| 91 |

+

|

| 92 |

+

### 2. Preparing Data

|

| 93 |

+

|

| 94 |

+

We provide the details about the pre-training data in [docs/data](./docs/data.md).

|

| 95 |

+

|

| 96 |

+

|

| 97 |

+

## Training & Evaluation

|

| 98 |

+

|

| 99 |

+

We adopt the default [training](./tools/train.py) or [evaluation](./tools/test.py) scripts of [mmyolo](https://github.com/open-mmlab/mmyolo).

|

| 100 |

+

We provide the configs for pre-training and fine-tuning in `configs/pretrain` and `configs/finetune_coco`.

|

| 101 |

+

Training YOLO-World is easy:

|

| 102 |

+

|

| 103 |

+

```bash

|

| 104 |

+

chmod +x tools/dist_train.sh

|

| 105 |

+

# sample command for pre-training, use AMP for mixed-precision training

|

| 106 |

+

./tools/dist_train.sh configs/pretrain/yolo_world_l_t2i_bn_2e-4_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py 8 --amp

|

| 107 |

+

```

|

| 108 |

+

**NOTE:** YOLO-World is pre-trained on 4 nodes with 8 GPUs per node (32 GPUs in total). For pre-training, the `node_rank` and `nnodes` for multi-node training should be specified.

|

| 109 |

+

|

| 110 |

+

Evalutating YOLO-World is also easy:

|

| 111 |

+

|

| 112 |

+

```bash

|

| 113 |

+

chmod +x tools/dist_test.sh

|

| 114 |

+

./tools/dist_test.sh path/to/config path/to/weights 8

|

| 115 |

+

```

|

| 116 |

+

|

| 117 |

+

**NOTE:** We mainly evaluate the performance on LVIS-minival for pre-training.

|

| 118 |

+

|

| 119 |

+

## Deployment

|

| 120 |

+

|

| 121 |

+

We provide the details about deployment for downstream applications in [docs/deployment](./docs/deploy.md).

|

| 122 |

+

You can directly download the ONNX model through the online [demo]() in Huggingface Spaces 🤗.

|

| 123 |

+

|

| 124 |

+

## Acknowledgement

|

| 125 |

+

|

| 126 |

+

We sincerely thank [mmyolo](https://github.com/open-mmlab/mmyolo), [mmdetection](https://github.com/open-mmlab/mmdetection), and [transformers](https://github.com/huggingface/transformers) for providing their wonderful code to the community!

|

| 127 |

+

|

| 128 |

+

## Citations

|

| 129 |

+

If you find YOLO-World is useful in your research or applications, please consider giving us a star 🌟 and citing it.

|

| 130 |

+

|

| 131 |

+

```bibtex

|

| 132 |

+

@article{cheng2024yolow,

|

| 133 |

+

title={YOLO-World: Real-Time Open-Vocabulary Object Detection},

|

| 134 |

+

author={Cheng, Tianheng and Song, Lin and Ge, Yixiao and Liu, Wenyu and Wang, Xinggang and Shan, Ying},

|

| 135 |

+

journal={arXiv preprint arXiv:},

|

| 136 |

+

year={2024}

|

| 137 |

+

}

|

| 138 |

+

```

|

| 139 |

+

|

| 140 |

+

## Licence

|

| 141 |

+

YOLO-World is under the GPL-v3 Licence and is supported for comercial usage.

|

app.py

ADDED

|

@@ -0,0 +1,61 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import os.path as osp

|

| 3 |

+

|

| 4 |

+

from mmengine.config import Config, DictAction

|

| 5 |

+

from mmengine.runner import Runner

|

| 6 |

+

from mmengine.dataset import Compose

|

| 7 |

+

from mmyolo.registry import RUNNERS

|

| 8 |

+

|

| 9 |

+

from tools.demo import demo

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

def parse_args():

|

| 13 |

+

parser = argparse.ArgumentParser(

|

| 14 |

+

description='YOLO-World Demo')

|

| 15 |

+

parser.add_argument('--config', default='configs/pretrain/yolo_world_l_t2i_bn_2e-4_100e_4x8gpus_obj365v1_goldg_train_lvis_minival.py')

|

| 16 |

+

parser.add_argument('--checkpoint', default='model_zoo/yolow-v8_l_clipv2_frozen_t2iv2_bn_o365_goldg_pretrain.pth')

|

| 17 |

+

parser.add_argument(

|

| 18 |

+

'--work-dir',

|

| 19 |

+

help='the directory to save the file containing evaluation metrics')

|

| 20 |

+

parser.add_argument(

|

| 21 |

+

'--cfg-options',

|

| 22 |

+

nargs='+',

|

| 23 |

+

action=DictAction,

|

| 24 |

+

help='override some settings in the used config, the key-value pair '

|

| 25 |

+

'in xxx=yyy format will be merged into config file. If the value to '

|

| 26 |

+

'be overwritten is a list, it should be like key="[a,b]" or key=a,b '

|

| 27 |

+

'It also allows nested list/tuple values, e.g. key="[(a,b),(c,d)]" '

|

| 28 |

+

'Note that the quotation marks are necessary and that no white space '

|

| 29 |

+

'is allowed.')

|

| 30 |

+

args = parser.parse_args()

|

| 31 |

+

return args

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

if __name__ == '__main__':

|

| 35 |

+

args = parse_args()

|

| 36 |

+

|

| 37 |

+

# load config

|

| 38 |

+

cfg = Config.fromfile(args.config)

|

| 39 |

+

if args.cfg_options is not None:

|

| 40 |

+

cfg.merge_from_dict(args.cfg_options)

|

| 41 |

+

|

| 42 |

+

if args.work_dir is not None:

|

| 43 |

+

cfg.work_dir = args.work_dir

|

| 44 |

+

elif cfg.get('work_dir', None) is None:

|

| 45 |

+

cfg.work_dir = osp.join('./work_dirs',

|

| 46 |

+

osp.splitext(osp.basename(args.config))[0])

|

| 47 |

+

|

| 48 |

+

cfg.load_from = args.checkpoint

|

| 49 |

+

|

| 50 |

+

if 'runner_type' not in cfg:

|

| 51 |

+

runner = Runner.from_cfg(cfg)

|

| 52 |

+

else:

|

| 53 |

+

runner = RUNNERS.build(cfg)

|

| 54 |

+

|

| 55 |

+

runner.call_hook('before_run')

|

| 56 |

+

runner.load_or_resume()

|

| 57 |

+

pipeline = cfg.test_dataloader.dataset.pipeline

|

| 58 |

+

runner.pipeline = Compose(pipeline)

|

| 59 |

+

runner.model.eval()

|

| 60 |

+

demo(runner, args)

|

| 61 |

+

|

assets/yolo_arch.png

ADDED

|

assets/yolo_logo.png

ADDED

|

configs/deploy/detection_onnxruntime-fp16_dynamic.py

ADDED

|

@@ -0,0 +1,18 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

_base_ = (

|

| 2 |

+

'../../third_party/mmdeploy/configs/mmdet/detection/'

|

| 3 |

+

'detection_onnxruntime-fp16_dynamic.py')

|

| 4 |

+

codebase_config = dict(

|

| 5 |

+

type='mmyolo',

|

| 6 |

+

task='ObjectDetection',

|

| 7 |

+

model_type='end2end',

|

| 8 |

+

post_processing=dict(

|

| 9 |

+

score_threshold=0.1,

|

| 10 |

+

confidence_threshold=0.005,

|

| 11 |

+

iou_threshold=0.3,

|

| 12 |

+

max_output_boxes_per_class=100,

|

| 13 |

+

pre_top_k=1000,

|

| 14 |

+

keep_top_k=100,

|

| 15 |

+

background_label_id=-1),

|

| 16 |

+

module=['mmyolo.deploy'])

|

| 17 |

+

backend_config = dict(

|

| 18 |

+

type='onnxruntime')

|

configs/deploy/detection_onnxruntime-int8_dynamic.py

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

_base_ = (

|

| 2 |

+

'../../third_party/mmdeploy/configs/mmdet/detection/'

|

| 3 |

+

'detection_onnxruntime-fp16_dynamic.py')

|

| 4 |

+

backend_config = dict(

|

| 5 |

+

precision='int8')

|

| 6 |

+

codebase_config = dict(

|

| 7 |

+

type='mmyolo',

|

| 8 |

+

task='ObjectDetection',

|

| 9 |

+

model_type='end2end',

|

| 10 |

+

post_processing=dict(

|

| 11 |

+

score_threshold=0.1,

|

| 12 |

+

confidence_threshold=0.005,

|

| 13 |

+

iou_threshold=0.3,

|

| 14 |

+

max_output_boxes_per_class=100,

|

| 15 |

+

pre_top_k=1000,

|

| 16 |

+

keep_top_k=100,

|

| 17 |

+

background_label_id=-1),

|

| 18 |

+

module=['mmyolo.deploy'])

|

| 19 |

+

backend_config = dict(

|

| 20 |

+

type='onnxruntime')

|

configs/deploy/detection_onnxruntime_static.py

ADDED

|

@@ -0,0 +1,18 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

_base_ = (

|

| 2 |

+

'../../third_party/mmyolo/configs/deploy/'

|

| 3 |

+

'detection_onnxruntime_static.py')

|

| 4 |

+

codebase_config = dict(

|

| 5 |

+

type='mmyolo',

|

| 6 |

+

task='ObjectDetection',

|

| 7 |

+

model_type='end2end',

|

| 8 |

+

post_processing=dict(

|

| 9 |

+

score_threshold=0.25,

|

| 10 |

+

confidence_threshold=0.005,

|

| 11 |

+

iou_threshold=0.65,

|

| 12 |

+

max_output_boxes_per_class=200,

|

| 13 |

+

pre_top_k=1000,

|

| 14 |

+

keep_top_k=100,

|

| 15 |

+

background_label_id=-1),

|

| 16 |

+

module=['mmyolo.deploy'])

|

| 17 |

+

backend_config = dict(

|

| 18 |

+

type='onnxruntime')

|

configs/deploy/detection_tensorrt-fp16_static-640x640.py

ADDED

|

@@ -0,0 +1,38 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

_base_ = (

|

| 2 |

+

'../../third_party/mmyolo/configs/deploy/'

|

| 3 |

+

'detection_tensorrt-fp16_static-640x640.py')

|

| 4 |

+

onnx_config = dict(

|

| 5 |

+

type='onnx',

|

| 6 |

+

export_params=True,

|

| 7 |

+

keep_initializers_as_inputs=False,

|

| 8 |

+

opset_version=11,

|

| 9 |

+

save_file='end2end.onnx',

|

| 10 |

+

input_names=['input'],

|

| 11 |

+

output_names=['dets', 'labels'],

|

| 12 |

+

input_shape=(640, 640),

|

| 13 |

+

optimize=True)

|

| 14 |

+

backend_config = dict(

|

| 15 |

+

type='tensorrt',

|

| 16 |

+

common_config=dict(fp16_mode=True, max_workspace_size=1 << 34),

|

| 17 |

+

model_inputs=[

|

| 18 |

+

dict(

|

| 19 |

+

input_shapes=dict(

|

| 20 |

+

input=dict(

|

| 21 |

+

min_shape=[1, 3, 640, 640],

|

| 22 |

+

opt_shape=[1, 3, 640, 640],

|

| 23 |

+

max_shape=[1, 3, 640, 640])))

|

| 24 |

+

])

|

| 25 |

+

use_efficientnms = False # whether to replace TRTBatchedNMS plugin with EfficientNMS plugin # noqa E501

|

| 26 |

+

codebase_config = dict(

|

| 27 |

+

type='mmyolo',

|

| 28 |

+

task='ObjectDetection',

|

| 29 |

+

model_type='end2end',

|

| 30 |

+

post_processing=dict(

|

| 31 |

+

score_threshold=0.25,

|

| 32 |

+

confidence_threshold=0.005,

|

| 33 |

+

iou_threshold=0.65,

|

| 34 |

+

max_output_boxes_per_class=100,

|

| 35 |

+

pre_top_k=1,

|

| 36 |

+

keep_top_k=1,

|

| 37 |

+

background_label_id=-1),

|

| 38 |

+

module=['mmyolo.deploy'])

|

configs/deploy/detection_tensorrt-int8_static-640x640.py

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

_base_ = [

|

| 2 |

+

'../../third_party/mmdeploy/configs/mmdet/_base_/base_static.py',

|

| 3 |

+

'../../third_party/mmdeploy/configs/_base_/backends/tensorrt-int8.py']

|

| 4 |

+

|

| 5 |

+

onnx_config = dict(input_shape=(640, 640))

|

| 6 |

+

|

| 7 |

+

backend_config = dict(

|

| 8 |

+

common_config=dict(max_workspace_size=1 << 30),

|

| 9 |

+

model_inputs=[

|

| 10 |

+

dict(

|

| 11 |

+

input_shapes=dict(

|

| 12 |

+

input=dict(

|

| 13 |

+

min_shape=[1, 3, 640, 640],

|

| 14 |

+

opt_shape=[1, 3, 640, 640],

|

| 15 |

+

max_shape=[1, 3, 640, 640])))

|

| 16 |

+

])

|

| 17 |

+

|

| 18 |

+

codebase_config = dict(

|

| 19 |

+

type='mmyolo',

|

| 20 |

+

task='ObjectDetection',

|

| 21 |

+

model_type='end2end',

|

| 22 |

+

post_processing=dict(

|

| 23 |

+

score_threshold=0.1,

|

| 24 |

+

confidence_threshold=0.005,

|

| 25 |

+

iou_threshold=0.3,

|

| 26 |

+

max_output_boxes_per_class=100,

|

| 27 |

+

pre_top_k=1000,

|

| 28 |

+

keep_top_k=100,

|

| 29 |

+

background_label_id=-1),

|

| 30 |

+

module=['mmyolo.deploy'])

|

configs/finetune_coco/yolo_world_l_t2i_bn_2e-4_100e_4x8gpus_coco_finetune.py

ADDED

|

@@ -0,0 +1,183 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

_base_ = ('../../third_party/mmyolo/configs/yolov8/'

|

| 2 |

+

'yolov8_l_mask-refine_syncbn_fast_8xb16-500e_coco.py')

|

| 3 |

+

custom_imports = dict(imports=['yolo_world'],

|

| 4 |

+

allow_failed_imports=False)

|

| 5 |

+

|

| 6 |

+

# hyper-parameters

|

| 7 |

+

num_classes = 80

|

| 8 |

+

num_training_classes = 80

|

| 9 |

+

max_epochs = 80 # Maximum training epochs

|

| 10 |

+

close_mosaic_epochs = 10

|

| 11 |

+

save_epoch_intervals = 5

|

| 12 |

+

text_channels = 512

|

| 13 |

+

neck_embed_channels = [128, 256, _base_.last_stage_out_channels // 2]

|

| 14 |

+

neck_num_heads = [4, 8, _base_.last_stage_out_channels // 2 // 32]

|

| 15 |

+

base_lr = 2e-4

|

| 16 |

+

weight_decay = 0.05

|

| 17 |

+

train_batch_size_per_gpu = 16

|

| 18 |

+

load_from = 'weights/yolow-v8_l_clipv2_frozen_t2iv2_bn_o365_goldg_pretrain.pth'

|

| 19 |

+

persistent_workers = False

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

# model settings

|

| 23 |

+

model = dict(

|

| 24 |

+

type='YOLOWorldDetector',

|

| 25 |

+

mm_neck=True,

|

| 26 |

+

num_train_classes=num_training_classes,

|

| 27 |

+

num_test_classes=num_classes,

|

| 28 |

+

data_preprocessor=dict(type='YOLOWDetDataPreprocessor'),

|

| 29 |

+

backbone=dict(

|

| 30 |

+

_delete_=True,

|

| 31 |

+

type='MultiModalYOLOBackbone',

|

| 32 |

+

image_model={{_base_.model.backbone}},

|

| 33 |

+

text_model=dict(

|

| 34 |

+

type='HuggingCLIPLanguageBackbone',

|

| 35 |

+

model_name='pretrained_models/clip-vit-base-patch32-projection',

|

| 36 |

+

frozen_modules=['all'])),

|

| 37 |

+

neck=dict(type='YOLOWorldPAFPN',

|

| 38 |

+

guide_channels=text_channels,

|

| 39 |

+

embed_channels=neck_embed_channels,

|

| 40 |

+

num_heads=neck_num_heads,

|

| 41 |

+

block_cfg=dict(type='MaxSigmoidCSPLayerWithTwoConv'),

|

| 42 |

+

num_csp_blocks=2),

|

| 43 |

+

bbox_head=dict(type='YOLOWorldHead',

|

| 44 |

+

head_module=dict(type='YOLOWorldHeadModule',

|

| 45 |

+

embed_dims=text_channels,

|

| 46 |

+

use_bn_head=True,

|

| 47 |

+

num_classes=num_training_classes)),

|

| 48 |

+

train_cfg=dict(assigner=dict(num_classes=num_training_classes)))

|

| 49 |

+

|

| 50 |

+

# dataset settings

|

| 51 |

+

text_transform = [

|

| 52 |

+

dict(type='RandomLoadText',

|

| 53 |

+

num_neg_samples=(num_classes, num_classes),

|

| 54 |

+

max_num_samples=num_training_classes,

|

| 55 |

+

padding_to_max=True,

|

| 56 |

+

padding_value=''),

|

| 57 |

+

dict(type='mmdet.PackDetInputs',

|

| 58 |

+

meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape', 'flip',

|

| 59 |

+

'flip_direction', 'texts'))

|

| 60 |

+

]

|

| 61 |

+

mosaic_affine_transform = [

|

| 62 |

+

dict(

|

| 63 |

+

type='MultiModalMosaic',

|

| 64 |

+

img_scale=_base_.img_scale,

|

| 65 |

+

pad_val=114.0,

|

| 66 |

+

pre_transform=_base_.pre_transform),

|

| 67 |

+

dict(type='YOLOv5CopyPaste', prob=_base_.copypaste_prob),

|

| 68 |

+

dict(

|

| 69 |

+

type='YOLOv5RandomAffine',

|

| 70 |

+

max_rotate_degree=0.0,

|

| 71 |

+

max_shear_degree=0.0,

|

| 72 |

+

max_aspect_ratio=100.,

|

| 73 |

+

scaling_ratio_range=(1 - _base_.affine_scale,

|

| 74 |

+

1 + _base_.affine_scale),

|

| 75 |

+

# img_scale is (width, height)

|

| 76 |

+

border=(-_base_.img_scale[0] // 2, -_base_.img_scale[1] // 2),

|

| 77 |

+

border_val=(114, 114, 114),

|

| 78 |

+

min_area_ratio=_base_.min_area_ratio,

|

| 79 |

+

use_mask_refine=_base_.use_mask2refine)

|

| 80 |

+

]

|

| 81 |

+

train_pipeline = [

|

| 82 |

+

*_base_.pre_transform,

|

| 83 |

+

*mosaic_affine_transform,

|

| 84 |

+

dict(

|

| 85 |

+

type='YOLOv5MultiModalMixUp',

|

| 86 |

+

prob=_base_.mixup_prob,

|

| 87 |

+

pre_transform=[*_base_.pre_transform,

|

| 88 |

+

*mosaic_affine_transform]),

|

| 89 |

+

*_base_.last_transform[:-1],

|

| 90 |

+

*text_transform

|

| 91 |

+

]

|

| 92 |

+

train_pipeline_stage2 = [

|

| 93 |

+

*_base_.train_pipeline_stage2[:-1],

|

| 94 |

+

*text_transform

|

| 95 |

+

]

|

| 96 |

+

coco_train_dataset = dict(

|

| 97 |

+

_delete_=True,

|

| 98 |

+

type='MultiModalDataset',

|

| 99 |

+

dataset=dict(

|

| 100 |

+

type='YOLOv5CocoDataset',

|

| 101 |

+

data_root='data/coco',

|

| 102 |

+

ann_file='annotations/instances_train2017.json',

|

| 103 |

+

data_prefix=dict(img='train2017/'),

|

| 104 |

+

filter_cfg=dict(filter_empty_gt=False, min_size=32)),

|

| 105 |

+

class_text_path='data/captions/coco_class_captions.json',

|

| 106 |

+

pipeline=train_pipeline)

|

| 107 |

+

train_dataloader = dict(

|

| 108 |

+

persistent_workers=persistent_workers,

|

| 109 |

+

batch_size=train_batch_size_per_gpu,

|

| 110 |

+

collate_fn=dict(type='yolow_collate'),

|

| 111 |

+

dataset=coco_train_dataset)

|

| 112 |

+

test_pipeline = [

|

| 113 |

+

*_base_.test_pipeline[:-1],

|

| 114 |

+

dict(type='LoadTextFixed'),

|

| 115 |

+

dict(

|

| 116 |

+

type='mmdet.PackDetInputs',

|

| 117 |

+

meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',

|

| 118 |

+

'scale_factor', 'pad_param', 'texts'))

|

| 119 |

+

]

|

| 120 |

+

coco_val_dataset = dict(

|

| 121 |

+

_delete_=True,

|

| 122 |

+

type='MultiModalDataset',

|

| 123 |

+

dataset=dict(

|

| 124 |

+

type='YOLOv5CocoDataset',

|

| 125 |

+

data_root='data/coco',

|

| 126 |

+

ann_file='annotations/instances_val2017.json',

|

| 127 |

+

data_prefix=dict(img='val2017/'),

|

| 128 |

+

filter_cfg=dict(filter_empty_gt=False, min_size=32)),

|

| 129 |

+

class_text_path='data/captions/coco_class_captions.json',

|

| 130 |

+

pipeline=test_pipeline)

|

| 131 |

+

val_dataloader = dict(dataset=coco_val_dataset)

|

| 132 |

+

test_dataloader = val_dataloader

|

| 133 |

+

# training settings

|

| 134 |

+

default_hooks = dict(

|

| 135 |

+

param_scheduler=dict(

|

| 136 |

+

scheduler_type='linear',

|

| 137 |

+

lr_factor=0.01,

|

| 138 |

+

max_epochs=max_epochs),

|

| 139 |

+

checkpoint=dict(

|

| 140 |

+

max_keep_ckpts=-1,

|

| 141 |

+

save_best=None,

|

| 142 |

+

interval=save_epoch_intervals))

|

| 143 |

+

custom_hooks = [

|

| 144 |

+

dict(

|

| 145 |

+

type='EMAHook',

|

| 146 |

+

ema_type='ExpMomentumEMA',

|

| 147 |

+

momentum=0.0001,

|

| 148 |

+

update_buffers=True,

|

| 149 |

+

strict_load=False,

|

| 150 |

+

priority=49),

|

| 151 |

+

dict(

|

| 152 |

+

type='mmdet.PipelineSwitchHook',

|

| 153 |

+

switch_epoch=max_epochs - close_mosaic_epochs,

|

| 154 |

+

switch_pipeline=train_pipeline_stage2)

|

| 155 |

+

]

|

| 156 |

+

train_cfg = dict(

|

| 157 |

+

max_epochs=max_epochs,

|

| 158 |

+

val_interval=5,

|

| 159 |

+

dynamic_intervals=[((max_epochs - close_mosaic_epochs),

|

| 160 |

+

_base_.val_interval_stage2)])

|

| 161 |

+

optim_wrapper = dict(

|

| 162 |

+

optimizer=dict(

|

| 163 |

+

_delete_=True,

|

| 164 |

+

type='AdamW',

|

| 165 |

+

lr=base_lr,

|

| 166 |

+

weight_decay=weight_decay,

|

| 167 |

+

batch_size_per_gpu=train_batch_size_per_gpu),

|

| 168 |

+

paramwise_cfg=dict(

|

| 169 |

+

bias_decay_mult=0.0,

|

| 170 |

+

norm_decay_mult=0.0,

|

| 171 |

+

custom_keys={'backbone.text_model': dict(lr_mult=0.01),

|

| 172 |

+

'logit_scale': dict(weight_decay=0.0)}),

|

| 173 |

+

constructor='YOLOWv5OptimizerConstructor')

|

| 174 |

+

|

| 175 |

+

# evaluation settings

|

| 176 |

+

val_evaluator = dict(

|

| 177 |

+

_delete_=True,

|

| 178 |

+

type='mmdet.CocoMetric',

|

| 179 |

+

proposal_nums=(100, 1, 10),

|

| 180 |

+

ann_file='data/coco/annotations/instances_val2017.json',

|

| 181 |

+

metric='bbox')

|

| 182 |

+

|

| 183 |

+

test_evaluator = val_evaluator

|

configs/finetune_coco/yolo_world_m_t2i_bn_2e-4_100e_4x8gpus_coco_finetune.py

ADDED

|

@@ -0,0 +1,183 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

_base_ = ('../../third_party/mmyolo/configs/yolov8/'

|

| 2 |

+

'yolov8_m_mask-refine_syncbn_fast_8xb16-500e_coco.py')

|

| 3 |

+

custom_imports = dict(imports=['yolo_world'],

|

| 4 |

+

allow_failed_imports=False)

|

| 5 |

+

|

| 6 |

+

# hyper-parameters

|

| 7 |

+

num_classes = 80

|

| 8 |

+

num_training_classes = 80

|

| 9 |

+

max_epochs = 80 # Maximum training epochs

|

| 10 |

+

close_mosaic_epochs = 10

|

| 11 |

+

save_epoch_intervals = 5

|

| 12 |

+

text_channels = 512

|

| 13 |

+

neck_embed_channels = [128, 256, _base_.last_stage_out_channels // 2]

|

| 14 |

+

neck_num_heads = [4, 8, _base_.last_stage_out_channels // 2 // 32]

|

| 15 |

+

base_lr = 2e-4

|

| 16 |

+

weight_decay = 0.05

|

| 17 |

+

train_batch_size_per_gpu = 16

|

| 18 |

+

load_from = 'weights/yolow-v8_m_clipv2_frozen_t2iv2_bn_o365_goldg_pretrain.pth'

|

| 19 |

+

persistent_workers = False

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

# model settings

|

| 23 |

+

model = dict(

|

| 24 |

+

type='YOLOWorldDetector',

|

| 25 |

+

mm_neck=True,

|

| 26 |

+

num_train_classes=num_training_classes,

|

| 27 |

+

num_test_classes=num_classes,

|

| 28 |

+

data_preprocessor=dict(type='YOLOWDetDataPreprocessor'),

|

| 29 |

+

backbone=dict(

|

| 30 |

+

_delete_=True,

|

| 31 |

+

type='MultiModalYOLOBackbone',

|

| 32 |

+

image_model={{_base_.model.backbone}},

|

| 33 |

+

text_model=dict(

|

| 34 |

+

type='HuggingCLIPLanguageBackbone',

|

| 35 |

+

model_name='pretrained_models/clip-vit-base-patch32-projection',

|

| 36 |

+

frozen_modules=['all'])),

|

| 37 |

+

neck=dict(type='YOLOWorldPAFPN',

|

| 38 |

+

guide_channels=text_channels,

|

| 39 |

+

embed_channels=neck_embed_channels,

|

| 40 |

+

num_heads=neck_num_heads,

|

| 41 |

+

block_cfg=dict(type='MaxSigmoidCSPLayerWithTwoConv'),

|

| 42 |

+

num_csp_blocks=2),

|

| 43 |

+

bbox_head=dict(type='YOLOWorldHead',

|

| 44 |

+

head_module=dict(type='YOLOWorldHeadModule',

|

| 45 |

+

embed_dims=text_channels,

|

| 46 |

+

use_bn_head=True,

|

| 47 |

+

num_classes=num_training_classes)),

|

| 48 |

+

train_cfg=dict(assigner=dict(num_classes=num_training_classes)))

|

| 49 |

+

|

| 50 |

+

# dataset settings

|

| 51 |

+

text_transform = [

|

| 52 |

+

dict(type='RandomLoadText',

|

| 53 |

+

num_neg_samples=(num_classes, num_classes),

|

| 54 |

+

max_num_samples=num_training_classes,

|

| 55 |

+

padding_to_max=True,

|

| 56 |

+

padding_value=''),

|

| 57 |

+

dict(type='mmdet.PackDetInputs',

|

| 58 |

+

meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape', 'flip',

|

| 59 |

+

'flip_direction', 'texts'))

|

| 60 |

+

]

|

| 61 |

+

mosaic_affine_transform = [

|

| 62 |

+

dict(

|

| 63 |

+

type='MultiModalMosaic',

|

| 64 |

+

img_scale=_base_.img_scale,

|

| 65 |

+

pad_val=114.0,

|

| 66 |

+

pre_transform=_base_.pre_transform),

|

| 67 |

+

dict(type='YOLOv5CopyPaste', prob=_base_.copypaste_prob),

|

| 68 |

+

dict(

|

| 69 |

+

type='YOLOv5RandomAffine',

|

| 70 |

+

max_rotate_degree=0.0,

|

| 71 |

+

max_shear_degree=0.0,

|

| 72 |

+

max_aspect_ratio=100.,

|

| 73 |

+

scaling_ratio_range=(1 - _base_.affine_scale,

|

| 74 |

+

1 + _base_.affine_scale),

|

| 75 |

+

# img_scale is (width, height)

|

| 76 |

+

border=(-_base_.img_scale[0] // 2, -_base_.img_scale[1] // 2),

|

| 77 |

+

border_val=(114, 114, 114),

|

| 78 |

+

min_area_ratio=_base_.min_area_ratio,

|

| 79 |

+

use_mask_refine=_base_.use_mask2refine)

|

| 80 |

+

]

|

| 81 |

+

train_pipeline = [

|

| 82 |

+

*_base_.pre_transform,

|

| 83 |

+

*mosaic_affine_transform,

|

| 84 |

+

dict(

|

| 85 |

+

type='YOLOv5MultiModalMixUp',

|

| 86 |

+

prob=_base_.mixup_prob,

|

| 87 |

+

pre_transform=[*_base_.pre_transform,

|

| 88 |

+

*mosaic_affine_transform]),

|

| 89 |

+

*_base_.last_transform[:-1],

|

| 90 |

+

*text_transform

|

| 91 |

+

]

|

| 92 |

+

train_pipeline_stage2 = [

|

| 93 |

+

*_base_.train_pipeline_stage2[:-1],

|

| 94 |

+

*text_transform

|

| 95 |

+

]

|

| 96 |

+

coco_train_dataset = dict(

|

| 97 |

+

_delete_=True,

|

| 98 |

+

type='MultiModalDataset',

|

| 99 |

+

dataset=dict(

|

| 100 |

+

type='YOLOv5CocoDataset',

|

| 101 |

+

data_root='data/coco',

|

| 102 |

+

ann_file='annotations/instances_train2017.json',

|

| 103 |

+

data_prefix=dict(img='train2017/'),

|

| 104 |

+

filter_cfg=dict(filter_empty_gt=False, min_size=32)),

|

| 105 |

+

class_text_path='data/captions/coco_class_captions.json',

|

| 106 |

+

pipeline=train_pipeline)

|

| 107 |

+

train_dataloader = dict(

|

| 108 |

+

persistent_workers=persistent_workers,

|

| 109 |

+

batch_size=train_batch_size_per_gpu,

|

| 110 |

+

collate_fn=dict(type='yolow_collate'),

|

| 111 |

+

dataset=coco_train_dataset)

|

| 112 |

+

test_pipeline = [

|

| 113 |

+

*_base_.test_pipeline[:-1],

|

| 114 |

+

dict(type='LoadTextFixed'),

|

| 115 |

+

dict(

|

| 116 |

+

type='mmdet.PackDetInputs',

|

| 117 |

+

meta_keys=('img_id', 'img_path', 'ori_shape', 'img_shape',

|

| 118 |

+

'scale_factor', 'pad_param', 'texts'))

|

| 119 |

+

]

|

| 120 |

+

coco_val_dataset = dict(

|

| 121 |

+

_delete_=True,

|

| 122 |

+

type='MultiModalDataset',

|

| 123 |

+

dataset=dict(

|

| 124 |

+

type='YOLOv5CocoDataset',

|

| 125 |

+

data_root='data/coco',

|

| 126 |

+

ann_file='annotations/instances_val2017.json',

|

| 127 |

+

data_prefix=dict(img='val2017/'),

|

| 128 |

+

filter_cfg=dict(filter_empty_gt=False, min_size=32)),

|

| 129 |

+

class_text_path='data/captions/coco_class_captions.json',

|

| 130 |

+

pipeline=test_pipeline)

|

| 131 |

+

val_dataloader = dict(dataset=coco_val_dataset)

|

| 132 |

+

test_dataloader = val_dataloader

|

| 133 |

+

# training settings

|

| 134 |

+

default_hooks = dict(

|

| 135 |

+

param_scheduler=dict(

|

| 136 |

+

scheduler_type='linear',

|

| 137 |

+

lr_factor=0.01,

|

| 138 |

+

max_epochs=max_epochs),

|

| 139 |

+

checkpoint=dict(

|

| 140 |

+

max_keep_ckpts=-1,

|

| 141 |

+

save_best=None,

|

| 142 |

+

interval=save_epoch_intervals))

|

| 143 |

+

custom_hooks = [

|

| 144 |

+

dict(

|

| 145 |

+

type='EMAHook',

|

| 146 |

+

ema_type='ExpMomentumEMA',

|

| 147 |

+

momentum=0.0001,

|

| 148 |

+

update_buffers=True,

|

| 149 |

+

strict_load=False,

|

| 150 |

+

priority=49),

|

| 151 |

+

dict(

|

| 152 |

+

type='mmdet.PipelineSwitchHook',

|

| 153 |

+

switch_epoch=max_epochs - close_mosaic_epochs,

|

| 154 |

+

switch_pipeline=train_pipeline_stage2)

|

| 155 |

+

]

|

| 156 |

+

train_cfg = dict(

|

| 157 |

+

max_epochs=max_epochs,

|

| 158 |

+

val_interval=5,

|

| 159 |

+

dynamic_intervals=[((max_epochs - close_mosaic_epochs),

|

| 160 |

+

_base_.val_interval_stage2)])

|

| 161 |

+

optim_wrapper = dict(

|

| 162 |

+

optimizer=dict(

|

| 163 |

+

_delete_=True,

|

| 164 |

+

type='AdamW',

|

| 165 |

+

lr=base_lr,

|

| 166 |

+

weight_decay=weight_decay,

|

| 167 |

+

batch_size_per_gpu=train_batch_size_per_gpu),

|

| 168 |

+

paramwise_cfg=dict(

|

| 169 |

+

bias_decay_mult=0.0,

|

| 170 |

+

norm_decay_mult=0.0,

|

| 171 |

+

custom_keys={'backbone.text_model': dict(lr_mult=0.01),

|

| 172 |

+

'logit_scale': dict(weight_decay=0.0)}),

|

| 173 |

+

constructor='YOLOWv5OptimizerConstructor')

|

| 174 |

+

|

| 175 |

+

# evaluation settings

|

| 176 |

+

val_evaluator = dict(

|

| 177 |

+

_delete_=True,

|

| 178 |

+

type='mmdet.CocoMetric',

|

| 179 |

+

proposal_nums=(100, 1, 10),

|

| 180 |

+