Spaces:

Sleeping

Sleeping

Reset again!

Browse files- .gitattributes +0 -34

- .gitignore +0 -163

- .gitmodules +0 -3

- .idea/.gitignore +8 -0

- .idea/inspectionProfiles/profiles_settings.xml +6 -0

- .idea/misc.xml +4 -0

- .idea/modules.xml +8 -0

- .idea/prismer_demo.iml +8 -0

- .idea/vcs.xml +7 -0

- .pre-commit-config.yaml +0 -36

- .style.yapf +0 -5

- app.py +9 -0

- app_caption.py +13 -3

- patch +82 -0

- prismer/.gitignore +10 -0

- prismer/LICENSE +97 -0

- prismer/README.md +156 -0

- prismer/dataset/__init__.py +12 -1

- prismer/dataset/ade_features.pt +0 -0

- prismer/dataset/background_features.pt +0 -0

- prismer/dataset/caption_dataset.py +1 -1

- prismer/dataset/classification_dataset.py +72 -0

- prismer/dataset/clip_pca.pkl +0 -0

- prismer/dataset/coco_features.pt +0 -0

- prismer/dataset/detection_features.pt +0 -0

- prismer/dataset/pretrain_dataset.py +73 -0

- prismer/dataset/utils.py +4 -8

- prismer/dataset/vqa_dataset.py +0 -2

- prismer/experts/generate_depth.py +1 -1

- prismer/experts/generate_edge.py +1 -1

- prismer/experts/generate_normal.py +1 -1

- prismer/experts/generate_objdet.py +1 -1

- prismer/experts/generate_ocrdet.py +1 -1

- prismer/experts/generate_segmentation.py +1 -1

- prismer/{images → helpers/images}/COCO_test2015_000000000014.jpg +0 -0

- prismer/{images → helpers/images}/COCO_test2015_000000000016.jpg +0 -0

- prismer/{images → helpers/images}/COCO_test2015_000000000019.jpg +0 -0

- prismer/{images → helpers/images}/COCO_test2015_000000000128.jpg +0 -0

- prismer/{images → helpers/images}/COCO_test2015_000000000155.jpg +0 -0

- prismer/helpers/intro.png +0 -0

- prismer/model/prismer.py +1 -4

- prismer/requirements.txt +19 -0

- prismer/train_caption.py +208 -0

- prismer/train_classification.py +164 -0

- prismer/train_pretrain.py +140 -0

- prismer/train_vqa.py +180 -0

- prismer_model.py +82 -26

.gitattributes

DELETED

|

@@ -1,34 +0,0 @@

|

|

| 1 |

-

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

-

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

-

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

-

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

-

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

-

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

-

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

-

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

-

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

-

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

-

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 12 |

-

*.model filter=lfs diff=lfs merge=lfs -text

|

| 13 |

-

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 14 |

-

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 15 |

-

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 16 |

-

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 17 |

-

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 18 |

-

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 19 |

-

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 20 |

-

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 21 |

-

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 22 |

-

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 23 |

-

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 24 |

-

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 25 |

-

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 26 |

-

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 27 |

-

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

-

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 29 |

-

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 30 |

-

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 31 |

-

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 32 |

-

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

-

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

-

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

.gitignore

DELETED

|

@@ -1,163 +0,0 @@

|

|

| 1 |

-

cache/

|

| 2 |

-

.idea

|

| 3 |

-

|

| 4 |

-

# Byte-compiled / optimized / DLL files

|

| 5 |

-

__pycache__/

|

| 6 |

-

*.py[cod]

|

| 7 |

-

*$py.class

|

| 8 |

-

|

| 9 |

-

# C extensions

|

| 10 |

-

*.so

|

| 11 |

-

|

| 12 |

-

# Distribution / packaging

|

| 13 |

-

.Python

|

| 14 |

-

build/

|

| 15 |

-

develop-eggs/

|

| 16 |

-

dist/

|

| 17 |

-

downloads/

|

| 18 |

-

eggs/

|

| 19 |

-

.eggs/

|

| 20 |

-

lib/

|

| 21 |

-

lib64/

|

| 22 |

-

parts/

|

| 23 |

-

sdist/

|

| 24 |

-

var/

|

| 25 |

-

wheels/

|

| 26 |

-

share/python-wheels/

|

| 27 |

-

*.egg-info/

|

| 28 |

-

.installed.cfg

|

| 29 |

-

*.egg

|

| 30 |

-

MANIFEST

|

| 31 |

-

|

| 32 |

-

# PyInstaller

|

| 33 |

-

# Usually these files are written by a python script from a template

|

| 34 |

-

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 35 |

-

*.manifest

|

| 36 |

-

*.spec

|

| 37 |

-

|

| 38 |

-

# Installer logs

|

| 39 |

-

pip-log.txt

|

| 40 |

-

pip-delete-this-directory.txt

|

| 41 |

-

|

| 42 |

-

# Unit test / coverage reports

|

| 43 |

-

htmlcov/

|

| 44 |

-

.tox/

|

| 45 |

-

.nox/

|

| 46 |

-

.coverage

|

| 47 |

-

.coverage.*

|

| 48 |

-

.cache

|

| 49 |

-

nosetests.xml

|

| 50 |

-

coverage.xml

|

| 51 |

-

*.cover

|

| 52 |

-

*.py,cover

|

| 53 |

-

.hypothesis/

|

| 54 |

-

.pytest_cache/

|

| 55 |

-

cover/

|

| 56 |

-

|

| 57 |

-

# Translations

|

| 58 |

-

*.mo

|

| 59 |

-

*.pot

|

| 60 |

-

|

| 61 |

-

# Django stuff:

|

| 62 |

-

*.log

|

| 63 |

-

local_settings.py

|

| 64 |

-

db.sqlite3

|

| 65 |

-

db.sqlite3-journal

|

| 66 |

-

|

| 67 |

-

# Flask stuff:

|

| 68 |

-

instance/

|

| 69 |

-

.webassets-cache

|

| 70 |

-

|

| 71 |

-

# Scrapy stuff:

|

| 72 |

-

.scrapy

|

| 73 |

-

|

| 74 |

-

# Sphinx documentation

|

| 75 |

-

docs/_build/

|

| 76 |

-

|

| 77 |

-

# PyBuilder

|

| 78 |

-

.pybuilder/

|

| 79 |

-

target/

|

| 80 |

-

|

| 81 |

-

# Jupyter Notebook

|

| 82 |

-

.ipynb_checkpoints

|

| 83 |

-

|

| 84 |

-

# IPython

|

| 85 |

-

profile_default/

|

| 86 |

-

ipython_config.py

|

| 87 |

-

|

| 88 |

-

# pyenv

|

| 89 |

-

# For a library or package, you might want to ignore these files since the code is

|

| 90 |

-

# intended to run in multiple environments; otherwise, check them in:

|

| 91 |

-

# .python-version

|

| 92 |

-

|

| 93 |

-

# pipenv

|

| 94 |

-

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 95 |

-

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 96 |

-

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 97 |

-

# install all needed dependencies.

|

| 98 |

-

#Pipfile.lock

|

| 99 |

-

|

| 100 |

-

# poetry

|

| 101 |

-

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 102 |

-

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 103 |

-

# commonly ignored for libraries.

|

| 104 |

-

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 105 |

-

#poetry.lock

|

| 106 |

-

|

| 107 |

-

# pdm

|

| 108 |

-

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 109 |

-

#pdm.lock

|

| 110 |

-

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 111 |

-

# in version control.

|

| 112 |

-

# https://pdm.fming.dev/#use-with-ide

|

| 113 |

-

.pdm.toml

|

| 114 |

-

|

| 115 |

-

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 116 |

-

__pypackages__/

|

| 117 |

-

|

| 118 |

-

# Celery stuff

|

| 119 |

-

celerybeat-schedule

|

| 120 |

-

celerybeat.pid

|

| 121 |

-

|

| 122 |

-

# SageMath parsed files

|

| 123 |

-

*.sage.py

|

| 124 |

-

|

| 125 |

-

# Environments

|

| 126 |

-

.env

|

| 127 |

-

.venv

|

| 128 |

-

env/

|

| 129 |

-

venv/

|

| 130 |

-

ENV/

|

| 131 |

-

env.bak/

|

| 132 |

-

venv.bak/

|

| 133 |

-

|

| 134 |

-

# Spyder project settings

|

| 135 |

-

.spyderproject

|

| 136 |

-

.spyproject

|

| 137 |

-

|

| 138 |

-

# Rope project settings

|

| 139 |

-

.ropeproject

|

| 140 |

-

|

| 141 |

-

# mkdocs documentation

|

| 142 |

-

/site

|

| 143 |

-

|

| 144 |

-

# mypy

|

| 145 |

-

.mypy_cache/

|

| 146 |

-

.dmypy.json

|

| 147 |

-

dmypy.json

|

| 148 |

-

|

| 149 |

-

# Pyre type checker

|

| 150 |

-

.pyre/

|

| 151 |

-

|

| 152 |

-

# pytype static type analyzer

|

| 153 |

-

.pytype/

|

| 154 |

-

|

| 155 |

-

# Cython debug symbols

|

| 156 |

-

cython_debug/

|

| 157 |

-

|

| 158 |

-

# PyCharm

|

| 159 |

-

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 160 |

-

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 161 |

-

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 162 |

-

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 163 |

-

#.idea/

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

.gitmodules

DELETED

|

@@ -1,3 +0,0 @@

|

|

| 1 |

-

[submodule "prismer"]

|

| 2 |

-

path = prismer

|

| 3 |

-

url = https://github.com/nvlabs/prismer

|

|

|

|

|

|

|

|

|

|

|

|

.idea/.gitignore

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Default ignored files

|

| 2 |

+

/shelf/

|

| 3 |

+

/workspace.xml

|

| 4 |

+

# Editor-based HTTP Client requests

|

| 5 |

+

/httpRequests/

|

| 6 |

+

# Datasource local storage ignored files

|

| 7 |

+

/dataSources/

|

| 8 |

+

/dataSources.local.xml

|

.idea/inspectionProfiles/profiles_settings.xml

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<component name="InspectionProjectProfileManager">

|

| 2 |

+

<settings>

|

| 3 |

+

<option name="USE_PROJECT_PROFILE" value="false" />

|

| 4 |

+

<version value="1.0" />

|

| 5 |

+

</settings>

|

| 6 |

+

</component>

|

.idea/misc.xml

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<?xml version="1.0" encoding="UTF-8"?>

|

| 2 |

+

<project version="4">

|

| 3 |

+

<component name="ProjectRootManager" version="2" project-jdk-name="Python 3.9" project-jdk-type="Python SDK" />

|

| 4 |

+

</project>

|

.idea/modules.xml

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<?xml version="1.0" encoding="UTF-8"?>

|

| 2 |

+

<project version="4">

|

| 3 |

+

<component name="ProjectModuleManager">

|

| 4 |

+

<modules>

|

| 5 |

+

<module fileurl="file://$PROJECT_DIR$/.idea/prismer_demo.iml" filepath="$PROJECT_DIR$/.idea/prismer_demo.iml" />

|

| 6 |

+

</modules>

|

| 7 |

+

</component>

|

| 8 |

+

</project>

|

.idea/prismer_demo.iml

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<?xml version="1.0" encoding="UTF-8"?>

|

| 2 |

+

<module type="PYTHON_MODULE" version="4">

|

| 3 |

+

<component name="NewModuleRootManager">

|

| 4 |

+

<content url="file://$MODULE_DIR$" />

|

| 5 |

+

<orderEntry type="inheritedJdk" />

|

| 6 |

+

<orderEntry type="sourceFolder" forTests="false" />

|

| 7 |

+

</component>

|

| 8 |

+

</module>

|

.idea/vcs.xml

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<?xml version="1.0" encoding="UTF-8"?>

|

| 2 |

+

<project version="4">

|

| 3 |

+

<component name="VcsDirectoryMappings">

|

| 4 |

+

<mapping directory="$PROJECT_DIR$" vcs="Git" />

|

| 5 |

+

<mapping directory="$PROJECT_DIR$/prismer" vcs="Git" />

|

| 6 |

+

</component>

|

| 7 |

+

</project>

|

.pre-commit-config.yaml

DELETED

|

@@ -1,36 +0,0 @@

|

|

| 1 |

-

repos:

|

| 2 |

-

- repo: https://github.com/pre-commit/pre-commit-hooks

|

| 3 |

-

rev: v4.2.0

|

| 4 |

-

hooks:

|

| 5 |

-

- id: check-executables-have-shebangs

|

| 6 |

-

- id: check-json

|

| 7 |

-

- id: check-merge-conflict

|

| 8 |

-

- id: check-shebang-scripts-are-executable

|

| 9 |

-

- id: check-toml

|

| 10 |

-

- id: check-yaml

|

| 11 |

-

- id: double-quote-string-fixer

|

| 12 |

-

- id: end-of-file-fixer

|

| 13 |

-

- id: mixed-line-ending

|

| 14 |

-

args: ['--fix=lf']

|

| 15 |

-

- id: requirements-txt-fixer

|

| 16 |

-

- id: trailing-whitespace

|

| 17 |

-

- repo: https://github.com/myint/docformatter

|

| 18 |

-

rev: v1.4

|

| 19 |

-

hooks:

|

| 20 |

-

- id: docformatter

|

| 21 |

-

args: ['--in-place']

|

| 22 |

-

- repo: https://github.com/pycqa/isort

|

| 23 |

-

rev: 5.12.0

|

| 24 |

-

hooks:

|

| 25 |

-

- id: isort

|

| 26 |

-

- repo: https://github.com/pre-commit/mirrors-mypy

|

| 27 |

-

rev: v0.991

|

| 28 |

-

hooks:

|

| 29 |

-

- id: mypy

|

| 30 |

-

args: ['--ignore-missing-imports']

|

| 31 |

-

additional_dependencies: ['types-python-slugify']

|

| 32 |

-

- repo: https://github.com/google/yapf

|

| 33 |

-

rev: v0.32.0

|

| 34 |

-

hooks:

|

| 35 |

-

- id: yapf

|

| 36 |

-

args: ['--parallel', '--in-place']

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

.style.yapf

DELETED

|

@@ -1,5 +0,0 @@

|

|

| 1 |

-

[style]

|

| 2 |

-

based_on_style = pep8

|

| 3 |

-

blank_line_before_nested_class_or_def = false

|

| 4 |

-

spaces_before_comment = 2

|

| 5 |

-

split_before_logical_operator = true

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

app.py

CHANGED

|

@@ -5,11 +5,20 @@ from __future__ import annotations

|

|

| 5 |

import os

|

| 6 |

import shutil

|

| 7 |

import subprocess

|

|

|

|

| 8 |

import gradio as gr

|

| 9 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 10 |

from app_caption import create_demo as create_demo_caption

|

| 11 |

from prismer_model import build_deformable_conv, download_models

|

| 12 |

|

|

|

|

| 13 |

# Prepare model checkpoints

|

| 14 |

download_models()

|

| 15 |

build_deformable_conv()

|

|

|

|

| 5 |

import os

|

| 6 |

import shutil

|

| 7 |

import subprocess

|

| 8 |

+

|

| 9 |

import gradio as gr

|

| 10 |

|

| 11 |

+

if os.getenv('SYSTEM') == 'spaces':

|

| 12 |

+

with open('patch') as f:

|

| 13 |

+

subprocess.run('patch -p1'.split(), cwd='prismer', stdin=f)

|

| 14 |

+

shutil.copytree('prismer/helpers/images',

|

| 15 |

+

'prismer/images',

|

| 16 |

+

dirs_exist_ok=True)

|

| 17 |

+

|

| 18 |

from app_caption import create_demo as create_demo_caption

|

| 19 |

from prismer_model import build_deformable_conv, download_models

|

| 20 |

|

| 21 |

+

|

| 22 |

# Prepare model checkpoints

|

| 23 |

download_models()

|

| 24 |

build_deformable_conv()

|

app_caption.py

CHANGED

|

@@ -15,8 +15,10 @@ def create_demo():

|

|

| 15 |

|

| 16 |

with gr.Row():

|

| 17 |

with gr.Column():

|

| 18 |

-

image = gr.Image(label='Input

|

| 19 |

-

model_name = gr.Dropdown(label='Model

|

|

|

|

|

|

|

| 20 |

run_button = gr.Button('Run')

|

| 21 |

with gr.Column(scale=1.5):

|

| 22 |

caption = gr.Text(label='Caption')

|

|

@@ -30,7 +32,15 @@ def create_demo():

|

|

| 30 |

ocr = gr.Image(label='OCR Detection')

|

| 31 |

|

| 32 |

inputs = [image, model_name]

|

| 33 |

-

outputs = [

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 34 |

|

| 35 |

paths = sorted(pathlib.Path('prismer/images').glob('*'))

|

| 36 |

examples = [[path.as_posix(), 'prismer_base'] for path in paths]

|

|

|

|

| 15 |

|

| 16 |

with gr.Row():

|

| 17 |

with gr.Column():

|

| 18 |

+

image = gr.Image(label='Input', type='filepath')

|

| 19 |

+

model_name = gr.Dropdown(label='Model',

|

| 20 |

+

choices=['prismer_base'],

|

| 21 |

+

value='prismer_base')

|

| 22 |

run_button = gr.Button('Run')

|

| 23 |

with gr.Column(scale=1.5):

|

| 24 |

caption = gr.Text(label='Caption')

|

|

|

|

| 32 |

ocr = gr.Image(label='OCR Detection')

|

| 33 |

|

| 34 |

inputs = [image, model_name]

|

| 35 |

+

outputs = [

|

| 36 |

+

caption,

|

| 37 |

+

depth,

|

| 38 |

+

edge,

|

| 39 |

+

normals,

|

| 40 |

+

segmentation,

|

| 41 |

+

object_detection,

|

| 42 |

+

ocr,

|

| 43 |

+

]

|

| 44 |

|

| 45 |

paths = sorted(pathlib.Path('prismer/images').glob('*'))

|

| 46 |

examples = [[path.as_posix(), 'prismer_base'] for path in paths]

|

patch

ADDED

|

@@ -0,0 +1,82 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

diff --git a/dataset/caption_dataset.py b/dataset/caption_dataset.py

|

| 2 |

+

index 266fdda..0cc5d3f 100644

|

| 3 |

+

--- a/dataset/caption_dataset.py

|

| 4 |

+

+++ b/dataset/caption_dataset.py

|

| 5 |

+

@@ -50,7 +50,7 @@ class Caption(Dataset):

|

| 6 |

+

elif self.dataset == 'demo':

|

| 7 |

+

img_path_split = self.data_list[index]['image'].split('/')

|

| 8 |

+

img_name = img_path_split[-2] + '/' + img_path_split[-1]

|

| 9 |

+

- image, labels, labels_info = get_expert_labels('', self.label_path, img_name, 'helpers', self.experts)

|

| 10 |

+

+ image, labels, labels_info = get_expert_labels('prismer', self.label_path, img_name, 'helpers', self.experts)

|

| 11 |

+

|

| 12 |

+

experts = self.transform(image, labels)

|

| 13 |

+

experts = post_label_process(experts, labels_info)

|

| 14 |

+

diff --git a/dataset/utils.py b/dataset/utils.py

|

| 15 |

+

index b368aac..418358c 100644

|

| 16 |

+

--- a/dataset/utils.py

|

| 17 |

+

+++ b/dataset/utils.py

|

| 18 |

+

@@ -5,6 +5,7 @@

|

| 19 |

+

# https://github.com/NVlabs/prismer/blob/main/LICENSE

|

| 20 |

+

|

| 21 |

+

import os

|

| 22 |

+

+import pathlib

|

| 23 |

+

import re

|

| 24 |

+

import json

|

| 25 |

+

import torch

|

| 26 |

+

@@ -14,10 +15,12 @@ import torchvision.transforms as transforms

|

| 27 |

+

import torchvision.transforms.functional as transforms_f

|

| 28 |

+

from dataset.randaugment import RandAugment

|

| 29 |

+

|

| 30 |

+

-COCO_FEATURES = torch.load('dataset/coco_features.pt')['features']

|

| 31 |

+

-ADE_FEATURES = torch.load('dataset/ade_features.pt')['features']

|

| 32 |

+

-DETECTION_FEATURES = torch.load('dataset/detection_features.pt')['features']

|

| 33 |

+

-BACKGROUND_FEATURES = torch.load('dataset/background_features.pt')

|

| 34 |

+

+cur_dir = pathlib.Path(__file__).parent

|

| 35 |

+

+

|

| 36 |

+

+COCO_FEATURES = torch.load(cur_dir / 'coco_features.pt')['features']

|

| 37 |

+

+ADE_FEATURES = torch.load(cur_dir / 'ade_features.pt')['features']

|

| 38 |

+

+DETECTION_FEATURES = torch.load(cur_dir / 'detection_features.pt')['features']

|

| 39 |

+

+BACKGROUND_FEATURES = torch.load(cur_dir / 'background_features.pt')

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

class Transform:

|

| 43 |

+

diff --git a/model/prismer.py b/model/prismer.py

|

| 44 |

+

index 080253a..02362a4 100644

|

| 45 |

+

--- a/model/prismer.py

|

| 46 |

+

+++ b/model/prismer.py

|

| 47 |

+

@@ -5,6 +5,7 @@

|

| 48 |

+

# https://github.com/NVlabs/prismer/blob/main/LICENSE

|

| 49 |

+

|

| 50 |

+

import json

|

| 51 |

+

+import pathlib

|

| 52 |

+

import torch.nn as nn

|

| 53 |

+

|

| 54 |

+

from model.modules.vit import load_encoder

|

| 55 |

+

@@ -12,6 +13,9 @@ from model.modules.roberta import load_decoder

|

| 56 |

+

from transformers import RobertaTokenizer, RobertaConfig

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

+cur_dir = pathlib.Path(__file__).parent

|

| 60 |

+

+

|

| 61 |

+

+

|

| 62 |

+

class Prismer(nn.Module):

|

| 63 |

+

def __init__(self, config):

|

| 64 |

+

super().__init__()

|

| 65 |

+

@@ -26,7 +30,7 @@ class Prismer(nn.Module):

|

| 66 |

+

elif exp in ['obj_detection', 'ocr_detection']:

|

| 67 |

+

self.experts[exp] = 64

|

| 68 |

+

|

| 69 |

+

- prismer_config = json.load(open('configs/prismer.json', 'r'))[config['prismer_model']]

|

| 70 |

+

+ prismer_config = json.load(open(f'{cur_dir.parent}/configs/prismer.json', 'r'))[config['prismer_model']]

|

| 71 |

+

roberta_config = RobertaConfig.from_dict(prismer_config['roberta_model'])

|

| 72 |

+

|

| 73 |

+

self.tokenizer = RobertaTokenizer.from_pretrained(prismer_config['roberta_model']['model_name'])

|

| 74 |

+

@@ -35,7 +39,7 @@ class Prismer(nn.Module):

|

| 75 |

+

|

| 76 |

+

self.prepare_to_train(config['freeze'])

|

| 77 |

+

self.ignored_modules = self.get_ignored_modules(config['freeze'])

|

| 78 |

+

-

|

| 79 |

+

+

|

| 80 |

+

def prepare_to_train(self, mode='none'):

|

| 81 |

+

for name, params in self.named_parameters():

|

| 82 |

+

if mode == 'freeze_lang':

|

prismer/.gitignore

ADDED

|

@@ -0,0 +1,10 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

.idea

|

| 2 |

+

cache

|

| 3 |

+

.DS_Store

|

| 4 |

+

**/__pycache__/*

|

| 5 |

+

helpers/data/*

|

| 6 |

+

helpers/images2/*

|

| 7 |

+

helpers/labels/*

|

| 8 |

+

experts/expert_weights

|

| 9 |

+

logging/*

|

| 10 |

+

flagged/*

|

prismer/LICENSE

ADDED

|

@@ -0,0 +1,97 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Copyright (c) 2023, NVIDIA Corporation & affiliates. All rights reserved.

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

NVIDIA Source Code License for Prismer

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

=======================================================================

|

| 8 |

+

|

| 9 |

+

1. Definitions

|

| 10 |

+

|

| 11 |

+

"Licensor" means any person or entity that distributes its Work.

|

| 12 |

+

|

| 13 |

+

"Software" means the original work of authorship made available under

|

| 14 |

+

this License.

|

| 15 |

+

|

| 16 |

+

"Work" means the Software and any additions to or derivative works of

|

| 17 |

+

the Software that are made available under this License.

|

| 18 |

+

|

| 19 |

+

The terms "reproduce," "reproduction," "derivative works," and

|

| 20 |

+

"distribution" have the meaning as provided under U.S. copyright law;

|

| 21 |

+

provided, however, that for the purposes of this License, derivative

|

| 22 |

+

works shall not include works that remain separable from, or merely

|

| 23 |

+

link (or bind by name) to the interfaces of, the Work.

|

| 24 |

+

|

| 25 |

+

Works, including the Software, are "made available" under this License

|

| 26 |

+

by including in or with the Work either (a) a copyright notice

|

| 27 |

+

referencing the applicability of this License to the Work, or (b) a

|

| 28 |

+

copy of this License.

|

| 29 |

+

|

| 30 |

+

2. License Grants

|

| 31 |

+

|

| 32 |

+

2.1 Copyright Grant. Subject to the terms and conditions of this

|

| 33 |

+

License, each Licensor grants to you a perpetual, worldwide,

|

| 34 |

+

non-exclusive, royalty-free, copyright license to reproduce,

|

| 35 |

+

prepare derivative works of, publicly display, publicly perform,

|

| 36 |

+

sublicense and distribute its Work and any resulting derivative

|

| 37 |

+

works in any form.

|

| 38 |

+

|

| 39 |

+

3. Limitations

|

| 40 |

+

|

| 41 |

+

3.1 Redistribution. You may reproduce or distribute the Work only

|

| 42 |

+

if (a) you do so under this License, (b) you include a complete

|

| 43 |

+

copy of this License with your distribution, and (c) you retain

|

| 44 |

+

without modification any copyright, patent, trademark, or

|

| 45 |

+

attribution notices that are present in the Work.

|

| 46 |

+

|

| 47 |

+

3.2 Derivative Works. You may specify that additional or different

|

| 48 |

+

terms apply to the use, reproduction, and distribution of your

|

| 49 |

+

derivative works of the Work ("Your Terms") only if (a) Your Terms

|

| 50 |

+

provide that the use limitation in Section 3.3 applies to your

|

| 51 |

+

derivative works, and (b) you identify the specific derivative

|

| 52 |

+

works that are subject to Your Terms. Notwithstanding Your Terms,

|

| 53 |

+

this License (including the redistribution requirements in Section

|

| 54 |

+

3.1) will continue to apply to the Work itself.

|

| 55 |

+

|

| 56 |

+

3.3 Use Limitation. The Work and any derivative works thereof only

|

| 57 |

+

may be used or intended for use non-commercially. Notwithstanding

|

| 58 |

+

the foregoing, NVIDIA and its affiliates may use the Work and any

|

| 59 |

+

derivative works commercially. As used herein, "non-commercially"

|

| 60 |

+

means for research or evaluation purposes only.

|

| 61 |

+

|

| 62 |

+

3.4 Patent Claims. If you bring or threaten to bring a patent claim

|

| 63 |

+

against any Licensor (including any claim, cross-claim or

|

| 64 |

+

counterclaim in a lawsuit) to enforce any patents that you allege

|

| 65 |

+

are infringed by any Work, then your rights under this License from

|

| 66 |

+

such Licensor (including the grant in Section 2.1) will terminate

|

| 67 |

+

immediately.

|

| 68 |

+

|

| 69 |

+

3.5 Trademarks. This License does not grant any rights to use any

|

| 70 |

+

Licensor’s or its affiliates’ names, logos, or trademarks, except

|

| 71 |

+

as necessary to reproduce the notices described in this License.

|

| 72 |

+

|

| 73 |

+

3.6 Termination. If you violate any term of this License, then your

|

| 74 |

+

rights under this License (including the grant in Section 2.1) will

|

| 75 |

+

terminate immediately.

|

| 76 |

+

|

| 77 |

+

4. Disclaimer of Warranty.

|

| 78 |

+

|

| 79 |

+

THE WORK IS PROVIDED "AS IS" WITHOUT WARRANTIES OR CONDITIONS OF ANY

|

| 80 |

+

KIND, EITHER EXPRESS OR IMPLIED, INCLUDING WARRANTIES OR CONDITIONS OF

|

| 81 |

+

MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, TITLE OR

|

| 82 |

+

NON-INFRINGEMENT. YOU BEAR THE RISK OF UNDERTAKING ANY ACTIVITIES UNDER

|

| 83 |

+

THIS LICENSE.

|

| 84 |

+

|

| 85 |

+

5. Limitation of Liability.

|

| 86 |

+

|

| 87 |

+

EXCEPT AS PROHIBITED BY APPLICABLE LAW, IN NO EVENT AND UNDER NO LEGAL

|

| 88 |

+

THEORY, WHETHER IN TORT (INCLUDING NEGLIGENCE), CONTRACT, OR OTHERWISE

|

| 89 |

+

SHALL ANY LICENSOR BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY DIRECT,

|

| 90 |

+

INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF

|

| 91 |

+

OR RELATED TO THIS LICENSE, THE USE OR INABILITY TO USE THE WORK

|

| 92 |

+

(INCLUDING BUT NOT LIMITED TO LOSS OF GOODWILL, BUSINESS INTERRUPTION,

|

| 93 |

+

LOST PROFITS OR DATA, COMPUTER FAILURE OR MALFUNCTION, OR ANY OTHER

|

| 94 |

+

COMMERCIAL DAMAGES OR LOSSES), EVEN IF THE LICENSOR HAS BEEN ADVISED OF

|

| 95 |

+

THE POSSIBILITY OF SUCH DAMAGES.

|

| 96 |

+

|

| 97 |

+

=======================================================================

|

prismer/README.md

ADDED

|

@@ -0,0 +1,156 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

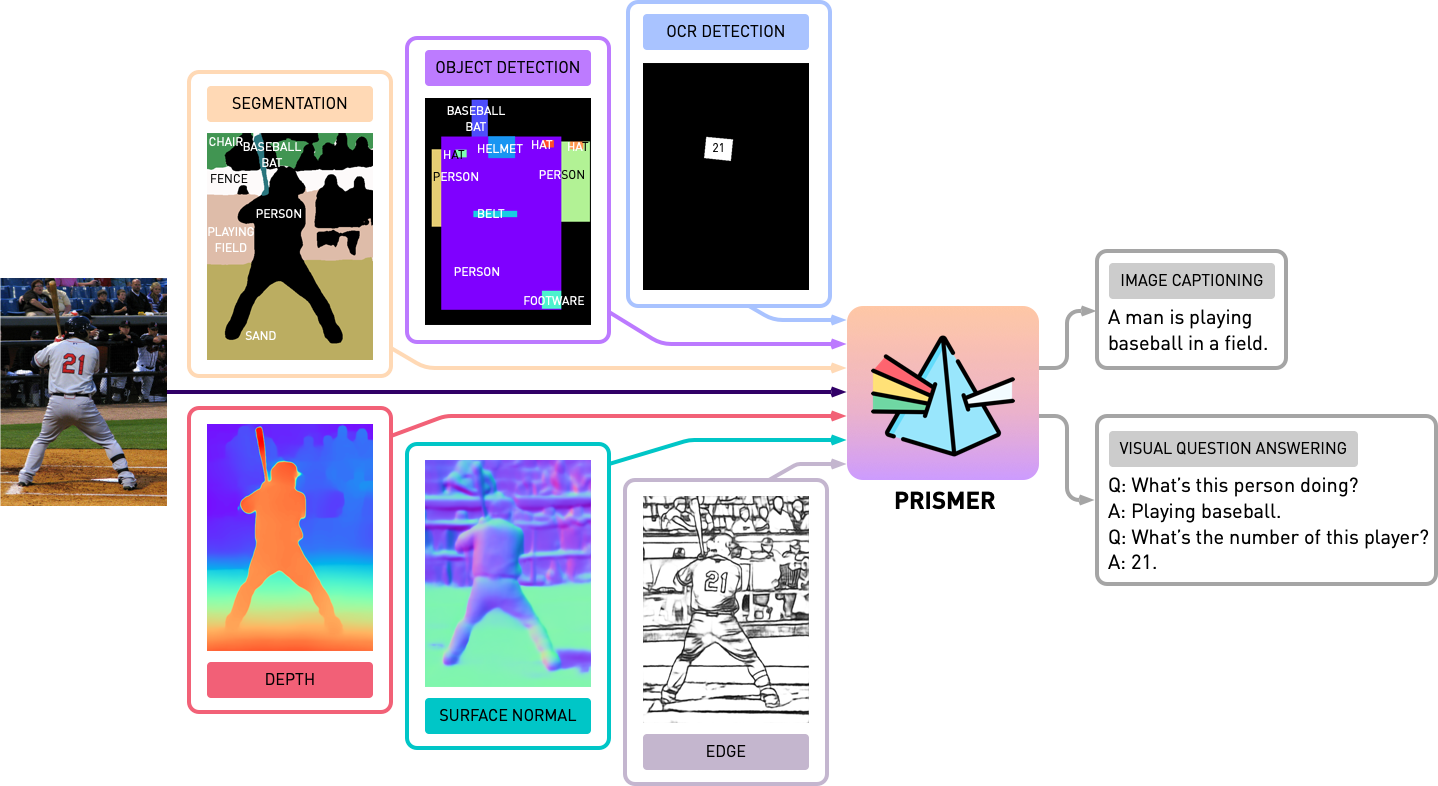

| 1 |

+

# Prismer

|

| 2 |

+

|

| 3 |

+

This repository contains the source code of **Prismer** and **PrismerZ** from the paper, [Prismer: A Vision-Language Model with An Ensemble of Experts](https://arxiv.org/abs/2303.02506).

|

| 4 |

+

|

| 5 |

+

<img src="helpers/intro.png" width="100%"/>

|

| 6 |

+

|

| 7 |

+

## Get Started

|

| 8 |

+

The implementation is based on `PyTorch 1.13`, and highly integrated with Huggingface [`accelerate`](https://github.com/huggingface/accelerate) toolkit for readable and optimised multi-node multi-gpu training.

|

| 9 |

+

|

| 10 |

+

First, let's install all package dependencies by running

|

| 11 |

+

```bash

|

| 12 |

+

pip install -r requirements.txt

|

| 13 |

+

```

|

| 14 |

+

|

| 15 |

+

### Prepare Accelerator Config

|

| 16 |

+

Then we generate the corresponding `accelerate` config based on your training server configuration. For both single-node multi-gpu and multi-node multi-gpu training, simply run

|

| 17 |

+

```bash

|

| 18 |

+

# to get your machine rank 0 IP address

|

| 19 |

+

hostname -i

|

| 20 |

+

|

| 21 |

+

# and for each machine, run the following command, set --num_machines 1 in a single-node setting

|

| 22 |

+

python generate_config.py —-main_ip {MAIN_IP} -—rank {MACHINE_RANK} —-num_machines {TOTAL_MACHINES}

|

| 23 |

+

```

|

| 24 |

+

|

| 25 |

+

## Datasets

|

| 26 |

+

|

| 27 |

+

### Pre-training

|

| 28 |

+

We pre-train Prismer/PrismerZ with a combination of five widely used image-alt/text datasets, with pre-organised data lists provided below.

|

| 29 |

+

- [COCO 2014](https://www.dropbox.com/s/6btr8hz5n1e1q4d/coco_karpathy_train.json?dl=0): the Karpathy training split (which will also be used for fine-tuning).

|

| 30 |

+

- [Visual Genome](https://www.dropbox.com/s/kailbaay0sqraxc/vg_caption.json?dl=0): the official Visual Genome captioning dataset.

|

| 31 |

+

- [CC3M + SGU](https://www.dropbox.com/s/xp2nuhc88f1czxm/filtered_cc3m_sbu.json?dl=0): filtered and re-captioned by BLIP-Large.

|

| 32 |

+

- [CC12M](https://www.dropbox.com/s/th358bb6wqkpwbz/filtered_cc12m.json?dl=0): filtered and re-captioned by BLIP-Large.

|

| 33 |

+

|

| 34 |

+

The web datasets (CC3M, SGU, CC12M) is composed with image urls. It is highly recommended to use [img2dataset](https://github.com/rom1504/img2dataset), a highly optimised toolkit for large-scale web scraping to download these images. An example bash script of using `img2dataset` to download `cc12m` dataset is provided below.

|

| 35 |

+

```bash

|

| 36 |

+

img2dataset --url_list filtered_cc12m.json --input_format "json" --url_col "url" --caption_col "caption" --output_folder cc12m --processes_count 16 --thread_count 64 --image_size 256

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

*Note: It is expected that the number of downloaded images is less than the number of images in the json file, because some urls might not be valid or require long loading time.*

|

| 40 |

+

|

| 41 |

+

### Image Captioning / VQA

|

| 42 |

+

We evaluate image captioning performance on two datasets, COCO 2014 and NoCaps; and VQA performance on VQAv2 dataset. In VQA tasks, we additionally augment the training data with Visual Genome QA, following BLIP. Again, we have prepared and organised the training and evaluation data lists provided below.

|

| 43 |

+

|

| 44 |

+

- [Image Captioning](https://www.dropbox.com/sh/quu6v5hzdetjcdz/AACze0_h6BO8LJmSsEq4MM8-a?dl=0): including COCO (Karpathy Split) and NoCaps.

|

| 45 |

+

- [VQAv2](https://www.dropbox.com/sh/hqtxl1k8gkbhhoi/AACiax5qi7no3pJgO1E57Xefa?dl=0): including VQAv2 and VG QA.

|

| 46 |

+

|

| 47 |

+

## Generating Expert Labels

|

| 48 |

+

Before starting any experiments with Prismer, we need to first pre-generate the modality expert labels, so we may construct a multi-label dataset. In `experts` folder, we have included all 6 experts we introduced in our paper. We have organised each expert's codebase with a shared and simple API.

|

| 49 |

+

|

| 50 |

+

*Note: Specifically for segmentation experts, please first install deformable convolution operations by `cd experts/segmentation/mask2former/modeling/pixel_decoder/ops` and run `sh make.sh`.*

|

| 51 |

+

|

| 52 |

+

To download pre-trained modality experts, run

|

| 53 |

+

```bash

|

| 54 |

+

python download_checkpoints.py --download_experts=True

|

| 55 |

+

```

|

| 56 |

+

|

| 57 |

+

To generate the expert labels, simply edit the `configs/experts.yaml` with the corresponding data paths, and run

|

| 58 |

+

```bash

|

| 59 |

+

export PYTHONPATH=.

|

| 60 |

+

accelerate experts/generate_{EXPERT_NAME}.py

|

| 61 |

+

```

|

| 62 |

+

*Note: Expert label generation is only required for Prismer models, not for PrismerZ models.*

|

| 63 |

+

|

| 64 |

+

## Experiments

|

| 65 |

+

We have provided both Prismer and PrismerZ for pre-trained checkpoints (for zero-shot image captioning), as well as fined-tuned checkpoints on VQAv2 and COCO datasets. With these checkpoints, it should be expected to reproduce the exact performance listed below.

|

| 66 |

+

|

| 67 |

+

| Model | Pre-trained [Zero-shot] | COCO [Fine-tuned] | VQAv2 [Fine-tuned] |

|

| 68 |

+

|----------------|-------------------------|---------------------|-------------------|

|

| 69 |

+

| PrismerZ-BASE | COCO CIDEr [109.6] | COCO CIDEr [133.7] | test-dev [76.58] |

|

| 70 |

+

| Prismer-BASE | COCO CIDEr [122.6] | COCO CIDEr [135.1] | test-dev [76.84] |

|

| 71 |

+

| PrismerZ-LARGE | COCO CIDEr [124.8] | COCO CIDEr [135.7] | test-dev [77.49] |

|

| 72 |

+

| Prismer-LARGE | COCO CIDEr [129.7] | COCO CIDEr [136.5] | test-dev [78.42] |

|

| 73 |

+

|

| 74 |

+

To download pre-trained/fined-tuned checkpoints, run

|

| 75 |

+

```bash

|

| 76 |

+

# to download all model checkpoints (12 models in total)

|

| 77 |

+

python download_checkpoints.py --download_models=True

|

| 78 |

+

|

| 79 |

+

# to download specific checkpoints (Prismer-Base for fine-tuned VQA) in this example

|

| 80 |

+

python download_checkpoints.py --download_models="vqa_prismer_base"

|

| 81 |

+

```

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

*Note: Remember to install java via `sudo apt-get install default-jre` which is required to run the official COCO caption evaluation scripts.*

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

### Evaluation

|

| 88 |

+

To evaluate the model checkpoints, please run

|

| 89 |

+

```bash

|

| 90 |

+

# zero-shot image captioning (remember to remove caption prefix in the config files)

|

| 91 |

+

python train_caption.py --exp_name {MODEL_NAME} --evaluate

|

| 92 |

+

|

| 93 |

+

# fine-tuned image captioning

|

| 94 |

+

python train_caption.py --exp_name {MODEL_NAME} --from_checkpoint --evaluate

|

| 95 |

+

|

| 96 |

+

# fine-tuned VQA

|

| 97 |

+

python train_vqa.py --exp_name {MODEL_NAME} --from_checkpoint --evaluate

|

| 98 |

+

```

|

| 99 |

+

|

| 100 |

+

### Training / Fine-tuning

|

| 101 |

+

To pre-train or fine-tune any model with or without checkpoints, please run

|

| 102 |

+

```bash

|

| 103 |

+

# to train/fine-tuning from scratch

|

| 104 |

+

python train_{TASK}.py --exp_name {MODEL_NAME}

|

| 105 |

+

|

| 106 |

+

# to train/fine-tuning from the latest checkpoints (saved every epoch)

|

| 107 |

+

python train_{TASK}.py --exp_name {MODEL_NAME} --from_checkpoint

|

| 108 |

+

```

|

| 109 |

+

|

| 110 |

+

We have also included model sharding in the current training script via PyTorch's official [FSDP plugin](https://pytorch.org/tutorials/intermediate/FSDP_tutorial.html). With the same training commands, additionally add `--shard_grad_op` for ZeRO-2 Sharding (Gradients + Optimiser States), or `--full_shard` for ZeRO-3 Sharding (ZeRO-2 + Network Parameters).

|

| 111 |

+

|

| 112 |

+

*Note: You should expect the error range for VQAv2 Acc. to be less than 0.1; for COCO/NoCAPs CIDEr score to be less than 1.0.*

|

| 113 |

+

|

| 114 |

+

## Demo

|

| 115 |

+

Finally, we have offered a minimalist example to perform image captioning in a single GPU with our fine-tuned Prismer/PrismerZ checkpoint. Simply put your images under `helpers/images` (`.jpg` images), and run

|

| 116 |

+

```bash

|

| 117 |

+

python demo.py --exp_name {MODEL_NAME}

|

| 118 |

+

```

|

| 119 |

+

|

| 120 |

+

You then can see all generated modality expert labels in the `helpers/labels` folder and the generated captions in the `helpers/images` folder.

|

| 121 |

+

|

| 122 |

+

Particularly for the Prismer models, we have also offered a simple script to prettify the generated expert labels. To prettify and visualise the expert labels as well as its predicted captions, run

|

| 123 |

+

```bash

|

| 124 |

+

python demo_vis.py

|

| 125 |

+

```

|

| 126 |

+

|

| 127 |

+

*Note: Remember to set up the corresponding config in the `configs/caption.yaml` demo section. The default demo model config is for Prismer-Base.*

|

| 128 |

+

|

| 129 |

+

## Citation

|

| 130 |

+

|

| 131 |

+

If you found this code/work to be useful in your own research, please considering citing the following:

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

```bibtex

|

| 135 |

+

@article{liu2023prismer,

|

| 136 |

+

title={Prismer: A Vision-Language Model with An Ensemble of Experts},

|

| 137 |

+

author={Liu, Shikun and Fan, Linxi and Johns, Edward and Yu, Zhiding and Xiao, Chaowei and Anandkumar, Anima},

|

| 138 |

+

journal={arXiv preprint arXiv:2303.02506},

|

| 139 |

+

year={2023}

|

| 140 |

+

}

|

| 141 |

+

```

|

| 142 |

+

|

| 143 |

+

## License

|

| 144 |

+

Copyright © 2023, NVIDIA Corporation. All rights reserved.

|

| 145 |

+

|

| 146 |

+

This work is made available under the Nvidia Source Code License-NC.

|

| 147 |

+

|

| 148 |

+

The model checkpoints are shared under CC-BY-NC-SA-4.0. If you remix, transform, or build upon the material, you must distribute your contributions under the same license as the original.

|

| 149 |

+

|

| 150 |

+

For business inquiries, please visit our website and submit the form: [NVIDIA Research Licensing](https://www.nvidia.com/en-us/research/inquiries/).

|

| 151 |

+

|

| 152 |

+

## Acknowledgement

|

| 153 |

+

We would like to thank all the researchers who open source their works to make this project possible. [@bjoernpl](https://github.com/bjoernpl) for contributing an automated checkpoint download script.

|

| 154 |

+

|

| 155 |

+

## Contact

|

| 156 |

+

If you have any questions, please contact `sk.lorenmt@gmail.com`.

|

prismer/dataset/__init__.py

CHANGED

|

@@ -6,12 +6,18 @@

|

|

| 6 |

|

| 7 |

from torch.utils.data import DataLoader

|

| 8 |

|

|

|

|

| 9 |

from dataset.vqa_dataset import VQA

|

| 10 |

from dataset.caption_dataset import Caption

|

|

|

|

| 11 |

|

| 12 |

|

| 13 |

def create_dataset(dataset, config):

|

| 14 |

-

if dataset == '

|

|

|

|

|

|

|

|

|

|

|

|

|

| 15 |

train_dataset = VQA(config, train=True)

|

| 16 |

test_dataset = VQA(config, train=False)

|

| 17 |

return train_dataset, test_dataset

|

|

@@ -20,6 +26,11 @@ def create_dataset(dataset, config):

|

|

| 20 |

train_dataset = Caption(config, train=True)

|

| 21 |

test_dataset = Caption(config, train=False)

|

| 22 |

return train_dataset, test_dataset

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 23 |

|

| 24 |

|

| 25 |

def create_loader(dataset, batch_size, num_workers, train, collate_fn=None):

|

|

|

|

| 6 |

|

| 7 |

from torch.utils.data import DataLoader

|

| 8 |

|

| 9 |

+

from dataset.pretrain_dataset import Pretrain

|

| 10 |

from dataset.vqa_dataset import VQA

|

| 11 |

from dataset.caption_dataset import Caption

|

| 12 |

+

from dataset.classification_dataset import Classification

|

| 13 |

|

| 14 |

|

| 15 |

def create_dataset(dataset, config):

|

| 16 |

+

if dataset == 'pretrain':

|

| 17 |

+

dataset = Pretrain(config)

|

| 18 |

+

return dataset

|

| 19 |

+

|

| 20 |

+

elif dataset == 'vqa':

|

| 21 |

train_dataset = VQA(config, train=True)

|

| 22 |

test_dataset = VQA(config, train=False)

|

| 23 |

return train_dataset, test_dataset

|

|

|

|

| 26 |

train_dataset = Caption(config, train=True)

|

| 27 |

test_dataset = Caption(config, train=False)

|

| 28 |

return train_dataset, test_dataset

|

| 29 |

+

|

| 30 |

+

elif dataset == 'classification':

|

| 31 |

+

train_dataset = Classification(config, train=True)

|

| 32 |

+

test_dataset = Classification(config, train=False)

|

| 33 |

+

return train_dataset, test_dataset

|

| 34 |

|

| 35 |

|

| 36 |

def create_loader(dataset, batch_size, num_workers, train, collate_fn=None):

|

prismer/dataset/ade_features.pt

CHANGED

|

Binary files a/prismer/dataset/ade_features.pt and b/prismer/dataset/ade_features.pt differ

|

|

|

prismer/dataset/background_features.pt

CHANGED

|

Binary files a/prismer/dataset/background_features.pt and b/prismer/dataset/background_features.pt differ

|

|

|

prismer/dataset/caption_dataset.py

CHANGED

|

@@ -50,7 +50,7 @@ class Caption(Dataset):

|

|

| 50 |

elif self.dataset == 'demo':

|

| 51 |

img_path_split = self.data_list[index]['image'].split('/')

|

| 52 |

img_name = img_path_split[-2] + '/' + img_path_split[-1]

|

| 53 |

-

image, labels, labels_info = get_expert_labels('

|

| 54 |

|

| 55 |

experts = self.transform(image, labels)

|

| 56 |

experts = post_label_process(experts, labels_info)

|

|

|

|

| 50 |

elif self.dataset == 'demo':

|

| 51 |

img_path_split = self.data_list[index]['image'].split('/')

|

| 52 |

img_name = img_path_split[-2] + '/' + img_path_split[-1]

|

| 53 |

+

image, labels, labels_info = get_expert_labels('', self.label_path, img_name, 'helpers', self.experts)

|

| 54 |

|

| 55 |

experts = self.transform(image, labels)

|

| 56 |

experts = post_label_process(experts, labels_info)

|

prismer/dataset/classification_dataset.py

ADDED

|

@@ -0,0 +1,72 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright (c) 2023, NVIDIA Corporation & Affiliates. All rights reserved.

|

| 2 |

+

#

|

| 3 |

+

# This work is made available under the Nvidia Source Code License-NC.

|

| 4 |

+

# To view a copy of this license, visit

|

| 5 |

+

# https://github.com/NVlabs/prismer/blob/main/LICENSE

|

| 6 |

+

|

| 7 |

+

import glob

|

| 8 |

+

from torch.utils.data import Dataset

|

| 9 |

+

from dataset.utils import *

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

class Classification(Dataset):

|

| 13 |

+

def __init__(self, config, train):

|

| 14 |

+

self.data_path = config['data_path']

|

| 15 |

+

self.label_path = config['label_path']

|

| 16 |

+

self.experts = config['experts']

|

| 17 |

+

self.dataset = config['dataset']

|

| 18 |

+

self.shots = config['shots']

|

| 19 |

+

self.prefix = config['prefix']

|

| 20 |

+

|

| 21 |

+

self.train = train

|

| 22 |

+

self.transform = Transform(resize_resolution=config['image_resolution'], scale_size=[0.5, 1.0], train=True)

|

| 23 |

+

|

| 24 |

+

if train:

|

| 25 |

+

data_folders = glob.glob(f'{self.data_path}/imagenet_train/*/')

|

| 26 |

+

self.data_list = [{'image': data} for f in data_folders for data in glob.glob(f + '*.JPEG')[:self.shots]]

|

| 27 |

+

self.answer_list = json.load(open(f'{self.data_path}/imagenet/' + 'imagenet_answer.json'))

|

| 28 |

+

self.class_list = json.load(open(f'{self.data_path}/imagenet/' + 'imagenet_class.json'))

|

| 29 |

+

else:

|

| 30 |

+

data_folders = glob.glob(f'{self.data_path}/imagenet/*/')

|

| 31 |

+

self.data_list = [{'image': data} for f in data_folders for data in glob.glob(f + '*.JPEG')]