Runtime error

Commit 8ed9e1d · Parent: 35ef920

CPU only for now

- app.py +13 -13
- real_im_emb_plot.jpg +0 -0
- requirements.txt +1 -0

app.py (changed)

```diff
@@ -3,10 +3,10 @@
 
 Automatically generated by Colab.
 
-Original file is located at
-https://colab.research.google.com/drive/1I47sLakpuwERGzn-XoNct67mwiDS1mQD
 """
 
 import matplotlib.pyplot as plt
 import matplotlib
 
```

```diff
@@ -108,7 +108,7 @@ def latent_code_from_text(text,):# args):
 coded1 =torch.Tensor.long(coded1)
 with torch.no_grad():
 x0 = coded1
-x0 = x0.to(
 pooled_hidden_fea = model_vae.encoder(x0, attention_mask=(x0 > 0).float())[1]
 mean, logvar = model_vae.encoder.linear(pooled_hidden_fea).chunk(2, -1)
 latent_z = mean.squeeze(1)
```

```diff
@@ -128,9 +128,9 @@ def text_from_latent_code(latent_z):
 length= length, # Chunyuan: Fix length; or use <EOS> to complete a sentence
 temperature=.5,
 top_k=100,
-top_p=.
-device=
-decoder_tokenizer
 )
 text_x1 = tokenizer_decoder.decode(out[0,:].tolist(), clean_up_tokenization_spaces=True)
 text_x1 = text_x1.split()[1:-1]
```

```diff
@@ -158,7 +158,7 @@ encoder_config_class, encoder_model_class, encoder_tokenizer_class = MODEL_CLASS
 model_encoder = encoder_model_class.from_pretrained(encoder_path, latent_size=latent_size)
 tokenizer_encoder = encoder_tokenizer_class.from_pretrained('bert-base-cased', do_lower_case=True)
 
-model_encoder.to(
 if block_size <= 0:
 block_size = tokenizer_encoder.max_len_single_sentence # Our input block size will be the max possible for the model
 block_size = min(block_size, tokenizer_encoder.max_len_single_sentence)
```

```diff
@@ -167,7 +167,7 @@ block_size = min(block_size, tokenizer_encoder.max_len_single_sentence)
 decoder_config_class, decoder_model_class, decoder_tokenizer_class = MODEL_CLASSES['gpt2']
 model_decoder = decoder_model_class.from_pretrained(decoder_path, latent_size=latent_size)
 tokenizer_decoder = decoder_tokenizer_class.from_pretrained('gpt2', do_lower_case=False)
-model_decoder.to(
 if block_size <= 0:
 block_size = tokenizer_decoder.max_len_single_sentence # Our input block size will be the max possible for the model
 block_size = min(block_size, tokenizer_decoder.max_len_single_sentence)
```

```diff
@@ -185,10 +185,10 @@ assert tokenizer_decoder.pad_token == '<PAD>'
 
 
 # Evaluation
-model_vae = VAE(model_encoder, model_decoder, tokenizer_encoder, tokenizer_decoder, SimpleNamespace(**{'latent_size': latent_size, 'device':
 model_vae.load_state_dict(checkpoint['model_state_dict'])
 print("Pre-trained Optimus is successfully loaded")
-model_vae.to(
 model_vae = torch.compile(model_vae)
 
 l = latent_code_from_text('A photo of a mountain.')[0]
```

@@ -222,17 +222,17 @@ def generate(prompt, in_embs=None,):

|

|

| 222 |

if prompt != '':

|

| 223 |

print(prompt)

|

| 224 |

in_embs = in_embs / in_embs.abs().max() * .6 if in_embs != None else None

|

| 225 |

-

in_embs = 1 * in_embs.to(

|

| 226 |

else:

|

| 227 |

print('From embeds.')

|

| 228 |

in_embs = in_embs / in_embs.abs().max() * .6

|

| 229 |

-

in_embs = in_embs.to(

|

| 230 |

plt.close('all')

|

| 231 |

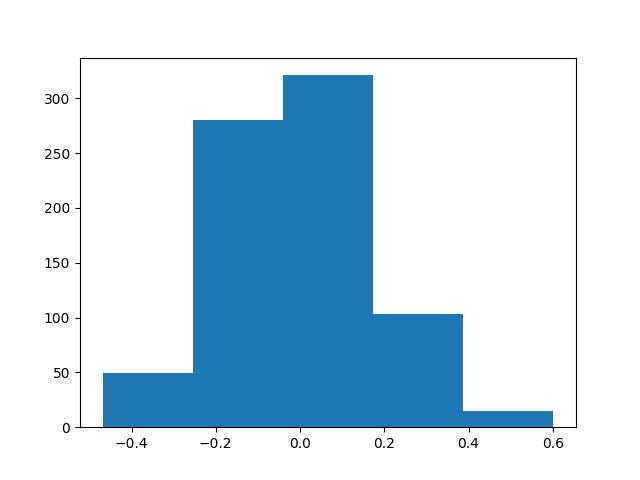

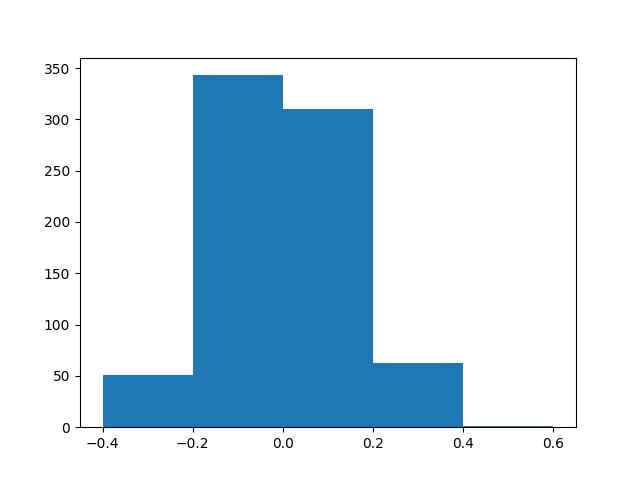

plt.hist(np.array(in_embs.detach().to('cpu').to(torch.float)).flatten(), bins=5)

|

| 232 |

plt.savefig('real_im_emb_plot.jpg')

|

| 233 |

|

| 234 |

|

| 235 |

-

text = text_from_latent_code(in_embs).replace('<unk>

|

| 236 |

in_embs = latent_code_from_text(text)[0]

|

| 237 |

print(text)

|

| 238 |

return text, in_embs.to('cpu')

|

|

|

|

```diff
@@ -3,10 +3,10 @@
 
 Automatically generated by Colab.
 
 """
 
+DEVICE = 'cpu'
+
 import matplotlib.pyplot as plt
 import matplotlib
 
```
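The commit hard-codes `DEVICE = 'cpu'`. If the Space later moves back to a GPU, one option is to resolve the device from configuration rather than editing the constant. The sketch below is an assumption, not part of app.py: the environment variable name `APP_DEVICE` and the helper `resolve_device` are hypothetical, and in the real app `cuda_available` would presumably come from `torch.cuda.is_available()`.

```python
import os


def resolve_device(env_var: str = "APP_DEVICE", cuda_available: bool = False) -> str:
    """Pick a device string, falling back to 'cpu' if CUDA is requested but absent.

    Hypothetical helper: neither `env_var` nor this function exists in app.py.
    """
    requested = os.environ.get(env_var, "cpu").strip().lower()
    if requested.startswith("cuda") and not cuda_available:
        # A GPU was requested but is not present: degrade gracefully to CPU.
        return "cpu"
    return requested


# In app.py this could become something like:
# DEVICE = resolve_device(cuda_available=torch.cuda.is_available())
DEVICE = resolve_device()
```

Defaulting to `'cpu'` keeps the behaviour of this commit when nothing is configured.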

```diff
@@ -108,7 +108,7 @@ def latent_code_from_text(text,):# args):
 coded1 =torch.Tensor.long(coded1)
 with torch.no_grad():
 x0 = coded1
+x0 = x0.to(DEVICE)
 pooled_hidden_fea = model_vae.encoder(x0, attention_mask=(x0 > 0).float())[1]
 mean, logvar = model_vae.encoder.linear(pooled_hidden_fea).chunk(2, -1)
 latent_z = mean.squeeze(1)
```
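In `latent_code_from_text`, the attention mask `(x0 > 0).float()` treats token id 0 as padding, and `.chunk(2, -1)` splits the encoder's linear output into a mean half and a log-variance half. In the lines shown, only `mean` becomes the latent code, so encoding is deterministic (no reparameterized sample is drawn). The stdlib sketch below mirrors those two tensor operations on plain lists; the function names and the token ids are hypothetical.

```python
from typing import List, Tuple


def attention_mask(token_ids: List[int]) -> List[float]:
    """Mirror of `(x0 > 0).float()`: 1.0 for real tokens, 0.0 for padding id 0."""
    return [1.0 if t > 0 else 0.0 for t in token_ids]


def split_mean_logvar(features: List[float]) -> Tuple[List[float], List[float]]:
    """Mirror of `.chunk(2, -1)` on a 1-D vector of length 2 * latent_size."""
    if len(features) % 2:
        # Stricter than torch.chunk, which would split an odd length unevenly.
        raise ValueError("expected an even-length vector")
    half = len(features) // 2
    return features[:half], features[half:]


ids = [101, 2023, 2003, 102, 0, 0]   # illustrative ids; trailing zeros are padding
mask = attention_mask(ids)           # [1.0, 1.0, 1.0, 1.0, 0.0, 0.0]
mean, logvar = split_mean_logvar([0.1, -0.2, 0.3, 0.0, -1.0, -2.0])
latent = mean                        # the app keeps the mean as the latent code
```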

```diff
@@ -128,9 +128,9 @@ def text_from_latent_code(latent_z):
 length= length, # Chunyuan: Fix length; or use <EOS> to complete a sentence
 temperature=.5,
 top_k=100,
+top_p=.98,
+device=DEVICE,
+decoder_tokenizer=tokenizer_decoder
 )
 text_x1 = tokenizer_decoder.decode(out[0,:].tolist(), clean_up_tokenization_spaces=True)
 text_x1 = text_x1.split()[1:-1]
```
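The decoding call now passes `temperature=.5`, `top_k=100` and `top_p=.98`. The body of the sampling function is not part of this diff, so the sketch below shows only the common temperature → top-k → nucleus (top-p) filtering scheme over a plain list of logits. `filter_top_k_top_p` and `sample_token` are hypothetical names, and the real implementation may differ in details such as tie handling or exactly where the top-p boundary falls.

```python
import math
import random
from typing import Dict, List


def filter_top_k_top_p(logits: List[float], temperature: float = 0.5,
                       top_k: int = 100, top_p: float = 0.98) -> Dict[int, float]:
    """Return {token_index: probability} after temperature, top-k and top-p filtering."""
    # Temperature < 1 sharpens the distribution; > 1 flattens it.
    scaled = [x / temperature for x in logits]
    # Numerically stable softmax.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Rank tokens by probability, highest first, and keep at most top_k of them.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k > 0:
        order = order[:top_k]

    # Nucleus step: keep the smallest prefix whose renormalized mass reaches top_p.
    k_mass = sum(probs[i] for i in order)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i] / k_mass
        if cumulative >= top_p:
            break

    # Renormalize over the surviving tokens.
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


def sample_token(logits: List[float], rng: random.Random, **kwargs) -> int:
    """Draw one token index from the filtered distribution."""
    dist = filter_top_k_top_p(logits, **kwargs)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]
```

For example, with logits `[2.0, 1.0, 0.0, -1.0]` at temperature 1.0, `top_k=2` and `top_p=0.5`, the top token alone holds about 73% of the top-2 mass, so only index 0 survives the nucleus step.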

```diff
@@ -158,7 +158,7 @@ encoder_config_class, encoder_model_class, encoder_tokenizer_class = MODEL_CLASS
 model_encoder = encoder_model_class.from_pretrained(encoder_path, latent_size=latent_size)
 tokenizer_encoder = encoder_tokenizer_class.from_pretrained('bert-base-cased', do_lower_case=True)
 
+model_encoder.to(DEVICE)
 if block_size <= 0:
 block_size = tokenizer_encoder.max_len_single_sentence # Our input block size will be the max possible for the model
 block_size = min(block_size, tokenizer_encoder.max_len_single_sentence)
```

```diff
@@ -167,7 +167,7 @@ block_size = min(block_size, tokenizer_encoder.max_len_single_sentence)
 decoder_config_class, decoder_model_class, decoder_tokenizer_class = MODEL_CLASSES['gpt2']
 model_decoder = decoder_model_class.from_pretrained(decoder_path, latent_size=latent_size)
 tokenizer_decoder = decoder_tokenizer_class.from_pretrained('gpt2', do_lower_case=False)
+model_decoder.to(DEVICE)
 if block_size <= 0:
 block_size = tokenizer_decoder.max_len_single_sentence # Our input block size will be the max possible for the model
 block_size = min(block_size, tokenizer_decoder.max_len_single_sentence)
```
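The encoder and decoder hunks clamp the same `block_size` variable. Because the encoder step always leaves it positive, the decoder's `if block_size <= 0` branch can no longer fire, and the final value is the smallest of the requested size and the two tokenizers' `max_len_single_sentence` limits. The plain-Python sketch below reproduces that sequence; `clamp_block_size` and `shared_block_size` are hypothetical helpers, and the limits used are illustrative numbers rather than values read from the real tokenizers.

```python
def clamp_block_size(block_size: int, max_len_single_sentence: int) -> int:
    """Same logic as each hunk: a non-positive value means 'use the model maximum'."""
    if block_size <= 0:
        block_size = max_len_single_sentence
    return min(block_size, max_len_single_sentence)


def shared_block_size(requested: int, encoder_max: int, decoder_max: int) -> int:
    """Apply the encoder clamp, then the decoder clamp, to one shared variable."""
    block_size = clamp_block_size(requested, encoder_max)
    # block_size is now positive, so the decoder's "<= 0" branch is skipped.
    return clamp_block_size(block_size, decoder_max)


print(shared_block_size(-1, 510, 1024))   # 510
print(shared_block_size(64, 510, 1024))   # 64
```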

```diff
@@ -185,10 +185,10 @@ assert tokenizer_decoder.pad_token == '<PAD>'
 
 
 # Evaluation
+model_vae = VAE(model_encoder, model_decoder, tokenizer_encoder, tokenizer_decoder, SimpleNamespace(**{'latent_size': latent_size, 'device':DEVICE}))
 model_vae.load_state_dict(checkpoint['model_state_dict'])
 print("Pre-trained Optimus is successfully loaded")
+model_vae.to(DEVICE).to(torch.bfloat16)
 model_vae = torch.compile(model_vae)
 
 l = latent_code_from_text('A photo of a mountain.')[0]
```
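This hunk casts the whole VAE to `torch.bfloat16` after moving it to `DEVICE`. bfloat16 keeps float32's 8 exponent bits but only 7 explicit mantissa bits, so values carry roughly 2 to 3 significant decimal digits. The stdlib sketch below emulates a float32 → bfloat16 conversion with round-to-nearest-even on the bit pattern. It is an approximation for illustration only (NaN and infinity handling is omitted) and not PyTorch's own code path.

```python
import struct


def to_bfloat16(x: float) -> float:
    """Round a Python float to the nearest bfloat16 value (round-to-nearest-even).

    Illustrative emulation: the value is first packed as float32, then the low
    16 bits of the float32 pattern are rounded away. NaN/inf are not handled.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    lsb = (bits >> 16) & 1             # makes ties round to an even mantissa
    bits = (bits + 0x7FFF + lsb) & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


for value in (1.0, 0.6, 3.14159):
    print(value, "->", to_bfloat16(value))
```

This is also why the histogram line in `generate` converts back with `.to(torch.float)` before handing the values to NumPy: the plotted values are already rounded to bfloat16 precision.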

```diff
@@ -222,17 +222,17 @@ def generate(prompt, in_embs=None,):
 if prompt != '':
 print(prompt)
 in_embs = in_embs / in_embs.abs().max() * .6 if in_embs != None else None
+in_embs = 1 * in_embs.to(DEVICE) + 1 * latent_code_from_text(prompt)[0] if in_embs != None else latent_code_from_text(prompt)[0]
 else:
 print('From embeds.')
 in_embs = in_embs / in_embs.abs().max() * .6
+in_embs = in_embs.to(DEVICE).to(torch.bfloat16)
 plt.close('all')
 plt.hist(np.array(in_embs.detach().to('cpu').to(torch.float)).flatten(), bins=5)
 plt.savefig('real_im_emb_plot.jpg')
 
 
+text = ' '.join(text_from_latent_code(in_embs).replace( '<unk>', '').split())
 in_embs = latent_code_from_text(text)[0]
 print(text)
 return text, in_embs.to('cpu')
```
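In `generate`, incoming embeddings are rescaled so that their largest absolute value is 0.6, optionally summed element-wise with the prompt's latent code, and the decoded text is cleaned by deleting `<unk>` markers and collapsing runs of whitespace. The list-based sketch below mirrors those three steps without PyTorch. The function names are hypothetical, and the zero-vector guard is an addition: the original tensor expression would divide 0 by 0 and produce NaNs in that case.

```python
from typing import List


def scale_to_max_abs(values: List[float], target: float = 0.6) -> List[float]:
    """Mirror of `x / x.abs().max() * .6`: the largest magnitude becomes `target`."""
    peak = max(abs(v) for v in values)
    if peak == 0:
        # Added guard; the tensor version would yield NaNs here.
        raise ValueError("cannot rescale an all-zero vector")
    return [v / peak * target for v in values]


def combine(embedding: List[float], prompt_latent: List[float]) -> List[float]:
    """Mirror of `1 * in_embs + 1 * latent_code_from_text(prompt)[0]`: element-wise sum."""
    if len(embedding) != len(prompt_latent):
        raise ValueError("latent vectors must have the same length")
    return [a + b for a, b in zip(embedding, prompt_latent)]


def clean_generated_text(raw: str) -> str:
    """Mirror of `' '.join(raw.replace('<unk>', '').split())`."""
    return " ".join(raw.replace("<unk>", "").split())


print(scale_to_max_abs([1.0, -2.0, 0.5]))
print(clean_generated_text("a <unk> photo  of<unk> a mountain"))  # a photo of a mountain
```

Note that after the prompt latent is added, the combined vector's largest magnitude is generally no longer 0.6; the rescaling applies only to the incoming embedding.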

real_im_emb_plot.jpg (changed)

requirements.txt (changed)

```diff
@@ -1,4 +1,5 @@
 gradio
 numpy
 scikit-learn
 pandas
```

```diff
@@ -1,4 +1,5 @@
 gradio
+boto3
 numpy
 scikit-learn
 pandas
```