ryanrahmadifa committed 79e1719 (parent: d93c9b1)

Added files

This view is limited to 50 files because it contains too many changes.

- README.md +5 -6

- app.py +6 -0

- convert_first.csv +150 -0

- data/all_platts_1week_clean.csv +0 -0

- data/dated_brent_allbate.csv +0 -0

- data/results_platts_09082024_clean.csv +0 -0

- data/topresults_platts_09082024_clean.csv +12 -0

- evaluation.xlsx +0 -0

- experimentation_mlops/example/MLProject +36 -0

- experimentation_mlops/example/als.py +69 -0

- experimentation_mlops/example/etl_data.py +42 -0

- experimentation_mlops/example/load_raw_data.py +42 -0

- experimentation_mlops/example/main.py +107 -0

- experimentation_mlops/example/python_env.yaml +10 -0

- experimentation_mlops/example/spark-defaults.conf +1 -0

- experimentation_mlops/example/train_keras.py +116 -0

- experimentation_mlops/mlops/MLProject +13 -0

- experimentation_mlops/mlops/data/2week_news_data.csv +0 -0

- experimentation_mlops/mlops/data/2week_news_data.json +0 -0

- experimentation_mlops/mlops/data/2week_news_data.parquet +3 -0

- experimentation_mlops/mlops/data/2week_news_data.xlsx +0 -0

- experimentation_mlops/mlops/data/2week_news_data.zip +3 -0

- experimentation_mlops/mlops/desktop.ini +4 -0

- experimentation_mlops/mlops/end-to-end.ipynb +0 -0

- experimentation_mlops/mlops/evaluation.py +42 -0

- experimentation_mlops/mlops/ingest_convert.py +51 -0

- experimentation_mlops/mlops/ingest_request.py +54 -0

- experimentation_mlops/mlops/main.py +104 -0

- experimentation_mlops/mlops/ml-doc.md +59 -0

- experimentation_mlops/mlops/modules/transformations.py +39 -0

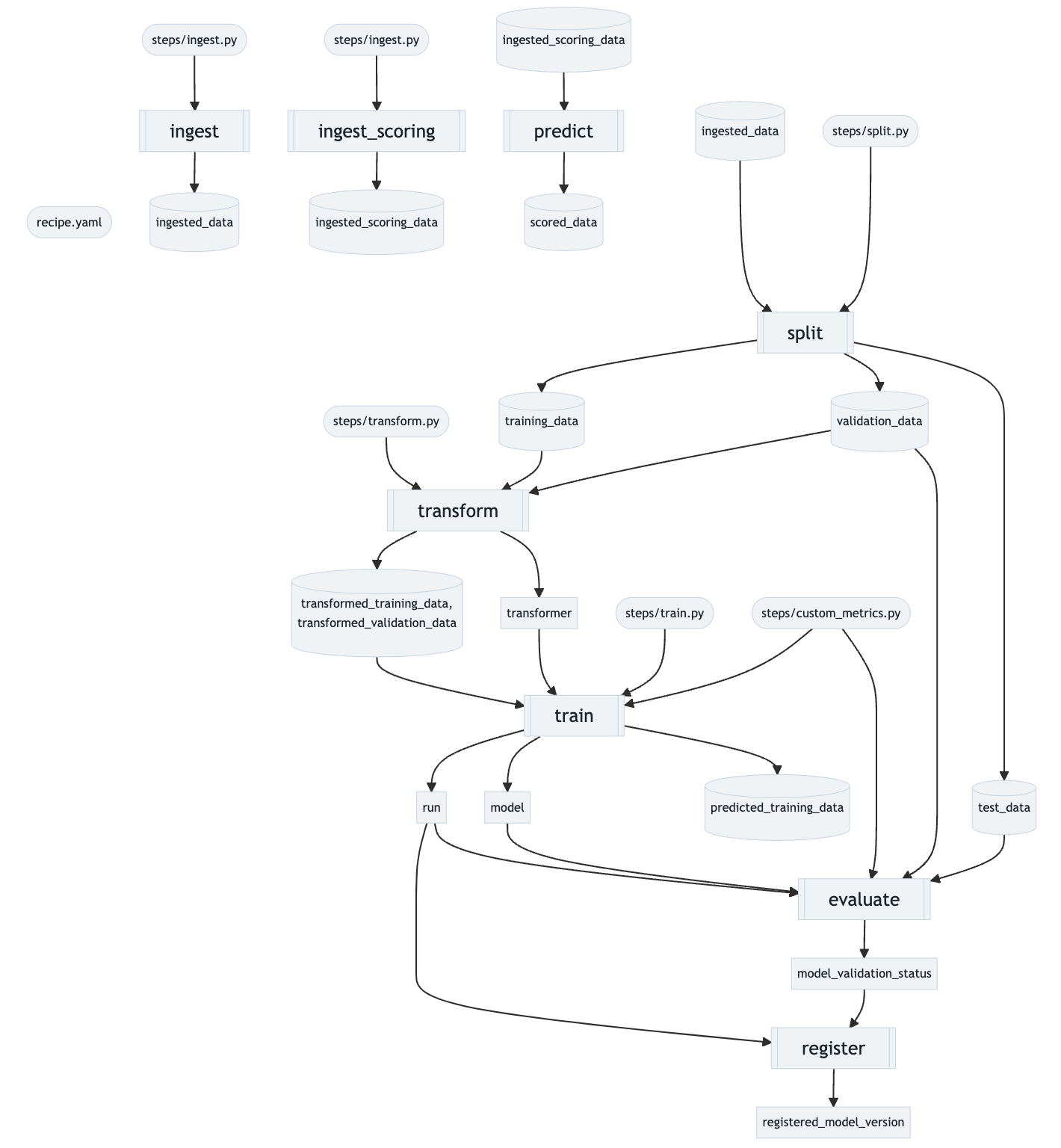

- experimentation_mlops/mlops/pics/pipeline.png +0 -0

- experimentation_mlops/mlops/python_env.yaml +11 -0

- experimentation_mlops/mlops/requirements.txt +32 -0

- experimentation_mlops/mlops/spark-defaults.conf +1 -0

- experimentation_mlops/mlops/test.ipynb +490 -0

- experimentation_mlops/mlops/train.py +166 -0

- experimentation_mlops/mlops/transform.py +85 -0

- modules/__init__.py +0 -0

- modules/__pycache__/__init__.cpython-39.pyc +0 -0

- modules/__pycache__/data_preparation.cpython-39.pyc +0 -0

- modules/__pycache__/semantic.cpython-39.pyc +0 -0

- modules/data_preparation.py +86 -0

- modules/semantic.py +198 -0

- page_1.py +85 -0

- page_2.py +63 -0

- page_3.py +79 -0

- price_forecasting_ml/NeuralForecast.ipynb +0 -0

- price_forecasting_ml/__pycache__/train.cpython-38.pyc +0 -0

- price_forecasting_ml/artifacts/crude_oil_8998a364-2ecc-483d-8079-f04d455b4522/forecast_plot.jpg +0 -0

- price_forecasting_ml/artifacts/crude_oil_8998a364-2ecc-483d-8079-f04d455b4522/ingested_dataset.csv +0 -0

README.md

CHANGED

```diff
@@ -1,14 +1,13 @@
 ---
-title:
-emoji:
-colorFrom:
-colorTo:
+title: Trend Prediction App
+emoji: 🚀
+colorFrom: indigo
+colorTo: pink
 sdk: streamlit
-sdk_version: 1.
+sdk_version: 1.37.1
 app_file: app.py
 pinned: false
 license: apache-2.0
-short_description: Bioma AI Prototype
 ---
 
 Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
```
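The README front matter is the Space's configuration block, and the Hub reads it as YAML. The sketch below is a quick way to check what the new header resolves to. It extracts the flat `key: value` pairs that sit between the two `---` lines. `parse_front_matter` is a hypothetical helper and is not part of any Hugging Face library. It only handles single-line scalar values, so a real tool should use a proper YAML parser.

```python
from typing import Dict


def parse_front_matter(text: str) -> Dict[str, str]:
    """Return the key/value pairs between the first two '---' lines.

    Hypothetical helper: assumes flat, single-line `key: value` entries only.
    This is not a general YAML parser.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}  # no front matter block at the top of the file
    config: Dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":  # closing delimiter ends the block
            break
        key, sep, value = line.partition(":")
        if sep:  # skip lines that contain no colon
            config[key.strip()] = value.strip()
    return config


# The README header as it stands after this commit.
README = """---
title: Trend Prediction App
emoji: 🚀
colorFrom: indigo
colorTo: pink
sdk: streamlit
sdk_version: 1.37.1
app_file: app.py
pinned: false
license: apache-2.0
---

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
"""

config = parse_front_matter(README)
print(config["sdk"], config["sdk_version"], config["app_file"])
```

The values come back as plain strings, so `pinned` is the text `"false"` and not a boolean. This is one reason a YAML parser is the better choice beyond a quick check.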

app.py

ADDED

```diff
@@ -0,0 +1,6 @@
+import streamlit as st
+
+pg = st.navigation({"Bioma AI PoC":[st.Page("page_1.py", title="Semantic Analysis"),
+                                    st.Page("page_2.py", title="Price Forecasting"),
+                                    st.Page("page_3.py", title="MLOps Pipeline")]})
+pg.run()
```

convert_first.csv

ADDED

```diff
@@ -0,0 +1,150 @@
+,headline,topic_verification
+0,SPAIN DATA: H1 crude imports rise 11% to 1.4 million b/d,Crude Oil
+1,REFINERY NEWS: Host of Chinese units back from works; Jinling maintenance in Nov-Dec,Macroeconomic & Geopolitics
+2,REFINERY NEWS ROUNDUP: Mixed runs in Asia-Pacific,Macroeconomic & Geopolitics
+3,Physical 1%S fuel oil Med-North spread hits record high on competitive bidding in Platts MOC,Middle Distillates
+4,"Indian ports see Jan-July bunker, STS calls up 64% on year, monsoon hits July demand",Macroeconomic & Geopolitics
+5,LNG bunker prices in Europe hit 8-month high amid rising demand,Light Ends
+6,REFINERY NEWS: Wellbred Trading acquires La Nivernaise de Raffinage in France,Heavy Distillates
+7,Wellbred Trading buys French diesel refinery that runs on used cooking oil,Macroeconomic & Geopolitics
+8,EU's climate monitor says 2024 'increasingly likely' to be warmest year on record,Macroeconomic & Geopolitics
+9,"European LPG discount to naphtha narrows, shifting petchem feedstock appetite",Light Ends
+10,REFINERY NEWS: Thai Oil’s Q2 utilization drops on planned CDU shutdown,Crude Oil
+11,South Korea’s top oil refiner SK Innovation joins carbon storage project in Australia,Middle Distillates
+12,CRUDE MOC: Middle East sour crude cash differentials hit month-to-date highs,Crude Oil
+13,CNOOC approves 100 Bcm of proven reserves at South China Sea gas field,Crude Oil
+14,"Singapore to work with Shell’s refinery, petrochemicals asset buyers to decarbonize: minister",Light Ends
+15,BLM federal Montana-Dakotas oil and gas lease sale nets nearly $24 mil: Energynet.com,Middle Distillates
+16,REFINERY NEWS: Oman's Sohar undergoes unplanned shutdown: sources,Crude Oil
+17,"OIL FUTURES: Crude prices higher as US stockpiles extend decline, demand concerns cap gains",Crude Oil
+18,Qatar announces acceptance of Sep LPG cargoes with no cuts or delays heard,Light Ends
+19,"South Korea aims for full GCC FTA execution by year-end, refiners hopeful for cheaper sour crude",Crude Oil
+20,"Indonesia sets Minas crude price at $84.95/b for July, rising $3.35/b from June",Crude Oil
+21,Cathay Pacific H1 2024 passenger traffic rises 36% on year; Hong Kong’s jet fuel demand bolstered,Middle Distillates
+22,US DATA: Total ULSD stocks near a six-month high as demand continues to fall,Middle Distillates
+23,US DATA: Product supplied of propane and propylene reach three-month high,Light Ends
+24,Internatonal Seaways focused on replacing aging fleet during second quarter: CEO,Crude Oil
+25,"Devon Energy's oil output hits all-time record high from Delaware, Eagle Ford operations",Crude Oil
+26,"Brazil's Prio still waiting on IBAMA license approvals to boost oil, gas output",Crude Oil
+27,REFINERY NEWS: Delek US sees Q3 refinery utilization dip from record Q2 highs,Light Ends
+28,"OIL FUTURES: Crude rallies as traders eye tighter US supply, global financial market stabilization",Crude Oil
+29,"Prompt DFL, CFD contracts rally",Crude Oil
+30,"REFINERY NEWS: Petroperú sees 2Q refined fuel sales drop 4.4% on year to 93,700 b/d",Middle Distillates
+31,W&T Offshore nears close of new US Gulf of Mexico drilling joint venture,Crude Oil
+32,"Imrproved efficiencies, continued M&A activity to drive growth for Permian Resources",Heavy Distillates
+33,Mexico's Pemex to explore deposit adjacent to major onshore gas field Quesqui,Middle Distillates
+34,REFINERY NEWS: Par Pacific reports softer south Rockies results as Midwest barrels spill into region,Middle Distillates
+35,"Suncor sees improved H2 oil and gas output, completes major Q2 turnarounds",Middle Distillates
+36,"Brazil's Petrobras, Espirito Santo state to study potential CCUS, hydrogen hubs",Middle Distillates
+37,"Argentina raises biodiesel, ethanol prices for blending by 1.5% in August",Middle Distillates
+38,Bolivia offers tax breaks to import equipment for biodiesel plants following fuel shortages,Light Ends
+39,"US DATA: West Coast fuel oil stocks hit a six-week low, EIA says",Middle Distillates
+40,Iraq’s SOMO cuts official selling prices for September-loading crude oil for Europe,Crude Oil
+41,Nigeria's Dangote refinery plans to divest 12.75% stake: ratings agency,Middle Distillates
+42,REFINERY NEWS: Kazakhstan's Atyrau processes 2.9 mil mt crude in H1,Middle Distillates
+43,REFINERY NEWS: Thailand's IRPC reports Q2 utilization of 94%,Light Ends
+44,DNO reports higher Q2 crude production in Iraq's Kurdish region,Crude Oil
+45,"ADNOC L&S expects ‘strong rates’ in tankers, dry-bulk, containers in 2024",Crude Oil
+46,WAF crude tanker rates hit 10-month lows amid sluggish inquiry levels,Crude Oil
+47,Senegal's inaugural crude stream Sangomar to load 3.8 mil barrels in September,Crude Oil
+48,China's July vegetable oil imports rise 3% on month as buyers replenish domestic stocks,Macroeconomic & Geopolitics
+49,CRUDE MOC: Middle East sour crude cash differentials rebound,Crude Oil
+50,OIL FUTURES: Crude oil recovers as financial markets improve,Crude Oil
+51,"Tullow sees rise in crude output, profits on-year in H1 2024",Crude Oil
+52,Russia's Taman port June-July oil products throughput up 26% on year,Heavy Distillates
+53,JAPAN DATA: Oil product exports rise 4.5% on week to 2.42 mil barrels,Crude Oil
+54,REFINERY NEWS: Petro Rabigh to be upgraded after Aramco takes control,Crude Oil
+55,Canada's ShaMaran closes acquisition of Atrush oil field,Crude Oil
+56,CHINA DATA: July natural gas imports rise 5% on year to 10.9 mil mt,Light Ends
+57,"OIL FUTURES: Crude stabilizes on technical bounce, supply uncertainty",Crude Oil
+58,JAPAN DATA: Oil product stocks rise 0.8% on week to 55.32 mil barrels,Crude Oil
+59,Japan cuts Aug 8-14 fuel subsidy by 21% as crude prices drop,Middle Distillates
+60,JAPAN DATA: Refinery runs rise to 67% over July 28-Aug 3 on higher crude throughput,Light Ends
+61,Asian reforming spread hits over two-year low as gasoline prices lag naphtha,Light Ends
+62,Asia medium sulfur gasoil differential weakens as Indonesia demand tapers,Middle Distillates
+63,"QatarEnergy raises Sep Land, Marine crude OSPs by 45-75 cents/b from Aug",Heavy Distillates
+64,ADNOC sets Murban Sep OSP $1.28/b higher on month at $83.80/b,Heavy Distillates
+65,"Diamondback Energy keeps pushing well drilling, completion efficiencies in Q2",Middle Distillates
+66,"Genel Energy’s oil production from Tawke field increases to 19,510 b/d in 1H 2024",Middle Distillates
+67,Longer laterals and higher well performance drive Rocky Mountain production: Oneok,Light Ends
+68,US DOE seeks to buy 3.5 million barrels of crude for delivery to SPR in January 2025,Crude Oil
+69,"FPSO Maria Quiteria arrives offshore Brazil, to reduce emissions: Petrobras",Middle Distillates
+70,OIL FUTURES: Crude edges higher as market stabilizes amid Middle Eastern supply concerns,Crude Oil
+71,"US EIA lowers 2024 oil price outlook by $2/b, but still predicts increases",Crude Oil
+72,"Shell, BP to fund South Africa's Sapref refinery operations in government takeover",Light Ends
+73,"Indian Oil cancels tender to build a 10,000 mt/yr renewable hydrogen plant",Light Ends
+74,"Brazil's Prio July oil equivalent output falls 31.7% on maintenance, shuttered wells",Crude Oil
+75,Eni follows Ivory Coast discoveries with four new licenses,Crude Oil
+76,EU DATA: MY 2024-25 soybean meal imports rise 8% on year as of Aug 4,Macroeconomic & Geopolitics
+77,"Greek PPC to buy a 600 MW Romanian wind farm, portfolio from Macquarie-owned developer",Macroeconomic & Geopolitics
+78,Vitol to take Italian refiner Saras private after acquiring 51% stake,Macroeconomic & Geopolitics
+79,Mediterranean sweet crude market shows muted response to Sharara shutdown,Macroeconomic & Geopolitics
+80,REFINERY NEWS: Vitol acquires 51% in Italian refiner Saras,Macroeconomic & Geopolitics
+81,Rotterdam LNG bunkers spread with VLSFO narrows to 2024 low,Light Ends
+82,Argentina’s YPF finds buyers for 15 maturing conventional blocks as it focuses on Vaca Muerta,Heavy Distillates
+83,REFINERY NEWS ROUNDUP: Nigerian plants in focus,Macroeconomic & Geopolitics
+84,"REFINERY NEWS: Valero shuts CDU, FCCU at McKee refinery for planned work",Macroeconomic & Geopolitics
+85,Kazakhstan extends ban on oil products exports by truck for six months,Macroeconomic & Geopolitics
+86,Physical Hi-Lo spread hits 3 month high amid prompt LSFO demand,Heavy Distillates
+87,CRUDE MOC: Middle East sour crude cash differentials slip to fresh lows,Crude Oil
+88,"Nigeria launches new Utapate crude grade, first cargo heads to Spain",Crude Oil
+89,REFINERY NEWS: Turkish Tupras Q2 output rises 15% on the quarter and year,Middle Distillates
+90,"CHINA DATA: Independent refineries’ Iranian crude imports fall in July, ESPO inflows rebound",Crude Oil
+91,Gunvor acquires TotalEnergies' 50% stake in Pakistan retail fuel business,Middle Distillates
+92,INTERVIEW: Coal to remain a dominant power source in India: Menar MD,Macroeconomic & Geopolitics
+93,OIL FUTURES: Crude price holds steady as demand expectations cap gains,Crude Oil
+94,Fujairah’s HSFO August HSFO ex-wharf premiums slip; stocks adequate,Heavy Distillates
+95,JAPAN DATA: US crude imports more than double in March as Middle East dependency eases,Crude Oil
+96,Dubai crude futures traded volume on TOCOM rebounds in July from record low,Crude Oil
+97,Japan's spot electricity price retreats 8% as temperatures ease,Macroeconomic & Geopolitics
+98,"HONG KONG DATA: June oil product imports surge 32% on month to 226,475 barrels",Crude Oil
+99,NextDecade signs contract with Bechtel to build Rio Grande LNG expansion,Light Ends
+100,"Kosmos sees 2024 total output of 90,000 boe/d, despite Q2 operations thorns: CEO",Crude Oil
+101,"Dated Brent reaches two-month low Aug. 5 as physical, derivatives prices slide on day",Middle Distillates
+102,"Alaska North Slope crude output up in July, but long-term decline continues",Crude Oil
+103,Balance-month DFL contract slips to seven-week low in bearish sign for physical crude fundamentals,Crude Oil
+104,Iraqi Kurdistan officials order crackdown on illegal refineries over pollution,Macroeconomic & Geopolitics
+105,Rhine barge cargo navigation limits set to kick in amid dryer weather,Middle Distillates
+106,Bolivia returns diesel supplies to normal following shortages,Middle Distillates
+107,OCI optimistic about methanol demand driven by decarbonization efforts,Light Ends
+108,Mitsubishi to supply turbine for 30% hydrogen co-firing in Malaysia power plant,Middle Distillates
+109,ATLANTIC LNG: Key market indicators for Aug. 5-9,Light Ends
+110,"Eurobob swap, gas-nap spread falls below 6-month low amid crude selloff",Light Ends
+111,EMEA PETROCHEMICALS: Key market indicators for Aug 5-9,Light Ends
+112,EMEA LIGHT ENDS: Key market indicators for Aug 5 – 9,Light Ends
+113,EUROPE AND AFRICA RESIDUAL AND MARINE FUEL: Key market indicators Aug 5-9,Heavy Distillates
+114,TURKEY DATA: June crude flows via BTC pipeline up 8.1% on month,Crude Oil
+115,EMEA AGRICULTURE: Key market indicators for Aug 5–9,Macroeconomic & Geopolitics
+116,OIL FUTURES: Crude oil faces downward pressure amid wider weakness in financial markets,Crude Oil
+117,Woodside to acquire OCI’s low carbon ammonia project with CO2 capture in US,Middle Distillates
+118,Maire secures feasibility study for sustainable aviation fuel project in Indonesia,Middle Distillates
+119,CRUDE MOC: Middle East sour crude cash differentials plunge on risk-off sentiment,Middle Distillates
+120,"Zhoushan LSFO storage availability rises for 3rd month in Aug, hits record high",Middle Distillates
+121,Oil storage in Russia's Rostov region hit by drone strike,Macroeconomic & Geopolitics
+122,WAF TRACKING: Nigerian crude exports to Netherlands top 5-year high in July,Crude Oil
+123,"Vietnam’s Hai Linh receives license to import, export LNG",Light Ends
+124,Japan's Idemitsu could restart Tokuyama steam cracker on Aug 11,Light Ends
+125,Indonesia's biodiesel output up 12% in H1 on increased domestic mandates: APROBI,Middle Distillates
+126,CHINA DATA: Independent refiners' July feedstocks imports hit 3-month low at 3.65 mil b/d,Light Ends
+127,"Singapore’s Aug ex-wharf term LSFO premiums rise, demand moderate",Heavy Distillates
+128,"OIL FUTURES: Crude slumps as market volatility rages on recession, Middle East risks",Crude Oil
+129,Pakistan's HSFO exports nearly triple as focus shifts to cheaper power sources,Heavy Distillates
+130,"TAIWAN DATA: June oil products demand falls 3% on month to 758,139 b/d",Light Ends
+131,REFINERY NEWS: Japan's Cosmo restarts No. 1 Chiba CDU after glitches,Crude Oil
+132,ASIA PETROCHEMICALS: Key market indicators for Aug 5-9,Light Ends
+133,DME Oman crude futures traded volume rises for seventh straight month in July,Crude Oil
+134,ICE front-month Singapore gasoline swaps open interest rises 14.6% on month in July,Light Ends
+135,ASIA OCTANE: Key market indicators for Aug 5-9,Light Ends
+136,ICE Dubai crude futures July total traded volume rises 11.4% on month,Crude Oil
+137,"Lower-than-expected Aramco Sep OSPs a nod to weak Asian market, OPEC+ cut unwind",Crude Oil
+138,ASIA CRUDE OIL: Key market indicators for Aug 5-8,Crude Oil
+139,ASIA LIGHT ENDS: Key market indicators for Aug 5-8,Light Ends
+140,China fuel oil quotas decline seen supporting Q3 LSFO premiums in Zhoushan,Middle Distillates
+141,South Korea's short-term diesel demand under pressure on e-commerce firms' bankruptcy,Middle Distillates
+142,ICE front-month Singapore 10 ppm gasoil swap open interest rebounds 2% on month in July,Middle Distillates
+143,Saudi Aramco maintains or raises Asia-bound Sep crude OSPs by 10-20 cents/b,Crude Oil
+144,ASIA MIDDLE DISTILLATES: Key market indicators for Aug 5-8,Middle Distillates
+145,ICE front-month Singapore HSFO open interest rises 19.6% on month in July,Heavy Distillates
+146,REFINERY NEWS: Fort Energy at Fujairah ‘remains operational’,Macroeconomic & Geopolitics
+147,Container ship Groton attacked near Yemen amid growing Middle East security risks,Macroeconomic & Geopolitics
+148,Oil depot in Russia’s Belgorod region hit by drone strike,Macroeconomic & Geopolitics
```
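`convert_first.csv` pairs each headline with a single `topic_verification` label. Before using the file for evaluation, a natural first check is how the labels are distributed. The sketch below counts them with the standard library. It makes three assumptions: the file keeps the header shown in the diff, it is UTF-8 encoded, and the empty first header cell is an index column, as pandas writes it. `topic_counts` is a hypothetical helper. The sample holds the first five data rows copied from the diff.

```python
import csv
import io
from collections import Counter
from typing import Iterable


def topic_counts(lines: Iterable[str]) -> Counter:
    """Count headlines per `topic_verification` label.

    Hypothetical helper; `lines` can be an open file or any iterable of CSV lines.
    """
    # The first header cell is empty (it looks like an index column); DictReader
    # keys that column as "" and it is ignored here.
    reader = csv.DictReader(lines)
    return Counter(row["topic_verification"] for row in reader)


# The first five data rows of convert_first.csv, copied from the diff.
SAMPLE = """,headline,topic_verification
0,SPAIN DATA: H1 crude imports rise 11% to 1.4 million b/d,Crude Oil
1,REFINERY NEWS: Host of Chinese units back from works; Jinling maintenance in Nov-Dec,Macroeconomic & Geopolitics
2,REFINERY NEWS ROUNDUP: Mixed runs in Asia-Pacific,Macroeconomic & Geopolitics
3,Physical 1%S fuel oil Med-North spread hits record high on competitive bidding in Platts MOC,Middle Distillates
4,"Indian ports see Jan-July bunker, STS calls up 64% on year, monsoon hits July demand",Macroeconomic & Geopolitics
"""

counts = topic_counts(io.StringIO(SAMPLE))
for topic, n in counts.most_common():
    print(f"{topic}: {n}")

# For the full file (path and encoding are assumptions):
# with open("convert_first.csv", newline="", encoding="utf-8") as f:
#     print(topic_counts(f))
```

The quoted headline in row 4 contains commas. This is why the sketch relies on the `csv` module rather than splitting each line on `,`.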

data/all_platts_1week_clean.csv

ADDED

The diff for this file is too large to render.

data/dated_brent_allbate.csv

ADDED

The diff for this file is too large to render.

data/results_platts_09082024_clean.csv

ADDED

The diff for this file is too large to render.
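The next file, `data/topresults_platts_09082024_clean.csv`, stores per-article model outputs. Each row has predicted and verified labels for both topic and trend, plus three sentiment scores. A straightforward check that these paired columns allow is the share of rows where prediction and verification agree. The sketch below computes that share. It assumes the column names in the file's header, and `label_agreement` is a hypothetical helper, not code from this commit. The two sample rows are copied from the diff; their `body` field is the literal string `nan`.

```python
import csv
import io
from typing import Dict, List


def label_agreement(rows: List[Dict[str, str]], predicted: str, verified: str) -> float:
    """Share of rows whose predicted label equals the verified label (hypothetical helper)."""
    if not rows:
        raise ValueError("no rows to compare")
    matches = sum(row[predicted] == row[verified] for row in rows)
    return matches / len(rows)


# Two rows copied from topresults_platts_09082024_clean.csv.
SAMPLE = """,body,headline,updatedDate,topic_prediction,topic_verification,negative_score,neutral_score,positive_score,trend_prediction,trend_verification
1,nan,"Non-OPEC July output up 30,000 b/d at 14.14 mil b/d: Platts survey",2024-08-08 14:00:12+00:00,Macroeconomic & Geopolitics,Macroeconomic & Geopolitics,0.9932350855162315,0.024123551368425825,0.12366691833078211,Bearish,Bearish
5,nan,"Iraq, Russia, Kazakhstan overproduce in first month of compensation cuts: Platts survey",2024-08-08 14:00:10+00:00,Crude Oil,Crude Oil,0.6294152478714086,0.06749551758023337,0.927845667131505,Bullish,Bullish
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE)))
topic_acc = label_agreement(rows, "topic_prediction", "topic_verification")
trend_acc = label_agreement(rows, "trend_prediction", "trend_verification")
print(f"topic agreement: {topic_acc:.2f}, trend agreement: {trend_acc:.2f}")
```

Both labels agree on these two rows, so each ratio is 1.00. Running the same helper over the full file is what would make the number informative.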

data/topresults_platts_09082024_clean.csv

ADDED

@@ -0,0 +1,12 @@

,body,headline,updatedDate,topic_prediction,topic_verification,negative_score,neutral_score,positive_score,trend_prediction,trend_verification


0," OPEC+ crude production in July made its biggest jump in almost a year, as Iraq and Kazakhstan raised their output despite committing to deeper cuts, while Russia also remained well over its quota. The group's overall production was up 160,000 b/d compared with June, totaling 41.03 million b/d, the Platts OPEC+ survey from S&P Global Commodity Insights showed Aug. 8. Member countries with quotas produced 437,000 b/d above target in July, up from 229,000 b/d in June. July was the first month of compensation plans introduced by three countries that overproduced in the first half of 2024. Iraq pledged to cut an additional 70,000 b/d in July and Kazakhstan pledged to cut a further 18,000 b/d. Russia's compensation plan does not include additional cuts until October 2024. The survey found that Iraq produced 4.33 million b/d in July, 400,000 b/d above its quota. This contributed to growth in OPEC production of 130,000 b/d to 26.89 million b/d. Non-OPEC producers added a further 14.14 million b/d, up 30,000 b/d month on month. This was driven by Kazakhstan, which increased output by 30,000 b/d. It is now producing 120,000 b/d above quota, taking into account its compensation cut. Russia is also producing above quota, with output at 9.10 million b/d in July, against a quota of 8.98 million b/d. The overproducers are part of a group that is implementing a combined 2.2 million b/d of voluntary cuts, currently in place until the end of the third quarter. The group then plans to gradually bring some of those barrels back to market from September if conditions allow. A further 3.6 million b/d of group-wide cuts are in place until the end of 2025. The rise in output in July came despite the poor performance of the alliance's African contingent, with production in Nigeria, South Sudan, Gabon and Libya falling by a collective 80,000 b/d. Pressure on overproducers has increased in recent weeks, as recession fears have driven oil prices below $80/b. 
Platts, part of Commodity Insights, assessed Dated Brent at $79.91/b Aug. 7. A long-awaited rise in Chinese demand and high production from non-OPEC countries in the Americas -- including the US, Canada, Brazil and Guyana -- have also weakened prices in recent months. OPEC+ has pledged to stick to its strategy of major production cuts through the third quarter, before gradually bringing barrels back to market. Overproduction and depressed oil prices threaten these plans. The next meeting of the Joint Ministerial Monitoring Committee overseeing the agreement, which is co-chaired by Saudi Arabia and Russia, is scheduled for Oct. 2. A full ministerial meeting is scheduled for Dec. 1. The Platts survey measures wellhead production and is compiled using information from oil industry officials, traders and analysts, as well as by reviewing proprietary shipping, satellite and inventory data. OPEC+ crude production (million b/d) OPEC-9 July-24 Change June-24 Quota Over/under Algeria 0.90 0.00 0.90 0.908 -0.008 Congo-Brazzaville 0.26 0.00 0.26 0.277 -0.017 Equatorial Guinea 0.05 0.00 0.05 0.070 -0.020 Gabon 0.21 -0.01 0.22 0.169 0.041 Iraq*† 4.33 0.11 4.22 3.930 0.400 Kuwait 2.42 0.00 2.42 2.413 0.007 Nigeria 1.46 -0.04 1.50 1.500 -0.040 Saudi Arabia 8.99 0.01 8.98 8.978 0.012 UAE 2.99 0.02 2.97 2.912 0.078 TOTAL OPEC-9 21.61 0.09 21.52 21.157 0.453 OPEC EXEMPT Change Quota Over/under Iran 3.20 0.00 3.20 N/A N/A Libya 1.15 -0.01 1.16 N/A N/A Venezuela 0.93 0.05 0.88 N/A N/A TOTAL OPEC-12 26.89 0.13 26.76 N/A N/A NON-OPEC WITH QUOTAS Change Quota Over/under Azerbaijan 0.49 0.01 0.48 0.551 -0.061 Bahrain 0.18 0.00 0.18 0.196 -0.016 Brunei 0.07 0.01 0.06 0.083 -0.013 Kazakhstan† 1.57 0.03 1.54 1.450 0.120 Malaysia 0.35 0.00 0.35 0.401 -0.051 Oman 0.76 0.00 0.76 0.759 0.001 Russia 9.10 0.00 9.10 8.978 0.122 Sudan 0.03 0.00 0.03 0.064 -0.034 South Sudan 0.04 -0.02 0.06 0.124 -0.084 TOTAL NON-OPEC WITH QUOTAS 12.59 0.03 12.56 12.606 -0.016 NON-OPEC EXEMPT Change Quota Over/under 
Mexico 1.55 0 1.55 N/A N/A TOTAL NON-OPEC 14.14 0.03 14.11 N/A N/A OPEC+ MEMBERS WITH QUOTAS Change Quota Over/under TOTAL 34.20 0.12 34.08 33.76 0.437 OPEC+ Change Quota Over/under TOTAL 41.03 0.16 40.87 N/A N/A * Includes estimated 250,000 b/d production in the semi-autonomous Kurdistan region of Iraq † Iraq and Kazakhstan quotas reduced in line with compensation plans Source: Platts OPEC+ survey by S&P Global Commodity Insights ","OPEC+ produces 437,000 b/d above quota in first month of compensation cuts",2024-08-08 17:36:29+00:00,Macroeconomic & Geopolitics,Macroeconomic & Geopolitics,0.9936981650538123,0.03949102865047352,0.07103689164109918,Bearish,Bearish


1,nan,"Non-OPEC July output up 30,000 b/d at 14.14 mil b/d: Platts survey",2024-08-08 14:00:12+00:00,Macroeconomic & Geopolitics,Macroeconomic & Geopolitics,0.9932350855162315,0.024123551368425825,0.12366691833078211,Bearish,Bearish


2,nan,"OPEC+ producers with quotas 437,000 b/d above target in July: Platts survey",2024-08-08 14:00:11+00:00,Macroeconomic & Geopolitics,Macroeconomic & Geopolitics,0.9936222216140704,0.048969414364614584,0.06152339592702592,Bearish,Bearish


3,nan,"OPEC crude output up 130,000 b/d at 26.89 mil b/d in July: Platts survey",2024-08-08 14:00:11+00:00,Macroeconomic & Geopolitics,Macroeconomic & Geopolitics,0.9933905710185933,0.03299545514176609,0.10238077772498969,Bearish,Bearish


4,nan,"OPEC+ July crude output up 160,000 b/d at 41.03 mil b/d: Platts survey",2024-08-08 14:00:10+00:00,Macroeconomic & Geopolitics,Macroeconomic & Geopolitics,0.9870346671527929,0.02437343317152671,0.2153639976093806,Bearish,Bearish


5,nan,"Iraq, Russia, Kazakhstan overproduce in first month of compensation cuts: Platts survey",2024-08-08 14:00:10+00:00,Crude Oil,Crude Oil,0.6294152478714086,0.06749551758023337,0.927845667131505,Bullish,Bullish


6," UK-based upstream producer Harbour Energy plans to start its new Talbot oil and gas tie-in project at the J-Area hub in the North Sea by the end of 2024, boosting Ekofisk blend volumes, it said Aug. 8. Harbour, in a statement, reported a 19% year-on-year drop in its UK oil and gas production in the first half of 2024 to 149,000 b/d of oil equivalent. It noted a significant maintenance impact, including a planned shutdown in June at the J-Area, which sends oil and gas to Teesside, with the liquids loaded as Ekofisk blend. Ekofisk is a component in the Platts Dated Brent price assessment process. Talbot, a multiwell development, is expected to recover 18 million boe of light oil and gas over 16 years. It will add to oil volumes flowing through the J-Area into the Norpipe route to Teesside, contributing to the predominantly Norwegian Ekofisk blend. Harbour also flagged an ongoing maintenance impact on production through much of the Q3 2024, including a 40-day shutdown at the Britannia hub starting in August, which will impact flows into the Forties blend. The maintenance was expected to start in the next few days and be completed in September, according to a source close to the situation. Britannia was also expected to be impacted by a four-week shutdown of the SAGE gas pipeline starting Aug. 27 . Harbour has made ""good progress to date on the maintenance shutdowns and our UK capital projects, which are on track to materially increase production in the fourth quarter,"" it said. The North Sea typically sees a drop in production volumes in the summer due to maintenance. Non-UK diversification Harbour reiterated its efforts to diversify away from the UK, with an acquisition of Wintershall Dea assets underway, having strongly objected to punitive tax rates. It said its overall effective tax rate in the first half of 2024 was 85%, partly reflecting not-fully deductible costs under the UK tax regime. 
Harbour reported 10,000 boe/d of additional production outside the UK in the first half of the year, in Indonesia and Vietnam. It noted progress in Mexico, where Front End Engineering and Design has begun for the Zama oil project, estimated at 700 million barrels of light crude. Harbour is set to increase its Zama stake from 12% to 32% following the Wintershall acquisition. In the first half of 2024 ""we made significant progress towards completing the Wintershall Dea acquisition, which is now expected early in the fourth quarter,"" CEO Linda Cook said. ""The acquisition will transform the scale, geographical diversity and longevity of our portfolio and strengthen our capital structure, enabling us to deliver enhanced shareholder returns over the long run while also positioning us for further opportunities.” Platts Dated Brent was assessed at $79.91/b on Aug. 8, up $3.64 on the day. Platts is part of S&P Global Commodity Insights. ",UK's Harbour Energy says on track with North Sea Talbot oil tie-in,2024-08-08 13:54:45+00:00,Crude Oil,Crude Oil,0.31882130542268583,0.04218598724364094,0.9882147680155492,Bullish,Bullish


7," The INPEX-operated Ichthys LNG project in Australia has recovered to an 85% overall production rate after Train 2 restarted on July 28 following an outage on July 20 that was caused by a glitch, an INPEX spokesperson told S&P Global Commodity Insights Aug. 8. Currently, the onshore Ichthys LNG plant is running at 100% at Train 1, and about 70% at Train 2, putting the overall production rate at about 85%, the spokesperson said. The Ichthys LNG project is slated to resume full runs in October, when it plans to carry out some scheduled maintenance work lasting around a week, the spokesperson said. INPEX has estimated that fewer than five LNG cargoes of Ichthys LNG shipments will be affected as a result of the glitch, the spokesperson said. However, the INPEX spokesperson declined to elaborate on actual production volumes at the Ichthys LNG plant, which has yet to reach its operational capacity of 9.3 million mt/year. INPEX has been building a framework for a stable supply of 9.3 million mt/year of LNG at its operated Ichthys project by debottlenecking the facility, upgrading the cooling systems for liquefication and taking measures to address vibration issues. As of July, the Ichthys project has shipped a total of 76 LNG cargoes this year, with July shipments having slipped to 10 cargoes from 11 cargoes in June. Ichthys LNG shipments will slow to 10 cargoes per month in the second half of 2024, the spokesperson said, compared with an average of 11 cargoes per month in the first half of the year. In the first seven months of the year the Ichthys project shipped 14 plant condensate cargoes, 18 field condensate cargoes and 20 LPG cargoes. In the January-June period INPEX produced 662,000 b/d of oil equivalent, and it now expects its 2024 production to be 644,800 boe/d, down from its May outlook of 645,300 boe/d for the year as a result of the Ichthys LNG production issues, the spokesperson said. 
The project, operated by INPEX with 67.82%, involves piping gas from the offshore Ichthys field in the Browse Basin in Northwestern Australia more than 890 km (552 miles) to the onshore LNG plant near Darwin, which has an 8.9 million mt/year nameplate capacity. At peak, it has the capacity to produce 1.65 million mt/year of LPG and 100,000 b/d of condensate. ",Australia's Ichthys LNG recovers 85% output after Train 2 outage; to recover full runs in Oct,2024-08-08 11:53:44+00:00,Other,Other,0.770051212604236,0.010564989240227092,0.9773946433377442,Bullish,Bullish

8," NTPC Limited, India’s largest power generation utility, has partnered with LanzaTech to implement carbon recycling technology at its new facility in central India, in a significant move towards sustainable energy. The project will convert CO2 emissions and green hydrogen into ethanol using LanzaTech's second-generation bioreactor, the US-based company said in a statement Aug. 7. NTPC's upcoming plant will be the first in India to deploy this advanced technology, which captures carbon-rich gases before they enter the atmosphere. The LanzaTech bioreactor uses proprietary microbes to transform these gases into sustainable fuels, chemicals, and raw materials. The microbes convert CO2 and H2 into ethanol, a critical component for producing green energy products such as sustainable aviation fuels (SAF) and renewable diesel. This in turn boosts NTPC's goals by producing ethanol from waste-based feedstocks, promoting a circular carbon economy. According to the statement, the project was conceptualized and designed in collaboration with NTPC's research and development arm, NETRA (NTPC Energy Technology Research Alliance). The facility aims to demonstrate the commercial viability of LanzaTech’s technology in producing ethanol from waste-based feedstocks by leveraging CO2 as sole carbon source. Jakson Green, a new energy firm, is responsible for development of this Chhattisgarh-based facility, handling from design and engineering to procurement and construction. This first-of-its-kind plant is projected to abate 7,300 mt/year of CO2 annually, equivalent to the carbon sequestered by 8,523 acres of forest land. The carbon and hydrogen to renewable ethanol facility is slated to begin operations within two years. Dr. 
Jennifer Holmgren, CEO of LanzaTech, emphasized the strategic importance of this partnership, stating, “Our collaboration with NTPC and Jakson Green sets a roadmap for the commercial deployment of CO2 as a key feedstock.” Jakson Green is already developing India’s largest green hydrogen fueling station and a low-carbon methanol plant for leading government companies. LanzaTech technology is also being used at various other operations in India, producing ethanol at Indian Oil Corporation’s Panipat facility which will also be used for SAF. The company has also partnered with GAIL and Mangalore Refinery and Petrochemicals Limited on similar projects. Platts, part of Commodity Insights, assessed SAF production costs (palm fatty acid distillate) in Southeast Asia at $1,589.91/mt Aug. 7, down $19.50/mt from the previous assessment. ",NTPC advances clean energy goals with LanzaTech CO2-to-ethanol technology,2024-08-08 11:30:49+00:00,Light Ends,Light Ends,0.21314498348994937,0.11135607578700647,0.9908829648109232,Bullish,Bullish

9," UAE-based Dana Gas said it expects to resume drilling activities in Egypt after the country’s parliament ratified a law to consolidate its concessions to operate in the country under a new concession with Egyptian Natural Gas Holding Co. The new agreement ratified by the Egyptian parliament was already approved by the Egyptian Cabinet in March, authorizing the country’s minister of oil and Egyptian Natural Gas to finalize a new concession agreement with Dana Gas, the company said in an Aug. 8 statement. Since 2001, Dana Gas has been in discussions with Egyptian Natural Gas to consolidate three of its four concessions into a new concession with improved terms, according to Dana Gas’s website. “The revised terms should enable meaningful future investments alongside a resumption of drilling activities, positively impacting the company’s production levels in Egypt and helping the country meet its growing gas demand,” Dana Gas said in the statement. Egypt has halted LNG exports during the summer months and has turned to LNG imports instead to meet high seasonal demand amid declining domestic production. The development comes as delivered spot LNG prices to the East Mediterranean continue to trade above $10/MMBtu. Platts, part of S&P Global Commodity Insights, assessed the DES LNG East Mediterranean marker at $12.47/MMBtu Aug. 7, the highest since the assessment started in December 2023. The company’s first-half 2024 production in Egypt was 59,800 boe/d, down 25% from the same period a year earlier, mostly due to natural field declines, according to the statement. Dana Gas did not state when it expects to bring new production streams online in the country. Dana Gas's production in the Kurdish region of northern Iraq increased 3% over the same period to 37,600 boe/d due to increased demand for gas from local power plants, the company said. 
",Dana Gas expects to resume drilling activities in Egypt after new concession,2024-08-08 11:23:48+00:00,Other,Other,0.023988005652641385,0.7891432360374782,0.9608502193290972,Bullish,Bullish

10,nan,"Indonesia sets Minas crude price at $84.95/b for July, rising $3.35/b from June",2024-08-08 01:41:13+00:00,Middle Distillates,Middle Distillates,0.9926734319450401,0.04286090006550804,0.07892061673161296,Bearish,Bearish

evaluation.xlsx

ADDED

Binary file (214 kB).

experimentation_mlops/example/MLProject

ADDED

@@ -0,0 +1,36 @@

name: multistep_example

python_env: python_env.yaml

entry_points:
  load_raw_data:
    command: "python load_raw_data.py"

  etl_data:
    parameters:
      ratings_csv: path
      max_row_limit: {type: int, default: 100000}
    command: "python etl_data.py --ratings-csv {ratings_csv} --max-row-limit {max_row_limit}"

  als:
    parameters:
      ratings_data: path
      max_iter: {type: int, default: 10}
      reg_param: {type: float, default: 0.1}
      rank: {type: int, default: 12}
    command: "python als.py --ratings-data {ratings_data} --max-iter {max_iter} --reg-param {reg_param} --rank {rank}"

  train_keras:
    parameters:
      ratings_data: path
      als_model_uri: string
      hidden_units: {type: int, default: 20}
    command: "python train_keras.py --ratings-data {ratings_data} --als-model-uri {als_model_uri} --hidden-units {hidden_units}"

  main:
    parameters:
      als_max_iter: {type: int, default: 10}
      keras_hidden_units: {type: int, default: 20}
      max_row_limit: {type: int, default: 100000}
    command: "python main.py --als-max-iter {als_max_iter} --keras-hidden-units {keras_hidden_units}
                             --max-row-limit {max_row_limit}"
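Each entry point's `command` contains `{placeholder}` fields that are filled from its `parameters` block: a value passed by the caller takes precedence, otherwise the declared `default` is used, and a parameter with no default (such as `ratings_data: path`) must be supplied. The following is a minimal sketch of that substitution in plain Python, assuming the entry point has already been parsed into a dict. `ALS_ENTRY_POINT` and `render_command` are hypothetical names, and the sketch deliberately ignores MLflow's extra handling of `path`/`uri` parameters, shell quoting, and parameters that are not declared in the spec.

```python
from typing import Any, Dict

# Hypothetical dict form of the "als" entry point declared in the MLProject file above.
ALS_ENTRY_POINT: Dict[str, Any] = {
    "parameters": {
        "ratings_data": {"type": "path"},  # `ratings_data: path` declares no default
        "max_iter": {"type": "int", "default": 10},
        "reg_param": {"type": "float", "default": 0.1},
        "rank": {"type": "int", "default": 12},
    },
    "command": (
        "python als.py --ratings-data {ratings_data} --max-iter {max_iter} "
        "--reg-param {reg_param} --rank {rank}"
    ),
}


def render_command(entry_point: Dict[str, Any], user_params: Dict[str, Any]) -> str:
    """Fill the {placeholders} of an entry point's command (simplified sketch)."""
    resolved: Dict[str, Any] = {}
    for name, spec in entry_point["parameters"].items():
        if name in user_params:
            resolved[name] = user_params[name]  # caller-supplied value wins
        elif "default" in spec:
            resolved[name] = spec["default"]  # fall back to the declared default
        else:
            raise ValueError(f"Missing value for required parameter '{name}'")
    return entry_point["command"].format(**resolved)


print(render_command(ALS_ENTRY_POINT, {"ratings_data": "/tmp/ratings", "max_iter": 5}))
# python als.py --ratings-data /tmp/ratings --max-iter 5 --reg-param 0.1 --rank 12
```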

experimentation_mlops/example/als.py

ADDED

@@ -0,0 +1,69 @@

"""
Trains an Alternating Least Squares (ALS) model for user/movie ratings.
The input is a Parquet ratings dataset (see etl_data.py), and we output
an mlflow artifact called 'als-model'.
"""
import click
import pyspark
from pyspark.ml import Pipeline
from pyspark.ml.evaluation import RegressionEvaluator
from pyspark.ml.recommendation import ALS

import mlflow
import mlflow.spark


@click.command()
@click.option("--ratings-data")
@click.option("--split-prop", default=0.8, type=float)
@click.option("--max-iter", default=10, type=int)
@click.option("--reg-param", default=0.1, type=float)
@click.option("--rank", default=12, type=int)
@click.option("--cold-start-strategy", default="drop")
def train_als(ratings_data, split_prop, max_iter, reg_param, rank, cold_start_strategy):
    seed = 42

    with pyspark.sql.SparkSession.builder.getOrCreate() as spark:
        ratings_df = spark.read.parquet(ratings_data)
        (training_df, test_df) = ratings_df.randomSplit([split_prop, 1 - split_prop], seed=seed)
        training_df.cache()
        test_df.cache()

        mlflow.log_metric("training_nrows", training_df.count())
        mlflow.log_metric("test_nrows", test_df.count())

        print(f"Training: {training_df.count()}, test: {test_df.count()}")

        als = (
            ALS()
            .setUserCol("userId")
            .setItemCol("movieId")
            .setRatingCol("rating")
            .setPredictionCol("predictions")
            .setMaxIter(max_iter)
            .setSeed(seed)
            .setRegParam(reg_param)
            .setColdStartStrategy(cold_start_strategy)
            .setRank(rank)
        )

        als_model = Pipeline(stages=[als]).fit(training_df)

        reg_eval = RegressionEvaluator(
            predictionCol="predictions", labelCol="rating", metricName="mse"
        )

        predicted_test_df = als_model.transform(test_df)

        test_mse = reg_eval.evaluate(predicted_test_df)
        train_mse = reg_eval.evaluate(als_model.transform(training_df))

        print(f"The model had an MSE on the test set of {test_mse}")
        print(f"The model had an MSE on the training set of {train_mse}")
        mlflow.log_metric("test_mse", test_mse)
        mlflow.log_metric("train_mse", train_mse)
        mlflow.spark.log_model(als_model, "als-model")


if __name__ == "__main__":
    train_als()
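ALS factorizes the sparse user-by-item rating matrix into user and item factor vectors by alternating two regularized least-squares solves: hold the item factors fixed and solve for every user, then hold the user factors fixed and solve for every item. The sketch below is a deliberately tiny, dependency-free illustration using rank 1 (so each solve reduces to a scalar division) instead of the rank 12 default used above; all function names are hypothetical. Following Spark's documented behavior, the regularization term is scaled by the number of ratings each user or item has, and `mse_drop_cold_start` imitates `coldStartStrategy="drop"` by skipping pairs whose user or item has no learned factor. Item factors start at 1.0 rather than random values so the result is deterministic.

```python
import math
from typing import Dict, List, Optional, Tuple

Rating = Tuple[int, int, float]  # (user_id, item_id, rating)
Factors = Dict[int, float]


def _solve(ratings: List[Rating], fixed: Factors, key: int, other: int,
           reg_param: float) -> Factors:
    """Rank-1 ridge solution for each id in column `key`, holding `fixed` constant."""
    num: Dict[int, float] = {}
    den: Dict[int, float] = {}
    for row in ratings:
        k, o, value = row[key], row[other], row[2]
        num[k] = num.get(k, 0.0) + value * fixed[o]
        # Adding reg_param once per rating scales lambda by the rating count.
        den[k] = den.get(k, 0.0) + fixed[o] ** 2 + reg_param
    return {k: num[k] / den[k] for k in num}


def train_rank1_als(ratings: List[Rating], max_iter: int = 10,
                    reg_param: float = 0.1) -> Tuple[Factors, Factors]:
    item_f: Factors = {i: 1.0 for _, i, _ in ratings}  # deterministic start
    user_f: Factors = {}
    for _ in range(max_iter):
        user_f = _solve(ratings, item_f, key=0, other=1, reg_param=reg_param)
        item_f = _solve(ratings, user_f, key=1, other=0, reg_param=reg_param)
    return user_f, item_f


def predict(user_f: Factors, item_f: Factors, user: int, item: int) -> Optional[float]:
    if user not in user_f or item not in item_f:
        return None  # cold start: no factor was learned for this user or item
    return user_f[user] * item_f[item]


def mse_drop_cold_start(user_f: Factors, item_f: Factors, ratings: List[Rating]) -> float:
    errors = []
    for user, item, rating in ratings:
        pred = predict(user_f, item_f, user, item)
        if pred is not None:  # like coldStartStrategy="drop": skip unpredictable rows
            errors.append((pred - rating) ** 2)
    return sum(errors) / len(errors) if errors else math.nan


# Toy ratings that are exactly rank 1: rating = a[user] * b[item].
a = {1: 1.0, 2: 2.0, 3: 3.0}
b = {10: 1.0, 11: 2.0, 12: 3.0}
toy_ratings = [(u, i, a[u] * b[i]) for u in a for i in b]
user_f, item_f = train_rank1_als(toy_ratings, max_iter=10, reg_param=0.1)
print(mse_drop_cold_start(user_f, item_f, toy_ratings))
```

With `reg_param=0.0` the toy data is recovered exactly; a positive `reg_param` shrinks the factors slightly, trading a small training error for less overfitting.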

experimentation_mlops/example/etl_data.py

ADDED

@@ -0,0 +1,42 @@

"""
Converts the raw CSV form to a Parquet form with just the columns we want
"""
import os
import tempfile

import click
import pyspark

import mlflow


@click.command(
    help="Given a CSV file (see load_raw_data), transforms it into Parquet "
    "in an mlflow artifact called 'ratings-parquet-dir'"
)
@click.option("--ratings-csv")
@click.option(
    "--max-row-limit", default=10000, help="Limit the data size to run comfortably on a laptop."
)
def etl_data(ratings_csv, max_row_limit):
    with mlflow.start_run():
        tmpdir = tempfile.mkdtemp()
        ratings_parquet_dir = os.path.join(tmpdir, "ratings-parquet")
        print(f"Converting ratings CSV {ratings_csv} to Parquet {ratings_parquet_dir}")
        with pyspark.sql.SparkSession.builder.getOrCreate() as spark:
            ratings_df = (
                spark.read.option("header", "true")
                .option("inferSchema", "true")
                .csv(ratings_csv)
                .drop("timestamp")
            )  # Drop unused column
            ratings_df.show()
            if max_row_limit != -1:
                ratings_df = ratings_df.limit(max_row_limit)
            ratings_df.write.parquet(ratings_parquet_dir)
            print(f"Uploading Parquet ratings: {ratings_parquet_dir}")
            mlflow.log_artifacts(ratings_parquet_dir, "ratings-parquet-dir")


if __name__ == "__main__":
    etl_data()
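The Spark chain above keeps every CSV column except `timestamp` and, unless `--max-row-limit` is `-1`, truncates the result with `limit`. A minimal pure-Python sketch of those two rules on an in-memory CSV follows. `etl_rows` is a hypothetical helper: it skips the Parquet write and Spark's `inferSchema` typing (values stay strings), and it keeps the first N rows, whereas Spark's `limit` on an unordered DataFrame does not strictly guarantee which rows are kept.

```python
import csv
import io
from typing import Dict, List


def etl_rows(csv_text: str, drop_column: str = "timestamp",
             max_row_limit: int = 10000) -> List[Dict[str, str]]:
    """Parse CSV with a header row, drop one column, and cap the row count (-1 = no cap)."""
    rows: List[Dict[str, str]] = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if max_row_limit != -1 and len(rows) >= max_row_limit:
            break
        row.pop(drop_column, None)  # like DataFrame.drop, a missing column is a no-op
        rows.append(dict(row))
    return rows


# Made-up sample rows in the userId,movieId,rating,timestamp layout.
sample = "userId,movieId,rating,timestamp\n1,2,3.5,100\n1,3,4.0,101\n2,2,5.0,102\n"
print(etl_rows(sample, max_row_limit=2))
```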

experimentation_mlops/example/load_raw_data.py

ADDED

@@ -0,0 +1,42 @@

"""
Downloads the MovieLens dataset and saves it as an artifact
"""
import os
import tempfile
import zipfile

import click
import requests

import mlflow


@click.command(
    help="Downloads the MovieLens dataset and saves it as an mlflow artifact "
    "called 'ratings-csv-dir'."
)
@click.option("--url", default="http://files.grouplens.org/datasets/movielens/ml-20m.zip")
def load_raw_data(url):
    with mlflow.start_run():
        local_dir = tempfile.mkdtemp()
        local_filename = os.path.join(local_dir, "ml-20m.zip")
        print(f"Downloading {url} to {local_filename}")
        r = requests.get(url, stream=True)
        with open(local_filename, "wb") as f:
            for chunk in r.iter_content(chunk_size=1024):
                if chunk:  # filter out keep-alive new chunks
                    f.write(chunk)

        extracted_dir = os.path.join(local_dir, "ml-20m")
        print(f"Extracting {local_filename} into {extracted_dir}")
        with zipfile.ZipFile(local_filename, "r") as zip_ref:
            zip_ref.extractall(local_dir)

        ratings_file = os.path.join(extracted_dir, "ratings.csv")

        print(f"Uploading ratings: {ratings_file}")
        mlflow.log_artifact(ratings_file, "ratings-csv-dir")


if __name__ == "__main__":
    load_raw_data()
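The download loop streams the response in 1 KiB chunks and skips empty ones before the archive is unpacked. The sketch below reproduces that write loop without network access and, as a variation that is not taken from the script, extracts only the `ratings.csv` member instead of calling `extractall`, since that is the only file the later steps read. `save_chunks` and `extract_member` are hypothetical helpers, and the archive in the usage example is a small stand-in built in memory, not the real MovieLens file.

```python
import io
import os
import tempfile
import zipfile
from typing import Iterable


def save_chunks(chunks: Iterable[bytes], path: str) -> int:
    """Write non-empty byte chunks to `path` and return the number of bytes written."""
    written = 0
    with open(path, "wb") as f:
        for chunk in chunks:
            if chunk:  # skip empty keep-alive chunks, as load_raw_data.py does
                f.write(chunk)
                written += len(chunk)
    return written


def extract_member(zip_path: str, member: str, dest_dir: str) -> str:
    """Extract a single archive member and return the path it was written to."""
    with zipfile.ZipFile(zip_path, "r") as zf:
        return zf.extract(member, dest_dir)


# Usage: build a small stand-in archive in memory and feed it through in pieces.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("ml-20m/ratings.csv", "userId,movieId,rating,timestamp\n1,2,3.5,100\n")
    zf.writestr("ml-20m/movies.csv", "movieId,title,genres\n")
payload = buf.getvalue()

work_dir = tempfile.mkdtemp()
zip_path = os.path.join(work_dir, "ml-20m.zip")
save_chunks([payload[:100], b"", payload[100:]], zip_path)
ratings_path = extract_member(zip_path, "ml-20m/ratings.csv", work_dir)
with open(ratings_path) as f:
    print(f.readline().strip())  # userId,movieId,rating,timestamp
```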

experimentation_mlops/example/main.py

ADDED

@@ -0,0 +1,107 @@

"""
Downloads the MovieLens dataset, ETLs it into Parquet, trains an
ALS model, and uses the ALS model to train a Keras neural network.

See README.rst for more details.
"""

import os

import click

import mlflow
from mlflow.entities import RunStatus
from mlflow.tracking import MlflowClient
from mlflow.tracking.fluent import _get_experiment_id
from mlflow.utils import mlflow_tags
from mlflow.utils.logging_utils import eprint


def _already_ran(entry_point_name, parameters, git_commit, experiment_id=None):
    """Best-effort detection of whether a run with the given entrypoint name,
    parameters, and experiment id already ran. The run must have completed
    successfully and have at least the parameters provided.
    """
    experiment_id = experiment_id if experiment_id is not None else _get_experiment_id()
    client = MlflowClient()
    all_runs = reversed(client.search_runs([experiment_id]))
    for run in all_runs:
        tags = run.data.tags
        if tags.get(mlflow_tags.MLFLOW_PROJECT_ENTRY_POINT, None) != entry_point_name:
            continue
        match_failed = False
        for param_key, param_value in parameters.items():
            run_value = run.data.params.get(param_key)
            if run_value != param_value:
                match_failed = True
                break
        if match_failed:
            continue

        if run.info.to_proto().status != RunStatus.FINISHED:
            eprint(
                ("Run matched, but is not FINISHED, so skipping (run_id={}, status={})").format(
                    run.info.run_id, run.info.status
                )
            )
            continue

        previous_version = tags.get(mlflow_tags.MLFLOW_GIT_COMMIT, None)
        if git_commit != previous_version:
            eprint(
                "Run matched, but has a different source version, so skipping "
                f"(found={previous_version}, expected={git_commit})"
            )
            continue
        return client.get_run(run.info.run_id)
    eprint("No matching run has been found.")
    return None


# TODO(aaron): This is not great because it doesn't account for:
# - changes in code
# - changes in dependent steps
def _get_or_run(entrypoint, parameters, git_commit, use_cache=True):
    existing_run = _already_ran(entrypoint, parameters, git_commit)
    if use_cache and existing_run:
        print(f"Found existing run for entrypoint={entrypoint} and parameters={parameters}")
        return existing_run
    print(f"Launching new run for entrypoint={entrypoint} and parameters={parameters}")
    submitted_run = mlflow.run(".", entrypoint, parameters=parameters, env_manager="local")
    return MlflowClient().get_run(submitted_run.run_id)


@click.command()
@click.option("--als-max-iter", default=10, type=int)
@click.option("--keras-hidden-units", default=20, type=int)
@click.option("--max-row-limit", default=100000, type=int)
def workflow(als_max_iter, keras_hidden_units, max_row_limit):
    # Note: The entrypoint names are defined in MLproject. The artifact directories
    # are documented by each step's .py file.
    with mlflow.start_run() as active_run:
        os.environ["SPARK_CONF_DIR"] = os.path.abspath(".")
        git_commit = active_run.data.tags.get(mlflow_tags.MLFLOW_GIT_COMMIT)
        load_raw_data_run = _get_or_run("load_raw_data", {}, git_commit)
        ratings_csv_uri = os.path.join(load_raw_data_run.info.artifact_uri, "ratings-csv-dir")
        etl_data_run = _get_or_run(
            "etl_data", {"ratings_csv": ratings_csv_uri, "max_row_limit": str(max_row_limit)}, git_commit
        )
        ratings_parquet_uri = os.path.join(etl_data_run.info.artifact_uri, "ratings-parquet-dir")

        # We specify a spark-defaults.conf to override the default driver memory. ALS requires
        # significant memory. The driver memory property cannot be set by the application itself.
        als_run = _get_or_run(
            "als", {"ratings_data": ratings_parquet_uri, "max_iter": str(als_max_iter)}, git_commit
        )
        als_model_uri = os.path.join(als_run.info.artifact_uri, "als-model")

        keras_params = {
            "ratings_data": ratings_parquet_uri,
            "als_model_uri": als_model_uri,
            "hidden_units": keras_hidden_units,
        }
        _get_or_run("train_keras", keras_params, git_commit, use_cache=False)


if __name__ == "__main__":
    workflow()
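`_already_ran` treats a previous run as reusable only if it has the same entry point, every requested parameter matches, it finished successfully, and it was produced from the same git commit. Because MLflow records parameter values as strings, the direct `run_value != param_value` comparison only matches when the caller also passes strings, which is why the `als` step wraps its value in `str(als_max_iter)`. The sketch below replays the same checks on in-memory records; `FakeRun` and `find_cached_run` are hypothetical illustrative names, not MLflow APIs, and the sketch normalizes values with `str()` so integer arguments compare correctly.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class FakeRun:
    """Hypothetical stand-in for an MLflow run record; not part of MLflow's API."""
    run_id: str
    entry_point: str
    params: Dict[str, str]  # stored as strings, like MLflow run params
    status: str = "FINISHED"
    git_commit: Optional[str] = None


def find_cached_run(runs: List[FakeRun], entry_point: str,
                    parameters: Dict[str, object],
                    git_commit: Optional[str]) -> Optional[FakeRun]:
    """Return the first reusable run, scanning `runs` in reverse like _already_ran."""
    for run in reversed(runs):
        if run.entry_point != entry_point:
            continue
        # Compare as strings so that 100000 and "100000" are treated as equal.
        if any(run.params.get(k) != str(v) for k, v in parameters.items()):
            continue
        if run.status != "FINISHED":
            continue  # failed or still-running runs are never reused
        if run.git_commit != git_commit:
            continue  # produced by a different source version
        return run
    return None


history = [
    FakeRun("r1", "etl_data", {"ratings_csv": "uri", "max_row_limit": "100000"}, git_commit="abc"),
    FakeRun("r2", "etl_data", {"ratings_csv": "uri", "max_row_limit": "100000"},
            status="FAILED", git_commit="abc"),
]
hit = find_cached_run(history, "etl_data", {"ratings_csv": "uri", "max_row_limit": 100000}, "abc")
print(hit.run_id if hit else None)  # r1
```

As the TODO in `main.py` notes, this style of cache key still ignores changes in dependent steps; only the parameters and the commit hash are compared.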

experimentation_mlops/example/python_env.yaml

ADDED

@@ -0,0 +1,10 @@

python: "3.8"
build_dependencies:
  - pip
dependencies:
  - tensorflow==1.15.2
  - keras==2.2.4
  - mlflow>=1.0
  - pyspark
  - requests
  - click

experimentation_mlops/example/spark-defaults.conf

ADDED

@@ -0,0 +1 @@

spark.driver.memory 8g
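`main.py` points `SPARK_CONF_DIR` at the project directory so that the Spark driver started by each step picks up this file; as the comment in `main.py` notes, the driver memory cannot be set by the application itself once the driver is running. Each line of `spark-defaults.conf` is a property name followed by whitespace and a value. A small sketch of reading that format follows; `load_spark_defaults` is a hypothetical helper that only handles the whitespace-separated form used here (Spark itself loads the file as a Java properties file, which also accepts `=` or `:` separators).

```python
import os
import tempfile
from typing import Dict


def load_spark_defaults(conf_dir: str) -> Dict[str, str]:
    """Read `<conf_dir>/spark-defaults.conf` into a dict of property -> value."""
    props: Dict[str, str] = {}
    with open(os.path.join(conf_dir, "spark-defaults.conf")) as f:
        for raw in f:
            line = raw.strip()
            if not line or line.startswith("#"):
                continue  # skip blank lines and comments
            parts = line.split(None, 1)  # split on the first run of whitespace
            props[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
    return props


conf_dir = tempfile.mkdtemp()
with open(os.path.join(conf_dir, "spark-defaults.conf"), "w") as f:
    f.write("# driver settings\nspark.driver.memory 8g\n")
print(load_spark_defaults(conf_dir))  # {'spark.driver.memory': '8g'}
```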

experimentation_mlops/example/train_keras.py

ADDED

@@ -0,0 +1,116 @@

"""
Trains a Keras model for user/movie ratings. The input is a Parquet
ratings dataset (see etl_data.py) and an ALS model (see als.py), which we