v1 of chess space

- README.md +3 -11

- app.py +57 -0

- chessfenbot/.DS_Store +0 -0

- chessfenbot/.gitignore +21 -0

- chessfenbot/Dockerfile +31 -0

- chessfenbot/LICENSE +21 -0

- chessfenbot/__init__ +0 -0

- chessfenbot/cfb_helpers.py +22 -0

- chessfenbot/chessboard_finder.py +426 -0

- chessfenbot/chessbot.py +165 -0

- chessfenbot/dataset.py +61 -0

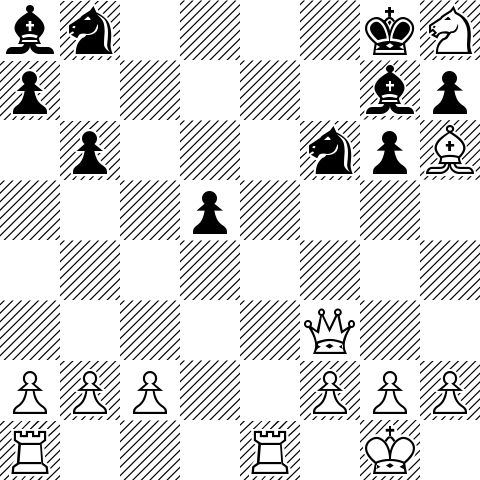

- chessfenbot/example_input.png +0 -0

- chessfenbot/helper_functions.py +172 -0

- chessfenbot/helper_functions_chessbot.py +163 -0

- chessfenbot/helper_image_loading.py +109 -0

- chessfenbot/helper_webkit2png.py +76 -0

- chessfenbot/message_template.py +38 -0

- chessfenbot/readme.md +127 -0

- chessfenbot/requirements.txt +7 -0

- chessfenbot/run_chessbot.sh +4 -0

- chessfenbot/save_graph.py +111 -0

- chessfenbot/saved_models/.DS_Store +0 -0

- chessfenbot/saved_models/cf_v1.0.tflite +0 -0

- chessfenbot/saved_models/checkpoint +2 -0

- chessfenbot/saved_models/frozen_graph.pb +3 -0

- chessfenbot/saved_models/graph.pb +3 -0

- chessfenbot/saved_models/graph.pbtxt +0 -0

- chessfenbot/saved_models/model_10000.ckpt +3 -0

- chessfenbot/tensorflow_chessbot.py +212 -0

- chessfenbot/tileset_generator.py +97 -0

- chessfenbot/webkit2png.py +414 -0

- requirements.txt +4 -0

README.md

CHANGED

|

@@ -1,12 +1,4 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

colorFrom: blue

|

| 5 |

-

colorTo: gray

|

| 6 |

-

sdk: gradio

|

| 7 |

-

sdk_version: 3.0.19

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

-

---

|

| 11 |

|

| 12 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

| 1 |

+

# Working on setting up a HuggingFace space for chess, using Gradio

|

| 2 |

+

|

| 3 |

+

Step 1: deploy a model to predict the position from an image (using https://github.com/Elucidation/tensorflow_chessbot)

|

| 4 |

|

app.py

ADDED

|

@@ -0,0 +1,57 @@

|

| 1 |

+

import re

|

| 2 |

+

import gradio as gr

|

| 3 |

+

|

| 4 |

+

from chessfenbot.chessboard_finder import findGrayscaleTilesInImage

|

| 5 |

+

from chessfenbot.tensorflow_chessbot import ChessboardPredictor

|

| 6 |

+

from chessfenbot.helper_functions import shortenFEN

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

def predict(img, active="w"):

|

| 10 |

+

"""

|

| 11 |

+

main predict function for gradio.

|

| 12 |

+

Predict a chessboard FEN.

|

| 13 |

+

Wraps model from https://github.com/Elucidation/tensorflow_chessbot/tree/chessfenbot

|

| 14 |

+

|

| 15 |

+

Args:

|

| 16 |

+

img (PIL image): input image of a chess board

|

| 17 |

+

active (str): defaults to "w"

|

| 18 |

+

"""

|

| 19 |

+

|

| 20 |

+

# Look for chessboard in image, get corners and split chessboard into tiles

|

| 21 |

+

tiles, corners = findGrayscaleTilesInImage(img)

|

| 22 |

+

|

| 23 |

+

# Initialize predictor, takes a while, but only needed once

|

| 24 |

+

predictor = ChessboardPredictor(frozen_graph_path='chessfenbot/saved_models/frozen_graph.pb')

|

| 25 |

+

fen, tile_certainties = predictor.getPrediction(tiles)

|

| 26 |

+

predictor.close()

|

| 27 |

+

short_fen = shortenFEN(fen)

|

| 28 |

+

# Use the worst case certainty as our final certainty score

|

| 29 |

+

certainty = tile_certainties.min()

|

| 30 |

+

|

| 31 |

+

print('Per-tile certainty:')

|

| 32 |

+

print(tile_certainties)

|

| 33 |

+

print("Certainty range [%g - %g], Avg: %g" % (

|

| 34 |

+

tile_certainties.min(), tile_certainties.max(), tile_certainties.mean()))

|

| 35 |

+

|

| 36 |

+

# predicted FEN

|

| 37 |

+

fen_out = f"{short_fen} {active} - - 0 1"

|

| 38 |

+

# certainty

|

| 39 |

+

certainty = "%.1f%%" % (certainty*100)

|

| 40 |

+

# link to analysis board on Lichess

|

| 41 |

+

lichess_link = f'https://lichess.org/analysis/standard/{re.sub(" ", "_", fen_out)}'

|

| 42 |

+

|

| 43 |

+

return fen_out, certainty, lichess_link

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

gr.Interface(

|

| 47 |

+

predict,

|

| 48 |

+

inputs=gr.Image(label="Upload chess board", type="pil"),

|

| 49 |

+

outputs=[

|

| 50 |

+

gr.Textbox(label="FEN"),

|

| 51 |

+

gr.Textbox(label="certainty"),

|

| 52 |

+

gr.Textbox(label="Link to Lichess analysis board (copy and paste into URL)"),

|

| 53 |

+

],

|

| 54 |

+

title="Chess FEN bot",

|

| 55 |

+

examples=["chessfenbot/example_input.png"],

|

| 56 |

+

description="Simple wrapper around TensorFlow Chessbot (https://github.com/Elucidation/tensorflow_chessbot)"

|

| 57 |

+

).launch()

|
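
The output formatting at the end of `predict` can be looked at in isolation. The sketch below is a minimal illustration, not the Space's actual code: `format_prediction` is a hypothetical helper name, and it assumes the board part of the FEN and the minimum tile certainty have already been computed. Castling rights, the en passant square and the move counters cannot be read from a screenshot, so they stay as the same placeholders `predict` uses.

```python
def format_prediction(short_fen, min_certainty, active="w"):
    """Build the three strings shown in the Gradio outputs.

    short_fen: board part of the FEN (hypothetical input, already shortened)
    min_certainty: worst per-tile certainty, as a float between 0 and 1
    active: side to move, "w" or "b"
    """
    # Placeholders for castling, en passant, halfmove clock and fullmove number
    fen_out = f"{short_fen} {active} - - 0 1"
    # Certainty as a percentage with one decimal place
    certainty_label = "%.1f%%" % (min_certainty * 100)
    # Lichess analysis URLs use underscores in place of the spaces in a FEN
    lichess_link = "https://lichess.org/analysis/standard/" + fen_out.replace(" ", "_")
    return fen_out, certainty_label, lichess_link


if __name__ == "__main__":
    start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR"
    for value in format_prediction(start, 0.987):
        print(value)
```

Note that `findGrayscaleTilesInImage` returns `(None, None)` when no board is detected, so a caller may want to return early in that case before asking the predictor for a FEN.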

chessfenbot/.DS_Store

ADDED

|

Binary file (8.2 kB)

|

|

|

chessfenbot/.gitignore

ADDED

|

@@ -0,0 +1,21 @@

|

| 1 |

+

.ipynb_checkpoints

|

| 2 |

+

*.pyc

|

| 3 |

+

*.png

|

| 4 |

+

*.jpg

|

| 5 |

+

*.gif

|

| 6 |

+

|

| 7 |

+

!example_input.png

|

| 8 |

+

|

| 9 |

+

# Ignore chessboard input images and tile outputs

|

| 10 |

+

chessboards/

|

| 11 |

+

tiles/

|

| 12 |

+

|

| 13 |

+

# Ignore reddit username/password config file

|

| 14 |

+

auth_config.py

|

| 15 |

+

praw.ini

|

| 16 |

+

|

| 17 |

+

# Ignore tracking files

|

| 18 |

+

*.txt

|

| 19 |

+

!requirements.txt

|

| 20 |

+

|

| 21 |

+

!readme_images/*

|

chessfenbot/Dockerfile

ADDED

|

@@ -0,0 +1,31 @@

|

| 1 |

+

FROM tensorflow/tensorflow

|

| 2 |

+

MAINTAINER Sam <elucidation@gmail.com>

|

| 3 |

+

|

| 4 |

+

# Install python and pip and use pip to install the python reddit api PRAW

|

| 5 |

+

RUN apt-get -y update && apt-get install -y \

|

| 6 |

+

python-dev \

|

| 7 |

+

libxml2-dev \

|

| 8 |

+

libxslt1-dev \

|

| 9 |

+

libjpeg-dev \

|

| 10 |

+

vim \

|

| 11 |

+

&& apt-get clean

|

| 12 |

+

|

| 13 |

+

# Install python reddit api related files

|

| 14 |

+

RUN pip install praw==4.3.0 beautifulsoup4==4.4.1 lxml==3.3.3 Pillow==4.0.0 html5lib==1.0b8

|

| 15 |

+

|

| 16 |

+

# Clean up APT when done.

|

| 17 |

+

RUN apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

|

| 18 |

+

|

| 19 |

+

# Remove jupyter related files

|

| 20 |

+

RUN rm -rf /notebooks /run_jupyter.sh

|

| 21 |

+

|

| 22 |

+

# Copy code over

|

| 23 |

+

COPY . /tcb/

|

| 24 |

+

|

| 25 |

+

WORKDIR /tcb

|

| 26 |

+

|

| 27 |

+

# Run chessbot by default

|

| 28 |

+

CMD ["/tcb/run_chessbot.sh"]

|

| 29 |

+

|

| 30 |

+

# Start up the docker instance with the proper auth file using

|

| 31 |

+

# <machine>$ docker run -dt --rm --name cfb -v <local_auth_file>:/tcb/auth_config.py elucidation/tensorflow_chessbot

|

chessfenbot/LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

| 1 |

+

The MIT License (MIT)

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2016 Sameer Ansari

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

chessfenbot/__init__

ADDED

|

File without changes

|

chessfenbot/cfb_helpers.py

ADDED

|

@@ -0,0 +1,22 @@

|

| 1 |

+

import time

|

| 2 |

+

from datetime import datetime

|

| 3 |

+

|

| 4 |

+

# Check if submission has a comment by this bot already

|

| 5 |

+

def previouslyRepliedTo(submission, me):

|

| 6 |

+

for comment in submission.comments:

|

| 7 |

+

if comment.author == me:

|

| 8 |

+

return True

|

| 9 |

+

return False

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

def waitWithComments(sleep_time, segment=60):

|

| 13 |

+

"""Sleep for sleep_time seconds, printing to stdout every segment of time"""

|

| 14 |

+

print("\t%s - %s seconds to go..." % (datetime.now(), sleep_time))

|

| 15 |

+

while sleep_time > segment:

|

| 16 |

+

time.sleep(segment) # sleep in increments of 1 minute

|

| 17 |

+

sleep_time -= segment

|

| 18 |

+

print("\t%s - %s seconds to go..." % (datetime.now(), sleep_time))

|

| 19 |

+

time.sleep(sleep_time)

|

| 20 |

+

|

| 21 |

+

def logMessage(submission, status=""):

|

| 22 |

+

print("{} | {} {}: {}".format(datetime.now(), submission.id, status, submission.title.encode('utf-8')))

|
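
The chunked wait in `waitWithComments` is easier to reason about when the sleep call is injectable. The following is a sketch under that assumption: `wait_with_progress` and its `sleep` and `log` parameters are hypothetical names added for illustration, and the loop itself mirrors the one in `cfb_helpers.py`.

```python
import time
from datetime import datetime


def wait_with_progress(sleep_time, segment=60, sleep=time.sleep, log=print):
    """Sleep for sleep_time seconds in chunks of at most `segment` seconds,
    logging the remaining time before each chunk."""
    log("\t%s - %s seconds to go..." % (datetime.now(), sleep_time))
    while sleep_time > segment:
        sleep(segment)
        sleep_time -= segment
        log("\t%s - %s seconds to go..." % (datetime.now(), sleep_time))
    # Sleep whatever is left (at most one segment)
    sleep(sleep_time)


if __name__ == "__main__":
    # Record the requested sleeps instead of actually waiting
    calls = []
    wait_with_progress(150, segment=60, sleep=calls.append)
    print(calls)
```

Passing a recording function as `sleep` lets the chunking be checked without waiting in real time.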

chessfenbot/chessboard_finder.py

ADDED

|

@@ -0,0 +1,426 @@

|

| 1 |

+

#!/usr/bin/env python3

|

| 2 |

+

# -*- coding: utf-8 -*-

|

| 3 |

+

# Pass in image of online chessboard screenshot, returns corners of chessboard

|

| 4 |

+

# usage: chessboard_finder.py [-h] urls [urls ...]

|

| 5 |

+

|

| 6 |

+

# Find orthorectified chessboard corners in image

|

| 7 |

+

|

| 8 |

+

# positional arguments:

|

| 9 |

+

# urls Input image urls

|

| 10 |

+

|

| 11 |

+

# optional arguments:

|

| 12 |

+

# -h, --help show this help message and exit

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

# sudo apt-get install libatlas-base-dev for numpy error, see https://github.com/Kitt-AI/snowboy/issues/262

|

| 16 |

+

import numpy as np

|

| 17 |

+

# sudo apt-get install libopenjp2-7 libtiff5

|

| 18 |

+

import PIL.Image

|

| 19 |

+

import argparse

|

| 20 |

+

from time import time

|

| 21 |

+

from .helper_image_loading import *

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

def nonmax_suppress_1d(arr, winsize=5):

|

| 25 |

+

"""Return 1d array with only peaks, use neighborhood window of winsize px"""

|

| 26 |

+

_arr = arr.copy()

|

| 27 |

+

|

| 28 |

+

for i in range(_arr.size):

|

| 29 |

+

if i == 0:

|

| 30 |

+

left_neighborhood = 0

|

| 31 |

+

else:

|

| 32 |

+

left_neighborhood = arr[max(0,i-winsize):i]

|

| 33 |

+

if i >= _arr.size-2:

|

| 34 |

+

right_neighborhood = 0

|

| 35 |

+

else:

|

| 36 |

+

right_neighborhood = arr[i+1:min(arr.size-1,i+winsize)]

|

| 37 |

+

|

| 38 |

+

if arr[i] < np.max(left_neighborhood) or arr[i] <= np.max(right_neighborhood):

|

| 39 |

+

_arr[i] = 0

|

| 40 |

+

return _arr

|
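
For readers unfamiliar with 1-D non-maximum suppression, here is a simplified sketch of the idea. It is not the function above: `nonmax_suppress_1d_sketch` is a hypothetical name, it uses a symmetric window of `winsize` samples on each side, and it treats ties and array edges differently from `nonmax_suppress_1d`.

```python
import numpy as np


def nonmax_suppress_1d_sketch(arr, winsize=5):
    """Keep a sample only if it is the maximum of its local window;
    every other sample is set to zero."""
    arr = np.asarray(arr, dtype=float)
    out = np.zeros_like(arr)
    for i in range(arr.size):
        lo = max(0, i - winsize)
        hi = min(arr.size, i + winsize + 1)
        # A sample survives only when nothing in its window is larger
        if arr[i] == arr[lo:hi].max():
            out[i] = arr[i]
    return out


if __name__ == "__main__":
    signal = [0, 1, 3, 1, 0, 0, 2, 5, 2, 0]
    print(nonmax_suppress_1d_sketch(signal, winsize=2))
```

Applied to the summed gradient profiles, this leaves isolated spikes at candidate grid-line positions.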

| 41 |

+

|

| 42 |

+

def findChessboardCorners(img_arr_gray, noise_threshold = 8000):

|

| 43 |

+

# Takes a grayscale image as a numpy array

|

| 44 |

+

# Return None on failure to find a chessboard

|

| 45 |

+

#

|

| 46 |

+

# noise_threshold: Ratio of standard deviation of hough values along an axis

|

| 47 |

+

# versus the number of pixels, manually measured bad trigger images

|

| 48 |

+

# at < 5,000 and good chessboards values at > 10,000

|

| 49 |

+

|

| 50 |

+

# Get gradients, split into positive and inverted negative components

|

| 51 |

+

gx, gy = np.gradient(img_arr_gray)

|

| 52 |

+

gx_pos = gx.copy()

|

| 53 |

+

gx_pos[gx_pos<0] = 0

|

| 54 |

+

gx_neg = -gx.copy()

|

| 55 |

+

gx_neg[gx_neg<0] = 0

|

| 56 |

+

|

| 57 |

+

gy_pos = gy.copy()

|

| 58 |

+

gy_pos[gy_pos<0] = 0

|

| 59 |

+

gy_neg = -gy.copy()

|

| 60 |

+

gy_neg[gy_neg<0] = 0

|

| 61 |

+

|

| 62 |

+

# 1-D amplitude of hough transform of gradients about X & Y axes

|

| 63 |

+

num_px = img_arr_gray.shape[0] * img_arr_gray.shape[1]

|

| 64 |

+

hough_gx = gx_pos.sum(axis=1) * gx_neg.sum(axis=1)

|

| 65 |

+

hough_gy = gy_pos.sum(axis=0) * gy_neg.sum(axis=0)

|

| 66 |

+

|

| 67 |

+

# Check that gradient peak signal is strong enough by

|

| 68 |

+

# comparing normalized standard deviation to threshold

|

| 69 |

+

if min(hough_gx.std() / hough_gx.size,

|

| 70 |

+

hough_gy.std() / hough_gy.size) < noise_threshold:

|

| 71 |

+

return None

|

| 72 |

+

|

| 73 |

+

# Normalize and skeletonize to just local peaks

|

| 74 |

+

hough_gx = nonmax_suppress_1d(hough_gx) / hough_gx.max()

|

| 75 |

+

hough_gy = nonmax_suppress_1d(hough_gy) / hough_gy.max()

|

| 76 |

+

|

| 77 |

+

# Arbitrary threshold of 20% of max

|

| 78 |

+

hough_gx[hough_gx<0.2] = 0

|

| 79 |

+

hough_gy[hough_gy<0.2] = 0

|

| 80 |

+

|

| 81 |

+

# Now we have a set of potential vertical and horizontal lines that

|

| 82 |

+

# may contain some noisy readings, try different subsets of them with

|

| 83 |

+

# consistent spacing until we get a set of 7, choose strongest set of 7

|

| 84 |

+

pot_lines_x = np.where(hough_gx)[0]

|

| 85 |

+

pot_lines_y = np.where(hough_gy)[0]

|

| 86 |

+

pot_lines_x_vals = hough_gx[pot_lines_x]

|

| 87 |

+

pot_lines_y_vals = hough_gy[pot_lines_y]

|

| 88 |

+

|

| 89 |

+

# Get all possible length 7+ sequences

|

| 90 |

+

seqs_x = getAllSequences(pot_lines_x)

|

| 91 |

+

seqs_y = getAllSequences(pot_lines_y)

|

| 92 |

+

|

| 93 |

+

if len(seqs_x) == 0 or len(seqs_y) == 0:

|

| 94 |

+

return None

|

| 95 |

+

|

| 96 |

+

# Score sequences by the strength of their hough peaks

|

| 97 |

+

seqs_x_vals = [pot_lines_x_vals[[v in seq for v in pot_lines_x]] for seq in seqs_x]

|

| 98 |

+

seqs_y_vals = [pot_lines_y_vals[[v in seq for v in pot_lines_y]] for seq in seqs_y]

|

| 99 |

+

|

| 100 |

+

# shorten sequences to up to 9 values based on score

|

| 101 |

+

# X sequences

|

| 102 |

+

for i in range(len(seqs_x)):

|

| 103 |

+

seq = seqs_x[i]

|

| 104 |

+

seq_val = seqs_x_vals[i]

|

| 105 |

+

|

| 106 |

+

# if the length of sequence is more than 7 + edges = 9

|

| 107 |

+

# strip weakest edges

|

| 108 |

+

if len(seq) > 9:

|

| 109 |

+

# while not inner 7 chess lines, strip weakest edges

|

| 110 |

+

while len(seq) > 7:

|

| 111 |

+

if seq_val[0] > seq_val[-1]:

|

| 112 |

+

seq = seq[:-1]

|

| 113 |

+

seq_val = seq_val[:-1]

|

| 114 |

+

else:

|

| 115 |

+

seq = seq[1:]

|

| 116 |

+

seq_val = seq_val[1:]

|

| 117 |

+

|

| 118 |

+

seqs_x[i] = seq

|

| 119 |

+

seqs_x_vals[i] = seq_val

|

| 120 |

+

|

| 121 |

+

# Y sequences

|

| 122 |

+

for i in range(len(seqs_y)):

|

| 123 |

+

seq = seqs_y[i]

|

| 124 |

+

seq_val = seqs_y_vals[i]

|

| 125 |

+

|

| 126 |

+

while len(seq) > 9:

|

| 127 |

+

if seq_val[0] > seq_val[-1]:

|

| 128 |

+

seq = seq[:-1]

|

| 129 |

+

seq_val = seq_val[:-1]

|

| 130 |

+

else:

|

| 131 |

+

seq = seq[1:]

|

| 132 |

+

seq_val = seq_val[1:]

|

| 133 |

+

|

| 134 |

+

seqs_y[i] = seq

|

| 135 |

+

seqs_y_vals[i] = seq_val

|

| 136 |

+

|

| 137 |

+

# Now that we only have length 7-9 sequences, score and choose the best one

|

| 138 |

+

scores_x = np.array([np.mean(v) for v in seqs_x_vals])

|

| 139 |

+

scores_y = np.array([np.mean(v) for v in seqs_y_vals])

|

| 140 |

+

|

| 141 |

+

# Keep first sequence with the largest step size

|

| 142 |

+

# scores_x = np.array([np.median(np.diff(s)) for s in seqs_x])

|

| 143 |

+

# scores_y = np.array([np.median(np.diff(s)) for s in seqs_y])

|

| 144 |

+

|

| 145 |

+

#TODO(elucidation): Choose heuristic score between step size and hough response

|

| 146 |

+

|

| 147 |

+

best_seq_x = seqs_x[scores_x.argmax()]

|

| 148 |

+

best_seq_y = seqs_y[scores_y.argmax()]

|

| 149 |

+

# print(best_seq_x, best_seq_y)

|

| 150 |

+

|

| 151 |

+

# Now if we have sequences greater than length 7, (up to 9),

|

| 152 |

+

# that means we have up to 9 possible combinations of sets of 7 sequences

|

| 153 |

+

# We try all of them and see which has the best checkerboard response

|

| 154 |

+

sub_seqs_x = [best_seq_x[k:k+7] for k in range(len(best_seq_x) - 7 + 1)]

|

| 155 |

+

sub_seqs_y = [best_seq_y[k:k+7] for k in range(len(best_seq_y) - 7 + 1)]

|

| 156 |

+

|

| 157 |

+

dx = np.median(np.diff(best_seq_x))

|

| 158 |

+

dy = np.median(np.diff(best_seq_y))

|

| 159 |

+

corners = np.zeros(4, dtype=int)

|

| 160 |

+

|

| 161 |

+

# Add 1 buffer to include the outer tiles, since sequences are only using

|

| 162 |

+

# inner chessboard lines

|

| 163 |

+

corners[0] = int(best_seq_y[0]-dy)

|

| 164 |

+

corners[1] = int(best_seq_x[0]-dx)

|

| 165 |

+

corners[2] = int(best_seq_y[-1]+dy)

|

| 166 |

+

corners[3] = int(best_seq_x[-1]+dx)

|

| 167 |

+

|

| 168 |

+

# Generate crop image from the full sequence, which may be wider than a normal

|

| 169 |

+

# chessboard by an extra 2 tiles, we'll iterate over all combinations

|

| 170 |

+

# (up to 9) and choose the one that correlates best with a chessboard

|

| 171 |

+

gray_img_crop = PIL.Image.fromarray(img_arr_gray).crop(corners)

|

| 172 |

+

|

| 173 |

+

# Build a kernel image of an ideal chessboard to correlate against

|

| 174 |

+

k = 8 # Arbitrarily chose 8x8 pixel tiles for correlation image

|

| 175 |

+

quad = np.ones([k,k])

|

| 176 |

+

kernel = np.vstack([np.hstack([quad,-quad]), np.hstack([-quad,quad])])

|

| 177 |

+

kernel = np.tile(kernel,(4,4)) # Becomes an 8x8 alternating grid (chessboard)

|

| 178 |

+

kernel = kernel/np.linalg.norm(kernel) # normalize

|

| 179 |

+

# 8*8 = 64x64 pixel ideal chessboard

|

| 180 |

+

|

| 181 |

+

k = 0

|

| 182 |

+

n = max(len(sub_seqs_x), len(sub_seqs_y))

|

| 183 |

+

final_corners = None

|

| 184 |

+

best_score = None

|

| 185 |

+

|

| 186 |

+

# Iterate over all possible combinations of sub sequences and keep the corners

|

| 187 |

+

# with the best correlation response to the ideal 64x64px chessboard

|

| 188 |

+

for i in range(len(sub_seqs_x)):

|

| 189 |

+

for j in range(len(sub_seqs_y)):

|

| 190 |

+

k = k + 1

|

| 191 |

+

|

| 192 |

+

# [y, x, y, x]

|

| 193 |

+

sub_corners = np.array([

|

| 194 |

+

sub_seqs_y[j][0]-corners[0]-dy, sub_seqs_x[i][0]-corners[1]-dx,

|

| 195 |

+

sub_seqs_y[j][-1]-corners[0]+dy, sub_seqs_x[i][-1]-corners[1]+dx],

|

| 196 |

+

dtype=int)

|

| 197 |

+

|

| 198 |

+

# Generate crop candidate, nearest pixel is fine for correlation check

|

| 199 |

+

sub_img = gray_img_crop.crop(sub_corners).resize((64,64))

|

| 200 |

+

|

| 201 |

+

# Perform correlation score, keep running best corners as our final output

|

| 202 |

+

# Use absolute since it's possible board is rotated 90 deg

|

| 203 |

+

score = np.abs(np.sum(kernel * sub_img))

|

| 204 |

+

if best_score is None or score > best_score:

|

| 205 |

+

best_score = score

|

| 206 |

+

final_corners = sub_corners + [corners[0], corners[1], corners[0], corners[1]]

|

| 207 |

+

|

| 208 |

+

return final_corners

|
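
The final selection step in `findChessboardCorners` scores each candidate crop against an ideal checkerboard. The sketch below reproduces only that scoring idea on synthetic data; `ideal_chessboard_kernel` and `checkerboard_score` are hypothetical helper names, and the 64x64 inputs stand in for the resized crops.

```python
import numpy as np


def ideal_chessboard_kernel(k=8):
    """Return a normalized (8k x 8k) kernel of alternating +1/-1 tiles."""
    quad = np.ones((k, k))
    # 2x2 block of tiles: [+ -; - +]
    block = np.vstack([np.hstack([quad, -quad]), np.hstack([-quad, quad])])
    kernel = np.tile(block, (4, 4))  # 8x8 tiles in total
    return kernel / np.linalg.norm(kernel)


def checkerboard_score(img, kernel):
    """Absolute correlation, so a board with swapped colors scores the same."""
    return float(np.abs(np.sum(kernel * np.asarray(img, dtype=float))))


if __name__ == "__main__":
    kernel = ideal_chessboard_kernel()
    board = (kernel > 0).astype(float)     # synthetic perfect board
    flat = np.full(kernel.shape, 0.5)      # featureless gray image
    print(checkerboard_score(board, kernel), checkerboard_score(flat, kernel))
```

Because the kernel sums to zero, a uniform image scores zero regardless of its brightness, while a crop aligned with the tile grid scores highest.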

| 209 |

+

|

| 210 |

+

def getAllSequences(seq, min_seq_len=7, err_px=5):

|

| 211 |

+

"""Given sequence of increasing numbers, get all sequences with common

|

| 212 |

+

spacing (within err_px) that contain at least min_seq_len values"""

|

| 213 |

+

|

| 214 |

+

# Sanity check that there are enough values to satisfy

|

| 215 |

+

if len(seq) < min_seq_len:

|

| 216 |

+

return []

|

| 217 |

+

|

| 218 |

+

# For every value, take the next value and see how many times we can step

|

| 219 |

+

# that falls on another value within err_px points

|

| 220 |

+

seqs = []

|

| 221 |

+

for i in range(len(seq)-1):

|

| 222 |

+

for j in range(i+1, len(seq)):

|

| 223 |

+

# Check that seq[i], seq[j] not already in previous sequences

|

| 224 |

+

duplicate = False

|

| 225 |

+

for prev_seq in seqs:

|

| 226 |

+

for k in range(len(prev_seq)-1):

|

| 227 |

+

if seq[i] == prev_seq[k] and seq[j] == prev_seq[k+1]:

|

| 228 |

+

duplicate = True

|

| 229 |

+

if duplicate:

|

| 230 |

+

continue

|

| 231 |

+

d = seq[j] - seq[i]

|

| 232 |

+

|

| 233 |

+

# Ignore two points that are within error bounds of each other

|

| 234 |

+

if d < err_px:

|

| 235 |

+

continue

|

| 236 |

+

|

| 237 |

+

s = [seq[i], seq[j]]

|

| 238 |

+

n = s[-1] + d

|

| 239 |

+

while np.abs((seq-n)).min() < err_px:

|

| 240 |

+

n = seq[np.abs((seq-n)).argmin()]

|

| 241 |

+

s.append(n)

|

| 242 |

+

n = s[-1] + d

|

| 243 |

+

|

| 244 |

+

if len(s) >= min_seq_len:

|

| 245 |

+

s = np.array(s)

|

| 246 |

+

seqs.append(s)

|

| 247 |

+

return seqs

|
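
To see what `getAllSequences` is looking for, it helps to run the same stepping idea on a small list of line positions. This is a condensed sketch rather than a drop-in replacement: `evenly_spaced_runs` is a hypothetical name, and the duplicate check is written with `any` instead of nested loops.

```python
import numpy as np


def evenly_spaced_runs(positions, min_len=7, err_px=5):
    """Find runs of positions with a common spacing (within err_px)
    that contain at least min_len values."""
    positions = np.asarray(positions)
    runs = []
    for i in range(len(positions) - 1):
        for j in range(i + 1, len(positions)):
            a, b = positions[i], positions[j]
            # Skip pairs that already appear back to back in an earlier run
            if any(r[k] == a and r[k + 1] == b
                   for r in runs for k in range(len(r) - 1)):
                continue
            step = b - a
            if step < err_px:
                continue
            run = [a, b]
            target = run[-1] + step
            # Keep stepping while some position lies within err_px of the target
            while np.abs(positions - target).min() < err_px:
                run.append(positions[np.abs(positions - target).argmin()])
                target = run[-1] + step
            if len(run) >= min_len:
                runs.append(np.array(run))
    return runs


if __name__ == "__main__":
    # Seven grid lines 40 px apart plus one stray line at 400
    lines = [10, 50, 90, 130, 170, 210, 250, 400]
    print([r.tolist() for r in evenly_spaced_runs(lines)])
```

The stray line is ignored because no step size links it into a run of seven or more.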

| 248 |

+

|

| 249 |

+

def getChessTilesColor(img, corners):

|

| 250 |

+

# img is a color RGB image

|

| 251 |

+

# corners = (x0, y0, x1, y1) for top-left corner to bot-right corner of board

|

| 252 |

+

height, width, depth = img.shape

|

| 253 |

+

if depth !=3:

|

| 254 |

+

print("Need RGB color image input")

|

| 255 |

+

return None

|

| 256 |

+

|

| 257 |

+

# corners could be outside image bounds, pad image as needed

|

| 258 |

+

padl_x = max(0, -corners[0])

|

| 259 |

+

padl_y = max(0, -corners[1])

|

| 260 |

+

padr_x = max(0, corners[2] - width)

|

| 261 |

+

padr_y = max(0, corners[3] - height)

|

| 262 |

+

|

| 263 |

+

img_padded = np.pad(img, ((padl_y,padr_y),(padl_x,padr_x), (0,0)), mode='edge')

|

| 264 |

+

|

| 265 |

+

chessboard_img = img_padded[

|

| 266 |

+

(padl_y + corners[1]):(padl_y + corners[3]),

|

| 267 |

+

(padl_x + corners[0]):(padl_x + corners[2]), :]

|

| 268 |

+

|

| 269 |

+

# 256x256 px RGB image, 32x32px individual RGB tiles, normalized 0-1 floats

|

| 270 |

+

chessboard_img_resized = np.asarray( \

|

| 271 |

+

PIL.Image.fromarray(chessboard_img) \

|

| 272 |

+

.resize([256,256], PIL.Image.BILINEAR), dtype=np.float32) / 255.0

|

| 273 |

+

|

| 274 |

+

# stack deep 64 tiles with 3 channels RGB each

|

| 275 |

+

# so, first 3 slabs are RGB for tile A1, then next 3 slabs for tile B1 etc.

|

| 276 |

+

tiles = np.zeros([32,32,3*64], dtype=np.float32) # color

|

| 277 |

+

# Assume A1 is bottom left of image, need to reverse rank since images start

|

| 278 |

+

# with origin in top left

|

| 279 |

+

for rank in range(8): # rows (numbers)

|

| 280 |

+

for file in range(8): # columns (letters)

|

| 281 |

+

# color

|

| 282 |

+

tiles[:,:,3*(rank*8+file):3*(rank*8+file+1)] = \

|

| 283 |

+

chessboard_img_resized[(7-rank)*32:((7-rank)+1)*32,file*32:(file+1)*32]

|

| 284 |

+

|

| 285 |

+

return tiles

|

| 286 |

+

|

| 287 |

+

def getChessBoardGray(img, corners):

|

| 288 |

+

# img is a grayscale image

|

| 289 |

+

# corners = (x0, y0, x1, y1) for top-left corner to bot-right corner of board

|

| 290 |

+

height, width = img.shape

|

| 291 |

+

|

| 292 |

+

# corners could be outside image bounds, pad image as needed

|

| 293 |

+

padl_x = max(0, -corners[0])

|

| 294 |

+

padl_y = max(0, -corners[1])

|

| 295 |

+

padr_x = max(0, corners[2] - width)

|

| 296 |

+

padr_y = max(0, corners[3] - height)

|

| 297 |

+

|

| 298 |

+

img_padded = np.pad(img, ((padl_y,padr_y),(padl_x,padr_x)), mode='edge')

|

| 299 |

+

|

| 300 |

+

chessboard_img = img_padded[

|

| 301 |

+

(padl_y + corners[1]):(padl_y + corners[3]),

|

| 302 |

+

(padl_x + corners[0]):(padl_x + corners[2])]

|

| 303 |

+

|

| 304 |

+

# 256x256 px image, 32x32px individual tiles

|

| 305 |

+

# Normalized

|

| 306 |

+

chessboard_img_resized = np.asarray( \

|

| 307 |

+

PIL.Image.fromarray(chessboard_img) \

|

| 308 |

+

.resize([256,256], PIL.Image.BILINEAR), dtype=np.uint8) / 255.0

|

| 309 |

+

return chessboard_img_resized

|

| 310 |

+

|

| 311 |

+

def getChessTilesGray(img, corners):

|

| 312 |

+

chessboard_img_resized = getChessBoardGray(img, corners)

|

| 313 |

+

return getTiles(chessboard_img_resized)

|

| 314 |

+

|

| 315 |

+

|

| 316 |

+

def getTiles(processed_gray_img):

|

| 317 |

+

# Given 256x256 px normalized grayscale image of a chessboard (32x32px per tile)

|

| 318 |

+

# NOTE (values must be in range 0-1)

|

| 319 |

+

# Return a 32x32x64 tile array

|

| 320 |

+

#

|

| 321 |

+

# stack deep 64 tiles

|

| 322 |

+

# so, first slab is tile A1, then B1 etc.

|

| 323 |

+

tiles = np.zeros([32,32,64], dtype=np.float32) # grayscale

|

| 324 |

+

# Assume A1 is bottom left of image, need to reverse rank since images start

|

| 325 |

+

# with origin in top left

|

| 326 |

+

for rank in range(8): # rows (numbers)

|

| 327 |

+

for file in range(8): # columns (letters)

|

| 328 |

+

tiles[:,:,(rank*8+file)] = \

|

| 329 |

+

processed_gray_img[(7-rank)*32:((7-rank)+1)*32,file*32:(file+1)*32]

|

| 330 |

+

|

| 331 |

+

return tiles

|
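
The tile indexing used by `getTiles` can be checked on a synthetic board. The sketch below assumes a 256x256 array with A1 in the bottom-left corner, as the comments above state; `split_into_tiles` is a hypothetical name for an equivalent loop.

```python
import numpy as np


def split_into_tiles(board):
    """Split a 256x256 board image into a 32x32x64 stack of tiles.

    Tile index is rank * 8 + file, so index 0 is A1, 1 is B1, 8 is A2, 63 is H8.
    """
    tiles = np.zeros((32, 32, 64), dtype=np.float32)
    for rank in range(8):        # rows (numbers), rank 0 is the bottom row
        for file in range(8):    # columns (letters), file 0 is the left column
            row = (7 - rank) * 32  # image rows start at the top, so flip the rank
            col = file * 32
            tiles[:, :, rank * 8 + file] = board[row:row + 32, col:col + 32]
    return tiles


if __name__ == "__main__":
    board = np.zeros((256, 256), dtype=np.float32)
    board[224:256, 0:32] = 1.0   # mark A1 (bottom-left)
    board[0:32, 224:256] = 0.5   # mark H8 (top-right)
    tiles = split_into_tiles(board)
    print(tiles[:, :, 0].mean(), tiles[:, :, 63].mean())
```

Marking two corner squares makes it easy to confirm that the rank flip maps the bottom image row to rank 1.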

| 332 |

+

|

| 333 |

+

def findGrayscaleTilesInImage(img):

|

| 334 |

+

""" Find chessboard and convert into input tiles for CNN """

|

| 335 |

+

if img is None:

|

| 336 |

+

return None, None

|

| 337 |

+

|

| 338 |

+

# Convert to grayscale numpy array

|

| 339 |

+

img_arr = np.asarray(img.convert("L"), dtype=np.float32)

|

| 340 |

+

|

| 341 |

+

# Use computer vision to find orthorectified chessboard corners in image

|

| 342 |

+

corners = findChessboardCorners(img_arr)

|

| 343 |

+

if corners is None:

|

| 344 |

+

return None, None

|

| 345 |

+

|

| 346 |

+

# Pull grayscale tiles out given image and chessboard corners

|

| 347 |

+

tiles = getChessTilesGray(img_arr, corners)

|

| 348 |

+

|

| 349 |

+

# Return both the tiles as well as chessboard corner locations in the image

|

| 350 |

+

return tiles, corners

|

| 351 |

+

|

| 352 |

+

# DEBUG

|

| 353 |

+

# from matplotlib import pyplot as plt

|

| 354 |

+

# def plotTiles(tiles):

|

| 355 |

+

# """Plot color or grayscale tiles as 8x8 subplots"""

|

| 356 |

+

# plt.figure(figsize=(6,6))

|

| 357 |

+

# files = "ABCDEFGH"

|

| 358 |

+

# for rank in range(8): # rows (numbers)

|

| 359 |

+

# for file in range(8): # columns (letters)

|

| 360 |

+

# plt.subplot(8,8,(7-rank)*8 + file + 1) # Plot rank reverse order to match image

|

| 361 |

+

|

| 362 |

+

# if tiles.shape[2] == 64:

|

| 363 |

+

# # Grayscale

|

| 364 |

+

# tile = tiles[:,:,(rank*8+file)] # grayscale

|

| 365 |

+

# plt.imshow(tile, interpolation='None', cmap='gray', vmin = 0, vmax = 1)

|

| 366 |

+

# else:

|

| 367 |

+

# #Color

|

| 368 |

+

# tile = tiles[:,:,3*(rank*8+file):3*(rank*8+file+1)] # color

|

| 369 |

+

# plt.imshow(tile, interpolation='None',)

|

| 370 |

+

|

| 371 |

+

# plt.axis('off')

|

| 372 |

+

# plt.title('%s %d' % (files[file], rank+1), fontsize=6)

|

| 373 |

+

# plt.show()

|

| 374 |

+

|

| 375 |

+

def main(url):

|

| 376 |

+

print("Loading url %s..." % url)

|

| 377 |

+

color_img, url = loadImageFromURL(url)

|

| 378 |

+

|

| 379 |

+

# Fail if can't load image

|

| 380 |

+

if color_img is None:

|

| 381 |

+

print('Couldn\'t load url: %s' % url)

|

| 382 |

+

return

|

| 383 |

+

|

| 384 |

+

if color_img.mode != 'RGB':

|

| 385 |

+

color_img = color_img.convert('RGB')

|

| 386 |

+

print("Processing...")

|

| 387 |

+

a = time()

|

| 388 |

+

img_arr = np.asarray(color_img.convert("L"), dtype=np.float32)

|

| 389 |

+

corners = findChessboardCorners(img_arr)

|

| 390 |

+

print("Took %.4fs" % (time()-a))

|

| 391 |

+

# corners = [x0, y0, x1, y1] where (x0,y0)

|

| 392 |

+

# is top left and (x1,y1) is bottom right

|

| 393 |

+

|

| 394 |

+

if corners is not None:

|

| 395 |

+

print("\tFound corners for %s: %s" % (url, corners))

|

| 396 |

+

link = getVisualizeLink(corners, url)

|

| 397 |

+

print(link)

|

| 398 |

+

|

| 399 |

+

# tiles = getChessTilesColor(np.array(color_img), corners)

|

| 400 |

+

# tiles = getChessTilesGray(img_arr, corners)

|

| 401 |

+

# plotTiles(tiles)

|

| 402 |

+

|

| 403 |

+

# plt.imshow(color_img, interpolation='none')

|

| 404 |

+

# plt.plot(corners[[0,0,2,2,0]]-0.5, corners[[1,3,3,1,1]]-0.5, color='red', linewidth=1)

|

| 405 |

+

# plt.show()

|

| 406 |

+

else:

|

| 407 |

+

print('\tNo corners found in image')

|

| 408 |

+

|

| 409 |

+

if __name__ == '__main__':

|

| 410 |

+

np.set_printoptions(suppress=True, precision=2)

|

| 411 |

+

parser = argparse.ArgumentParser(description='Find orthorectified chessboard corners in image')

|

| 412 |

+

parser.add_argument('urls', default=['https://i.redd.it/1uw3h772r0fy.png'],

|

| 413 |

+

metavar='urls', type=str, nargs='*', help='Input image urls')

|

| 414 |

+

# main('http://www.chessanytime.com/img/jeudirect/simplechess.png')

|

| 415 |

+

# main('https://i.imgur.com/JpzfV3y.jpg')

|

| 416 |

+

# main('https://i.imgur.com/jsCKzU9.jpg')

|

| 417 |

+

# main('https://i.imgur.com/49htmMA.png')

|

| 418 |

+

# main('https://i.imgur.com/HHdHGBX.png')

|

| 419 |

+

# main('http://imgur.com/By2xJkO')

|

| 420 |

+

# main('http://imgur.com/p8DJMly')

|

| 421 |

+

# main('https://i.imgur.com/Ns0iBrw.jpg')

|

| 422 |

+

# main('https://i.imgur.com/KLcCiuk.jpg')

|

| 423 |

+

args = parser.parse_args()

|

| 424 |

+

for url in args.urls:

|

| 425 |

+

main(url)

|

| 426 |

+

|
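The tile-slicing loop in `chessboard_finder.py` above maps a board image onto 64 tiles in A1..H8 order, flipping the rank index because image rows count down from the top. The following is a minimal sketch of that indexing only, assuming the board has already been cropped and resized to 256x256 grayscale; `split_board_into_tiles` and the synthetic `board` are hypothetical names used for illustration, not part of the repository.

```python
import numpy as np


def split_board_into_tiles(board, tile_px=32):
    """Split a square grayscale board into 64 tiles, ordered A1, B1, ..., H8.

    Hypothetical helper: assumes `board` is exactly (8*tile_px, 8*tile_px).
    """
    assert board.shape == (8 * tile_px, 8 * tile_px)
    tiles = np.zeros((tile_px, tile_px, 64), dtype=board.dtype)
    for rank in range(8):        # rows (numbers), rank 0 is rank "1"
        for file in range(8):    # columns (letters), file 0 is file "A"
            row = 7 - rank       # image origin is top left, so flip the rank
            tiles[:, :, rank * 8 + file] = board[
                row * tile_px:(row + 1) * tile_px,
                file * tile_px:(file + 1) * tile_px,
            ]
    return tiles


# Synthetic board: every 32x32 square is filled with its image-space
# index (row * 8 + col), so the mapping is easy to inspect.
board = np.kron(
    np.arange(64, dtype=np.float32).reshape(8, 8),
    np.ones((32, 32), dtype=np.float32),
)
tiles = split_board_into_tiles(board)
```

With this synthetic input, tile 0 (A1) comes from the bottom-left square of the image and tile 63 (H8) from the top-right square, which is the orientation the comment in the diff describes.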

chessfenbot/chessbot.py

ADDED

|

@@ -0,0 +1,165 @@

|

| 1 |

+

#!/usr/bin/env python

|

| 2 |

+

# ChessFenBot daemon

|

| 3 |

+

# Finds submissions with chessboard images in them,

|

| 4 |

+

# uses a TensorFlow convolutional neural network to predict pieces, and returns

|

| 5 |

+

# a lichess analysis link and FEN diagram of chessboard

|

| 6 |

+

# Run with --dry to dry run without actual submissions

|

| 7 |

+

from __future__ import print_function

|

| 8 |

+

import praw

|

| 9 |

+

import requests

|

| 10 |

+

import socket

|

| 11 |

+

import time

|

| 12 |

+

from datetime import datetime

|

| 13 |

+

import argparse

|

| 14 |

+

|

| 15 |

+

import tensorflow_chessbot # For neural network model

|

| 16 |

+

from helper_functions_chessbot import *

|

| 17 |

+

from helper_functions import shortenFEN

|

| 18 |

+

from cfb_helpers import * # logging, comment waiting and self-reply helpers

|

| 19 |

+

|

| 20 |

+

def generateResponseMessage(submission, predictor):

|

| 21 |

+

print("\n---\nImage URL: %s" % submission.url)

|

| 22 |

+

|

| 23 |

+

# Use CNN to make a prediction

|

| 24 |

+

fen, certainty, visualize_link = predictor.makePrediction(submission.url)

|

| 25 |

+

|

| 26 |

+

if fen is None:

|

| 27 |

+

print("> %s - Couldn't generate FEN, skipping..." % datetime.now())

|

| 28 |

+

print("\n---\n")

|

| 29 |

+

return None

|

| 30 |

+

|

| 31 |

+

fen = shortenFEN(fen) # ex. '111pq11r' -> '3pq2r'

|

| 32 |

+

print("Predicted FEN: %s" % fen)

|

| 33 |

+

print("Certainty: %.4f%%" % (certainty*100))

|

| 34 |

+

|

| 35 |

+

# Get side from title or fen

|

| 36 |

+

side = getSideToPlay(submission.title, fen)

|

| 37 |

+

# Generate response message

|

| 38 |

+

msg = generateMessage(fen, certainty, side, visualize_link)

|

| 39 |

+

print("fen: %s\nside: %s\n" % (fen, side))

|

| 40 |

+

return msg

|

| 41 |

+

|
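`generateResponseMessage` above relies on `shortenFEN` to compress runs of empty squares, as in its inline example `'111pq11r' -> '3pq2r'`. The real implementation lives in `helper_functions.py` and is not shown in this part of the diff. The sketch below is one possible way to get the same result for that example; `shorten_fen` is a hypothetical name, and it assumes empty squares are encoded as the character `1`.

```python
import re


def shorten_fen(fen):
    """Collapse each run of '1' characters into a single digit count.

    Hypothetical stand-in for the repository's shortenFEN. Ranks are
    separated by '/', so a run never spans more than 8 squares.
    """
    return re.sub(r"1+", lambda m: str(len(m.group(0))), fen)
```

For example, `shorten_fen('111pq11r')` gives `'3pq2r'`, matching the comment in the diff.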

| 42 |

+

|

| 43 |

+

def processSubmission(submission, cfb, predictor, args, reply_wait_time=10):

|

| 44 |

+

# Check if submission passes requirements and wasn't already replied to

|

| 45 |

+

if isPotentialChessboardTopic(submission):

|

| 46 |

+

if not previouslyRepliedTo(submission, cfb):

|

| 47 |

+

# Generate response

|

| 48 |

+

response = generateResponseMessage(submission, predictor)

|

| 49 |

+

if response is None:

|

| 50 |

+

logMessage(submission,"[NO-FEN]") # Skip since couldn't generate FEN

|

| 51 |

+

return

|

| 52 |

+

|

| 53 |

+

# Reply to submission with response

|

| 54 |

+

if not args.dry:

|

| 55 |

+

logMessage(submission,"[REPLIED]")

|

| 56 |

+

submission.reply(response)

|

| 57 |

+

else:

|

| 58 |

+

logMessage(submission,"[DRY-RUN-REPLIED]")

|

| 59 |

+

|

| 60 |

+

# Wait after submitting to not overload

|

| 61 |

+

waitWithComments(reply_wait_time)

|

| 62 |

+

else:

|

| 63 |

+

logMessage(submission,"[SKIP]") # Skip since replied to already

|

| 64 |

+

|

| 65 |

+

else:

|

| 66 |

+

logMessage(submission)

|

| 67 |

+

time.sleep(1) # Wait a second between normal submissions

|

| 68 |

+

|

| 69 |

+

def main(args):

|

| 70 |

+

resetTensorflowGraph()

|

| 71 |

+

running = True

|

| 72 |

+

reddit = praw.Reddit('CFB') # client credentials set up in local praw.ini file

|

| 73 |

+

cfb = reddit.user.me() # ChessFenBot object

|

| 74 |

+

subreddit = reddit.subreddit('chess+chessbeginners+AnarchyChess+betterchess+chesspuzzles')

|

| 75 |

+

predictor = tensorflow_chessbot.ChessboardPredictor()

|

| 76 |

+

|

| 77 |

+

while running:

|

| 78 |

+

# Start live stream on all submissions in the subreddit

|

| 79 |

+

stream = subreddit.stream.submissions()

|

| 80 |

+

try:

|

| 81 |

+

for submission in stream:

|

| 82 |

+

processSubmission(submission, cfb, predictor, args)

|

| 83 |

+

except (socket.error, requests.exceptions.ReadTimeout,

|

| 84 |

+

requests.packages.urllib3.exceptions.ReadTimeoutError,

|

| 85 |

+

requests.exceptions.ConnectionError) as e:

|

| 86 |

+

print(

|

| 87 |

+

"> %s - Connection error, skipping and continuing in 30 seconds: %s" % (

|

| 88 |

+

datetime.now(), e))

|

| 89 |

+

time.sleep(30)

|

| 90 |

+

continue

|

| 91 |

+

except Exception as e:

|

| 92 |

+

print("Unknown Error, skipping and continuing in 30 seconds:",e)

|

| 93 |

+

time.sleep(30)

|

| 94 |

+

continue

|

| 95 |

+

except KeyboardInterrupt:

|

| 96 |

+

print("Keyboard Interrupt: Exiting...")

|

| 97 |

+

running = False

|

| 98 |

+

break

|

| 99 |

+

|

| 100 |

+

predictor.close()

|

| 101 |

+

print('Finished')

|

| 102 |

+

|

| 103 |

+

def resetTensorflowGraph():

|

| 104 |

+

"""WIP needed to restart predictor after an error"""

|

| 105 |

+

import tensorflow as tf

|

| 106 |

+

print('Reset TF graph')

|

| 107 |

+

tf.reset_default_graph() # clear out graph

|

| 108 |

+

|

| 109 |

+

def runSpecificSubmission(args):

|

| 110 |

+

resetTensorflowGraph()

|

| 111 |

+

reddit = praw.Reddit('CFB') # client credentials set up in local praw.ini file

|

| 112 |

+

cfb = reddit.user.me() # ChessFenBot object

|

| 113 |

+

predictor = tensorflow_chessbot.ChessboardPredictor()

|

| 114 |

+

|

| 115 |

+

submission = reddit.submission(args.sub)

|

| 116 |

+

print("URL: ", submission.url)

|

| 117 |

+

if submission:

|

| 118 |

+

print('Processing...')

|

| 119 |

+

processSubmission(submission, cfb, predictor, args)

|

| 120 |

+

|

| 121 |

+

predictor.close()

|

| 122 |

+

print('Done')

|

| 123 |

+

|

| 124 |

+

def dryRunTest(submission='5tuerh'):

|

| 125 |

+

resetTensorflowGraph()

|

| 126 |

+

reddit = praw.Reddit('CFB') # client credentials set up in local praw.ini file

|

| 127 |

+

predictor = tensorflow_chessbot.ChessboardPredictor()

|

| 128 |

+

|

| 129 |

+

# Use a specific submission

|

| 130 |

+

submission = reddit.submission(submission)

|

| 131 |

+

print('Loading %s' % submission.id)

|

| 132 |

+

# Check if submission passes requirements and wasn't already replied to

|

| 133 |

+

if isPotentialChessboardTopic(submission):

|

| 134 |

+

# Generate response

|

| 135 |

+

response = generateResponseMessage(submission, predictor)

|

| 136 |

+

print("RESPONSE:\n")

|

| 137 |

+

print('-----------------------------')

|

| 138 |

+

print(response)

|

| 139 |

+

print('-----------------------------')

|

| 140 |

+

else:

|

| 141 |

+

print('Submission not considered chessboard topic')

|

| 142 |

+

|

| 143 |

+

predictor.close()

|

| 144 |

+

print('Finished')

|

| 145 |

+

|

| 146 |

+

|

| 147 |

+

|

| 148 |

+

if __name__ == '__main__':

|

| 149 |

+

parser = argparse.ArgumentParser()

|

| 150 |

+

parser.add_argument('--dry', help='dry run (don\'t actually submit replies)',

|

| 151 |

+

action="store_true", default=False)

|

| 152 |

+

parser.add_argument('--test', help='dry run test on pre-existing comment',

|

| 153 |

+

action="store_true", default=False)

|

| 154 |

+

parser.add_argument('--sub', help='Pass submission string to process')

|

| 155 |

+

args = parser.parse_args()

|

| 156 |

+

if args.test:

|

| 157 |

+

print('Doing dry run test on submission')

|

| 158 |

+

if args.sub:

|

| 159 |

+

dryRunTest(args.sub)

|

| 160 |

+

else:

|

| 161 |

+

dryRunTest()

|

| 162 |

+

elif args.sub is not None:

|

| 163 |

+

runSpecificSubmission(args)

|

| 164 |

+

else:

|

| 165 |

+

main(args)

|
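The `main` loop in `chessbot.py` above restarts the submission stream after a connection error, sleeping 30 seconds between attempts. A minimal sketch of that restart pattern, without any Reddit dependency, could look like this. `run_with_retries`, `make_stream`, `handle` and `max_restarts` are hypothetical names introduced for illustration. Unlike the original, the sketch returns when the stream ends normally.

```python
import time


def run_with_retries(make_stream, handle, retry_delay=30.0,
                     max_restarts=None, sleep=time.sleep):
    """Consume a stream, recreating it after an error.

    Hypothetical helper. `make_stream` returns a fresh iterable on each
    call; `handle` processes one item. KeyboardInterrupt is not a subclass
    of Exception, so Ctrl-C still propagates to the caller.
    Returns the number of restarts once the stream ends normally.
    """
    restarts = 0
    while True:
        try:
            for item in make_stream():
                handle(item)
            return restarts
        except Exception as exc:
            restarts += 1
            if max_restarts is not None and restarts > max_restarts:
                raise
            print("Error, retrying in %.0f seconds: %s" % (retry_delay, exc))
            sleep(retry_delay)
```

A recreated stream may yield items that were already seen, so the handler should be safe to call twice on the same item. In the bot above, the `previouslyRepliedTo` check plays that role.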

chessfenbot/dataset.py

ADDED

|

@@ -0,0 +1,61 @@

|

| 1 |

+

import tensorflow as tf
import numpy as np  # needed below for np.float32, np.multiply, np.arange and np.random.shuffle

|

| 2 |

+

# From https://tensorflow.googlesource.com/tensorflow/+/master/tensorflow/examples/tutorials/mnist/input_data.py

|

| 3 |

+

class DataSet(object):

|

| 4 |

+

def __init__(self, images, labels, dtype=tf.float32):

|

| 5 |

+

"""Construct a DataSet.

|

| 6 |

+

`dtype` can be either

|

| 7 |

+

`uint8` to leave the input as `[0, 255]`, or `float32` to rescale into

|

| 8 |

+

`[0, 1]`.

|

| 9 |

+

"""

|

| 10 |

+

dtype = tf.as_dtype(dtype).base_dtype

|

| 11 |

+

|

| 12 |

+

if dtype not in (tf.uint8, tf.float32):

|

| 13 |

+

raise TypeError('Invalid image dtype %r, expected uint8 or float32' %

|

| 14 |

+

dtype)

|

| 15 |

+

assert images.shape[0] == labels.shape[0], (

|

| 16 |

+

'images.shape: %s labels.shape: %s' % (images.shape,

|

| 17 |

+

labels.shape))

|

| 18 |

+

self._num_examples = images.shape[0]

|

| 19 |

+

# Convert shape from [num examples, rows, columns, depth]

|

| 20 |

+

# to [num examples, rows*columns] (assuming depth == 1)

|

| 21 |

+

assert images.shape[3] == 1

|

| 22 |

+

images = images.reshape(images.shape[0], images.shape[1] * images.shape[2])

|

| 23 |

+

if dtype == tf.float32:

|

| 24 |

+

# Convert from [0, 255] -> [0.0, 1.0].

|

| 25 |

+

images = images.astype(np.float32)

|

| 26 |

+

images = np.multiply(images, 1.0 / 255.0)

|

| 27 |

+

|

| 28 |

+

self._images = images

|

| 29 |

+

self._labels = labels

|

| 30 |

+

self._epochs_completed = 0

|

| 31 |

+

self._index_in_epoch = 0

|

| 32 |

+

@property

|

| 33 |

+

def images(self):

|

| 34 |

+

return self._images

|

| 35 |

+

@property

|

| 36 |

+

def labels(self):

|

| 37 |

+

return self._labels

|

| 38 |

+

@property

|

| 39 |

+

def num_examples(self):

|

| 40 |

+

return self._num_examples

|

| 41 |

+

@property

|

| 42 |

+

def epochs_completed(self):

|

| 43 |

+

return self._epochs_completed

|

| 44 |

+

def next_batch(self, batch_size):

|

| 45 |

+

"""Return the next `batch_size` examples from this data set."""

|

| 46 |

+

start = self._index_in_epoch

|

| 47 |

+

self._index_in_epoch += batch_size

|

| 48 |

+

if self._index_in_epoch > self._num_examples:

|

| 49 |

+

# Finished epoch

|

| 50 |

+

self._epochs_completed += 1

|

| 51 |

+

# Shuffle the data

|

| 52 |

+

perm = np.arange(self._num_examples)

|

| 53 |

+

np.random.shuffle(perm)

|

| 54 |

+

self._images = self._images[perm]

|

| 55 |

+

self._labels = self._labels[perm]

|

| 56 |

+