Commit 733c188

Parent(s): dca9a73

Upload 21 files

- 00_Introduction_Computational_Graphs.ipynb +456 -0

- 01_PyTorch_Hello_World.ipynb +490 -0

- 02_A_Gentle_Introduction_to_PyTorch.ipynb +0 -0

- 03_Pytorch_Logistic_Regression_from_Scratch.ipynb +0 -0

- 04_Concise_Logistic_Regression.ipynb +718 -0

- 05_First_Neural_Network.ipynb +421 -0

- 06_Neural_Network_from_Scratch.ipynb +432 -0

- 07_bow.ipynb +365 -0

- 08_cbow.ipynb +286 -0

- 09_deep_cbow.ipynb +285 -0

- 10_Introduction_to_GNNs_with_PyTorch_Geometric.ipynb +1064 -0

- 11_RoBERTa_Fine_Tuning_Emotion_classification.ipynb +0 -0

- 12_Text_Classification_Attention_Positional_Embeddings.ipynb +707 -0

- 13_Siamese_Network.ipynb +0 -0

- 14_Variational_Auto_Encoder.ipynb +0 -0

- 15_Object_Detection_Sliding_Window.ipynb +0 -0

- 16_Object_Detection_Selective_Search.ipynb +0 -0

- 17_Attention_Is_All_You_Need.ipynb +0 -0

- 18_Feature_Tokenizer_Transformer.ipynb +0 -0

- 19_counterfactual_explanations.ipynb +618 -0

- 20_Named_Emtity_Recognition_Transformers.ipynb +0 -0

00_Introduction_Computational_Graphs.ipynb

ADDED

@@ -0,0 +1,456 @@

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "raw",

|

| 5 |

+

"metadata": {},

|

| 6 |

+

"source": [

|

| 7 |

+

"---\n",

|

| 8 |

+

"title: 01 Introduction to Computational Graphs\n",

|

| 9 |

+

"description: A basic tutorial to learn about computational graphs\n",

|

| 10 |

+

"---"

|

| 11 |

+

]

|

| 12 |

+

},

|

| 13 |

+

{

|

| 14 |

+

"cell_type": "markdown",

|

| 15 |

+

"metadata": {},

|

| 16 |

+

"source": [

|

| 17 |

+

"<a href=\"https://colab.research.google.com/drive/1eG1AF36Wa0EaANandAhrsbC3j04487SH?usp=sharing\" target=\"_blank\"><img align=\"left\" alt=\"Colab\" title=\"Open in Colab\" src=\"https://colab.research.google.com/assets/colab-badge.svg\"></a>"

|

| 18 |

+

]

|

| 19 |

+

},

|

| 20 |

+

{

|

| 21 |

+

"cell_type": "markdown",

|

| 22 |

+

"metadata": {

|

| 23 |

+

"id": "_MbzfbWoqAaR"

|

| 24 |

+

},

|

| 25 |

+

"source": [

|

| 26 |

+

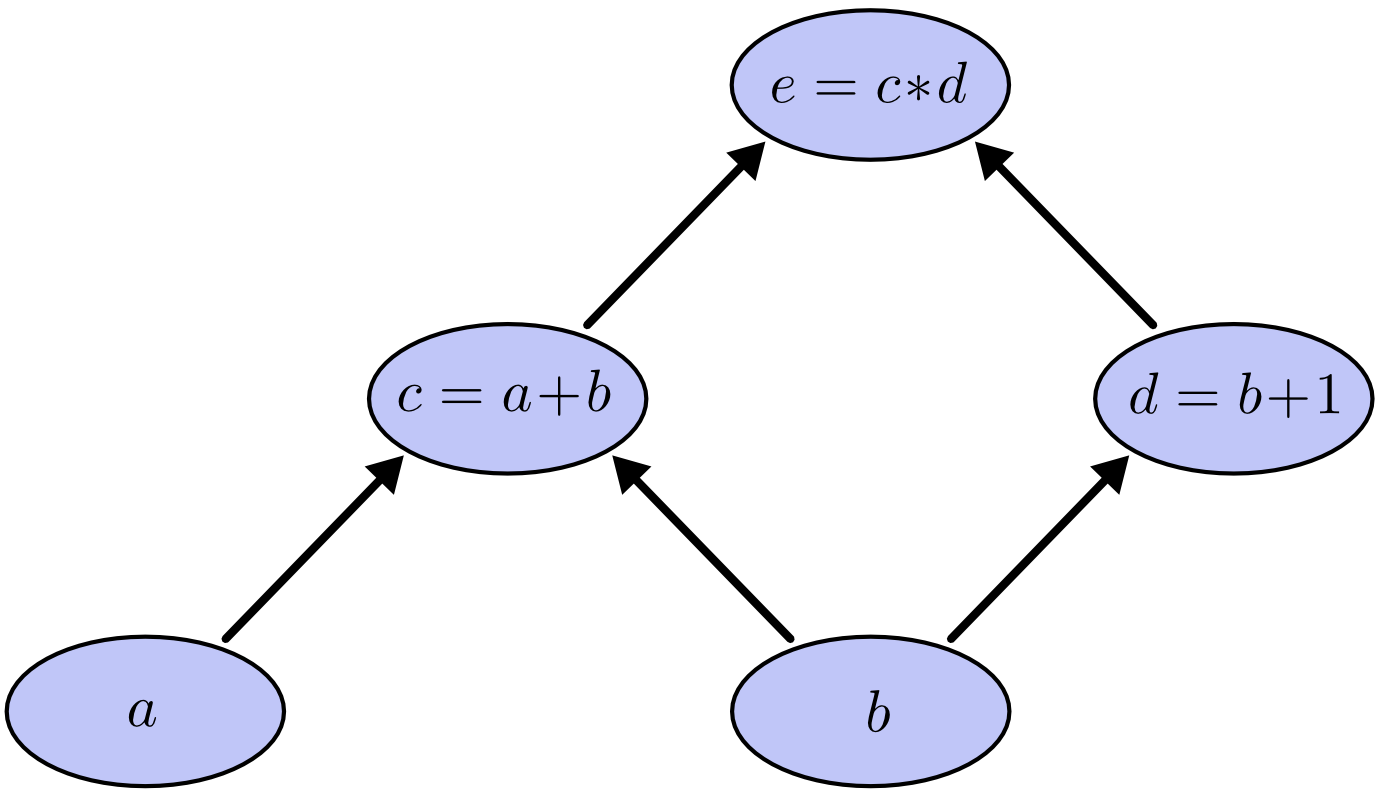

"## Introduction to Computational Graphs with PyTorch\n",

|

| 27 |

+

"\n",

|

| 28 |

+

"by [Elvis Saravia](https://twitter.com/omarsar0)\n",

|

| 29 |

+

"\n",

|

| 30 |

+

"\n",

|

| 31 |

+

"In this notebook we provide a short introduction and overview of computational graphs using PyTorch.\n",

|

| 32 |

+

"\n",

|

| 33 |

+

"There are several materials online that cover theory on the topic of computational graphs. However, I think it's much easier to learn the concept using code. I attempt to bridge the gap here which should be useful for beginner students. \n",

|

| 34 |

+

"\n",

|

| 35 |

+

"Inspired by Olah's article [\"Calculus on Computational Graphs: Backpropagation\"](https://colah.github.io/posts/2015-08-Backprop/), I've put together a few code snippets to get you started with computationsl graphs with PyTorch. This notebook should complement that article, so refer to it for more comprehensive explanations. In fact, I've tried to simplify the explanations and refer to them here."

|

| 36 |

+

]

|

| 37 |

+

},

|

| 38 |

+

{

|

| 39 |

+

"cell_type": "markdown",

|

| 40 |

+

"metadata": {

|

| 41 |

+

"id": "IGzBSo7H6xKu"

|

| 42 |

+

},

|

| 43 |

+

"source": [

|

| 44 |

+

"### Why Computational Graphs?"

|

| 45 |

+

]

|

| 46 |

+

},

|

| 47 |

+

{

|

| 48 |

+

"cell_type": "markdown",

|

| 49 |

+

"metadata": {

|

| 50 |

+

"id": "lkFMbiPDrGIp"

|

| 51 |

+

},

|

| 52 |

+

"source": [

|

| 53 |

+

"When talking about neural networks in any context, [backpropagation](https://en.wikipedia.org/wiki/Backpropagation) is an important topic to understand because it is the algorithm used for training deep neural networks. \n",

|

| 54 |

+

"\n",

|

| 55 |

+

"Backpropagation is used to calculate derivatives which is what you need to keep optimizing parameters of the model and allowing the model to learn on the task at hand. \n",

|

| 56 |

+

"\n",

|

| 57 |

+

"Many of the deep learning frameworks today like PyTorch does the backpropagation out-of-the-box using [**automatic differentiation**](https://pytorch.org/tutorials/beginner/blitz/autograd_tutorial.html). \n",

|

| 58 |

+

"\n",

|

| 59 |

+

"To better understand how this is done it's important to talk about **computational graphs** which defines the flow of computations that are carried out throughout the network. Along the way we will use `torch.autograd` to demonstrate in code how this works. "

|

| 60 |

+

]

|

| 61 |

+

},

|

| 62 |

+

{

|

| 63 |

+

"cell_type": "markdown",

|

| 64 |

+

"metadata": {

|

| 65 |

+

"id": "YXjsI50-sMAa"

|

| 66 |

+

},

|

| 67 |

+

"source": [

|

| 68 |

+

"### Getting Started\n",

|

| 69 |

+

"\n",

|

| 70 |

+

"Inspired by Olah's article on computational graphs, let's look at the following expression $e = (a + b) * (b+1)$. It helps to break it down to the following operations:\n",

|

| 71 |

+

"\n",

|

| 72 |

+

"$$\n",

|

| 73 |

+

"\\begin{aligned}&c=a+b \\\\&d=b+1 \\\\&e=c * d\\end{aligned}\n",

|

| 74 |

+

"$$"

|

| 75 |

+

]

|

| 76 |

+

},

|

| 77 |

+

{

|

| 78 |

+

"cell_type": "markdown",

|

| 79 |

+

"metadata": {

|

| 80 |

+

"id": "s0EG6DhnsnTm"

|

| 81 |

+

},

|

| 82 |

+

"source": [

|

| 83 |

+

"This is not a neural network of any sort. We are just going through a very simple example of a chain of operations which you can be represented with computational graphs. \n",

|

| 84 |

+

"\n",

|

| 85 |

+

"Let's visualize these operations using a computational graph. Computational graphs contain **nodes** which can represent and input (tensor, matrix, vector, scalar) or **operation** that can be the input to another node. The nodes are connected by **edges**, which represent a function argument, they are pointers to nodes. Note that the computation graphs are directed and acyclic. The computational graph for our example looks as follows:\n",

|

| 86 |

+

"\n",

|

| 87 |

+

"\n",

|

| 88 |

+

"\n",

|

| 89 |

+

"*Source: Christopher Olah (2015)*"

|

| 90 |

+

]

|

| 91 |

+

},
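The nodes-and-edges description can be mirrored in a few lines of plain Python. This is a minimal sketch under my own assumptions, not how PyTorch stores its graph: the dictionary layout and the names `graph`, `OPS` and `evaluate` are hypothetical, and the constant `1` is modeled as an extra input node called `one`. Each non-input node lists its operation and the nodes it points to (its edges), and evaluation follows those edges recursively.

```python
# Hypothetical representation of e = (a + b) * (b + 1) as a directed acyclic graph.
# Each non-input node maps to (operation name, list of argument nodes = its edges).
graph = {
    "c": ("add", ["a", "b"]),
    "d": ("add", ["b", "one"]),
    "e": ("mul", ["c", "d"]),
}

# The operations a node can perform.
OPS = {
    "add": lambda x, y: x + y,
    "mul": lambda x, y: x * y,
}


def evaluate(graph, inputs):
    """Compute every node's value, starting from the given input values."""
    values = dict(inputs)

    def value_of(name):
        # Inputs are already known; other nodes are computed from their arguments once and cached.
        if name not in values:
            op, args = graph[name]
            values[name] = OPS[op](*(value_of(arg) for arg in args))
        return values[name]

    for node in graph:
        value_of(node)
    return values


values = evaluate(graph, {"a": 2, "b": 1, "one": 1})
print(values["c"], values["d"], values["e"])  # 3 2 6
```

Because the graph is acyclic, the recursion in `value_of` always terminates, and each node is computed only once and cached in `values`.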

|

| 92 |

+

{

|

| 93 |

+

"cell_type": "markdown",

|

| 94 |

+

"metadata": {

|

| 95 |

+

"id": "m9VvF4CVtW0s"

|

| 96 |

+

},

|

| 97 |

+

"source": [

|

| 98 |

+

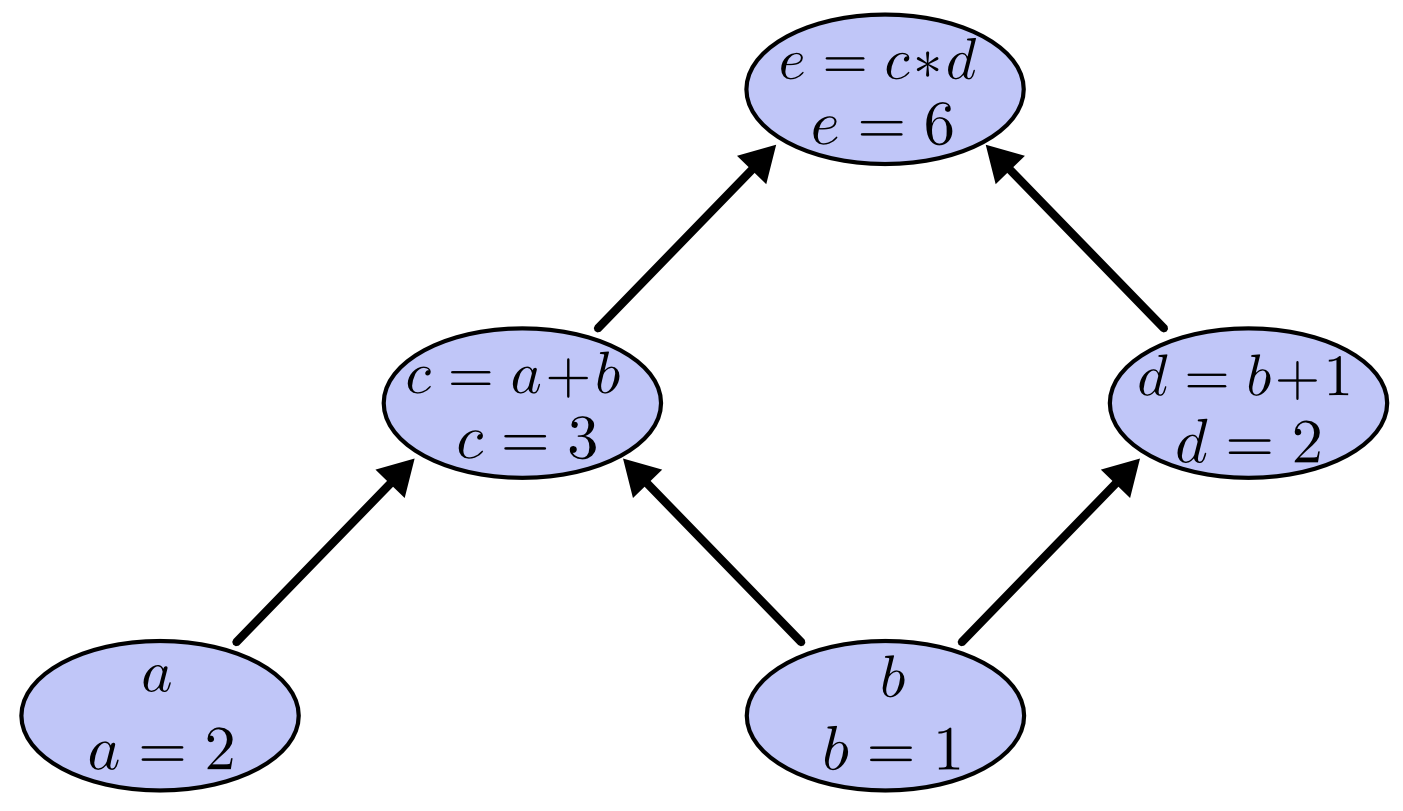

"We can evaluate the expression by setting our input variables as follows: $a=2$ and $b=1$. This will allow us to compute nodes up through the graph as shown in the computational graph above. \n",

|

| 99 |

+

"\n",

|

| 100 |

+

"Rather than doing this by hand, we can use the automatic differentation engine provided by PyTorch. \n",

|

| 101 |

+

"\n",

|

| 102 |

+

"Let's import PyTorch first:"

|

| 103 |

+

]

|

| 104 |

+

},

|

| 105 |

+

{

|

| 106 |

+

"cell_type": "code",

|

| 107 |

+

"execution_count": null,

|

| 108 |

+

"metadata": {

|

| 109 |

+

"id": "YuD6zdWZp7DP"

|

| 110 |

+

},

|

| 111 |

+

"outputs": [],

|

| 112 |

+

"source": [

|

| 113 |

+

"import torch"

|

| 114 |

+

]

|

| 115 |

+

},

|

| 116 |

+

{

|

| 117 |

+

"cell_type": "markdown",

|

| 118 |

+

"metadata": {

|

| 119 |

+

"id": "b7EKlMrouClt"

|

| 120 |

+

},

|

| 121 |

+

"source": [

|

| 122 |

+

"Define the inputs like this:"

|

| 123 |

+

]

|

| 124 |

+

},

|

| 125 |

+

{

|

| 126 |

+

"cell_type": "code",

|

| 127 |

+

"execution_count": null,

|

| 128 |

+

"metadata": {

|

| 129 |

+

"id": "OZ2pB2A3uEQZ"

|

| 130 |

+

},

|

| 131 |

+

"outputs": [],

|

| 132 |

+

"source": [

|

| 133 |

+

"a = torch.tensor([2.], requires_grad=True)\n",

|

| 134 |

+

"b = torch.tensor([1.], requires_grad=True)"

|

| 135 |

+

]

|

| 136 |

+

},

|

| 137 |

+

{

|

| 138 |

+

"cell_type": "markdown",

|

| 139 |

+

"metadata": {

|

| 140 |

+

"id": "Zm6Xl05quGZL"

|

| 141 |

+

},

|

| 142 |

+

"source": [

|

| 143 |

+

"Note that we used `requires_grad=True` to tell the autograd engine that every operation on them should be tracked. \n",

|

| 144 |

+

"\n",

|

| 145 |

+

"These are the operations in code:"

|

| 146 |

+

]

|

| 147 |

+

},

|

| 148 |

+

{

|

| 149 |

+

"cell_type": "code",

|

| 150 |

+

"execution_count": null,

|

| 151 |

+

"metadata": {

|

| 152 |

+

"id": "XwXomBUxu1Ib"

|

| 153 |

+

},

|

| 154 |

+

"outputs": [],

|

| 155 |

+

"source": [

|

| 156 |

+

"c = a + b\n",

|

| 157 |

+

"d = b + 1\n",

|

| 158 |

+

"e = c * d\n",

|

| 159 |

+

"\n",

|

| 160 |

+

"# grads populated for non-leaf nodes\n",

|

| 161 |

+

"c.retain_grad()\n",

|

| 162 |

+

"d.retain_grad()\n",

|

| 163 |

+

"e.retain_grad()"

|

| 164 |

+

]

|

| 165 |

+

},
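PyTorch records the same graph while the operations above run. As a rough sketch, the graph can be inspected through the `is_leaf`, `grad_fn` and `grad_fn.next_functions` attributes of a tensor; the backward-node class names printed (such as `MulBackward0`) are internal names and may differ between PyTorch versions.

```python
import torch

a = torch.tensor([2.], requires_grad=True)
b = torch.tensor([1.], requires_grad=True)
c = a + b
d = b + 1
e = c * d

# Leaves are tensors created directly by the user; c, d and e are produced by operations.
print(a.is_leaf, b.is_leaf, c.is_leaf, e.is_leaf)  # True True False False

# Each non-leaf tensor points to the backward node of the operation that created it.
print(type(e.grad_fn).__name__)  # MulBackward0

# next_functions are the edges from that node back toward its inputs
# (here: one addition node for c and one for d).
print([type(fn).__name__ for fn, _ in e.grad_fn.next_functions])

# For c = a + b the edges end at the leaves, which autograd represents as AccumulateGrad nodes.
print([type(fn).__name__ for fn, _ in c.grad_fn.next_functions])  # ['AccumulateGrad', 'AccumulateGrad']
```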

  {
   "cell_type": "markdown",
   "metadata": {
    "id": "UzCLJvMku46r"
   },
   "source": [
    "Note that we used `.retain_grad()` to allow gradients to be stored for non-leaf nodes, as we are interested in inspecting those as well.\n",
    "\n",
    "Now that we have our computational graph, we can check the result of evaluating the expression:"
   ]
  },
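To see why `.retain_grad()` matters, here is a small contrast on a fresh graph built the same way, except that only `d` retains its gradient. After `backward()`, the leaf `a` and the retained `d` have gradients, while `c.grad` stays empty.

```python
import torch

a = torch.tensor([2.], requires_grad=True)
b = torch.tensor([1.], requires_grad=True)
c = a + b          # non-leaf; this time we do NOT call c.retain_grad()
d = b + 1
d.retain_grad()    # non-leaf whose gradient we do keep
e = c * d
e.backward()

print(a.grad)      # tensor([2.])  leaf tensors always receive .grad
print(d.grad)      # tensor([3.])  kept because of retain_grad()
print(c.grad)      # None (PyTorch also emits a UserWarning about reading .grad of a non-leaf)
```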

  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {
     "base_uri": "https://localhost:8080/"
    },
    "id": "4t-uhE6vvH2j",
    "outputId": "e834dbd0-0d8b-4123-d8fe-b9192aeaba9c"
   },
   "outputs": [
    {
     "name": "stdout",
     "output_type": "stream",
     "text": [
      "tensor([6.], grad_fn=<MulBackward0>)\n"
     ]
    }
   ],
   "source": [
    "print(e)"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "id": "5eWub17iwi2L"
   },
   "source": [
    "The output is a tensor with the value `6.`, which verifies the result shown here:\n",
    "\n",
    "\n",
    "*Source: Christopher Olah (2015)*"
   ]
  },
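Evaluating the graph by hand with $a=2$ and $b=1$, one node at a time, gives the same value:

$$
\begin{aligned}
c &= a + b = 2 + 1 = 3 \\
d &= b + 1 = 1 + 1 = 2 \\
e &= c \cdot d = 3 \cdot 2 = 6
\end{aligned}
$$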

|

| 212 |

+

{

|

| 213 |

+

"cell_type": "markdown",

|

| 214 |

+

"metadata": {

|

| 215 |

+

"id": "tjX3LCRmw22a"

|

| 216 |

+

},

|

| 217 |

+

"source": [

|

| 218 |

+

"### Derivatives on Computational Graphs\n",

|

| 219 |

+

"\n",

|

| 220 |

+

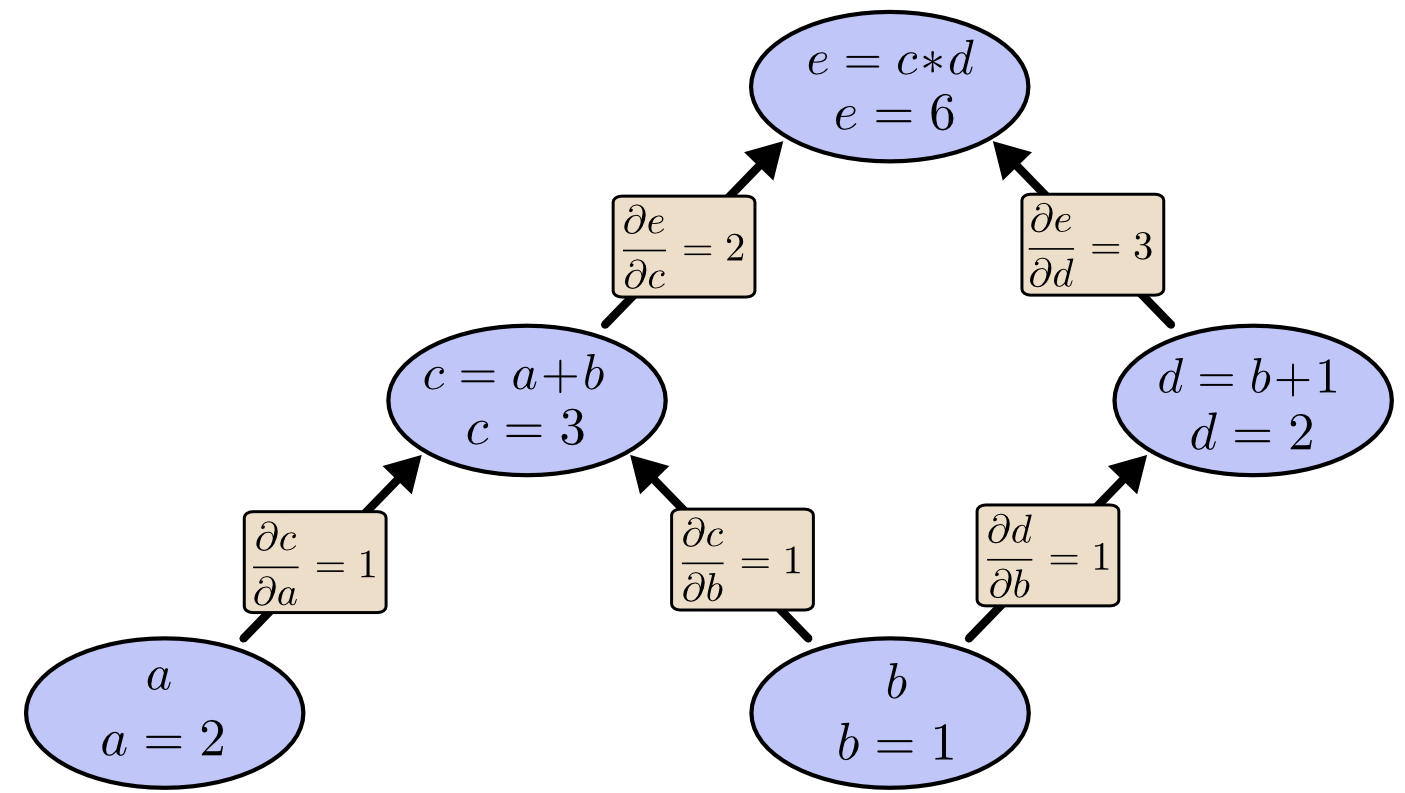

"Using the concept of computational graphs we are now interested in evaluating the **partial derivatives** of the edges of the graph. This will help in gathering the gradients of the graph. Remember that gradients are what we use to train the neural network and those calculations can be taken care of by the automatic differentation engine. \n",

|

| 221 |

+

"\n",

|

| 222 |

+

"The intuition is: we want to know, for example, if $a$ directly affects $c$, how does it affect it. In other words, if we change $a$ a little, how does $c$ change. This is referred to as the partial derivative of $c$ with respect to $a$.\n",

|

| 223 |

+

"\n",

|

| 224 |

+

"You can work this by hand, but the easy way to do this with PyTorch is by calling `.backward()` on $e$ and let the engine figure out the values. The `.backward()` signals the autograd engine to calculate the gradients and store them in the respective tensors’ `.grad` attribute.\n",

|

| 225 |

+

"\n",

|

| 226 |

+

"Let's do that now:"

|

| 227 |

+

]

|

| 228 |

+

},
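The partial derivative on each edge can also be queried individually. A sketch using `torch.autograd.grad` on a fresh copy of the graph; the variable names such as `de_dc` are just illustrative. `retain_graph=True` keeps the graph alive so it can be queried more than once.

```python
import torch

a = torch.tensor([2.], requires_grad=True)
b = torch.tensor([1.], requires_grad=True)
c = a + b
d = b + 1
e = c * d

# torch.autograd.grad returns d(output)/d(input) for each requested input.
de_dc, de_dd = torch.autograd.grad(e, (c, d), retain_graph=True)  # edges of e = c * d
dc_da, dc_db = torch.autograd.grad(c, (a, b), retain_graph=True)  # edges of c = a + b
(dd_db,) = torch.autograd.grad(d, (b,))                           # edge of d = b + 1

print(de_dc, de_dd)  # tensor([2.]) tensor([3.])  i.e. de/dc = d and de/dd = c
print(dc_da, dc_db)  # tensor([1.]) tensor([1.])
print(dd_db)         # tensor([1.])
```

Multiplying these edge derivatives along a path, and summing over paths, gives what `backward()` accumulates: $\frac{\partial e}{\partial a} = 2 \cdot 1 = 2$ and $\frac{\partial e}{\partial b} = 2 \cdot 1 + 3 \cdot 1 = 5$.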

  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "id": "Nc6lnO5yy1Cq"
   },
   "outputs": [],
   "source": [
    "e.backward()"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "id": "hxbtx6OCy3I8"
   },
   "source": [
    "Now, let’s say we are interested in the derivative of $e$ with respect to $a$. How do we obtain it? In other words, we are looking for $\\frac{\\partial e}{\\partial a}$."
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "id": "NvQcK9LTzD34"
   },
   "source": [
    "Using PyTorch, we can get this by reading `a.grad`:"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {
     "base_uri": "https://localhost:8080/"
    },
    "id": "5NWnWDg4zHDn",
    "outputId": "40cfe57c-23ee-4142-e62f-f7ef4b65fff0"
   },
   "outputs": [
    {
     "name": "stdout",
     "output_type": "stream",
     "text": [
      "tensor([2.])\n"
     ]
    }
   ],
   "source": [
    "print(a.grad)"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "id": "c05nEObzzbPn"
   },
   "source": [
    "It is important to understand the intuition behind this. Olah puts it best:\n",
    "\n",
    ">Let’s consider how $e$ is affected by $a$. If we change $a$ at a speed of 1, $c$ also changes at a speed of $1$. In turn, $c$ changing at a speed of $1$ causes $e$ to change at a speed of $2$. So $e$ changes at a rate of $1*2$ with respect to $a$.\n"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "id": "8xXLOU37BYOr"
   },
   "source": [
    "In other words, by hand this would be:\n",
    "\n",
    "$$\n",
    "\\frac{\\partial e}{\\partial a}=\\frac{\\partial e}{\\partial c} \\frac{\\partial c}{\\partial a} = 2 * 1\n",
    "$$"
   ]
  },
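Olah's "speed" intuition can be checked numerically without any autograd at all. A minimal sketch using a forward finite difference, assuming a nudge of `h = 1e-6` (an arbitrary small step): nudge one input, measure how much $e$ moves, and divide by the nudge.

```python
def e_of(a, b):
    """e = (a + b) * (b + 1), written as an ordinary Python function."""
    return (a + b) * (b + 1)


h = 1e-6            # size of the nudge; any small value works in this example
a0, b0 = 2.0, 1.0   # the same input values as above

# Forward difference: (change in e) / (change in the input).
de_da = (e_of(a0 + h, b0) - e_of(a0, b0)) / h
de_db = (e_of(a0, b0 + h) - e_of(a0, b0)) / h

print(round(de_da, 4), round(de_db, 4))  # 2.0 5.0
```

Both estimates agree with `a.grad` and `b.grad` up to a tiny error that shrinks with `h`.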

  {
   "cell_type": "markdown",
   "metadata": {
    "id": "A2iNJu6jzT5v"
   },
   "source": [
    "You can verify that this is correct by checking Olah's manual calculations. Since $a$ is not directly connected to $e$, we use the rule of summing over all paths from one node to the other in the computational graph, multiplying together the derivatives on each edge of a path.\n",
    "\n",
    "\n",
    "*Source: Christopher Olah (2015)*"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "id": "9uZE-Gl12cnB"
   },
   "source": [
    "To check that this holds, let's look at another example. How about calculating the derivative of $e$ with respect to $b$, i.e., $\\frac{\\partial e}{\\partial b}$?\n",
    "\n",
    "We can get that through `b.grad`:"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {
     "base_uri": "https://localhost:8080/"
    },
    "id": "2q11abV90d6i",
    "outputId": "11571cdc-7e55-43a9-931f-ec1ecf140efa"
   },
   "outputs": [
    {
     "name": "stdout",
     "output_type": "stream",
     "text": [
      "tensor([5.])\n"
     ]
    }
   ],
   "source": [
    "print(b.grad)"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "id": "2mGP1_iw0_ot"
   },
   "source": [
    "If you work it out by hand, you are basically doing the following:\n",
    "\n",
    "$$\n",
    "\\frac{\\partial e}{\\partial b}=1 * 2+1 * 3\n",
    "$$\n",
    "\n",
    "It indicates how $b$ affects $e$ through $c$ and $d$. We are essentially summing over paths in the computational graph."
   ]
  },
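The sum-over-paths rule is what reverse-mode automatic differentiation implements. The sketch below is a deliberately tiny scalar engine for illustration only; the `Value` class is hypothetical and not a PyTorch API. Each node stores the derivative of itself with respect to each parent (the edge derivatives), and `backward()` visits nodes in reverse topological order, adding `local * node.grad` into each parent.

```python
class Value:
    """A scalar node in a computational graph (hypothetical helper, not part of PyTorch)."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # nodes this value was computed from
        self._local_grads = local_grads  # d(self)/d(parent) for each parent: the edge derivatives

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def backward(self):
        # Post-order DFS: every node is listed after all of its inputs.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0  # de/de = 1
        # Reversed order: a node is processed only after every node that uses it.
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad  # accumulate one contribution per path


a = Value(2.0)
b = Value(1.0)
c = a + b
d = b + 1
e = c * d
e.backward()

print(a.grad, b.grad, c.grad, d.grad, e.grad)  # 2.0 5.0 2.0 3.0 1.0
```

The `+=` in `backward()` is where paths get summed: `b` feeds both `c` and `d`, so it receives $1 \cdot 2$ along one path and $1 \cdot 3$ along the other, matching `b.grad` from PyTorch.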

  {
   "cell_type": "markdown",
   "metadata": {
    "id": "sbJvhj5m13Zq"
   },
   "source": [
    "Here are all the gradients collected, including those of the non-leaf nodes:"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "metadata": {
    "colab": {
     "base_uri": "https://localhost:8080/"
    },
    "id": "vrUxwsrd3-f-",
    "outputId": "cc63c914-b2e4-43b9-8c43-dcd70975e8b0"
   },
   "outputs": [
    {
     "name": "stdout",
     "output_type": "stream",
     "text": [
      "tensor([2.]) tensor([5.]) tensor([2.]) tensor([3.]) tensor([1.])\n"
     ]
    }
   ],
   "source": [
    "print(a.grad, b.grad, c.grad, d.grad, e.grad)"
   ]
  },
  {
   "cell_type": "markdown",
   "metadata": {
    "id": "HftIH5Mx4Pdj"
   },
   "source": [
    "You can use the computational graph above to verify that everything is correct. This is the power of computational graphs and why automatic differentiation engines rely on them. It's also a very useful concept to understand when developing neural network architectures and checking their correctness."
   ]
  },
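As a further check of correctness, PyTorch ships `torch.autograd.gradcheck`, which compares the gradients computed by autograd against finite-difference estimates and returns `True` when they match (it raises an error otherwise). A minimal sketch for our expression; `gradcheck` expects double-precision inputs, so the tensors are created with `dtype=torch.float64`.

```python
import torch


def f(a, b):
    # Same expression as in this notebook: e = (a + b) * (b + 1)
    return (a + b) * (b + 1)


a = torch.tensor([2.], dtype=torch.float64, requires_grad=True)
b = torch.tensor([1.], dtype=torch.float64, requires_grad=True)

print(torch.autograd.gradcheck(f, (a, b)))  # True
```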

  {
   "cell_type": "markdown",
   "metadata": {
    "id": "DxyJDoMOs1gu"
   },
   "source": [
    "### Next Steps\n",
    "\n",
    "In this notebook, I've provided a simple and intuitive explanation of the concept of computational graphs using PyTorch. I highly recommend going through [Olah's article](https://colah.github.io/posts/2015-08-Backprop/) for more on the topic.\n",
    "\n",
    "In the next tutorial, I will apply the concept of computational graphs to more advanced operations you typically see in a neural network. If you are interested and already feel comfortable with the topic, you can check out these two PyTorch tutorials:\n",
    "\n",
    "- [A gentle introduction to `torch.autograd`](https://pytorch.org/tutorials/beginner/blitz/autograd_tutorial.html)\n",
    "- [Automatic differentiation with `torch.autograd`](https://pytorch.org/tutorials/beginner/basics/autogradqs_tutorial.html)\n",
    "\n",
    "And here are some other useful references used to put this article together:\n",
    "\n",
    "- [Hacker's guide to Neural Networks](http://karpathy.github.io/neuralnets/)\n",
    "- [Backpropagation calculus](https://www.youtube.com/watch?v=tIeHLnjs5U8&ab_channel=3Blue1Brown)\n",
    "\n"
   ]
  }
 ],
 "metadata": {
  "colab": {
   "name": "Introduction-Computational-Graphs.ipynb",
   "provenance": []
  },
  "kernelspec": {
   "display_name": "Python 3 (ipykernel)",
   "language": "python",
   "name": "python3"
  },
  "language_info": {
   "codemirror_mode": {
    "name": "ipython",
    "version": 3
   },
   "file_extension": ".py",
   "mimetype": "text/x-python",
   "name": "python",
   "nbconvert_exporter": "python",
   "pygments_lexer": "ipython3",
   "version": "3.9.12"
  }
 },
 "nbformat": 4,
 "nbformat_minor": 1
}

01_PyTorch_Hello_World.ipynb

ADDED

@@ -0,0 +1,490 @@

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "raw",

|

| 5 |

+

"metadata": {},

|

| 6 |

+

"source": [

|

| 7 |

+

"---\n",

|

| 8 |

+

"title: 02 PyTorch Hello World!\n",

|

| 9 |

+

"description: Build a simple neural network and train it\n",

|

| 10 |

+

"---"

|

| 11 |

+

]

|

| 12 |

+

},

|

| 13 |

+

{

|

| 14 |

+

"cell_type": "markdown",

|

| 15 |

+

"metadata": {},

|

| 16 |

+

"source": [

|

| 17 |

+

"<a href=\"https://colab.research.google.com/drive/1ac0K9_aa46c77XEeYtaMAfSOfmH1Bl9L?usp=sharing\" target=\"_blank\"><img align=\"left\" alt=\"Colab\" title=\"Open in Colab\" src=\"https://colab.research.google.com/assets/colab-badge.svg\"></a>"

|

| 18 |

+

]

|

| 19 |

+

},

|

| 20 |

+

{

|

| 21 |

+

"cell_type": "markdown",

|

| 22 |

+

"metadata": {

|

| 23 |

+

"id": "H7gQFbUxOQtb"

|

| 24 |

+

},

|

| 25 |

+

"source": [

|

| 26 |

+

"# A First Shot at Deep Learning with PyTorch\n",

|

| 27 |

+

"\n",

|

| 28 |

+

"In this notebook, we are going to take a baby step into the world of deep learning using PyTorch. There are a ton of notebooks out there that teach you the fundamentals of deep learning and PyTorch, so here the idea is to give you some basic introduction to deep learning and PyTorch at a very high level. Therefore, this notebook is targeting beginners but it can also serve as a review for more experienced developers.\n",

|

| 29 |

+

"\n",

|

| 30 |

+

"After completion of this notebook, you are expected to know the basic components of training a basic neural network with PyTorch. I have also left a couple of exercises towards the end with the intention of encouraging more research and practise of your deep learning skills. \n",

|

| 31 |

+

"\n",

|

| 32 |

+

"---\n",

|

| 33 |

+

"\n",

|

| 34 |

+

"**Author:** Elvis Saravia([Twitter](https://twitter.com/omarsar0) | [LinkedIn](https://www.linkedin.com/in/omarsar/))\n",

|

| 35 |

+

"\n",

|

| 36 |

+

"**Complete Code Walkthrough:** [Blog post](https://medium.com/dair-ai/a-first-shot-at-deep-learning-with-pytorch-4a8252d30c75)"

|

| 37 |

+

]

|

| 38 |

+

},

{
"cell_type": "markdown",
"metadata": {
"id": "CkzttrQCwaSQ"
},
"source": [
"## Importing the libraries\n",
"\n",
"Like with any other programming exercise, the first step is to import the necessary libraries. We are going to use Google Colab to program our neural network. PyTorch typically comes preinstalled there, so we only need to import the PyTorch modules we use."
]
},

{
"cell_type": "code",
"execution_count": null,
"metadata": {
"colab": {
"base_uri": "https://localhost:8080/"
},
"id": "FuhJIaeXO2W9",
"outputId": "bf494471-115e-45a8-c7cb-15a26f12154a"
},
"outputs": [
{
"name": "stdout",
"output_type": "stream",
"text": [
"1.10.0+cu111\n"
]
}
],
"source": [
"## The usual imports\n",
"import torch\n",
"import torch.nn as nn\n",
"\n",
"## print out the pytorch version used\n",
"print(torch.__version__)"
]
},

{
"cell_type": "markdown",
"metadata": {
"id": "0a2C_nneO_wp"
},
"source": [
"## The Neural Network\n",
"\n",
"\n",
"\n",
"Before building and training a neural network, the first step is to process and prepare the data. In this notebook, we use synthetic data (i.e., fake data) rather than any real-world data.\n",
"\n",
"For the sake of simplicity, we use the following input and output pairs converted to tensors, which is how data is typically represented in deep learning. The `x` values represent the input, of dimension `(6, 1)`, and the `y` values represent the output, of the same dimension. The example is taken from this [tutorial](https://github.com/lmoroney/dlaicourse/blob/master/Course%201%20-%20Part%202%20-%20Lesson%202%20-%20Notebook.ipynb).\n",
"\n",
"The objective of the neural network model that we are going to build and train is to automatically learn patterns that characterize the relationship between the `x` and `y` values (for this data, every pair satisfies `y = 2x - 1`). Essentially, the model learns the relationship between inputs and outputs, which can then be used to predict the corresponding `y` value for any given input `x`."
]
},

{
"cell_type": "code",
"execution_count": null,
"metadata": {
"id": "JWFtgUX85iwO"
},
"outputs": [],
"source": [
"## our data in tensor form\n",
"x = torch.tensor([[-1.0], [0.0], [1.0], [2.0], [3.0], [4.0]], dtype=torch.float)\n",
"y = torch.tensor([[-3.0], [-1.0], [1.0], [3.0], [5.0], [7.0]], dtype=torch.float)"
]
},
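As a quick sanity check on the data above, the sketch below (an addition, not part of the original notebook) confirms that every pair satisfies y = 2x - 1. It also computes the best fit that a model of the form y = wx (no bias) can reach, using the standard least-squares formula w* = sum(x*y) / sum(x*x). The names `fits_line`, `w_star` and `min_mse` are illustrative.

```python
import torch

# Same synthetic data as in the cell above
x = torch.tensor([[-1.0], [0.0], [1.0], [2.0], [3.0], [4.0]], dtype=torch.float)
y = torch.tensor([[-3.0], [-1.0], [1.0], [3.0], [5.0], [7.0]], dtype=torch.float)

# Every pair satisfies y = 2x - 1 exactly
fits_line = torch.equal(y, 2 * x - 1)

# Least-squares weight for a bias-free model y ~ w * x: w* = sum(x*y) / sum(x*x)
w_star = (x * y).sum() / (x * x).sum()
# Lowest mean squared error any bias-free single-weight model can reach here
min_mse = ((w_star * x - y) ** 2).mean()

print(fits_line)                 # True
print(round(w_star.item(), 4))   # 1.7097
print(round(min_mse.item(), 4))  # 0.5645
```

This minimum MSE of about 0.5645 is the floor for any model that has a single weight and no bias term on this data.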

{
"cell_type": "code",
"execution_count": null,
"metadata": {
"colab": {
"base_uri": "https://localhost:8080/"
},
"id": "NcQUjR_95z5J",
"outputId": "6db5df38-6f9d-454e-87d6-cee0c29dccb3"
},
"outputs": [
{
"data": {
"text/plain": [
"torch.Size([6, 1])"
]
},
"execution_count": 3,
"metadata": {},
"output_type": "execute_result"
}
],
"source": [
"## print size of the input tensor\n",
"x.size()"
]
},

{
"cell_type": "markdown",
"metadata": {
"id": "9CJXO5WX1QtQ"
},
"source": [
"## The Neural Network Components\n",
"We first define and build the components of our neural network, and then train the model.\n",
"\n",
"### Model\n",
"\n",
"Typically, when building a neural network model, we define the layers and weights that form its basic components. Below we define a single linear layer named `layer1`. It maps one input feature to one output feature, so its weight matrix has size `(1, 1)`. For the purpose of this tutorial, we won't define the `weights` explicitly. Instead, we let PyTorch create and initialize them for us. The `nn.Linear(...)` module applies a linear transformation ($y = xA^T + b$) to its input. We ignore the bias for now by setting `bias=False`, so the layer computes just $y = xA^T$.\n",
"\n",
"\n",
"\n"
]
},
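To make the formula concrete, here is a small sketch that checks two things. First, a bias-free `nn.Linear(1, 1)` gives the same output as multiplying the input by the transposed weight. Second, with the default `bias=True`, the bias is added to every row. The fixed seed is an assumption used only to make the run repeatable.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # assumption: fixed seed only to make the run repeatable

x = torch.tensor([[-1.0], [0.0], [1.0], [2.0], [3.0], [4.0]])

# Bias-free layer: weight A has shape (out_features, in_features) = (1, 1)
layer = nn.Linear(1, 1, bias=False)
no_bias_ok = torch.allclose(layer(x), x @ layer.weight.T)  # y = x A^T

# With the default bias=True, the bias vector b is added to every row
layer_b = nn.Linear(1, 1)
with_bias_ok = torch.allclose(layer_b(x), x @ layer_b.weight.T + layer_b.bias)  # y = x A^T + b

print(layer.weight.shape, no_bias_ok, with_bias_ok)  # torch.Size([1, 1]) True True
```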

{
"cell_type": "code",
"execution_count": null,
"metadata": {
"id": "N1Ii5JRz3Jud"
},
"outputs": [],
"source": [
"## Neural network with a single linear layer (no hidden layer)\n",
"layer1 = nn.Linear(1, 1, bias=False)\n",
"model = nn.Sequential(layer1)"
]
},
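The sketch below rebuilds the same model and inspects it, using the same toy input. It shows that the only trainable parameter is the automatically initialized 1x1 weight. For `nn.Linear`, PyTorch draws this weight uniformly from [-1/sqrt(in_features), 1/sqrt(in_features)], which is [-1, 1] here. It also shows that an `nn.Sequential` holding one module simply passes the input through that module.

```python
import torch
import torch.nn as nn

x = torch.tensor([[-1.0], [0.0], [1.0], [2.0], [3.0], [4.0]])

layer1 = nn.Linear(1, 1, bias=False)
model = nn.Sequential(layer1)

# The only trainable tensor is layer1's automatically initialized 1x1 weight
params = list(model.parameters())
print(len(params), params[0].shape)  # 1 torch.Size([1, 1])

# Random initial value, drawn from [-1, 1] because in_features == 1
print(params[0].item())

# A Sequential holding one module just passes the input through that module
with torch.no_grad():
    preds = model(x)
    same = torch.equal(preds, layer1(x))
print(preds.shape, same)  # torch.Size([6, 1]) True
```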

{
"cell_type": "markdown",
"metadata": {
"id": "9HTWYD4aMBXQ"
},
"source": [
"### Loss and Optimizer\n",
"The loss function, `nn.MSELoss()`, measures how well the model has learned the relationship between the input and output by computing the mean squared error between its predictions and the true `y` values. The optimizer's primary role (in this case, stochastic gradient descent, `SGD`) is to lower that loss value by tuning the model's weights."
]
},
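To see what the loss and optimizer compute, here is a hand check using a hypothetical starting weight of 0.5. It shows three things. First, `nn.MSELoss()` with its default `reduction='mean'` equals the average squared error. Second, the autograd gradient matches the analytic (2/N) * sum((w*x - y) * x). Third, one plain `torch.optim.SGD` step (no momentum or weight decay) is exactly w <- w - lr * grad.

```python
import torch
import torch.nn as nn

x = torch.tensor([[-1.0], [0.0], [1.0], [2.0], [3.0], [4.0]])
y = torch.tensor([[-3.0], [-1.0], [1.0], [3.0], [5.0], [7.0]])

# Hypothetical starting weight, shaped like nn.Linear(1, 1).weight
w = torch.tensor([[0.5]], requires_grad=True)
lr = 0.01

pred = x @ w.T  # same as a bias-free nn.Linear(1, 1) forward pass
loss = nn.MSELoss()(pred, y)

# MSELoss with the default reduction='mean' is the average squared error
loss_matches = torch.allclose(loss, ((pred - y) ** 2).mean())

loss.backward()
# Analytic gradient of the mean squared error w.r.t. w: (2/N) * sum((w*x - y) * x)
expected_grad = 2 * ((pred.detach() - y) * x).mean()
grad_matches = torch.allclose(w.grad, expected_grad.reshape(1, 1))

# One plain SGD step (no momentum, no weight decay): w <- w - lr * grad
optimizer = torch.optim.SGD([w], lr=lr)
w_before = w.detach().clone()
optimizer.step()
step_matches = torch.allclose(w.detach(), w_before - lr * w.grad)

print(loss_matches, grad_matches, step_matches)  # True True True
```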

{
"cell_type": "code",
"execution_count": null,
"metadata": {
"id": "3hglFpejArxx"
},
"outputs": [],
"source": [
"## loss function\n",
"criterion = nn.MSELoss()\n",
"\n",
"## optimizer algorithm\n",
"optimizer = torch.optim.SGD(model.parameters(), lr=0.01)"
]
},

{
"cell_type": "markdown",
"metadata": {
"id": "FKj6jvZTUtGh"
},
"source": [
"## Training the Neural Network Model\n",
"We now have all the components we need to train our model. The code below trains it.\n",
"\n",
"In simple terms, we train the model by repeatedly feeding it the input and output pairs over a number of rounds (i.e., epochs). After a series of forward and backward steps, the model learns an approximation of the relationship between the `x` and `y` values, which shows up as a decrease in the computed `loss`. Note that the loss levels off at about 0.5645 rather than reaching zero. The data follows `y = 2x - 1`, but with `bias=False` the model can only represent `y = wx`, so it cannot capture the `-1` offset. For a more detailed explanation of this code, check out this [tutorial](https://medium.com/dair-ai/a-simple-neural-network-from-scratch-with-pytorch-and-google-colab-c7f3830618e0)."
]
},
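The training cell's source is not shown in this section. The following is therefore only a minimal sketch of a typical full-batch training loop built from the components defined above. The epoch count (150), the seed, and the print interval are assumptions. Each iteration runs a forward pass, computes the MSE loss, clears old gradients, backpropagates, and takes one SGD step.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # assumption: seed chosen only for repeatability

x = torch.tensor([[-1.0], [0.0], [1.0], [2.0], [3.0], [4.0]])
y = torch.tensor([[-3.0], [-1.0], [1.0], [3.0], [5.0], [7.0]])

model = nn.Sequential(nn.Linear(1, 1, bias=False))
criterion = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

num_epochs = 150  # hypothetical; enough for this tiny problem to level off
losses = []
for epoch in range(num_epochs):
    pred = model(x)              # forward pass on the full batch
    loss = criterion(pred, y)    # mean squared error
    optimizer.zero_grad()        # clear gradients from the previous step
    loss.backward()              # backward pass: d(loss)/d(weight)
    optimizer.step()             # gradient descent update
    losses.append(loss.item())
    if epoch % 25 == 0:
        print(f"Epoch: {epoch} | Loss: {loss.item():.4f}")

# Learned weight settles near 53/31 (about 1.7097), not 2, because there is no bias
print(model[0].weight.item())
```

Without a bias term, the weight converges to the least-squares value for y = wx, and the loss approaches about 0.5645 instead of 0. Passing `bias=True` (the default) to `nn.Linear` should let the model approach y = 2x - 1 given enough epochs.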

{
"cell_type": "code",
"execution_count": null,
"metadata": {
"colab": {
"base_uri": "https://localhost:8080/"
},
"id": "JeOr9i-aBzRv",
"outputId": "299a0b60-a64c-46c4-d031-8aaf1cacbff9"
},
"outputs": [
{
"name": "stdout",
"output_type": "stream",
"text": [
"Epoch: 0 | Loss: 10.1346\n",
"Epoch: 1 | Loss: 8.2589\n",
"Epoch: 2 | Loss: 6.7509\n",
"Epoch: 3 | Loss: 5.5385\n",
"Epoch: 4 | Loss: 4.5636\n",
"Epoch: 5 | Loss: 3.7798\n",
"Epoch: 6 | Loss: 3.1497\n",
"Epoch: 7 | Loss: 2.6430\n",
"Epoch: 8 | Loss: 2.2356\n",
"Epoch: 9 | Loss: 1.9081\n",
"Epoch: 10 | Loss: 1.6448\n",
"Epoch: 11 | Loss: 1.4331\n",
"Epoch: 12 | Loss: 1.2628\n",
"Epoch: 13 | Loss: 1.1260\n",
"Epoch: 14 | Loss: 1.0159\n",
"Epoch: 15 | Loss: 0.9275\n",
"Epoch: 16 | Loss: 0.8563\n",
"Epoch: 17 | Loss: 0.7991\n",
"Epoch: 18 | Loss: 0.7532\n",
"Epoch: 19 | Loss: 0.7162\n",
"Epoch: 20 | Loss: 0.6865\n",
"Epoch: 21 | Loss: 0.6626\n",
"Epoch: 22 | Loss: 0.6433\n",
"Epoch: 23 | Loss: 0.6279\n",
"Epoch: 24 | Loss: 0.6155\n",
"Epoch: 25 | Loss: 0.6055\n",
"Epoch: 26 | Loss: 0.5975\n",
"Epoch: 27 | Loss: 0.5910\n",
"Epoch: 28 | Loss: 0.5858\n",
"Epoch: 29 | Loss: 0.5816\n",
"Epoch: 30 | Loss: 0.5783\n",
"Epoch: 31 | Loss: 0.5756\n",
"Epoch: 32 | Loss: 0.5734\n",
"Epoch: 33 | Loss: 0.5717\n",
"Epoch: 34 | Loss: 0.5703\n",
"Epoch: 35 | Loss: 0.5691\n",
"Epoch: 36 | Loss: 0.5682\n",
"Epoch: 37 | Loss: 0.5675\n",
"Epoch: 38 | Loss: 0.5669\n",
"Epoch: 39 | Loss: 0.5664\n",
"Epoch: 40 | Loss: 0.5661\n",
"Epoch: 41 | Loss: 0.5658\n",
"Epoch: 42 | Loss: 0.5655\n",
"Epoch: 43 | Loss: 0.5653\n",
"Epoch: 44 | Loss: 0.5652\n",
"Epoch: 45 | Loss: 0.5650\n",
"Epoch: 46 | Loss: 0.5649\n",
"Epoch: 47 | Loss: 0.5649\n",
"Epoch: 48 | Loss: 0.5648\n",
"Epoch: 49 | Loss: 0.5647\n",
"Epoch: 50 | Loss: 0.5647\n",
"Epoch: 51 | Loss: 0.5647\n",
"Epoch: 52 | Loss: 0.5646\n",
"Epoch: 53 | Loss: 0.5646\n",
"Epoch: 54 | Loss: 0.5646\n",
"Epoch: 55 | Loss: 0.5646\n",
"Epoch: 56 | Loss: 0.5646\n",
"Epoch: 57 | Loss: 0.5646\n",
"Epoch: 58 | Loss: 0.5645\n",
"Epoch: 59 | Loss: 0.5645\n",
"Epoch: 60 | Loss: 0.5645\n",
"Epoch: 61 | Loss: 0.5645\n",
"Epoch: 62 | Loss: 0.5645\n",
"Epoch: 63 | Loss: 0.5645\n",
"Epoch: 64 | Loss: 0.5645\n",
"Epoch: 65 | Loss: 0.5645\n",
"Epoch: 66 | Loss: 0.5645\n",
"Epoch: 67 | Loss: 0.5645\n",
"Epoch: 68 | Loss: 0.5645\n",
"Epoch: 69 | Loss: 0.5645\n",
"Epoch: 70 | Loss: 0.5645\n",
"Epoch: 71 | Loss: 0.5645\n",
"Epoch: 72 | Loss: 0.5645\n",
"Epoch: 73 | Loss: 0.5645\n",
"Epoch: 74 | Loss: 0.5645\n",
"Epoch: 75 | Loss: 0.5645\n",
"Epoch: 76 | Loss: 0.5645\n",
"Epoch: 77 | Loss: 0.5645\n",
"Epoch: 78 | Loss: 0.5645\n",
"Epoch: 79 | Loss: 0.5645\n",
"Epoch: 80 | Loss: 0.5645\n",
"Epoch: 81 | Loss: 0.5645\n",
"Epoch: 82 | Loss: 0.5645\n",
"Epoch: 83 | Loss: 0.5645\n",
"Epoch: 84 | Loss: 0.5645\n",
"Epoch: 85 | Loss: 0.5645\n",
"Epoch: 86 | Loss: 0.5645\n",
"Epoch: 87 | Loss: 0.5645\n",
"Epoch: 88 | Loss: 0.5645\n",
"Epoch: 89 | Loss: 0.5645\n",
"Epoch: 90 | Loss: 0.5645\n",
"Epoch: 91 | Loss: 0.5645\n",
"Epoch: 92 | Loss: 0.5645\n",
"Epoch: 93 | Loss: 0.5645\n",
"Epoch: 94 | Loss: 0.5645\n",
"Epoch: 95 | Loss: 0.5645\n",
"Epoch: 96 | Loss: 0.5645\n",
"Epoch: 97 | Loss: 0.5645\n",
"Epoch: 98 | Loss: 0.5645\n",
"Epoch: 99 | Loss: 0.5645\n",
"Epoch: 100 | Loss: 0.5645\n",
"Epoch: 101 | Loss: 0.5645\n",
"Epoch: 102 | Loss: 0.5645\n",
"Epoch: 103 | Loss: 0.5645\n",
"Epoch: 104 | Loss: 0.5645\n",
"Epoch: 105 | Loss: 0.5645\n",
"Epoch: 106 | Loss: 0.5645\n",
"Epoch: 107 | Loss: 0.5645\n",
"Epoch: 108 | Loss: 0.5645\n",
"Epoch: 109 | Loss: 0.5645\n",