first

This view is limited to 50 files because it contains too many changes.

- .gitignore +4 -0

- README.md +1 -0

- app.py +95 -0

- example/arcane/anne.jpg +0 -0

- example/arcane/boy2.jpg +0 -0

- example/arcane/cap.jpg +0 -0

- example/arcane/dune2.jpg +0 -0

- example/arcane/elon.jpg +0 -0

- example/arcane/girl.jpg +0 -0

- example/arcane/girl4.jpg +0 -0

- example/arcane/girl6.jpg +0 -0

- example/arcane/leo.jpg +0 -0

- example/arcane/man2.jpg +0 -0

- example/arcane/nat_.jpg +0 -0

- example/arcane/seydoux.jpg +0 -0

- example/arcane/tobey.jpg +0 -0

- example/face/anne.jpg +0 -0

- example/face/boy2.jpg +0 -0

- example/face/cap.jpg +0 -0

- example/face/dune2.jpg +0 -0

- example/face/elon.jpg +0 -0

- example/face/girl.jpg +0 -0

- example/face/girl4.jpg +0 -0

- example/face/girl6.jpg +0 -0

- example/face/leo.jpg +0 -0

- example/face/man2.jpg +0 -0

- example/face/nat_.jpg +0 -0

- example/face/seydoux.jpg +0 -0

- example/face/tobey.jpg +0 -0

- example/generate_examples.py +49 -0

- example/more/hayao_v2/pexels-arnie-chou-304906-1004122.jpg +0 -0

- example/more/hayao_v2/pexels-camilacarneiro-6318793.jpg +0 -0

- example/more/hayao_v2/pexels-haohd-19859127.jpg +0 -0

- example/more/hayao_v2/pexels-huy-nguyen-748440234-19838813.jpg +0 -0

- example/more/hayao_v2/pexels-huy-phan-316220-1422386.jpg +0 -0

- example/more/hayao_v2/pexels-jimmy-teoh-294331-951531.jpg +0 -0

- example/more/hayao_v2/pexels-nandhukumar-450441.jpg +0 -0

- example/more/hayao_v2/pexels-sevenstormphotography-575362.jpg +0 -0

- inference.py +410 -0

- losses.py +248 -0

- models/__init__.py +3 -0

- models/anime_gan.py +112 -0

- models/anime_gan_v2.py +65 -0

- models/anime_gan_v3.py +14 -0

- models/conv_blocks.py +171 -0

- models/layers.py +28 -0

- models/vgg.py +80 -0

- predict.py +35 -0

- train.py +163 -0

- trainer/__init__.py +437 -0

.gitignore

ADDED

@@ -0,0 +1,4 @@

```gitignore
.cache
__pycache__
output
.token
```

README.md

CHANGED

```diff
@@ -11,3 +11,4 @@ license: mit
 ---
 
 Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
+<!-- https://huggingface.co/spaces/ptran1203/pytorchAnimeGAN -->
```

app.py

ADDED

@@ -0,0 +1,95 @@

```python
import os
import cv2
import numpy as np
import gradio as gr
from inference import Predictor
from utils.image_processing import resize_image

os.makedirs('output', exist_ok=True)


def inference(
    image: np.ndarray,
    style,
    imgsz=None,
):
    retain_color = False

    weight = {
        "AnimeGAN_Hayao": "hayao",
        "AnimeGAN_Shinkai": "shinkai",
        "AnimeGANv2_Hayao": "hayao:v2",
        "AnimeGANv2_Shinkai": "shinkai:v2",
        "AnimeGANv2_Arcane": "arcane:v2",
    }[style]
    predictor = Predictor(
        weight,
        device='cpu',
        retain_color=retain_color,
        imgsz=imgsz,
    )

    save_path = f"output/out.jpg"
    image = resize_image(image, width=imgsz)
    anime_image = predictor.transform(image)[0]
    cv2.imwrite(save_path, anime_image[..., ::-1])
    return anime_image, save_path


title = "AnimeGANv2: To produce your own animation."
description = r"""Turn your photo into anime style 😊"""
article = r"""
[](https://github.com/ptran1203/pytorch-animeGAN)
### 🗻 Demo

"""

gr.Interface(
    fn=inference,
    inputs=[
        gr.components.Image(label="Input"),
        gr.Dropdown(
            [
                'AnimeGAN_Hayao',
                'AnimeGAN_Shinkai',
                'AnimeGANv2_Hayao',
                'AnimeGANv2_Shinkai',
                'AnimeGANv2_Arcane',
            ],
            type="value",
            value='AnimeGANv2_Hayao',
            label='Style'
        ),
        gr.Dropdown(
            [
                None,
                416,
                512,
                768,
                1024,
                1536,
            ],
            type="value",
            value=None,
            label='Image size'
        )
    ],
    outputs=[
        gr.components.Image(type="numpy", label="Output (The whole image)"),
        gr.components.File(label="Download the output image")
    ],
    title=title,
    description=description,
    article=article,
    allow_flagging="never",
    examples=[
        ['example/arcane/girl4.jpg', 'AnimeGANv2_Arcane', "Yes"],
        ['example/arcane/leo.jpg', 'AnimeGANv2_Arcane', "Yes"],
        ['example/arcane/girl.jpg', 'AnimeGANv2_Arcane', "Yes"],
        ['example/arcane/anne.jpg', 'AnimeGANv2_Arcane', "Yes"],
        # ['example/boy2.jpg', 'AnimeGANv3_Arcane', "No"],
        # ['example/cap.jpg', 'AnimeGANv3_Arcane', "No"],
        ['example/more/hayao_v2/pexels-camilacarneiro-6318793.jpg', 'AnimeGANv2_Hayao', "Yes"],
        ['example/more/hayao_v2/pexels-nandhukumar-450441.jpg', 'AnimeGANv2_Hayao', "Yes"],
    ]
).launch()
```
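`app.py` saves its result with `cv2.imwrite(save_path, anime_image[..., ::-1])`, and `inference.py` uses the same slice before every write. The arrays produced by the pipeline are RGB: the Gradio `Image` input is RGB, and the flips suggest `read_image` returns RGB as well. OpenCV's `imwrite`, however, expects BGR order, so the slice reverses the last (channel) axis. The NumPy sketch below shows what that slice does. The helper name `rgb_to_bgr` is hypothetical.

```python
import numpy as np


def rgb_to_bgr(image: np.ndarray) -> np.ndarray:
    """Reverse the channel axis of an (H, W, 3) or (N, H, W, 3) array.

    Hypothetical helper mirroring the `img[..., ::-1]` slice used before
    cv2.imwrite. It returns a view, so no pixel data is copied.
    """
    return image[..., ::-1]


# A 1x2 RGB image: one pure-red pixel followed by one pure-blue pixel.
rgb = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
bgr = rgb_to_bgr(rgb)
# In BGR order the red pixel becomes (0, 0, 255) and the blue one (255, 0, 0).
print(bgr.tolist())
```

The same slice converts in both directions, because reversing three channels twice restores the original order.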

example/arcane/anne.jpg

ADDED

|

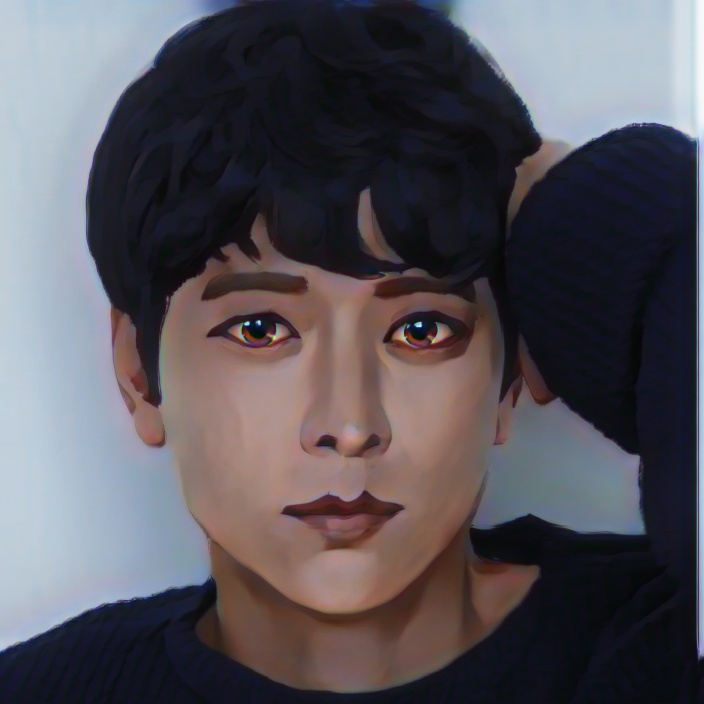

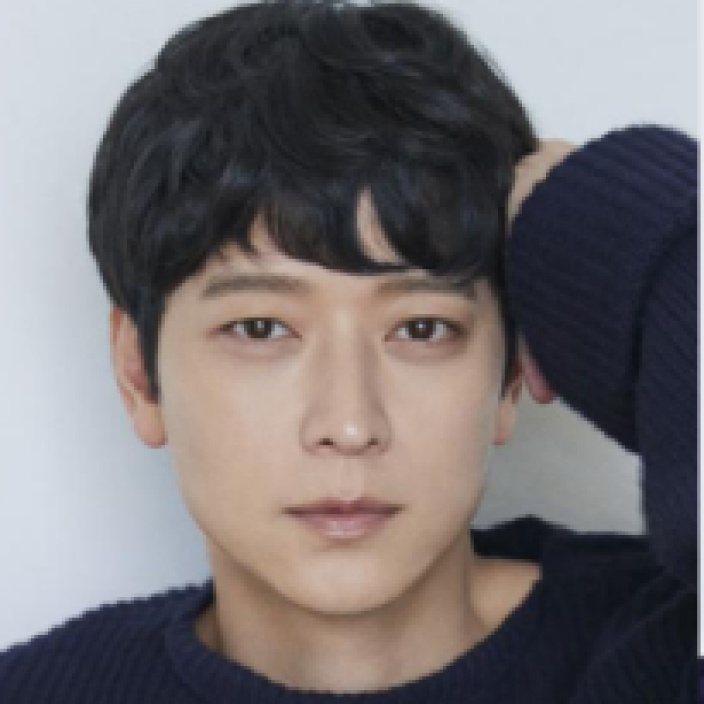

example/arcane/boy2.jpg

ADDED

|

example/arcane/cap.jpg

ADDED

|

example/arcane/dune2.jpg

ADDED

|

example/arcane/elon.jpg

ADDED

|

example/arcane/girl.jpg

ADDED

|

example/arcane/girl4.jpg

ADDED

|

example/arcane/girl6.jpg

ADDED

|

example/arcane/leo.jpg

ADDED

|

example/arcane/man2.jpg

ADDED

|

example/arcane/nat_.jpg

ADDED

|

example/arcane/seydoux.jpg

ADDED

|

example/arcane/tobey.jpg

ADDED

|

example/face/anne.jpg

ADDED

|

example/face/boy2.jpg

ADDED

|

example/face/cap.jpg

ADDED

|

example/face/dune2.jpg

ADDED

|

example/face/elon.jpg

ADDED

|

example/face/girl.jpg

ADDED

|

example/face/girl4.jpg

ADDED

|

example/face/girl6.jpg

ADDED

|

example/face/leo.jpg

ADDED

|

example/face/man2.jpg

ADDED

|

example/face/nat_.jpg

ADDED

|

example/face/seydoux.jpg

ADDED

|

example/face/tobey.jpg

ADDED

|

example/generate_examples.py

ADDED

@@ -0,0 +1,49 @@

```python
import os
import cv2
import re

REG = re.compile(r"[0-9]{3}")
dir_ = './example/result'
readme = './README.md'


def anime_2_input(fi):
    return fi.replace("_anime", "")

def rename(f):
    return f.replace(" ", "").replace("(", "").replace(")", "")

def rename_back(f):
    nums = REG.search(f)
    if nums:
        nums = nums.group()
        return f.replace(nums, f"{nums[0]} ({nums[1:]})")

    return f.replace('jpeg', 'jpg')

def copyfile(src, dest):
    # copy and resize
    im = cv2.imread(src)

    if im is None:
        raise FileNotFoundError(src)

    h, w = im.shape[1], im.shape[0]

    s = 448
    size = (s, round(s * w / h))
    im = cv2.resize(im, size)

    print(w, h, im.shape)
    cv2.imwrite(dest, im)

files = os.listdir(dir_)
new_files = []
for f in files:
    input_ver = os.path.join(dir_, anime_2_input(f))
    copyfile(f"dataset/test/HR_photo/{rename_back(anime_2_input(f))}", rename(input_ver))

    os.rename(
        os.path.join(dir_, f),
        os.path.join(dir_, rename(f))
    )
```
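In `generate_examples.py`, `rename` and `rename_back` convert between two forms of a filename:

- The flattened form has spaces and parentheses stripped.
- The split form rewrites the first three-digit run as `d (dd)`.

The sketch below copies the two helpers and the regex from the script so the round trip can be checked in isolation. The sample filenames are made up.

```python
import re

# Same pattern and helpers as in generate_examples.py.
REG = re.compile(r"[0-9]{3}")


def rename(f):
    # Drop spaces and parentheses, e.g. "1 (23).jpg" -> "123.jpg".
    return f.replace(" ", "").replace("(", "").replace(")", "")


def rename_back(f):
    # Split the first three-digit run as "d (dd)", e.g. "123.jpg" -> "1 (23).jpg".
    nums = REG.search(f)
    if nums:
        nums = nums.group()
        return f.replace(nums, f"{nums[0]} ({nums[1:]})")
    # Only names without a three-digit run get "jpeg" normalized to "jpg".
    return f.replace('jpeg', 'jpg')


for name in ["1 (23).jpg", "photo.jpeg"]:
    print(name, "->", rename(name), "->", rename_back(rename(name)))
```

The `jpeg` to `jpg` normalization is skipped whenever a three-digit run is found, because that branch returns early. For example, `456.jpeg` comes back as `4 (56).jpeg`.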

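In `copyfile`, `h` actually holds the image width and `w` the height, because `im.shape` is (rows, columns, channels). As a result, `size = (s, round(s * w / h))` asks `cv2.resize`, which takes `(width, height)`, for a 448-pixel-wide image that keeps the original aspect ratio. The sketch below does the same arithmetic with conventional names. `target_size` is a hypothetical helper, not part of the script.

```python
def target_size(height: int, width: int, s: int = 448) -> tuple:
    """Return the (width, height) pair that cv2.resize expects.

    Mirrors copyfile() in generate_examples.py: the output is `s` pixels
    wide and the height is scaled to preserve the aspect ratio.
    """
    return (s, round(s * height / width))


# An 800x600 (width x height) landscape photo becomes 448x336.
print(target_size(height=600, width=800))
```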
example/more/hayao_v2/pexels-arnie-chou-304906-1004122.jpg

ADDED

example/more/hayao_v2/pexels-camilacarneiro-6318793.jpg

ADDED

example/more/hayao_v2/pexels-haohd-19859127.jpg

ADDED

example/more/hayao_v2/pexels-huy-nguyen-748440234-19838813.jpg

ADDED

example/more/hayao_v2/pexels-huy-phan-316220-1422386.jpg

ADDED

example/more/hayao_v2/pexels-jimmy-teoh-294331-951531.jpg

ADDED

example/more/hayao_v2/pexels-nandhukumar-450441.jpg

ADDED

example/more/hayao_v2/pexels-sevenstormphotography-575362.jpg

ADDED

inference.py

ADDED

@@ -0,0 +1,410 @@

```python
import os
import time
import shutil

import torch
import cv2
import numpy as np

from models.anime_gan import GeneratorV1
from models.anime_gan_v2 import GeneratorV2
from models.anime_gan_v3 import GeneratorV3
from utils.common import load_checkpoint, RELEASED_WEIGHTS
from utils.image_processing import resize_image, normalize_input, denormalize_input
from utils import read_image, is_image_file, is_video_file
from tqdm import tqdm
from color_transfer import color_transfer_pytorch


try:
    import matplotlib.pyplot as plt
except ImportError:
    plt = None

try:
    import moviepy.video.io.ffmpeg_writer as ffmpeg_writer
    from moviepy.video.io.VideoFileClip import VideoFileClip
except ImportError:
    ffmpeg_writer = None
    VideoFileClip = None


def profile(func):
    def wrap(*args, **kwargs):
        started_at = time.time()
        result = func(*args, **kwargs)
        elapsed = time.time() - started_at
        print(f"Processed in {elapsed:.3f}s")
        return result
    return wrap


def auto_load_weight(weight, version=None, map_location=None):
    """Auto load Generator version from weight."""
    weight_name = os.path.basename(weight).lower()
    if version is not None:
        version = version.lower()
        assert version in {"v1", "v2", "v3"}, f"Version {version} does not exist"
        # If version is provided, use it.
        cls = {
            "v1": GeneratorV1,
            "v2": GeneratorV2,
            "v3": GeneratorV3
        }[version]
    else:
        # Try to get class by name of weight file
        # For convenience, weight should start with classname
        # e.g: Generatorv2_{anything}.pt
        if weight_name in RELEASED_WEIGHTS:
            version = RELEASED_WEIGHTS[weight_name][0]
            return auto_load_weight(weight, version=version, map_location=map_location)

        elif weight_name.startswith("generatorv2"):
            cls = GeneratorV2
        elif weight_name.startswith("generatorv3"):
            cls = GeneratorV3
        elif weight_name.startswith("generator"):
            cls = GeneratorV1
        else:
            raise ValueError((f"Can not get Model from {weight_name}, "
                              "you might need to explicitly specify version"))
    model = cls()
    load_checkpoint(model, weight, strip_optimizer=True, map_location=map_location)
    model.eval()
    return model


class Predictor:
    """
    Generic class for transferring images to anime-like images.
    """
    def __init__(
        self,
        weight='hayao',
        device='cuda',
        amp=True,
        retain_color=False,
        imgsz=None,
    ):
        if not torch.cuda.is_available():
            device = 'cpu'
            # AMP does not work on CPU
            amp = False
            print("Use CPU device")
        else:
            print(f"Use GPU {torch.cuda.get_device_name()}")

        self.imgsz = imgsz
        self.retain_color = retain_color
        self.amp = amp  # Automatic Mixed Precision
        self.device_type = 'cuda' if device.startswith('cuda') else 'cpu'
        self.device = torch.device(device)
        self.G = auto_load_weight(weight, map_location=device)
        self.G.to(self.device)

    def transform_and_show(
        self,
        image_path,
        figsize=(18, 10),
        save_path=None
    ):
        image = resize_image(read_image(image_path))
        anime_img = self.transform(image)
        anime_img = anime_img.astype('uint8')

        fig = plt.figure(figsize=figsize)
        fig.add_subplot(1, 2, 1)
        # plt.title("Input")
        plt.imshow(image)
        plt.axis('off')
        fig.add_subplot(1, 2, 2)
        # plt.title("Anime style")
        plt.imshow(anime_img[0])
        plt.axis('off')
        plt.tight_layout()
        plt.show()
        if save_path is not None:
            plt.savefig(save_path)

    def transform(self, image, denorm=True):
        '''
        Transform an image to animation

        @Arguments:
            - image: np.array, shape = (batch, height, width, channels),
              or a single (height, width, channels) image

        @Returns:
            - anime version of image: np.array
        '''
        with torch.no_grad():
            image = self.preprocess_images(image)
            # image = image.to(self.device)
            # with autocast(self.device_type, enabled=self.amp):
            # print(image.dtype, self.G)
            fake = self.G(image)
            # Transfer the colors of the input image onto the fake image
            if self.retain_color:
                fake = color_transfer_pytorch(fake, image)
                fake = (fake / 0.5) - 1.0  # remap to [-1, 1]
            fake = fake.detach().cpu().numpy()
            # Channel last
            fake = fake.transpose(0, 2, 3, 1)

            if denorm:
                fake = denormalize_input(fake, dtype=np.uint8)
            return fake

    def read_and_resize(self, path, max_size=1536):
        image = read_image(path)
        _, ext = os.path.splitext(path)
        h, w = image.shape[:2]
        if self.imgsz is not None:
            image = resize_image(image, width=self.imgsz)
        elif max(h, w) > max_size:
            print(f"Image {os.path.basename(path)} is too big ({h}x{w}), resize to max size {max_size}")
            image = resize_image(
                image,
                width=max_size if w > h else None,
                height=max_size if w < h else None,
            )
            cv2.imwrite(path.replace(ext, ".jpg"), image[:,:,::-1])
        else:
            image = resize_image(image)
        # image = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)
        # image = np.stack([image, image, image], -1)
        # cv2.imwrite(path.replace(ext, ".jpg"), image[:,:,::-1])
        return image

    @profile
    def transform_file(self, file_path, save_path):
        if not is_image_file(save_path):
            raise ValueError(f"{save_path} is not valid")

        image = self.read_and_resize(file_path)
        anime_img = self.transform(image)[0]
        cv2.imwrite(save_path, anime_img[..., ::-1])
        print(f"Anime image saved to {save_path}")
        return anime_img

    @profile
    def transform_gif(self, file_path, save_path, batch_size=4):
        import imageio

        def _preprocess_gif(img):
            if img.shape[-1] == 4:
                img = cv2.cvtColor(img, cv2.COLOR_RGBA2RGB)
            return resize_image(img)

        images = imageio.mimread(file_path)
        images = np.stack([
            _preprocess_gif(img)
            for img in images
        ])

        print(images.shape)

        anime_gif = np.zeros_like(images)

        for i in tqdm(range(0, len(images), batch_size)):
            end = i + batch_size
            anime_gif[i: end] = self.transform(
                images[i: end]
            )

        if end < len(images) - 1:
            # transform last frame
            print("LAST", images[end: ].shape)
            anime_gif[end:] = self.transform(images[end:])

        print(anime_gif.shape)
        imageio.mimsave(
            save_path,
            anime_gif,
        )
        print(f"Anime image saved to {save_path}")

    @profile
    def transform_in_dir(self, img_dir, dest_dir, max_images=0, img_size=(512, 512)):
        '''
        Read all images from img_dir, transform them and write the results
        to dest_dir
        '''
        os.makedirs(dest_dir, exist_ok=True)

        files = os.listdir(img_dir)
        files = [f for f in files if is_image_file(f)]
        print(f'Found {len(files)} images in {img_dir}')

        if max_images:
            files = files[:max_images]

        bar = tqdm(files)
        for fname in bar:
            path = os.path.join(img_dir, fname)
            image = self.read_and_resize(path)
            anime_img = self.transform(image)[0]
            # anime_img = resize_image(anime_img, width=320)
            ext = fname.split('.')[-1]
            fname = fname.replace(f'.{ext}', '')
            cv2.imwrite(os.path.join(dest_dir, f'{fname}.jpg'), anime_img[..., ::-1])
            bar.set_description(f"{fname} {image.shape}")

    def transform_video(self, input_path, output_path, batch_size=4, start=0, end=0):
        '''
        Transform a video to animation version
        https://github.com/lengstrom/fast-style-transfer/blob/master/evaluate.py#L21
        '''
        if VideoFileClip is None:
            raise ImportError("moviepy is not installed, please install with `pip install moviepy>=1.0.3`")
        # Force to None
        end = end or None

        if not os.path.isfile(input_path):
            raise FileNotFoundError(f'{input_path} does not exist')

        output_dir = os.path.dirname(output_path)
        if output_dir:
            os.makedirs(output_dir, exist_ok=True)

        is_gg_drive = '/drive/' in output_path
        temp_file = ''

        if is_gg_drive:
            # Writing directly into Google Drive can be inefficient
            temp_file = f'tmp_anime.{output_path.split(".")[-1]}'

        def transform_and_write(frames, count, writer):
            anime_images = self.transform(frames)
            for i in range(0, count):
                img = np.clip(anime_images[i], 0, 255)
                writer.write_frame(img)

        video_clip = VideoFileClip(input_path, audio=False)
        if start or end:
            video_clip = video_clip.subclip(start, end)

        video_writer = ffmpeg_writer.FFMPEG_VideoWriter(
            temp_file or output_path,
            video_clip.size, video_clip.fps,
            codec="libx264",
            # preset="medium", bitrate="2000k",
            ffmpeg_params=None)

        total_frames = round(video_clip.fps * video_clip.duration)
        print(f'Transforming video {input_path}, {total_frames} frames, size: {video_clip.size}')

        batch_shape = (batch_size, video_clip.size[1], video_clip.size[0], 3)
        frame_count = 0
        frames = np.zeros(batch_shape, dtype=np.float32)
        for frame in tqdm(video_clip.iter_frames(), total=total_frames):
            try:
                frames[frame_count] = frame
                frame_count += 1
                if frame_count == batch_size:
                    transform_and_write(frames, frame_count, video_writer)
                    frame_count = 0
            except Exception as e:
                print(e)
                break

        # The last frames
        if frame_count != 0:
            transform_and_write(frames, frame_count, video_writer)

        if temp_file:
            # move to output path
            shutil.move(temp_file, output_path)

        print(f'Animation video saved to {output_path}')
        video_writer.close()

    def preprocess_images(self, images):
        '''
        Preprocess images for inference

        @Arguments:
            - images: np.ndarray

        @Returns
            - images: torch.tensor
        '''
        images = images.astype(np.float32)

        # Normalize to [-1, 1]
        images = normalize_input(images)
        images = torch.from_numpy(images)

        images = images.to(self.device)

        # Add batch dim
        if len(images.shape) == 3:
            images = images.unsqueeze(0)

        # channel first
        images = images.permute(0, 3, 1, 2)

        return images


def parse_args():
    import argparse
    parser = argparse.ArgumentParser()
    parser.add_argument(
        '--weight',
        type=str,
        default="hayao:v2",
        help=f'Model weight, can be path or pretrained {tuple(RELEASED_WEIGHTS.keys())}'
    )
    parser.add_argument('--src', type=str, help='Source: a directory containing images, an image file or a video file.')
    parser.add_argument('--device', type=str, default='cuda', help='Device, cuda or cpu')
    parser.add_argument('--imgsz', type=int, default=None, help='Resize image to specified size if provided')
    parser.add_argument('--out', type=str, default='inference_images', help='Output, can be directory or file')
    parser.add_argument(
        '--retain-color',
        action='store_true',
        help='If provided, the generated image will retain the original colors of the input image')
    # Video params
    parser.add_argument('--batch-size', type=int, default=4, help='Batch size for video inference')
    parser.add_argument('--start', type=int, default=0, help='Start time of video (second)')
    parser.add_argument('--end', type=int, default=0, help='End time of video (second), 0 if not set')

    return parser.parse_args()


if __name__ == '__main__':
    args = parse_args()

    predictor = Predictor(
        args.weight,
        args.device,
        retain_color=args.retain_color,
        imgsz=args.imgsz,
    )

    if not os.path.exists(args.src):
        raise FileNotFoundError(args.src)

    if is_video_file(args.src):
        predictor.transform_video(
            args.src,
            args.out,
            args.batch_size,
            start=args.start,
            end=args.end
        )
    elif os.path.isdir(args.src):
        predictor.transform_in_dir(args.src, args.out)
    elif os.path.isfile(args.src):
        save_path = args.out
        if not is_image_file(args.out):
            os.makedirs(args.out, exist_ok=True)
            save_path = os.path.join(args.out, os.path.basename(args.src))

        if args.src.endswith('.gif'):
            # GIF file
            predictor.transform_gif(args.src, save_path, args.batch_size)
        else:
            predictor.transform_file(args.src, save_path)
    else:
        raise NotImplementedError(f"{args.src} is not supported")
```
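When no version is passed, `auto_load_weight` first looks up the lowercased file name in `RELEASED_WEIGHTS`. If the name is not there, it falls back to prefix matching. The order of the `startswith` checks matters here: every `generatorv2…` name also starts with `generator`, so the more specific prefixes must be tested first.

The dependency-free sketch below covers only the lookup. It returns a version string instead of a class. The parameter `released` is a stand-in for `RELEASED_WEIGHTS`, whose contents are not shown in this diff. The code only tells us that the first item of each entry is the version.

```python
import os


def pick_version(weight, released=None):
    """Sketch of the version lookup in auto_load_weight (returns a string).

    `released` stands in for RELEASED_WEIGHTS: a mapping from a lowercased
    weight name to a tuple whose first item is the version.
    """
    released = released or {}
    name = os.path.basename(weight).lower()
    if name in released:
        return released[name][0]
    # Most specific prefixes first: "generatorv2_x" also starts with "generator".
    if name.startswith("generatorv2"):
        return "v2"
    if name.startswith("generatorv3"):
        return "v3"
    if name.startswith("generator"):
        return "v1"
    raise ValueError(f"Can not get Model from {name}, "
                     "you might need to explicitly specify version")


print(pick_version("checkpoints/GeneratorV2_hayao.pt"))  # v2
```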

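`preprocess_images` and `transform` move data between two conventions:

- NumPy images are channel-last, `(N, H, W, C)`, with values in `[0, 255]`.
- The generator takes channel-first tensors, `(N, C, H, W)`, with values in `[-1, 1]`.

`normalize_input` and `denormalize_input` live in `utils.image_processing`, which is not part of this view. The sketch below assumes the common linear mapping `x / 127.5 - 1` and its inverse. It uses NumPy in place of torch so it runs without a model.

```python
import numpy as np


def to_model_layout(images: np.ndarray) -> np.ndarray:
    """(H, W, C) or (N, H, W, C) uint8 -> (N, C, H, W) float32 in [-1, 1]."""
    x = images.astype(np.float32) / 127.5 - 1.0  # assumed normalize_input
    if x.ndim == 3:  # add a batch dim, as preprocess_images does
        x = x[np.newaxis]
    return x.transpose(0, 3, 1, 2)  # channel first, like permute(0, 3, 1, 2)


def from_model_layout(x: np.ndarray) -> np.ndarray:
    """(N, C, H, W) in [-1, 1] -> (N, H, W, C) uint8, as transform does."""
    x = x.transpose(0, 2, 3, 1)  # channel last
    x = (x + 1.0) * 127.5  # assumed denormalize_input
    return np.clip(np.round(x), 0, 255).astype(np.uint8)


img = np.random.default_rng(0).integers(0, 256, size=(4, 6, 3), dtype=np.uint8)
batch = to_model_layout(img)
print(batch.shape, batch.min() >= -1.0, batch.max() <= 1.0)
```

The `retain_color` branch in `transform` applies a related remap: `(fake / 0.5) - 1.0` maps `[0, 1]` to `[-1, 1]`. That suggests `color_transfer_pytorch` returns values in `[0, 1]`, although its source is not included here.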
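`read_and_resize` chooses its resize arguments in three tiers:

1. An explicit `imgsz` sets the width.
2. Otherwise, an image whose longer side exceeds `max_size` (1536 by default) is scaled so that side becomes `max_size`.
3. Anything else goes through `resize_image` with no target.

The sketch below isolates that decision. `resize_kwargs` is a hypothetical helper that returns the keyword arguments instead of calling `resize_image`.

```python
def resize_kwargs(h, w, imgsz=None, max_size=1536):
    """Return the keyword arguments read_and_resize would pass to resize_image."""
    if imgsz is not None:
        return {"width": imgsz}
    if max(h, w) > max_size:
        # Only the longer side is pinned to max_size.
        return {
            "width": max_size if w > h else None,
            "height": max_size if w < h else None,
        }
    return {}


print(resize_kwargs(2000, 3000))  # landscape: width is pinned
print(resize_kwargs(3000, 2000))  # portrait: height is pinned
```

One edge case follows from the strict comparisons: a square image larger than `max_size` receives neither `width` nor `height`. What happens to it then depends on `resize_image`'s defaults, which are not shown. Note also that the oversized branch writes the resized image back next to the source file as a `.jpg`.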

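`transform_video` streams frames into a fixed `(batch_size, H, W, 3)` buffer. It sends the buffer through the generator each time it fills and flushes the remainder after the loop. `transform_and_write` receives the number of valid rows, so frames left over from the previous batch are transformed but never written.

The sketch below reproduces the same buffering pattern. It uses a plain list and a hypothetical `flush` callback in place of the model and `FFMPEG_VideoWriter`.

```python
from typing import Callable, Iterable, List


def stream_in_batches(frames: Iterable, batch_size: int,
                      flush: Callable[[list, int], None]) -> None:
    """Fill a fixed-size buffer and flush it whenever it is full.

    Mirrors the loop in transform_video: `flush(buffer, count)` gets the whole
    buffer plus the number of valid entries, so in a partial final batch the
    stale entries past `count` must be ignored.
    """
    buffer = [None] * batch_size
    count = 0
    for frame in frames:
        buffer[count] = frame
        count += 1
        if count == batch_size:
            flush(buffer, count)
            count = 0
    # The last frames
    if count != 0:
        flush(buffer, count)


written: List[int] = []
stream_in_batches(range(10), 4, lambda buf, n: written.extend(buf[:n]))
print(written == list(range(10)))  # True
```

`transform_gif` batches by slicing instead. `range(0, len(images), batch_size)` keeps stepping until the last slice's `end` is at least `len(images)`, and NumPy clips out-of-range slice ends. As a result, the trailing `if end < len(images) - 1` branch never runs for a non-empty GIF.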
losses.py

ADDED

|

@@ -0,0 +1,248 @@


import torch
import torch.nn.functional as F
import torch.nn as nn
from models.vgg import Vgg19
from utils.image_processing import gram


def to_gray_scale(image):
    # https://github.com/pytorch/vision/blob/main/torchvision/transforms/v2/functional/_color.py#L33
    # Images are assumed to be in range [-1, 1]
    image = (image + 1.0) / 2.0  # To [0, 1]
    r, g, b = image.unbind(dim=-3)
    l_img = r.mul(0.2989).add_(g, alpha=0.587).add_(b, alpha=0.114)
    l_img = l_img.unsqueeze(dim=-3)
    l_img = l_img.to(image.dtype)
    l_img = l_img.expand(image.shape)
    l_img = l_img / 0.5 - 1.0  # To [-1, 1]
    return l_img


class ColorLoss(nn.Module):
    def __init__(self):
        super(ColorLoss, self).__init__()
        self.l1 = nn.L1Loss()
        self.huber = nn.SmoothL1Loss()
        # self._rgb_to_yuv_kernel = torch.tensor([
        #     [0.299, -0.14714119, 0.61497538],
        #     [0.587, -0.28886916, -0.51496512],
        #     [0.114, 0.43601035, -0.10001026]
        # ]).float()

        self._rgb_to_yuv_kernel = torch.tensor([
            [0.299, 0.587, 0.114],
            [-0.14714119, -0.28886916, 0.43601035],
            [0.61497538, -0.51496512, -0.10001026],
        ]).float()

    def to(self, device):
        new_self = super(ColorLoss, self).to(device)
        new_self._rgb_to_yuv_kernel = new_self._rgb_to_yuv_kernel.to(device)
        return new_self

    def rgb_to_yuv(self, image):
        '''
        https://en.wikipedia.org/wiki/YUV

        input: image of shape (B, C, H, W) in range [-1, 1]
        output: image of shape (B, H, W, C) (channel last)
        '''
        # -1 1 -> 0 1
        image = (image + 1.0) / 2.0
        image = image.permute(0, 2, 3, 1)  # To channel last

        yuv_img = image @ self._rgb_to_yuv_kernel.T

        return yuv_img

    def forward(self, image, image_g):
        image = self.rgb_to_yuv(image)
        image_g = self.rgb_to_yuv(image_g)
        # After conversion to YUV, both images are channel last
        return (
            self.l1(image[:, :, :, 0], image_g[:, :, :, 0])
            + self.huber(image[:, :, :, 1], image_g[:, :, :, 1])
            + self.huber(image[:, :, :, 2], image_g[:, :, :, 2])
        )


class AnimeGanLoss:
    def __init__(self, args, device, gray_adv=False):
        if isinstance(device, str):
            device = torch.device(device)

        self.content_loss = nn.L1Loss().to(device)
        self.gram_loss = nn.L1Loss().to(device)
        self.color_loss = ColorLoss().to(device)
        self.wadvg = args.wadvg
        self.wadvd = args.wadvd
        self.wcon = args.wcon
        self.wgra = args.wgra
        self.wcol = args.wcol
        self.wtvar = args.wtvar
        # If true, use grayscale images to calculate the adversarial loss
        self.gray_adv = gray_adv
        self.vgg19 = Vgg19().to(device).eval()
        self.adv_type = args.gan_loss
        self.bce_loss = nn.BCEWithLogitsLoss()

    def compute_loss_G(self, fake_img, img, fake_logit, anime_gray):
        '''
        Compute loss for Generator

        @Args:
            - fake_img: generated image
            - img: real image
            - fake_logit: output of Discriminator given fake image
            - anime_gray: grayscale version of the anime image

        @Returns:
            A list of weighted losses:
            - Adversarial loss of fake logits
            - Content loss between real and fake features (VGG19)
            - Gram loss between anime and fake features (VGG19)
            - Color loss between real image and fake image
            - Total variation loss of fake image
        '''
        fake_feat = self.vgg19(fake_img)
        gray_feat = self.vgg19(anime_gray)
        img_feat = self.vgg19(img)
        # fake_gray_feat = self.vgg19(to_gray_scale(fake_img))

        return [
            # The generator wants the fake image to be classified as real
            self.wadvg * self.adv_loss_g(fake_logit),
            self.wcon * self.content_loss(img_feat, fake_feat),
            self.wgra * self.gram_loss(gram(gray_feat), gram(fake_feat)),
            self.wcol * self.color_loss(img, fake_img),
            self.wtvar * self.total_variation_loss(fake_img)
        ]


    def compute_loss_D(
        self,
        fake_img_d,
        real_anime_d,
        real_anime_gray_d,
        real_anime_smooth_gray_d=None
    ):
        if self.gray_adv:
            # Treat grayscale anime images as real
            loss = (
                self.adv_loss_d_real(real_anime_gray_d)
                + self.adv_loss_d_fake(fake_img_d)
            )
            # Classify smoothed grayscale anime as fake, but only when it is
            # provided (the argument defaults to None)
            if real_anime_smooth_gray_d is not None:
                loss = loss + 0.3 * self.adv_loss_d_fake(real_anime_smooth_gray_d)
            return loss
        else:
            return (
                # Classify real anime as real
                self.adv_loss_d_real(real_anime_d)
                # Classify generated as fake
                + self.adv_loss_d_fake(fake_img_d)
                # Classify real anime gray as fake
                # + self.adv_loss_d_fake(real_anime_gray_d)
                # Classify smoothed real anime gray as fake
                # + 0.1 * self.adv_loss_d_fake(real_anime_smooth_gray_d)
            )


    def total_variation_loss(self, fake_img):
        """
        A smoothness loss, similar to the smoothness prior in an MRF.
        Penalizes squared differences between neighboring pixels:
        V(y) = sum(|| y_{n+1} - y_n ||^2) / 2, normalized by the number of
        differences along height and along width.
        """
        # Channel first -> channel last
        fake_img = fake_img.permute(0, 2, 3, 1)
        def _l2(x):
            # sum(t ** 2) / 2
            return torch.sum(x ** 2) / 2

        dh = fake_img[:, :-1, ...] - fake_img[:, 1:, ...]
        dw = fake_img[:, :, :-1, ...] - fake_img[:, :, 1:, ...]
        return _l2(dh) / dh.numel() + _l2(dw) / dw.numel()

    def content_loss_vgg(self, image, recontruction):
        feat = self.vgg19(image)
        re_feat = self.vgg19(recontruction)
        feature_loss = self.content_loss(feat, re_feat)
        content_loss = self.content_loss(image, recontruction)
        return feature_loss  # + 0.5 * content_loss


    def adv_loss_d_real(self, pred):
        """Push pred to class 1 (real)"""
        if self.adv_type == 'hinge':
            return torch.mean(F.relu(1.0 - pred))

        elif self.adv_type == 'lsgan':
            # pred = torch.sigmoid(pred)
            return torch.mean(torch.square(pred - 1.0))

        elif self.adv_type == 'bce':
            return self.bce_loss(pred, torch.ones_like(pred))

        raise ValueError(f'Unsupported loss type {self.adv_type}')

    def adv_loss_d_fake(self, pred):
        """Push pred to class 0 (fake)"""
        if self.adv_type == 'hinge':
            return torch.mean(F.relu(1.0 + pred))

        elif self.adv_type == 'lsgan':
            # pred = torch.sigmoid(pred)
            return torch.mean(torch.square(pred))

        elif self.adv_type == 'bce':
            return self.bce_loss(pred, torch.zeros_like(pred))

        raise ValueError(f'Unsupported loss type {self.adv_type}')

    def adv_loss_g(self, pred):
        """Push pred to class 1 (real)"""
        if self.adv_type == 'hinge':
            return -torch.mean(pred)

        elif self.adv_type == 'lsgan':
            # pred = torch.sigmoid(pred)
            return torch.mean(torch.square(pred - 1.0))

        elif self.adv_type == 'bce':
            return self.bce_loss(pred, torch.ones_like(pred))

        raise ValueError(f'Unsupported loss type {self.adv_type}')


class LossSummary:
    def __init__(self):
        self.reset()

    def reset(self):
        self.loss_g_adv = []
        self.loss_content = []
        self.loss_gram = []
        self.loss_color = []
        self.loss_d_adv = []

    def update_loss_G(self, adv, gram, color, content):
        self.loss_g_adv.append(adv.cpu().detach().numpy())
        self.loss_gram.append(gram.cpu().detach().numpy())
        self.loss_color.append(color.cpu().detach().numpy())
        self.loss_content.append(content.cpu().detach().numpy())

    def update_loss_D(self, loss):
        self.loss_d_adv.append(loss.cpu().detach().numpy())

    def avg_loss_G(self):
        return (
            self._avg(self.loss_g_adv),
            self._avg(self.loss_gram),
            self._avg(self.loss_color),
            self._avg(self.loss_content),
        )

    def avg_loss_D(self):
        return self._avg(self.loss_d_adv)

    def get_loss_description(self):
        avg_adv, avg_gram, avg_color, avg_content = self.avg_loss_G()
        avg_adv_d = self.avg_loss_D()
        return f'loss G: adv {avg_adv:.2f} con {avg_content:.2f} gram {avg_gram:.2f} color {avg_color:.2f} / loss D: {avg_adv_d:.2f}'

    @staticmethod
    def _avg(losses):
        return sum(losses) / len(losses)
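The three `gan_loss` options handled by `adv_loss_d_real`, `adv_loss_d_fake` and `adv_loss_g` reduce to simple formulas on the discriminator logits. The following is a minimal pure-Python sketch of those formulas, not the repository's code. It assumes scalar logits in a plain list and no PyTorch. The `math` module stands in for `F.relu` and `BCEWithLogitsLoss`, and the function names are hypothetical.

```python
import math
from typing import List


def _mean(xs: List[float]) -> float:
    return sum(xs) / len(xs)


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def d_real_loss(pred: List[float], kind: str) -> float:
    """Discriminator term pushing logits toward 'real' (class 1)."""
    if kind == "hinge":
        return _mean([max(0.0, 1.0 - p) for p in pred])
    if kind == "lsgan":
        return _mean([(p - 1.0) ** 2 for p in pred])
    if kind == "bce":
        # BCE-with-logits, target 1: -log(sigmoid(p)) == softplus(-p)
        return _mean([softplus(-p) for p in pred])
    raise ValueError(f"Unsupported loss type {kind}")


def d_fake_loss(pred: List[float], kind: str) -> float:
    """Discriminator term pushing logits toward 'fake' (class 0)."""
    if kind == "hinge":
        return _mean([max(0.0, 1.0 + p) for p in pred])
    if kind == "lsgan":
        return _mean([p ** 2 for p in pred])
    if kind == "bce":
        # BCE-with-logits, target 0: -log(1 - sigmoid(p)) == softplus(p)
        return _mean([softplus(p) for p in pred])
    raise ValueError(f"Unsupported loss type {kind}")


def g_loss(pred: List[float], kind: str) -> float:
    """Generator term: wants the discriminator to call fakes 'real'."""
    if kind == "hinge":
        return -_mean(pred)
    if kind == "lsgan":
        return _mean([(p - 1.0) ** 2 for p in pred])
    if kind == "bce":
        return _mean([softplus(-p) for p in pred])
    raise ValueError(f"Unsupported loss type {kind}")


logits = [2.0, -0.5]
for kind in ("hinge", "lsgan", "bce"):
    print(kind, d_real_loss(logits, kind), d_fake_loss(logits, kind), g_loss(logits, kind))
```

The hinge generator term is the raw negative mean of the logits, so unlike the discriminator hinge terms it is not clipped at zero. This matches `adv_loss_g` above.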

|
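`ColorLoss` compares two images in YUV space. It applies L1 to the Y (luma) channel and Huber (`SmoothL1Loss` with its default `beta=1.0`) to the U and V channels. The following is a per-pixel pure-Python sketch of that computation. It assumes inputs are flat lists of `(r, g, b)` tuples in [-1, 1] rather than `(B, C, H, W)` tensors. The helper names are hypothetical, and the matrix is copied from `_rgb_to_yuv_kernel`.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]

# Same RGB -> YUV matrix as ColorLoss._rgb_to_yuv_kernel (rows give Y, U, V).
RGB_TO_YUV = [
    [0.299, 0.587, 0.114],
    [-0.14714119, -0.28886916, 0.43601035],
    [0.61497538, -0.51496512, -0.10001026],
]


def rgb_to_yuv(px: Pixel) -> Tuple[float, float, float]:
    # Map [-1, 1] -> [0, 1] first, as ColorLoss.rgb_to_yuv does
    r, g, b = ((c + 1.0) / 2.0 for c in px)
    return tuple(row[0] * r + row[1] * g + row[2] * b for row in RGB_TO_YUV)


def huber(diff: float, beta: float = 1.0) -> float:
    # SmoothL1: quadratic below beta, linear above it
    a = abs(diff)
    return 0.5 * a * a / beta if a < beta else a - 0.5 * beta


def color_loss(image: List[Pixel], image_g: List[Pixel]) -> float:
    yuv = [rgb_to_yuv(p) for p in image]
    yuv_g = [rgb_to_yuv(p) for p in image_g]
    n = len(yuv)
    # Mean reduction over pixels, one term per channel
    l1_y = sum(abs(a[0] - b[0]) for a, b in zip(yuv, yuv_g)) / n
    huber_u = sum(huber(a[1] - b[1]) for a, b in zip(yuv, yuv_g)) / n
    huber_v = sum(huber(a[2] - b[2]) for a, b in zip(yuv, yuv_g)) / n
    return l1_y + huber_u + huber_v


print(color_loss([(-1.0, -1.0, -1.0)], [(1.0, 1.0, 1.0)]))
```

Black versus white differs almost entirely in luma. Gray pixels have U and V near zero, so the loss in that case is close to 1.0 and is carried by the L1 term.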

models/__init__.py

ADDED


@@ -0,0 +1,3 @@

from .anime_gan import GeneratorV1
from .anime_gan_v2 import GeneratorV2
from .anime_gan_v3 import GeneratorV3

models/anime_gan.py

ADDED


@@ -0,0 +1,112 @@
