
batching_and_3d_printing (#4)

Commit 7e6bc52ce03b857bb9117339e936f295b840be37: add fine control

- .gitattributes +2 -0

- .gitignore +4 -1

- CONTRIBUTING.md +15 -0

- LICENSE.txt +177 -0

- README.md +1 -1

- app.py +551 -114

- extrude.py +322 -0

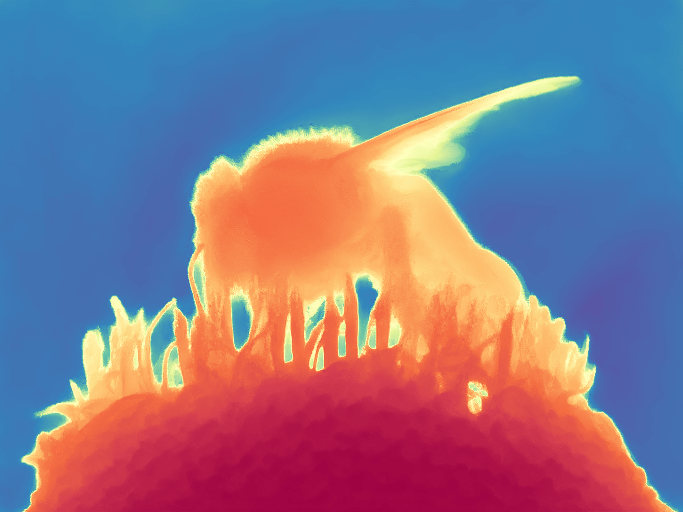

- files/bee_depth_16bit.png +0 -0

- files/bee_depth_colored.png +0 -0

- files/bee_depth_fp32.npy +3 -0

- files/bee_pred.jpg +0 -0

- files/bee_vis.jpg +0 -0

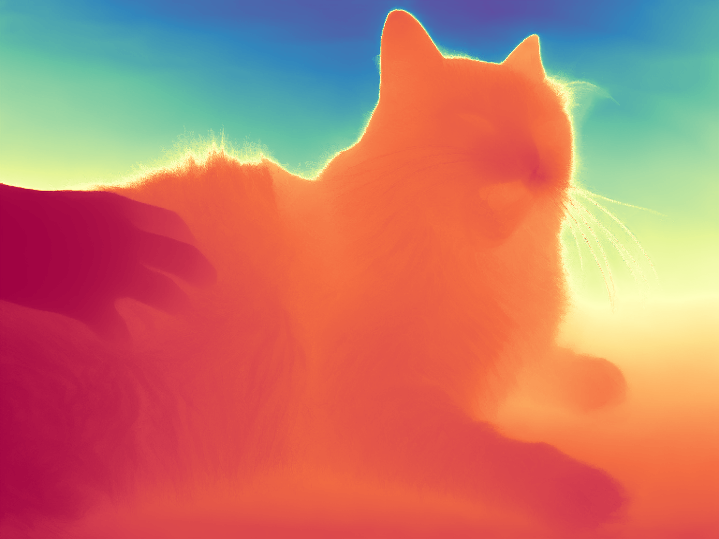

- files/cat_depth_16bit.png +0 -0

- files/cat_depth_colored.png +0 -0

- files/cat_depth_fp32.npy +3 -0

- files/cat_pred.jpg +0 -0

- files/cat_vis.jpg +0 -0

- files/einstein.jpg +0 -0

- files/einstein_depth_16bit.png +3 -0

- files/einstein_depth_colored.png +0 -0

- files/einstein_depth_fp32.npy +3 -0

- files/swings_depth_16bit.png +0 -0

- files/swings_depth_colored.png +0 -0

- files/swings_depth_fp32.npy +3 -0

- files/swings_pred.jpg +0 -0

- files/swings_vis.jpg +0 -0

- requirements.txt +11 -22

.gitattributes

CHANGED

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+files/einstein_depth_fp32.npy filter=lfs diff=lfs merge=lfs -text
+files/einstein_depth_16bit.png filter=lfs diff=lfs merge=lfs -text
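The two added lines route the Einstein example outputs through Git LFS. In `.gitattributes`, a pattern without a slash matches the file name at any directory depth, while a pattern containing a slash is anchored to the directory of the `.gitattributes` file and its `*` does not cross `/`. The sketch below is a simplified, hypothetical approximation of that rule for checking which paths the patterns in this hunk cover. It ignores `**` (as in `saved_model/**/*` from the hunk header), bracket-expression edge cases, and attribute macros, so `git check-attr filter -- <path>` remains the authoritative check.

```python
from fnmatch import fnmatchcase
from typing import Iterable

# Only the patterns visible in the hunk above (hypothetical subset of the real file).
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "files/einstein_depth_fp32.npy",
    "files/einstein_depth_16bit.png",
]


def pattern_matches(pattern: str, path: str) -> bool:
    """Simplified gitattributes-style match; '**' is NOT supported."""
    if "/" not in pattern:
        # No slash: match against the file name at any directory depth.
        return fnmatchcase(path.rsplit("/", 1)[-1], pattern)
    # Slash present: anchored to the repository root. Compare segment by
    # segment so that '*' never matches across a '/'.
    pattern_parts = pattern.strip("/").split("/")
    path_parts = path.split("/")
    if len(pattern_parts) != len(path_parts):
        return False
    return all(fnmatchcase(seg, pat) for seg, pat in zip(path_parts, pattern_parts))


def is_lfs_tracked(path: str, patterns: Iterable[str] = LFS_PATTERNS) -> bool:
    """True if any pattern would assign the LFS filter to `path`."""
    return any(pattern_matches(p, path) for p in patterns)


for p in ["files/einstein_depth_16bit.png", "files/cat_vis.jpg", "logs/run1/events.out.tfevents.123"]:
    print(p, is_lfs_tracked(p))
```

Anchored patterns such as `files/einstein_depth_16bit.png` match only at that exact location, which is why each new file gets its own explicit line rather than a broad extension rule.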

.gitignore

CHANGED

@@ -1,2 +1,5 @@
 .idea
-.DS_Store
+.DS_Store
+__pycache__
+gradio_cached_examples
+Marigold

CONTRIBUTING.md

ADDED

@@ -0,0 +1,15 @@
+## Contributing instructions
+
+We appreciate your interest in contributing. Please follow these guidelines:
+
+1. **Discuss Changes:** Start a GitHub issue to talk about your proposed change before proceeding.
+
+2. **Pull Requests:** Avoid unsolicited PRs. Discussion helps align with project goals.
+
+3. **License Agreement:** By submitting a PR, you accept our LICENSE terms.
+
+4. **Legal Compatibility:** Ensure your change complies with our project's objectives and licensing.
+
+5. **Attribution:** Credit third-party code in your PR if used.
+
+Please, feel free to reach out for questions or assistance. Your contributions are valued, and we're excited to work together to enhance this project!

LICENSE.txt

ADDED

@@ -0,0 +1,177 @@
+
+Apache License
+Version 2.0, January 2004
+http://www.apache.org/licenses/
+
+TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
+
+1. Definitions.
+
+"License" shall mean the terms and conditions for use, reproduction,
+and distribution as defined by Sections 1 through 9 of this document.
+
+"Licensor" shall mean the copyright owner or entity authorized by
+the copyright owner that is granting the License.
+
+"Legal Entity" shall mean the union of the acting entity and all
+other entities that control, are controlled by, or are under common
+control with that entity. For the purposes of this definition,
+"control" means (i) the power, direct or indirect, to cause the
+direction or management of such entity, whether by contract or
+otherwise, or (ii) ownership of fifty percent (50%) or more of the
+outstanding shares, or (iii) beneficial ownership of such entity.
+
+"You" (or "Your") shall mean an individual or Legal Entity
+exercising permissions granted by this License.
+
+"Source" form shall mean the preferred form for making modifications,
+including but not limited to software source code, documentation
+source, and configuration files.
+
+"Object" form shall mean any form resulting from mechanical
+transformation or translation of a Source form, including but
+not limited to compiled object code, generated documentation,
+and conversions to other media types.
+
+"Work" shall mean the work of authorship, whether in Source or
+Object form, made available under the License, as indicated by a
+copyright notice that is included in or attached to the work
+(an example is provided in the Appendix below).
+
+"Derivative Works" shall mean any work, whether in Source or Object
+form, that is based on (or derived from) the Work and for which the
+editorial revisions, annotations, elaborations, or other modifications
+represent, as a whole, an original work of authorship. For the purposes
+of this License, Derivative Works shall not include works that remain
+separable from, or merely link (or bind by name) to the interfaces of,
+the Work and Derivative Works thereof.
+
+"Contribution" shall mean any work of authorship, including
+the original version of the Work and any modifications or additions
+to that Work or Derivative Works thereof, that is intentionally
+submitted to Licensor for inclusion in the Work by the copyright owner
+or by an individual or Legal Entity authorized to submit on behalf of
+the copyright owner. For the purposes of this definition, "submitted"
+means any form of electronic, verbal, or written communication sent
+to the Licensor or its representatives, including but not limited to
+communication on electronic mailing lists, source code control systems,
+and issue tracking systems that are managed by, or on behalf of, the
+Licensor for the purpose of discussing and improving the Work, but
+excluding communication that is conspicuously marked or otherwise
+designated in writing by the copyright owner as "Not a Contribution."
+
+"Contributor" shall mean Licensor and any individual or Legal Entity
+on behalf of whom a Contribution has been received by Licensor and
+subsequently incorporated within the Work.
+
+2. Grant of Copyright License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+copyright license to reproduce, prepare Derivative Works of,
+publicly display, publicly perform, sublicense, and distribute the
+Work and such Derivative Works in Source or Object form.
+
+3. Grant of Patent License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+(except as stated in this section) patent license to make, have made,
+use, offer to sell, sell, import, and otherwise transfer the Work,
+where such license applies only to those patent claims licensable
+by such Contributor that are necessarily infringed by their
+Contribution(s) alone or by combination of their Contribution(s)
+with the Work to which such Contribution(s) was submitted. If You
+institute patent litigation against any entity (including a
+cross-claim or counterclaim in a lawsuit) alleging that the Work
+or a Contribution incorporated within the Work constitutes direct
+or contributory patent infringement, then any patent licenses
+granted to You under this License for that Work shall terminate
+as of the date such litigation is filed.
+
+4. Redistribution. You may reproduce and distribute copies of the
+Work or Derivative Works thereof in any medium, with or without
+modifications, and in Source or Object form, provided that You
+meet the following conditions:
+
+(a) You must give any other recipients of the Work or
+Derivative Works a copy of this License; and
+
+(b) You must cause any modified files to carry prominent notices
+stating that You changed the files; and
+
+(c) You must retain, in the Source form of any Derivative Works
+that You distribute, all copyright, patent, trademark, and
+attribution notices from the Source form of the Work,
+excluding those notices that do not pertain to any part of
+the Derivative Works; and
+
+(d) If the Work includes a "NOTICE" text file as part of its
+distribution, then any Derivative Works that You distribute must
+include a readable copy of the attribution notices contained
+within such NOTICE file, excluding those notices that do not
+pertain to any part of the Derivative Works, in at least one
+of the following places: within a NOTICE text file distributed
+as part of the Derivative Works; within the Source form or
+documentation, if provided along with the Derivative Works; or,
+within a display generated by the Derivative Works, if and
+wherever such third-party notices normally appear. The contents
+of the NOTICE file are for informational purposes only and
+do not modify the License. You may add Your own attribution
+notices within Derivative Works that You distribute, alongside
+or as an addendum to the NOTICE text from the Work, provided
+that such additional attribution notices cannot be construed
+as modifying the License.
+
+You may add Your own copyright statement to Your modifications and
+may provide additional or different license terms and conditions
+for use, reproduction, or distribution of Your modifications, or
+for any such Derivative Works as a whole, provided Your use,
+reproduction, and distribution of the Work otherwise complies with
+the conditions stated in this License.
+
+5. Submission of Contributions. Unless You explicitly state otherwise,
+any Contribution intentionally submitted for inclusion in the Work
+by You to the Licensor shall be under the terms and conditions of
+this License, without any additional terms or conditions.
+Notwithstanding the above, nothing herein shall supersede or modify
+the terms of any separate license agreement you may have executed
+with Licensor regarding such Contributions.
+
+6. Trademarks. This License does not grant permission to use the trade
+names, trademarks, service marks, or product names of the Licensor,
+except as required for reasonable and customary use in describing the
+origin of the Work and reproducing the content of the NOTICE file.
+
+7. Disclaimer of Warranty. Unless required by applicable law or
+agreed to in writing, Licensor provides the Work (and each
+Contributor provides its Contributions) on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
+implied, including, without limitation, any warranties or conditions
+of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
+PARTICULAR PURPOSE. You are solely responsible for determining the
+appropriateness of using or redistributing the Work and assume any
+risks associated with Your exercise of permissions under this License.
+
+8. Limitation of Liability. In no event and under no legal theory,
+whether in tort (including negligence), contract, or otherwise,
+unless required by applicable law (such as deliberate and grossly
+negligent acts) or agreed to in writing, shall any Contributor be
+liable to You for damages, including any direct, indirect, special,
+incidental, or consequential damages of any character arising as a
+result of this License or out of the use or inability to use the
+Work (including but not limited to damages for loss of goodwill,
+work stoppage, computer failure or malfunction, or any and all
+other commercial damages or losses), even if such Contributor
+has been advised of the possibility of such damages.
+
+9. Accepting Warranty or Additional Liability. While redistributing
+the Work or Derivative Works thereof, You may choose to offer,
+and charge a fee for, acceptance of support, warranty, indemnity,
+or other liability obligations and/or rights consistent with this
+License. However, in accepting such obligations, You may act only
+on Your own behalf and on Your sole responsibility, not on behalf
+of any other Contributor, and only if You agree to indemnify,
+defend, and hold each Contributor harmless for any liability
+incurred by, or claims asserted against, such Contributor by reason
+of your accepting any such warranty or additional liability.
+
+END OF TERMS AND CONDITIONS

README.md

CHANGED

@@ -4,7 +4,7 @@ emoji: 🏵️
 colorFrom: blue
 colorTo: red
 sdk: gradio
-sdk_version:
+sdk_version: 4.9.1
 app_file: app.py
 pinned: true
 license: cc-by-sa-4.0

app.py

CHANGED

@@ -1,127 +1,564 @@
 import os
 import shutil
 
 import gradio as gr
 
 
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-os.
 os.makedirs(path_output_dir, exist_ok=True)
-shutil.copy(path_input, path_input_dir)
 
-os.
-
-
-
 )
 
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-gr.
-
-
-
-
-
-
-
-
-)
-
-
-
-
-
-
-
-
-
-
-),
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-.
-
-
-
-
-
 
 
 if __name__ == "__main__":
-
-iface.queue().launch(server_name="0.0.0.0", server_port=7860)

+import functools
 import os
 import shutil
+import sys
 
+import git
 import gradio as gr
+import numpy as np
+import torch as torch
+from PIL import Image
 
+from gradio_imageslider import ImageSlider
 
+from extrude import extrude_depth_3d
+
+
+def process(
+pipe,
+path_input,
+ensemble_size,
+denoise_steps,
+processing_res,
+path_out_16bit=None,
+path_out_fp32=None,
+path_out_vis=None,
+_input_3d_plane_near=None,
+_input_3d_plane_far=None,
+_input_3d_embossing=None,
+_input_3d_filter_size=None,
+_input_3d_frame_near=None,
+):
+if path_out_vis is not None:
+return (
+[path_out_16bit, path_out_vis],
+[path_out_16bit, path_out_fp32, path_out_vis],
+)
+
+input_image = Image.open(path_input)
+
+pipe_out = pipe(
+input_image,
+ensemble_size=ensemble_size,
+denoising_steps=denoise_steps,
+processing_res=processing_res,
+show_progress_bar=True,
+)
+
+depth_pred = pipe_out.depth_np
+depth_colored = pipe_out.depth_colored
+depth_16bit = (depth_pred * 65535.0).astype(np.uint16)
+
+path_output_dir = os.path.splitext(path_input)[0] + "_output"
 os.makedirs(path_output_dir, exist_ok=True)
 
+name_base = os.path.splitext(os.path.basename(path_input))[0]
+path_out_fp32 = os.path.join(path_output_dir, f"{name_base}_depth_fp32.npy")
+path_out_16bit = os.path.join(path_output_dir, f"{name_base}_depth_16bit.png")
+path_out_vis = os.path.join(path_output_dir, f"{name_base}_depth_colored.png")
+
+np.save(path_out_fp32, depth_pred)
+Image.fromarray(depth_16bit).save(path_out_16bit, mode="I;16")
+depth_colored.save(path_out_vis)
+
+return (
+[path_out_16bit, path_out_vis],
+[path_out_16bit, path_out_fp32, path_out_vis],
 )
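Each call to `process` writes three files: the raw float depth (`*_depth_fp32.npy`), a 16-bit PNG, and a colorized PNG. The 16-bit map is computed as `(depth_pred * 65535.0).astype(np.uint16)`, which assumes, as the scale factor implies, that `depth_np` lies in [0, 1]; the cast truncates toward zero and does not clip out-of-range values. The sketch below is a hedged round-trip variant that clips and rounds first, so the reconstruction error stays within half a quantization step (0.5 / 65535). Both helper names are hypothetical and not part of the app.

```python
import numpy as np

SCALE_16BIT = 65535.0  # same scale factor as in `process`


def depth_to_uint16(depth: np.ndarray) -> np.ndarray:
    """Quantize depth in [0, 1] to uint16, clipping and rounding (hypothetical helper)."""
    clipped = np.clip(depth.astype(np.float64), 0.0, 1.0)
    return np.round(clipped * SCALE_16BIT).astype(np.uint16)


def uint16_to_depth(depth_16bit: np.ndarray) -> np.ndarray:
    """Map a 16-bit depth map back to floating-point values in [0, 1] (hypothetical helper)."""
    return depth_16bit.astype(np.float64) / SCALE_16BIT


rng = np.random.default_rng(seed=0)
depth = rng.random((4, 6), dtype=np.float32)  # stand-in for pipe_out.depth_np
restored = uint16_to_depth(depth_to_uint16(depth))
max_error = float(np.abs(restored - depth).max())
print(f"max round-trip error: {max_error:.2e} (bound {0.5 / SCALE_16BIT:.2e})")
```

Loading the saved PNG back as a `uint16` array and passing it to `uint16_to_depth` recovers the normalized depth up to this error; the `.npy` file keeps the unquantized values.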

 
+
+def process_3d(
+input_image,
+files,
+size_longest_px,
+size_longest_cm,
+filter_size,
+plane_near,
+plane_far,
+embossing,
+frame_thickness,
+frame_near,
+frame_far,
+):
+if input_image is None or len(files) < 1:
+raise gr.Error("Please upload an image (or use examples) and compute depth first")
+
+if plane_near >= plane_far:
+raise gr.Error("NEAR plane must have a value smaller than the FAR plane")
+
+def _process_3d(size_longest_px, filter_size, vertex_colors, scene_lights, output_model_scale=None):
+image_rgb = input_image
+image_depth = files[0]
+
+image_rgb_basename, image_rgb_ext = os.path.splitext(image_rgb)
+image_depth_basename, image_depth_ext = os.path.splitext(image_depth)
+
+image_rgb_content = Image.open(image_rgb)
+image_rgb_w, image_rgb_h = image_rgb_content.width, image_rgb_content.height
+image_rgb_d = max(image_rgb_w, image_rgb_h)
+image_new_w = size_longest_px * image_rgb_w // image_rgb_d
+image_new_h = size_longest_px * image_rgb_h // image_rgb_d
+
+image_rgb_new = image_rgb_basename + f"_{size_longest_px}" + image_rgb_ext
+image_depth_new = image_depth_basename + f"_{size_longest_px}" + image_depth_ext
+image_rgb_content.resize((image_new_w, image_new_h), Image.LANCZOS).save(
+image_rgb_new
+)
+Image.open(image_depth).resize((image_new_w, image_new_h), Image.LANCZOS).save(
+image_depth_new
+)
+
+path_glb, path_stl = extrude_depth_3d(
+image_rgb_new,
+image_depth_new,
+output_model_scale=size_longest_cm * 10 if output_model_scale is None else output_model_scale,
+filter_size=filter_size,
+coef_near=plane_near,
+coef_far=plane_far,
+emboss=embossing / 100,
+f_thic=frame_thickness / 100,
+f_near=frame_near / 100,
+f_back=frame_far / 100,
+vertex_colors=vertex_colors,
+scene_lights=scene_lights,
+)
+
+return path_glb, path_stl
+
+path_viewer_glb, _ = _process_3d(256, filter_size, vertex_colors=False, scene_lights=True, output_model_scale=1)
+path_files_glb, path_files_stl = _process_3d(size_longest_px, filter_size, vertex_colors=True, scene_lights=False)
+
+# sanitize 3d viewer glb path to keep babylon.js happy
+path_viewer_glb_sanitized = os.path.join(os.path.dirname(path_viewer_glb), "preview.glb")
+if path_viewer_glb_sanitized != path_viewer_glb:
+os.rename(path_viewer_glb, path_viewer_glb_sanitized)
+path_viewer_glb = path_viewer_glb_sanitized
+
+return path_viewer_glb, [path_files_glb, path_files_stl]
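`_process_3d` runs twice: at 256 px with `output_model_scale=1` for the in-browser preview, and at the user-chosen `size_longest_px` with vertex colors for the downloadable GLB and STL files. Each run resizes the RGB image and the depth map so that the longer side equals `size_longest_px`, keeping the aspect ratio through floor division. The helper below is a hypothetical standalone version of that arithmetic (no Pillow involved), with an input guard the original does not have.

```python
from typing import Tuple


def fit_longest_side(width: int, height: int, size_longest_px: int) -> Tuple[int, int]:
    """Scale (width, height) so the longer side becomes size_longest_px.

    Mirrors the floor-division expressions in `_process_3d`:
    new_side = size_longest_px * side // max(width, height).
    """
    if width <= 0 or height <= 0 or size_longest_px <= 0:
        raise ValueError("dimensions must be positive")
    longest = max(width, height)
    return size_longest_px * width // longest, size_longest_px * height // longest


print(fit_longest_side(1024, 768, 512))  # landscape -> (512, 384)
print(fit_longest_side(768, 1024, 256))  # portrait  -> (192, 256)
```

Because the shorter side is floored, an extremely elongated image can collapse to zero pixels: `fit_longest_side(1000, 3, 256)` returns `(256, 0)`, so a caller may want to clamp each side to at least 1 pixel.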

|

| 138 |

+

|

| 139 |

+

|

| 140 |

+

def run_demo_server(pipe):

|

| 141 |

+

process_pipe = functools.partial(process, pipe)

|

| 142 |

+

os.environ["GRADIO_ALLOW_FLAGGING"] = "never"

|

| 143 |

+

|

| 144 |

+

with gr.Blocks(

|

| 145 |

+

analytics_enabled=False,

|

| 146 |

+

title="Marigold Depth Estimation",

|

| 147 |

+

css="""

|

| 148 |

+

#download {

|

| 149 |

+

height: 118px;

|

| 150 |

+

}

|

| 151 |

+

.slider .inner {

|

| 152 |

+

width: 5px;

|

| 153 |

+

background: #FFF;

|

| 154 |

+

}

|

| 155 |

+

.viewport {

|

| 156 |

+

aspect-ratio: 4/3;

|

| 157 |

+

}

|

| 158 |

+

""",

|

| 159 |

+

) as demo:

|

| 160 |

+

gr.Markdown(

|

| 161 |

+

"""

|

| 162 |

+

<h1 align="center">Marigold Depth Estimation</h1>

|

| 163 |

+

<p align="center">

|

| 164 |

+

<a title="Website" href="https://marigoldmonodepth.github.io/" target="_blank" rel="noopener noreferrer" style="display: inline-block;">

|

| 165 |

+

<img src="https://www.obukhov.ai/img/badges/badge-website.svg">

|

| 166 |

+

</a>

|

| 167 |

+

<a title="arXiv" href="https://arxiv.org/abs/2312.02145" target="_blank" rel="noopener noreferrer" style="display: inline-block;">

|

| 168 |

+

<img src="https://www.obukhov.ai/img/badges/badge-pdf.svg">

|

| 169 |

+

</a>

|

| 170 |

+

<a title="Github" href="https://github.com/prs-eth/marigold" target="_blank" rel="noopener noreferrer" style="display: inline-block;">

|

| 171 |

+

<img src="https://img.shields.io/github/stars/prs-eth/marigold?label=GitHub%20%E2%98%85&logo=github&color=C8C" alt="badge-github-stars">

|

| 172 |

+

</a>

|

| 173 |

+

<a title="Social" href="https://twitter.com/antonobukhov1" target="_blank" rel="noopener noreferrer" style="display: inline-block;">

|

| 174 |

+

<img src="https://www.obukhov.ai/img/badges/badge-social.svg" alt="social">

|

| 175 |

+

</a>

|

| 176 |

+

</p>

|

| 177 |

+

<p align="justify">

|

| 178 |

+

Marigold is the new state-of-the-art depth estimator for images in the wild.

|

| 179 |

+

Upload your image into the <b>left</b> side, or click any of the <b>examples</b> below.

|

| 180 |

+

The result will be computed and appear on the <b>right</b> in the output comparison window.

|

| 181 |

+

<b style="color: red;">NEW</b>: Scroll down to the new 3D printing part of the demo!

|

| 182 |

+

</p>

|

| 183 |

+

"""

|

| 184 |

+

)

|

| 185 |

+

|

| 186 |

+

        with gr.Row():
            with gr.Column():
                input_image = gr.Image(
                    label="Input Image",
                    type="filepath",
                )
                with gr.Accordion("Advanced options", open=False):
                    ensemble_size = gr.Slider(
                        label="Ensemble size",
                        minimum=1,
                        maximum=20,
                        step=1,
                        value=10,
                    )
                    denoise_steps = gr.Slider(
                        label="Number of denoising steps",
                        minimum=1,
                        maximum=20,
                        step=1,
                        value=10,
                    )
                    processing_res = gr.Radio(
                        [
                            ("Native", 0),
                            ("Recommended", 768),
                        ],
                        label="Processing resolution",
                        value=768,
                    )
                input_output_16bit = gr.File(
                    label="Predicted depth (16-bit)",
                    visible=False,
                )
                input_output_fp32 = gr.File(
                    label="Predicted depth (32-bit)",
                    visible=False,
                )
                input_output_vis = gr.File(
                    label="Predicted depth (red-near, blue-far)",
                    visible=False,
                )
                with gr.Row():
                    submit_btn = gr.Button(value="Compute Depth", variant="primary")
                    clear_btn = gr.Button(value="Clear")
            with gr.Column():
                output_slider = ImageSlider(
                    label="Predicted depth (red-near, blue-far)",
                    type="filepath",
                    show_download_button=True,
                    show_share_button=True,
                    interactive=False,
                    elem_classes="slider",
                    position=0.25,
                )
                files = gr.Files(
                    label="Depth outputs",
                    elem_id="download",
                    interactive=False,
                )


        demo_3d_header = gr.Markdown(
            """
            <h3 align="center">3D Printing Depth Maps</h3>
            <p align="justify">
                This part of the demo uses Marigold depth maps estimated in the previous step to create a
                3D-printable model. The models are watertight, with correct normals, and exported in the STL format.
                We recommend creating the first model with the default parameters and iterating on it until you get the best
                result (see Pro Tips below).
            </p>
            """,
            render=False,
        )


        demo_3d = gr.Row(render=False)
        with demo_3d:
            with gr.Column():
                with gr.Accordion("3D printing demo: Main options", open=True):
                    plane_near = gr.Slider(
                        label="Relative position of the near plane (between 0 and 1)",
                        minimum=0.0,
                        maximum=1.0,
                        step=0.001,
                        value=0.0,
                    )
                    plane_far = gr.Slider(
                        label="Relative position of the far plane (between near and 1)",
                        minimum=0.0,
                        maximum=1.0,
                        step=0.001,
                        value=1.0,
                    )
                    embossing = gr.Slider(
                        label="Embossing level",
                        minimum=0,
                        maximum=100,
                        step=1,
                        value=20,
                    )
                with gr.Accordion("3D printing demo: Advanced options", open=False):
                    size_longest_px = gr.Slider(
                        label="Size (px) of the longest side",
                        minimum=256,
                        maximum=1024,
                        step=256,
                        value=512,
                    )
                    size_longest_cm = gr.Slider(
                        label="Size (cm) of the longest side",
                        minimum=1,
                        maximum=100,
                        step=1,
                        value=10,
                    )
                    filter_size = gr.Slider(
                        label="Size (px) of the smoothing filter",
                        minimum=1,
                        maximum=5,
                        step=2,
                        value=3,
                    )
                    frame_thickness = gr.Slider(
                        label="Frame thickness",
                        minimum=0,
                        maximum=100,
                        step=1,
                        value=5,
                    )
                    frame_near = gr.Slider(
                        label="Frame's near plane offset",
                        minimum=-100,
                        maximum=100,
                        step=1,
                        value=1,
                    )
                    frame_far = gr.Slider(
                        label="Frame's far plane offset",
                        minimum=1,
                        maximum=10,
                        step=1,
                        value=1,
                    )
                with gr.Row():
                    submit_3d = gr.Button(value="Create 3D", variant="primary")
                    clear_3d = gr.Button(value="Clear 3D")

                gr.Markdown(
                    """
                    <h5 align="center">Pro Tips</h5>
                    <ol>
                        <li><b>Re-render with new parameters</b>: Click "Clear 3D" and then "Create 3D".</li>
                        <li><b>Adjust 3D scale and cut-off focus</b>: Set the frame's near plane offset to the
                        minimum and use the 3D preview to evaluate depth scaling. Repeat until the scale is correct and
                        everything important is in focus. Set the optimal value for the frame's near
                        plane offset as the last step.</li>
                        <li><b>Increase details</b>: Decrease the size of the smoothing filter (this also increases noise).</li>
                    </ol>
                    """
                )


            with gr.Column():
                viewer_3d = gr.Model3D(
                    camera_position=(75.0, 90.0, 1.25),
                    elem_classes="viewport",
                    label="3D preview (low-res, relief highlight)",
                    interactive=False,
                )
                files_3d = gr.Files(
                    label="3D model outputs (high-res)",
                    elem_id="download",
                    interactive=False,
                )

        blocks_settings_depth = [ensemble_size, denoise_steps, processing_res]
        blocks_settings_3d = [plane_near, plane_far, embossing, size_longest_px, size_longest_cm, filter_size,
                              frame_thickness, frame_near, frame_far]
        blocks_settings = blocks_settings_depth + blocks_settings_3d
        map_id_to_default = {b._id: b.value for b in blocks_settings}


        inputs = [
            input_image,
            ensemble_size,
            denoise_steps,
            processing_res,
            input_output_16bit,
            input_output_fp32,
            input_output_vis,
            plane_near,
            plane_far,
            embossing,
            filter_size,
            frame_near,
        ]
        outputs = [
            submit_btn,
            input_image,
            output_slider,
            files,
        ]

        def submit_depth_fn(*args):
            out = list(process_pipe(*args))
            out = [gr.Button(interactive=False), gr.Image(interactive=False)] + out
            return out

        submit_btn.click(
            fn=submit_depth_fn,
            inputs=inputs,
            outputs=outputs,
            concurrency_limit=1,
        )


        gr.Examples(
            fn=submit_depth_fn,
            examples=[
                [
                    "files/bee.jpg",
                    10,  # ensemble_size
                    10,  # denoise_steps
                    768,  # processing_res
                    "files/bee_depth_16bit.png",
                    "files/bee_depth_fp32.npy",
                    "files/bee_depth_colored.png",
                    0.0,  # plane_near
                    0.5,  # plane_far
                    20,  # embossing
                    3,  # filter_size
                    0,  # frame_near
                ],
                [
                    "files/cat.jpg",
                    10,  # ensemble_size
                    10,  # denoise_steps
                    768,  # processing_res
                    "files/cat_depth_16bit.png",
                    "files/cat_depth_fp32.npy",
                    "files/cat_depth_colored.png",
                    0.0,  # plane_near
                    0.3,  # plane_far
                    20,  # embossing
                    3,  # filter_size
                    0,  # frame_near
                ],
                [
                    "files/swings.jpg",
                    10,  # ensemble_size
                    10,  # denoise_steps
                    768,  # processing_res
                    "files/swings_depth_16bit.png",
                    "files/swings_depth_fp32.npy",
                    "files/swings_depth_colored.png",
                    0.05,  # plane_near
                    0.25,  # plane_far
                    10,  # embossing
                    1,  # filter_size
                    0,  # frame_near
                ],
                [
                    "files/einstein.jpg",
                    10,  # ensemble_size
                    10,  # denoise_steps
                    768,  # processing_res
                    "files/einstein_depth_16bit.png",
                    "files/einstein_depth_fp32.npy",
                    "files/einstein_depth_colored.png",
                    0.0,  # plane_near
                    0.5,  # plane_far
                    50,  # embossing
                    3,  # filter_size
                    -15,  # frame_near
                ],
            ],
            inputs=inputs,
            outputs=outputs,
            cache_examples=True,
        )

        demo_3d_header.render()
        demo_3d.render()


        def clear_fn():
            out = []
            for b in blocks_settings:
                out.append(map_id_to_default[b._id])
            out += [
                gr.Button(interactive=True),
                gr.Button(interactive=True),
                gr.Image(value=None, interactive=True),
                None, None, None, None, None, None, None,
            ]
            return out

        clear_btn.click(
            fn=clear_fn,
            inputs=[],
            outputs=blocks_settings + [
                submit_btn,
                submit_3d,
                input_image,
                input_output_16bit,
                input_output_fp32,
                input_output_vis,
                output_slider,
                files,
                viewer_3d,
                files_3d,
            ],
        )

        def submit_3d_fn(*args):
            out = list(process_3d(*args))
            out = [gr.Button(interactive=False)] + out
            return out

        submit_3d.click(
            fn=submit_3d_fn,
            inputs=[
                input_image,
                files,
                size_longest_px,
                size_longest_cm,
                filter_size,
                plane_near,
                plane_far,
                embossing,
                frame_thickness,
                frame_near,
                frame_far,
            ],
            outputs=[submit_3d, viewer_3d, files_3d],
            concurrency_limit=1,
        )

        def clear_3d_fn():
            return [gr.Button(interactive=True), None, None]

        clear_3d.click(
            fn=clear_3d_fn,
            inputs=[],
            outputs=[submit_3d, viewer_3d, files_3d],
        )

        demo.queue().launch(server_name="0.0.0.0", server_port=7860)



def prefetch_hf_cache(pipe):
    process(pipe, "files/bee.jpg", 1, 1, 64)
    shutil.rmtree("files/bee_output")


def main():
    REPO_URL = "https://github.com/prs-eth/Marigold.git"
    REPO_HASH = "22437a9d"
    REPO_DIR = "Marigold"
    CHECKPOINT = "Bingxin/Marigold"

    if os.path.isdir(REPO_DIR):
        shutil.rmtree(REPO_DIR)
    repo = git.Repo.clone_from(REPO_URL, REPO_DIR)
    repo.git.checkout(REPO_HASH)

    sys.path.append(os.path.join(os.getcwd(), REPO_DIR))

    from marigold import MarigoldPipeline

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    pipe = MarigoldPipeline.from_pretrained(CHECKPOINT)
    try:
        import xformers
        pipe.enable_xformers_memory_efficient_attention()
    except:
        pass  # run without xformers

    pipe = pipe.to(device)
    prefetch_hf_cache(pipe)
    run_demo_server(pipe)


if __name__ == "__main__":
    main()
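
The 3D printing panel in this commit exposes relative near and far planes (0 to 1), an "Embossing level" slider (0 to 100, default 20) and a physical size for the longest side, but the diff does not show how `process_3d` combines them. Below is a minimal sketch of one plausible mapping. It assumes the depth map is normalized to [0, 1] with 0 as the nearest point, and it reads "embossing" as the maximum relief height in percent of the longest side. Both readings are assumptions, and `depth_to_relief` is a hypothetical helper, not code from `extrude.py`.

```python
import numpy as np


def depth_to_relief(depth, plane_near=0.0, plane_far=1.0, embossing=20, size_longest_cm=10.0):
    """Hypothetical mapping from relative depth to relief height in cm.

    Assumes depth is in [0, 1] with 0 = nearest point (assumption, not taken from extrude.py).
    """
    if not 0.0 <= plane_near < plane_far <= 1.0:
        raise ValueError("expected 0 <= plane_near < plane_far <= 1")
    # Everything closer than the near plane or farther than the far plane is flattened onto it.
    d = np.clip(depth, plane_near, plane_far)
    # Rescale the kept depth slab to [0, 1], then flip so near surfaces stand tallest.
    t = (d - plane_near) / (plane_far - plane_near)
    height01 = 1.0 - t
    # Assumed meaning of "embossing": maximum relief as a percentage of the longest side.
    max_height_cm = size_longest_cm * embossing / 100.0
    return height01 * max_height_cm


depth = np.array([[0.0, 0.25], [0.5, 1.0]])
relief = depth_to_relief(depth, plane_far=0.5)  # pixels at or beyond depth 0.5 end up at height 0
```

Under this reading, a smaller `plane_far` (as in the examples above, 0.25 to 0.5) spends the whole relief budget on the foreground and pushes the background onto the flat back plane.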
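
Two advanced options shape the height map before meshing: "Size (px) of the longest side" (256 to 1024) and an odd-sized "smoothing filter" (1, 3 or 5 px). The filter type is not stated in this view. The sketch below swaps in nearest-neighbour resizing and a median filter, using only NumPy; `resize_longest_side` and `median_smooth` are hypothetical names.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def resize_longest_side(img, size_longest_px):
    """Nearest-neighbour resize of a 2D array so its longer side equals size_longest_px."""
    h, w = img.shape
    scale = size_longest_px / max(h, w)
    new_h, new_w = max(1, round(h * scale)), max(1, round(w * scale))
    # Map each output pixel centre back to the source pixel that contains it.
    rows = np.minimum(((np.arange(new_h) + 0.5) * h / new_h).astype(int), h - 1)
    cols = np.minimum(((np.arange(new_w) + 0.5) * w / new_w).astype(int), w - 1)
    return img[np.ix_(rows, cols)]


def median_smooth(img, filter_size):
    """Median filter with an odd square window; filter_size=1 leaves values unchanged."""
    if filter_size < 1 or filter_size % 2 != 1:
        raise ValueError("filter_size must be a positive odd integer")
    r = filter_size // 2
    # Replicate border pixels so the output keeps the input shape.
    padded = np.pad(img, r, mode="edge")
    windows = sliding_window_view(padded, (filter_size, filter_size))  # shape (H, W, k, k)
    return np.median(windows, axis=(-2, -1))


depth = np.random.default_rng(0).random((600, 800))
small = resize_longest_side(depth, 512)  # shape (384, 512)
smooth = median_smooth(small, 3)
```

A median filter removes isolated spikes while keeping depth edges sharper than a box blur would, which is consistent with the Pro Tip that a smaller filter keeps more detail but also more noise.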
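
The 3D header text promises watertight models with correct normals, exported as STL. One common way to get a closed mesh from a height map is to triangulate the pixel grid for the top surface, add a flat bottom, and stitch the two with wall quads along the border. The sketch below does this and writes ASCII STL. It ignores the frame options entirely, and `heightmap_to_mesh` and `to_ascii_stl` are hypothetical names, not functions from `extrude.py`.

```python
import numpy as np


def heightmap_to_mesh(height, pixel_size=1.0, base=1.0):
    """Closed triangle mesh for a relief: grid-triangulated top, flat bottom, side walls.

    height: (H, W) relief heights with H, W >= 2. Top vertices sit at z = height + base,
    bottom vertices at z = 0. Faces are wound counter-clockwise when seen from outside.
    """
    h, w = height.shape
    if h < 2 or w < 2:
        raise ValueError("height map must be at least 2x2")
    ys, xs = np.mgrid[0:h, 0:w]
    # Image rows grow downwards, so row r maps to y = -r to keep the model upright.
    top = np.stack([xs * pixel_size, -ys * pixel_size, height + base], axis=-1).reshape(-1, 3)
    bottom = top.copy()
    bottom[:, 2] = 0.0
    verts = np.concatenate([top, bottom]).astype(float)
    n = h * w  # offset from a top vertex to the bottom vertex beneath it

    def vid(r, c):
        return r * w + c

    faces = []
    for r in range(h - 1):
        for c in range(w - 1):
            a, b, d, e = vid(r, c), vid(r, c + 1), vid(r + 1, c), vid(r + 1, c + 1)
            faces += [(a, d, e), (a, e, b)]  # top surface, normal +z
            faces += [(a + n, e + n, d + n), (a + n, b + n, e + n)]  # bottom, normal -z

    # Border of the top surface, walked counter-clockwise as seen from +z.
    loop = [(0, c) for c in range(w - 1, 0, -1)]
    loop += [(r, 0) for r in range(h - 1)]
    loop += [(h - 1, c) for c in range(w - 1)]
    loop += [(r, w - 1) for r in range(h - 1, 0, -1)]
    for i in range(len(loop)):
        u, v = vid(*loop[i]), vid(*loop[(i + 1) % len(loop)])
        # Wall quad under border edge u->v, split into two outward-facing triangles.
        faces += [(v, u, u + n), (v, u + n, v + n)]
    return verts, np.array(faces, dtype=np.int64)


def to_ascii_stl(verts, faces, name="relief"):
    """Serialize a triangle mesh as ASCII STL with per-facet unit normals."""
    lines = [f"solid {name}"]
    for i0, i1, i2 in faces:
        v0, v1, v2 = verts[i0], verts[i1], verts[i2]
        nrm = np.cross(v1 - v0, v2 - v0)
        length = np.linalg.norm(nrm)
        nrm = nrm / length if length > 0 else nrm
        lines.append(f"  facet normal {nrm[0]:e} {nrm[1]:e} {nrm[2]:e}")
        lines.append("    outer loop")
        for v in (v0, v1, v2):
            lines.append(f"      vertex {v[0]:e} {v[1]:e} {v[2]:e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


relief = np.random.default_rng(0).random((4, 5))  # stand-in height map
verts, faces = heightmap_to_mesh(relief, pixel_size=0.5, base=1.0)
stl_text = to_ascii_stl(verts, faces)
```

Every directed edge appears exactly once and its reverse exactly once, which is what makes the mesh watertight with consistently outward normals. STL carries no units and most slicers read it as millimetres, so a `pixel_size` of `size_longest_cm * 10 / size_longest_px` would give the requested physical size. Binary STL is the more compact choice for high-resolution outputs.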
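
Each depth prediction is offered both as a 16-bit PNG and as a 32-bit float `.npy` file. The PNG encoding is not shown in this diff. Assuming it stores the [0, 1] depth scaled to the full `uint16` range, the round trip loses at most half a quantization step, 0.5 / 65535 (about 7.6e-6). `to_uint16` and `from_uint16` are hypothetical helpers.

```python
import numpy as np


def to_uint16(depth):
    """Quantize depth in [0, 1] to the full uint16 range (assumed encoding)."""
    d = np.clip(depth, 0.0, 1.0)
    return np.round(d * 65535.0).astype(np.uint16)


def from_uint16(depth_u16):
    """Invert to_uint16 back to float depth in [0, 1]."""
    return depth_u16.astype(np.float32) / 65535.0


depth = np.random.default_rng(0).random((64, 64))
max_err = np.abs(from_uint16(to_uint16(depth)) - depth).max()  # bounded by about 0.5 / 65535
```

The `.npy` file avoids this quantization entirely, which matters mostly when a narrow near/far slab is stretched over the full relief height.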
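
`clear_fn` resets every settings control to a value captured once at build time in `map_id_to_default`, then appends one UI update or `None` per remaining output. The returned list must therefore line up one-to-one with `outputs=blocks_settings + [...]`: 12 defaults, 3 component updates and 7 `None` values. The same pattern without Gradio is sketched below, using a hypothetical `Control` stand-in for a component.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class Control:
    """Hypothetical stand-in for a Gradio component: an id plus a current value."""
    id: int
    value: Any


def snapshot_defaults(controls: List[Control]) -> Dict[int, Any]:
    # Capture values once, before any user interaction, keyed by id.
    return {c.id: c.value for c in controls}


def reset_values(controls: List[Control], defaults: Dict[int, Any], n_cleared: int) -> List[Any]:
    # One default per settings control, in output order, then None for each output to empty.
    return [defaults[c.id] for c in controls] + [None] * n_cleared


settings = [Control(0, 10), Control(1, 10), Control(2, 768)]
defaults = snapshot_defaults(settings)
settings[0].value = 3  # the user moves a slider later on
print(reset_values(settings, defaults, n_cleared=2))  # [10, 10, 768, None, None]
```

Snapshotting at build time is what lets "Clear" restore the original values even after the user has changed them.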


extrude.py

ADDED


@@ -0,0 +1,322 @@
