Configuration error

Upload 23 files

- .gitattributes +3 -0
- .gitignore +143 -0
- README.md +118 -13
- __pycache__/helper.cpython-312.pyc +0 -0
- __pycache__/settings.cpython-312.pyc +0 -0
- app.py +110 -0
- assets/Objdetectionyoutubegif-1.m4v +0 -0
- assets/pic1.png +0 -0
- assets/pic3.png +0 -0
- assets/segmentation.png +0 -0
- helper.py +231 -0
- images/GS.jpg +0 -0
- images/deneme.jpeg +0 -0
- images/deneme_detected.png +0 -0
- images/office_4.jpg +0 -0
- images/office_4_detected.jpg +0 -0
- packages.txt +3 -0
- requirements.txt +87 -0
- settings.py +54 -0
- videos/video_1.mp4 +3 -0
- videos/video_2.mp4 +3 -0
- videos/video_3.mp4 +3 -0
- weights/yolov8m.pt +3 -0
- weights/yolov8n-seg.pt +3 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+videos/video_1.mp4 filter=lfs diff=lfs merge=lfs -text
+videos/video_2.mp4 filter=lfs diff=lfs merge=lfs -text
+videos/video_3.mp4 filter=lfs diff=lfs merge=lfs -text
```
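The three added lines route the uploaded videos through Git LFS. As a rough illustration of which paths such patterns catch, the sketch below approximates gitattributes matching with Python's `fnmatch`. It is a simplification (real gitattributes rules for `**`, negation and directory anchoring differ), and `is_lfs_tracked` is a hypothetical helper, not part of this repository or of Git.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Patterns taken from the hunk above (only a subset of the full .gitattributes).
LFS_PATTERNS = (
    "*.zip",
    "*.zst",
    "*tfevents*",
    "videos/video_1.mp4",
    "videos/video_2.mp4",
    "videos/video_3.mp4",
)


def is_lfs_tracked(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate gitattributes matching (hypothetical helper).

    A pattern without a slash is compared against the file's base name;
    a pattern containing a slash is compared against the full repository path.
    """
    name = PurePosixPath(path).name
    for pattern in patterns:
        target = path if "/" in pattern else name
        if fnmatchcase(target, pattern):
            return True
    return False


for p in ["videos/video_1.mp4", "backup/archive.zip", "app.py"]:
    print(p, is_lfs_tracked(p))
```

Because each video is listed by exact path, a new file such as `videos/video_4.mp4` would not be matched until a line for it (or a broader pattern) is added.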

.gitignore

ADDED

`@@ -0,0 +1,143 @@`

```gitignore
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Local folder
local_folder
project_demo
project_demo/
logs/
local_folder/
/demo.py
demo.py
runs/


# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
pip-wheel-metadata/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

.vscode

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py

# pyenv
.python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# PEP 582; used by e.g. github.com/David-OConnor/pyflow
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
venv_/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/
```

README.md

CHANGED

|

@@ -1,13 +1,118 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Real-time Object Detection and Tracking with YOLOv8 and Streamlit

|

| 2 |

+

|

| 3 |

+

This repository is a comprehensive open-source project that demonstrates the integration of object detection and tracking using the YOLOv8 object detection algorithm and Streamlit, a popular Python web application framework for building interactive web applications. This project provides a user-friendly and customizable interface that can detect and track objects in real-time video streams.

|

| 4 |

+

|

| 5 |

+

## Demo WebApp

|

| 6 |

+

|

| 7 |

+

This app is up and running on Streamlit cloud server!!! Thanks 'Streamlit' for the community support for the cloud upload. You can check the demo of this web application on the link below.

|

| 8 |

+

|

| 9 |

+

[yolov8-streamlit-detection-tracking-webapp](https://codingmantras-yolov8-streamlit-detection-tracking-app-njcqjg.streamlit.app/)

|

| 10 |

+

|

| 11 |

+

## Tracking With Object Detection Demo

|

| 12 |

+

|

| 13 |

+

<https://user-images.githubusercontent.com/104087274/234874398-75248e8c-6965-4c91-9176-622509f0ad86.mov>

|

| 14 |

+

|

| 15 |

+

## Demo Pics

|

| 16 |

+

|

| 17 |

+

### Home page

|

| 18 |

+

|

| 19 |

+

<img src="https://github.com/CodingMantras/yolov8-streamlit-detection-tracking/blob/master/assets/pic1.png" >

|

| 20 |

+

|

| 21 |

+

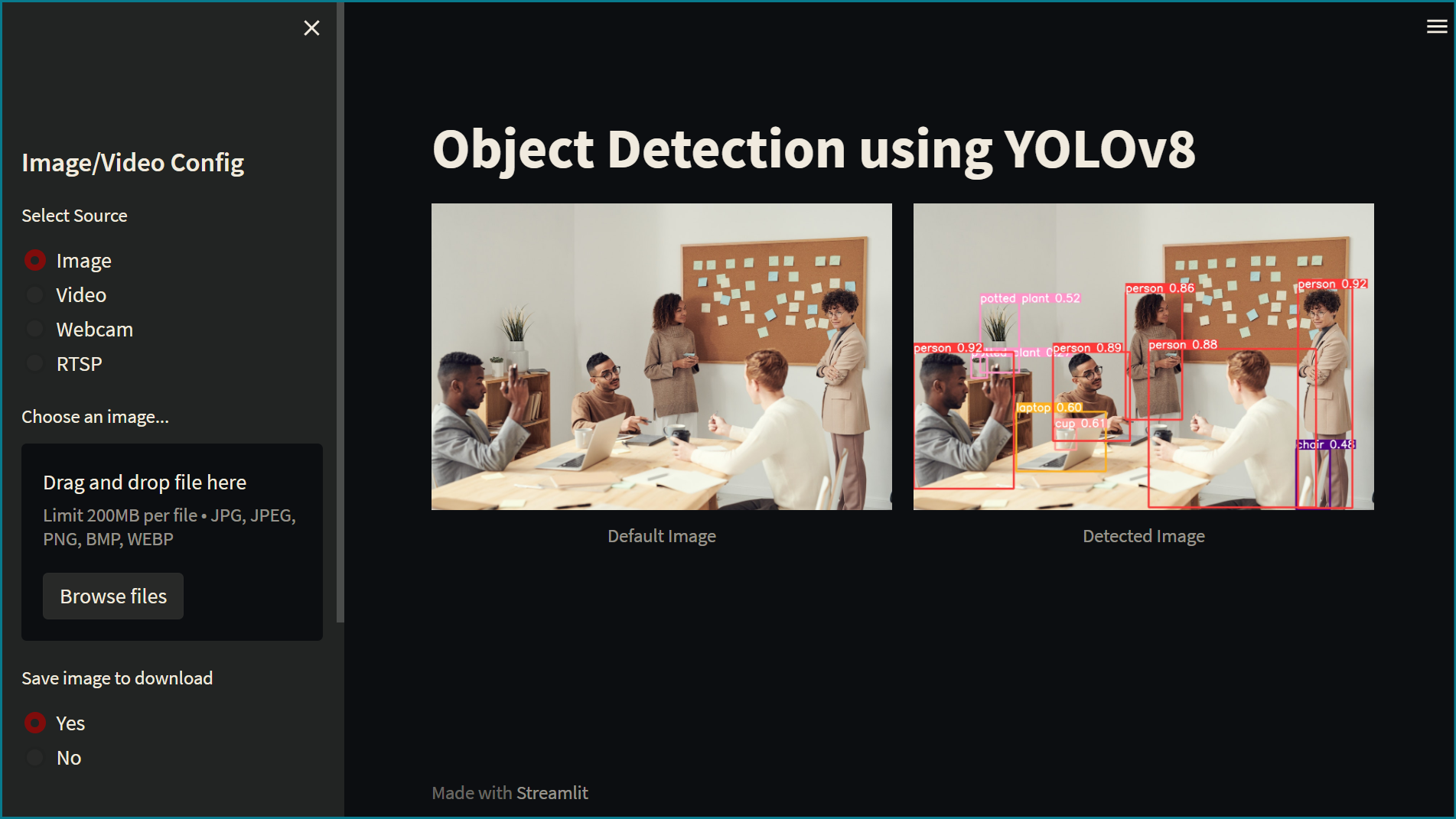

### Page after uploading an image and object detection

|

| 22 |

+

|

| 23 |

+

<img src="https://github.com/CodingMantras/yolov8-streamlit-detection-tracking/blob/master/assets/pic3.png" >

|

| 24 |

+

|

| 25 |

+

### Segmentation task on image

|

| 26 |

+

|

| 27 |

+

<img src="https://github.com/CodingMantras/yolov8-streamlit-detection-tracking/blob/master/assets/segmentation.png" >

|

| 28 |

+

|

| 29 |

+

## Requirements

|

| 30 |

+

|

| 31 |

+

Python 3.6+

|

| 32 |

+

YOLOv8

|

| 33 |

+

Streamlit

|

| 34 |

+

|

| 35 |

+

```bash

|

| 36 |

+

pip install ultralytics streamlit pytube

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

## Installation

|

| 40 |

+

|

| 41 |

+

- Clone the repository: git clone <https://github.com/CodingMantras/yolov8-streamlit-detection-tracking.git>

|

| 42 |

+

- Change to the repository directory: `cd yolov8-streamlit-detection-tracking`

|

| 43 |

+

- Create `weights`, `videos`, and `images` directories inside the project.

|

| 44 |

+

- Download the pre-trained YOLOv8 weights from (<https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt>) and save them to the `weights` directory in the same project.

|

| 45 |

+

|

| 46 |

+

## Usage

|

| 47 |

+

|

| 48 |

+

- Run the app with the following command: `streamlit run app.py`

|

| 49 |

+

- The app should open in a new browser window.

|

| 50 |

+

|

| 51 |

+

### ML Model Config

|

| 52 |

+

|

| 53 |

+

- Select task (Detection, Segmentation)

|

| 54 |

+

- Select model confidence

|

| 55 |

+

- Use the slider to adjust the confidence threshold (25-100) for the model.
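The slider value is a whole-number percentage, and `app.py` converts it with `float(...) / 100` before calling `model.predict(..., conf=confidence)`. A simplified sketch of what such a threshold does, assuming detections are plain `(label, score)` pairs: anything scoring below the threshold is dropped. `percent_to_conf` and `filter_detections` are hypothetical helpers for illustration, not Ultralytics' internal filtering code.

```python
from typing import List, Tuple

Detection = Tuple[str, float]  # (class label, confidence score in [0, 1])


def percent_to_conf(percent: int) -> float:
    """Convert the sidebar slider value (25-100) to the 0-1 scale the model expects."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return percent / 100


def filter_detections(detections: List[Detection], conf: float) -> List[Detection]:
    """Keep only detections whose score reaches the threshold (illustrative only)."""
    return [d for d in detections if d[1] >= conf]


conf = percent_to_conf(40)  # the slider's default value in app.py
found = [("person", 0.91), ("chair", 0.38), ("laptop", 0.40)]
print(conf, filter_detections(found, conf))  # 0.4 [('person', 0.91), ('laptop', 0.4)]
```

Raising the slider therefore trades recall for precision: fewer, but more certain, boxes are drawn.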

Once the model is configured, select a source.

### Detection on images

- The default image and its detection result are displayed on the main page.
- Select `Image` as the source (radio button).
- Upload an image by clicking the "Browse files" button.
- Click the "Detect Objects" button to run object detection on the uploaded image with the selected confidence threshold.
- The resulting image with the detected objects is displayed on the page. Click the "Download Image" button to download it (if "Save image to download" is selected).

### Detection in Videos

- Create a folder named `videos` in the project directory.
- Copy your videos into this folder.
- In `settings.py`, edit the following lines:

```python
# video
VIDEO_DIR = ROOT / 'videos'  # After creating the videos folder (ROOT is defined earlier in settings.py)

# Suppose you have four videos inside the videos folder.
# Replace the names of video_1, 2, 3, 4 with the names of your video files.
VIDEO_1_PATH = VIDEO_DIR / 'video_1.mp4'
VIDEO_2_PATH = VIDEO_DIR / 'video_2.mp4'
VIDEO_3_PATH = VIDEO_DIR / 'video_3.mp4'
VIDEO_4_PATH = VIDEO_DIR / 'video_4.mp4'

# Use the same names here as well.
VIDEOS_DICT = {
    'video_1': VIDEO_1_PATH,
    'video_2': VIDEO_2_PATH,
    'video_3': VIDEO_3_PATH,
    'video_4': VIDEO_4_PATH,
}

# Your videos will start appearing in the "Choose a video..." selector of the Streamlit web app.
```

- Click the `Detect Video Objects` button to run the selected task (detection or segmentation) on the selected video.
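Editing one path per video by hand is easy to get out of sync with the folder contents. A minimal sketch of an alternative, assuming every `.mp4` file in the videos folder should be offered in the selector: build the dictionary by scanning the folder with `pathlib`. `build_videos_dict` is a hypothetical helper, not part of the current `settings.py`.

```python
import tempfile
from pathlib import Path


def build_videos_dict(video_dir: Path, pattern: str = "*.mp4") -> dict:
    """Map each video's file stem (e.g. 'video_1') to its full path.

    Hypothetical replacement for the hand-written VIDEOS_DICT in settings.py.
    Files are sorted so the order in the selector is stable.
    """
    return {path.stem: path for path in sorted(Path(video_dir).glob(pattern))}


if __name__ == "__main__":
    # Demo on a throwaway folder instead of the real videos/ directory.
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("video_2.mp4", "video_1.mp4", "notes.txt"):
            (Path(tmp) / name).touch()
        print(list(build_videos_dict(Path(tmp))))  # ['video_1', 'video_2']
```

In `settings.py` this could replace the individual `VIDEO_n_PATH` lines with `VIDEOS_DICT = build_videos_dict(VIDEO_DIR)`; the selector keys would then be the file names without their extension.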

### Detection on RTSP

- Select the RTSP stream option as the source.
- Enter the RTSP URL in the text box and click the `Detect Objects` button.
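The app passes the text-box value straight to `cv2.VideoCapture`, so a typo only shows up as a failed connection. A small pre-check could catch malformed input earlier and avoid echoing credentials (the example URL shown in `helper.py` embeds a user name and password). The sketch below uses only `urllib.parse`; `check_rtsp_url` and `redact_credentials` are hypothetical helpers, not part of the app.

```python
from urllib.parse import urlsplit, urlunsplit


def check_rtsp_url(url: str) -> bool:
    """Return True if the URL uses the rtsp scheme and names a host."""
    parts = urlsplit(url.strip())
    return parts.scheme.lower() == "rtsp" and bool(parts.hostname)


def redact_credentials(url: str) -> str:
    """Replace any user:password in the URL with '***' so it is safe to display.

    Note: IPv6 host literals would need their brackets restored; not handled here.
    """
    parts = urlsplit(url)
    if parts.username is None and parts.password is None:
        return url
    host = parts.hostname or ""
    if parts.port is not None:
        host = f"{host}:{parts.port}"
    return urlunsplit((parts.scheme, f"***@{host}", parts.path, parts.query, parts.fragment))


example = "rtsp://admin:12345@192.168.1.210:554/Streaming/Channels/101"
print(check_rtsp_url(example))      # True
print(redact_credentials(example))  # rtsp://***@192.168.1.210:554/Streaming/Channels/101
```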

### Detection on YouTube Video URL

- Select YouTube as the source.
- Paste the video URL into the text box and click the `Detect Objects` button.
- The detection/segmentation task then runs on the YouTube video.
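In `helper.py` the stream is chosen with `yt.streams.filter(file_extension="mp4", res=720).first()`, and pytube's `first()` returns `None` when nothing matches, which then fails on `stream.url`. The sketch below shows one possible fallback policy on plain data: prefer the tallest MP4 stream at or below 720p, otherwise the smallest MP4 available. The `(resolution, extension)` tuples and `pick_stream` are hypothetical stand-ins, not pytube objects or pytube's API.

```python
from typing import List, Optional, Tuple

# (resolution label, file extension), e.g. ("720p", "mp4"); illustrative data only.
Stream = Tuple[str, str]


def _height(label: str) -> int:
    """Turn a label such as '720p' into the integer 720."""
    return int(label.rstrip("p"))


def pick_stream(streams: List[Stream], max_height: int = 720,
                extension: str = "mp4") -> Optional[Stream]:
    """Pick the tallest stream <= max_height, else the smallest one; None if no match."""
    candidates = [s for s in streams if s[1] == extension]
    if not candidates:
        return None
    within = [s for s in candidates if _height(s[0]) <= max_height]
    if within:
        return max(within, key=lambda s: _height(s[0]))
    return min(candidates, key=lambda s: _height(s[0]))


available = [("1080p", "mp4"), ("360p", "mp4"), ("720p", "webm")]
print(pick_stream(available))  # ('360p', 'mp4')
```

Capping the height keeps per-frame inference cheap, since `_display_detected_frames` resizes every frame to 720 pixels wide anyway.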

<https://user-images.githubusercontent.com/104087274/226178296-684ad72a-fe5f-4589-b668-95c835cd8d8a.mov>

## Acknowledgements

This app is based on the [YOLOv8](https://github.com/ultralytics/ultralytics) object detection algorithm and uses the [Streamlit](https://github.com/streamlit/streamlit) library for the user interface.

### Disclaimer

Please note that this project is intended for educational purposes only and should not be used in production environments.

**Hit star ⭐ if you like this repo!!!**

__pycache__/helper.cpython-312.pyc

ADDED

Binary file (8.7 kB).

__pycache__/settings.cpython-312.pyc

ADDED

Binary file (1.49 kB).

app.py

ADDED

`@@ -0,0 +1,110 @@`

```python
# Python built-in packages
from pathlib import Path
import PIL.Image  # import the submodule explicitly; a bare "import PIL" does not load PIL.Image

# External packages
import streamlit as st

# Local modules
import settings
import helper

# Setting page layout
st.set_page_config(
    page_title="Prediction with YOLO",
    page_icon="🤖",
    layout="wide",
    initial_sidebar_state="expanded"
)

# Main page heading
st.title("🚀 Object Recognition with YOLO")

# Sidebar
st.sidebar.header("Deep Learning Model Prediction Settings")

# Model options
model_type = st.sidebar.radio(
    "Select a Model", ['Detection', 'Segmentation'])

# The slider returns a percentage; the model expects a fraction in [0, 1]
confidence = float(st.sidebar.slider(
    "Select Model Confidence", 25, 100, 40)) / 100

# Selecting Detection or Segmentation
if model_type == 'Detection':
    model_path = Path(settings.DETECTION_MODEL)
elif model_type == 'Segmentation':
    model_path = Path(settings.SEGMENTATION_MODEL)

# Load pre-trained ML model
try:
    model = helper.load_model(model_path)
except Exception as ex:
    st.error(f"Unable to load model. Check the specified path: {model_path}")
    st.error(ex)
    st.stop()  # nothing below can run without a model

st.sidebar.header("Image and Video Configuration")
source_radio = st.sidebar.radio(
    "Select Source", settings.SOURCES_LIST)

source_img = None
# If image is selected
if source_radio == settings.IMAGE:
    source_img = st.sidebar.file_uploader(
        "Choose an image...", type=("jpg", "jpeg", "png", 'bmp', 'webp'))

    col1, col2 = st.columns(2)

    with col1:
        try:
            if source_img is None:
                default_image_path = str(settings.DEFAULT_IMAGE)
                default_image = PIL.Image.open(default_image_path)
                st.image(default_image_path, caption="Selected Image",
                         use_column_width=True)
            else:
                uploaded_image = PIL.Image.open(source_img)
                st.image(source_img, caption="Uploaded Image",
                         use_column_width=True)
        except Exception as ex:
            st.error("Error occurred while opening the image.")
            st.error(ex)

    with col2:
        if source_img is None:
            default_detected_image_path = str(settings.DEFAULT_DETECT_IMAGE)
            default_detected_image = PIL.Image.open(
                default_detected_image_path)
            st.image(default_detected_image_path, caption='Prediction',
                     use_column_width=True)
        else:
            if st.sidebar.button('Predict'):
                res = model.predict(uploaded_image,
                                    conf=confidence
                                    )
                boxes = res[0].boxes
                # plot() returns a BGR array; reverse the channel axis to get RGB
                res_plotted = res[0].plot()[:, :, ::-1]
                st.image(res_plotted, caption='Prediction',
                         use_column_width=True)
                try:
                    with st.expander("Predicted Objects"):
                        for box in boxes:
                            st.write(box.data)
                except Exception as ex:
                    # st.write(ex)
                    st.write("No image is uploaded yet!")

elif source_radio == settings.VIDEO:
    helper.play_stored_video(confidence, model)

elif source_radio == settings.WEBCAM:
    helper.play_webcam(confidence, model)

elif source_radio == settings.RTSP:
    helper.play_rtsp_stream(confidence, model)

elif source_radio == settings.YOUTUBE:
    helper.play_youtube_video(confidence, model)

else:
    st.error("Please select a valid source type!")
```
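The expression `res[0].plot()[:, :, ::-1]` reverses the last (channel) axis of the height x width x 3 array. The annotated frame comes back in OpenCV's BGR order (which is why `helper.py` passes `channels="BGR"` instead), while `st.image` assumes RGB by default. A dependency-free sketch of the same operation on nested lists, for illustration only; `bgr_to_rgb` is a hypothetical name, and with NumPy the slice above does this in one step.

```python
from typing import List

Pixel = List[int]
Image = List[List[Pixel]]  # rows -> columns -> [c0, c1, c2]


def bgr_to_rgb(image: Image) -> Image:
    """Reverse the channel order of every pixel, like array[:, :, ::-1]."""
    return [[pixel[::-1] for pixel in row] for row in image]


# A 1x2 image holding a pure-blue and a pure-red pixel, stored in BGR order.
bgr = [[[255, 0, 0], [0, 0, 255]]]
print(bgr_to_rgb(bgr))  # [[[0, 0, 255], [255, 0, 0]]]
```

Forgetting this step is a common cause of "blue faces" in displayed detections: the pixels are correct, only the channel order is swapped.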

assets/Objdetectionyoutubegif-1.m4v

ADDED

Binary file (528 kB).

assets/pic1.png

ADDED

assets/pic3.png

ADDED

assets/segmentation.png

ADDED

helper.py

ADDED

`@@ -0,0 +1,231 @@`

```python
from ultralytics import YOLO
import time
import streamlit as st
import cv2
from pytube import YouTube

import settings


def load_model(model_path):
    """
    Loads a YOLO object detection model from the specified model_path.

    Parameters:
        model_path (str): The path to the YOLO model file.

    Returns:
        A YOLO object detection model.
    """
    model = YOLO(model_path)
    return model


def display_tracker_options():
    display_tracker = st.radio("Display Tracker", ('Yes', 'No'))
    is_display_tracker = display_tracker == 'Yes'
    if is_display_tracker:
        tracker_type = st.radio("Tracker", ("bytetrack.yaml", "botsort.yaml"))
        return is_display_tracker, tracker_type
    return is_display_tracker, None


def _display_detected_frames(conf, model, st_frame, image, is_display_tracking=None, tracker=None):
    """
    Display the detected objects on a video frame using the YOLOv8 model.

    Args:
    - conf (float): Confidence threshold for object detection.
    - model (YoloV8): A YOLOv8 object detection model.
    - st_frame (Streamlit object): A Streamlit object to display the detected video.
    - image (numpy array): A numpy array representing the video frame.
    - is_display_tracking (bool): A flag indicating whether to display object tracking (default=None).
    - tracker (str): Tracker configuration file, e.g. "bytetrack.yaml" (default=None).

    Returns:
    None
    """

    # Resize every frame to 720x405 (16:9); frames with another aspect ratio are stretched
    image = cv2.resize(image, (720, int(720*(9/16))))

    # Display object tracking, if specified
    if is_display_tracking:
        res = model.track(image, conf=conf, persist=True, tracker=tracker)
    else:
        # Predict the objects in the image using the YOLOv8 model
        res = model.predict(image, conf=conf)

    # Plot the detected objects on the video frame
    res_plotted = res[0].plot()
    st_frame.image(res_plotted,
                   caption='Detected Video',
                   channels="BGR",
                   use_column_width=True
                   )
```
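`_display_detected_frames` resizes every frame to a fixed 720 x 405 (720 * 9/16 = 405), so 4:3 webcam frames or portrait videos get stretched. If that distortion matters, one option is to derive the output height from the frame's own aspect ratio. A sketch of that arithmetic, assuming the same 720-pixel target width; `fit_to_width` is a hypothetical helper, not part of `helper.py`.

```python
def fit_to_width(width: int, height: int, target_width: int = 720) -> tuple:
    """Return (new_width, new_height) that preserves the frame's aspect ratio.

    cv2.resize takes its dsize argument as (width, height), so the result can
    be passed straight through, e.g. cv2.resize(frame, fit_to_width(w, h)).
    """
    if width <= 0 or height <= 0:
        raise ValueError("frame dimensions must be positive")
    new_height = max(1, round(height * target_width / width))
    return target_width, new_height


# Note that a NumPy frame's shape is (height, width, channels).
print(fit_to_width(1920, 1080))  # (720, 405): 16:9 matches the current fixed size
print(fit_to_width(640, 480))    # (720, 540): 4:3 keeps its proportions
print(fit_to_width(1080, 1920))  # (720, 1280): portrait video
```

The trade-off is that output frames no longer share one size, so the Streamlit placeholder may change height between sources.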

```python
def play_youtube_video(conf, model):
    """
    Plays a YouTube video stream. Detects objects in real-time using the YOLOv8 object detection model.

    Parameters:
        conf: Confidence of YOLOv8 model.
        model: An instance of the `YOLOv8` class containing the YOLOv8 model.

    Returns:
        None

    Raises:
        None
    """
    source_youtube = st.sidebar.text_input("YouTube Video url")

    is_display_tracker, tracker = display_tracker_options()

    if st.sidebar.button('Detect Objects'):
        try:
            yt = YouTube(source_youtube)
            stream = yt.streams.filter(file_extension="mp4", res=720).first()
            if stream is None:  # first() returns None when no stream matches
                st.sidebar.error("No suitable MP4 stream found for this video.")
                return
            vid_cap = cv2.VideoCapture(stream.url)

            st_frame = st.empty()
            while (vid_cap.isOpened()):
                success, image = vid_cap.read()
                if success:
                    _display_detected_frames(conf,
                                             model,
                                             st_frame,
                                             image,
                                             is_display_tracker,
                                             tracker,
                                             )
                else:
                    vid_cap.release()
                    break
        except Exception as e:
            st.sidebar.error("Error loading video: " + str(e))


def play_rtsp_stream(conf, model):
    """
    Plays an RTSP stream. Detects objects in real-time using the YOLOv8 object detection model.

    Parameters:
        conf: Confidence of YOLOv8 model.
        model: An instance of the `YOLOv8` class containing the YOLOv8 model.

    Returns:
        None

    Raises:
        None
    """
    source_rtsp = st.sidebar.text_input("rtsp stream url:")
    st.sidebar.caption('Example URL: rtsp://admin:12345@192.168.1.210:554/Streaming/Channels/101')
    is_display_tracker, tracker = display_tracker_options()
    if st.sidebar.button('Detect Objects'):
        vid_cap = None  # defined up front so the except block can always check it
        try:
            vid_cap = cv2.VideoCapture(source_rtsp)
            st_frame = st.empty()
            while (vid_cap.isOpened()):
                success, image = vid_cap.read()
                if success:
                    _display_detected_frames(conf,
                                             model,
                                             st_frame,
                                             image,
                                             is_display_tracker,
                                             tracker
                                             )
                else:
                    vid_cap.release()
                    # vid_cap = cv2.VideoCapture(source_rtsp)
                    # time.sleep(0.1)
                    # continue
                    break
        except Exception as e:
            if vid_cap is not None:
                vid_cap.release()
            st.sidebar.error("Error loading RTSP stream: " + str(e))


def play_webcam(conf, model):
    """
    Plays a webcam stream. Detects objects in real-time using the YOLOv8 object detection model.

    Parameters:
        conf: Confidence of YOLOv8 model.
        model: An instance of the `YOLOv8` class containing the YOLOv8 model.

    Returns:
        None

    Raises:
        None
    """
    source_webcam = settings.WEBCAM_PATH
    is_display_tracker, tracker = display_tracker_options()
    if st.sidebar.button('Detect Objects'):
        try:
            vid_cap = cv2.VideoCapture(source_webcam)
            st_frame = st.empty()
            while (vid_cap.isOpened()):
                success, image = vid_cap.read()
                if success:
                    _display_detected_frames(conf,
                                             model,
                                             st_frame,
                                             image,
                                             is_display_tracker,
                                             tracker,
                                             )
                else:
                    vid_cap.release()
                    break
        except Exception as e:
            st.sidebar.error("Error loading video: " + str(e))


def play_stored_video(conf, model):
    """
    Plays a stored video file. Tracks and detects objects in real-time using the YOLOv8 object detection model.

    Parameters:
        conf: Confidence of YOLOv8 model.
        model: An instance of the `YOLOv8` class containing the YOLOv8 model.

    Returns:
        None

    Raises:
        None
    """
    source_vid = st.sidebar.selectbox(
        "Choose a video...", settings.VIDEOS_DICT.keys())

    is_display_tracker, tracker = display_tracker_options()

    with open(settings.VIDEOS_DICT.get(source_vid), 'rb') as video_file:
        video_bytes = video_file.read()
    if video_bytes:
        st.video(video_bytes)

    if st.sidebar.button('Detect Video Objects'):
        try:
            vid_cap = cv2.VideoCapture(
                str(settings.VIDEOS_DICT.get(source_vid)))
            st_frame = st.empty()
            while (vid_cap.isOpened()):
                success, image = vid_cap.read()
                if success:
                    _display_detected_frames(conf,
                                             model,
                                             st_frame,
                                             image,
                                             is_display_tracker,
                                             tracker
                                             )
                else:
                    vid_cap.release()
                    break
        except Exception as e:
            st.sidebar.error("Error loading video: " + str(e))
```
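The four `play_*` functions repeat the same read, display, release loop. A sketch of how that loop could be factored into one generator, assuming only the `isOpened()`, `read()` and `release()` methods that `cv2.VideoCapture` provides; `iter_frames` is a hypothetical helper, and `FakeCapture` exists only to demonstrate the behaviour without a camera or video file.

```python
from typing import Any, Iterator


def iter_frames(capture: Any) -> Iterator[Any]:
    """Yield frames until read() fails, then release the capture.

    Works with cv2.VideoCapture or any object exposing the same
    isOpened() / read() / release() methods. The finally block also
    releases the capture when the generator is closed early.
    """
    try:
        while capture.isOpened():
            success, frame = capture.read()
            if not success:
                break
            yield frame
    finally:
        capture.release()


class FakeCapture:
    """Stand-in for cv2.VideoCapture that returns a fixed list of frames."""

    def __init__(self, frames):
        self._frames = list(frames)
        self.released = False

    def isOpened(self):
        return not self.released

    def read(self):
        if self._frames:
            return True, self._frames.pop(0)
        return False, None

    def release(self):
        self.released = True


cap = FakeCapture(["frame-1", "frame-2"])
print(list(iter_frames(cap)), cap.released)  # ['frame-1', 'frame-2'] True
```

Inside each `play_*` function the loop would then shrink to `for image in iter_frames(vid_cap): _display_detected_frames(conf, model, st_frame, image, is_display_tracker, tracker)`, and the release happens in one place.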

images/GS.jpg

ADDED

images/deneme.jpeg

ADDED

images/deneme_detected.png

ADDED

images/office_4.jpg

ADDED

images/office_4_detected.jpg

ADDED

packages.txt

ADDED

`@@ -0,0 +1,3 @@`

```text
freeglut3-dev
libgtk2.0-dev
libgl1-mesa-glx
```

requirements.txt

ADDED

@@ -0,0 +1,87 @@

altair==5.1.1
attrs==23.1.0
backports.zoneinfo==0.2.1
blinker==1.6.2
cachetools==5.3.1
certifi==2023.7.22
charset-normalizer==3.2.0
click==8.1.7
cmake==3.27.4.1
contourpy==1.1.0
cycler==0.11.0
Cython==3.0.2
filelock==3.12.3
fonttools==4.42.1
gitdb==4.0.10
GitPython==3.1.35
idna==3.4
importlib-metadata==6.8.0
importlib-resources==6.0.1
Jinja2==3.1.2
jsonschema==4.19.0
jsonschema-specifications==2023.7.1
kiwisolver==1.4.5
lapx==0.5.4
lit==16.0.6
markdown-it-py==3.0.0
MarkupSafe==2.1.3
matplotlib==3.7.2
mdurl==0.1.2
mpmath==1.3.0
networkx==3.1
numpy==1.24.4
nvidia-cublas-cu11==11.10.3.66
nvidia-cuda-cupti-cu11==11.7.101
nvidia-cuda-nvrtc-cu11==11.7.99
nvidia-cuda-runtime-cu11==11.7.99
nvidia-cudnn-cu11==8.5.0.96
nvidia-cufft-cu11==10.9.0.58
nvidia-curand-cu11==10.2.10.91
nvidia-cusolver-cu11==11.4.0.1
nvidia-cusparse-cu11==11.7.4.91
nvidia-nccl-cu11==2.14.3
nvidia-nvtx-cu11==11.7.91
opencv-python-headless==4.8.0.76
packaging==23.1
pandas==2.0.3
Pillow==9.5.0
pkgutil-resolve-name==1.3.10
protobuf==4.24.3
psutil==5.9.5
py-cpuinfo==9.0.0
pyarrow==13.0.0
pydeck==0.8.0
Pygments==2.16.1
Pympler==1.0.1
pyparsing==3.0.9
python-dateutil==2.8.2
pytube==15.0.0
pytz==2023.3.post1
pytz-deprecation-shim==0.1.0.post0
PyYAML==6.0.1
referencing==0.30.2
requests==2.31.0
rich==13.5.2
rpds-py==0.10.2
scipy==1.10.1
seaborn==0.12.2
six==1.16.0
smmap==5.0.0
streamlit==1.26.0
sympy==1.12
tenacity==8.2.3
toml==0.10.2
toolz==0.12.0
torch==2.0.1
torchvision==0.15.2
tornado==6.3.3
tqdm==4.66.1
triton==2.0.0
typing-extensions==4.7.1
tzdata==2023.3
tzlocal==4.3.1
ultralytics==8.0.173
urllib3==2.0.4
validators==0.22.0
watchdog==3.0.0
zipp==3.16.2

settings.py

ADDED

@@ -0,0 +1,54 @@

from pathlib import Path
import sys

# Get the absolute path of the current file
file_path = Path(__file__).resolve()

# Get the parent directory of the current file
root_path = file_path.parent

# Add the root path to sys.path if it is not already there (sys.path holds strings)
if str(root_path) not in sys.path:
    sys.path.append(str(root_path))

# Get the relative path of the root directory with respect to the current working directory
ROOT = root_path.relative_to(Path.cwd())

# Sources
IMAGE = 'Resim'  # Turkish for "Image"
VIDEO = 'Video'
WEBCAM = 'Webcam'
RTSP = 'RTSP'
YOUTUBE = 'YouTube'

SOURCES_LIST = [IMAGE, VIDEO, WEBCAM, RTSP, YOUTUBE]

# Images config
IMAGES_DIR = ROOT / 'images'
DEFAULT_IMAGE = IMAGES_DIR / 'deneme.jpeg'
DEFAULT_DETECT_IMAGE = IMAGES_DIR / 'deneme_detected.png'

# Videos config
VIDEO_DIR = ROOT / 'videos'
VIDEO_1_PATH = VIDEO_DIR / 'video_1.mp4'
VIDEO_2_PATH = VIDEO_DIR / 'video_2.mp4'
VIDEO_3_PATH = VIDEO_DIR / 'video_3.mp4'
VIDEO_4_PATH = VIDEO_DIR / 'airplane1.mp4'
VIDEOS_DICT = {
    'video_1': VIDEO_1_PATH,
    'video_2': VIDEO_2_PATH,
    'video_3': VIDEO_3_PATH,
    'video_4': VIDEO_4_PATH,
}

# ML Model config
MODEL_DIR = ROOT / 'weights'
DETECTION_MODEL = MODEL_DIR / 'yolov9e.pt'
# If you use a custom model, comment out the line above and
# put your custom model's .pt file name in the line below
# DETECTION_MODEL = MODEL_DIR / 'my_detection_model.pt'

SEGMENTATION_MODEL = MODEL_DIR / 'yolov8n-seg.pt'

# Webcam
WEBCAM_PATH = 0
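settings.py references two files that are not among the files added in this commit: `VIDEO_4_PATH` points at `videos/airplane1.mp4` and `DETECTION_MODEL` at `weights/yolov9e.pt`, while the commit adds `videos/video_1.mp4` to `videos/video_3.mp4`, `weights/yolov8m.pt` and `weights/yolov8n-seg.pt`. A minimal sketch of a startup check that lists which configured paths do not exist; `missing_paths` is a hypothetical helper, and the demo builds a temporary directory rather than using the real repo layout.

```python
import tempfile
from pathlib import Path
from typing import Mapping


def missing_paths(paths: Mapping[str, Path]) -> list[str]:
    """Return, in insertion order, the keys whose path does not exist on disk."""
    return [name for name, path in paths.items() if not Path(path).exists()]


if __name__ == "__main__":
    # Self-contained demo: create one video file and leave the other missing.
    with tempfile.TemporaryDirectory() as tmp:
        videos = Path(tmp) / "videos"
        videos.mkdir()
        (videos / "video_1.mp4").write_bytes(b"")
        demo = {
            "video_1": videos / "video_1.mp4",
            "video_4": videos / "airplane1.mp4",
        }
        print(missing_paths(demo))  # prints ['video_4']
```

In the app, the same check could run over `settings.VIDEOS_DICT` plus `settings.DETECTION_MODEL` and `settings.SEGMENTATION_MODEL`, reporting any missing entries with `st.sidebar.warning` before a file is opened.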

videos/video_1.mp4

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:514107b98ebaf53069b2471234dd6eaa3d08ff6447433d06cbdea0cc6d822e13
size 14985417

videos/video_2.mp4

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:0270dec433a05236c6d89c7cb007db1780d0f3ece738fc45137496c9812892d9
size 15235393

videos/video_3.mp4

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:cf59af9ffa88275c0f31250e18d0cd27eec554856074482b283f0a5614bc6b6e
size 1065720

weights/yolov8m.pt

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:6c25b0b63b1a433843f06d821a9ac1deb8d5805f74f0f38772c7308c5adc55a5
size 52117635

weights/yolov8n-seg.pt

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:d39e867b2c3a5dbc1aa764411544b475cb14727bf6af1ec46c238f8bb1351ab9
size 7054355
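The video and weight files above are committed as Git LFS pointer files: short text files of `key value` lines giving the pointer spec `version`, the `oid` (hash algorithm and digest of the real content) and its `size` in bytes, while the binary itself lives in LFS storage. A minimal sketch of a parser for such a pointer, assuming the three-key layout shown here (any additional keys are kept as strings in `extra`); `LfsPointer` and `parse_lfs_pointer` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class LfsPointer:
    version: str   # URL of the pointer spec
    oid_algo: str  # hash algorithm, e.g. "sha256"
    oid: str       # hex digest of the real file contents
    size: int      # size of the real file in bytes
    extra: dict    # any other keys, kept as raw strings


def parse_lfs_pointer(text: str) -> LfsPointer:
    """Parse a Git LFS pointer file made of 'key value' lines."""
    fields = {}
    for line in text.strip().splitlines():
        key, sep, value = line.partition(" ")
        if not sep:
            raise ValueError(f"malformed pointer line: {line!r}")
        fields[key] = value
    try:
        version = fields.pop("version")
        algo, _, digest = fields.pop("oid").partition(":")
        size = int(fields.pop("size"))
    except KeyError as missing:
        raise ValueError(f"pointer is missing key {missing}") from None
    return LfsPointer(version, algo, digest, size, fields)


if __name__ == "__main__":
    pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:d39e867b2c3a5dbc1aa764411544b475cb14727bf6af1ec46c238f8bb1351ab9
size 7054355
"""
    p = parse_lfs_pointer(pointer)
    print(p.oid_algo, p.size)  # prints: sha256 7054355
```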