improve embeddings and app

- .gitignore +3 -0

- README.md +25 -0

- app.py +28 -14

- combine_actors_data.py +0 -61

- data/actors_embeddings.csv +2 -2

- data/imdb_actors.csv +2 -2

- images/example_frederick_douglass.jpg +0 -0

- images/example_leonardo_davinci.jpg +0 -0

- images/example_marie_curie.jpg +0 -0

- images/example_rb_ginsburg.jpg +0 -0

- images/example_scipio_africanus.jpg +0 -0

- images/example_sun_tzu.jpg +0 -0

- models/actors_annoy_index.ann +0 -0

- pipeline/__init__.py +0 -0

- analyze_actors_matching.ipynb → pipeline/actors_matching.ipynb +0 -0

- pipeline/combine_imdb_actors_data.ipynb +494 -0

- download_imdb_data.py → pipeline/download_imdb_data.py +0 -0

- get_images_data.py → pipeline/get_images_data.py +3 -1

- process_images.py → pipeline/process_images.py +6 -3

.gitignore

CHANGED

|

@@ -5,6 +5,9 @@

|

|

| 5 |

data/title.*.tsv*

|

| 6 |

data/name.*.tsv*

|

| 7 |

|

| 8 |

# Byte-compiled / optimized / DLL files

|

| 9 |

__pycache__/

|

| 10 |

*/__pycache__/

|

|

|

|

| 5 |

data/title.*.tsv*

|

| 6 |

data/name.*.tsv*

|

| 7 |

|

| 8 |

+

# Gradio local

|

| 9 |

+

flagged/

|

| 10 |

+

|

| 11 |

# Byte-compiled / optimized / DLL files

|

| 12 |

__pycache__/

|

| 13 |

*/__pycache__/

|

README.md

CHANGED

|

@@ -34,3 +34,28 @@ There are a few issues with the dataset and models used:

|

|

| 34 |

- Given the above, the database sampling will have several biases that are intrinsic to (a) the IMDb database and user base itself which is biased towards western/American movies, (b) the movie industry itself with a dominance of white male actors

|

| 35 |

- The pictures of actors and actresses were collected through a simple Bing Search and not manually verified, so there are several mistakes. For example, Graham Greene has a mix of pictures from Graham Greene, the Canadian actor, and Graham Greene, the writer. You may get surprising results from time to time! Let me know if you find mistakes

|

| 36 |

|

| 34 |

- Given the above, the database sampling will have several biases that are intrinsic to (a) the IMDb database and user base itself which is biased towards western/American movies, (b) the movie industry itself with a dominance of white male actors

|

| 35 |

- The pictures of actors and actresses were collected through a simple Bing Search and not manually verified, so there are several mistakes. For example, Graham Greene has a mix of pictures from Graham Greene, the Canadian actor, and Graham Greene, the writer. You may get surprising results from time to time! Let me know if you find mistakes

|

| 36 |

|

| 37 |

+

## Next steps

|

| 38 |

+

|

| 39 |

+

- Better image dataset (i.e., identify and clean up errors where multiple people were queried in the Bing Search)

|

| 40 |

+

- Larger and more balanced dataset (to reduce the bias toward white male actors)

|

| 41 |

+

- Provide a way of looping through multiple people in a picture in the Gradio app

|

| 42 |

+

- Currently, I find the best matching actor using the average embedding for the actor. I plan to then do a second pass to find the closest matching picture(s) of this specific actor for a better user experience.

|

| 43 |

+

- Deeper analysis of which embedding dimensions are necessary. Might want to reweight them.

|

| 44 |

+

|

| 45 |

+

## Credits

|

| 46 |

+

|

| 47 |

+

Author: Nicolas Beuchat (nicolas.beuchat@gmail.com)

|

| 48 |

+

|

| 49 |

+

Thanks to the following open-source projects:

|

| 50 |

+

|

| 51 |

+

- [dlib](https://github.com/davisking/dlib) by [Davis King](https://github.com/davisking) ([@nulhom](https://twitter.com/nulhom))

|

| 52 |

+

- [face_recognition](https://github.com/ageitgey/face_recognition) by [Adam Geitgey](https://github.com/ageitgey)

|

| 53 |

+

- [annoy](https://github.com/spotify/annoy) by Spotify

|

| 54 |

+

|

| 55 |

+

Example images used in the Gradio app (most under [Creative Commons Attribution license](https://en.wikipedia.org/wiki/en:Creative_Commons)):

|

| 56 |

+

|

| 57 |

+

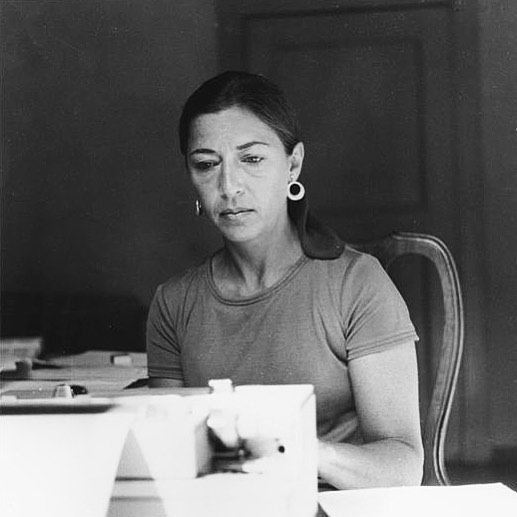

- [RB Ginsburg](https://www.flickr.com/photos/tradlands/25602059686) - CC

|

| 58 |

+

- [Frederick Douglass](https://commons.wikimedia.org/wiki/File:Frederick_Douglass_1856_sq.jpg) - CC

|

| 59 |

+

- [Leonardo da Vinci](https://commons.wikimedia.org/wiki/File:Leonardo_da_Vinci._Photograph_by_E._Desmaisons_after_a_print_Wellcome_V0027541EL.jpg) - CC

|

| 60 |

+

- [Hannibal Barca](https://en.wikipedia.org/wiki/Hannibal#/media/File:Mommsen_p265.jpg) - Public domain

|

| 61 |

+

- [Joan of Arc](https://de.wikipedia.org/wiki/Jeanne_d%E2%80%99Arc#/media/Datei:Joan_of_Arc_miniature_graded.jpg) - Public domain

|
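The "Next steps" entry about matching on the average embedding and then doing a second pass over that actor's individual pictures can be illustrated with a small sketch. This is not the app's implementation: it assumes embeddings are plain lists of floats, uses an exhaustive Euclidean search instead of the Annoy index the app loads, and the names `two_pass_match` and `pictures_by_actor` are hypothetical.

```python
import math


def mean_vector(vectors):
    """Component-wise average of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def closest(query, candidates):
    """Return the key whose vector is nearest to `query` (Euclidean distance)."""
    return min(candidates, key=lambda key: math.dist(query, candidates[key]))


def two_pass_match(query, pictures_by_actor):
    """Pass 1 picks the actor by average embedding, pass 2 picks their closest picture."""
    averages = {
        actor: mean_vector(list(pictures.values()))
        for actor, pictures in pictures_by_actor.items()
    }
    best_actor = closest(query, averages)
    best_picture = closest(query, pictures_by_actor[best_actor])
    return best_actor, best_picture


# Hypothetical 2-D embeddings; real face embeddings have many more dimensions.
pictures_by_actor = {
    "actor_a": {"a1.jpg": [0.0, 0.0], "a2.jpg": [2.0, 0.0]},
    "actor_b": {"b1.jpg": [10.0, 10.0], "b2.jpg": [12.0, 10.0]},
}
result = two_pass_match([1.8, 0.1], pictures_by_actor)
print(result)  # ('actor_a', 'a2.jpg')
```

The second pass only searches the pictures of the actor chosen in the first pass, so it stays cheap even if the first pass is served by an approximate index.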

app.py

CHANGED

|

@@ -1,6 +1,7 @@

|

|

| 1 |

import gradio as gr

|

| 2 |

-

import numpy as np

|

| 3 |

from actors_matching.api import analyze_image, load_annoy_index

|

| 4 |

|

| 5 |

annoy_index, actors_mapping = load_annoy_index()

|

| 6 |

|

|

@@ -18,29 +19,40 @@ def get_image_html(actor: dict):

|

|

| 18 |

</div>

|

| 19 |

'''

|

| 20 |

|

| 21 |

def get_best_matches(image, n_matches: int):

|

| 22 |

return analyze_image(image, annoy_index=annoy_index, n_matches=n_matches)

|

| 23 |

|

| 24 |

def find_matching_actors(input_img, title, n_matches: int = 10):

|

| 25 |

best_matches_list = get_best_matches(input_img, n_matches=n_matches)

|

| 26 |

-

best_matches = best_matches_list[0] # TODO: allow looping through characters

|

| 27 |

|

| 28 |

-

#

|

| 29 |

|

| 30 |

-

|

| 31 |

-

output_htmls = []

|

| 32 |

-

for match in best_matches["matches"]:

|

| 33 |

-

actor = actors_mapping[match]

|

| 34 |

-

output_htmls.append(get_image_html(actor))

|

| 35 |

|

| 36 |

-

|

|

|

|

| 37 |

|

| 38 |

iface = gr.Interface(

|

| 39 |

find_matching_actors,

|

| 40 |

title="Which actor or actress looks like you?",

|

| 41 |

description="""Who is the best person to play a movie about you? Upload a picture and find out!

|

| 42 |

Or maybe you'd like to know who would best interpret your favorite historical character?

|

| 43 |

-

Give it a shot or try one of the sample images below

|

| 44 |

inputs=[

|

| 45 |

gr.inputs.Image(shape=(256, 256), label="Your image"),

|

| 46 |

gr.inputs.Textbox(label="Who's that?", placeholder="Optional, you can leave this blank"),

|

|

@@ -48,11 +60,13 @@ iface = gr.Interface(

|

|

| 48 |

],

|

| 49 |

outputs=gr.outputs.Carousel(gr.outputs.HTML(), label="Matching actors & actresses"),

|

| 50 |

examples=[

|

| 51 |

-

["images/

|

| 52 |

["images/example_hannibal_barca.jpg", "Hannibal (the one with the elephants...)"],

|

| 53 |

-

["images/

|

| 54 |

-

["images/

|

| 55 |

]

|

| 56 |

)

|

| 57 |

|

| 58 |

-

iface.launch()

|

|

|

|

| 1 |

import gradio as gr

|

|

|

|

| 2 |

from actors_matching.api import analyze_image, load_annoy_index

|

| 3 |

+

from pathlib import Path

|

| 4 |

+

|

| 5 |

|

| 6 |

annoy_index, actors_mapping = load_annoy_index()

|

| 7 |

|

|

|

|

| 19 |

</div>

|

| 20 |

'''

|

| 21 |

|

| 22 |

+

def no_faces_found_html():

|

| 23 |

+

return """<div>No faces found in the picture</div>"""

|

| 24 |

+

|

| 25 |

def get_best_matches(image, n_matches: int):

|

| 26 |

return analyze_image(image, annoy_index=annoy_index, n_matches=n_matches)

|

| 27 |

|

| 28 |

def find_matching_actors(input_img, title, n_matches: int = 10):

|

| 29 |

best_matches_list = get_best_matches(input_img, n_matches=n_matches)

|

|

|

|

| 30 |

|

| 31 |

+

# TODO: allow looping through characters

|

| 32 |

+

if best_matches_list:

|

| 33 |

+

best_matches = best_matches_list[0]

|

| 34 |

+

|

| 35 |

+

# TODO: Show how the initial image was parsed (ie: which person is displayed)

|

| 36 |

+

|

| 37 |

+

# Build htmls to display the result

|

| 38 |

+

output_htmls = []

|

| 39 |

+

for match in best_matches["matches"]:

|

| 40 |

+

actor = actors_mapping[match]

|

| 41 |

+

output_htmls.append(get_image_html(actor))

|

| 42 |

|

| 43 |

+

return output_htmls

|

| 44 |

|

| 45 |

+

# No matches

|

| 46 |

+

return [no_faces_found_html()]

|

| 47 |

|

| 48 |

iface = gr.Interface(

|

| 49 |

find_matching_actors,

|

| 50 |

title="Which actor or actress looks like you?",

|

| 51 |

description="""Who is the best person to play a movie about you? Upload a picture and find out!

|

| 52 |

Or maybe you'd like to know who would best interpret your favorite historical character?

|

| 53 |

+

Give it a shot or try one of the sample images below.\nPlease read below for more information on biases

|

| 54 |

+

and limitations of the tool!""",

|

| 55 |

+

article=Path("README.md").read_text(),

|

| 56 |

inputs=[

|

| 57 |

gr.inputs.Image(shape=(256, 256), label="Your image"),

|

| 58 |

gr.inputs.Textbox(label="Who's that?", placeholder="Optional, you can leave this blank"),

|

|

|

|

| 60 |

],

|

| 61 |

outputs=gr.outputs.Carousel(gr.outputs.HTML(), label="Matching actors & actresses"),

|

| 62 |

examples=[

|

| 63 |

+

["images/example_rb_ginsburg.jpg", "RB Ginsburg in 1977"],

|

| 64 |

["images/example_hannibal_barca.jpg", "Hannibal (the one with the elephants...)"],

|

| 65 |

+

["images/example_frederick_douglass.jpg", "Frederick Douglass"],

|

| 66 |

+

["images/example_leonardo_davinci.jpg", "Leonardo da Vinci"],

|

| 67 |

+

["images/example_joan_of_arc.jpg", "Jeanne d'Arc"],

|

| 68 |

+

["images/example_sun_tzu.jpg", "Sun Tzu"],

|

| 69 |

]

|

| 70 |

)

|

| 71 |

|

| 72 |

+

iface.launch()

|
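The indentation of the added lines is lost in this rendering, so the control flow of the new `find_matching_actors` is easy to misread. The sketch below restates the apparent intent: use the first detected face if there is one, otherwise return the "no faces" message. It is an approximation under assumptions, not the committed code: `analyze_image` is taken to return a list with one dict per detected face carrying a `"matches"` key (as the diff suggests), the `if` block is rewritten as an early return, and `build_match_htmls`, `render`, and the sample mapping are hypothetical stand-ins.

```python
def no_faces_found_html() -> str:
    return "<div>No faces found in the picture</div>"


def build_match_htmls(best_matches_list, actors_mapping, render):
    """Return one HTML snippet per match for the first detected face."""
    if not best_matches_list:
        # Empty list: nothing was detected, so show a single message instead.
        return [no_faces_found_html()]

    best_matches = best_matches_list[0]  # only the first face is used for now
    return [render(actors_mapping[match]) for match in best_matches["matches"]]


# Hypothetical data standing in for the Annoy results and the actor table.
actors_mapping = {0: {"name": "Actor Zero"}, 1: {"name": "Actor One"}}
render = lambda actor: f"<div>{actor['name']}</div>"

with_face = build_match_htmls([{"matches": [1, 0]}], actors_mapping, render)
without_face = build_match_htmls([], actors_mapping, render)
print(with_face)
print(without_face)
```

Returning a one-element list in the empty case keeps the return type the same in both branches, which matters because the output component is a carousel of HTML items.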

combine_actors_data.py

DELETED

|

@@ -1,61 +0,0 @@

|

|

| 1 |

-

import pandas as pd

|

| 2 |

-

from datetime import datetime

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

def process_actors_data(keep_alive: bool = True):

|

| 6 |

-

current_year = datetime.now().year

|

| 7 |

-

|

| 8 |

-

# Read actors data

|

| 9 |

-

df = pd.read_csv("data/name.basics.tsv", sep="\t")

|

| 10 |

-

df["birthYear"] = pd.to_numeric(df["birthYear"], errors="coerce")

|

| 11 |

-

df["deathYear"] = pd.to_numeric(df["deathYear"], errors="coerce")

|

| 12 |

-

|

| 13 |

-

# Prepare and cleanup actors data

|

| 14 |

-

if keep_alive:

|

| 15 |

-

df = df[df["deathYear"].isna()]

|

| 16 |

-

df = df[df.knownForTitles.apply(lambda x: len(x)) > 0]

|

| 17 |

-

df = df.dropna(subset=["primaryProfession"])

|

| 18 |

-

df = df[df.primaryProfession.apply(lambda x: any([p in {"actor", "actress"} for p in x.split(",")]))]

|

| 19 |

-

df = df[df.knownForTitles != "\\N"]

|

| 20 |

-

df = df.dropna(subset=["birthYear"])

|

| 21 |

-

#df["knownForTitles"] = df["knownForTitles"].apply(lambda x: x.split(","))

|

| 22 |

-

|

| 23 |

-

#dfat = df[["nconst", "knownForTitles"]].explode("knownForTitles")

|

| 24 |

-

#dfat.columns = ["nconst", "tconst"]

|

| 25 |

-

dfat = pd.read_csv("data/title.principals.tsv.gz", sep="\t")

|

| 26 |

-

dfat = dfat[dfat.category.isin(["actor", "actress", "self"])][["tconst", "nconst"]]

|

| 27 |

-

|

| 28 |

-

|

| 29 |

-

# Get data for the movies/shows the actors were known for

|

| 30 |

-

dftr = pd.read_csv("data/title.ratings.tsv", sep="\t")

|

| 31 |

-

dftb = pd.read_csv("data/title.basics.tsv", sep="\t")

|

| 32 |

-

dftb["startYear"] = pd.to_numeric(dftb["startYear"], errors="coerce")

|

| 33 |

-

dftb["endYear"] = pd.to_numeric(dftb["endYear"], errors="coerce")

|

| 34 |

-

|

| 35 |

-

# Estimate last year the show/movie was released (TV shows span several years and might still be active)

|

| 36 |

-

dftb.loc[(dftb.titleType.isin(["tvSeries", "tvMiniSeries"]) & (dftb.endYear.isna())), "lastYear"] = current_year

|

| 37 |

-

dftb["lastYear"] = dftb["lastYear"].fillna(dftb["startYear"])

|

| 38 |

-

dftb = dftb.dropna(subset=["lastYear"])

|

| 39 |

-

dftb = dftb[dftb.isAdult == 0]

|

| 40 |

-

|

| 41 |

-

# Aggregate stats for all movies the actor was known for

|

| 42 |

-

dft = pd.merge(dftb, dftr, how="inner", on="tconst")

|

| 43 |

-

del dftb, dftr

|

| 44 |

-

dfat = pd.merge(dfat, dft, how="inner", on="tconst")

|

| 45 |

-

del dft

|

| 46 |

-

dfat["totalRating"] = dfat.averageRating*dfat.numVotes

|

| 47 |

-

dfat = dfat.groupby("nconst").agg({"averageRating": "mean", "totalRating": "sum", "numVotes": "sum", "tconst": "count", "startYear": "min", "lastYear": "max"})

|

| 48 |

-

|

| 49 |

-

# Merge everything with actor data and cleanup

|

| 50 |

-

df = df.drop(["deathYear", "knownForTitles", "primaryProfession"], axis=1)

|

| 51 |

-

df = pd.merge(df, dfat, how="inner", on="nconst").sort_values("totalRating", ascending=False)

|

| 52 |

-

df = df.dropna(subset=["birthYear", "startYear", "lastYear"])

|

| 53 |

-

df[["birthYear", "startYear", "lastYear"]] = df[["birthYear", "startYear", "lastYear"]].astype(int)

|

| 54 |

-

df = df.round(2)

|

| 55 |

-

|

| 56 |

-

return df

|

| 57 |

-

|

| 58 |

-

|

| 59 |

-

if __name__ == "__main__":

|

| 60 |

-

df = process_actors_data()

|

| 61 |

-

df.to_csv("data/imdb_actors.csv", index=False)

|
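The two least obvious steps of the removed script are the `lastYear` estimate and the per-actor aggregation. The sketch below restates both in plain Python on made-up rows, as an illustration rather than a replacement for the pandas code: `None` stands in for missing values, `numTitles` is my name for the `tconst` count, and the sample rows are hypothetical. Note that, as the script is written, a series with a known `endYear` falls back to `startYear`, and the sketch mirrors that.

```python
from collections import defaultdict

SERIES_TYPES = {"tvSeries", "tvMiniSeries"}


def estimate_last_year(title_type, start_year, end_year, current_year):
    """Last active year of a title, following the removed script's rule."""
    if title_type in SERIES_TYPES and end_year is None:
        return current_year  # open-ended series: assumed to be still running
    return start_year  # everything else falls back to startYear (may be None)


def aggregate_by_actor(rows):
    """Collapse (actor, title) rows into one stats dict per actor id."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["nconst"]].append(row)

    stats = {}
    for nconst, titles in grouped.items():
        stats[nconst] = {
            "averageRating": sum(t["averageRating"] for t in titles) / len(titles),
            # totalRating weights each title's rating by its number of votes
            "totalRating": sum(t["averageRating"] * t["numVotes"] for t in titles),
            "numVotes": sum(t["numVotes"] for t in titles),
            "numTitles": len(titles),
            "startYear": min(t["startYear"] for t in titles),
            "lastYear": max(t["lastYear"] for t in titles),
        }
    return stats


# Hypothetical rows, not taken from the IMDb files.
rows = [
    {"nconst": "nm1", "averageRating": 8.0, "numVotes": 100,
     "startYear": 2000, "lastYear": estimate_last_year("movie", 2000, None, 2024)},
    {"nconst": "nm1", "averageRating": 6.0, "numVotes": 50,
     "startYear": 2010, "lastYear": estimate_last_year("tvSeries", 2010, None, 2024)},
]
stats = aggregate_by_actor(rows)
print(stats["nm1"])
```

The script then sorts actors by `totalRating` in descending order, so actors with many highly voted titles come first.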

data/actors_embeddings.csv

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

-

size

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:052a7779d98df4ccd54a403b6b2ca1d0da18ea3329b0b74ea2420938462fb9a2

|

| 3 |

+

size 90070629

|

data/imdb_actors.csv

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

-

oid sha256:

|

| 3 |

-

size

|

|

|

|

| 1 |

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:8a538576c57cf3f2a9041f4e1a224de259ae8e77c65e08add3956735414e89e5

|

| 3 |

+

size 10255395

|

images/example_frederick_douglass.jpg

ADDED

|

images/example_leonardo_davinci.jpg

ADDED

|

images/example_marie_curie.jpg

DELETED

|

Binary file (321 kB)

|

|

|

images/example_rb_ginsburg.jpg

ADDED

|

images/example_scipio_africanus.jpg

DELETED

|

Binary file (103 kB)

|

|

|

images/example_sun_tzu.jpg

ADDED

|

models/actors_annoy_index.ann

CHANGED

|

Binary files a/models/actors_annoy_index.ann and b/models/actors_annoy_index.ann differ

|

|

|

pipeline/__init__.py

ADDED

|

File without changes

|

analyze_actors_matching.ipynb → pipeline/actors_matching.ipynb

RENAMED

|

The diff for this file is too large to render.

See raw diff

|

|

|

pipeline/combine_imdb_actors_data.ipynb

ADDED

|

@@ -0,0 +1,494 @@

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"metadata": {},

|

| 6 |

+

"source": []

|

| 7 |

+

},

|

| 8 |

+

{

|

| 9 |

+

"cell_type": "code",

|

| 10 |

+

"execution_count": 1,

|

| 11 |

+

"metadata": {},

|

| 12 |

+

"outputs": [],

|

| 13 |

+

"source": [

|

| 14 |

+

"import pandas as pd\n",

|

| 15 |

+

"from datetime import datetime\n",

|

| 16 |

+

"\n",

|

| 17 |

+

"current_year = datetime.now().year\n",

|

| 18 |

+

"keep_alive = True"

|

| 19 |

+

]

|

| 20 |

+

},

|

| 21 |

+

{

|

| 22 |

+

"cell_type": "code",

|

| 23 |

+

"execution_count": null,

|

| 24 |

+

"metadata": {},

|

| 25 |

+

"outputs": [],

|

| 26 |

+

"source": [

|

| 27 |

+

"# Read actors data\n",

|

| 28 |

+

"df = pd.read_csv(\"data/name.basics.tsv\", sep=\"\\t\")\n",

|

| 29 |

+

"df[\"birthYear\"] = pd.to_numeric(df[\"birthYear\"], errors=\"coerce\")\n",

|

| 30 |

+

"df[\"deathYear\"] = pd.to_numeric(df[\"deathYear\"], errors=\"coerce\")\n",

|

| 31 |

+

"\n",

|

| 32 |

+

"# Prepare and cleanup actors data\n",

|

| 33 |

+

"if keep_alive:\n",

|

| 34 |

+

" df = df[df[\"deathYear\"].isna()]\n",

|

| 35 |

+

"\n",

|

| 36 |

+

"# Drop rows with incomplete data\n",

|

| 37 |

+

"df = df.dropna(subset=[\"primaryProfession\", \"birthYear\"])\n",

|

| 38 |

+

"df = df[df.knownForTitles != \"\\\\N\"]\n",

|

| 39 |

+

"\n",

|

| 40 |

+

"# Get if a person is an actor or actress\n",

|

| 41 |

+

"df[\"is_actor\"] = df.primaryProfession.apply(lambda x: \"actor\" in x.split(\",\"))\n",

|

| 42 |

+

"df[\"is_actress\"] = df.primaryProfession.apply(lambda x: \"actress\" in x.split(\",\"))"

|

| 43 |

+

]

|

| 44 |

+

},

|

| 45 |

+

{

|

| 46 |

+

"cell_type": "markdown",

|

| 47 |

+

"metadata": {},

|

| 48 |

+

"source": [

|

| 49 |

+

"A note on genders: I do not have data as to which gender an actor or actress identifies as. It does not matter for this exercise in any case as we plan to look at facial features irrespective of gender. I use the actor/actress information for two reasons:\n",

|

| 50 |

+

"\n",

|

| 51 |

+

"1. I only want to keep people who acted in a movie/show, not the rest of the production crew (which may or may not be a good idea in the first place)\n",

|

| 52 |

+

"2. When doing the Bing Search, I realized that for some people who have homonyms in other professions (such as Graham Greene), I need to add the word \"actor\" or \"actress\" to the search to get more reliable pictures. I initially only added *actor/actress* in the query, which returned strange results in some cases"

|

| 53 |

+

]

|

| 54 |

+

},

|

| 55 |

+

{

|

| 56 |

+

"cell_type": "code",

|

| 57 |

+

"execution_count": 17,

|

| 58 |

+

"metadata": {},

|

| 59 |

+

"outputs": [

|

| 60 |

+

{

|

| 61 |

+

"data": {

|

| 62 |

+

"text/html": [

|

| 63 |

+

"<div>\n",

|

| 64 |

+

"<style scoped>\n",

|

| 65 |

+

" .dataframe tbody tr th:only-of-type {\n",

|

| 66 |

+

" vertical-align: middle;\n",

|

| 67 |

+

" }\n",

|

| 68 |

+

"\n",

|

| 69 |

+

" .dataframe tbody tr th {\n",

|

| 70 |

+

" vertical-align: top;\n",

|

| 71 |

+

" }\n",

|

| 72 |

+

"\n",

|

| 73 |

+

" .dataframe thead th {\n",

|

| 74 |

+

" text-align: right;\n",

|

| 75 |

+

" }\n",

|

| 76 |

+

"</style>\n",

|

| 77 |

+

"<table border=\"1\" class=\"dataframe\">\n",

|

| 78 |

+

" <thead>\n",

|

| 79 |

+

" <tr style=\"text-align: right;\">\n",

|

| 80 |

+

" <th></th>\n",

|

| 81 |

+

" <th></th>\n",

|

| 82 |

+

" <th>nconst</th>\n",

|

| 83 |

+

" </tr>\n",

|

| 84 |

+

" <tr>\n",

|

| 85 |

+

" <th>is_actor</th>\n",

|

| 86 |

+

" <th>is_actress</th>\n",

|

| 87 |

+

" <th></th>\n",

|

| 88 |

+

" </tr>\n",

|

| 89 |

+

" </thead>\n",

|

| 90 |

+

" <tbody>\n",

|

| 91 |

+

" <tr>\n",

|

| 92 |

+

" <th>False</th>\n",

|

| 93 |

+

" <th>True</th>\n",

|

| 94 |

+

" <td>1554197</td>\n",

|

| 95 |

+

" </tr>\n",

|

| 96 |

+

" <tr>\n",

|

| 97 |

+

" <th rowspan=\"2\" valign=\"top\">True</th>\n",

|

| 98 |

+

" <th>False</th>\n",

|

| 99 |

+

" <td>2537757</td>\n",

|

| 100 |

+

" </tr>\n",

|

| 101 |

+

" <tr>\n",

|

| 102 |

+

" <th>True</th>\n",

|

| 103 |

+

" <td>222</td>\n",

|

| 104 |

+

" </tr>\n",

|

| 105 |

+

" </tbody>\n",

|

| 106 |

+

"</table>\n",

|

| 107 |

+

"</div>"

|

| 108 |

+

],

|

| 109 |

+

"text/plain": [

|

| 110 |

+

" nconst\n",

|

| 111 |

+

"is_actor is_actress \n",

|

| 112 |

+

"False True 1554197\n",

|

| 113 |

+

"True False 2537757\n",

|

| 114 |

+

" True 222"

|

| 115 |

+

]

|

| 116 |

+

},

|

| 117 |

+

"execution_count": 17,

|

| 118 |

+

"metadata": {},

|

| 119 |

+

"output_type": "execute_result"

|

| 120 |

+

}

|

| 121 |

+

],

|

| 122 |

+

"source": [

|

| 123 |

+

"df.groupby([\"is_actor\", \"is_actress\"]).count()[[\"nconst\"]]"

|

| 124 |

+

]

|

| 125 |

+

},

|

| 126 |

+

{

|

| 127 |

+

"cell_type": "code",

|

| 128 |

+

"execution_count": 9,

|

| 129 |

+

"metadata": {},

|

| 130 |

+

"outputs": [

|

| 131 |

+

{

|

| 132 |

+

"data": {

|

| 133 |

+

"text/html": [

|

| 134 |

+

"<div>\n",

|

| 135 |

+

"<style scoped>\n",

|

| 136 |

+

" .dataframe tbody tr th:only-of-type {\n",

|

| 137 |

+

" vertical-align: middle;\n",

|

| 138 |

+

" }\n",

|

| 139 |

+

"\n",

|

| 140 |

+

" .dataframe tbody tr th {\n",

|

| 141 |

+

" vertical-align: top;\n",

|

| 142 |

+

" }\n",

|

| 143 |

+

"\n",

|

| 144 |

+

" .dataframe thead th {\n",

|

| 145 |

+

" text-align: right;\n",

|

| 146 |

+

" }\n",

|

| 147 |

+

"</style>\n",

|

| 148 |

+

"<table border=\"1\" class=\"dataframe\">\n",

|

| 149 |

+

" <thead>\n",

|

| 150 |

+

" <tr style=\"text-align: right;\">\n",

|

| 151 |

+

" <th></th>\n",

|

| 152 |

+

" <th>nconst</th>\n",

|

| 153 |

+

" <th>primaryName</th>\n",

|

| 154 |

+

" <th>birthYear</th>\n",

|

| 155 |

+

" <th>deathYear</th>\n",

|

| 156 |

+

" <th>primaryProfession</th>\n",

|

| 157 |

+

" <th>knownForTitles</th>\n",

|

| 158 |

+

" <th>is_actor</th>\n",

|

| 159 |

+

" <th>is_actress</th>\n",

|

| 160 |

+

" </tr>\n",

|

| 161 |

+

" </thead>\n",

|

| 162 |

+

" <tbody>\n",

|

| 163 |

+

" <tr>\n",

|

| 164 |

+

" <th>98892</th>\n",

|

| 165 |

+

" <td>nm0103696</td>\n",

|

| 166 |

+

" <td>Moya Brady</td>\n",

|

| 167 |

+

" <td>1962.0</td>\n",

|

| 168 |

+

" <td>NaN</td>\n",

|

| 169 |

+

" <td>actor,actress,soundtrack</td>\n",

|

| 170 |

+

" <td>tt0457513,tt1054606,tt0110647,tt0414387</td>\n",

|

| 171 |

+

" <td>True</td>\n",

|

| 172 |

+

" <td>True</td>\n",

|

| 173 |

+

" </tr>\n",

|

| 174 |

+

" <tr>\n",

|

| 175 |

+

" <th>116253</th>\n",

|

| 176 |

+

" <td>nm0122062</td>\n",

|

| 177 |

+

" <td>Debbie David</td>\n",

|

| 178 |

+

" <td>NaN</td>\n",

|

| 179 |

+

" <td>NaN</td>\n",

|

| 180 |

+

" <td>actor,actress,special_effects</td>\n",

|

| 181 |

+

" <td>tt0092455,tt0104743,tt0112178,tt0096875</td>\n",

|

| 182 |

+

" <td>True</td>\n",

|

| 183 |

+

" <td>True</td>\n",

|

| 184 |

+

" </tr>\n",

|

| 185 |

+

" <tr>\n",

|

| 186 |

+

" <th>301992</th>\n",

|

| 187 |

+

" <td>nm0318693</td>\n",

|

| 188 |

+

" <td>Kannu Gill</td>\n",

|

| 189 |

+

" <td>NaN</td>\n",

|

| 190 |

+

" <td>NaN</td>\n",

|

| 191 |

+

" <td>actress,actor</td>\n",

|

| 192 |

+

" <td>tt0119721,tt0130197,tt0150992,tt0292490</td>\n",

|

| 193 |

+

" <td>True</td>\n",

|

| 194 |

+

" <td>True</td>\n",

|

| 195 |

+

" </tr>\n",

|

| 196 |

+

" <tr>\n",

|

| 197 |

+

" <th>830244</th>\n",

|

| 198 |

+

" <td>nm0881417</td>\n",

|

| 199 |

+

" <td>Mansi Upadhyay</td>\n",

|

| 200 |

+

" <td>NaN</td>\n",

|

| 201 |

+

" <td>NaN</td>\n",

|

| 202 |

+

" <td>actress,actor</td>\n",

|

| 203 |

+

" <td>tt3815878,tt0374887,tt14412608,tt10719514</td>\n",

|

| 204 |

+

" <td>True</td>\n",

|

| 205 |

+

" <td>True</td>\n",

|

| 206 |

+

" </tr>\n",

|

| 207 |

+

" <tr>\n",

|

| 208 |

+

" <th>954524</th>\n",

|

| 209 |

+

" <td>nm10034909</td>\n",

|

| 210 |

+

" <td>Cheryl Kann</td>\n",

|

| 211 |

+

" <td>NaN</td>\n",

|

| 212 |

+

" <td>NaN</td>\n",

|

| 213 |

+

" <td>actor,actress</td>\n",

|

| 214 |

+

" <td>tt8813608</td>\n",

|

| 215 |

+

" <td>True</td>\n",

|

| 216 |

+

" <td>True</td>\n",

|

| 217 |

+

" </tr>\n",

|

| 218 |

+

" <tr>\n",

|

| 219 |

+

" <th>968196</th>\n",

|

| 220 |

+

" <td>nm1004934</td>\n",

|

| 221 |

+

" <td>Niloufar Safaie</td>\n",

|

| 222 |

+

" <td>NaN</td>\n",

|

| 223 |

+

" <td>NaN</td>\n",

|

| 224 |

+

" <td>actor,actress</td>\n",

|

| 225 |

+

" <td>tt0247638,tt1523296</td>\n",

|

| 226 |

+

" <td>True</td>\n",

|

| 227 |

+

" <td>True</td>\n",

|

| 228 |

+

" </tr>\n",

|

| 229 |

+

" <tr>\n",

|

| 230 |

+

" <th>975084</th>\n",

|

| 231 |

+

" <td>nm10056470</td>\n",

|

| 232 |

+

" <td>Lydia Barton</td>\n",

|

| 233 |

+

" <td>NaN</td>\n",

|

| 234 |

+

" <td>NaN</td>\n",

|

| 235 |

+

" <td>actor,actress</td>\n",

|

| 236 |

+

" <td>\\N</td>\n",

|

| 237 |

+

" <td>True</td>\n",

|

| 238 |

+

" <td>True</td>\n",

|

| 239 |

+

" </tr>\n",

|

| 240 |

+

" <tr>\n",

|

| 241 |

+

" <th>1235242</th>\n",

|

| 242 |

+

" <td>nm10334756</td>\n",

|

| 243 |

+

" <td>Chesca Foe-a-man</td>\n",

|

| 244 |

+

" <td>NaN</td>\n",

|

| 245 |

+

" <td>NaN</td>\n",

|

| 246 |

+

" <td>miscellaneous,actor,actress</td>\n",

|

| 247 |

+

" <td>tt9050468,tt5232792</td>\n",

|

| 248 |

+

" <td>True</td>\n",

|

| 249 |

+

" <td>True</td>\n",

|

| 250 |

+

" </tr>\n",

|

| 251 |

+

" <tr>\n",

|

| 252 |

+

" <th>1353828</th>\n",

|

| 253 |

+

" <td>nm10460818</td>\n",

|

| 254 |

+

" <td>Bhumika Barot</td>\n",

|

| 255 |

+

" <td>NaN</td>\n",

|

| 256 |

+

" <td>NaN</td>\n",

|

| 257 |

+

" <td>actress,actor</td>\n",

|

| 258 |

+

" <td>tt15102968,tt11569584,tt9747194,tt10795628</td>\n",

|

| 259 |

+

" <td>True</td>\n",

|

| 260 |

+

" <td>True</td>\n",

|

| 261 |

+

" </tr>\n",

|

| 262 |

+

" <tr>\n",

|

| 263 |

+

" <th>1461875</th>\n",

|

| 264 |

+

" <td>nm10576223</td>\n",

|

| 265 |

+

" <td>Allison Orr</td>\n",

|

| 266 |

+

" <td>NaN</td>\n",

|

| 267 |

+

" <td>NaN</td>\n",

|

| 268 |

+

" <td>actor,actress</td>\n",

|

| 269 |

+

" <td>\\N</td>\n",

|

| 270 |

+

" <td>True</td>\n",

|

| 271 |

+

" <td>True</td>\n",

|

| 272 |

+

" </tr>\n",

|

| 273 |

+

" </tbody>\n",

|

| 274 |

+

"</table>\n",

|

| 275 |

+

"</div>"

|

| 276 |

+

],

|

| 277 |

+

"text/plain": [

|

| 278 |

+

" nconst primaryName birthYear deathYear \\\n",

|

| 279 |

+

"98892 nm0103696 Moya Brady 1962.0 NaN \n",

|

| 280 |

+

"116253 nm0122062 Debbie David NaN NaN \n",

|

| 281 |

+

"301992 nm0318693 Kannu Gill NaN NaN \n",

|

| 282 |

+

"830244 nm0881417 Mansi Upadhyay NaN NaN \n",

|

| 283 |

+

"954524 nm10034909 Cheryl Kann NaN NaN \n",

|

| 284 |

+

"968196 nm1004934 Niloufar Safaie NaN NaN \n",

|

| 285 |

+

"975084 nm10056470 Lydia Barton NaN NaN \n",

|

| 286 |

+

"1235242 nm10334756 Chesca Foe-a-man NaN NaN \n",

|

| 287 |

+

"1353828 nm10460818 Bhumika Barot NaN NaN \n",

|

| 288 |

+

"1461875 nm10576223 Allison Orr NaN NaN \n",

|

| 289 |

+

"\n",

|

| 290 |

+

" primaryProfession \\\n",

|

| 291 |

+

"98892 actor,actress,soundtrack \n",

|

| 292 |

+

"116253 actor,actress,special_effects \n",

|

| 293 |

+

"301992 actress,actor \n",

|

| 294 |

+

"830244 actress,actor \n",

|

| 295 |

+

"954524 actor,actress \n",

|

| 296 |

+

"968196 actor,actress \n",

|

| 297 |

+

"975084 actor,actress \n",

|

| 298 |

+

"1235242 miscellaneous,actor,actress \n",

|

| 299 |

+

"1353828 actress,actor \n",

|

| 300 |

+

"1461875 actor,actress \n",

|

| 301 |

+

"\n",

|

| 302 |

+

" knownForTitles is_actor is_actress \n",

|

| 303 |

+

"98892 tt0457513,tt1054606,tt0110647,tt0414387 True True \n",

|

| 304 |

+

"116253 tt0092455,tt0104743,tt0112178,tt0096875 True True \n",

|

| 305 |

+

"301992 tt0119721,tt0130197,tt0150992,tt0292490 True True \n",

|

| 306 |

+

"830244 tt3815878,tt0374887,tt14412608,tt10719514 True True \n",

|

| 307 |

+

"954524 tt8813608 True True \n",

|

| 308 |

+

"968196 tt0247638,tt1523296 True True \n",

|

| 309 |

+

"975084 \\N True True \n",

|

| 310 |

+

"1235242 tt9050468,tt5232792 True True \n",

|

| 311 |

+

"1353828 tt15102968,tt11569584,tt9747194,tt10795628 True True \n",

|

| 312 |

+

"1461875 \\N True True "

|

| 313 |

+

]

|

| 314 |

+

},

|

| 315 |

+

"execution_count": 9,

|

| 316 |

+

"metadata": {},

|

| 317 |

+

"output_type": "execute_result"

|

| 318 |

+

}

|

| 319 |

+

],

|

| 320 |

+

"source": [

|

| 321 |

+

"df[df.is_actor & df.is_actress].head(10)"

|

| 322 |

+

]

|

| 323 |

+

},

|

| 324 |

+

{

|

| 325 |

+

"cell_type": "markdown",

|

| 326 |

+

"metadata": {},

|

| 327 |

+

"source": [

|

| 328 |

+

"A few people are marked as both actor and actress in the IMDb data. A manual look at these cases suggests this is an error in the database and that they are actually actresses."

|

| 329 |

+

]

|

| 330 |

+

},

|

| 331 |

+

{

|

| 332 |

+

"cell_type": "code",

|

| 333 |

+

"execution_count": 12,

|

| 334 |

+

"metadata": {},

|

| 335 |

+

"outputs": [],

|

| 336 |

+

"source": [

|

| 337 |

+

"# Keep only actors and actresses in the dataset\n",

|

| 338 |

+

"# Assume that anyone marked as both actor and actress is an actress (the later assignment wins)\n",

|

| 339 |

+

"df = df[df.is_actor | df.is_actress]\n",

|

| 340 |

+

"\n",

|

| 341 |

+

"df[\"role\"] = \"other\"\n",

|

| 342 |

+

"df.loc[df.is_actor, \"role\"] = \"actor\"\n",

|

| 343 |

+

"df.loc[df.is_actress, \"role\"] = \"actress\" "

|

| 344 |

+

]

|

| 345 |

+

},

|

| 346 |

+

{

|

| 347 |

+

"cell_type": "code",

|

| 348 |

+

"execution_count": 18,

|

| 349 |

+

"metadata": {},

|

| 350 |

+

"outputs": [

|

| 351 |

+

{

|

| 352 |

+

"data": {

|

| 353 |

+

"text/html": [

|

| 354 |

+

"<div>\n",

|

| 355 |

+

"<style scoped>\n",

|

| 356 |

+

" .dataframe tbody tr th:only-of-type {\n",

|

| 357 |

+

" vertical-align: middle;\n",

|

| 358 |

+

" }\n",

|

| 359 |

+

"\n",

|

| 360 |

+

" .dataframe tbody tr th {\n",

|

| 361 |

+

" vertical-align: top;\n",

|

| 362 |

+

" }\n",

|

| 363 |

+

"\n",

|

| 364 |

+

" .dataframe thead th {\n",

|

| 365 |

+

" text-align: right;\n",

|

| 366 |

+

" }\n",

|

| 367 |

+

"</style>\n",

|

| 368 |

+

"<table border=\"1\" class=\"dataframe\">\n",

|

| 369 |

+

" <thead>\n",

|

| 370 |

+

" <tr style=\"text-align: right;\">\n",

|

| 371 |

+

" <th></th>\n",

|

| 372 |

+

" <th>nconst</th>\n",

|

| 373 |

+

" </tr>\n",

|

| 374 |

+

" <tr>\n",

|

| 375 |

+

" <th>role</th>\n",

|

| 376 |

+

" <th></th>\n",

|

| 377 |

+

" </tr>\n",

|

| 378 |

+

" </thead>\n",

|

| 379 |

+

" <tbody>\n",

|

| 380 |

+

" <tr>\n",

|

| 381 |

+

" <th>actor</th>\n",

|

| 382 |

+

" <td>2537757</td>\n",

|

| 383 |

+

" </tr>\n",

|

| 384 |

+

" <tr>\n",

|

| 385 |

+

" <th>actress</th>\n",

|

| 386 |

+

" <td>1554419</td>\n",

|

| 387 |

+

" </tr>\n",

|

| 388 |

+

" </tbody>\n",

|

| 389 |

+

"</table>\n",

|

| 390 |

+

"</div>"

|

| 391 |

+

],

|

| 392 |

+

"text/plain": [

|

| 393 |

+

" nconst\n",

|

| 394 |

+

"role \n",

|

| 395 |

+

"actor 2537757\n",

|

| 396 |

+

"actress 1554419"

|

| 397 |

+

]

|

| 398 |

+

},

|

| 399 |

+

"execution_count": 18,

|

| 400 |

+

"metadata": {},

|

| 401 |

+

"output_type": "execute_result"

|

| 402 |

+

}

|

| 403 |

+

],

|

| 404 |

+

"source": [

|

| 405 |

+

"df.groupby(\"role\")[[\"nconst\"]].count()"

|

| 406 |

+

]

|

| 407 |

+

},

|

| 408 |

+

{

|

| 409 |

+

"cell_type": "code",

|

| 410 |

+

"execution_count": null,

|

| 411 |

+

"metadata": {},

|

| 412 |

+

"outputs": [],

|

| 413 |

+

"source": [

|

| 414 |

+

"# Get full list of movies/shows by actor\n",

|

| 415 |

+

"dfat = pd.read_csv(\"data/title.principals.tsv.gz\", sep=\"\\t\")\n",

|

| 416 |

+

"dfat = dfat[dfat.category.isin([\"actor\", \"actress\", \"self\"])][[\"tconst\", \"nconst\"]]\n",

|

| 417 |

+

"\n",

|

| 418 |

+

"# Get data for the movies/shows the actors appeared in\n",

|

| 419 |

+

"dftr = pd.read_csv(\"data/title.ratings.tsv\", sep=\"\\t\")\n",

|

| 420 |

+

"dftb = pd.read_csv(\"data/title.basics.tsv\", sep=\"\\t\")\n",

|

| 421 |

+

"dftb[\"startYear\"] = pd.to_numeric(dftb[\"startYear\"], errors=\"coerce\")\n",

|

| 422 |

+

"dftb[\"endYear\"] = pd.to_numeric(dftb[\"endYear\"], errors=\"coerce\")\n",

|

| 423 |

+

"\n",

|

| 424 |

+

"# Estimate last year the show/movie was released (TV shows span several years and might still be active)\n",

|

| 425 |

+

"# This is used to later filter for actors that were recently acting in something\n",

|

| 426 |

+

"dftb.loc[(dftb.titleType.isin([\"tvSeries\", \"tvMiniSeries\"]) & (dftb.endYear.isna())), \"lastYear\"] = current_year\n",

|

| 427 |

+

"dftb[\"lastYear\"] = dftb[\"lastYear\"].fillna(dftb[\"startYear\"])\n",

|

| 428 |

+

"dftb = dftb.dropna(subset=[\"lastYear\"])\n",

|

| 429 |

+

"dftb = dftb[dftb.isAdult == 0]"

|

| 430 |

+

]

|

| 431 |

+

},

|

| 432 |

+

{

|

| 433 |

+

"cell_type": "code",

|

| 434 |

+

"execution_count": null,

|

| 435 |

+

"metadata": {},

|

| 436 |

+

"outputs": [],

|

| 437 |

+

"source": [

|

| 438 |

+

"# Aggregate stats over all rated titles each actor appeared in\n",

|

| 439 |

+

"dft = pd.merge(dftb, dftr, how=\"inner\", on=\"tconst\")\n",

|

| 440 |

+

"del dftb, dftr\n",

|

| 441 |

+

"dfat = pd.merge(dfat, dft, how=\"inner\", on=\"tconst\")\n",

|

| 442 |

+

"del dft\n",

|

| 443 |

+

"dfat[\"totalRating\"] = dfat.averageRating*dfat.numVotes\n",

|

| 444 |

+

"dfat = dfat.groupby(\"nconst\").agg({\n",

|

| 445 |

+

" \"averageRating\": \"mean\", \n",

|

| 446 |

+

" \"totalRating\": \"sum\", \n",

|

| 447 |

+

" \"numVotes\": \"sum\", \n",

|

| 448 |

+

" \"tconst\": \"count\", \n",

|

| 449 |

+

" \"startYear\": \"min\", \n",

|

| 450 |

+

" \"lastYear\": \"max\"\n",

|

| 451 |

+

"})"

|

| 452 |

+

]

|

| 453 |

+

},

|

| 454 |

+

{

|

| 455 |

+

"cell_type": "code",

|

| 456 |

+

"execution_count": null,

|

| 457 |

+

"metadata": {},

|

| 458 |

+

"outputs": [],

|

| 459 |

+

"source": [

|

| 460 |

+

"# Merge everything with actor data and clean up\n",

|

| 461 |

+

"df = df.drop([\"deathYear\", \"knownForTitles\", \"primaryProfession\"], axis=1)\n",

|

| 462 |

+

"df = pd.merge(df, dfat, how=\"inner\", on=\"nconst\").sort_values(\"totalRating\", ascending=False)\n",

|

| 463 |

+

"df = df.dropna(subset=[\"birthYear\", \"startYear\", \"lastYear\"])\n",

|

| 464 |

+

"df[[\"birthYear\", \"startYear\", \"lastYear\"]] = df[[\"birthYear\", \"startYear\", \"lastYear\"]].astype(int)\n",

|

| 465 |

+

"df = df.round(2)"

|

| 466 |

+

]

|

| 467 |

+

}

|

| 468 |

+

],

|

| 469 |

+

"metadata": {

|

| 470 |

+

"interpreter": {

|

| 471 |

+

"hash": "90e1e830ac57dfc2c41e3e7a76c8ffd4bb6262b307f4273d56b17cf39c34bbe6"

|

| 472 |

+

},

|

| 473 |

+

"kernelspec": {

|

| 474 |

+

"display_name": "Python 3.7.11 64-bit ('actor_matching': conda)",

|

| 475 |

+

"language": "python",

|

| 476 |

+

"name": "python3"

|

| 477 |

+

},

|

| 478 |

+

"language_info": {

|

| 479 |

+

"codemirror_mode": {

|

| 480 |

+

"name": "ipython",

|

| 481 |

+

"version": 3

|

| 482 |

+

},

|

| 483 |

+

"file_extension": ".py",

|

| 484 |

+

"mimetype": "text/x-python",

|

| 485 |

+

"name": "python",

|

| 486 |

+

"nbconvert_exporter": "python",

|

| 487 |

+

"pygments_lexer": "ipython3",

|

| 488 |

+

"version": "3.7.11"

|

| 489 |

+

},

|

| 490 |

+

"orig_nbformat": 4

|

| 491 |

+

},

|

| 492 |

+

"nbformat": 4,

|

| 493 |

+

"nbformat_minor": 2

|

| 494 |

+

}
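As a reading aid, the notebook's `lastYear` estimate can be sketched without pandas. `estimate_last_year` is a hypothetical helper, not part of the repository. It mirrors the two assignments in the notebook: series and mini-series with no `endYear` are treated as still running and get the current year, and every other title, including series that do have an `endYear`, falls back to `startYear`.

```python
from typing import Optional

SERIES_TYPES = {"tvSeries", "tvMiniSeries"}


def estimate_last_year(title_type: str,
                       start_year: Optional[int],
                       end_year: Optional[int],
                       current_year: int) -> Optional[int]:
    """Hypothetical plain-Python mirror of the notebook's lastYear rule."""
    # A series without an end year is assumed to be still running.
    if title_type in SERIES_TYPES and end_year is None:
        return current_year
    # Everything else falls back to the start year. This includes series
    # that have an end year, because the notebook never reads endYear here.
    # None means "unknown"; the notebook drops such rows.
    return start_year


print(estimate_last_year("tvSeries", 2015, None, 2022))
print(estimate_last_year("tvSeries", 2005, 2010, 2022))
print(estimate_last_year("movie", 1999, None, 2022))
```

If finished series should count up to their final season, returning `end_year` in the fallback branch would be the natural change. The notebook as committed does not do this.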

|

download_imdb_data.py → pipeline/download_imdb_data.py

RENAMED

|

File without changes

|

get_images_data.py → pipeline/get_images_data.py

RENAMED

|

@@ -12,7 +12,7 @@ load_dotenv()

|

|

| 12 |

|

| 13 |

BING_API_KEY = os.getenv("BING_API_KEY", None)

|

| 14 |

|

| 15 |

-

def get_actor_images(name: str, count: int = 50, api_key: str = BING_API_KEY):

|

| 16 |

"""Get a list of actor images from the Bing Image Search API"""

|

| 17 |

if api_key is None:

|

| 18 |

raise ValueError("You must provide a Bing API key")

|

|

@@ -21,6 +21,8 @@ def get_actor_images(name: str, count: int = 50, api_key: str = BING_API_KEY):

|

|

| 21 |

"Ocp-Apim-Subscription-Key": api_key

|

| 22 |

}

|

| 23 |

query = f'"{name}"'

|

|

|

|

|

|

|

| 24 |

params = {

|

| 25 |

"q": query,

|

| 26 |

"count": count,

|

|

|

|

| 12 |

|

| 13 |

BING_API_KEY = os.getenv("BING_API_KEY", None)

|

| 14 |

|

| 15 |

+

def get_actor_images(name: str, role: str = None, count: int = 50, api_key: str = BING_API_KEY):

|

| 16 |

"""Get a list of actor images from the Bing Image Search API"""

|

| 17 |

if api_key is None:

|

| 18 |

raise ValueError("You must provide a Bing API key")

|

|

|

|

| 21 |

"Ocp-Apim-Subscription-Key": api_key

|

| 22 |

}

|

| 23 |

query = f'"{name}"'

|

| 24 |

+

if role:

|

| 25 |

+

query = f"{query} ({role})"

|

| 26 |

params = {

|

| 27 |

"q": query,

|

| 28 |

"count": count,

|
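The effect of the new `role` argument on the search query can be seen in isolation. This is a minimal sketch: `build_query` is a hypothetical helper that copies only the query-building lines of `get_actor_images` and makes no API call. The role suffix is presumably meant to separate namesakes, such as the two Graham Greenes mentioned in the README.

```python
from typing import Optional


def build_query(name: str, role: Optional[str] = None) -> str:
    """Hypothetical helper isolating the query logic of get_actor_images."""
    # Quote the name so it is searched as an exact phrase.
    query = f'"{name}"'
    # Append the role in parentheses to disambiguate namesakes.
    if role:
        query = f"{query} ({role})"
    return query


print(build_query("Graham Greene"))           # "Graham Greene"
print(build_query("Graham Greene", "actor"))  # "Graham Greene" (actor)
```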

process_images.py → pipeline/process_images.py

RENAMED

|

@@ -7,7 +7,10 @@ from time import time

|

|

| 7 |

|

| 8 |

|

| 9 |

def get_image(url: str):

|

| 10 |

-

|

|

|

|

|

|

|

|

|

|

| 11 |

response.raise_for_status()

|

| 12 |

img_file_object = BytesIO(response.content)

|

| 13 |

return face_recognition.load_image_file(img_file_object)

|

|

@@ -50,5 +53,5 @@ def build_annoy_index():

|

|

| 50 |

pass

|

| 51 |

|

| 52 |

if __name__ == "__main__":

|

| 53 |

-

output_file = "data/actors_embeddings.csv"

|

| 54 |

-

df_embeddings = process_all_images(input_file="data/actors_images.csv", output_file=output_file)

|

|

|

|

| 7 |

|

| 8 |

|

| 9 |

def get_image(url: str):

|

| 10 |

+

headers = {

|

| 11 |

+

"User-Agent": "Actors matching app 1.0"

|

| 12 |

+

}

|

| 13 |

+

response = requests.get(url, headers=headers)

|

| 14 |

response.raise_for_status()

|

| 15 |

img_file_object = BytesIO(response.content)

|

| 16 |

return face_recognition.load_image_file(img_file_object)

|

|

|

|

| 53 |

pass

|

| 54 |

|

| 55 |

if __name__ == "__main__":

|

| 56 |

+

output_file = "../data/actors_embeddings.csv"

|

| 57 |

+

df_embeddings = process_all_images(input_file="../data/actors_images.csv", output_file=output_file)

|
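The switch to `../data/...` paths means the script presumably has to be launched from inside `pipeline/`. One possible alternative, shown only as a sketch, is to resolve data files relative to the script's own location. `data_path` is a hypothetical helper, and the `data/` layout is assumed from the file list in this commit.

```python
from pathlib import Path


def data_path(script_file: str, filename: str) -> Path:
    """Hypothetical helper: locate <repo>/data/<filename> from a script in <repo>/pipeline/."""
    # resolve() makes the path absolute, so a relative __file__ also works.
    # parent -> pipeline/, parent.parent -> repository root
    repo_root = Path(script_file).resolve().parent.parent
    return repo_root / "data" / filename


# In the script itself one would pass __file__ instead of a literal path.
print(data_path("/repo/pipeline/process_images.py", "actors_embeddings.csv"))
```

With this approach the script would behave the same from the repository root and from `pipeline/`, at the cost of tying it to the directory layout.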