Satoru committed on

Commit • cab1b96

1 Parent(s): b9daada

feat: initial commit

- .gitattributes +1 -0

- .gitignore +4 -0

- api.py +101 -0

- app.py +56 -0

- images/025_0085.jpg +0 -0

- images/046_0051.jpg +0 -0

- models/index.ann +3 -0

- models/mappings.json +0 -0

- src/build.py +109 -0

- src/dwn.py +21 -0

- src/mapping.py +27 -0

- src/mappings.json +0 -0

.gitattributes

CHANGED

@@ -29,3 +29,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+*.ann filter=lfs diff=lfs merge=lfs -text

.gitignore

ADDED

@@ -0,0 +1,4 @@
+.DS_Store
+__pycache__
+input_img.png
+src/data

api.py

ADDED

@@ -0,0 +1,101 @@
+import annoy
+from typing import Tuple
+import json
+
+EMBEDDING_DIMENSION = 4096
+ANNOY_INDEX_FILE = "models/index.ann"
+ANNOY_MAPPING_FILE = "models/mappings.json"
+IMG_RESIZE_SIZE = 224
+
+####
+
+def load_annoy_index(
+    index_file=ANNOY_INDEX_FILE,
+    mapping_file=ANNOY_MAPPING_FILE,
+) -> Tuple[annoy.AnnoyIndex, dict]:
+    """Load annoy index and associated mapping file"""
+
+    annoy_index = annoy.AnnoyIndex(f=EMBEDDING_DIMENSION, metric='euclidean')
+    annoy_index.load(index_file)
+
+    with open(ANNOY_MAPPING_FILE) as f:
+        mappings = json.load(f)
+
+    with open(mapping_file) as f:
+        mapping = json.load(f)
+    mapping = {int(k): v for k, v in mapping.items()}
+    return annoy_index, mappings
+
+###
+
+import torch
+from torch import optim, nn
+from torchvision import models, transforms
+model = models.vgg16(pretrained=True)
+
+
+
+# Transform the image, so it becomes readable with the model
+transform = transforms.Compose([
+    transforms.ToPILImage(),
+    # transforms.CenterCrop(512),
+    # transforms.Resize(448),
+    transforms.Resize((IMG_RESIZE_SIZE, IMG_RESIZE_SIZE)),
+    transforms.ToTensor()
+])
+
+import cv2
+
+class FeatureExtractor(nn.Module):
+    def __init__(self, model):
+        super(FeatureExtractor, self).__init__()
+        # Extract VGG-16 Feature Layers
+        self.features = list(model.features)
+        self.features = nn.Sequential(*self.features)
+        # Extract VGG-16 Average Pooling Layer
+        self.pooling = model.avgpool
+        # Convert the image into one-dimensional vector
+        self.flatten = nn.Flatten()
+        # Extract the first part of fully-connected layer from VGG16
+        self.fc = model.classifier[0]
+
+    def forward(self, x):
+        # It will take the input 'x' until it returns the feature vector called 'out'
+        out = self.features(x)
+        out = self.pooling(out)
+        out = self.flatten(out)
+        out = self.fc(out)
+        return out
+
+# Initialize the model
+model = models.vgg16(pretrained=True)
+new_model = FeatureExtractor(model)
+
+# Change the device to GPU
+device = torch.device('cuda:0' if torch.cuda.is_available() else "cpu")
+new_model = new_model.to(device)
+
+import PIL
+
+def analyze_image(
+    image, annoy_index, n_matches: int = 1, num_jitters: int = 1, model: str = "large"
+):
+    PIL.Image.fromarray(image).save("input_img.png")
+    img = cv2.imread("input_img.png")
+    # Transform the image
+    img = transform(img)
+    # Reshape the image. PyTorch model reads 4-dimensional tensor
+    # [batch_size, channels, width, height]
+    # img = img.reshape(1, 3, 448, 448)
+    img = img.reshape(1, 3, IMG_RESIZE_SIZE, IMG_RESIZE_SIZE)
+    img = img.to(device)
+    # We only extract features, so we don't need gradient
+    with torch.no_grad():
+        # Extract the feature from the image
+        feature = new_model(img)
+    # Convert to NumPy Array, Reshape it, and save it to features variable
+    v = feature.cpu().detach().numpy().reshape(-1)
+
+    results = annoy_index.get_nns_by_vector(v, n_matches, include_distances=True)
+
+    return results
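
Review note on `load_annoy_index` in `api.py`: the mapping JSON is read twice, once from the module constant `ANNOY_MAPPING_FILE` and once from the `mapping_file` argument, and the function returns the first, string-keyed result. The `mapping_file` argument therefore has no effect on the return value. A minimal sketch of a single-read loader follows. The helper name `load_mapping` is hypothetical and not part of the commit; it keeps string keys on the assumption that callers look entries up with `str(...)`, as `find_matching_images` in `app.py` does.

```python
import json
from pathlib import Path
from typing import Dict, Union


def load_mapping(mapping_file: Union[str, Path]) -> Dict[str, dict]:
    """Read the index-to-metadata mapping once, from the path passed in.

    Hypothetical helper, not part of the commit. Keys stay as strings
    because JSON object keys are always strings.
    """
    with open(mapping_file, encoding="utf-8") as f:
        mapping = json.load(f)
    if not isinstance(mapping, dict):
        raise ValueError(f"expected a JSON object in {mapping_file}")
    return mapping
```

With a loader like this, `load_annoy_index` could return `annoy_index, load_mapping(mapping_file)` and the custom path would be honoured.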

app.py

ADDED

@@ -0,0 +1,56 @@
+import gradio as gr
+
+import numpy as np
+import re
+from pathlib import Path
+from api import load_annoy_index, analyze_image
+
+annoy_index, mappings = load_annoy_index()
+
+def get_article_text():
+    article = Path("README.md").read_text()
+    # Remove the HuggingFace Space app information from the README
+    article = re.sub(r"^---.+---\s+", "", article, flags=re.MULTILINE + re.DOTALL)
+    return article
+
+
+
+def find_matching_images(input_img, n_matches: int = 10):
+    results = analyze_image(input_img, annoy_index, n_matches=n_matches)
+
+
+    indexes = results[0]
+    # scores = results[1]
+
+    images = []
+
+    for i in range(len(indexes)):
+        index = str(indexes[i])
+
+        mapping = mappings[index]
+
+        url = mapping["url"]
+        if url != "":
+            images.append(url)
+
+    return images
+
+iface = gr.Interface(
+    find_matching_images,
+    title="類似貼り込み資料検索",
+    description="""類似する貼り込み資料を検索します。 Upload a picture and find out!
+Give it a shot or try one of the sample images below.
+
+Built with ❤️ using great open-source libraries such as PyTorch and Annoy.""",
+    article=get_article_text(),
+    inputs=[
+        gr.inputs.Image(shape=None, label="Your image"),
+    ],
+    outputs=gr.Gallery(label="類似する貼り込み資料"),
+    examples=[
+        ["images/025_0085.jpg"],
+        ["images/046_0051.jpg"],
+    ],
+)
+
+iface.launch()
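
Review note on `get_article_text` in `app.py`: the front matter is stripped with `^---.+---\s+` under `re.MULTILINE` and `re.DOTALL`. Because `.+` is greedy, the match runs to the last `---` in the file, so a README that also uses `---` as a horizontal rule would lose everything before that rule. Below is a sketch of a non-greedy variant anchored to the start of the text. The name `strip_front_matter` is hypothetical, and the sample README is invented for illustration.

```python
import re

# Match only a leading block delimited by two '---' lines.
_FRONT_MATTER = re.compile(
    r"\A---[ \t]*\r?\n(?:.*?\r?\n)?---[ \t]*(?:\r?\n|\Z)\s*",
    re.DOTALL,
)


def strip_front_matter(text: str) -> str:
    """Remove a leading '---' ... '---' block; leave later '---' rules alone."""
    return _FRONT_MATTER.sub("", text, count=1)


readme = (
    "---\ntitle: Demo\nsdk: gradio\n---\n\n"
    "# Heading\n\nBody\n\n---\n\nFooter\n"
)
article = strip_front_matter(readme)
```

Text without a leading `---` line is returned unchanged.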

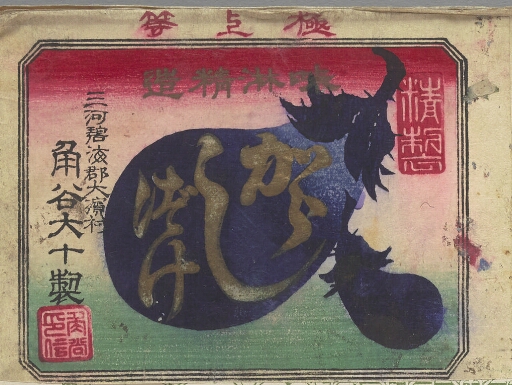

images/025_0085.jpg

ADDED


images/046_0051.jpg

ADDED


models/index.ann

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:008f512c1d862a9162807170d3ee5445df1bff76e26cb48f01ffabe3eb07284c
+size 66534800
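
The three added lines of `models/index.ann` are a Git LFS pointer, not the index itself. The repository stores this small text file, and the 66,534,800-byte Annoy index lives in LFS storage; the `*.ann` rule added to `.gitattributes` routes it there. A minimal sketch of reading such a pointer, for example to check whether a checkout has fetched the real file, follows. The function name `parse_lfs_pointer` is hypothetical.

```python
from typing import Dict


def parse_lfs_pointer(text: str) -> Dict[str, str]:
    """Parse the 'key value' lines of a Git LFS pointer file into a dict."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        key, _, value = line.partition(" ")
        fields[key] = value
    if not fields.get("version", "").startswith("https://git-lfs.github.com/spec/"):
        raise ValueError("not a Git LFS pointer")
    return fields


pointer = parse_lfs_pointer(
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:008f512c1d862a9162807170d3ee5445df1bff76e26cb48f01ffabe3eb07284c\n"
    "size 66534800\n"
)
size_bytes = int(pointer["size"])  # size of the real file, in bytes
```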

models/mappings.json

ADDED

The diff for this file is too large to render.

src/build.py

ADDED

@@ -0,0 +1,109 @@
+import torch
+from torch import optim, nn
+from torchvision import models, transforms
+from torchvision.models.vgg import VGG16_Weights
+
+
+class FeatureExtractor(nn.Module):
+    def __init__(self, model):
+        super(FeatureExtractor, self).__init__()
+        # Extract VGG-16 Feature Layers
+        self.features = list(model.features)
+        self.features = nn.Sequential(*self.features)
+        # Extract VGG-16 Average Pooling Layer
+        self.pooling = model.avgpool
+        # Convert the image into one-dimensional vector
+        self.flatten = nn.Flatten()
+        # Extract the first part of fully-connected layer from VGG16
+        self.fc = model.classifier[0]
+
+    def forward(self, x):
+        # It will take the input 'x' until it returns the feature vector called 'out'
+        out = self.features(x)
+        out = self.pooling(out)
+        out = self.flatten(out)
+        out = self.fc(out)
+        return out
+
+# Initialize the model
+model = models.vgg16(weights=VGG16_Weights.DEFAULT)
+new_model = FeatureExtractor(model)
+
+# Change the device to GPU
+device = torch.device('cuda:0' if torch.cuda.is_available() else "cpu")
+new_model = new_model.to(device)
+
+IMG_RESIZE_SIZE = 224
+IMG_PATH = "data"
+
+import cv2
+from tqdm import tqdm
+import numpy as np
+
+# Transform the image, so it becomes readable with the model
+transform = transforms.Compose([
+    transforms.ToPILImage(),
+    transforms.Resize((IMG_RESIZE_SIZE, IMG_RESIZE_SIZE)),
+    transforms.ToTensor()
+])
+
+# Will contain the feature
+features = []
+
+mappings = {}
+
+import glob
+# files = glob.glob("/content/drive/Shareddrives/ndl/kao/dataset 3/*.jpg")
+files = glob.glob(f"{IMG_PATH}/*.jpg")
+
+files.sort()
+
+for index in tqdm(range(len(files))):
+
+    path = files[index]
+
+    img = cv2.imread(path)
+    # Transform the image
+    img = transform(img)
+    # Reshape the image. PyTorch model reads 4-dimensional tensor
+    # [batch_size, channels, width, height]
+    # img = img.reshape(1, 3, 448, 448)
+    img = img.reshape(1, 3, IMG_RESIZE_SIZE, IMG_RESIZE_SIZE)
+    img = img.to(device)
+    # We only extract features, so we don't need gradient
+    with torch.no_grad():
+        # Extract the feature from the image
+        feature = new_model(img)
+    # Convert to NumPy Array, Reshape it, and save it to features variable
+    features.append(feature.cpu().detach().numpy().reshape(-1))
+
+    mappings[index] = {
+        "nconst": path.split("/")[-1].split(".")[0],
+        "name": "",
+        "url": ""
+    }
+
+# Convert to NumPy Array
+features = np.array(features)
+
+import json
+
+with open('mappings.json', mode='wt', encoding='utf-8') as file:
+    json.dump(mappings, file, ensure_ascii=False, indent=2)
+
+N_TREES = 1000
+
+from annoy import AnnoyIndex
+
+annoy_index = AnnoyIndex(features.shape[1], metric='euclidean')
+
+for i in range(len(features)):
+
+    feature = features[i]
+
+    annoy_index.add_item(i, feature)
+
+# Build the k-d tree
+annoy_index.build(n_trees=N_TREES)
+
+annoy_index.save("../models/index.ann")
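
`build.py` indexes one feature vector per image with the `euclidean` metric, and Annoy answers queries approximately. On a small sample, an exact brute-force search is a simple way to sanity-check what the index returns. The sketch below uses only the standard library (`math.dist` needs Python 3.8 or later). `exact_nearest` is a hypothetical helper, and the three-dimensional vectors are made-up illustration data, not features from the dataset.

```python
import math
from typing import List, Sequence, Tuple


def exact_nearest(
    query: Sequence[float], vectors: Sequence[Sequence[float]], n: int
) -> Tuple[List[int], List[float]]:
    """Return (indexes, distances) of the n vectors closest to query.

    The result has the same (ids, distances) shape that Annoy returns from
    get_nns_by_vector(..., include_distances=True), but is computed exactly.
    """
    ranked = sorted((math.dist(query, v), i) for i, v in enumerate(vectors))[:n]
    return [i for _, i in ranked], [d for d, _ in ranked]


# Made-up vectors for illustration only.
vectors = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 3.0, 4.0]]
indexes, distances = exact_nearest([0.9, 0.0, 0.0], vectors, n=2)
```

Comparing `indexes` against the first element of Annoy's result for the same query gives a rough recall check; the scan is linear in the number of vectors, so it only suits small samples.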

src/dwn.py

ADDED

@@ -0,0 +1,21 @@
+import json
+from urllib import request
+from tqdm import tqdm
+import os
+
+with open("/Users/nakamura/git/kunshujo/kunshujo/static/data/index.json") as f:
+    items = json.load(f)
+for item in tqdm(items):
+    id = item["objectID"]
+    thumbnail = item["thumbnail"]
+    url = thumbnail.replace("/,300/", "/!512,512/")
+
+    opath = f"data/{id}.jpg"
+
+    if os.path.exists(opath):
+        continue
+
+    try:
+        request.urlretrieve(url, opath)
+    except:
+        pass
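
`dwn.py` rewrites each thumbnail URL by swapping the size segment `/,300/` for `/!512,512/`, then downloads the result and silently skips any failure with a bare `except`. In IIIF Image API URLs, `,300` requests a height of 300 pixels and `!512,512` requests the image scaled to fit within 512 by 512; that the thumbnails are IIIF URLs is an assumption based on this segment syntax. The sketch below covers the same steps with the failure made visible. `resized_url` and `download` are hypothetical names, and the example URL is a placeholder.

```python
import os
from typing import Optional
from urllib import request
from urllib.error import URLError


def resized_url(thumbnail: str) -> str:
    """Swap the 300px-high size segment for a 512x512 best-fit request."""
    return thumbnail.replace("/,300/", "/!512,512/")


def download(url: str, opath: str) -> Optional[str]:
    """Download url to opath unless it already exists.

    Returns None on success or skip, or an error message on failure,
    so the caller can log which items were missed.
    """
    if os.path.exists(opath):
        return None
    try:
        request.urlretrieve(url, opath)
    except (URLError, OSError) as exc:
        return f"{url}: {exc}"
    return None


# Placeholder URL for illustration only.
example = resized_url("https://example.org/iiif/abc/full/,300/0/default.jpg")
```

Collecting the returned messages makes it possible to tell a partial dataset from a complete one before running `build.py`.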

src/mapping.py

ADDED

@@ -0,0 +1,27 @@
+import json
+
+with open('mappings.json') as f:
+    df = json.load(f)
+
+conf = {}
+
+with open("/Users/nakamura/git/kunshujo/kunshujo/static/data/index.json") as f:
+    items = json.load(f)
+for item in items:
+    conf[item["objectID"]] = item
+
+
+for index in df:
+    item = df[index]
+    id = item["nconst"]
+
+    if id not in conf:
+        continue
+
+    c = conf[id]
+
+    item["url"] = c["thumbnail"]
+    item["name"] = c["label"]
+
+with open('../models/mappings.json', mode='wt', encoding='utf-8') as file:
+    json.dump(df, file, ensure_ascii=False, indent=2)
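
`mapping.py` is a join: it indexes the catalogue items by `objectID`, then fills in `url` and `name` for each mapping entry whose `nconst` has a match. Entries without a match keep their empty strings, which `app.py` later filters out with `if url != ""`. Below is the same logic sketched as a pure function that can be tested without the files. `enrich_mappings` is a hypothetical name, and the sample records are invented.

```python
from typing import Dict, Iterable


def enrich_mappings(
    mappings: Dict[str, dict], items: Iterable[dict]
) -> Dict[str, dict]:
    """Copy thumbnail and label from catalogue items into matching entries."""
    by_id = {item["objectID"]: item for item in items}
    enriched: Dict[str, dict] = {}
    for index, entry in mappings.items():
        entry = dict(entry)  # copy so the input is not mutated
        match = by_id.get(entry["nconst"])
        if match is not None:
            entry["url"] = match["thumbnail"]
            entry["name"] = match["label"]
        enriched[index] = entry
    return enriched


# Invented sample records for illustration only.
result = enrich_mappings(
    {
        "0": {"nconst": "a", "name": "", "url": ""},
        "1": {"nconst": "b", "name": "", "url": ""},
    },
    [{"objectID": "a", "thumbnail": "https://example.org/a.jpg", "label": "Item A"}],
)
```

Counting the entries in `result` whose `url` is still empty shows how many indexed images would never appear in the gallery.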

src/mappings.json

ADDED

The diff for this file is too large to render.