Spaces:

Runtime error

Runtime error

Upload folder using huggingface_hub

Browse files- .gitattributes +1 -0

- .github/workflows/update_space.yml +28 -0

- .gitignore +164 -0

- BigModel/.gitattributes +34 -0

- BigModel/README.md +13 -0

- LICENSE.txt +201 -0

- MODEL_LICENSE.txt +33 -0

- README.md +325 -8

- README_en.md +246 -0

- __pycache__/finetune_visualglm.cpython-310.pyc +0 -0

- __pycache__/lora_mixin.cpython-310.pyc +0 -0

- api.py +51 -0

- api_hf.py +49 -0

- cli_demo.py +103 -0

- cli_demo_hf.py +69 -0

- examples/1.jpeg +3 -0

- examples/2.jpeg +0 -0

- examples/3.jpeg +0 -0

- examples/chat_example1.png +0 -0

- examples/chat_example2.png +0 -0

- examples/chat_example3.png +0 -0

- examples/example_inputs.jsonl +3 -0

- examples/thu.png +0 -0

- examples/web_demo.png +0 -0

- fewshot-data.zip +3 -0

- finetune/finetune_visualglm.sh +58 -0

- finetune/finetune_visualglm_qlora.sh +59 -0

- finetune_visualglm.py +195 -0

- lora_mixin.py +260 -0

- model/__init__.py +3 -0

- model/__pycache__/__init__.cpython-310.pyc +0 -0

- model/__pycache__/blip2.cpython-310.pyc +0 -0

- model/__pycache__/chat.cpython-310.pyc +0 -0

- model/__pycache__/infer_util.cpython-310.pyc +0 -0

- model/__pycache__/visualglm.cpython-310.pyc +0 -0

- model/blip2.py +93 -0

- model/chat.py +175 -0

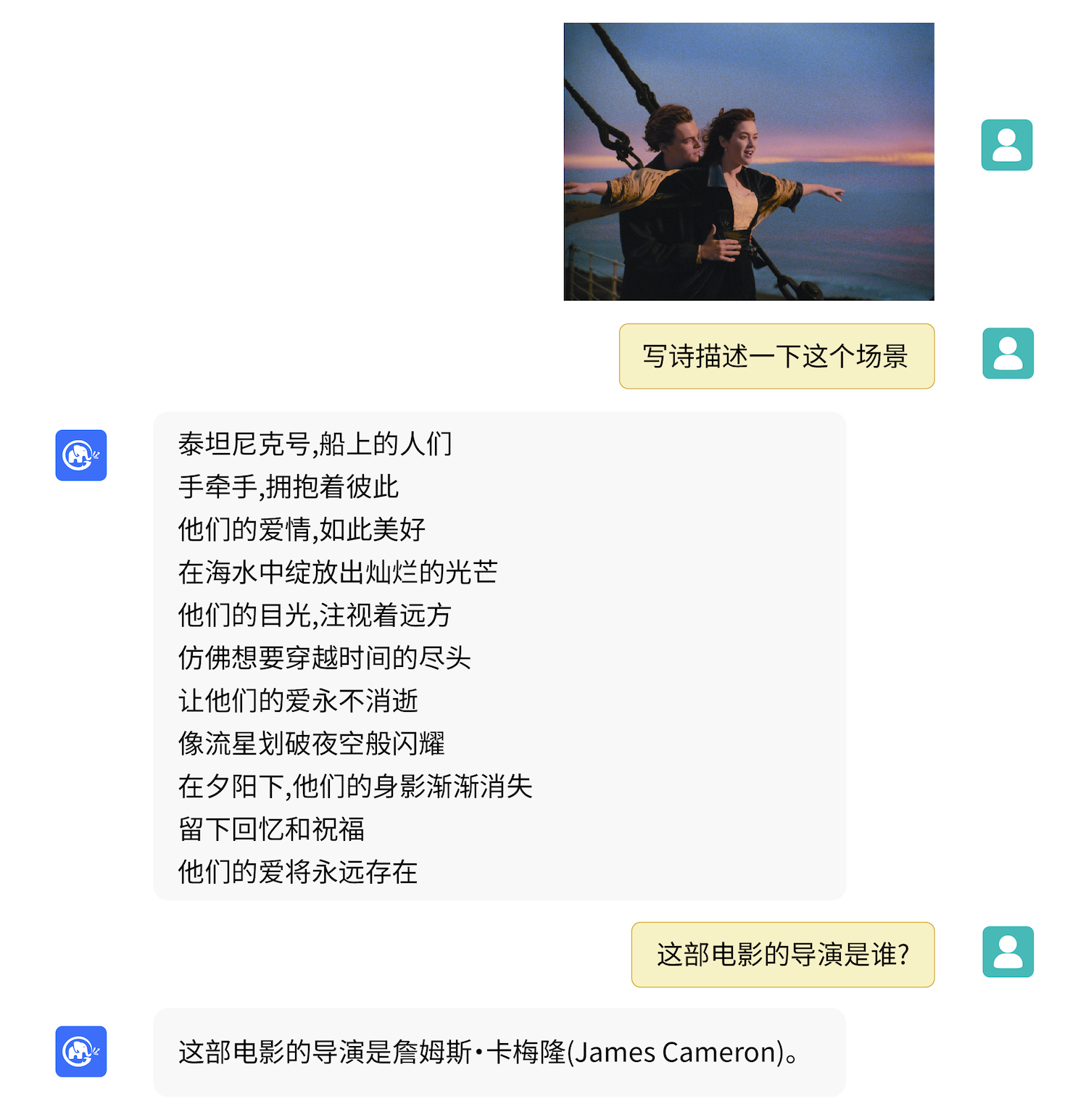

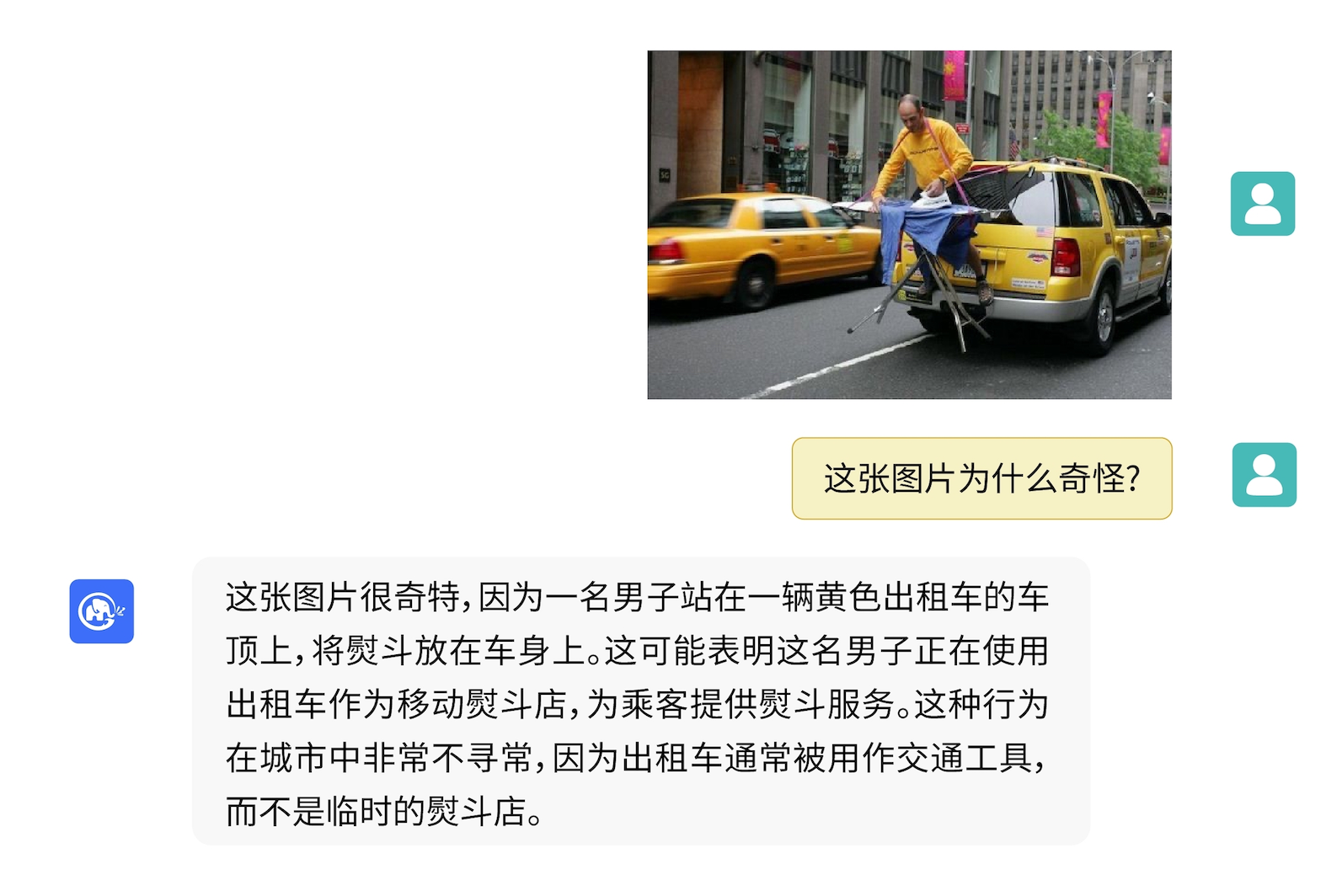

- model/infer_util.py +53 -0

- model/visualglm.py +40 -0

- requirements.txt +6 -0

- requirements_wo_ds.txt +10 -0

- web_demo.py +129 -0

- web_demo_hf.py +143 -0

- your_logfile.log +2 -0

.gitattributes

CHANGED

|

@@ -32,3 +32,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 32 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

examples/1.jpeg filter=lfs diff=lfs merge=lfs -text

|

.github/workflows/update_space.yml

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: Run Python script

|

| 2 |

+

|

| 3 |

+

on:

|

| 4 |

+

push:

|

| 5 |

+

branches:

|

| 6 |

+

- main

|

| 7 |

+

|

| 8 |

+

jobs:

|

| 9 |

+

build:

|

| 10 |

+

runs-on: ubuntu-latest

|

| 11 |

+

|

| 12 |

+

steps:

|

| 13 |

+

- name: Checkout

|

| 14 |

+

uses: actions/checkout@v2

|

| 15 |

+

|

| 16 |

+

- name: Set up Python

|

| 17 |

+

uses: actions/setup-python@v2

|

| 18 |

+

with:

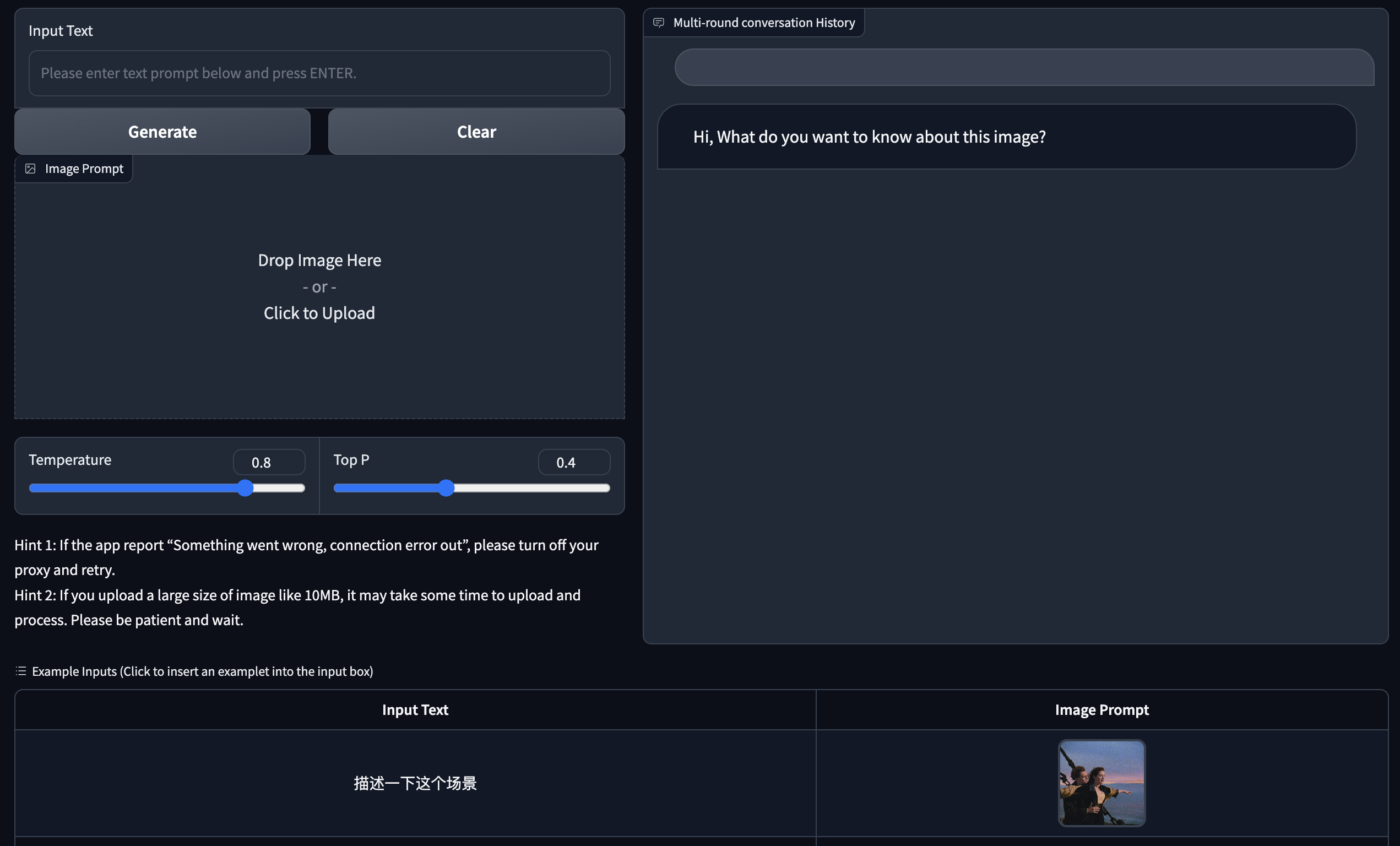

|

| 19 |

+

python-version: '3.9'

|

| 20 |

+

|

| 21 |

+

- name: Install Gradio

|

| 22 |

+

run: python -m pip install gradio

|

| 23 |

+

|

| 24 |

+

- name: Log in to Hugging Face

|

| 25 |

+

run: python -c 'import huggingface_hub; huggingface_hub.login(token="${{ secrets.hf_token }}")'

|

| 26 |

+

|

| 27 |

+

- name: Deploy to Spaces

|

| 28 |

+

run: gradio deploy

|

.gitignore

ADDED

|

@@ -0,0 +1,164 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

checkpoints/

|

| 2 |

+

runs/

|

| 3 |

+

model/__pycache__/

|

| 4 |

+

|

| 5 |

+

# Byte-compiled / optimized / DLL files

|

| 6 |

+

__pycache__/

|

| 7 |

+

*.py[cod]

|

| 8 |

+

*$py.class

|

| 9 |

+

|

| 10 |

+

# C extensions

|

| 11 |

+

*.so

|

| 12 |

+

|

| 13 |

+

# Distribution / packaging

|

| 14 |

+

.Python

|

| 15 |

+

build/

|

| 16 |

+

develop-eggs/

|

| 17 |

+

dist/

|

| 18 |

+

downloads/

|

| 19 |

+

eggs/

|

| 20 |

+

.eggs/

|

| 21 |

+

lib/

|

| 22 |

+

lib64/

|

| 23 |

+

parts/

|

| 24 |

+

sdist/

|

| 25 |

+

var/

|

| 26 |

+

wheels/

|

| 27 |

+

share/python-wheels/

|

| 28 |

+

*.egg-info/

|

| 29 |

+

.installed.cfg

|

| 30 |

+

*.egg

|

| 31 |

+

MANIFEST

|

| 32 |

+

|

| 33 |

+

# PyInstaller

|

| 34 |

+

# Usually these files are written by a python script from a template

|

| 35 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 36 |

+

*.manifest

|

| 37 |

+

*.spec

|

| 38 |

+

|

| 39 |

+

# Installer logs

|

| 40 |

+

pip-log.txt

|

| 41 |

+

pip-delete-this-directory.txt

|

| 42 |

+

|

| 43 |

+

# Unit test / coverage reports

|

| 44 |

+

htmlcov/

|

| 45 |

+

.tox/

|

| 46 |

+

.nox/

|

| 47 |

+

.coverage

|

| 48 |

+

.coverage.*

|

| 49 |

+

.cache

|

| 50 |

+

nosetests.xml

|

| 51 |

+

coverage.xml

|

| 52 |

+

*.cover

|

| 53 |

+

*.py,cover

|

| 54 |

+

.hypothesis/

|

| 55 |

+

.pytest_cache/

|

| 56 |

+

cover/

|

| 57 |

+

|

| 58 |

+

# Translations

|

| 59 |

+

*.mo

|

| 60 |

+

*.pot

|

| 61 |

+

|

| 62 |

+

# Django stuff:

|

| 63 |

+

*.log

|

| 64 |

+

local_settings.py

|

| 65 |

+

db.sqlite3

|

| 66 |

+

db.sqlite3-journal

|

| 67 |

+

|

| 68 |

+

# Flask stuff:

|

| 69 |

+

instance/

|

| 70 |

+

.webassets-cache

|

| 71 |

+

|

| 72 |

+

# Scrapy stuff:

|

| 73 |

+

.scrapy

|

| 74 |

+

|

| 75 |

+

# Sphinx documentation

|

| 76 |

+

docs/_build/

|

| 77 |

+

|

| 78 |

+

# PyBuilder

|

| 79 |

+

.pybuilder/

|

| 80 |

+

target/

|

| 81 |

+

|

| 82 |

+

# Jupyter Notebook

|

| 83 |

+

.ipynb_checkpoints

|

| 84 |

+

|

| 85 |

+

# IPython

|

| 86 |

+

profile_default/

|

| 87 |

+

ipython_config.py

|

| 88 |

+

|

| 89 |

+

# pyenv

|

| 90 |

+

# For a library or package, you might want to ignore these files since the code is

|

| 91 |

+

# intended to run in multiple environments; otherwise, check them in:

|

| 92 |

+

# .python-version

|

| 93 |

+

|

| 94 |

+

# pipenv

|

| 95 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 96 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 97 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 98 |

+

# install all needed dependencies.

|

| 99 |

+

#Pipfile.lock

|

| 100 |

+

|

| 101 |

+

# poetry

|

| 102 |

+

# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.

|

| 103 |

+

# This is especially recommended for binary packages to ensure reproducibility, and is more

|

| 104 |

+

# commonly ignored for libraries.

|

| 105 |

+

# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control

|

| 106 |

+

#poetry.lock

|

| 107 |

+

|

| 108 |

+

# pdm

|

| 109 |

+

# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.

|

| 110 |

+

#pdm.lock

|

| 111 |

+

# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it

|

| 112 |

+

# in version control.

|

| 113 |

+

# https://pdm.fming.dev/#use-with-ide

|

| 114 |

+

.pdm.toml

|

| 115 |

+

|

| 116 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm

|

| 117 |

+

__pypackages__/

|

| 118 |

+

|

| 119 |

+

# Celery stuff

|

| 120 |

+

celerybeat-schedule

|

| 121 |

+

celerybeat.pid

|

| 122 |

+

|

| 123 |

+

# SageMath parsed files

|

| 124 |

+

*.sage.py

|

| 125 |

+

|

| 126 |

+

# Environments

|

| 127 |

+

.env

|

| 128 |

+

.venv

|

| 129 |

+

env/

|

| 130 |

+

venv/

|

| 131 |

+

ENV/

|

| 132 |

+

env.bak/

|

| 133 |

+

venv.bak/

|

| 134 |

+

|

| 135 |

+

# Spyder project settings

|

| 136 |

+

.spyderproject

|

| 137 |

+

.spyproject

|

| 138 |

+

|

| 139 |

+

# Rope project settings

|

| 140 |

+

.ropeproject

|

| 141 |

+

|

| 142 |

+

# mkdocs documentation

|

| 143 |

+

/site

|

| 144 |

+

|

| 145 |

+

# mypy

|

| 146 |

+

.mypy_cache/

|

| 147 |

+

.dmypy.json

|

| 148 |

+

dmypy.json

|

| 149 |

+

|

| 150 |

+

# Pyre type checker

|

| 151 |

+

.pyre/

|

| 152 |

+

|

| 153 |

+

# pytype static type analyzer

|

| 154 |

+

.pytype/

|

| 155 |

+

|

| 156 |

+

# Cython debug symbols

|

| 157 |

+

cython_debug/

|

| 158 |

+

|

| 159 |

+

# PyCharm

|

| 160 |

+

# JetBrains specific template is maintained in a separate JetBrains.gitignore that can

|

| 161 |

+

# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore

|

| 162 |

+

# and can be added to the global gitignore or merged into this file. For a more nuclear

|

| 163 |

+

# option (not recommended) you can uncomment the following to ignore the entire idea folder.

|

| 164 |

+

#.idea/

|

BigModel/.gitattributes

ADDED

|

@@ -0,0 +1,34 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

+

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

+

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

+

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 12 |

+

*.model filter=lfs diff=lfs merge=lfs -text

|

| 13 |

+

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 14 |

+

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 15 |

+

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 16 |

+

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 17 |

+

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 18 |

+

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 19 |

+

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 20 |

+

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 21 |

+

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 22 |

+

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 23 |

+

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 24 |

+

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 25 |

+

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 26 |

+

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 27 |

+

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

+

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 29 |

+

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 30 |

+

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 31 |

+

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 33 |

+

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 34 |

+

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

BigModel/README.md

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: BigModel

|

| 3 |

+

emoji: 🌍

|

| 4 |

+

colorFrom: yellow

|

| 5 |

+

colorTo: red

|

| 6 |

+

sdk: gradio

|

| 7 |

+

sdk_version: 3.33.1

|

| 8 |

+

app_file: app.py

|

| 9 |

+

pinned: false

|

| 10 |

+

license: openrail

|

| 11 |

+

---

|

| 12 |

+

|

| 13 |

+

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

LICENSE.txt

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright Zhengxiao Du

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

MODEL_LICENSE.txt

ADDED

|

@@ -0,0 +1,33 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

The VisualGLM-6B License

|

| 2 |

+

|

| 3 |

+

1. Definitions

|

| 4 |

+

|

| 5 |

+

“Licensor” means the VisualGLM-6B Model Team that distributes its Software.

|

| 6 |

+

|

| 7 |

+

“Software” means the VisualGLM-6B model parameters made available under this license.

|

| 8 |

+

|

| 9 |

+

2. License Grant

|

| 10 |

+

|

| 11 |

+

Subject to the terms and conditions of this License, the Licensor hereby grants to you a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable, royalty-free copyright license to use the Software solely for your non-commercial research purposes.

|

| 12 |

+

|

| 13 |

+

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

3. Restriction

|

| 16 |

+

|

| 17 |

+

You will not use, copy, modify, merge, publish, distribute, reproduce, or create derivative works of the Software, in whole or in part, for any commercial, military, or illegal purposes.

|

| 18 |

+

|

| 19 |

+

You will not use the Software for any act that may undermine China's national security and national unity, harm the public interest of society, or infringe upon the rights and interests of human beings.

|

| 20 |

+

|

| 21 |

+

4. Disclaimer

|

| 22 |

+

|

| 23 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

|

| 24 |

+

|

| 25 |

+

5. Limitation of Liability

|

| 26 |

+

|

| 27 |

+

EXCEPT TO THE EXTENT PROHIBITED BY APPLICABLE LAW, IN NO EVENT AND UNDER NO LEGAL THEORY, WHETHER BASED IN TORT, NEGLIGENCE, CONTRACT, LIABILITY, OR OTHERWISE WILL ANY LICENSOR BE LIABLE TO YOU FOR ANY DIRECT, INDIRECT, SPECIAL, INCIDENTAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES, OR ANY OTHER COMMERCIAL LOSSES, EVEN IF THE LICENSOR HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

|

| 28 |

+

|

| 29 |

+

6. Dispute Resolution

|

| 30 |

+

|

| 31 |

+

This license shall be governed and construed in accordance with the laws of People’s Republic of China. Any dispute arising from or in connection with this License shall be submitted to Haidian District People's Court in Beijing.

|

| 32 |

+

|

| 33 |

+

Note that the license is subject to update to a more comprehensive version. For any questions related to the license and copyright, please contact us.

|

README.md

CHANGED

|

@@ -1,12 +1,329 @@

|

|

| 1 |

---

|

| 2 |

-

title: VisualGLM

|

| 3 |

-

|

| 4 |

-

colorFrom: green

|

| 5 |

-

colorTo: pink

|

| 6 |

sdk: gradio

|

| 7 |

-

sdk_version: 3.33.

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

|

|

|

| 11 |

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

title: VisualGLM-6B

|

| 3 |

+

app_file: web_demo_hf.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

+

sdk_version: 3.33.0

|

|

|

|

|

|

|

| 6 |

---

|

| 7 |

+

# VisualGLM-6B

|

| 8 |

|

| 9 |

+

<p align="center">

|

| 10 |

+

🤗 <a href="https://huggingface.co/THUDM/visualglm-6b" target="_blank">HF Repo</a> • ⚒️ <a href="https://github.com/THUDM/SwissArmyTransformer" target="_blank">SwissArmyTransformer (sat)</a> • 🐦 <a href="https://twitter.com/thukeg" target="_blank">Twitter</a>

|

| 11 |

+

</p>

|

| 12 |

+

<p align="center">

|

| 13 |

+

• 📃 <a href="https://arxiv.org/abs/2105.13290" target="_blank">[CogView@NeurIPS 21]</a> <a href="https://github.com/THUDM/CogView" target="_blank">[GitHub]</a> • 📃 <a href="https://arxiv.org/abs/2103.10360" target="_blank">[GLM@ACL 22]</a> <a href="https://github.com/THUDM/GLM" target="_blank">[GitHub]</a> <br>

|

| 14 |

+

</p>

|

| 15 |

+

<p align="center">

|

| 16 |

+

👋 加入我们的 <a href="https://join.slack.com/t/chatglm/shared_invite/zt-1th2q5u69-7tURzFuOPanmuHy9hsZnKA" target="_blank">Slack</a> 和 <a href="resources/WECHAT.md" target="_blank">WeChat</a>

|

| 17 |

+

</p>

|

| 18 |

+

<!-- <p align="center">

|

| 19 |

+

🤖<a href="https://huggingface.co/spaces/THUDM/visualglm-6b" target="_blank">VisualGLM-6B在线演示网站</a>

|

| 20 |

+

</p> -->

|

| 21 |

+

|

| 22 |

+

## 介绍

|

| 23 |

+

|

| 24 |

+

VisualGLM-6B is an open-source, multi-modal dialog language model that supports **images, Chinese, and English**. The language model is based on [ChatGLM-6B](https://github.com/THUDM/ChatGLM-6B) with 6.2 billion parameters; the image part builds a bridge between the visual model and the language model through the training of [BLIP2-Qformer](https://arxiv.org/abs/2301.12597), with the total model comprising 7.8 billion parameters. **[Click here for English version.](README_en.md)**

|

| 25 |

+

|

| 26 |

+

VisualGLM-6B 是一个开源的,支持**图像、中文和英文**的多模态对话语言模型,语言模型基于 [ChatGLM-6B](https://github.com/THUDM/ChatGLM-6B),具有 62 亿参数;图像部分通过训练 [BLIP2-Qformer](https://arxiv.org/abs/2301.12597) 构建起视觉模型与语言模型的桥梁,整体模型共78亿参数。

|

| 27 |

+

|

| 28 |

+

VisualGLM-6B 依靠来自于 [CogView](https://arxiv.org/abs/2105.13290) 数据集的30M高质量中文图文对,与300M经过筛选的英文图文对进行预训练,中英文权重相同。该训练方式较好地将视觉信息对齐到ChatGLM的语义空间;之后的微调阶段,模型在长视觉问答数据上训练,以生成符合人类偏好的答案。

|

| 29 |

+

|

| 30 |

+

VisualGLM-6B 由 [SwissArmyTransformer](https://github.com/THUDM/SwissArmyTransformer)(简称`sat`) 库训练,这是一个支持Transformer灵活修改、训练的工具库,支持Lora、P-tuning等参数高效微调方法。本项目提供了符合用户习惯的huggingface接口,也提供了基于sat的接口。

|

| 31 |

+

|

| 32 |

+

结合模型量化技术,用户可以在消费级的显卡上进行本地部署(INT4量化级别下最低只需8.7G显存)。

|

| 33 |

+

|

| 34 |

+

-----

|

| 35 |

+

|

| 36 |

+

VisualGLM-6B 开源模型旨在与开源社区一起推动大模型技术发展,恳请开发者和大家遵守开源协议,勿将该开源模型和代码及基于该开源项目产生的衍生物用于任何可能给国家和社会带来危害的用途以及用于任何未经过安全评估和备案的服务。目前,本项目官方未基于 VisualGLM-6B 开发任何应用,包括网站、安卓App、苹果 iOS应用及 Windows App 等。

|

| 37 |

+

|

| 38 |

+

由于 VisualGLM-6B 仍处于v1版本,目前已知其具有相当多的[**局限性**](README.md#局限性),如图像描述事实性/模型幻觉问题,图像细节信息捕捉不足,以及一些来自语言模型的局限性。尽管模型在训练的各个阶段都尽力确保数据的合规性和准确性,但由于 VisualGLM-6B 模型规模较小,且模型受概率随机性因素影响,无法保证输出内容的准确性,且模型易被误导(详见局限性部分)。在VisualGLM之后的版本中,将会着力对此类问题进行优化。本项目不承担开源模型和代码导致的数据安全、舆情风险或发生任何模型被误导、滥用、传播、不当利用而产生的风险和责任。

|

| 39 |

+

|

| 40 |

+

## 样例

|

| 41 |

+

VisualGLM-6B 可以进行图像的描述的相关知识的问答。

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

<details>

|

| 45 |

+

<summary>也能结合常识或提出有趣的观点,点击展开/折叠更多样例</summary>

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

</details>

|

| 51 |

+

|

| 52 |

+

## 友情链接

|

| 53 |

+

|

| 54 |

+

* [XrayGLM](https://github.com/WangRongsheng/XrayGLM) 是基于visualGLM-6B在X光诊断数据集上微调的X光诊断问答的项目,能根据X光片回答医学相关询问。

|

| 55 |

+

<details>

|

| 56 |

+

<summary>点击查看样例</summary>

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

</details>

|

| 60 |

+

|

| 61 |

+

## 使用

|

| 62 |

+

|

| 63 |

+

### 模型推理

|

| 64 |

+

|

| 65 |

+

使用pip安装依赖

|

| 66 |

+

```

|

| 67 |

+

pip install -i https://pypi.org/simple -r requirements.txt

|

| 68 |

+

# 国内请使用aliyun镜像,TUNA等镜像同步最近出现问题,命令如下

|

| 69 |

+

pip install -i https://mirrors.aliyun.com/pypi/simple/ -r requirements.txt

|

| 70 |

+

```

|

| 71 |

+

此时默认会安装`deepspeed`库(支持`sat`库训练),此库对于模型推理并非必要,同时部分Windows环境安装此库时会遇到问题。

|

| 72 |

+

如果想绕过`deepspeed`安装,我们可以将命令改为

|

| 73 |

+

```

|

| 74 |

+

pip install -i https://mirrors.aliyun.com/pypi/simple/ -r requirements_wo_ds.txt

|

| 75 |

+

pip install -i https://mirrors.aliyun.com/pypi/simple/ --no-deps "SwissArmyTransformer>=0.3.6"

|

| 76 |

+

```

|

| 77 |

+

|

| 78 |

+

如果使用Huggingface transformers库调用模型(**也需要安装上述依赖包!**),可以通过如下代码(其中图像路径为本地路径):

|

| 79 |

+

```python

|

| 80 |

+

from transformers import AutoTokenizer, AutoModel

|

| 81 |

+

tokenizer = AutoTokenizer.from_pretrained("THUDM/visualglm-6b", trust_remote_code=True)

|

| 82 |

+

model = AutoModel.from_pretrained("THUDM/visualglm-6b", trust_remote_code=True).half().cuda()

|

| 83 |

+

image_path = "your image path"

|

| 84 |

+

response, history = model.chat(tokenizer, image_path, "描述这张图片。", history=[])

|

| 85 |

+

print(response)

|

| 86 |

+

response, history = model.chat(tokenizer, image_path, "这张图片可能是在什么场所拍摄的?", history=history)

|

| 87 |

+

print(response)

|

| 88 |

+

```

|

| 89 |

+

以上代码会由 `transformers` 自动下载模型实现和参数。完整的模型实现可以在 [Hugging Face Hub](https://huggingface.co/THUDM/visualglm-6b)。如果你从 Hugging Face Hub 上下载模型参数的速度较慢,可以从[这里](https://cloud.tsinghua.edu.cn/d/43ffb021ca5f4897b56a/)手动下载模型参数文件,并从本地加载模型。具体做法请参考[从本地加载模型](https://github.com/THUDM/ChatGLM-6B#%E4%BB%8E%E6%9C%AC%E5%9C%B0%E5%8A%A0%E8%BD%BD%E6%A8%A1%E5%9E%8B)。关于基于 transformers 库模型的量化、CPU推理、Mac MPS 后端加速等内容,请参考 [ChatGLM-6B 的低成本部署](https://github.com/THUDM/ChatGLM-6B#%E4%BD%8E%E6%88%90%E6%9C%AC%E9%83%A8%E7%BD%B2)。

|

| 90 |

+

|

| 91 |

+

如果使用SwissArmyTransformer库调用模型,方法类似,可以使用环境变量`SAT_HOME`决定模型下载位置。在本仓库目录下:

|

| 92 |

+

```python

|

| 93 |

+

import argparse

|

| 94 |

+

from transformers import AutoTokenizer

|

| 95 |

+

tokenizer = AutoTokenizer.from_pretrained("THUDM/chatglm-6b", trust_remote_code=True)

|

| 96 |

+

from model import chat, VisualGLMModel

|

| 97 |

+

model, model_args = VisualGLMModel.from_pretrained('visualglm-6b', args=argparse.Namespace(fp16=True, skip_init=True))

|

| 98 |

+

from sat.model.mixins import CachedAutoregressiveMixin

|

| 99 |

+

model.add_mixin('auto-regressive', CachedAutoregressiveMixin())

|

| 100 |

+

image_path = "your image path or URL"

|

| 101 |

+

response, history, cache_image = chat(image_path, model, tokenizer, "描述这张图片。", history=[])

|

| 102 |

+

print(response)

|

| 103 |

+

response, history, cache_image = chat(None, model, tokenizer, "这张图片可能是在什么场所拍摄的?", history=history, image=cache_image)

|

| 104 |

+

print(response)

|

| 105 |

+

```

|

| 106 |

+

使用`sat`库也可以轻松进行进行参数高效微调。<!-- TODO 具体代码 -->

|

| 107 |

+

|

| 108 |

+

## 模型微调

|

| 109 |

+

|

| 110 |

+

多模态任务分布广、种类多,预训练往往不能面面俱到。

|

| 111 |

+

这里我们提供了一个小样本微调的例子,使用20张标注图增强模型回答“背景”问题的能力。

|

| 112 |

+

|

| 113 |

+

解压`fewshot-data.zip`以后运行如下命令:

|

| 114 |

+

```

|

| 115 |

+

bash finetune/finetune_visualglm.sh

|

| 116 |

+

```

|

| 117 |

+

|

| 118 |

+

目前支持三种方式的微调:

|

| 119 |

+

|

| 120 |

+

* LoRA:样例中为ChatGLM模型的第0层和第14层加入了rank=10的LoRA微调,可以根据具体情景和数据量调整`--layer_range`和`--lora_rank`参数。

|

| 121 |

+

* QLoRA:如果资源有限,可以考虑使用`bash finetune/finetune_visualglm_qlora.sh`,QLoRA将ChatGLM的线性层进行了4-bit量化,只需要9.8GB显存即可微调。

|

| 122 |

+

* P-tuning:可以将`--use_lora`替换为`--use_ptuning`,不过不推荐使用,除非模型应用场景非常固定。

|

| 123 |

+

|

| 124 |

+

训练好以后可以使用如下命令推理:

|

| 125 |

+

|

| 126 |

+

```

|

| 127 |

+

python cli_demo.py --from_pretrained your_checkpoint_path --prompt_zh 这张图片的背景里有什么内容?

|

| 128 |

+

```

|

| 129 |

+

|

| 130 |

+

<details>

|

| 131 |

+

<summary>微调前后的效果对比</summary>

|

| 132 |

+

|

| 133 |

+

微调前:

|

| 134 |

+

|

| 135 |

+

```

|

| 136 |

+

欢迎使用 VisualGLM-6B 模型,输入图像URL或本地路径读图,继续输入内容对话,clear 重新开始,stop 终止程序

|

| 137 |

+

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/2p.png

|

| 138 |

+

用户:这张图片的背景里有什么内容?

|

| 139 |

+

VisualGLM-6B:人们总是被生活琐事所困扰,无法摆脱束缚.

|

| 140 |

+

用户:clear

|

| 141 |

+

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/meme.png

|

| 142 |

+

用户:这张图片的背景里有什么内容?

|

| 143 |

+

VisualGLM-6B:这张图片的背景是一张狗的图片,它躺在木地板上。

|

| 144 |

+

用户:clear

|

| 145 |

+

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/passport.png

|

| 146 |

+

用户:这张图片的背景里有什么内容?

|

| 147 |

+

VisualGLM-6B:这张图片的背景是一台电脑键盘和两张护照。

|

| 148 |

+

用户:clear

|

| 149 |

+

请输入图像路径或URL(回车进入纯文本对话):

|

| 150 |

+

```

|

| 151 |

+

|

| 152 |

+

微调后:

|

| 153 |

+

|

| 154 |

+

```

|

| 155 |

+

欢迎使用 VisualGLM-6B 模型��输入图像URL或本地路径读图,继续输入内容对话,clear 重新开始,stop 终止程序

|

| 156 |

+

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/2p.png

|

| 157 |

+

用户:这张图片的背景里有什么内容?

|

| 158 |

+

VisualGLM-6B:这张图片的背景是雨天,

|

| 159 |

+

用户:描述一下这张图片。

|

| 160 |

+

VisualGLM-6B:这张图片描绘了雨天的情景,雨水淅淅沥沥地落在地面上。人们穿着雨衣或雨伞,走在雨中,似乎在寻找着什么.

|

| 161 |

+

用户:clear

|

| 162 |

+

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/meme.png

|

| 163 |

+

用户:这张图片的背景里有什么内容?

|

| 164 |

+

VisualGLM-6B:这张图片的背景是木质地板。

|

| 165 |

+

用户:什么颜色的地板

|

| 166 |

+

VisualGLM-6B:这张图片的背景是蓝色的。

|

| 167 |

+

用户:描述一下这张图片。

|

| 168 |

+

VisualGLM-6B:这张图片描绘了一只棕色的小狗躺在蓝色的木质地板上。

|

| 169 |

+

用户:clear

|

| 170 |

+

请输入图像路径或URL(回车进入纯文本对话): fewshot-data/passport.png

|

| 171 |

+

用户:这张图片的背景里有什么内容?

|

| 172 |

+

VisualGLM-6B:这张图片的背景是电脑键盘。

|

| 173 |

+

用户:描述一下这张图片。

|

| 174 |

+

VisualGLM-6B:这张图片的特点是电脑键盘和护照。

|

| 175 |

+

用户:有几张护照

|

| 176 |

+

VisualGLM-6B:两张护照。

|

| 177 |

+

用户:clear

|

| 178 |

+

请输入图像路径或URL(回车进入纯文本对话):

|

| 179 |

+

```

|

| 180 |

+

|

| 181 |

+

</details>

|

| 182 |

+

|

| 183 |

+

如果希望把LoRA部分的参数合并到原始的权重,可以调用`merge_lora()`,例如:

|

| 184 |

+

|

| 185 |

+

```python

|

| 186 |

+

from finetune_visualglm import FineTuneVisualGLMModel

|

| 187 |

+

import argparse

|

| 188 |

+

|

| 189 |

+

model, args = FineTuneVisualGLMModel.from_pretrained('checkpoints/finetune-visualglm-6b-05-19-07-36',

|

| 190 |

+

args=argparse.Namespace(

|

| 191 |

+

fp16=True,

|

| 192 |

+

skip_init=True,

|

| 193 |

+

use_gpu_initialization=True,

|

| 194 |

+

device='cuda',

|

| 195 |

+

))

|

| 196 |

+

model.get_mixin('lora').merge_lora()

|

| 197 |

+

args.layer_range = []

|

| 198 |

+

args.save = 'merge_lora'

|

| 199 |

+

args.mode = 'inference'

|

| 200 |

+

from sat.training.model_io import save_checkpoint

|

| 201 |

+

save_checkpoint(1, model, None, None, args)

|

| 202 |

+

```

|

| 203 |

+

|

| 204 |

+

微调需要安装`deepspeed`库,目前本流程仅支持linux系统,更多的样例说明和Windows系统的流程说明将在近期完成。

|

| 205 |

+

|

| 206 |

+

## 部署工具

|

| 207 |

+

|

| 208 |

+

### 命令行 Demo

|

| 209 |

+

|

| 210 |

+

```shell

|

| 211 |

+

python cli_demo.py

|

| 212 |

+

```

|

| 213 |

+

程序会自动下载sat模型,并在命令行中进行交互式的对话,输入指示并回车即可生成回复,输入 clear 可以清空对话历史,输入 stop 终止程序。

|

| 214 |

+

|

| 215 |

+

|

| 216 |

+

程序提供如下超参数控制生成过程与量化精度:

|

| 217 |

+

```

|

| 218 |

+

usage: cli_demo.py [-h] [--max_length MAX_LENGTH] [--top_p TOP_P] [--top_k TOP_K] [--temperature TEMPERATURE] [--english] [--quant {8,4}]

|

| 219 |

+

|

| 220 |

+

optional arguments:

|

| 221 |

+

-h, --help show this help message and exit

|

| 222 |

+

--max_length MAX_LENGTH

|

| 223 |

+

max length of the total sequence

|

| 224 |

+

--top_p TOP_P top p for nucleus sampling

|

| 225 |

+

--top_k TOP_K top k for top k sampling

|

| 226 |

+

--temperature TEMPERATURE

|

| 227 |

+

temperature for sampling

|

| 228 |

+

--english only output English

|

| 229 |

+

--quant {8,4} quantization bits

|

| 230 |

+

```

|

| 231 |

+

需要注意的是,在训练时英文问答对的提示词为`Q: A:`,而中文为`问:答:`,在网页demo中采取了中文的提示,因此英文回复会差一些且夹杂中文;如果需要英文回复,请使用`cli_demo.py`中的`--english`选项。

|

| 232 |

+

|

| 233 |

+

我们也提供了继承自`ChatGLM-6B`的打字机效果命令行工具,此工具使用Huggingface模型:

|

| 234 |

+

```shell

|

| 235 |

+

python cli_demo_hf.py

|

| 236 |

+

```

|

| 237 |

+

|

| 238 |

+

### 网页版 Demo

|

| 239 |

+

|

| 240 |

+

|

| 241 |

+

我们提供了一个基于 [Gradio](https://gradio.app) 的网页版 Demo,首先安装 Gradio:`pip install gradio`。

|

| 242 |

+

然后下载并进入本仓库运行`web_demo.py`:

|

| 243 |

+

|

| 244 |

+

```

|

| 245 |

+

git clone https://github.com/THUDM/VisualGLM-6B

|

| 246 |

+

cd VisualGLM-6B

|

| 247 |

+

python web_demo.py

|

| 248 |

+

```

|

| 249 |

+

程序会自动下载 sat 模型,并运行一个 Web Server,并输出地址。在浏览器中打开输出的地址即可使用。

|

| 250 |

+

|

| 251 |

+

|

| 252 |

+

我们也提供了继承自`ChatGLM-6B`的打字机效果网页版工具,此工具使用 Huggingface 模型,启动后将运行在`:8080`端口上:

|

| 253 |

+

```shell

|

| 254 |

+

python web_demo_hf.py

|

| 255 |

+

```

|

| 256 |

+

|

| 257 |

+

两种网页版 demo 均接受命令行参数`--share`以生成 gradio 公开链接,接受`--quant 4`和`--quant 8`以分别使用4比特量化/8比特量化减少显存占用。

|

| 258 |

+

|

| 259 |

+

### API部署

|

| 260 |

+

首先需要安装额外的依赖 `pip install fastapi uvicorn`,然后运行仓库中的 [api.py](api.py):

|

| 261 |

+

```shell

|

| 262 |

+

python api.py

|

| 263 |

+

```

|

| 264 |

+

程序会自动下载 sat 模型,默认部署在本地的 8080 端口,通过 POST 方法进行调用。下面是用`curl`请求的例子,一般而言可以也可以使用代码方法进行POST。

|

| 265 |

+

```shell

|

| 266 |

+

echo "{\"image\":\"$(base64 path/to/example.jpg)\",\"text\":\"描述这张图片\",\"history\":[]}" > temp.json

|

| 267 |

+

curl -X POST -H "Content-Type: application/json" -d @temp.json http://127.0.0.1:8080

|

| 268 |

+

```

|

| 269 |

+

得到的返回值为

|

| 270 |

+

```

|

| 271 |

+

{

|

| 272 |

+

"response":"这张图片展现了一只可爱的卡通羊驼,它站在��个透明的背景上。这只羊驼长着一张毛茸茸的耳朵和一双大大的眼睛,它的身体是白色的,带有棕色斑点。",

|

| 273 |

+

"history":[('描述这张图片', '这张图片展现了一只可爱的卡通羊驼,它站在一个透明的背景上。这只羊驼长着一张毛茸茸的耳朵和一双大大的眼睛,它的身体是白色的,带有棕色斑点。')],

|

| 274 |

+

"status":200,

|

| 275 |

+

"time":"2023-05-16 20:20:10"

|

| 276 |

+

}

|

| 277 |

+

```

|

| 278 |

+

|

| 279 |

+