
svlipatov committed 87895a7

Parent: adfb4d5

Add files via upload

Former-commit-id: 31ef92a7704222f12bc3f092fdaadc194dbb3413

- bezos500.jpg +0 -0

- gates500.jpg +0 -0

- jobs500.jpg +0 -0

- mask.jpg +0 -0

- model_execute.py +65 -0

- model_retrain.py +179 -0

- pickle_model.pkl.REMOVED.git-id +1 -0

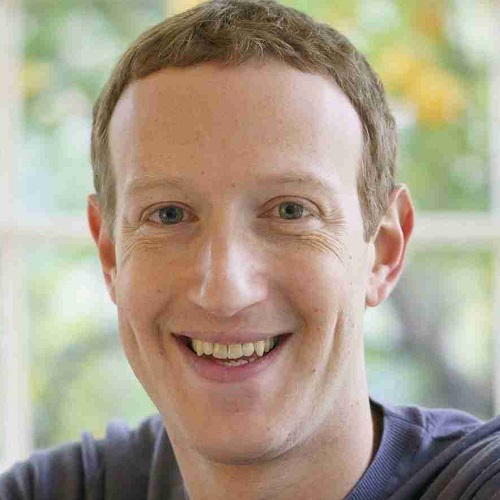

- zuckerberg500.jpg +0 -0

bezos500.jpg: ADDED (binary image)

gates500.jpg: ADDED (binary image)

jobs500.jpg: ADDED (binary image)

mask.jpg: ADDED (binary image)

model_execute.py: ADDED

@@ -0,0 +1,65 @@


```python
import pickle
import torch
import torchvision.transforms as transforms
from PIL import Image
import torchvision


def check_photo(name, photo):
    # Same preprocessing as the validation transform in model_retrain.py,
    # so the model sees inputs at the resolution it was fine-tuned on
    preprocess = transforms.Compose([
        transforms.Resize([70, 70]),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    ])
    # convert('RGB') guards against grayscale or CMYK JPEGs
    input_tensor = preprocess(photo.convert('RGB'))
    input_batch = input_tensor.unsqueeze(0)  # create a mini-batch as expected by the model

    # move the input and model to GPU for speed if available
    if torch.cuda.is_available():
        input_batch = input_batch.to('cuda')
        model.to('cuda')

    with torch.no_grad():
        output = model(input_batch)
    # Tensor of shape [num_classes]: one unnormalized score per class folder used in training
    print(name, output[0])
    # The output has unnormalized scores. To get probabilities, run a softmax on it.
    probabilities = torch.nn.functional.softmax(output[0], dim=0)
    print(name, probabilities)


pkl_filename = "pickle_model.pkl"
with open(pkl_filename, 'rb') as file:
    model = pickle.load(file)

model.eval()
# sample execution (requires torchvision)

gates_photo = Image.open("gates500.jpg")
musk_photo = Image.open("mask.jpg")
bezos_photo = Image.open("bezos500.jpg")
zuker_photo = Image.open("zuckerberg500.jpg")
jobs_photo = Image.open("jobs500.jpg")
test_photos_dict = {'gates': gates_photo, 'musk': musk_photo, 'bezos': bezos_photo, 'zuker': zuker_photo, 'jobs': jobs_photo}
for name in test_photos_dict:
    check_photo(name, test_photos_dict[name])
# preprocess = transforms.Compose([
#     transforms.Resize(256),
#     transforms.CenterCrop(224),
#     transforms.ToTensor(),
#     transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
# ])
# input_tensor = preprocess(test_photos_list)
# input_batch = input_tensor.unsqueeze(0) # create a mini-batch as expected by the model
#
# # move the input and model to GPU for speed if available
# if torch.cuda.is_available():
#     input_batch = input_batch.to('cuda')
#     model.to('cuda')
#
# with torch.no_grad():
#     output = model(input_batch)
# # Tensor of shape [num_classes], one unnormalized score per class folder
# print(output[0])
# print(model)

# print(probabilities)
```
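The script prints raw probability vectors without naming the classes. Because `ImageFolder` numbers classes in sorted order of the sub-folder names under `people/train` (exposed as `train_dataset.classes` in `model_retrain.py`), the index of the largest probability can be mapped back to a folder name. The pure-Python sketch below illustrates that mapping; the folder names in `CLASS_NAMES` and the example scores are hypothetical, since the actual contents of `people/train` are not part of this commit. In the real script you would pass `output[0].tolist()` as the scores.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of unnormalized scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(scores, class_names):
    """Return (class_name, probability) for the highest-scoring class.

    class_names must follow the model's output order, i.e. the sorted
    folder names that ImageFolder used when the model was trained.
    """
    if len(scores) != len(class_names):
        raise ValueError("one score per class is required")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


# Hypothetical folder names; in practice use train_dataset.classes
CLASS_NAMES = sorted(["bezos", "gates", "jobs", "musk", "zuckerberg"])

if __name__ == "__main__":
    logits = [0.3, 2.5, -1.0, 0.1, 0.4]  # made-up scores for illustration
    name, prob = predict_label(logits, CLASS_NAMES)
    print(f"{name}: {prob:.2f}")
```

Storing the class list next to the pickled model (for example, in a small JSON file) would avoid having to re-derive it from the training folders at inference time.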


model_retrain.py: ADDED

@@ -0,0 +1,179 @@


```python
import os
import torch
import random
import numpy as np
import torchvision
import matplotlib.pyplot as plt
import torchvision.transforms as transforms
import shutil
import time
import xml.etree.ElementTree as et
import pickle

from tqdm import tqdm
from PIL import Image
from torchvision import models
from torch.utils.data import DataLoader
from torchvision.datasets import ImageFolder

BATCH_SIZE = 32

use_gpu = torch.cuda.is_available()
device = 'cuda' if use_gpu else 'cpu'
print('Connected device:', device)

musk = 'https://drive.google.com/uc?export=download&id=1BOuq35QzO1YtKQkYGfj_vtBj3Ps5xyBN'
gates = 'https://drive.google.com/uc?export=download&id=1jgHQF_NMpH9uMTvic9rGnURu_8UOGdiz'
bezos = 'https://drive.google.com/uc?export=download&id=1n5UaLL-TAkjIeBbTNcn-Czkp_A3Eslhj'
zuker = 'https://drive.google.com/uc?export=download&id=1ncPmYTg6EPHlUFdcjl_bXTbtWRLv2DXy'
jobs = 'https://drive.google.com/uc?export=download&id=1TX3hiRyvSYiYVZUFrbAhN3Jpp9cd0Q9s'


# Labels
face_lst = [
    ["Bill Gates", 'people/gates500.jpg'],
    ["Jeff Bezos", 'people/bezos500.jpg'],
    ["Mark Zuckerberg", 'people/zuckerberg500.jpg'],
    ["Steve Jobs", 'people/jobs500.jpg']
]

# The dataset is downloaded while the program runs (the download code below is commented out)
import wget
from zipfile import ZipFile

# os.mkdir('data')

# url = 'https://drive.google.com/uc?export=download&id=120xqh0mYtYZ1Qh7vr-XFzjPbSKivLJjA'
# file_name = wget.download(url, 'data/')
#
# with ZipFile(file_name, 'r') as zip_file:
#     zip_file.extractall()
#
# link_lst = [musk, gates, bezos, zuker, jobs]
# for link in link_lst:
#     wget.download(link, 'data/')

# Training dataset
train_dataset = ImageFolder(
    root='people/train'
)
# Validation dataset
valid_dataset = ImageFolder(
    root='people/valid'
)

# Augmentations (randomly degrade image quality to reduce overfitting)
normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                 std=[0.229, 0.224, 0.225])
train_dataset.transform = transforms.Compose([
    transforms.Resize([70, 70]),
    transforms.RandomHorizontalFlip(),
    transforms.RandomAutocontrast(),
    transforms.RandomEqualize(),
    transforms.ToTensor(),
    normalize
])

valid_dataset.transform = transforms.Compose([
    transforms.Resize([70, 70]),
    transforms.ToTensor(),
    normalize
])

# Training data loader; BATCH_SIZE images per training batch
train_loader = DataLoader(
    train_dataset, batch_size=BATCH_SIZE,
    shuffle=True
)
# Validation data loader; BATCH_SIZE images per validation batch
valid_loader = DataLoader(
    valid_dataset, batch_size=BATCH_SIZE,
    shuffle=False
)


# Model definition
def google():  # torchvision < 0.13 used pretrained=True instead of weights=
    model = models.googlenet(weights=models.GoogLeNet_Weights.IMAGENET1K_V1)
    # Replace the linear (output) layer with one sized for our classes; fine-tuning trains it
    model.fc = torch.nn.Linear(1024, len(train_dataset.classes))
    for param in model.parameters():
        param.requires_grad = True
    # Freeze the pretrained inception3a-4e blocks so their weights stay fixed during retraining.
    # requires_grad_(False) reaches every parameter in the block; assigning
    # `block.requires_grad = False` would only set an unused attribute and freeze nothing.
    # conv1-conv3, inception5a/5b and the new fc layer remain trainable.
    model.inception3a.requires_grad_(False)
    model.inception3b.requires_grad_(False)
    model.inception4a.requires_grad_(False)
    model.inception4b.requires_grad_(False)
    model.inception4c.requires_grad_(False)
    model.inception4d.requires_grad_(False)
    model.inception4e.requires_grad_(False)
    return model


# Training function. `epoch` is the number of passes over the training set
def train(model, optimizer, train_loader, val_loader, epoch=10):
    loss_train, acc_train = [], []
    loss_valid, acc_valid = [], []
    # tqdm draws a progress bar
    for _ in tqdm(range(epoch)):
        # Per-batch losses and per-image correctness flags
        losses, equals = [], []
        torch.set_grad_enabled(True)

        # Train: loop over the images and minimize the loss
        model.train()
        for i, (image, target) in enumerate(train_loader):
            image = image.to(device)
            target = target.to(device)
            output = model(image)
            loss = criterion(output, target)

            losses.append(loss.item())
            equals.extend(
                [x.item() for x in torch.argmax(output, 1) == target])

            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
        # Metrics summarizing this epoch's training
        loss_train.append(np.mean(losses))
        acc_train.append(np.mean(equals))
        losses, equals = [], []
        torch.set_grad_enabled(False)

        # Validate: estimate how well the model generalizes
        model.eval()
        for i, (image, target) in enumerate(val_loader):
            image = image.to(device)
            target = target.to(device)

            output = model(image)
            loss = criterion(output, target)

            losses.append(loss.item())
            equals.extend(
                [y.item() for y in torch.argmax(output, 1) == target])

        loss_valid.append(np.mean(losses))
        acc_valid.append(np.mean(equals))

    return loss_train, acc_train, loss_valid, acc_valid


criterion = torch.nn.CrossEntropyLoss()
criterion = criterion.to(device)

model = google()  # you can swap in VGG here
print('Model: GoogLeNet\n')

# The optimizer updates the trainable weights to minimize the loss
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)
model = model.to(device)

loss_train, acc_train, loss_valid, acc_valid = train(
    model, optimizer, train_loader, valid_loader, 30)
print('acc_train:', acc_train, '\nacc_valid:', acc_valid)

# Save the model to the current working directory.
# Move it to the CPU first so it can be unpickled on a machine without a GPU.
pkl_filename = "pickle_model.pkl"
with open(pkl_filename, 'wb') as file:
    pickle.dump(model.to('cpu'), file)
```
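`ImageFolder` derives labels from the directory layout: each sub-folder of `people/train` is one class, and indices are assigned in sorted folder-name order. That is why `model.fc` is sized with `len(train_dataset.classes)`, and why `people/valid` needs exactly the same set of folders. The standard-library sketch below mirrors that documented behaviour so a dataset layout can be checked before training; it is not torchvision's code, and the helper names and folder names are hypothetical.

```python
import os
import tempfile


def find_classes(root):
    """Map class names to indices the way ImageFolder does:
    one class per sub-directory, numbered in sorted name order."""
    classes = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    if not classes:
        raise FileNotFoundError(f"no class folders found in {root}")
    return classes, {name: idx for idx, name in enumerate(classes)}


def check_split_consistency(train_root, valid_root):
    """Raise if the train and valid splits would get different label indices."""
    train_classes, _ = find_classes(train_root)
    valid_classes, _ = find_classes(valid_root)
    if train_classes != valid_classes:
        raise ValueError(f"class mismatch: {train_classes} vs {valid_classes}")
    return train_classes


if __name__ == "__main__":
    # Build a throwaway people/train + people/valid layout with hypothetical names
    with tempfile.TemporaryDirectory() as tmp:
        for split in ("train", "valid"):
            for person in ("musk", "gates", "bezos"):
                os.makedirs(os.path.join(tmp, "people", split, person))
        classes = check_split_consistency(
            os.path.join(tmp, "people", "train"),
            os.path.join(tmp, "people", "valid"),
        )
        print(classes, "-> fc output size", len(classes))
```

Files placed directly in the split folder (rather than in a class sub-folder) are ignored when listing classes, which matches how the sketch filters on `is_dir()`.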

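`model_retrain.py` resizes every image to 70×70, far below the 224×224 crop GoogLeNet was pretrained on. The network still runs because its final adaptive average pool collapses whatever spatial grid is left; the grid is simply much coarser. The sketch below applies PyTorch's output-size formula for `Conv2d` and ceil-mode `MaxPool2d` to GoogLeNet's size-changing layers. The layer list is transcribed by hand from torchvision's GoogLeNet definition and should be treated as an assumption to verify against your installed version; inception blocks are omitted because they preserve spatial size.

```python
def out_size(size, kernel, stride=1, padding=0, ceil_mode=False):
    """Spatial output size of a Conv2d / MaxPool2d layer (dilation 1), per PyTorch's formula."""
    span = size + 2 * padding - kernel
    if ceil_mode:
        out = -(-span // stride) + 1  # ceiling division
        # PyTorch drops a last window that would start inside the right-hand padding
        if (out - 1) * stride >= size + padding:
            out -= 1
    else:
        out = span // stride + 1
    return out


# (name, kernel, stride, padding, ceil_mode) for GoogLeNet's size-changing layers,
# transcribed from torchvision's googlenet definition (assumption: verify locally)
GOOGLENET_LAYERS = [
    ("conv1", 7, 2, 3, False),
    ("maxpool1", 3, 2, 0, True),
    ("conv2", 1, 1, 0, False),
    ("conv3", 3, 1, 1, False),
    ("maxpool2", 3, 2, 0, True),
    ("maxpool3", 3, 2, 0, True),  # after inception3a/3b
    ("maxpool4", 2, 2, 0, True),  # after inception4a-4e
]


def final_grid(size):
    """Side length of the feature map that reaches the adaptive average pool."""
    for _name, kernel, stride, padding, ceil_mode in GOOGLENET_LAYERS:
        size = out_size(size, kernel, stride, padding, ceil_mode)
    return size


if __name__ == "__main__":
    for side in (70, 224):
        print(side, "->", final_grid(side))
```

For a 224×224 input this reproduces the familiar 7×7 map; a 70×70 input ends at 2×2, so each pooled feature summarizes far less spatial detail. This is one reason to use the same resize at inference time as during fine-tuning.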

pickle_model.pkl.REMOVED.git-id: ADDED

@@ -0,0 +1 @@

```
7f6f655ec6c2e6c5e3909cb0b10f718b1e648be5
```

zuckerberg500.jpg: ADDED (binary image)